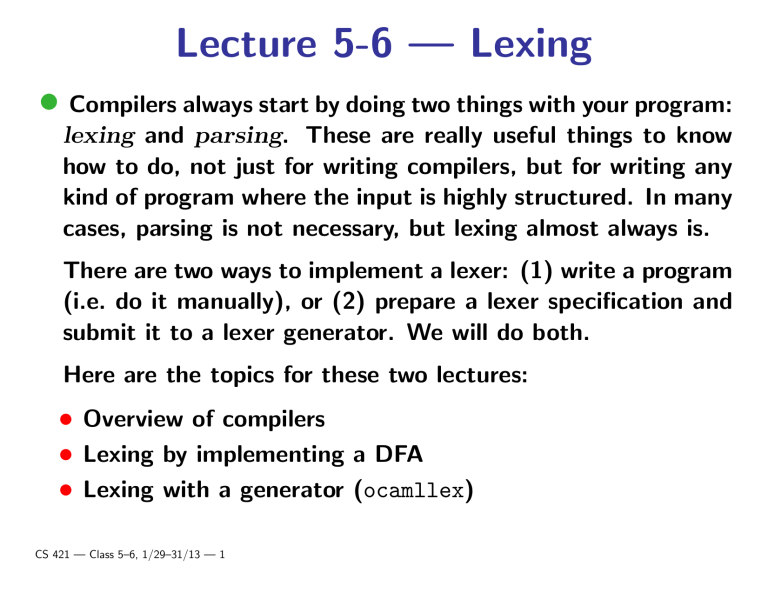

Lecture 5-6 — Lexing

• Compilers always start by doing two things with your program:

lexing and parsing. These are really useful things to know

how to do, not just for writing compilers, but for writing any

kind of program where the input is highly structured. In many

cases, parsing is not necessary, but lexing almost always is.

There are two ways to implement a lexer: (1) write a program

(i.e. do it manually), or (2) prepare a lexer specification and

submit it to a lexer generator. We will do both.

Here are the topics for these two lectures:

• Overview of compilers

• Lexing by implementing a DFA

• Lexing with a generator (ocamllex)

CS 421 — Class 5–6, 1/29–31/13 — 1

Structure of a compiler

Detail of compiler front-end:

CS 421 — Class 5–6, 1/29–31/13 — 2

Lexing and tokens

• Job

of lexer (aka lexical analyzer, scanner, tokenizer)

is to divide input into tokens, and discard whitespace and

comments.

• Token is smallest unit useful for parsing.

• Think of the lexer as a function from a list of characters (the

source file) to a list of tokens.

CS 421 — Class 5–6, 1/29–31/13 — 3

Types of tokens

• Tokens for programming languages are of three types:

• Special characters and character sequences: =, ==, >=,

{, }, ;, etc.

• Keywords: class, if, int, etc.

• Token categories: For parsing purposes, all integers can

be treated the same, and similarly for floats, doubles,

characters, strings, and identifiers.

• Comments and whitespace are also handled by the lexer, but

these are not tokens because they are ignored by the parser;

the lexer deletes them.

• In Java,

three identifiers are reserved (like keywords) but

are treated as identifiers by the parser: true, false, null.

CS 421 — Class 5–6, 1/29–31/13 — 4

Tokens of Java

• Here is a (partial) declaration of the tokens of Java:

type token = EOF | EQ | PLUS | SEMICOLON

| CLASS | INT | LPAREN | RPAREN | ...

| INTLIT of int | FLOATLIT of float

| IDENT of string | STRINGLIT of string

• E.g. Lexer would transform “class

C { int process ( ...”

to

[CLASS; IDENT "C"; LBRACE; INT; IDENT "process"; LPAREN; ... ]

• Now you try it: “x1

[

IDENT "x1"

;

EQ

;

= (3 + y);” becomes:

LPAREN

PLUS ;

CS 421 — Class 5–6, 1/29–31/13 — 5

;

INTLIT

IDENT "y" ;

3

;

RPAREN

;

SEMICOLON

]

Lexing vs. parsing

• Lexing is a simple, efficient pre-processing step

• Tokens can be recognized by finite automata, which are

easily implemented.

• Significant reduction in size from source file to token list

• Parsing is more complicated

• Program cannot be parsed using DFAs. (Why?)

• Many input problems can be solved by lexing alone — which

is why regular expressions are so widely used. (From 373:

finite automata ≡ regular expressions.)

CS 421 — Class 5–6, 1/29–31/13 — 6

Two methods of writing a lexer

• Manual:

• Design a DFA for the tokens, and implement it

• Automatic:

• Write regular expressions for the tokens. Run a program

(“lexer-generator”) that converts regular expressions to a

DFA, and then simulates the DFA.

• Automatic methods usually easier, but sometimes not available

CS 421 — Class 5–6, 1/29–31/13 — 7

Building a lexer by hand

• DFA (non-standard definition):

A directed graph with vertices labelled from the set Tokens ∪ {Error, Discard,

Id or KW}, and edges labelled with sets of characters. The

outgoing edges from any one vertex are labelled with disjoint

sets. One vertex is specified as the start state.

• Note differences from standard definition:

• DFA is not “complete”: You can get to a state where

there are input characters left, but no edge that contains

the next character. This is deliberate.

• No “accept” states: Or rather, states labelled with Error

are reject states and every other state is an accept state.

CS 421 — Class 5–6, 1/29–31/13 — 8

Building a lexer by hand

• Given a DFA as above:

• Open source file, then:

1. From start state, run the DFA as long as possible.

2. When no more transitions are possible, do whatever the

label on the ending state says:

• Token: add to token list

• Error: print error message

• Discard: do nothing at all

• Id or KW: check for keyword; add keyword or ident

to token list.

3. Go to (1).

CS 421 — Class 5–6, 1/29–31/13 — 9

Lexing DFA

• The lexing DFA looks something like this:

• To build this, start by building separate DFAs for each token...

CS 421 — Class 5–6, 1/29–31/13 — 10

•;

Ex: DFA for operators

• <, <=, <<, <<=, <<<

•}

• +, ++, +=

CS 421 — Class 5–6, 1/29–31/13 — 11

Ex: DFA for integer constants

CS 421 — Class 5–6, 1/29–31/13 — 12

Ex: DFA for integer or floating-point

constants

CS 421 — Class 5–6, 1/29–31/13 — 13

Putting DFAs together

• Combine

individual DFAs by putting together their start

states (then making adjustments as necessary to ensure determinism).

• Following slides show how to implement the DFA.

• Number the nodes with positive numbers (0 for the start

state)

• Define transition : int * char → int. transition(s, c)

gives the transition function of the DFA; it returns −1 if

there is no transition from state s on character c.

CS 421 — Class 5–6, 1/29–31/13 — 14

Lexing functions (in pseudocode)

(state * string) get_next_token() {

s = 0; thistoken = "";

while (true) {

c = peek at next char

if (transition(s,c) == -1)

return (s, thistoken)

move c from input to thistoken

s = transition(s,c)

}

token list get_all_tokens() {

tokenlis = []

while (next char not eof) {

(s, thistoken) = get_next_token()

tokenlis = lexing_action(s, thistoken, tokenlis)

}

return tokenlis;

}

CS 421 — Class 5–6, 1/29–31/13 — 15

Lexing actions

lexing_action (state, thistoken, tokenlis) {

switch (label(state)) {

case ERROR: raise lex error

case DISCARD: (* do nothing *)

case ID_OR_KW: Check if tokenchars matches a keyword. If so,

return keyword::tokenlis, else return (Id tokenchars)::tokenlis}

default: return label(state)::tokenlis

}

}

CS 421 — Class 5–6, 1/29–31/13 — 16

DFAs for comments

• C++ comments

• C comments

• OCaml comments

• Not possible!

CS 421 — Class 5–6, 1/29–31/13 — 17

ocamllex

• A lexer generator accepts a list of regular expressions, each

with an associated token; creates a DFA like the one we built

above; and outputs a program that implements the DFA.

• ocamllex is a parser generator whose output is an OCaml

program.

• In the following slides, we describe the input specifications

read by ocamllex, and how to solve various lexing problems

in ocamllex.

• For full details, consult ocamllex manual.

CS 421 — Class 5–6, 1/29–31/13 — 18

Regular expressions in ocamllex

’c’

”c1c2 . . . cn”

r1 r2 . . .

r1 |r2 | . . .

r∗

r+

r?

character c

string (n ≥ 0)

sequence of regular expressions

alternation of regular expressions

Kleene star

same as rr∗

same as r|””

any character

eof

special eof character

[’c1’-’c2’] range of characters

[^’c1’-’c2’] complement of a range of characters

r as id

same as r, but id is bound to the characters matched by r

CS 421 — Class 5–6, 1/29–31/13 — 19

Regular expression examples

• Identifiers: [‘A’ - ‘Z’ ‘a’-‘z’] [‘A’ - ‘Z’ ‘a’-‘z’ ‘0’-‘9’ ‘ ’]*

• Integer literals: [‘0’-‘9’]+

• The keyword if: “if”

• The left-shift operator: “<<”

• Floating-point literals:

[‘0’-‘9’]+‘.’[‘0’-‘9’]+ ([‘E’ ‘e’] [‘+’ ‘-’]? [‘0’-‘9’]+)?

CS 421 — Class 5–6, 1/29–31/13 — 20

Ocamllex specifications

{ header }

let ident = regexp

...

rule main = parse

regexp { action }

| ...

| regexp { action }

{ trailer }

(auxiliary ocaml defs)

(regexp abbreviations)

(action is value returned

when regexp is matched)

(auxiliary ocaml defs)

• header can define names used in actions

• In addition to ordinary regular expression operators, regexps

can include the names given as abbreviations; these are just

replaced by their definitions.

• actions are ocaml expressions; all must be of the same type

CS 421 — Class 5–6, 1/29–31/13 — 21

Ocamllex specifications (cont.)

• ocamllex transforms this specification into a file that defines

the function main : Lexing.lexbuf → type-of-actions. The

name of main’s argument is lexbuf. lexbuf is not a list, but

a “stream” that delivers characters one at a time; the Lexing

module contains a number of functions on lexbufs, but you

won’t need to use them.

• trailer can define functions that call main.

• actions can call main, and can refer to variable lexbuf (the

input stream).

CS 421 — Class 5–6, 1/29–31/13 — 22

Ocamllex specifications (cont.)

• ocamllex puts all the regular expressions together using alternation, then transforms it to an NFA and then a DFA (as in

373). The DFA looks like the one we designed by hand: Each

state is associated with one of the regular expressions (or it’s

an error state). It runs the DFA as long as possible (just as

we did), and then takes the action associated with the state

it ended in.

• If two regexps match:

• If one matches a longer part of the input, that one wins.

• If they both match the exact same characters, the one

that occurs first in the specification wins. (E.g. keywords

should precede regexp for identifiers.)

CS 421 — Class 5–6, 1/29–31/13 — 23

Lexing functions

• ocamllex will define function main, but this is not a convenient functions to use. Instead, in trailer define more useful

functions that call main, e.g.

(* Remember: main: Lexing.lexbuf -> type-of-actions *)

(* Get first token in string s *)

let get_token s = let b = Lexing.from_string (s)

in main b

(* Get list of all tokens in buffer b *)

let rec get_tokens b =

match main b with

EOF -> []

| t -> t :: get_tokens b

(* Get list of all tokens in string s *)

let get_all_tokens s =

get_tokens (Lexing.from_string s)

CS 421 — Class 5–6, 1/29–31/13 — 24

Ocamllex example 1

• In this and following examples, the trailer is omitted; it will

contain definitions like the ones on the previous slide.

• What does this do?

rule main = parse

[’0’-’9’]+

| [’0’-’9’]+’.’[’0’-’9’]+

| [’a’-’z’]+

{ "Int" }

{ "Float" }

{ "String" }

get all tokens "94.7e5" returns ["Float"; "String"; "Int"]

CS 421 — Class 5–6, 1/29–31/13 — 25

Ocamllex examples 2 and 3

let digit = [’0’-’9’]

rule main = parse

digit+

| digit+’.’digit+

| [’a’-’z’]+

{ "Int" }

{ "Float" }

{ "String" }

get all tokens "94.7e5" returns ["Float"; "String"; "Int"]

{

type token = Int | Float | Ident

}

rule main = parse

[’0’-’9’]+

{ Int }

| [’0’-’9’]+’.’[’0’-’9’]+

{ Float }

| [’a’-’z’]+

{ Ident }

get all tokens "94.7e5" returns [Float; Ident; Int]

CS 421 — Class 5–6, 1/29–31/13 — 26

Ocamllex examples 4 and 5

{ type token = Int | Float | Ident }

rule main = parse

[’0’-’9’]+

{ Int }

| [’0’-’9’]+’.’[’0’-’9’]+

{ Float }

| [’a’-’z’]+

{ Ident }

| _

{ main lexbuf }

get all tokens "94.7e5" returns [Float; Ident; Int],

as does get all tokens "94.7 e 5" (which examples 1-3 couldn’t handle)

{ type token = Int of int | Float of float | Ident of string }

rule main = parse

[’0’-’9’]+ as x

{ Int (int_of_string x) }

| [’0’-’9’]+’.’[’0’-’9’]+ as x

{ Float (float_of_string x) }

| [’a’-’z’]+ as id

{ Ident id }

| _

{ main lexbuf }

get all tokens "94.7e5" returns [Float 94.7; Ident "e"; Int 5]

CS 421 — Class 5–6, 1/29–31/13 — 27

Ocamllex example 6

{ type token = Int of int | Float of float | Ident of string | EOF }

rule main = parse

[’0’-’9’]+ as x

| [’0’-’9’]+’.’[’0’-’9’]+ as x

| [’a’-’z’]+ as id

| _

| eof

get all tokens "94.7e5"

EOF]

CS 421 — Class 5–6, 1/29–31/13 — 28

returns

{

{

{

{

{

Int (int_of_string x) }

Float (float_of_string x) }

Ident id }

main lexbuf }

EOF }

[Float 94.7; Ident "e"; Int 5;

Difficult cases...

• Write regular expressions for various kinds of comments:

• C++-style (//...): “//” [ˆ ‘\n’]*

• C-style (/*... */, no nesting):

See ostermiller.org/findcomment.html

• OCaml-style ((*... *), with nesting): Impossible!

CS 421 — Class 5–6, 1/29–31/13 — 29

Simulating DFAs using lexing

functions in ocamllex

• Ocamllex specifications can have more than one function:

rule main = parse

... regexp’s and actions ...

and string = parse

... regexp’s and actions ...

and comment = parse

... regexp’s and actions ...

• Defines functions main, string, and comment.

• Each function has type Lexing.lexbuf → type-of-its-actions.

• Each function can call the other functions. E.g. main might

include:

"/*"

{ comment lexbuf }

CS 421 — Class 5–6, 1/29–31/13 — 30

Handling C-style comments

let open_comment = "/*"

let close_comment = "*/"

rule main = parse

digits ’.’ digits as f

| digits as n

| letters as s

| open_comment

| eof

| _

and comment = parse

close_comment

| _

CS 421 — Class 5–6, 1/29–31/13 — 31

{

{

{

{

{

{

Float (float_of_string f) }

Int (int_of_string n) }

Ident s }

comment lexbuf }

EOF }

main lexbuf }

{ main lexbuf }

{ comment lexbuf }

Handling OCaml-style comments

• Every lexing function has an argument named lexbuf of type

Lexing.lexbuf, but you can add additional arguments. This

allows you to handle nested comments (even though they are

not finite-state).

rule main = parse

| open_comment

{

| eof

{

| _

{

and comment depth = parse

open_comment

{

| close_comment

{

| _

CS 421 — Class 5–6, 1/29–31/13 — 32

comment 1 lexbuf}

[] }

main lexbuf }

comment (depth+1) lexbuf }

if depth = 1

then main lexbuf

else comment (depth - 1) lexbuf }

{ comment depth lexbuf }

Wrap-up

• On Tuesday and today, we discussed:

•

•

•

What a lexer does

How to write a lexer by hand

How to write a lexer using ocamllex

• We discussed it because:

•

•

This is the first step in a compiler

Knowing how to write a lexer is a useful skill

• In the next class, we will:

•

Talk about parsing

• What to do now:

•

•

MP3

Important: Review context-free grammars from CS 373

CS 421 — Class 5–6, 1/29–31/13 — 33