Lab Manual

Subject: Computational Lab-II/ CSL803

Natural Language Processing (NLP)

SEM./YEAR:8TH/4TH

RIZVI COLLEGE OF ENGINEERING

New Rizvi Educational Complex, Off Carter Rd, Bandra West, Mumbai-50.

S.NO.

Name of Experiment

01

02

Word Analysis

INDEX

Date of

Commencement

Date of

Completion

Grade Remark

Pre-processing of text

(Tokenization, Filtration, Script

Validation, Stop Word

Removal, Stemming)

03

Morphological Analysis

04

N-gram model

05

. POS tagging

06

Chunking

07

Named Entity Recognition

08

Case Study/ Mini Project

based on Application

mentioned in Module 6.

Name of student & Signature

Subject Teacher

-------------------------------------

Prof. Vikas R Dubey

RIZVI COLLEGE OF ENGINEERING, MUMBAI

DEPARTMENT OF COMPUTER ENGINEERING

New Rizvi Educational Complex, Off Carter Rd, Bandra West, Mumbai-50.

CERTIFICATE OF SUBMISSION

This is to certify that Mr./Mrs.-----------------------------------------------(Roll no.), student of department of computer engineering, studying in semester

8th (4th year) has submitted the term work/oral/practical/report for the subject

Computational lab-2(Natural Language Processing)for the academic session

2021-22(even) for the partial fulfilment of the bachelor of engineering (B.E.).

Subject In charge

Prof. Vikas R Dubey

Head of Department

Prof. Shiburaj Pappu

Principal

Dr.Varsha Shah

Syllabus

Laboratory Work/Case study/Experiments: Description: The Laboratory Work

(Experiments) for this course is required to be performed and to be evaluated in

CSL803: Computational Lab-II

The objective of Natural Language Processing lab is to introduce the students with the basics of NLP

which will empower them for developing advanced NLP tools and solving practical problems in this

field.

Reference for Experiments: http://cse24-iiith.virtual-labs.ac.in/#

Reference for NPTEL: http://www.cse.iitb.ac.in/~cs626-449

Sample Experiments: possible tools / language: R tool/ Python programming Language Note:

Although it is not mandatory, the experiments can be conducted with reference to any Indian

regional language.

1. Word Analysis

2. Pre-processing of text (Tokenization, Filtration, Script Validation, Stop Word Removal, Stemming)

3. Morphological Analysis

4. N-gram model

5. POS tagging

6. Chunking

7. Named Entity Recognition

8. Case Study/ Mini Project based on Application mentioned in Module 6.

EXPT NO.01

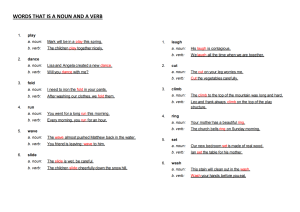

AIM: Word Analysis

Theory:

Analysis of a word into root and affix(es) is called as Morphological analysis of

a word. It is mandatory to identify root of a word for any natural language

processing task. A root word can have various forms. For example, the word

'play' in English has the following forms: 'play', 'plays', 'played' and 'playing'.

Hindi shows more number of forms for the word 'खेल' (khela) which is

equivalent to 'play'. The forms of 'खेल'(khela) are the following:

खेल(khela), खेला(khelaa), खेली(khelii), खेलूंगा(kheluungaa), खेलूंगी(kheluungii), खेलेगा(khelegaa),

खेलेगी(khelegii), खेलते(khelate), खेलती(khelatii), खेलने(khelane), खेलकर(khelakar)

For Telugu root ఆడడం (Adadam), the forms are the following::

Adutaanu, AdutunnAnu, Adenu, Ademu, AdevA, AdutAru, Adutunnaru, AdadAniki, Adesariki,

AdanA, Adinxi, Adutunxi, AdinxA, AdeserA, Adestunnaru, ...

Thus we understand that the morphological richness of one language might

vary from one language to another. Indian languages are generally

morphologically rich languages and therefore morphological analysis of

words becomes a very significant task for Indian languages.

Types of Morphology

Morphology is of two types,

1. Inflectional morphology

Deals with word forms of a root, where there is no change in lexical category. For example,

'played' is an inflection of the root word 'play'. Here, both 'played' and 'play' are verbs.

2. Derivational morphology

Deals with word forms of a root, where there is a change in the lexical category. For example,

the word form 'happiness' is a derivation of the word 'happy'. Here, 'happiness' is a derived

noun form of the adjective 'happy'.

Morphological Features:

All words will have their lexical category attested during morphological analysis.

A noun and pronoun can take suffixes of the following features: gender, number, person,

case

For example, morphological analysis of a few words is given below:

Languageinput:word

Hindi

लडके (ladake)

output:analysis

rt=लड़का(ladakaa), cat=n, gen=m, num=sg, case=obl

Hindi

Hindi

English

English

लडके (ladake) rt=लड़का(ladakaa), cat=n, gen=m, num=pl, case=dir

लड़क ूं (ladakoM)rt=लड़का(ladakaa), cat=n, gen=m, num=pl, case=obl

boy

rt=boy, cat=n, gen=m, num=sg

boys

rt=boy, cat=n, gen=m, num=pl

A verb can take suffixes of the following features: tense, aspect, modality, gender, number,

person

Languageinput:wordoutput:analysis

rt=हँस(hans), cat=v, gen=fem, num=sg/pl, per=1/2/3 tense=past,

Hindi

हँसी(hansii)

aspect=pft

English toys

rt=toy, cat=n, num=pl, per=3

'rt' stands for root. 'cat' stands for lexical category. Thev value of lexicat category can be

noun, verb, adjective, pronoun, adverb, preposition. 'gen' stands for gender. The value of

gender can be masculine or feminine.

'num' stands for number. The value of number can be singular (sg) or plural (pl).

'per' stands for person. The value of person can be 1, 2 or 3

The value of tense can be present, past or future. This feature is applicable for verbs.

The value of aspect can be perfect (pft), continuous (cont) or habitual (hab). This feature is

not applicable for verbs.

'case' can be direct or oblique. This feature is applicable for nouns. A case is an oblique case

when a postposition occurs after noun. If no postposition can occur after noun, then the case

is a direct case. This is applicable for hindi but not english as it doesn't have any

postpositions. Some of the postpsitions in hindi are: का(kaa), की(kii), के(ke), क (ko), में(meM)

Procedure:

STEP1: Select the language.

OUTPUT: Drop down for selecting words will appear.

STEP2: Select the word.

OUTPUT: Drop down for selecting features will appear.

STEP3: Select the features.

STEP4: Click "Check" button to check your answer.

OUTPUT: Right features are marked by tick and wrong features are marked by cross.

Execution and output:

Word Analysis

Select a Language which you know better

Select a word from the below dropbox and do a morphological analysis on that

word

---Select Word---

Select the Correct morphological analysis for the above word using dropboxes

(NOTE : na = not applicable)

WORD

ROOT

watching

watch

verb

CATEGORY

male

GENDER

plural

NUMBER

PERSON

second

na

CASE

TENSE

Check

past-perfect

Conclusion : Thus word analysis is done

EXPT NO.02

AIM: Pre-processing of text (Tokenization, Filtration, Script Validation, Stop Word Removal,

Stemming)

Theory: Preprocessing

a) Tokenizer:

In simple words, a tokenizer is a utility function to split a sentence into

words.keras.preprocessing.text.Tokenizer tokenizes(splits) the texts into tokens(words) while

keeping only the most occurring words in the text corpus.

#Signature:

Tokenizer(num_words=None, filters='!"#$%&()*+,-./:;<=>?@[\\]^_`{|}~\t\n',

lower=True, split=' ', char_level=False, oov_token=None, document_count=0, **kwargs)

The num_words parameter keeps a pre specified number of words in the text only. This is helpful as

we don’t want our models to get a lot of noise by considering words that occur very infrequently. In

real-world data, most of the words we leave using num_words param are normally misspells. The

tokenizer also filters some non-wanted tokens by default and converts the text into lowercase.

The tokenizer once fitted to the data also keeps an index of words (dictionary of words which we can

use to assign a unique number to a word) which can be accessed by tokenizer.word_index. The

words in the indexed dictionary are ranked in order of frequencies.

CODING/PROGRAM:

So the whole code to use tokenizer is as follows:

from keras.preprocessing.text import Tokenizer

## Tokenize the sentences

tokenizer = Tokenizer(num_words=max_features)

tokenizer.fit_on_texts(list(train_X)+list(test_X))

train_X = tokenizer.texts_to_sequences(train_X)

test_X = tokenizer.texts_to_sequences(test_X)

where train_X and test_X are lists of documents in the corpus.

Filtration:

NLP is short for Natural Language Processing. As you probably know, computers are not as great at

understanding words as they are numbers. This is all changing though as advances in NLP are

happening everyday. The fact that devices like Apple’s Siri and Amazon’s Alexa can (usually)

comprehend when we ask the weather, for directions, or to play a certain genre of music are all

examples of NLP. The spam filter in your email and the spellcheck you’ve used since you learned to

type in elementary school are some other basic examples of when your computer is understanding

language.

As a data scientist, we may use NLP for sentiment analysis (classifying words to have positive or

negative connotation) or to make predictions in classification models, among other things. Typically,

whether we’re given the data or have to scrape it, the text will be in its natural human format of

sentences, paragraphs, tweets, etc. From there, before we can dig into analyzing, we will have to do

some cleaning to break the text down into a format the computer can easily understand.

For this example, we’re examining a dataset of Amazon products/reviews which can be found and

downloaded for free on data.world. I’ll be using Python in Jupyter notebook.

Here are the imports used:

(You may need to run nltk.download() in a cell if you’ve never previously used it.)

Read in csv file, create DataFrame & check shape. We are starting out with 10,000 rows and 17

columns. Each row is a different product on Amazon.

I conducted some basic data cleaning that I won’t go into detail about now, but you can read my

post about EDA here if you want some tips.

In order to make the dataset more manageable for this example, I first dropped columns with too

many nulls and then dropped any remaining rows with null values. I changed

the number_of_reviews column type from object to integer and then created a new DataFrame

using only the rows with no more than 1 review. My new shape is 3,705 rows and 10 columns

and I renamed it reviews_df.

NOTE: If we were actually going to use this dataset for analysis or modeling or anything besides a

text preprocessing demo, I would not recommend eliminating such a large percent of the rows.

The following workflow is what I was taught to use and like using, but the steps are just general

suggestions to get you started. Usually I have to modify and/or expand depending on the text

format.

1. Remove HTML

2. Tokenization + Remove punctuation

3. Remove stop words

4. Lemmatization or Stemming

While cleaning this data I ran into a problem I had not encountered before, and learned a cool new

trick from geeksforgeeks.org to split a string from one column into multiple columns either on

spaces or specified characters.

The column I am most interested in is customer_reviews, however, upon

taking a closer look, it currently has the review title, rating, review date, customer name, and review

all in one cell separated by //.

Pandas .str.split method can be applied to a Series. First parameter is the repeated part of the string

you want to split on, n=maximum number of separations and expand=True will split up the sections

into new columns. I set the 4 new columns equal to a new variable called reviews.

Pandas .str.split method can be applied to a Series. First parameter is the repeated part of the string

you want to split on, n=maximum number of separations and expand=True will split up the sections

into new columns. I set the 4 new columns equal to a new variable called reviews.

Pandas .str.split method can be applied to a Series. First parameter is the repeated part of the string

you want to split on, n=maximum number of separations and expand=True will split up the sections

into new columns. I set the 4 new columns equal to a new variable called reviews.

Then you can rename the new 0, 1, 2, 3, 4 columns in the original reviews_df and drop the original

messy column.

I ran the same method over the new customer_name column to split on the \n \n and then dropped

the first and last columns to leave just the actual customer name. There is a lot more we could do

here if this were a longer article! Right off the bat, I can see the names and dates could still use some

cleaning to put them in a uniform format.

Removing HTML is a step I did not do this time, however, if data is coming from a web scrape, it is a

good idea to start with that. This is the function I would have used.

Pretty much every step going forward includes creating a function and then applying it to a series. Be

prepared, lambda functions will very shortly be your new best friend! You could also build a function

to do all of these in one go, but I wanted to show the break down and make them easier to

customize.

Remove punctuation:

One way of doing this is by looping through the Series with list comprehension and keeping

everything that is not in string.punctuation, a list of all punctuation we imported at the beginning

with import string.

“ “.join will join the list of letters back together as words where there are no spaces.

If you scroll up you can see where this text previously had commas, periods, etc.

However, as you can see in the second line of output above, this method does not account for user

typos. Customer had typed “grandson,am” which then became one word “grandsonam” once the

comma was removed. I still think this is handy to know in case you ever need it though.

Tokenize:

This breaks up the strings into a list of words or pieces based on a specified pattern using Regular

Expressions aka RegEx. The pattern I chose to use this time (r'\w') also removes punctuation and is a

better option for this data in particular. We can also add.lower() in the lambda function to make

everything lowercase.

see in line 2: “grandson” and “am” are now separate.

Some other examples of RegEx are:

‘\w+|\$[\d\.]+|\S+’ = splits up by spaces or by periods that are not attached to a digit

‘\s+’, gaps=True = grabs everything except spaces as a token

‘[A-Z]\w+’ = only words that begin with a capital letter.

Stop Word Removal:

We imported a list of the most frequently used words from the NL Toolkit at the beginning with from

nltk.corpus import stopwords. You can run stopwords.word(insert language) to get a full list for

every language. There are 179 English words, including ‘i’, ‘me’, ‘my’, ‘myself’, ‘we’, ‘you’, ‘he’, ‘his’,

for example. We usually want to remove these because they have low predictive power. There are

occasions when you may want to keep them though. Such as, if your corpus is very small and

removing stop words would decrease the total number of words by a large percent.

Stemming:

Stemming & Lemmatizing:

Both tools shorten words back to their root form. Stemming is a little more aggressive. It cuts off

prefixes and/or endings of words based on common ones. It can sometimes be helpful, but not

always because often times the new word is so much a root that it loses its actual meaning.

Lemmatizing, on the other hand, maps common words into one base. Unlike stemming though, it

always still returns a proper word that can be found in the dictionary. I like to compare the two to

see which one works better for what I need. I usually prefer Lemmatizer, but surprisingly, this time,

Stemming seemed to have more of an affect.

Lemmatizer : can barely even see a difference

You see more of a difference with Stemmer so I will keep that one in place. Since this is the final

step, I added " ".join() to the function to join the lists of words back together.

Now your text is ready to be analyzed! You could go on to use this data for sentiment analysis, could

use the ratings or manufacture columns as target variable based on word correlations. Maybe build

a recommender system based on user purchases or item reviews or customer segmentation with

clustering. The possibilities are endless!

CONCLUSION : Pre-processing of text (Tokenization, Filtration, Script Validation, Stop Word

Removal, Stemming)

EXPT. NO. 03

Aim: Morphological Analysis

Theory : In linguistics, morphology is the study of words, how they are formed, and their

relationship to other words in the same language. It analyzes the structure of words and parts

of words, such as stems, Morphological Analysis

Polyglot offers trained morfessor models to generate morphemes from words. The goal of the

Morpho project is to develop unsupervised data-driven methods that discover the regularities

behind word forming in natural languages. In particular, Morpho project is focussing on the

discovery of morphemes, which are the primitive units of syntax, the smallest individually

meaningful elements in the utterances of a language. Morphemes are important in automatic

generation and recognition of a language, especially in languages in which words may have many

different inflected forms.

root words, prefixes, and suffixes.

Morphology

Morphology is the part of linguistics that deals with the study of words, their internal structure and

partially their meanings. It refers to identification of a word stem from a full word form. A

morpheme in morphology is the smallest units that carry meaning and fulfill some grammatical

function.

Morphological analysis

Morphological Analysis is the process of providing grammatical information of a word given its suffix.

Models

There are three principal approaches to morphology, which each try to capture the distinctions

above in different ways. These are,

• Morpheme-based morphology also known as Item-and-Arrangement approach.

• Lexeme-based morphology also known as Item-and-Process approach.

• Word-based morphology also known as Word-and-Paradigm approach.

Morphological Analyzer

A morphological analyzer is a program for analyzing the morphology of an input word, it detects

morphemes of any text. Presently we are referring to two types of morph analyzers for Indian

languages: 1. Phrase level Morph Analyzer 2. Word level Morph Analyzer

Role of TDIL

Morphological analyzer is developed for some Indian languages under Machine Translation project

of TDIL.

CODING:

Languages Coverage

Using polyglot vocabulary dictionaries, we trained morfessor models on the most frequent words

50,000 words of each language.

from polyglot.downloader import downloader

print(downloader.supported_languages_table("morph2"))

1. Piedmontese language

2. Lombard language

3. Gan Chinese

4. Sicilian

5. Scots

6. Kirghiz, Kyrgyz

7. Pashto, Pushto

8. Kurdish

9. Portuguese

10. Kannada

11. Korean

12. Khmer

13. Kazakh

14. Ilokano

15. Polish

16. Panjabi, Punjabi

17. Georgian

18. Chuvash

19. Alemannic

20. Czech

21. Welsh

22. Chechen

23. Catalan; Valencian

24. Northern Sami

25. Sanskrit (Saṁskṛta)

26. Slovene

27. Javanese

28. Slovak

29. Bosnian-Croatian-Serbian 30. Bavarian

31. Swedish

32. Swahili

33. Sundanese

34. Serbian

35. Albanian

36. Japanese

37. Western Frisian

38. French

39. Finnish

40. Upper Sorbian

41. Faroese

42. Persian

43. Sinhala, Sinhalese

44. Italian

45. Amharic

46. Aragonese

47. Volapük

48. Icelandic

49. Sakha

50. Afrikaans

51. Indonesian

52. Interlingua

53. Azerbaijani

54. Ido

55. Arabic

56. Assamese

57. Yoruba

58. Yiddish

59. Waray-Waray

60. Croatian

61. Hungarian

62. Haitian; Haitian Creole 63. Quechua

64. Armenian

65. Hebrew (modern)

66. Silesian

67. Hindi

68. Divehi; Dhivehi; Mald... 69. German

70. Danish

71. Occitan

72. Tagalog

73. Turkmen

74. Thai

75. Tajik

76. Greek, Modern

77. Telugu

78. Tamil

79. Oriya

80. Ossetian, Ossetic

81. Tatar

82. Turkish

83. Kapampangan

84. Venetian

85. Manx

86. Gujarati

87. Galician

88. Irish

89. Scottish Gaelic; Gaelic 90. Nepali

91. Cebuano

92. Zazaki

93. Walloon

94. Dutch

95. Norwegian

96. Norwegian Nynorsk

97. West Flemish

98. Chinese

99. Bosnian

100. Breton

101. Belarusian

102. Bulgarian

103. Bashkir

104. Egyptian Arabic

105. Tibetan Standard, Tib...

106. Bengali

107. Burmese

108. Romansh

109. Marathi (Marāṭhī)

110. Malay

111. Maltese

112. Russian

113. Macedonian

114. Malayalam

115. Mongolian

116. Malagasy

117. Vietnamese

118. Spanish; Castilian

119. Estonian

120. Basque

121. Bishnupriya Manipuri 122. Asturian

123. English

124. Esperanto

125. Luxembourgish, Letzeb... 126. Latin

127. Uighur, Uyghur

128. Ukrainian

129. Limburgish, Limburgan...

130. Latvian

131. Urdu

132. Lithuanian

133. Fiji Hindi

134. Uzbek

135. Romanian, Moldavian, ...

Download Necessary Models

%%bash

polyglot download morph2.en morph2.ar

[polyglot_data] Downloading package morph2.en to

[polyglot_data] /home/rmyeid/polyglot_data...

[polyglot_data] Package morph2.en is already up-to-date!

[polyglot_data] Downloading package morph2.ar to

[polyglot_data] /home/rmyeid/polyglot_data...

[polyglot_data] Package morph2.ar is already up-to-date!

Example

from polyglot.text import Text, Word

words = ["preprocessing", "processor", "invaluable", "thankful", "crossed"]

for w in words:

w = Word(w, language="en")

print("{:<20}{}".format(w, w.morphemes))

preprocessing

['pre', 'process', 'ing']

processor

['process', 'or']

invaluable

['in', 'valuable']

thankful

['thank', 'ful']

crossed

['cross', 'ed']

If the text is not tokenized properly, morphological analysis could offer a smart of way of splitting

the text into its original units. Here, is an example:

blob = "Wewillmeettoday."

text = Text(blob)

text.language = "en"

text.morphemes

WordList([u'We', u'will', u'meet', u'to', u'day', u'.'])

!polyglot --lang en tokenize --input testdata/cricket.txt | polyglot --lang en morph | tail -n 30

which

which

India

In_dia

beat

beat

Bermuda

Ber_mud_a

in

in

Port

Port

of

of

Spain

Spa_in

in

in

2007

2007

,

,

which

which

was

wa_s

equalled

equal_led

five

five

days

day_s

ago

ago

by

by

South

South

Africa

Africa

in

in

their

t_heir

victory

victor_y

over

over

West

West

Indies

In_dies

in

in

Sydney

Syd_ney

.

.

Demo

This demo does not reflect the models supplied by polyglot, however, we think it is indicative of

what you should expect from morfessor

Demo

This is an interface to the implementation being described in the Morfessor2.0: Python

Implementation and Extensions for Morfessor Baseline technical report.

@InProceedings{morfessor2,

title:{Morfessor 2.0: Python Implementation and Extensions for Morfessor Baseline},

author: {Virpioja, Sami ; Smit, Peter ; Grönroos, Stig-Arne ; Kurimo, Mikko},

year: {2013},

publisher: {Department of Signal Processing and Acoustics, Aalto University},

booktitle:{Aalto University publication series}

}

Morphological Analyzer (Hindi - हहूं दी) - CFILT - IIT Bombay

www.cfilt.iitb.ac.in › ~ankitb

Morphological Analyzer Tool (Beta) ... View the results in the area under "Morph Output".

TRANSLITERATION ENGINE(For Easy Typing) Type in Hindi (Press ...

Search Results

Web results

Morphological analyzer - TDIL-DC

OUTPUT: : Morphological Analyzer Tool (Beta)

Top of Form

Input Word to be Analyzed

If you wish to analyze words in a batch, then Click Here

Morphologial Analysis:

Queried Word: साथ

------------------Set of Roots and Features are---------------------Token : साथ, Total Output : 3

[ Root : साथ, Class : , Category : nst, Suffix : Null ]

[ Gender : , Number : , Person : , Case : , Tense : , Aspect : , Mood : ]

[ Root : साथ, Class : , Category : adverb, Suffix : Null ]

[ Gender : , Number : , Person : , Case : , Tense : , Aspect : , Mood : ]

[ Root : साथ, Class : A, Category : noun, Suffix : Null ]

[ Gender : +masc, Number : +-pl, Person : x, Case : -oblique, Tense : x, Aspect : x, Mood : x ]

Instructions of Usage :

1.Type or paste Hindi word in the text area under "Input Word".

2.Click on Submit and wait.

3.View the results in the area under "Morph Output".

Bottom of Form

TRANSLITERATION ENGINE(For Easy Typing)

Type in Hindi (Press Ctrl+g to toggle between English and Hindi)

Morphological Analysis

Conclusion: Thus morphological analysis has been done.

Expt.No.4

Aim: N-gram model

Theory:

Introduction

Statistical language models, in its essence, are the type of models that assign probabilities to the

sequences of words. In this article, we’ll understand the simplest model that assigns probabilities to

sentences and sequences of words, the n-gram

You can think of an N-gram as the sequence of N words, by that notion, a 2-gram (or bigram) is a

two-word sequence of words like “please turn”, “turn your”, or ”your homework”, and a 3-gram (or

trigram) is a three-word sequence of words like “please turn your”, or “turn your homework”

Intuitive Formulation

Let’s start with equation P(w|h), the probability of word w, given some history, h. For example,

Here,

w = The

h = its water is so transparent that

And, one way to estimate the above probability function is through the relative frequency count

approach, where you would take a substantially large corpus, count the number of times you see its

water is so transparent that, and then count the number of times it is followed by the. In other

words, you are answering the question:

Out of the times you saw the history h, how many times did the word w follow it

Now, you can imagine it is not feasible to perform this over an entire corpus; especially it is of a

significant a size.

This shortcoming and ways to decompose the probability function using the chain rule serves as the

base intuition of the N-gram model. Here, you, instead of computing probability using the entire

corpus, would approximate it by just a few historical words

The Bigram Model

As the name suggests, the bigram model approximates the probability of a word given all the

previous words by using only the conditional probability of one preceding word. In other words, you

approximate it with the probability: P(the | that)

And so, when you use a bigram model to predict the conditional probability of the next word, you

are thus making the following approximation:

This assumption that the probability of a word depends only on the previous word is also known

as Markov assumption.

Markov models are the class of probabilisitic models that assume that we can predict the probability

of some future unit without looking too far in the past.

You can further generalize the bigram model to the trigram model which looks two words into the

past and can thus be further generalized to the N-gram model

Probability Estimation

Now, that we understand the underlying base for N-gram models, you’d think, how can we estimate

the probability function. One of the most straightforward and intuitive ways to do so is Maximum

Likelihood Estimation (MLE)

For example, to compute a particular bigram probability of a word y given a previous word x, you

can determine the count of the bigram C(xy) and normalize it by the sum of all the bigrams that

share the same first-word x.

Challenges

There are, of course, challenges, as with every modeling approach, and estimation method. Let’s

look at the key ones affecting the N-gram model, as well as the use of MLE

Sensitivity to the training corpus

The N-gram model, like many statistical models, is significantly dependent on the training corpus. As

a result, the probabilities often encode particular facts about a given training corpus. Besides, the

performance of the N-gram model varies with the change in the value of N.

Moreover, you may have a language task in which you know all the words that can occur, and hence

we know the vocabulary size V in advance. The closed vocabulary assumption assumes there are no

unknown words, which is unlikely in practical scenarios.

Smoothing

A notable problem with the MLE approach is sparse data. Meaning, any N-gram that appeared a

sufficient number of times might have a reasonable estimate for its probability. But because any

corpus is limited, some perfectly acceptable English word sequences are bound to be missing from it.

As a result of it, the N-gram matrix for any training corpus is bound to have a substantial number of

cases of putative “zero probability N-grams”

Sources:

[1] CHAPTER DRAFT — | Stanford Lagunita. https://lagunita.stanford.edu/c4x/Engineering/CS224N/asset/slp4.pdf

[2] Speech and Language Processing: An Introduction to Natural Language Processing,

Computational Linguistics, and Speech

Albert Au Yeung

Notes on machine learning and A.I.

•

•

•

•

Generating N-grams from Sentences Python

About

Archive

Github

LinkedIn

CODING: Generating N-grams from Sentences Python

Jun 3, 2018

N-grams are contiguous sequences of n-items in a sentence. N can be 1, 2 or any other positive

integers, although usually we do not consider very large N because those n-grams rarely appears in

many different places.

When performing machine learning tasks related to natural language processing, we usually need to

generate n-grams from input sentences. For example, in text classification tasks, in addition to using

each individual token found in the corpus, we may want to add bi-grams or tri-grams as features to

represent our documents. This post describes several different ways to generate n-grams quickly

from input sentences in Python.

The Pure Python Way

In general, an input sentence is just a string of characters in Python. We can use build in functions in

Python to generate n-grams quickly. Let’s take the following sentence as a sample input:

s = "Natural-language processing (NLP) is an area of computer science " \

"and artificial intelligence concerned with the interactions " \

"between computers and human (natural) languages."

If we want to generate a list of bi-grams from the above sentence, the expected output would be

something like below (depending on how do we want to treat the punctuations, the desired output

can be different):

[

"natural language",

"language processing",

"processing nlp",

"nlp is",

"is an",

"an area",

...

]

The following function can be used to achieve this:

import re

def generate_ngrams(s, n):

# Convert to lowercases

s = s.lower()

# Replace all none alphanumeric characters with spaces

s = re.sub(r'[^a-zA-Z0-9\s]', ' ', s)

# Break sentence in the token, remove empty tokens

tokens = [token for token in s.split(" ") if token != ""]

# Use the zip function to help us generate n-grams

# Concatentate the tokens into ngrams and return

ngrams = zip(*[token[i:] for i in range(n)])

return [" ".join(ngram) for ngram in ngrams]

Applying the above function to the sentence, with n=5, gives the following output:

>>> generate_ngrams(s, n=5)

['natural language processing nlp is',

'language processing nlp is an',

'processing nlp is an area',

'nlp is an area of',

'is an area of computer',

'an area of computer science',

'area of computer science and',

'of computer science and artificial',

'computer science and artificial intelligence',

'science and artificial intelligence concerned',

'and artificial intelligence concerned with',

'artificial intelligence concerned with the',

'intelligence concerned with the interactions',

'concerned with the interactions between',

'with the interactions between computers',

'the interactions between computers and',

'interactions between computers and human',

'between computers and human natural',

'computers and human natural languages']

The above function makes use of the zip function, which creates a generator that aggregates

elements from multiple lists (or iterables in genera). The blocks of codes and comments below offer

some more explanation of the usage:

# Sample sentence

s = "one two three four five"

tokens = s.split(" ")

# tokens = ["one", "two", "three", "four", "five"]

sequences = [tokens[i:] for i in range(3)]

# The above will generate sequences of tokens starting from different

# elements of the list of tokens.

# The parameter in the range() function controls how many sequences

# to generate.

#

# sequences = [

# ['one', 'two', 'three', 'four', 'five'],

# ['two', 'three', 'four', 'five'],

# ['three', 'four', 'five']]

bigrams = zip(*sequences)

# The zip function takes the sequences as a list of inputs (using the * operator,

# this is equivalent to zip(sequences[0], sequences[1], sequences[2]).

# Each tuple it returns will contain one element from each of the sequences.

#

# To inspect the content of bigrams, try:

# print(list(bigrams))

# which will give the following:

#

# [('one', 'two', 'three'), ('two', 'three', 'four'), ('three', 'four', 'five')]

#

# Note: even though the first sequence has 5 elements, zip will stop after returning

# 3 tuples, because the last sequence only has 3 elements. In other words, the zip

# function automatically handles the ending of the n-gram generation.

Using NLTK

Instead of using pure Python functions, we can also get help from some natural language processing

libraries such as the Natural Language Toolkit (NLTK). In particular, nltk has the ngrams function that

returns a generator of n-grams given a tokenized sentence. (See the documentaion of the

function here)

import re

from nltk.util import ngrams

s = s.lower()

s = re.sub(r'[^a-zA-Z0-9\s]', ' ', s)

tokens = [token for token in s.split(" ") if token != ""]

output = list(ngrams(tokens, 5))

The above block of code will generate the same output as the function generate_ngrams() as shown

above.

Lesson Goals

Like in Output Data as HTML File, this lesson takes the frequency pairs collected in Counting

Frequencies and outputs them in HTML. This time the focus is on keywords in context (KWIC) which

creates n-grams from the original document content – in this case a trial transcript from the Old

Bailey Online. You can use your program to select a keyword and the computer will output all

instances of that keyword, along with the words to the left and right of it, making it easy to see at a

glance how the keyword is used.

Once the KWICs have been created, they are then wrapped in HTML and sent to the browser where

they can be viewed. This reinforces what was learned in Output Data as HTML File, opting for a

slightly different output.

At the end of this lesson, you will be able to extract all possible n-grams from the text. In the next

lesson, you will be learn how to output all of the n-grams of a given keyword in a document

downloaded from the Internet, and display them clearly in your browser window.

Files Needed For This Lesson

•

obo.py

If you do not have these files from the previous lesson, you can download programming-historian-7,

a zip file from the previous lesson

From Text to N-Grams to KWIC

Now that you know how to harvest the textual content of a web page automatically with Python,

and have begun to use strings, lists and dictionaries for text processing, there are many other things

that you can do with the text besides counting frequencies. People who study the statistical

properties of language have found that studying linear sequences of linguistic units can tell us a lot

about a text. These linear sequences are known as bigrams (2 units), trigrams (3 units), or more

generally as n-grams.

You have probably seen n-grams many times before. They are commonly used on search results

pages to give you a preview of where your keyword appears in a document and what the

surrounding context of the keyword is. This application of n-grams is known as keywords in context

(often abbreviated as KWIC). For example, if the string in question were “it was the best of times it

was the worst of times it was the age of wisdom it was the age of foolishness” then a 7-gram for the

keyword “wisdom” would be:

the age of wisdom it was the

An n-gram could contain any type of linguistic unit you like. For historians you are most likely to use

characters as in the bigram “qu” or words as in the trigram “the dog barked”; however, you could

also use phonemes, syllables, or any number of other units depending on your research question.

What we’re going to do next is develop the ability to display KWIC for any keyword in a body of text,

showing it in the context of a fixed number of words on either side. As before, we will wrap the

output so that it can be viewed in Firefox and added easily to Zotero.

From Text to N-grams

Since we want to work with words as opposed to characters or phonemes, it will be much easier to

create n-grams using a list of words rather than strings. As you already know, Python can easily turn

a string into a list using the split operation. Once split it becomes simple to retrieve a subsequence of

adjacent words in the list by using a slice, represented as two indexes separated by a colon. This was

introduced when working with strings in Manipulating Strings in Python.

message9 = "Hello World"

message9a = message9[1:8]

print(message9a)

-> ello Wo

However, we can also use this technique to take a predetermined number of neighbouring words

from the list with very little effort. Study the following examples, which you can try out in a Python

Shell.

wordstring = 'it was the best of times it was the worst of times '

wordstring += 'it was the age of wisdom it was the age of foolishness'

wordlist = wordstring.split()

print(wordlist[0:4])

-> ['it', 'was', 'the', 'best']

print(wordlist[0:6])

-> ['it', 'was', 'the', 'best', 'of', 'times']

print(wordlist[6:10])

-> ['it', 'was', 'the', 'worst']

print(wordlist[0:12])

-> ['it', 'was', 'the', 'best', 'of', 'times', 'it', 'was', 'the', 'worst', 'of', 'times']

print(wordlist[:12])

-> ['it', 'was', 'the', 'best', 'of', 'times', 'it', 'was', 'the', 'worst', 'of', 'times']

print(wordlist[12:])

-> ['it', 'was', 'the', 'age', 'of', 'wisdom', 'it', 'was', 'the', 'age', 'of', 'foolishness']

In these examples we have used the slice method to return parts of our list. Note that there are two

sides to the colon in a slice. If the right of the colon is left blank as in the last example above, the

program knows to automatically continue to the end – in this case, to the end of the list. The second

last example above shows that we can start at the beginning by leaving the space before the colon

empty. This is a handy shortcut available to keep your code shorter.

You can also use variables to represent the index positions. Used in conjunction with a for loop, you

could easily create every possible n-gram of your list. The following example returns all 5-grams of

our string from the example above.

i=0

for items in wordlist:

print(wordlist[i: i+5])

i += 1

Keeping with our modular approach, we will create a function and save it to the obo.py module that

can create n-grams for us. Study and type or copy the following code:

# Given a list of words and a number n, return a list

# of n-grams.

def getNGrams(wordlist, n):

return [wordlist[i:i+n] for i in range(len(wordlist)-(n-1))]

This function may look a little confusing as there is a lot going on here in not very much code. It uses

a list comprehension to keep the code compact. The following example does exactly the same thing:

def getNGrams(wordlist, n):

ngrams = []

for i in range(len(wordlist)-(n-1)):

ngrams.append(wordlist[i:i+n])

return ngrams

Use whichever makes most sense to you.

A concept that may still be confusing to you are the two function arguments. Notice that our

function has two variable names in the parentheses after its name when we declared it: wordlist, n.

These two variables are the function arguments. When you call (run) this function, these variables

will be used by the function for its solution. Without these arguments there is not enough

information to do the calculations. In this case, the two pieces of information are the list of words

you want to turn into n-grams (wordlist), and the number of words you want in each n-gram (n). For

the function to work it needs both, so you call it in like this (save the following

as useGetNGrams.py and run):

#useGetNGrams.py

import obo

wordstring = 'it was the best of times it was the worst of times '

wordstring += 'it was the age of wisdom it was the age of foolishness'

allMyWords = wordstring.split()

print(obo.getNGrams(allMyWords, 5))

Notice that the arguments you enter do not have to have the same names as the arguments named

in the function declaration. Python knows to use allMyWords everywhere in the function

that wordlist appears, since this is given as the first argument. Likewise, all instances of n will be

replaced by the integer 5 in this case. Try changing the 5 to a string, such as “elephants” and see

what happens when you run your program. Note that because n is being used as an integer, you

have to ensure the argument sent is also an integer. The same is true for strings, floats or any other

variable type sent as an argument.

You can also use a Python shell to play around with the code to get a better understanding of how it

works. Paste the function declaration for getNGrams (either of the two functions above) into your

Python shell.

test1 = 'here are four words'

test2 = 'this test sentence has eight words in it'

getNGrams(test1.split(), 5)

-> []

getNGrams(test2.split(), 5)

-> [['this', 'test', 'sentence', 'has', 'eight'],

['test', 'sentence', 'has', 'eight', 'words'],

['sentence', 'has', 'eight', 'words', 'in'],

['has', 'eight', 'words', 'in', 'it']]

There are two concepts that we see in this example of which you need to be aware. Firstly, because

our function expects a list of words rather than a string, we have to convert the strings into lists

before our function can handle them. We could have done this by adding another line of code above

the function call, but instead we used the split method directly in the function argument as a bit of a

shortcut.

Secondly, why did the first example return an empty list rather than the n-grams we were after?

In test1, we have tried to ask for an n-gram that is longer than the number of words in our list. This

has resulted in a blank list. In test2 we have no such problem and get all possible 5-grams for the

longer list of words. If you wanted to you could adapt your function to print a warning message or to

return the entire string instead of an empty list.

We now have a way to extract all possible n-grams from a body of text. In the next lesson, we can

focus our attention on isolating those n-grams that are of interest to us.

Code Syncing

To follow along with future lessons it is important that you have the right files and programs in your

“programming-historian” directory. At the end of each chapter you can download the

“programming-historian” zip file to make sure you have the correct code. If you are following along

with the Mac / Linux version you may have to open the obo.py file and change

“file:///Users/username/Desktop/programming-historian/” to the path to the directory on your own

computer.

•

python-lessons8.py (zip sync)

TOOLS USED:

Ngram Analyzer - A Programmer's Guide to Data Mining

Conclusion

N-Grams model is one of the most widely used sentence-to-vector models since it captures the

context between N-words in a sentence. In this article, you saw the theory behind N-Grams model.

You also saw how to implement characters N-Grams and Words N-Grams model. Finally, you studied

how to create automatic text filler using both the approaches.

Expt.No.5

Aim: POS tagging

Theory: POS Tagging - Hidden Markov Model

A Hidden Markov Model (HMM) is a statistical Markov model in which the system being

modeled is assumed to be a Markov process with unobserved (hidden) states.In a regular

Markov model (Markov Model (Ref: http://en.wikipedia.org/wiki/Markov_model)), the state

is directly visible to the observer, and therefore the state transition probabilities are the only

parameters. In a hidden Markov model, the state is not directly visible, but output, dependent

on the state, is visible.

Hidden Markov Model has two important components1)Transition Probabilities: The one-step transition probability is the probability of transitioning

from one state to another in a single step.

2)Emission Probabilties: : The output probabilities for an observation from state. Emission

probabilities B = { bi,k = bi(ok) = P(ok | qi) }, where okis an Observation. Informally, B is the

probability that the output is ok given that the current state is qi

For POS tagging, it is assumed that POS are generated as random process, and each process

randomly generates a word. Hence, transition matrix denotes the transition probability from

one POS to another and emission matrix denotes the probability that a given word can have

a particular POS. Word acts as the observations. Some of the basic assumptions are:

1. First-order (bigram) Markov assumptions:

a. Limited Horizon: Tag depends only on previous tag

P(ti+1 = tk | t1=tj1,âŚ,ti=tji) = P(ti+1 = tk | ti = tj)

b. Time invariance: No change over time

P(ti+1 = tk | ti = tj) = P(t2 = tk | t1 = tj) = P(tj -> tk)

2. Output probabilities:

Probability of getting word wk for tag tj: P(wk | tj) is independent of oth

er tags or words!

Calculating the Probabilities

Consider the given toy corpus

EOS/eos

They/pronoun

cut/verb

the/determiner

paper/noun

EOS/eos He/pronoun

asked/verb

for/preposition

his/pronoun

cut/noun.

EOS/eos

Put/verb

the/determiner

paper/noun

in/preposition

the/determiner

cut/noun

EOS/eos

Calculating Emission Probability Matrix

Count the no. of times a specific word occus with a specific POS tag in the corpus.

Here, say for "cut"

count(cut,verb)=1

count(cut,noun)=2

count(cut,determiner)=0

... and so on zero for other tags too.

count(cut) = total count of cut = 3

Now, calculating the probability

Probability to be filled in the matrix cell at the intersection of cut and verb

P(cut/verb)=count(cut,verb)/count(cut)=1/3=0.33

Similarly,

Probability to be filled in the cell at he intersection of cut and determiner

P(cut/determiner)=count(cut,determiner)/count(cut)=0/3=0

Repeat the same for all the word-tag combination and fill the

Calculating Transition Probability Matrix

Count the no. of times a specific tag comes after other POS tags in the corpus.

Here, say for "determiner"

count(verb,determiner)=2

count(preposition,determiner)=1

count(determiner,determiner)=0

count(eos,determiner)=0

count(noun,determiner)=0

... and so on zero for other tags too.

count(determiner) = total count of tag 'determiner' = 3

Now, calculating the probability

Probability to be filled in the cell at he intersection of determiner(in the column) and verb(in

the row)

P(determiner/verb)=count(verb,determiner)/count(determiner)=2/3=0.66

Similarly,

Probability to be filled in the cell at he intersection of determiner(in the column) and noun(in

the row)

P(determiner/noun)=count(noun,determiner)/count(determiner)=0/3=0

Repeat the same for all the tags

Note: EOS/eos is a special marker which represents End Of Sentence.

Procedure:

STEP1: Select the corpus.

STEP2: For the given corpus fill the emission and transition matrix. Answers are rounded to 2

decimal digits.

STEP3: Press Check to check your answer.

Wrong answers are indicated by the red cell.

Execution and outputs:

POS Tagging - Hidden Markov Model

---Select Corpus---

EOS/eos Book/verb a/determiner car/noun EOS/eos Park/verb the/determiner car/noun

EOS/eos The/determiner book/noun is/verb in/preposition the/determiner car/noun

EOS/eos The/determiner car/noun is/verb in/preposition a/determiner park/noun

EOS/eos

Emission Matrix

book

determiner 0

park

car

is

in

a

the

0

0

0

0

0

0

noun

0

0

0

0

0

0

0

verb

prepositio

n

0

0

0

0

0

0

0

0

0

0

0

0

0

0

Transition Matrix

eos

0

eos

determiner

noun

verb

preposition

0

0

0

0

determiner

0

0

0

0

0

noun

0

0

0

0

0

verb

0

0

0

0

0

preposition

0

0

0

0

0

Check

Results: Thus POS(Part of speech) tags are studied and get the results

Expt.No.6

Aim: chunking

Theory:

Chunking

Chunking of text invloves

dividing a text into

syntactically correlated words.

Eg: He ate an apple to satiate his hunger.

[NP He ] [VP ate

] [NP an apple] [VP to satiate] [NP his hunger]

Eg: दरवाज़ा खुल गया

[NP दरवाज़ा] [VP खुल गया]

Chunk Types

The chunk types are based on the

syntactic category part. Besides the head a chunk also

contains modifiers (like determiners, adjectives,

postpositions in NPs).

The basic types of chunks in English are:

Chunk Type

Tag Name

1.

Noun

NP

2.

Verb

VP

3.

Adverb

ADVP

4.

Adjectivial

ADJP

5.

Prepositional

PP

The basic Chunk Tag Set for Indian Languages

Sl. No

1

2.1

2.2

2.3

3

4

Chunk Type

Noun Chunk

Finite Verb Chunk

Non-finite Verb Chunk

Verb Chunk (Gerund)

Adjectival Chunk JJP

Adverb Chunk

NP

Noun Chunks

Tag Name

NP

VGF

VGNF

VGNN

RBP

Noun Chunks will be given the tag NP and include

non-recursive noun phrases and postposition for Indian

languages and preposition for English. Determiners,

adjectives and other modifiers will be part of the noun

chunk.

Eg:

(इस/DEM हकताब/NN में/PSP)NP

'this' 'book' 'in'

((in/IN the/DT big/ADJ room/NN))NP

Verb Chunks

The verb chunks are marked as VP for English, however they

would be of several types for Indian languages. A verb

group will include the main verb and its auxiliaries, if

any.

For English:

I (will/MD be/VB loved/VBD)VP

The types of verb chunks and their tags are described below.

1. VGF

Finite Verb Chunk

The auxiliaries in the verb group mark the finiteness of the

verb at the chunk level. Thus, any verb group which is

finite will be tagged as VGF. For example,

Eg: मैंने घर पर (खाया/VM)VGF

'I erg''home' 'at''meal'

2. VGNF

'ate'

Non-finite Verb Chunk

A non-finite verb chunk will be tagged as VGNF.

Eg: सेब (खाता/VM हुआ/VAUX)VGNF लड़का जा रहा है

'apple' 'eating' 'PROG' 'boy' go' 'PROG' 'is'

3. VGNN Gerunds

A verb chunk having a gerund will be annotated as VGNN.

Eg: शराब (पीना/VM)VGNN सेहत के हलए हाहनकारक है sharAba

'liquor' 'drinking'

'heath'

'for' 'harmful'

JJP/ADJP

'is'

Adjectival Chunk

An adjectival chunk will be tagged as ADJP for English and

JJP for Indian languages. This chunk will consist of all

adjectival chunks including the predicative adjectives.

Eg:

वह लड़की है (सुन्दर/JJ)JJP

The fruit is (ripe/JJ)ADJP

Note: Adjectives appearing before a noun will be grouped

together within the noun chunk.

RBP/ADVP

Adverb Chunk

This chunk will include all pure adverbial phrases.

Eg:

वह (धीरे -धीरे /RB)RBP चल रहा था

'he' 'slowly' 'walk' 'PROG' 'was'

He walks (slowly/ADV)/ADVP

PP Prepositional Chunk

This chunk type is present

for only English and not for Indian languages. It consists

of only the preposition and not the NP argument.

Eg:

(with/IN)PP a pen

IOB prefixes

Each chunk has an open boundary and close boundary that

delimit the word groups as a minimal non-recursive

unit. This can be formally expressed by using IOB prefixes:

B-CHUNK for the first word of the chunk and I-CHUNK for each

other word in the chunk. Here is an example of the file

format:

Tokens

POS

Chunk-Tags

He

ate

an

apple

to

satiate

his

hunger

PRP

VBD

DT

NN

TO

VB

PRP$

NN

B-NP

B-VP

B-NP

I-NP

B-VP

I-VP

B-NP

I-NP

Execution and outputs: Chunking

";

Lexicon POS Chunk

John NNP

gave VBD

Mary NNP

a

DT

book

NN

Submit

Conclusion: Thus chunking of English is studied

Expt.No.7

Aim: Named Entity Recognition

Theory:

Named entity recognition (NER)is probably the first step towards information extraction that seeks

to locate and classify named entities in text into pre-defined categories such as the names of

persons, organizations, locations, expressions of times, quantities, monetary values, percentages,

etc. NER is used in many fields in Natural Language Processing (NLP), and it can help answering many

real-world questions, such as:

•

Which companies were mentioned in the news article?

•

Were specified products mentioned in complaints or reviews?

• Does the tweet contain the name of a person? Does the tweet contain this person’s location?

Execution and outputs:

from bs4 import BeautifulSoup

import requests

import redef url_to_string(url):

res = requests.get(url)

html = res.text

soup = BeautifulSoup(html, 'html5lib')

for script in soup(["script", "style", 'aside']):

script.extract()

return " ".join(re.split(r'[\n\t]+', soup.get_text()))ny_bb =

url_to_string('https://www.nytimes.com/2018/08/13/us/politics/peter-strzok-firedfbi.html?hp&action=click&pgtype=Homepage&clickSource=story-heading&module=first-columnregion&region=top-news&WT.nav=top-news')

article = nlp(ny_bb)

len(article.ents)

188

There are 188 entities in the article and they are represented as 10 unique labels:

labels = [x.label_ for x in article.ents]

Counter(labels)

Figure 10

The following are three most frequent tokens.

items = [x.text for x in article.ents]

Counter(items).most_common(3)

Figure 11

Let’s randomly select one sentence to learn more.

sentences = [x for x in article.sents]

print(sentences[20])

Figure 12

Let’s run displacy.render to generate the raw markup.

displacy.render(nlp(str(sentences[20])), jupyter=True, style='ent')

sentence and its dependencies look like:

displacy.render(nlp(str(sentences[20])), style='dep', jupyter = True, options = {'distance': 120})

Next, we verbatim, extract part-of-speech and lemmatize this sentence.

[(x.orth_,x.pos_, x.lemma_) for x in [y

for y

in nlp(str(sentences[20]))

if not y.is_stop and y.pos_ != 'PUNCT']]

Figure 15

dict([(str(x), x.label_) for x in nlp(str(sentences[20])).ents])

Named entity extraction are correct except “F.B.I”.

print([(x, x.ent_iob_, x.ent_type_) for x in sentences[20]])

Finally, we visualize the entity of the entire.

Conclusion: Thus Name Entity Recognition is done

Expt.No.8.

Aim: Case Study/ Mini Project based on Application mentioned in Module 6.

Theory: Mini project should contents

Abstract about project(1 or half page)

Aim ,objectives,methodology(proposed and existed),implementation plan, results and outputs)