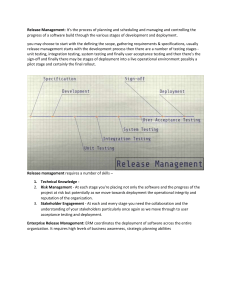

CHAPTER 5 Building and Testing

"Testing leads to failure, and failure leads to understanding."
Burt Rutan, American aerospace engineer

• 5.1 Introduction
• 5.2 Moving a System Through the Deployment Pipeline
• 5.3 Crosscutting Aspects
• 5.4 Development and Pre-commit Testing
• 5.5 Build and Integration Testing
• 5.6 UAT/Staging/Performance Testing
• 5.7 Production
• 5.8 Incidents
• 5.9 Summary

[Figure labels: CI (continuous integration), CDel (continuous delivery), CDep (continuous deployment)]

• What does the infrastructure need to support for development and deployment?
  • Team members can work on different versions of the system concurrently.
  • Code developed by one team member does not accidentally overwrite code developed by another team member.
  • Work is not lost if a team member suddenly leaves the team.
  • Team members' code can be easily tested.
  • Team members' code can be easily integrated with the code produced by other members of the same team.
  • The code produced by one team can be easily integrated with code produced by other teams.
  • An integrated version of the system can be easily deployed into various environments (e.g., testing, staging, and production).
  • An integrated version of the system can be easily and fully tested without affecting the production version of the system.
  • A recently deployed new version of the system can be closely supervised.
  • Older versions of the code are available in case a problem develops once the code has been placed into production.
  • Code can be rolled back in the case of a problem.

AUTOMATED DEPLOYMENT PIPELINE
[Figure: automated deployment pipeline. Labels: developer environment, Continuous Integration, Continuous Delivery, Continuous Deployment, quasi-production environment]

5.2 MOVING A SYSTEM THROUGH THE DEPLOYMENT PIPELINE
• Committed code does not move of its own volition; it is moved by tools.
• Tools are controlled by their programs (called scripts in this context) or by developer/operator commands.
1. Traceability
2.
The environment associated with each step of the pipeline

TRACEABILITY
• For any system in production, it is possible to determine exactly how it came to be in production.
• This requires keeping track not only of source code but also of all the commands to all the tools that acted on the elements of the system.
• Controlling tools by scripts is far better and easier than controlling tools by commands.
• The scripts and associated configuration parameters and tests should be kept under version control or in a configuration management system (e.g., Chef).
• Infrastructure-as-Code means that scripts and associated configuration parameters should also be tested, that changes to them should be regulated in some fashion, and that their different parts should have owners, just as application code does.
  • It allows an exact environment to be re-created (from local development to production).
  • It allows fast and flexible redeployment of your application in a new environment.
• Consider software project management tools like Apache Maven (or Gradle, Jenkins; all open source) to manage the complexities of third-party library usage, storage, and change.

THE ENVIRONMENT
• An executing system (environment) = executing code (VMs) + configuration + data + external systems
• How do these items work together when a system moves through the deployment pipeline?
  • Pre-commit
  • Build and integration testing
  • UAT/staging/performance testing
  • Production
• The configuration for each of these environments will be different, so it is important to keep changes in configuration to a minimum.
[Figure: a typical environment]

5.3 CROSSCUTTING ASPECTS
About Tests:
• A test harness is a collection of software (a test execution engine), data, and script repositories (written in Java, Python, Ruby, etc.).
• It is used to execute and automate a test.
• It can also use a test library to generate reports.
1. Test harnesses: essential for automating tests; a harness generates a report, identifies at least which tests failed, and drives most types of tests
2.
Negative tests: ensure that an application can gracefully handle invalid input or unexpected user behavior that violates its assumptions. For example, if a user tries to type a letter in a numeric field, the correct behavior would be to display the "Incorrect data type, please enter a number" message.
3. Regression testing: performed after changes have been made, to ensure that (1) no new bugs, or regressions, are introduced, and (2) no previously fixed bugs are reintroduced later on. Failures detected at later points in the deployment pipeline can be automatically recorded and added as new tests to unit or integration testing.
*Regression: a trend or shift toward a lower or less perfect state

About Deployment Pipeline:
4. Traceability of errors (also referred to as lineage or a form of provenance): which version of the source code is running?
  • Option 1: associate identifying tags with the packaged application, containers, or VM images
  • Option 2: have an external configuration management system that contains the provenance of each machine in production (e.g., Chef can keep track of all changes it applies to a machine)
5. Small components: small teams; microservices; fewer paths, interfaces, and parameters; easier to test with fewer test cases; additional challenges in integration
6.
Environment tear down: frees resources; avoids unintended interactions with resources; unused or untracked resources provide a possible attack surface for a malicious user

5.4 DEVELOPMENT AND PRE-COMMIT TESTING
• Version Control
  • 1950s: manual version control systems
  • 1980s: CVS (Concurrent Versions System), centralized
  • 2000: SVN (Subversion), centralized
  • 2005: Git, currently a popular free version control system, distributed (pull/push)
• A version control system is used to:
  • identify distinct versions of the source code
  • share code revisions between developers
  • record who made a change from one version to the next
  • record the scope of a change
• Branching
[Figure: branching. Labels: "trunk", a new branch, a version in production, developing new features, fixing critical errors, merging, released into production]
• Too many branches
  • It is easy to lose track of which branch you should be working on for a particular task.
  • It is easy to lose track of which branch a particular change should be made on.
  • Short-lived tasks should not create a new branch.
• Merging branches can be difficult
• Alternative to branching: having all developers work on the trunk directly; each developer deals with integration issues at each commit

FEATURE TOGGLE (OR SWITCH)
• It solves the problems of doing all of the development on one trunk:
  • A developer may be working on several different tasks within the same module simultaneously. When one task is finished, the module cannot be committed until the other tasks are completed.
  • Incomplete and untested code for the new feature will be introduced into the deployment pipeline.
• It is an "if" statement around immature code.

  If (Feature_Toggle) then
      new code    // a new feature that is ready for testing or production is enabled in the source code itself
  else
      old code    // the new feature is not included
  end;

• Common practice places the switches for features into configuration.

(Continued) FEATURE TOGGLE (OR SWITCH)
• It allows new releases, which may include unfinished new features, to be delivered continuously.
• Do not reuse toggle names.
  • The same name could mean something else in a previous version.
  • When a switch is on, some production servers may still be running an old version with a switch of the same name but for a different feature.
• Integrate the feature and get rid of the toggle and its tests as soon as is timely.
• Managing many feature toggles can become complicated; consider using a specialized tool or library.

CONFIGURATION PARAMETER
• It is an externally settable variable that changes the behavior of a system. Examples:
  • the language to expose to the user
  • the location of a data file
  • the thread pool size
  • the color of the background on the screen
  • the feature toggle settings
  • …
• It controls the relation of the system to its environment.
• It can also control behavior related to the stage of the deployment pipeline in which the system is currently run.

(Continued) CONFIGURATION PARAMETER
• The total number of parameters should be kept at a manageable level, even though more parameters provide more flexibility.
• Consider using good libraries for robust configuration handling:
  • checking that values have been specified (or default values are available) and are in the right format and range
  • ensuring that URLs are valid
  • checking whether settings are compatible with multiple configuration options
  • …
• Parameters can be grouped according to usage time (e.g., build time, deployment, startup, or runtime).
• Parameter values can be the same or different in different steps of the deployment pipeline. There are three cases: values are the same in multiple environments; values are different depending on the environment; values must be kept confidential.

TESTING DURING DEVELOPMENT AND PRE-COMMIT TESTS
• Testing processes during development:
1. Test-driven development: before writing the actual code for a piece of functionality, you develop an automated test for it
2.
Unit tests: each tests individual classes and methods without involving interactions with the file system or the database
• These tests are run automatically before a commit is executed, which enforces the pre-commit tests.
• In addition to a set of unit tests, pre-commit tests also include a few smoke tests, so that bugs that pass the unit tests (i.e., the service's own functionality is still performed) but break the overall system can be found long before integration testing.
*Smoke tests are typically used to test a product's basic or key features in a limited time. They help avoid running a full test on a product that cannot even complete its basic functions because of certain bugs.

PRE-COMMIT TESTS
• Code consistency
• Code style
• All unit tests pass
• Code coverage standards met (100%)
• Performance tests / benchmarks met
• Security checks pass
• Feature toggles on/off properly
• …

5.5 BUILD AND INTEGRATION TESTING
[Figure: Continuous Integration (CI) server. Labels: all source code, configuration, libraries, other parts of the system (DLL, HTML, JavaScript), "build" (compiling, packaging), executable artifact, integration testing]
• The "build" command invokes a set of scripts to control the CI server
  • Repeatable
  • Traceable

PACKAGING
• Depending on the production environment, packaging methods include:
1. Runtime-specific packages: Java archives (JAR), web application archives (WAR), and enterprise application archives (EAR) in Java, or .NET assemblies
2. Operating system packages: the target OS's own tools can be used for deployment if the application is packaged into software packages of the target OS (e.g., Debian or Red Hat)
3. VM images: can be instantiated for the various environments but require a compatible hypervisor or platform (e.g., Amazon Machine Images (AMIs), VMware, Hyper-V), and the test environments must also use the same cloud service as production
4.
Lightweight containers: require no hypervisor and do not contain the whole operating system of the host machine, but, like VM images, they contain all libraries and other software necessary to run the application

HEAVILY BAKED VS. LIGHTLY BAKED IMAGES (of VMs and containers)
Heavily baked images:
• For "immutable servers" (i.e., once a VM or container is started, no changes can be applied at runtime)
• The same image is used in all subsequent test phases and in production.
• Whenever changes to the packages are required, a new image is baked and tested.
• This increases trust in the image and removes uncertainty and delay during launch and runtime of a new VM or container.
Lightly baked images:
• Certain changes to the instances are allowed at runtime (e.g., restarting the web application server with new PHP code)
• Less confidence in the images, but more efficient in terms of time and money

CONTINUOUS INTEGRATION AND BUILD STATUS
• "Breaking the build" (i.e., the build is not good enough or has failed) has these results:
  • Other team members on the same branch also cannot build
  • Continuous integration testing is effectively shut down
  • Fixing the build becomes a high-priority item
• Test status can be shown in a variety of ways (electronic widgets, monitors, desktop notifications, etc.)
• If a project is split into multiple components, these components can be built separately.
  • In version control, they may be kept as one source code project or as several.
  • This also enables decentralized building.
  • You must ensure that only compatible versions of the components are deployed.

INTEGRATION TESTING
• The built executable artifact is tested with connections to external services or databases.
• It is very important to distinguish between production and test requests:
  • By providing mock services in a test network (separate from the real services)
  • By using a test version provided by the owner of the service
  • By marking test messages sent to that service
• Affecting the production database during a test is much worse than breaking the build.
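As a rough illustration of the third option above (marking test messages), here is a minimal Python sketch. The header name `X-Test-Request`, the `OrderStore` class, and the `handle_order` function are hypothetical names invented for this example, and the in-memory lists merely stand in for real databases.

```python
from dataclasses import dataclass, field

# Hypothetical header name used to mark test traffic (an assumption for this sketch).
TEST_HEADER = "X-Test-Request"


@dataclass
class OrderStore:
    """In-memory stand-in for a database, used only for illustration."""
    name: str
    rows: list = field(default_factory=list)

    def save(self, order: dict) -> None:
        self.rows.append(order)


def is_test_request(headers: dict) -> bool:
    """Return True when the request carries the test marker."""
    return headers.get(TEST_HEADER, "").lower() == "true"


def handle_order(headers: dict, order: dict,
                 production: OrderStore, sandbox: OrderStore) -> str:
    """Route marked test requests to the sandbox store, never to production."""
    target = sandbox if is_test_request(headers) else production
    target.save(order)
    return target.name


production_db = OrderStore("production")
sandbox_db = OrderStore("sandbox")

# An integration test marks its request; a real user request carries no marker.
handle_order({TEST_HEADER: "true"}, {"id": 1}, production_db, sandbox_db)
handle_order({}, {"id": 2}, production_db, sandbox_db)
```

In this sketch the routing decision is made in one place, so a test that forgets the marker is the only way test data could reach the production store; a mock service in a separate test network avoids even that risk.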
5.6 UAT/STAGING/PERFORMANCE TESTING
• The staging environment mirrors, as much as possible, the production environment.
• Tests that occur at this step:
1. User acceptance tests (UATs)
  • UAT is typically the final testing stage of the software development life cycle (SDLC).
  • This is when actual users test the software to see whether it can carry out, in real-world situations, the tasks it was designed for.
  • It tests adherence to customers' requirements, validating the changes that were made against the original requirements.
2. Automated acceptance tests
3. Smoke tests
  • run relatively fast
  • one for every user story
4. Nonfunctional tests (e.g., performance, security, capacity, and availability)
• "Have we produced the result that customers want?"
• Reverting to running a more thorough UAT test is not the highest cost. The loss of reputation is.

5.7 PRODUCTION
• Early Release Testing
1. Beta release
2. Canary testing
3. A/B testing
• Error Detection
  • Even systems that have passed all of their tests may still have functional and/or nonfunctional errors.
  • Monitoring of the system can be used to detect nonfunctional errors.
  • Once an error is diagnosed and repaired, its cause can be made one of the regression tests for future releases.
• Live Testing: a mechanism to continue testing even after a system has been placed in production
  • It actively perturbs the running system by injecting particular types of errors into the production system or by injecting delays into messages to simulate slow networks.
  • When one or more VM instances are killed, or messages are delayed due to injected errors or latency, overall metrics such as response time are monitored to ensure that the system is resilient to that type of failure.

SMOKE TEST
• It is a kind of software testing performed after a software build to ascertain that the critical functionalities of the program are working.
• It is executed "before" any detailed functional or regression tests are executed on the software build.
• Its purpose is to reject a badly broken application so that the QA team does not waste time installing and testing it.
• The test cases are chosen to cover the most important functionality or components of the system.
• It should not perform exhaustive testing.
• For example, a typical smoke test would verify that the application launches successfully, check that the GUI is responsive, etc.

CANARY TEST
• The word "canary" was selected because canaries were once used in coal mining (as an early-warning signal) to alert miners when toxic gases (e.g., CO) reached dangerous levels.
• It "pushes" code changes to a small group of end users who are unaware that they are receiving new code.
• It allows a quick evaluation of whether or not the code release provides the desired outcome.
• Its impact is relatively small.
• Changes can be reversed quickly should the new code prove to be buggy.
• It is often automated and is run after testing in a sandbox environment.

A/B TESTING
[Figure: example of A/B testing on a website. By randomly serving visitors two versions of a website that differ only in the design of a single button element, the relative efficacy of the two designs can be measured.]
*AWS adds user monitoring and A/B testing to CloudWatch

A/B TESTING
• Also known as bucket testing or split-run testing.
• It is a user experience (UX) research methodology.
• It consists of a randomized experiment with two variants, A and B, that might affect a user's behavior.
• It compares two versions of a single variable and determines which of the two variants is more effective before you commit to the change.
• Adding more variants to the test only makes the test more complex.

5.8 INCIDENTS
• Post-deployment errors
• Examples:
1. A developer connected test code to a production database.
2. Version dependencies existed among the components, but the deployment order did not follow them.
3. A change in a dependent system coincided with a deployment.
4.
Parameters for dependent systems were set incorrectly.

5.9 SUMMARY
• The deployment pipeline has at least five major steps: pre-commit, build and integration testing, UAT/staging/performance tests, production, and promoting to normal production.
• Each step operates within a different environment and with a different set of configuration parameter values.
• As the system moves through the pipeline, you can have progressively more confidence in its correctness.
• Feature toggles are used to make code inaccessible during production.
• Tests should be automated, run by a test harness, and report results back to the development team and other interested parties.

5.9 SUMMARY (continued)
• An architect involved in a DevOps project should ensure the following:
1. The various tools and environments are set up to enable their activities to be traceable and repeatable.
2. Configuration parameters are organized based on whether they will change for different environments and on their confidentiality.
3. Each step in the deployment pipeline has a collection of automated tests with an appropriate test harness.
4. Feature toggles are removed when the code they toggle has been placed into production and has been judged to be successfully deployed.
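The summary points about feature toggles and configuration parameters can be pictured with a small Python sketch. It assumes toggles are read from a dictionary of configuration values (for example, environment variables); the toggle names, the `FEATURE_` key prefix, and the functions `parse_bool`, `load_toggles`, and `checkout` are all hypothetical and chosen only for illustration.

```python
# Hypothetical registry of toggles known to this release; names are illustrative.
# Each name is used for exactly one feature and is not reused in later releases.
KNOWN_TOGGLES = {"new_checkout_2024": False, "fast_search_v2": False}


def parse_bool(raw: str) -> bool:
    """Accept a small, explicit set of spellings and reject everything else."""
    value = raw.strip().lower()
    if value in ("1", "true", "on"):
        return True
    if value in ("0", "false", "off"):
        return False
    raise ValueError(f"not a boolean: {raw!r}")


def load_toggles(config: dict) -> dict:
    """Build toggle settings from configuration, falling back to defaults."""
    toggles = dict(KNOWN_TOGGLES)
    for name in toggles:
        key = "FEATURE_" + name.upper()
        if key in config:
            toggles[name] = parse_bool(config[key])
    return toggles


def checkout(toggles: dict) -> str:
    """The toggle is an 'if' statement around the immature code."""
    if toggles["new_checkout_2024"]:
        return "new checkout flow"
    return "old checkout flow"


# Staging turns the feature on through configuration; production keeps the default.
staging = load_toggles({"FEATURE_NEW_CHECKOUT_2024": "on"})
production = load_toggles({})
print(checkout(staging), "|", checkout(production))
```

Keeping the defaults off and validating every configured value means a misspelled setting fails loudly at startup instead of silently enabling unfinished code. Once the feature is judged successfully deployed, the toggle entry and the `if` around it would both be deleted.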