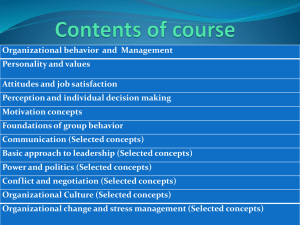

3. Borman, Daniel R. Ilgen, Richard J. Klimoski, Irving B. Weiner. Handbook of Psychology, Volume 12: Industrial and Organizational Psychology. Wiley (2003)
