Pinocchio: Action Representation Language for Cognitive Robotics


Cognitive Robotics 2 (2022) 119–131


Cognitive Robotics

journal homepage: http://www.keaipublishing.com/en/journals/cognitive-robotics/

Pinocchio: A language for action representation

Pietro Morasso a,1,∗, Vishwanathan Mohan b

a Italian Institute of Technology, RBCS (Robotics, Brain, and Cognitive Sciences) Department, Genoa, Italy

b University of Essex, School of Computer Science and Electronic Engineering, Wivenhoe Park, CO34SQ, UK

Article info

Keywords:

Action representation

Passive motion paradigm

Motor imagery

Embodied cognition

Neural simulation

Cognitive architectures

Abstract

The development of a language of action representation is a central issue for cognitive robotics,

motor neuroscience, ergonomics, sport, and arts with a double goal: analysis and synthesis of

action sequences that preserve the spatiotemporal invariants of biological motion, including the

associated goals of learning and training. However, the notation systems proposed so far only

achieved inconclusive results. By reviewing the underlying rationale of such systems, it is argued

that the common flaw is the choice of the ‘primitives’ to be combined to produce complex gestures:

basic movements with a different degree of “granularity”. The problem is that in motor cybernetics

movements do not add: whatever the degree of granularity of the chosen primitives their simple

summation is unable to produce the spatiotemporal invariants that characterize biological motion.

The proposed alternative is based on the Equilibrium Point Hypothesis and, in particular, on

a computational formulation named Passive Motion Paradigm, where whole-body gestures are

produced by applying a small set of force fields to specific key points of the internal body schema:

its animation by carefully selected force fields is analogous to the animation of a marionette using

wires or strings. The crucial point is that force fields do add, which suggests using force fields as a

consistent set of primitives instead of basic movements. This is the starting point for proposing a

force field-based language of action representation, named Pinocchio in analogy with the famous

marionette. The proposed language for action description and generation includes three main

modules: 1) Primitive force field generators, 2) a Body-Model to be animated by the primitive

generators, and 3) a graphical staff system for expressing any specific notated gesture. We suggest

that such language is a crucial building block for the development of a cognitive architecture of

cooperative robots.

1. Introduction

We may define an action as a purposive human motion, namely a sequence of motions of different body parts, including the use

of suitable tools, that allow a human skilled agent to achieve a goal, alone or in cooperation with another agent. This definition also

applies to the cooperative robots of Industry 4.0 or the next generations of advanced service robots. However, in the vast literature on

motor cognition, both in humans and robots, the two terms ‘motion’ and ‘action’ are frequently mixed and/or considered as synonyms.

We believe it is essential to keep them separate for investigating the principles for developing a cognitive architecture of cooperative

robots, grounded in human motor cognition.

∗ Corresponding author at: Italian Institute of Technology, Center for Human Technologies, Robotics, Brain and Cognitive Sciences Dept, Via Enrico Melen 83, Bldg B, Genova 16152, Italy.

E-mail address: pietro.morasso@iit.it (P. Morasso).

1 W: http://sites.google.com/site/pietromorasso/

https://doi.org/10.1016/j.cogr.2022.03.007

Received 14 March 2022; Received in revised form 30 March 2022; Accepted 30 March 2022

Available online 6 April 2022

2667-2413/© 2022 The Authors. Publishing Services by Elsevier B.V. on behalf of KeAi Communications Co. Ltd. This is an open access article

under the CC BY-NC-ND license (http://creativecommons.org/licenses/by-nc-nd/4.0/)


In a previous paper [1] it was argued that a “psychological” requirement in the design of cooperative robots, aimed at inducing

humans to accept robots as Trustable Partners, is to organize the Robotic Cogniware in such a way as to exhibit the same spatiotemporal

invariances that characterize “biological motion”. In this manner, robotic gestures become predictable and generally ‘readable’ in

the eyes of a human partner, thus establishing a solid basis of human-robot communication through body language. In this paper,

we address a further requirement, namely the Internal Representation of Actions which is a fundamental building block for planning,

reasoning, and supporting growth and retrieval of procedural and episodic memory. In this framework, we also believe that a robust

equivalence between the robotic and human mechanisms of action representation, on top of the equivalence at the gesture-formation

level [2], is essential for a more general equivalence at the motor cognitive level that is the cornerstone for human-robot partnership.

However, the representation of human actions is a topic that has been addressed in many fields and for a long time without

arriving at a satisfactory agreed formalisation. The representational issue is fundamental for capturing the compositional nature of

actions required for skilled behavior: generally, complex gestures are built by selecting “motor primitives”, adapting them to specific

task requirements, and concatenating them in space and time. In this paper we review the main formal systems that have been

developed so far: 1) the movement notation system proposed by Rudolph Laban [3], mainly focused on dance and choreography; 2)

the Therblig action analysis [4] for the optimization of industrial procedures; 3) the Human Action Language [5] intended to link

action description to natural language. The marginal success of these systems will be specifically analyzed in the following sections

but the main reason, in our opinion, is the choice of the motor primitives in terms of simple movements. The alternative solution,

proposed in this study, is to use force fields as motor primitives and this choice is grounded in motor neuroscience.

Historically, the interest in movement analysis at the beginning of the 20th century was motivated by the technical advances in

chronophotography made possible by Eadweard Muybridge and Etienne-Jules Marey at the end of the 19th century. Later on, the

development of motion capture systems provided the means for the precise acquisition of the kinematic and kinetic aspects of human

movements. However, the abundance of empirical data made available by such systems is not sufficient, per se, to understand the

deep organization of human actions, emerging from the basic challenge faced by the brain for supporting intelligent behavior and

defeating motor redundancy, namely what Nicholas Bernstein defined as the Degrees of Freedom Problem (DoF Problem) [6]. In our

opinion, a language for action representation should be grounded on a theory of motor control capable of addressing the DoF Problem.

The novel bio-inspired approach proposed in this paper is based on the Passive Motion Paradigm (PMP) of synergy formation [7–10],

which was recently shown to address the DoF Problem directly [11].

The proposed language of action representation is called Pinocchio, as a tribute to the most famous marionette, invented by Carlo

Collodi [12]. Pinocchio, regarded as a language, consists of the sequence of force fields (the basic motion primitives) to be applied

to Pinocchio, regarded as a marionette, representing the body model. The language is similar to the staff system used for music

notation, with the ‘notes’ corresponding to the force fields applied to the end-effectors of the body, analogous to the strings or threads

used for animating a marionette. A preliminary implementation of the proposed language for action description and generation is

formulated as a high-dimensional, non-linear ordinary differential equation in Matlab®(MathWorks). It includes three main modules:

1) Primitive force field generators, 2) a Body-Model to be animated by the primitive generators, and 3) a graphical staff system for

expressing any specific notated gesture.

2. Movement notation systems

In this section three paradigmatic approaches to movement notation are briefly reviewed, focusing on what we think are the main

weak points and thus providing the baseline for the force-field-based approach proposed in this paper.

2.1. Labanotation/Laban motion analysis

Labanotation, or Kinetography Laban, is a system of recording on paper human movement, with emphasis on dance, originally

proposed by Rudolph Laban [3,13]. The notation is somewhat similar to the staff system for music notation (musical pentagram),

originally invented by Guido d’Arezzo in the 11th century. The key point is that while music notation was quickly adopted as

a worldwide standard, Labanotation succeeded in covering only a minor portion of the fundamental functions of a notation system:

composition, performance, memorization, and retrieval.

The music staff system consists of five horizontal lines and four corresponding spaces and is essentially a graph: the vertical axis is

pitch, and its horizontal axis is time (running from left to right, in agreement with the Greek-Roman convention of written language).

Graphical symbols identify the notes with a duration coded by graphical markings. Similarly, the Laban staff system is a kind of graph

that plots the sequence of primitive motions to be performed by a dancer. The staff consists of three (instead of five) parallel lines

but they are vertical instead of horizontal, thus time runs bottom-up in the graph, the reason being that it is supposed to be ‘read’

by a moving performer; in other words, it is written from the performer’s point of view (see Fig. 1 for a comparison of the two staff

systems).

According to Labanotation, the center line corresponds to the spine of the dancer: actions taking place on the right side of the

body are written on the right side of the staff and vice versa. In particular, the space between the central line and either lateral line

is virtually divided into two or three vertical columns and additional columns occur outside the vertical lines. The shape and texture

of graphical symbols identify features of basic movements of the body parts and the corresponding duration is coded by the length

of the symbols. Since dance is usually accompanied by music, both the measures and the metric structure of the music notation,

represented by vertical bars, are reproduced in the Laban notation by corresponding horizontal bars.


Fig. 1. Example of music notation (top panel) and Labanotation (bottom panel). Please note that music notation, employing a five-line staff system,

is written horizontally and is read from left to right. In contrast, Labanotation, employing a three-line staff system, is usually written vertically and

should be read bottom-up, i.e. it is meant as a specific guide for the performer: for convenience, the depicted example is rotated 90°.

In summary, the Laban notation system consists of placing in the staff specific graphical symbols to represent movement components through which a performer can interpret and reproduce a notated dance sequence. Several graphical symbols are used for

characterizing the movement components and additional families of symbols, including pins and hooks, are available for representing

minor body parts and denoting details that modify the main action.

The problem with the Laban system and other notation systems that were developed more recently, such as the Benesh system

[14] or the Eshkol-Wachman system [15], is that few choreographers—and even fewer dancers—are literate in them. As currently

practiced, most use of Labanotation is for recording rather than creating and learning. The crucial point is that, in comparison with

standard music notation, these systems are rather static and provide quite poor 3D expressiveness. Moreover, although they are focused

on movements, they can only provide a very approximate representation of movement. Presently, the shaky tradition of movement

notation has even been challenged by new technological possibilities for the visual and digital representation of movement, including

computer graphics, motion capture systems, and animation technologies. Such systems are capable of storing the rotation patterns of

all the joints and the trajectories of body parts in three-dimensional space. The most recent attempt in this framework is the Motion

Bank project by William Forsythe [16]. However, the massive use of such technologies and the big data flow they generate runs into

an even greater challenge to movement representation: the DoF Problem mentioned above. In summary, the symbolic representation

of whole-body movements provided by the Laban notation is insufficient because it lacks detail. On the other hand, the

representation provided by motion capture systems is useless because it is too detailed and thus is opaque to the ‘cognitive content’

of the movements. In both cases, it is not the appropriate framework on which to ground the cognitive architecture of cooperative

robots.

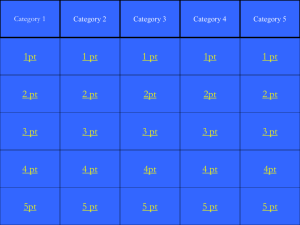

2.2. Therblig analysis

Unlike the Laban Movement Analysis, which focuses on the representation of whole-body movements, the Therblig Analysis deals with the representation of actions in specific industrial contexts, such as the assembly line or the construction scenario,

with the purpose of evaluating and optimizing motion economy in the workplace. This method of action representation was invented

by two industrial psychologists (Frank Bunker Gilbreth and Lillian Moller Gilbreth), from whom the name ‘Therblig’ is derived (it is

a reversal of the name Gilbreth, with ’th’ transposed).

In particular, Therbligs are defined as the basic motion elements or primitive actions required for a worker to perform a manual

operation or task. The key point of the method is that, according to the proposers [4,17], Therbligs can be numbered in the sense

that a small set of Therbligs is sufficient to characterize any human activity in industrial contexts. In particular, this set includes

18 elements, each describing a standardized activity: Search, Find, Select, Grasp, Hold, Transport loaded, Transport empty, Position,

Assemble, Use, Disassemble, Inspect, Preposition, Release load, Unavoidable delay, Avoidable delay, Plan, Rest. Each Therblig can also be

represented by an iconic symbol (Fig. 2), thus a given task can be visualized by a sequence of icons in the formalized language.

Therblig analysis implies a pyramidal view of the structure of work: work consists of tasks; a task consists of work elements; a work

element consists of a sequence of elementary actions, i.e. Therbligs.

A tool of the Therblig analysis is the use of SIMO Charts, where “SIMO” stands for Simultaneous-Motion Cycle. Such a chart

presents graphically the separable steps of each pertinent limb of the operator under study, for example the left and right hand. It

represents simultaneously the different Therbligs performed by different parts of the body of one or more operators on a common

time scale, measured in “Winks” (1 wink = 1/2000 min = 30 ms). The main goal of the analysis is to find unnecessary or inefficient

motions and to utilize or eliminate identified intervals of ‘wasted time’. In addition to the optimization of work efficiency, Therblig analysis has been applied in the context of an industrial engineering approach for Human Factors Design and Ergonomics, intended

to reduce fatigue and injury-producing motions.

Fig. 2. The set of 18 Therbligs. Courtesy of Prof. Christoph Roser.
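To make the SIMO chart idea concrete, the following minimal Python sketch stores one row of Therbligs per limb on a common time scale in winks and totals the delay intervals per limb. The chart layout, the function name `wasted_ms`, the example task, and the choice of which Therbligs count as ‘wasted time’ are assumptions made for illustration; they are not taken from the Gilbreth method or from this paper.

```python
from enum import Enum

WINK_MS = 60_000 / 2000  # 1 wink = 1/2000 min = 30 ms


class Therblig(Enum):
    SEARCH = "Search"
    FIND = "Find"
    SELECT = "Select"
    GRASP = "Grasp"
    HOLD = "Hold"
    TRANSPORT_LOADED = "Transport loaded"
    TRANSPORT_EMPTY = "Transport empty"
    POSITION = "Position"
    ASSEMBLE = "Assemble"
    USE = "Use"
    DISASSEMBLE = "Disassemble"
    INSPECT = "Inspect"
    PREPOSITION = "Preposition"
    RELEASE_LOAD = "Release load"
    UNAVOIDABLE_DELAY = "Unavoidable delay"
    AVOIDABLE_DELAY = "Avoidable delay"
    PLAN = "Plan"
    REST = "Rest"


# Assumption for illustration only: which Therbligs count as 'wasted time'.
WASTE = {Therblig.AVOIDABLE_DELAY, Therblig.UNAVOIDABLE_DELAY}


def wasted_ms(chart):
    """Per-limb total of wasted time, in milliseconds.

    `chart` maps a limb name to a list of (therblig, duration_in_winks)
    entries laid out consecutively on the common time scale.
    """
    return {
        limb: sum(winks for therblig, winks in row if therblig in WASTE) * WINK_MS
        for limb, row in chart.items()
    }


# Hypothetical two-hand task: the right hand waits while the left hand reaches.
chart = {
    "left hand": [(Therblig.TRANSPORT_EMPTY, 10), (Therblig.GRASP, 4), (Therblig.HOLD, 30)],
    "right hand": [(Therblig.AVOIDABLE_DELAY, 14), (Therblig.TRANSPORT_EMPTY, 10), (Therblig.ASSEMBLE, 20)],
}
print(wasted_ms(chart))
```

In this toy chart the right hand idles for 14 winks before it starts moving, which is the kind of interval the analysis aims to utilize or eliminate.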

Although the Therblig analysis has been and still is quite successful in the industrial engineering framework, its potential application in cognitive robotics will remain marginal until robotic cognitive architectures are consolidated. Consider SIMO, which is defined as

a ‘micro-motion’ study: this implies that the composing Therbligs are primitive motions that can be implemented right away without

any further analysis. In contrast, from the point of view of motor neuroscience and robot control, Therbligs are complex actions, not

primitive movements: Therbligs require multiple motions to be recruited and adapted carefully for different tasks and varying environmental conditions. For example, the ‘assemble’ or ‘disassemble’ Therblig implies a synergy formation and compositional process

with a structure that is impossible to pre-define and pre-assign.

2.3. Natural language and action representation

Since the time of Charles Darwin [18], there has been broad agreement about the idea that skilled tool use and fluent spoken language

evolved concurrently, based on the hypothesis that the evolution of the human hand, characterized by the opposable thumb, was

motivated by the need to facilitate the control of tools and the evolution of the human vocal tract was meant to allow the articulatory

gestures of spoken language. This is enough to suggest a parallel between language and action representation, although to many

linguists the analogy between linguistic syntax and action organization seems too loosely defined to carry much interpretive weight

[19].

However, two notable examples can be singled out that attempt to address the issue in a computational framework: 1) HAL

(Human Action Language [5]) for symbolically describing actions; 2) Development of a method for deriving linguistic descriptions

from whole-body motion descriptions [20–22]. In both cases, the initial step for the definition of a formal language is the identification

of whole-body motor primitives from an archive of motion capture data through segmentation and symbolization. The action symbols

can then be treated with well-established symbolic processing methods developed in computational linguistics. The two linguistic

approaches mentioned above differ in the complexity and dimensionality of the targeted symbolic motor primitives. In the HAL

formulation, the motor primitives are extracted from what is called ‘motor space’, i.e. whole-body joint angular rotations (including


angular speed and acceleration) vs. time stored in a motion capture data archive. Such a high-dimensional data set is analyzed using

statistical methods to identify invariant clusters: the clusters, which may be called ‘kinematic synergies’, are analogous to the ‘muscle

synergies’ [23] similarly extracted from high-dimensional data sets. In this framework, the motor primitives are kinematic or muscle

activation patterns that recur frequently in the (potentially infinite) domain of natural human motions. In the system for generating

action descriptions [20–22] the motor primitives identified after statistical analysis of the raw motion capture data are expressed as

basic actions such as ‘type’, ‘drink’ or ‘pick up’: in particular, in the quoted study 24 motor primitives were identified.

Labanotation and Therblig analysis were conceived at a time when motion capture technologies were not available. In

contrast, the two ‘linguistic’ systems reviewed in this section are motivated by the availability of such techniques, with the attempt

to bridge the gap between the representation of action by natural language, on one side, and sensory-motor capabilities on the other.

Although there are specific applications in which such developments can find practical usage, such as automatic video annotation,

still the gap between natural language and a language for natural actions is too wide to provide a credible and plausible basis for

conceiving, learning, reasoning, and executing general action sequences. An appropriate language for natural actions should achieve

a level of generative expressiveness similar to music notation, providing a powerful tool for both the composer and the performer.

Moreover, it should provide a generalized embodied framework in which the internal body image can play a double role: as a simulation

model, in preparation for acting, and as a musical instrument, for playing. In the two linguistic approaches summarized above the

linkage between natural language and natural actions is hidden in neural networks or statistical procedures and thus is opaque to

interpretation and generalization.

2.4. An alternative approach

Despite the differences, the attempts to define languages for natural action description, whether preceding or following the motion

capture era, share the notion that the molecular elements of the language, i.e. the motion primitives, are elementary motions, defined

in different manners and with different “granularity”. In our opinion, this is the common flaw of the attempts reviewed in this section

because in a complex and redundant kinematic structure such as the human body, motion primitives, whatever the specific definition, “do

not add”: gluing together motion segments extracted from continuous and fluid human gestures will never succeed in reproducing the

continuity, fluidity, and spatiotemporal invariants of biological motion. Such failure is analogous to the fact that by joining LEGO®

blocks it is possible to approximate any given shape but the overall result will never be smooth. Moreover, the smaller the motion

primitive, the greater the number of required elements to describe/reproduce a given pattern, with a vanishing cognitive relevance

of each composing primitive. The alternative view is that whatever the chosen granularity of action observation, the corresponding

primitive motions, detected and extracted from the high-dimensional kinematic patterns, are the effects, not the causes of the synergy

formation process: the causes are hidden force fields, low-dimensional and in small numbers. The crucial point is that force fields,

in contrast with motions, do add up, producing gestures that are continuous and smooth in any case, while remaining consistent

with the spatiotemporal invariants typical of biological motion. This view is a consequence of the Equilibrium Point Hypothesis [24–

26] which explains purposive movements as transitions from an equilibrium configuration to another in a multi-dimensional force

field. Moreover, in this framework, the number of primitives can be limited and their cognitive relevance is preserved. The proposed

Pinocchio language for action description and generation is based on the Passive Motion Paradigm (PMP), a computational derivation

of the Equilibrium Point Hypothesis and it includes three main modules: 1) Primitive force field generators, 2) a Body-Model to be

animated by the primitive generators, and 3) a graphical staff system for expressing any specific notated gesture.
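The claim that force fields add can be illustrated with a minimal Python sketch. It assumes, purely for illustration, two linear elastic fields F_i(p) = K_i (P_i − p) acting on a single point that yields to the net force with a scalar admittance. The names `spring_field`, `relax`, and `admittance` are hypothetical. Under these assumptions the summed field is again a single attractor, whose equilibrium is the stiffness-weighted mean of the two sources.

```python
def spring_field(source, stiffness):
    """Linear elastic force field pulling any point p towards `source`."""
    def field(p):
        return tuple(stiffness * (s - x) for s, x in zip(source, p))
    return field


def relax(p, fields, admittance=1.0, dt=0.01, steps=2000):
    """Let point p yield to the SUM of the given force fields (Euler steps)."""
    p = tuple(p)
    for _ in range(steps):
        forces = [f(p) for f in fields]
        total = tuple(sum(component) for component in zip(*forces))  # fields add
        p = tuple(x + dt * admittance * fx for x, fx in zip(p, total))
    return p


K1, P1 = 2.0, (1.0, 0.0)
K2, P2 = 1.0, (0.0, 1.0)
p_final = relax((0.0, 0.0), [spring_field(P1, K1), spring_field(P2, K2)])

# Predicted equilibrium of the summed field: stiffness-weighted mean of the sources.
p_star = tuple((K1 * a + K2 * b) / (K1 + K2) for a, b in zip(P1, P2))
print(p_final, p_star)
```

The trajectory produced by the summed field is a single smooth relaxation; nothing comparable is obtained by concatenating the two movements that each field would produce on its own.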

3. The PMP-based animated body model

The Passive Motion Paradigm is a synergy formation computational model [7–10] that generalizes the Equilibrium Point Hypothesis [24–26]: it can explain the spatiotemporal invariants of natural human actions, incorporating them implicitly in its computational

organization [11]. The body is modeled as a skeleton of rigid links interconnected, in a serial and/or parallel manner, through viscous-elastic joints whose rest length is shifted during the animation of the Body-Model in such a way as to recover overall equilibrium along

with the action. Thus, a high-dimensional elastic energy function characterizes the state of the network at any given instant: such state

will automatically evolve from an initial configuration to the nearest equilibrium point. The rationale of the PMP is that purposive

actions are represented by applying force fields to the Body-Model to attract it to target configurations. Such force fields are the motor

primitives of this mechanism of synergy formation, as an alternative to the definition of primitives as elementary movements adopted

by the movement notation systems reviewed in the previous section. The crucial point is that while primitive motions do not add,

force fields as primitive motor sources do add, providing a general compositional scheme. The basic mechanism of the PMP-Model is

as follows: starting from an equilibrium state the model reacts to the perturbation by systematically yielding to it, in such a way to

recover the lost equilibrium.

Fig. 3 illustrates the structure and the dynamics of primitive force field generators, to be instantiated and chained for expressing a

given composite gesture according to the Pinocchio language. Each generator is characterized by two elements: 1) a vector 𝑃𝑇 , which

is the final equilibrium point or source of the main force field, and 2) a time-base generator Γ(𝑡) which gates both the main and the

subsidiary force fields, specifying the initiation and the duration of each primitive generator. The main force field, modulated by the

stiffness parameter 𝐾𝑇 , generates a moving equilibrium point 𝑝𝑇 (𝑡) attracted by the final equilibrium 𝑃𝑇 , while the subsidiary force

field, modulated by the stiffness parameter 𝐾𝑒𝑒 , attracts the end-effector of the Body-Model 𝑝𝑒𝑒 (𝑡) towards the moving equilibrium

point 𝑝𝑇 (𝑡) by generating the force 𝐹𝑒𝑒 (𝑡) to be transmitted to the Body-Model. The Γ-command (see the Appendix for a simple

implementation of this function) synchronizes the two gradient-descent processes, in agreement with terminal-attractor dynamics [27,28]. A remarkable feature of the non-linear gating provided by the Γ-command is that it induces, in an implicit manner, the

bell-shaped speed profile of the moving equilibrium point generated by the primitive.

Fig. 3. (A): Primitive force field generator, characterized by a target equilibrium point 𝑃𝑇 and a moving equilibrium point 𝑝𝑇 (𝑡). Two synergic force fields are concurrently produced: one of them (𝐹𝑇 ) attracts the moving equilibrium point to the final point and the other (𝐹𝑒𝑒 ) attracts the end-effector to the moving equilibrium point. They are jointly activated and synchronized by the non-linear gating function Γ(𝑡). This generator transmits to the Body model the 𝐹𝑒𝑒 force field, to be combined with the other force fields converging to the same end-effectors, and receives back from the Body model the predicted time-varying position of the end-effector. (B): Time course of the Γ-function and corresponding symbol used by the Pinocchio notation system.
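The gated main force field of a primitive generator can be sketched in a few lines of Python. The Appendix implementation is not reproduced here. The sketch assumes one common form of time-base generator, Γ(t) = ξ̇(t) / (1 − ξ(t)) with ξ a minimum-jerk ramp from 0 to 1, and integrates ṗ_T = Γ(t) K_T (P_T − p_T) with Euler steps. The function names, the small regularizer `eps`, and the clamping of the step gain are assumptions introduced to keep the discrete integration well behaved near the end of the window; the subsidiary field 𝐹𝑒𝑒 is omitted.

```python
import math


def xi(t, t0, T):
    """Minimum-jerk ramp rising smoothly from 0 to 1 over [t0, t0 + T]."""
    s = min(max((t - t0) / T, 0.0), 1.0)
    return 6 * s**5 - 15 * s**4 + 10 * s**3


def xi_dot(t, t0, T):
    """Time derivative of the ramp; zero outside the activation window."""
    s = (t - t0) / T
    if s <= 0.0 or s >= 1.0:
        return 0.0
    return 30 * s**2 * (1 - s) ** 2 / T


def gamma(t, t0, T, eps=1e-9):
    """Assumed gating signal: grows without bound as t approaches t0 + T."""
    return xi_dot(t, t0, T) / (1.0 - xi(t, t0, T) + eps)


def run_primitive(p0, P_T, t0=0.0, T=1.0, K_T=1.0, dt=0.001):
    """Integrate the moving equilibrium point p_T(t) towards the target P_T."""
    p = list(p0)
    speeds = []
    for i in range(int(round(T / dt))):
        t = t0 + i * dt
        gain = min(gamma(t, t0, T) * K_T * dt, 1.0)  # clamp: never overshoot P_T
        step = [gain * (target - x) for target, x in zip(P_T, p)]
        p = [x + d for x, d in zip(p, step)]
        speeds.append(math.sqrt(sum(d * d for d in step)) / dt)
    return p, speeds


p_end, speeds = run_primitive(p0=(0.0, 0.0, 0.0), P_T=(0.3, 0.4, 0.0))
t_peak = speeds.index(max(speeds)) * 0.001
print(p_end, t_peak)
```

With K_T = 1 the distance to the target shrinks as 1 − ξ(t), so the equilibrium point arrives at the end of the window, neither earlier nor asymptotically, and its speed follows ξ̇(t), which is bell-shaped and peaks at mid-duration.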

Fig. 4 sketches a simplified Body-Model used for the simulations illustrated in the following: the model includes two arms, each

with 7 DoFs (see footnote 1), and the trunk, with 3 DoFs (see footnote 2), for a total of 17 DoFs. The 7 DoFs of each arm are serially connected, whereas the two

arms are connected in parallel to the common trunk. This Body-Model is animated through combinations of primitive force fields

applied to the two end-effectors. The model could be extended to include the two legs, with the corresponding force fields, and the

pelvis, animated through a field that expresses a balance subtask. In the simulations demonstrated in this study, the legs and the

pelvis are kept fixed.

In agreement with the PMP model, the force field transmitted to each arm (𝐹𝑒𝑒 ) is mapped into the corresponding torque field (𝜏𝑒𝑒 )

through the transpose Jacobian matrix of the arm (𝐽 𝑇 ). The 𝜏𝑒𝑒 torque field can be combined with other fields that express specific

task or body constraints, such as the Range-of-Motion constraint, represented in the figure by the RoM module. This module computes,

for each joint of the arm, a torque 𝜏𝑅𝑜𝑀 that repulses the corresponding joint angle 𝑞 away from the joint limits (see the Appendix for

a simple implementation of this function). The two torque vectors (𝜏𝑒𝑒 and 𝜏𝑅𝑜𝑀 ) are combined, jointly inducing the relaxation of the

arm (i.e. the time-derivative of the joint rotation vector 𝑞̇ ) to the goal-driven disturbance, modulated by the compliance matrix 𝐶 of

each arm. According to the Body-Model in Fig. 4 such a joint rotation pattern is mapped into the related motion of the end-effector

𝑝̇ 𝑒𝑒 through the same Jacobian, closing the loop with the primitive generators that animate the Body-Model. This loop includes a

coordinate change determined by the concurrent rotation of the trunk, expressed by the rotation matrix 𝑅 (function of the trunk

DoFs) to account for the fact that the arm Jacobians provide arm coordinates related to the trunk.
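The arm loop described above (force field, transpose Jacobian, torque field, compliance, joint motion, end-effector motion) can be sketched in Python under strong simplifications that are assumptions of this example and not the authors' implementation: a planar 3-link arm instead of the 7-DoF arm, a scalar compliance `C`, a fixed target without Γ-gating, a fixed trunk, and a simple RoM torque that is zero inside the joint limits. Link lengths, limits, and gains are hypothetical. The end-effector position is recomputed by forward kinematics at each step, which is equivalent to integrating the Jacobian mapping to first order.

```python
import math

LINKS = (0.30, 0.25, 0.15)  # link lengths in metres (hypothetical arm)
Q_MIN, Q_MAX = -2.5, 2.5    # joint limits in radians (hypothetical)


def forward(q):
    """End-effector position of the planar serial arm."""
    x = y = angle = 0.0
    for length, qi in zip(LINKS, q):
        angle += qi
        x += length * math.cos(angle)
        y += length * math.sin(angle)
    return x, y


def jacobian_columns(q):
    """Columns of the 2 x n Jacobian: d(end-effector position) / d(q_i)."""
    angles, a = [], 0.0
    for qi in q:
        a += qi
        angles.append(a)
    n = len(q)
    return [
        (-sum(LINKS[k] * math.sin(angles[k]) for k in range(i, n)),
         sum(LINKS[k] * math.cos(angles[k]) for k in range(i, n)))
        for i in range(n)
    ]


def rom_torque(qi, k_rom=5.0):
    """Assumed RoM field: pushes a joint back once it leaves [Q_MIN, Q_MAX]."""
    if qi > Q_MAX:
        return -k_rom * (qi - Q_MAX)
    if qi < Q_MIN:
        return k_rom * (Q_MIN - qi)
    return 0.0


def relax(q, target, K_ee=50.0, C=1.0, dt=0.01, steps=5000):
    """Let the arm yield to the end-effector force field until equilibrium."""
    q = list(q)
    for _ in range(steps):
        x, y = forward(q)
        F = (K_ee * (target[0] - x), K_ee * (target[1] - y))      # F_ee
        tau = [jx * F[0] + jy * F[1] + rom_torque(qi)             # J^T F + tau_RoM
               for (jx, jy), qi in zip(jacobian_columns(q), q)]
        q = [qi + dt * C * ti for qi, ti in zip(q, tau)]          # q_dot = C * tau
    return q


target = (0.40, 0.30)
q_final = relax((0.3, 0.5, 0.4), target)
error = math.dist(forward(q_final), target)
print(q_final, error)
```

No inverse kinematics is computed anywhere: the redundant arm (three joints for a two-dimensional target) settles into one of the many admissible configurations simply by yielding to the field.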

The trunk part of the body model participates in the whole-body synergy formation indirectly, because its orientation is perturbed

by the force fields transmitted by the two arms: they are transformed into the corresponding torque fields (𝜏𝑡𝑟𝑘 of the right and left

arms, respectively) and combined with a 𝜏𝑅𝑜𝑀 torque field that constrains the compliance of the trunk to the goal-driven perturbations

(𝐹𝑒𝑒 of the right arm and 𝐹𝑒𝑒 of the left arm). Two additional loops connect the dynamics of the trunk model to the dynamics of the

two arm models, in such a way to distribute the action to all the 17 DoFs of the Body-Model in a smooth and soft manner: the output

of the trunk-model, i.e. the three joint angles that characterize the trunk orientation in space, updates the rotation matrix 𝑅 that

reorients the position of the end-effector of each arm in the external space.
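The coordinate change performed by the rotation matrix 𝑅 can be sketched as follows. The composition order R = Rz(yaw) · Ry(pitch) · Rx(roll) and the function names are assumptions of this example, since the text does not state the convention used in the simulations. The sketch maps an end-effector position expressed in trunk coordinates into the external frame.

```python
import math


def rotation_matrix(yaw, pitch, roll):
    """Rotation matrix R = Rz(yaw) * Ry(pitch) * Rx(roll) (assumed convention)."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def to_external(R, p_trunk):
    """Express a point given in trunk coordinates in the external frame."""
    return tuple(sum(R[i][j] * p_trunk[j] for j in range(3)) for i in range(3))


# A hand held 1 m in front of the trunk, after the trunk yaws by 90 degrees.
R = rotation_matrix(math.pi / 2, 0.0, 0.0)
p_ext = to_external(R, (1.0, 0.0, 0.0))
print(p_ext)
```

Each time the trunk angles change, 𝑅 is recomputed, so the same arm configuration yields a different end-effector position in the external space; this is how the trunk takes part in reaching without being driven by a force field of its own.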

In summary, the Passive Motion Paradigm that animates the Body-Model is Passive in the sense that the synergy formation process

is the response of the elastic marionette to the perturbations generated by external or internal force fields, but this response consists of the active yielding of all the DoFs to the perturbation as it is distributed throughout the network. Remarkably, the computational process is distributed and runs through local interactions, without the need for any global scheduling, optimization mechanism, or ill-posed inverse transformations (like inverse kinematics), counting instead on the terminal attractor dynamics induced by the gating action of the force fields through the Γ-functions. Moreover, the local interactions can be modulated by tuning the stiffness and compliance parameters, which can be changed over a large range without pushing the overall dynamics to instability.

¹ The 7 DoFs of each arm, referred to the trunk, are the yaw, pitch & roll angles of the shoulder, the roll angle of the elbow, and the yaw, pitch & roll angles of the wrist.

² Yaw, pitch & roll angles of the trunk, referred to the environment.

Fig. 4. Body-Model. It includes three modules related, respectively, to the right arm (7 DoFs), left arm (7 DoFs), and trunk (3 DoFs). Animation of the Body-Model is a process organized according to the PMP: the force fields 𝐹𝑒𝑒 transmitted to the body model by the primitive generators on either arm, expressing the planned equilibrium points, are mapped into the corresponding torque fields 𝜏𝑒𝑒 by the transpose Jacobians 𝐽ᵀ of the two arms; these fields are combined with other fields (𝜏𝑅𝑜𝑀) that enforce the satisfaction of the RoM (Range of Motion) of the DoFs of each arm. The combined torque field of each arm induces a yielding motion 𝑞̇ of the arm joints and then the related motion of the end-effector 𝑝̇𝑒𝑒 through the same Jacobian, closing the loop with the primitive generators while taking into account the rotation of the trunk (rotation matrix 𝑅). The trunk motion is driven by the combination of the two torque fields (𝜏𝑡𝑟𝑘−𝑙𝑒𝑓𝑡 and 𝜏𝑡𝑟𝑘−𝑟𝑖𝑔ℎ𝑡) induced by the force field generators of the two arms, through the corresponding Jacobians, in parallel with the RoM functions of the trunk.
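The core of the PMP loop of Fig. 4 (force field, transpose Jacobian, compliant yielding of the joints, forward kinematics) can be illustrated with a minimal Python sketch. It is not the authors' Matlab implementation: it assumes a hypothetical planar 2-link arm instead of the 17-DoF Body-Model, omits the Γ-gating, the RoM fields and the trunk, and uses invented link lengths, gains and time step.

```python
import numpy as np

# Hypothetical planar 2-link arm; all numbers below are illustrative assumptions.
L1, L2 = 0.3, 0.3   # link lengths [m]
K_EE = 100.0        # stiffness of the attractive force field
C_ARM = 1.0         # joint compliance (admittance)
DT = 1e-3           # forward-Euler time step [s]


def forward_kinematics(q):
    """End-effector position p(q) of the planar arm."""
    return np.array([
        L1 * np.cos(q[0]) + L2 * np.cos(q[0] + q[1]),
        L1 * np.sin(q[0]) + L2 * np.sin(q[0] + q[1]),
    ])


def jacobian(q):
    """Jacobian J(q) = dp/dq of the planar arm."""
    s1, c1 = np.sin(q[0]), np.cos(q[0])
    s12, c12 = np.sin(q[0] + q[1]), np.cos(q[0] + q[1])
    return np.array([
        [-L1 * s1 - L2 * s12, -L2 * s12],
        [L1 * c1 + L2 * c12, L2 * c12],
    ])


def pmp_step(q, p_target):
    """One relaxation step: force field -> torque field -> joint yielding."""
    p_ee = forward_kinematics(q)
    force = K_EE * (p_target - p_ee)   # field pulling the end-effector to the target
    torque = jacobian(q).T @ force     # tau = J^T F, no matrix inversion involved
    q_dot = C_ARM * torque             # the joints yield to the torque field
    return q + DT * q_dot


def relax(q0, p_target, n_steps=5000):
    """Iterate the loop until the arm has relaxed toward the equilibrium point."""
    q = np.array(q0, dtype=float)
    for _ in range(n_steps):
        q = pmp_step(q, p_target)
    return q


q_final = relax(q0=[0.3, 1.2], p_target=np.array([0.35, 0.25]))
print(forward_kinematics(q_final))
```

Only the transpose of the Jacobian appears in the loop, which is why no inverse kinematics is needed: the joint configuration is simply whatever the arm relaxes into under the field.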

As already emphasized, the Body-Model of the figure is just an example that includes the trunk and two arms. It can be generalized

to include other body parts, such as legs, head, and hands. The recruitment and coordination of the corresponding DoFs require

additional force fields but the overall animation of the Body-Model provided by the PMP mechanism is the same, namely a high-dimensional, non-linear dynamical system characterized by attractor dynamics, driven by a small set of low-dimensional primitives.

4. The graphical staff system of the Pinocchio action-representation language

The animated Body-Model formalism illustrated in the previous section is the starting point for developing a compositional framework that allows complex arbitrary gestures to be represented and reproduced by combining and chaining primitive gestures, i.e. the

Pinocchio action representation language. For the Body-Model of Fig. 4, the basic action primitives are sequences of force field generators (PGs), one sequence for the right hand and the other for the left hand. Fig. 5 (top panel) clarifies in which manner a composite

gesture is organized and represented. First of all, an ordered sequence of PGs must be selected (like the notes of a musical phrase).

Each PG of the sequence is characterized by two items: 1) a 3D vector that identifies the origin of the force field (𝑃1 , 𝑃2 , … 𝑃𝑛 )

and 2) a Γ-function, identified by a start time (𝑡1 , 𝑡2 , … 𝑡𝑛 ) and a duration (𝑇1 , 𝑇2 , … 𝑇𝑛 ), that has the function of activating the

corresponding PG. The output of each primitive is a time-varying force field (𝐹1 , 𝐹2 , … 𝐹𝑛 ), gated by the corresponding Γ-functions.

The sequence of PGs is connected to the Body-Model following the principle that primitive force fields add up while the primitive

motions do not: this means that the output force fields of the PGs are added, thus stimulating the body model, for each time instant,

with the composition of the active primitives. At the same time, the Body-Model returns to each active PG the current position of the

corresponding end-effector, thus closing the loop between the rolling list of PGs and the Body-Model.
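The principle that force fields add while motions do not can be sketched as follows. This is a hedged Python illustration, not the paper's code: the class `PG`, the helper names, the unit stiffness and admittance, and the numerical guard `eps` are assumptions, and a massless compliant end-effector stands in for the full Body-Model. The gate follows the Γ-function defined in Appendix A1.

```python
import numpy as np
from dataclasses import dataclass


@dataclass
class PG:
    """Primitive generator: origin of the force field plus the timing of its gate."""
    origin: np.ndarray  # P_i, 3D position of the equilibrium point
    t_start: float      # t_i, start time of the gate [s]
    duration: float     # T_i, duration of the gate [s]


def gate(t, pg, eps=1e-12):
    """Gamma-function: xi_dot / (1 - xi) inside the activation window, 0 outside."""
    g = (t - pg.t_start) / pg.duration
    if g <= 0.0 or g >= 1.0:
        return 0.0
    xi = 6 * g**5 - 15 * g**4 + 10 * g**3
    xi_dot = 30 * g**2 * (1 - g) ** 2 / pg.duration
    return xi_dot / max(1.0 - xi, eps)  # eps only guards against division by zero


def total_force(t, p_ee, pgs, stiffness=1.0):
    """Force fields add: sum the gated fields of all PGs at the same position."""
    force = np.zeros(3)
    for pg in pgs:
        force += gate(t, pg) * stiffness * (pg.origin - p_ee)
    return force


def simulate(pgs, p0, t_end, dt=1e-4, admittance=1.0):
    """Forward-Euler integration of the stand-in end-effector driven by the PGs."""
    p = np.array(p0, dtype=float)
    n_steps = int(round(t_end / dt))
    path = np.empty((n_steps + 1, 3))
    path[0] = p
    for k in range(n_steps):
        # The current position is fed back to every active PG at each step.
        p = p + dt * admittance * total_force(k * dt, p, pgs)
        path[k + 1] = p
    return path


# Two partially overlapped PGs; origins and timings are invented for illustration.
pgs = [PG(np.array([0.3, 0.0, 0.2]), 0.0, 1.0),
       PG(np.array([0.1, 0.4, 0.5]), 0.5, 1.0)]
path = simulate(pgs, p0=[0.0, 0.0, 0.0], t_end=1.6)
print(path[10000], path[-1])
```

In this sketch the end-effector passes close to the first origin when the first gate closes and comes to rest at the second origin, although the two fields were simply summed while both gates were open.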

The bottom part of Fig. 5 sketches the corresponding staff system that includes three lines: one for the left end-effector, one for

the right end-effector, and the third one for the trunk. The figure also shows that different PGs can be separated in time or partially

overlapped. As clarified by the Body-Model in Fig. 4 the input force field of each arm drives the relaxation of the related joint angular

vector and thus the evolution of the end-effector, which is relayed back to the force field generators. In summary, the interaction

between the animated Body-Model and the primitive modules is characterized by the fact that the forces are added and the position of the end-effector is broadcast to the active PGs. The fact that subsequent PGs can overlap in time or be separated suggests an analogy with musical notation: overlapping PGs correspond to a chord and separated PGs correspond to single notes.

Fig. 5. Action representation and organization of the synergy formation process for the 17 DoFs Body-Model according to the Pinocchio language. The composite action is driven by sequences of primitive force field generators (𝑃𝐺1, 𝑃𝐺2, …) applied to both hands and transmitted to the Body-Model in an additive manner (upper portion of the figure). The bottom part of the figure shows the graphical rendering of a composite gesture expressed as a sequence of PGs that may be separated or partially overlapped in time: this Pinocchio-graph is similar to the staff system of music notation.

4.1. Pinocchio notation of a simple 9-primitive string

In order to clarify how the approach described above can be formalized in a prototype of action language let us consider a simple

example that includes 9 primitives for each arm. Fig. 6 shows the corresponding script: the action notation for representing this

bimanual gesture is similar to the musical staff system with three lines that correspond, respectively, to the right hand, left hand, and

trunk. Time runs from left to right.

In analogy with the graphical symbols used in music notation for identifying a sound primitive (a note), the Γ-function of each

primitive is graphically represented with an isosceles triangle, whose vertex identifies the initiation of the primitive generator and

whose height indicates the corresponding duration. In the example, the first two primitives overlap in time; they are followed by a string of three overlapped primitives and then, after a separation, by the final string of overlapped primitives. In this example, the timing of one hand is replicated for the other, but this is not necessary.
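The timing information carried by one staff line can be sketched as a small data structure. The Python fragment below is only an illustration: the class `Note`, the function `overlapped_strings`, and the start times are hypothetical (the start times are invented so that the strings contain 2, 3, and the remaining 4 of the 9 primitives), while the 1 s durations follow the caption of Fig. 8.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Note:
    """Timing of one PG on a staff line (the triangle of the notation)."""
    label: str
    t_start: float
    duration: float

    @property
    def t_end(self) -> float:
        return self.t_start + self.duration


def overlapped_strings(notes):
    """Split a staff line into maximal runs of PGs chained by temporal overlap."""
    groups = []
    group_end = float("-inf")
    for note in sorted(notes, key=lambda n: n.t_start):
        if note.t_start < group_end:   # overlaps the current run: same string
            groups[-1].append(note)
            group_end = max(group_end, note.t_end)
        else:                          # separated in time: a new string starts
            groups.append([note])
            group_end = note.t_end
    return groups


# Invented start times; every PG lasts 1 s.
starts = [0.0, 0.6, 2.0, 2.6, 3.2, 5.0, 5.6, 6.2, 6.8]
right_hand = [Note(f"PG{i + 1}", t, 1.0) for i, t in enumerate(starts)]
sizes = [len(group) for group in overlapped_strings(right_hand)]
print(sizes)
```

A second list of the same type would describe the left hand; since the timing of the two hands need not coincide, keeping one list per staff line leaves them independent.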

In addition to the timing, represented by the triangles, each primitive is characterized by the spatial position of its equilibrium point, i.e. the source of the corresponding force field: this is identified by a vector in a spherical coordinate system and is represented in Fig. 6 graphically and with an equivalent triplet, i.e. a code of three numbers that represent, respectively, the azimuth angle, elevation angle, and normalized length of the vector. By running this script on the simulation system of Fig. 5, namely by numerical integration of the 17 DoFs differential equation, it is possible to generate a movie of the composite action. Fig. 7 shows a representative set of frames extracted from the movie.³ The simulation was carried out using Matlab® (MathWorks), with the forward Euler method for integrating the differential equations with a time step of 0.1 ms. The numerical values of the model parameters used in the simulation are listed in the appendix.

Fig. 6. Pinocchio notation of a bimanual gesture that includes 9 primitive force field generators (PGs). Each PG is identified by the graphic symbol of the corresponding Γ-function and the specification of the target equilibrium point, expressed in two equivalent manners: as a vector 𝑃𝑇, in a spherical coordinate system, and as a triplet of values that correspond, respectively, to azimuth, elevation, and normalized length of the vector 𝑃𝑇.
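The equivalence between the vector and the triplet coding of an equilibrium point can be made concrete with a short Python sketch. The axis convention (azimuth measured in the horizontal x-y plane from the x axis, elevation measured from that plane toward z) and the reference length `reach` used to de-normalize the third number are assumptions, since the paper does not spell them out here.

```python
import math


def triplet_to_vector(azimuth_deg, elevation_deg, norm_length, reach=1.0):
    """Convert (azimuth, elevation, normalized length) into a Cartesian vector.

    Assumed convention: azimuth in the x-y plane from the x axis, elevation
    from that plane toward z, `reach` as the reference length.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    r = norm_length * reach
    return (r * math.cos(el) * math.cos(az),
            r * math.cos(el) * math.sin(az),
            r * math.sin(el))


def vector_to_triplet(x, y, z, reach=1.0):
    """Inverse mapping: angles in degrees and length normalized by `reach`."""
    r = math.sqrt(x * x + y * y + z * z)
    azimuth = math.degrees(math.atan2(y, x))
    elevation = math.degrees(math.asin(z / r)) if r > 0 else 0.0
    return azimuth, elevation, r / reach


target = triplet_to_vector(30.0, 45.0, 0.8, reach=0.6)
print(target, vector_to_triplet(*target, reach=0.6))
```

Under these assumptions the two functions are inverses of each other for elevations between -90° and 90°, so a script can store either form.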

Fig. 8 shows the kinematics of both hands and the corresponding joint rotation patterns for the 17 DoFs of the Body-Model.

The figure demonstrates that the minimalistic Pinocchio action notation formalism can reproduce the smoothness and texture of

biological motion, via animation of the Body-Model, thus capturing the deep structure of the biological motion that characterizes

human gestures. In particular, panels A and B of the figure plot the speed profiles of the two hands and clarify that in most cases the

velocity peaks occur at the start time of the primitive force field generator. This feature can help to extract the Pinocchio script from

motion capture data of recorded movements.
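A first step toward such an extraction could be the detection of speed peaks in a recorded end-effector path. The Python sketch below is an assumption about how this might be done, not a procedure described in the paper: it only locates the local maxima of the speed profile, which the text suggests coincide in most cases with PG start times; the synthetic data merely check the peak detector on two separate bell-shaped movements.

```python
import numpy as np


def speed_profile(positions, dt):
    """Tangential speed of a path sampled every dt seconds (rows = samples)."""
    velocity = np.gradient(positions, dt, axis=0)
    return np.linalg.norm(velocity, axis=1)


def speed_peak_times(speed, dt, min_height=0.0):
    """Times of strict local maxima of the speed profile above min_height."""
    inner = speed[1:-1]
    is_peak = (inner > speed[:-2]) & (inner > speed[2:]) & (inner > min_height)
    return (np.flatnonzero(is_peak) + 1) * dt


def smooth_step(g):
    """Smooth 0 -> 1 transition, the same polynomial as xi in Appendix A1."""
    g = np.clip(g, 0.0, 1.0)
    return 6 * g**5 - 15 * g**4 + 10 * g**3


# Synthetic recording: two 1 s movements along x, starting at 0 s and 1.2 s.
dt = 0.01
t = np.arange(0.0, 2.5, dt)
x = smooth_step(t) + smooth_step(t - 1.2)
recorded = np.column_stack([x, np.zeros_like(t), np.zeros_like(t)])
peaks = speed_peak_times(speed_profile(recorded, dt), dt, min_height=0.1)
print(peaks)
```

On real motion capture data the speed profile would need low-pass filtering first, and the detected peaks would only be candidates to be refined when fitting the durations and equilibrium points of the PGs.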

5. Action representation and motor imagery

The plausibility of the proposed Pinocchio language of action representation is strongly associated with the crucial role of motor

imagery in motor cognition, within an embodied cognitive framework.

Motor imagery can be defined as a dynamic state during which a subject mentally simulates a given action. This type of phenomenal

experience implies that the subject feels himself performing a given action and it corresponds to the so-called internal imagery (or

first-person perspective) of sports psychologists. Thus, motor imagery pertains to the same category of processes as those involved in

programming and preparing actual actions, with the difference that in the latter case execution would be blocked at some level of the

cortico-spinal flow. Moreover, there is repeated evidence that motor imagery has significant positive effects on motor skill learning

[29]. From the neurophysiological point of view, the analysis of the functional correlates of motor imagery has demonstrated that

imagined and executed actions share the same central structures [30]. More specifically, it was found that a great overlap exists

between activation patterns recorded during motor execution and motor imagery [31,32].

The crucial cognitive importance of motor imagery is confirmed by the success of mental practice among professional sportsmen and

music performers: the same brain areas are involved in visuomotor transformation/motor planning and music processing, emphasizing

the multimodal properties of cortical areas involved in music and motor imagery in musicians and clarifying that the main component

of mental rehearsal is indeed motor imagery. Motor Imagery, defined as a dynamic mental state during which the representation of a

given motor act or movement is rehearsed in working memory without any overt motor output [33], is strongly associated with Mental

Imagery, which implies a cognitive simulation process by which we can represent perceptual information in our minds in the absence of

specific sensory input [34]. Motor imagery is a powerful tool for exploring embodied cognition – the idea that cognitive representations are grounded in, and simulated through, sensorimotor activity [35]. More specifically, Moulton and Kosslyn [36] suggested that motor imagery is actually proprioceptive or kinaesthetic imagery, in the sense that one experiences the bodily sensations of movement, not the movement commands themselves, although the two features are two faces of the same coin.

³ Movie corresponding to the script of Fig. 6: https://bit.ly/3JKDTbE

Fig. 7. A representative set of frames from the animation of the composite gesture notated in Fig. 6. The numbers correspond to the different PGs that generate the gesture for the right arm. Each red ‘star’ identifies the final equilibrium point of an active PG and the red dashed lines represent the directions of active force fields in any given frame. In frames 2, 5, 8, 9 only one primitive is active (primitives 2, 4, 6, 7, respectively); in all the other frames two primitives are acting simultaneously on the end-effector. The frames are extracted from the following movie: https://bit.ly/3JKDTbE. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

A relevant issue about the cognitive role of motor imagery is the distinction between the first-person and third-person perspectives.

Decety [30] suggested that motor imagery ‘corresponds to the so-called internal imagery (or first-person perspective) of sport psychologists’. This idea was endorsed by Jeannerod [37] who distinguished between visual/third-person imagery, whereby people imagine

seeing either themselves or someone else performing the action, and motor imagery proper, which is experienced from within, as the

result of a ‘first-person’ process, where the self feels like an actor rather than a spectator. As clarified by Fourkas et al. [38] people

can commonly form motor images using either a first-person or a third-person perspective. From a more specifically computational

point of view, Decety and Ingvar [39] proposed that mental practice (or the systematic use of motor imagery to rehearse an action

covertly before physically executing it) is a virtual simulation of motor actions and they also postulated that motor imagery requires

the construction of a dynamic motor representation in working memory which makes use of spatial and kinesthetic components

retrieved from long-term memory.

Motor imagery techniques are widely used to enhance skill learning and skilled performance in special populations such as elite

athletes [40–42], musicians [43], and surgeons [44]. In particular, there is evidence that experts might utilize their kinesthetic

imagery more efficiently than novices, but only for the activity in which they had expertise [45]. Moreover, Holmes and Collins [46] investigated in detail the relationship between motor imagery and the movement it represents, identifying seven critical issues for optimization of performance: Physical, Environmental, Task, Timing, Learning, Emotional, and Perspective.

Fig. 8. The three panels correspond to the composite gesture, generated by the 9-PG sequence, illustrated in Figs. 6 and 7. The dotted green lines correspond to the starting time of the 9 primitives (all of them with 1 s duration). (A): Trajectory of the left hand (x: blue, y: red, z: black; overall speed profile: gray). (B): Trajectory of the right hand. (C): Time course of the 17 DoFs (joint angles of the right hand: blue; joint angles of the left hand: red; joint angles of the trunk: black). (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

6. Discussion

The research threads summarized above on motor imagery suggest that the brain relies on some kind of language for representing,

assembling, disassembling, rehearsing, and ultimately executing composite gestures and complex actions. Moreover, this language

is not abstract, although it deals with internal, muscle-less representations [10]: it is intrinsically embodied, in the sense that its

primitives comply with the spatiotemporal invariants of biological motion, and it generates an information flow through an internal

simulation process [32,47–49].

From the implementation point of view, Pinocchio is currently an early prototype, developed in Matlab®(MathWorks), that can

be expanded in many directions. One is related to the Body-Model, which could be extended to include the head and lower limbs, and a module

to deal with equilibrium, among many other possibilities. An important extension is in the direction of motion capture data: this

will require the development of procedures for extracting Pinocchio-scripts from digitized gestures, namely the identification of the

primitive force field generators (start time, duration, position of the target equilibrium point) as well as the stiffness and compliance

parameters of the body-model. The identification of such scripts from the empirical kinematic data of given gestures can serve multiple

purposes of which the following list is just an example:


• Exploring an endless set of gesture variations, obtained by smoothly changing the spatiotemporal parameters of the Pinocchio

script & generating the corresponding animations.

• Allowing choreographers and performers to compose, generalize, and extend action scripts in a combinatorial manner.

• Annotating videos of recorded gestures.

• Training robots to learn action sequences not in a rigid manner, based on a pure kinematic playback, but with the flexibility of a

modifiable script.

It is worth noting that the Pinocchio language intrinsically matches the rationale of motor imagery at large, including metaphorical

aspects for the memorization, rehearsal, and execution of formalized actions like Taichi-Chuan [50]. Thus, Pinocchio scripts, in

conjunction with the force-field-based internal Body-Model, can support working memory, procedural memory as well as episodic

memory related to the representation of actions.

In the context of Cognitive Robotics, there is no doubt that language is an important pillar, at the basis of learning, training,

teaching, reasoning, understanding, and human-robot interaction. Pinocchio is a contribution to the representation of action and

skills in the framework of embodied cognition. On the other hand, the cognitive architecture of intelligent robots should also have

access to other sources of information that are ‘disembodied’ and cannot be phrased in a first-person perspective. In particular,

several cognitive architectures for intelligent robots are being developed, such as CRAM [51] or Clarion [52]: they allow high-level

instructions to be translated into robot actions by reasoning with a large repository of knowledge, using the symbolic methods typical

of mainstream AI [53] including abstract, quasi-natural language. However, such approaches do not yet address many other issues

that are typical of embodied cognition, including the emphasis on prospection. Thus, a promising direction of research is to integrate

the embodied cognitive approach of the Pinocchio language with the disembodied abstract approach of AI. At the same time, we

should also take into account the current criticism of mainstream AI that suggests a transition from Artificial Intelligence to Brain

Intelligence [54].

Declaration of Competing Interest

The authors declare that they have no known competing financial interest or personal relationship that could have appeared to influence

the work reported in this paper.

Acknowledgments

This work was supported by Fondazione Istituto Italiano di Tecnologia, RBCS Department, in the framework of the iCog Initiative.

Appendices

A1. Γ-function

This function gates the force fields applied to the end-effectors of the body model in order to induce terminal attractor dynamics.

Having defined 𝑡0 as the initiation time instant of the function and 𝑇 the corresponding duration, the function is given by the following

equation:

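$$
\Gamma(t) =
\begin{cases}
\dfrac{\dot{\xi}}{1-\xi} & \text{for } 0 < t - t_0 < T \\[2mm]
0 & \text{for } t - t_0 \le 0 \text{ or } t - t_0 \ge T
\end{cases}
$$

where $\xi = 6\gamma^5 - 15\gamma^4 + 10\gamma^3$ is a smooth 0→1 transition and $\gamma$ is the normalized time: $\gamma = (t - t_0)/T$.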
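To see why this gate induces terminal attractor dynamics, consider, as a simplifying assumption that is not part of the model description above, a scalar variable $x$ attracted to a target $x_T$ with unit gain, $\dot{x} = \Gamma(t)\,(x_T - x)$. With the error $e = x_T - x$, inside the activation window:

$$
\dot{e} = -\frac{\dot{\xi}}{1-\xi}\, e
\;\;\Rightarrow\;\;
\frac{d}{dt}\ln|e| = \frac{d}{dt}\ln(1-\xi)
\;\;\Rightarrow\;\;
e(t) = e(t_0)\,\bigl[1-\xi(t)\bigr]
$$

Since $\xi(t_0) = 0$ and $\xi(t_0 + T) = 1$, the error vanishes exactly at $t = t_0 + T$, i.e. in finite time rather than asymptotically, and the trajectory $x(t) = x(t_0) + [x_T - x(t_0)]\,\xi(t)$ has the bell-shaped speed profile $\dot{\xi} = 30\gamma^2(1-\gamma)^2/T$. With a gain $k$ in place of the unit gain, the same steps give $e(t) = e(t_0)\,[1-\xi(t)]^{k}$.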

A2. RoM module

This module generates, for any joint of the body model, a torque that repels the joint angle from its joint limits.

Let us define the RoM of the joint angle 𝑞 as the interval between 𝑞𝑚𝑖𝑛 and 𝑞𝑚𝑎𝑥. The RoM module repels the joint angle from these limit values by generating a torque according to the following equation:

$$
\tau_{RoM} = -K_{RoM}\left[ e^{(q - q_{max})/\Delta q} - e^{-(q - q_{min})/\Delta q} \right]
$$

where $\Delta q = (q_{max} - q_{min})$.
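The behaviour of the RoM torque can be checked with a few lines of Python. The function name and the default gain `k_rom=1.0` are assumptions made for illustration; the formula is the one given above.

```python
import math


def rom_torque(q, q_min, q_max, k_rom=1.0):
    """Repulsive torque of the RoM module for one joint (angles in radians)."""
    dq = q_max - q_min
    return -k_rom * (math.exp((q - q_max) / dq) - math.exp(-(q - q_min) / dq))


# Hypothetical joint with a range of motion of [-1, 1] rad.
for q in (-0.9, 0.0, 0.9):
    print(q, rom_torque(q, -1.0, 1.0))
```

The torque is zero at the middle of the range, positive near the lower limit and negative near the upper limit, so in both cases it pushes the joint angle back toward the interior of the range.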

A3. Parameter values used in the simulation

In the simulation illustrated in Figs. 7 and 8 the following parameter values were used:

• Parameters of the Primitive Force Field Generators

𝐾𝑇 = 1 N/m

𝐾𝑒𝑒 = 100 N/m


• Parameters of the Body-Model

𝐶𝑎𝑟𝑚 = 20 rad²/Nms

𝐶𝑡𝑟𝑢𝑛𝑘 = 1 rad²/Nms

References

[1] P. Morasso, V. Mohan, The body schema: neural simulation for covert and overt actions of embodied cognitive agents, Curr. Opin. Physiol. 21 (2021) 219–225.

[2] P. Morasso, Gesture formation: a crucial building block for cognitive-based human–robot partnership, Cognitive Robot. 1 (2021) 92–110.

[3] R. Laban, Schrifttanz, Universal Editions, Wien, Leipzig, 1928.

[4] F.B. Gilbreth Jr., E.G. Carey, Cheaper By the Dozen, HarperCollins, New York, 1948.

[5] G. Guerra-Filho, Y. Aloimonos, A language for human action, IEEE Comput. 40 (5) (2007) 42–51.

[6] N. Bernstein, The Co-Ordination and Regulation of Movements, Pergamon Press, Oxford, 1967.

[7] F.A. Mussa Ivaldi, P. Morasso, R. Zaccaria, Kinematic networks. A distributed model for representing and regularizing motor redundancy, Biol. Cybern. 60 (1988) 1–16.

[8] F.A. Mussa Ivaldi, P. Morasso, N. Hogan, E. Bizzi, Network models of motor systems with many degrees of freedom, in: M.D. Fraser (Ed.), Advances in Control Networks and Large Scale Parallel Distributed Processing Models, Ablex Publ. Corp., Norwood, NJ, 1989.

[9] V. Mohan, P. Morasso, Passive motion paradigm: an alternative to optimal control, Front. Neurorobot. 5 (4) (2011) 4–28.

[10] V. Mohan, A. Bhat, P. Morasso, Muscleless motor synergies and actions without movements: from motor neuroscience to cognitive robotics, Phys. Life Rev. 30 (2019) 89–111.

[11] P. Morasso, A vexing question in motor control: the degrees of freedom problem, Front. Bioeng. Biotechnol. 9 (2022) 783501.

[12] C. Collodi, Le avventure di Pinocchio – Storia di un burattino, Felice Paggi, Libraio Editore, Firenze, 1881. The Story of a Puppet, or, the Adventures of Pinocchio (Translated by M.A. Murray), Cassel Publ. Co., New York, 1892.

[13] A. Hutchinson-Guest, Labanotation: the System of Analyzing and Recording Movement, Routledge, Abingdon-on-Thames, UK, 2005.

[14] R. Benesh, J. Benesh, Reading Dance: the Birth of Choreology, McGraw-Hill Book Company, New York, 1983.

[15] N. Eshkol, A. Wachman, Movement Notation, Weidenfeld & Nicolson, London, 1958.

[16] Forsythe Dance Company, Motion bank project, http://motionbank.org/, 2010.

[17] L. Aft, Therblig Analysis. Work Measurement and Methods Improvement, John Wiley & Sons, Inc, New York, 2000.

[18] C. Darwin, The Descent of Man, and Selection in Relation to Sex, John Murray, London, UK, 1871.

[19] J. Steele, P.F. Ferrari, L. Fogassi, From action to language: comparative perspectives on primate tool use, gesture and the evolution of human language, Philos. Trans. R. Soc. B 367 (2012) 4–9.

[20] W. Takano, Y. Nakamura, Statistical mutual conversion between whole body motion primitives and linguistic sentences for human motions, Int. J. Robot. Res. 34 (10) (2015) 1314–1328.

[21] W. Takano, Y. Yamada, Y. Nakamura, Linking human motions and objects to language for synthesizing action sentences, Autonomous Robots 43 (2019) 913–925.

[22] W. Takano, Annotation generation from IMU-Based human whole-body motions in daily life behavior, IEEE Trans. Hum.-Mach. Syst. 50 (1) (2020) 13–21.

[23] A. d’Avella, E. Bizzi, Shared and specific muscle synergies in natural motor behaviors, Proc. Natl. Acad. Sci. 102 (2005) 3076–3081.

[24] A.G. Feldman, Functional tuning of the nervous system with control of movement or maintenance of a steady posture: II controllable parameters of the muscle, Biophysics 11 (1966) 565–578.

[25] A.G. Feldman, Once more on the equilibrium-point hypothesis (𝜆 model) for motor control, J. Mot. Behav. 18 (1986) 17–54.

[26] E. Bizzi, N. Hogan, F.A. Mussa-Ivaldi, S. Giszter, Does the nervous system use equilibrium-point control to guide single and multiple joint movements? Behav. Brain Sci. 15 (4) (1992) 603–613.

[27] M. Zak, Terminal attractors for addressable memory in neural networks, Phys. Lett. 133 (1988) 218–222.

[28] J. Barhen, S. Gulati, M. Zak, Neural learning of constrained nonlinear transformations, Computer 22 (1989) 67–76.

[29] M. Denis, Visual imagery and the use of mental practice in the development of motor skills, Can. J. Appl. Sport Sci. 10 (1985) 4–16.

[30] J. Decety, Do imagined and executed actions share the same neural substrate? Cognitive Brain Res. 3 (1996) 87–93.

[31] M. Jeannerod, V. Frak, Mental imaging of motor activity in humans, Curr. Opin. Neurobiol. 9 (1999) 735–739.

[32] M. Jeannerod, Neural simulation of action: a unifying mechanism for motor cognition, Neuroimage 14 (2001) S103–S109.

[33] C. Collet, A. Guillot, F. Leon, T. MacIntyre, A. Moran, Measuring motor imagery using psychometric, behavioural and psychophysiological tools, Exercise Sport Sci. Rev. 39 (2011) 85–92.

[34] J. Munzert, B. Lorey, K. Zentgraf, Cognitive motor processes: the role of motor imagery in the study of motor representations, Brain Res. Rev. 60 (2009) 306–326.

[35] L. Shapiro, Embodied Cognition, Routledge, London, 2010.

[36] S.T. Moulton, S.M. Kosslyn, Imagining predictions: mental imagery as mental emulation, Philos. Trans. Royal Soc. B 364 (2009) 1273–1280.

[37] M. Jeannerod, The Cognitive Neuroscience of Action, Blackwell Publishing, Oxford, UK, 1997.

[38] A.D. Fourkas, V. Bonavolontà, A. Avenanti, S. Aglioti, Kinesthetic imagery and tool specific modulation of corticospinal representations in expert tennis players, Cereb. Cortex 18 (2008) 2382–2390.

[39] J. Decety, D.H. Ingvar, Brain structures participating in mental simulation of motor behaviour: a neuropsychological interpretation, Acta Psychol. 73 (1990) 13–34.

[40] A. Moran, Conceptual and methodological issues in the measurement of mental imagery skills in athletes, J. Sport Behav. 16 (1993) 156–170.

[41] A. Moran, Cognitive psychology in sport: progress and prospects, Psychol. Sport Exercise 10 (2009) 420–426.

[42] R. Weinberg, Does imagery work? Effects on performance and mental skills, J. Imagery Res. Sport Phys. Activity 3 (1) (2008), doi:10.2202/1932-0191.1025.

[43] I.G. Meister, T. Krings, H. Foltys, B. Boroojerdi, M. Müller, R. Töpper, A. Thron, Playing piano in the mind – An fMRI study on music imagery and performance in pianists, Cognitive Brain Res. 19 (2004) 219–228.

[44] S. Arora, R. Aggarwal, N. Sevdalis, A. Moran, P. Sirimanna, R. Kneebone, A. Darzi, Development and validation of mental practice as a training strategy for laparoscopic surgery, Surg. Endosc. 24 (2010) 179–187.

[45] G. Wei, J. Luo, Sport expert’s motor imagery: functional imaging of professional motor skills and simple motor skills, Brain Res. 1341 (2009) 52–62.

[46] P. Holmes, D. Collins, The PETTLEP approach to motor imagery: a functional equivalence model for sport psychologists, J. Appl. Sport Psychol. 13 (2001) 60–83.

[47] H. O’Shea, A. Moran, Does motor simulation theory explain the cognitive mechanisms underlying motor imagery? A critical review, Front. Hum. Neurosci 11 (2017) 72.

[48] R. Grush, The emulation theory of representation: motor control, imagery, and perception, Behav. Brain Sci. 27 (2004) 377–396.

[49] R. Ptak, A. Schnider, J. Fellrath, The dorsal frontoparietal network: a core system for emulated action, Trends Cogn. Sci. 7 (21) (2017) 589–599.

[50] P. Morasso, M. Morasso, Taichi Meets Motor Neuroscience: an Inspiration For Contemporary Dance and Humanoid Robotics, Cambridge Scholars Publ., Newcastle upon Tyne, UK, 2021.

[51] M. Beetz, L. Mösenlechner, M. Tenorth, CRAM - a cognitive robot abstract machine for everyday manipulation in human environments, in: Proc. IEEE/RSJ Int. Conf. Intel. Robots and Systems, Taipei, Taiwan, 2010, pp. 1012–1017.

[52] R. Sun, Anatomy of the Mind: Exploring Psychological Mechanisms and Processes with the Clarion Cognitive Architecture, Oxford University Press, Oxford, UK, 2016.

[53] Y. LeCun, Y. Bengio, G. Hinton, Deep learning, Nature 521 (7553) (2015) 436–444.

[54] H. Lu, Y. Li, M. Chen, H. Kim, S. Serikawa, Brain Intelligence: go beyond Artificial Intelligence, Mobile Netw. Appl. 23 (2) (2018) 368–375.
