Hexacopter with Soft Grasper for Autonomous Object Grasping

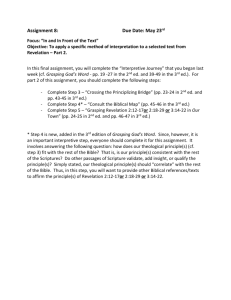

Proceedings of the ASME 2018 Dynamic Systems and Control Conference
DSCC 2018
September 30 - October 3, 2018, Atlanta, USA

DSCC 2018-9107

DESIGN AND CONTROL OF A HEXACOPTER WITH SOFT GRASPER FOR AUTONOMOUS OBJECT DETECTION AND GRASPING

Shatadal Mishra
The Polytechnic School, Ira A. Fulton Schools of Engineering, Arizona State University, Mesa, Arizona 85212
Email: smishr13@asu.edu

Dangli Yang
School for Engineering of Matter, Transport and Energy, Ira A. Fulton Schools of Engineering, Arizona State University, Tempe, Arizona 85281
Email: dyang43@asu.edu

Wenlong Zhang∗
The Polytechnic School, Ira A. Fulton Schools of Engineering, Arizona State University, Mesa, Arizona 85212
Email: wenlong.zhang@asu.edu

Panagiotis Polygerinos
The Polytechnic School, Ira A. Fulton Schools of Engineering, Arizona State University, Mesa, Arizona 85212
Email: polygerinos@asu.edu

Carly Thalman
The Polytechnic School, Ira A. Fulton Schools of Engineering, Arizona State University, Mesa, Arizona 85212
Email: cmthalma@asu.edu

∗ Address all correspondence to this author.

ABSTRACT

In this paper, an image based visual servo (IBVS) scheme is developed for a hexacopter equipped with a robotic soft grasper to perform autonomous object detection and grasping. The structural design of the hexacopter-soft grasper system is analyzed to study the soft grasper's influence on the multirotor's aerodynamics. Object detection, tracking and trajectory planning are implemented on a high-level computer, which sends position and velocity setpoints to the flight controller. A soft robotic grasper is mounted on the UAV to enable the collection of various contaminants. The use of soft robotics removes the excess weight associated with traditional rigid graspers and simplifies the control of the grasping mechanics. Experimental results demonstrate autonomous object detection, tracking and grasping. This pipeline would enable the system to autonomously collect solid and liquid contaminants in water canals using GPS and a multi-camera system. It can also be used for more complex aerial manipulation, including in-flight grasping.

INTRODUCTION

Unmanned aerial vehicles (UAVs) have been widely studied and developed for a variety of applications including agricultural inspection and sowing, disaster rescue and package delivery. With longer flight times, larger payloads and greater flight distances, UAVs have the potential to support more complex missions, including monitoring and surveillance. The canal system in remote areas of Arizona [1], which is broad and mostly desert, needs periodic investigation and maintenance. Dispatching personnel to perform field tests in such areas is hazardous and time-consuming, and UAVs can instead patrol autonomously and collect water contaminants for further analysis.

Multirotors are well-suited platforms for canal system maintenance and water contaminant collection. Admittedly, fixed-wing aerial vehicles can fly long distances while consuming less energy than rotary-wing aerial vehicles [2], but fixed-wing aerial vehicles cannot hover, which makes them harder to use for collecting water contaminants. Rotary-wing aerial vehicles, on the other hand, can hover steadily and can perform more complex aerial manipulation within a limited amount of space. Helicopters and multirotors are the two most commonly used types of rotary-wing aerial vehicles. Compared to helicopters, multirotors have a less complex rotor mechanical structure, but they must move a larger volume of air and consume more energy. However, multirotors provide higher durability and larger payload [3]. When one or two of the motors are damaged or not functioning properly due to unpredictable environmental issues or mechanical failures, multirotors can still maintain stable flight. In addition, a multirotor's frame is low cost because of its simplicity and modular design.
Most of the parts can be easily manufactured using plastic molding, which makes mass production possible, and with swarm robotics control techniques a large number of multirotors can be produced and controlled for canal services much more easily than other types of aerial vehicles. All these advantages make multirotors a strong choice for collecting water contaminants when the aerial vehicles need to operate in broad, complex and unpredictable environments.

In the existing literature, considerable work has been done on aerial manipulation and grasping using multirotors. Thomas et al. [4] demonstrated avian-inspired multirotor grasping, achieving a fast and accurate grasping motion guided by a motion capture system. The grasper is designed for cylindrical or rod-shaped objects. During the grasping motion, the multirotor first approaches the target horizontally and the grasper closes after it reaches the target position. This grasping motion takes place with the object placed on top of a support structure far above the ground; because the clearance between the aerial vehicle and the ground is large, the ground effect on the multirotor's flight is very small and can be neglected. When a multirotor needs to operate near the ground, the ground effect can no longer be neglected. Previous work by Pounds et al. [5] shows how a helicopter picks up an object under manual control and discusses the ground effect when the helicopter is operated with low clearance to the ground. Neither low-level position control nor high-level feedback control with a motion capture system can keep the object to be grasped within the grasper's operating range with a 100% success rate. For objects of different weights and geometries, the experimental success rate can drop to 67%.
To achieve accurate aerial manipulation, an alternative to precise control near the ground is to extend the grasper arm and thereby maximize the vehicle's clearance. Kim et al. [6] developed an origami-inspired robotic arm that can extend to 700 mm to collect bottles in a river. This robotic arm can be mounted on a multirotor and operates with only one actuator. With this extension distance, the multirotor can significantly reduce the influence of the ground effect.

After grasping the object, the mass and center of mass of the UAV differ from the original physical model in the flight controller. To optimize performance, the flight controller then needs to be tuned and adapted to the new physical model. Orsag et al. worked on the stability of aerial manipulation [7]. A robot arm with one degree of freedom (DOF) was installed at the bottom of a multirotor. The stability was analyzed as the multirotor grasped different objects with the robot arm at different angles, which caused the vehicle's center of mass to deviate significantly.

From this review of grasping motion and stability analysis, it is found that the grasper design influences the flight dynamics and stability. The design, fabrication, and integration of a soft robotic grasper are implemented to improve adaptability to the flight dynamics and to simplify the grasper control algorithm. The soft grasper also facilitates the collection and retrieval of both liquid and solid contaminants in the remote canal systems. The use of soft materials eliminates the need for the complex programming associated with traditional rigid graspers, as the grasping capabilities are mechanically programmed into the grasper upon fabrication [8, 9]. Soft robotics has become well known for producing lightweight, affordable methods of actuation while still providing high force-to-weight ratios.
The combination of these advantageous properties suggests that a soft grasper would be an ideal solution for giving the UAV the ability to pick up contaminants of unpredictable sizes, shapes, and weights with little need for adjustment between grasps. To allow the grasper to collect liquid samples, a reservoir has been designed from the same silicone materials; it connects to the grasper and sucks water up through a small lumen affixed to the grasper. A soft silicone robotic grasper also remains fully functional in damp or wet applications such as this, with no fear of damaging electrical or mechanical components [10, 11]. The UAV can be integrated with rafts to facilitate smooth landing on water surfaces and prevent damage to electrical components.

The remainder of the paper is structured as follows: In Section II, the hexacopter and soft grasper design is introduced. In Section III, the vision based control techniques are described. Section IV demonstrates the real-time flight results. Conclusions and future work are discussed in Section V.

DESIGN OF THE HEXACOPTER AND SOFT GRASPER ASSEMBLY

This section describes the detailed design of the hexacopter and the soft grasper. The soft grasper is integrated with the hexacopter using a portable method of pneumatic actuation based on carbon dioxide cartridges. These cartridges, when punctured at the main outlet, release nearly 6200 kPa. The grasper runs at 100 kPa, so the pressure from the cartridge must be regulated to prevent damage to the actuators. The flow of regulated pressure is controlled through a 2-way, 3-channel valve, which allows pressurization and depressurization of the grasper upon triggering from the flight controller. The flight controller's GPIO (general purpose input-output) pin is utilized for triggering the soft grasper.

FIGURE 1: SYSTEM SETUP

FIGURE 3: FINITE ELEMENT ANALYSIS (FEA) OF THE SOFT GRASPER DESIGN INFLATED TO THE REGULATED PRESSURE OF 100 kPa
The soft grasper and all of the components associated with its control and actuation add roughly 500 grams to the UAV. Only about 80 grams of that additional weight is allocated to the grasper itself, and the suction mechanism weighs only about 50 grams.

Hexacopter design

A standard hexacopter, as shown in Figure 1, is selected because it has a higher payload capacity than a quadcopter. Additionally, a hexacopter can provide larger lifting power and fly with higher durability [3]. The current all up weight (AUW) is 1.8 kilograms and the hover throttle percentage is 55%, which leaves room for additional payload. The hexacopter uses 920 KV brushless DC motors and 9 inch propellers. The current setup provides a mixed flight time of 15 minutes. The low-level motion control modules are implemented on a flight control unit and are described as follows:

1. The outer position loop estimation is achieved with the optical flow sensor and IMU. The optical flow sensor generates body frame velocities, and a hybrid low-pass and de-trending filter [12] is implemented to obtain low-pass filtered velocity estimates and drift-attenuated position estimates. The position control is achieved by implementing an integrated disturbance observer and PID control structure [13].
2. The attitude estimation loop is achieved by implementing a quaternion based complementary filter, and the attitude control is performed by a cascaded PID controller for attitude and angular velocity. Rotation matrices are used to represent the current and desired attitude. The use of rotation matrices eliminates the issue of gimbal lock, which is commonly encountered with an Euler angle representation. The orientation error is generated as described in [14], where the rotation matrices belong to the nonlinear space SO(3). The orientation error is based on the current rotation matrix and the desired rotation matrix and is represented as

$$e_R = \frac{1}{2}\left(R_{sp}^{T} R_c - R_c^{T} R_{sp}\right)^{\vee}, \tag{1}$$

where $R_{sp}$ represents the rotation matrix setpoint and $R_c$ represents the current rotation matrix. $(\cdot)^{\vee}$ represents the vee map, which is a mapping from so(3), the space of 3 × 3 skew-symmetric matrices, to $\mathbb{R}^3$.

Soft grasper design

The soft grasper is comprised of a silicone-based body with internal channels that allow air pressure to pass to each section of the grasper. The principle of operation that governs the movement of the grasper is inspired by previous work on pneumatic networks (Pneu-Nets) of inflatable actuators. These Pneu-Net designs have been thoroughly investigated and categorized through past modeling and practical applications [9, 10, 15]. Three Pneu-Net bending actuators have been designed and placed in a star formation for the soft grasper on the hexacopter. The soft grasper is actuated via a single input, which routes the compressed fluid through internal channels in the main body to each of the grasper fingers. The specific dimensions of the soft grasper are based on previously successful sizing for Pneu-Net actuators; the overall design and the dimensions of each chamber and channel within the soft grasper are shown in Figure 2. However, this design is limited to grasping and carrying solid samples.

FIGURE 2: SOFT GRASPER AND SUCTION MECHANISM DESIGN

The scope of this project also extends to collecting liquid samples with the grasper in addition to solid samples. An additional channel has been added externally to a single grasper finger for liquid collection. This channel, a small piece of tubing, is connected to a custom soft suction mechanism which sits above the grasper. This mechanism operates on principles similar to those of an eye dropper used in medical applications, as demonstrated in Figure 2, and utilizes the pressure difference between the two chambers, similar to small pneumatic pumps [16]. The soft suction mechanism is comprised of two cylindrical chambers, one encased within the other. The outer chamber is cast from silicone with Shore Hardness A 30, and the inner chamber is cast from a much softer silicone of Shore Hardness A 10. When positive pressure is applied to the space between the inner and outer chambers, the inner chamber walls are displaced and create a negative pressure effect. When the mechanism is depressurized, the negative pressure creates a vacuum within the chamber, which then sucks liquid samples through the tubing and up into the inner chamber, so that the mechanism effectively functions as an eye dropper. The system operation can be denoted as

$$P_{inner} < P_{outer}, \tag{2}$$

where $P_{inner}$ is the pressure inside the inner chamber and $P_{outer}$ is the pressure in the outer chamber. Pressurizing the system once prepares the inner chamber for liquid collection, and depressurizing with the tubing submerged induces suction. Pressurizing again creates enough pressure to force the liquid sample back out of the chamber for further inspection, as illustrated on the right in Figure 2.

To test the efficacy of the design of the soft grasper and suction mechanism, a finite element method (FEM) analysis is performed (Abaqus, Dassault Systèmes, Vélizy-Villacoublay, France) on the system to analyze the bending of the grasper and the displacement occurring within the inner chamber of the suction mechanism during pressurization. Since the system operates on a single pressure control valve, both components must be able to function properly at 100 kPa to avoid adding unnecessary components, and thus weight, to the hexacopter.

FIGURE 4: FINITE ELEMENT ANALYSIS OF THE SOFT SUCTION MECHANISM SURFACE

FIGURE 6: SIMULATED VELOCITY PLOT OF AIR FLOW SURROUNDING IN-FLIGHT HEXACOPTER WITHOUT SOFT GRASPER (LEFT) AND WITH SOFT GRASPER (RIGHT)
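As an illustration of the attitude error in Eq. (1) from the hexacopter design subsection, the following is a minimal sketch in plain Python. It is not the flight controller's implementation; the helper names (`transpose`, `matmul`, `vee`, `rot_z`, `attitude_error`) and the yaw-only test rotation are assumptions made for this example.

```python
import math

def transpose(m):
    """Transpose of a 3x3 matrix stored as nested lists."""
    return [[m[j][i] for j in range(3)] for i in range(3)]

def matmul(a, b):
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def vee(s):
    """Vee map: 3x3 skew-symmetric matrix -> 3-vector."""
    return [s[2][1], s[0][2], s[1][0]]

def rot_z(yaw):
    """Rotation about the Z axis by `yaw` radians (illustrative helper)."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def attitude_error(r_sp, r_c):
    """Eq. (1): e_R = 0.5 * vee(R_sp^T R_c - R_c^T R_sp)."""
    a = matmul(transpose(r_sp), r_c)
    b = matmul(transpose(r_c), r_sp)
    skew = [[a[i][j] - b[i][j] for j in range(3)] for i in range(3)]
    return [0.5 * x for x in vee(skew)]

if __name__ == "__main__":
    identity = rot_z(0.0)
    # Setpoint is level; the vehicle has yawed by 0.1 rad.
    print(attitude_error(identity, rot_z(0.1)))
```

For a pure yaw offset the error has a single nonzero component equal to the sine of the yaw difference, so it behaves like the angle itself for small errors and never passes through an Euler-angle singularity.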
The soft grasper is able to operate successfully, creating a bending angle in each actuator sufficient to reach a fully closed grasping position. Figure 3 demonstrates the finite element analysis of the soft grasper design when pressurized to 100 kPa. However, when the suction mechanism is pressurized to 100 kPa, a significant amount of compressive force is lost as the outer walls of the system begin to expand. The volumetric displacement inside the inner chamber is only 1.4 mL for an initial chamber volume of 5 mL. To improve the efficiency of the system, an inextensible layer has been wrapped around the outer walls to prevent deformation and direct the forces inward toward the inner chamber. The difference between the two cases can be seen in Figure 4, and the improvement is significant. The displacement of the inner chamber is evaluated for volumetric change as a result of the positive pressure, and the final volume displaced within the chamber is found to be 2.9 mL with the addition of the inextensible layers.

Hexacopter Aerodynamics Simulation

To study the soft grasper's influence on the hexacopter's aerodynamics, Solidworks Flow Simulation is used to calculate the air flow during hovering flight. When the propellers are rotating, the air above the hexacopter is drawn toward the propellers and pushed downward after passing through them. At the outer edge of the propellers' rotation regions, rotor-tip vortices are formed, as described by vortex theory [17]. The vortices generated during this process exert pressure on surrounding components, which introduces extra disturbances into the flight dynamic control system. The air flow surrounding the hexacopter when all six rotors rotate at 4800 rpm is shown in Figure 5. As predicted, vortices are formed on both the inner edges and the outer edges of the propellers' rotation regions.

FIGURE 5: SIMULATED FLOW TRAJECTORY PLOT OF HOVERING HEXACOPTER

To determine the influence of the soft grasper on the hexacopter, we compare cut plots of air flow velocity from two simulations, without and with the soft grasper installed, as shown in Figure 6. In the left plot of Figure 6, the air flow velocity shows the same pattern as in Figure 5, and vortices are created. In the right plot of Figure 6, the soft grasper causes the air flow domain below the propellers to increase slightly in the vertical direction, which means there will be a greater ground effect as the hexacopter gets closer to the ground [18]. The grasper does not greatly influence the inner rotor-tip vortices. The maximum resulting acceleration is 0.0388 m/s², approximately 0.004 g, and it occurs along the Y axis. As this maximum acceleration is very small, the disturbances caused by the soft grasper will not significantly influence the flight dynamics of the hexacopter.

VISION BASED CONTROL

This section describes the methods implemented to perform object detection, tracking and visual servoing based control.

Object detection and tracking

Object detection is achieved using color and shape based detection. A monocular camera, which outputs 640 × 480 images at 20 fps, is utilized for this purpose. The camera frames are read using ViSP [19]. Initially, the camera RGB space is converted to HSV space and thresholds are applied to detect colors of interest. Morphological operations such as erode and dilate are applied to remove background noise and fill intensity bumps in a frame. Subsequently, the circle Hough transform (CHT) technique [20] is implemented to detect spherical objects. These operations ensure robust detection. The moments of the detected contour are calculated and the pixel coordinates of the centroid are tracked every frame. Multiple cores of the high-level computer are utilized to read camera frames and perform object tracking, which speeds up the online image processing.

Image based control law

After feature detection and tracking are achieved, the camera is calibrated [19] using a pinhole camera model to calculate the intrinsic parameters, which are utilized for designing the image based control law. The image plane coordinates of a point in the image are represented by $(u, v)$. The image plane coordinates are projected onto a plane with unit focal length according to the following equations:

$$x_{cam} = \frac{u - u_0}{\lambda_x}, \qquad y_{cam} = \frac{v - v_0}{\lambda_y}, \tag{3}$$

where $(u_0, v_0)$ are the coordinates of the principal point and $(\lambda_x, \lambda_y)$ are the ratios between the focal length of the camera and the size of a pixel. $(u_0, v_0, \lambda_x, \lambda_y)$ are the intrinsic parameters of the camera, which are obtained from camera calibration. For implementing an image based visual servo (IBVS) scheme, the interaction matrix is constructed as follows [21]:

$$J = \begin{bmatrix} -1/z_d & 0 & x_{cam}/z_d & x_{cam} y_{cam} & -(1 + x_{cam}^2) & y_{cam} \\ 0 & -1/z_d & y_{cam}/z_d & 1 + y_{cam}^2 & -x_{cam} y_{cam} & -x_{cam} \end{bmatrix}, \tag{4}$$

where $z_d$ is the depth of the object in the camera frame. In the current system setup, $z_d$ is calculated from the onboard lidar, which is also used for measuring the height of the hexacopter. A depth camera can also be used for estimating the depth. The objective of an image based control law is to reduce the error [22] defined as

$$e(t) = s(t) - s^{*}(t), \tag{5}$$

where $s(t)$ represents the current image coordinates of the object detected in the image and $s^{*}(t)$ represents the desired image coordinates of the object. To reduce the error exponentially, $\dot{e}(t) = -\beta e(t)$, the control law is designed as

$$u(t) = -\beta J^{-1} e(t), \tag{6}$$

where $u(t)$ is the control input to the system and $\beta$ is a diagonal gain matrix.
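The projection of Eq. (3), the interaction matrix of Eq. (4) and the error of Eq. (5) can be sketched as follows. This is only an illustrative sketch in plain Python, not the authors' ViSP-based code; the function names and the intrinsic values used in the example (principal point at (320, 240), focal ratios of 600) are hypothetical and chosen only to match a 640 × 480 image.

```python
def normalize_pixel(u, v, u0, v0, lam_x, lam_y):
    """Eq. (3): project pixel (u, v) onto the unit-focal-length plane."""
    return (u - u0) / lam_x, (v - v0) / lam_y

def interaction_matrix(x_cam, y_cam, z_d):
    """Eq. (4): 2x6 interaction matrix of a point feature at depth z_d."""
    return [
        [-1.0 / z_d, 0.0, x_cam / z_d,
         x_cam * y_cam, -(1.0 + x_cam * x_cam), y_cam],
        [0.0, -1.0 / z_d, y_cam / z_d,
         1.0 + y_cam * y_cam, -x_cam * y_cam, -x_cam],
    ]

def feature_error(s, s_star):
    """Eq. (5): e = s - s*, with s and s* given as (x, y) pairs."""
    return [s[0] - s_star[0], s[1] - s_star[1]]

if __name__ == "__main__":
    # Hypothetical intrinsics; real values come from camera calibration.
    u0, v0, lam_x, lam_y = 320.0, 240.0, 600.0, 600.0
    s = normalize_pixel(380.0, 240.0, u0, v0, lam_x, lam_y)
    s_star = (0.0, 0.0)  # desired feature location: image center
    print(interaction_matrix(s[0], s[1], z_d=2.0))
    print(feature_error(s, s_star))
```

The first three columns of the matrix scale with 1/z_d, which is why a depth estimate (here from the lidar) is needed, while the last three columns depend only on the normalized image coordinates.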
The image Jacobian matrix is decomposed into translational and rotational components, and the rotational components are isolated from the control law as follows [23]:

$$\begin{bmatrix} u_x \\ u_y \\ u_z \end{bmatrix} = J_v^{-1}\left(-\beta e(t) - J_\omega \begin{bmatrix} \omega_x \\ \omega_y \\ \omega_z \end{bmatrix}\right), \tag{7}$$

where $J_v$ is the matrix formed by stacking the first three columns of the image Jacobian, $J_\omega$ is the matrix formed by stacking the last three columns of the image Jacobian, and $[u_x, u_y, u_z]^T$ are the camera frame translational velocities. The camera frame's X-axis is aligned with the X-axis of the hexacopter's body frame, and the rotation matrix that transforms a vector from the camera frame to the body frame is constructed. Finally, the camera frame translational velocities are rotated into the hexacopter's body frame and sent to the flight controller as velocity setpoints.

FIGURE 7: AUTONOMOUS OBJECT DETECTION, TRACKING AND GRASPING PIPELINE (block diagram: object detection, object tracking and image based control on the high level computer; position control, attitude control, thrust allocator, position estimation and attitude estimation on the flight controller; motor dynamics and rigid body dynamics; optical flow camera and IMU as sensors)

REAL TIME FLIGHT TESTS

This section outlines the experimental setup for executing the flight tests. The motion capture data were used solely to demonstrate the trajectory of the hexacopter and were not used for motion planning or control.

Hardware Setup

The low-level motion estimation and control modules were implemented on the PIXHAWK. IMUs were used for attitude estimation. An optical flow sensor [24] and a lidar were used for localization. A standard 5 megapixel monocular camera was used for visual servoing tasks. The camera was connected over USB to the high-level computer. The UP-Board [25] was used as the high-level computer for implementing object detection, tracking and trajectory planning, and for communicating with the flight controller over a serial protocol. A simple communication framework was developed using the Boost ASIO libraries [26] and ZeroMQ [27] to communicate between the high-level computer and the flight controller. Motion capture data was collected at 100 Hz to log the trajectory of the hexacopter. A low-level autonomous grasping module was implemented to actuate the soft grasper when the hexacopter completed autonomous landing on the object. As the grasper and the monocular camera were mounted at different locations, the grasper's location was calculated with respect to the camera's location. In the camera frame, the grasper's x-y coordinates were (-0.15, 0.05). These offsets were scaled with the current depth and were taken into account in the calculation of $s^{*}(t)$, the desired location of the detected object.

Experimental Results

The IBVS scheme was implemented in real time and the complete pipeline is shown in Figure 7. The object detection, tracking and IBVS scheme were implemented on the high level computer. The position control module received desired velocity commands from the high level computer. Desired position commands were generated from the desired velocity commands in the position control module, and a cascaded PID control structure was used to control the velocity and position. The optical flow camera generated the raw body frame velocities, represented by $(v_x, v_y, v_z)$, which were used by the position estimation module to estimate the current inertial frame position and velocity, represented by $(x_c, y_c, z_c, \dot{x}_c, \dot{y}_c, \dot{z}_c)$. The position control module generated a desired rotation matrix, $R_{des}$, which was used by the attitude control module to generate the control torques.
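The decoupled translational law of Eq. (7) can be sketched as below. Two assumptions are made for this illustration and are not stated in the paper: because J_v is a 2 × 3 matrix for a single point feature, the inverse is taken here as the Moore-Penrose right pseudo-inverse, and β is treated as a scalar gain. The function names are hypothetical.

```python
def split_jacobian(j):
    """Split a 2x6 image Jacobian into J_v (first 3 columns) and
    J_omega (last 3 columns)."""
    return [row[:3] for row in j], [row[3:] for row in j]

def pinv_2x3(a):
    """Right pseudo-inverse A^T (A A^T)^-1 of a full-row-rank 2x3 matrix.
    Assumption of this sketch: stands in for J_v^{-1} in Eq. (7)."""
    g00 = sum(x * x for x in a[0])
    g01 = sum(x * y for x, y in zip(a[0], a[1]))
    g11 = sum(y * y for y in a[1])
    det = g00 * g11 - g01 * g01
    if abs(det) < 1e-12:
        raise ValueError("J_v is rank deficient")
    inv = [[g11 / det, -g01 / det], [-g01 / det, g00 / det]]
    return [[a[0][k] * inv[0][c] + a[1][k] * inv[1][c] for c in range(2)]
            for k in range(3)]

def camera_velocity(j, e, omega, beta):
    """Eq. (7): u = J_v^+ (-beta * e - J_omega * omega), scalar beta."""
    j_v, j_w = split_jacobian(j)
    rhs = [-beta * e[i] - sum(j_w[i][k] * omega[k] for k in range(3))
           for i in range(2)]
    p = pinv_2x3(j_v)
    return [p[k][0] * rhs[0] + p[k][1] * rhs[1] for k in range(3)]

if __name__ == "__main__":
    # Feature at the image center, depth 2 (illustrative values).
    j = [[-0.5, 0.0, 0.0, 0.0, -1.0, 0.0],
         [0.0, -0.5, 0.0, 1.0, 0.0, 0.0]]
    print(camera_velocity(j, e=[0.1, -0.2], omega=[0.0, 0.0, 0.0], beta=1.0))
```

With the pseudo-inverse, the returned velocity is the minimum-norm translational command for which the predicted feature motion, J_v u + J_ω ω, equals −β e, so the measured body rates are compensated rather than commanded.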
The desired thrust, $T_{des}$, and the control torques, $(\tau_\theta, \tau_\phi, \tau_\psi)$, are sent to the thrust allocator, which allocates thrust to every rotor based on the geometry of the hexacopter.

FIGURE 8: HEXACOPTER AND SOFT GRASPER HARDWARE SETUP

The pipeline has the following steps:

1. The hexacopter started an autonomous zigzag scanning pattern.
2. Once the object was detected, the scanning stopped and the hexacopter localized itself with respect to the object using visual servoing.
3. After localization, the hexacopter autonomously landed on the object and grasped it.
4. After a successful grasp, the hexacopter waited for 10 seconds and then autonomously took off and returned towards the home location.

Figure 9 shows the motion capture data of the hexacopter’s trajectory for autonomous object scanning, detection and grasping. The different sections of the trajectory planning pipeline are represented with different colors. The object was located at (-2.262, -0.244, -0.334). The hexacopter successfully detected and grasped the object and returned towards the home location. Figure 10 shows the top view of the trajectory, demonstrating the zigzag scanning pattern of the hexacopter. The transition from scanning to localization, once the object was detected, is also shown. After grasping the object, the hexacopter took off and loitered at its current location before returning towards its home location. The hexacopter performed the loiter to search for other objects of interest in the scene. Fusing GPS and optical flow measurements would substantially reduce the error between the start location and the return location shown in the plot. The hexacopter lands on the object to grasp it, rather than hovering, because of the ground-wash it experiences when it is close to the ground.

FIGURE 9: TRAJECTORY FOR AUTONOMOUS OBJECT SCANNING, DETECTION AND GRASPING

FIGURE 10: TOP VIEW OF THE TRAJECTORY FOR AUTONOMOUS OBJECT SCANNING, DETECTION AND GRASPING

The precise localization of the hexacopter with respect to the object is shown in Figure 11, which plots the position of the hexacopter and the position of the ball during the localization phase of the trajectory pipeline. The steady-state error is defined as the difference between the position of the center of mass of the hexacopter and that of the ball after the hexacopter has reached a steady state. The steady-state errors along the X-axis and Y-axis were -0.011 m and 0.0181 m, respectively. The Euclidean steady-state error was 0.021 m ($\sqrt{0.011^2 + 0.0181^2} \approx 0.021$).

FIGURE 11: MOTION CAPTURE DATA OF HEXACOPTER’S AND OBJECT’S POSITION DURING DETECTION

Figure 12 shows a sequence of images from a video demonstration of the autonomous object detection and grasping. The video demonstration can be accessed through the following link: https://www.youtube.com/watch?v=Mcomn3Bg_MQ.

FIGURE 12: DEMONSTRATION OF AUTONOMOUS OBJECT DETECTION AND GRASPING

The grasper performance was evaluated for tip force and maximum lifting capacity. To verify the force generated by the tip of each individual finger of the grasper, the tip of one finger was placed against a load cell, with the base of the actuator fixed level with the tip starting position. The top of the actuator was fixed to prevent bending, so that all forces generated during pressurization were transmitted to the tip of the actuator. The measured tip force was 11 ± 1.1 N, averaged across three trials. The payload of the grasper was tested by positioning the grasper over a box, as shown on the right in Figure 13. Weights were added to the inside of the box until the box began to slip out of the firm hold of the grasper. Three iterations of this procedure showed an average maximum payload of 1.5 ± 0.5 kg when pressurized to 100 kPa.

FIGURE 13: PERFORMANCE OF A SINGLE FINGER OF THE GRASPER, EVALUATED FOR TIP FORCE (LEFT), AND THE MAXIMUM PAYLOAD OF THE GRASPER WHEN PRESSURIZED TO 100 kPa (RIGHT)

Finally, the suction mechanism was tested individually for its ability to collect liquid samples. The tubing at the base of the suction mechanism was submerged in water and the outer chamber was pressurized to the final pressure of 100 kPa. Pressure was released from the outer chamber to collect liquid, and the chamber was then pressurized again to release the liquid onto a petri dish to be measured. This process was repeated a total of five times. The suction mechanism was able to collect 3.2 ± 0.2 mL of liquid, which was within 9.8% error of the predicted value of 2.9 mL of displaced volume from the FEM model.

CONCLUSION AND FUTURE WORK

In this paper, a fully autonomous unmanned aerial system, equipped with low-cost sensors and a robotic soft grasper, was demonstrated to perform object detection, tracking and grasping. Robust low-level motion estimation and control modules improved the hexacopter’s localization with respect to the object. An IBVS scheme was developed and implemented for visually guiding the hexacopter to perform autonomous grasping. A durable robotic soft grasper, actuated by an onboard pneumatic system, was used for grasping. With the use of a soft grasper, the complex task of grasping objects of different shapes and sizes was made straightforward. This pipeline could also potentially be used for inspection, manipulation and transportation tasks.

Future work includes integrating rafts into the hexacopter base for smooth landing on water surfaces, along with an additional camera to improve object detection and tracking. The soft grasper’s performance will be evaluated while grasping entities of different shapes and sizes. A combination of IBVS and a position based visual servo (PBVS) approach will be developed, and its performance will be compared to the current approach. Finally, outdoor flight tests will be executed for collecting solid and liquid contaminants from water canals.

ACKNOWLEDGMENT

This project is supported by Salt River Project (SRP), Arizona. The authors would like to thank Michael Ploughe at Water Quality Services, SRP, for his support and guidance in this project. Carly Thalman is funded by the National Science Foundation, Graduate Research Fellowship Program (NSF-GRFP).

REFERENCES

[1] Zarbin, E., 2001. “Desert land schemes: William J. Murphy and the Arizona Canal Company”. The Journal of Arizona History, 42(2), pp. 155–180.
[2] Filippone, A., 2006. Flight performance of fixed and rotary wing aircraft. Elsevier.
[3] Agrawal, K., and Shrivastav, P., 2015. “Multi-rotors: A revolution in unmanned aerial vehicle”. International Journal of Science and Research, 4(11), pp. 1800–1804.
[4] Thomas, J., Polin, J., Sreenath, K., and Kumar, V., 2013. “Avian-inspired grasping for quadrotor micro UAVs”. In ASME 2013 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, American Society of Mechanical Engineers, pp. V06AT07A014–V06AT07A014.
[5] Pounds, P. E., and Dollar, A. M., 2010. “Towards grasping with a helicopter platform: Landing accuracy and other challenges”. In Australasian Conference on Robotics and Automation, Australian Robotics and Automation Association (ARAA), Citeseer.
[6] Kim, S.-J., Lee, D.-Y., Jung, G.-P., and Cho, K.-J., 2018. “An origami-inspired, self-locking robotic arm that can be folded flat”. Science Robotics, 3(16), pp. 1–10.
[7] Orsag, M., Korpela, C., Pekala, M., and Oh, P., 2013. “Stability control in aerial manipulation”. In American Control Conference (ACC), 2013, IEEE, pp. 5581–5586.
[8] Polygerinos, P., Wang, Z., Overvelde, J. T., Galloway, K. C., Wood, R. J., Bertoldi, K., and Walsh, C. J., 2015.
“Modeling of soft fiber-reinforced bending actuators”. IEEE Transactions on Robotics, 31(3), pp. 778–789.
[9] Mosadegh, B., Polygerinos, P., Keplinger, C., Wennstedt, S., Shepherd, R. F., Gupta, U., Shim, J., Bertoldi, K., Walsh, C. J., and Whitesides, G. M., 2014. “Pneumatic networks for soft robotics that actuate rapidly”. Advanced Functional Materials, 24(15), pp. 2163–2170.
[10] Hao, Y., Wang, T., Ren, Z., Gong, Z., Wang, H., Yang, X., Guan, S., and Wen, L., 2017. “Modeling and experiments of a soft robotic gripper in amphibious environments”. International Journal of Advanced Robotic Systems, 14(3), p. 1729881417707148.
[11] Galloway, K. C., Becker, K. P., Phillips, B., Kirby, J., Licht, S., Tchernov, D., Wood, R. J., and Gruber, D. F., 2016. “Soft robotic grippers for biological sampling on deep reefs”. Soft Robotics, 3(1), pp. 23–33.
[12] Mishra, S., and Zhang, W., 2016. “Hybrid low pass and de-trending filter for robust position estimation of quadcopters”. In ASME 2016 Dynamic Systems and Control Conference, DSCC 2016, American Society of Mechanical Engineers, pp. V002T29A004–V002T29A004.
[13] Mishra, S., and Zhang, W., 2017. “A disturbance observer approach with online Q-filter tuning for position control of quadcopters”. In American Control Conference (ACC), 2017, IEEE, pp. 3593–3598.
[14] Lee, T., Leok, M., and McClamroch, N. H., 2010. “Geometric tracking control of a quadrotor UAV on SE(3)”. In 49th IEEE Conference on Decision and Control (CDC), pp. 5420–5425.
[15] de Payrebrune, K. M., and O’Reilly, O. M., 2016. “On constitutive relations for a rod-based model of a pneu-net bending actuator”. Extreme Mechanics Letters, 8, pp. 38–46.
[16] Rothemund, P., Ainla, A., Belding, L., Preston, D. J., Kurihara, S., Suo, Z., and Whitesides, G. M., 2018. “A soft, bistable valve for autonomous control of soft actuators”. Science Robotics, 3(16), p. eaar7986.
[17] Li, H., Burggraf, O., and Conlisk, A., 2002. “Formation of a rotor tip vortex”. Journal of Aircraft, 39(5), pp. 739–749.
[18] Light, J. S., 1989. “Tip vortex geometry of a hovering helicopter rotor in ground effect”.
[19] Marchand, É., Spindler, F., and Chaumette, F., 2005. “ViSP for visual servoing: a generic software platform with a wide class of robot control skills”. IEEE Robotics & Automation Magazine, 12(4), pp. 40–52.
[20] Ballard, D. H., 1987. “Generalizing the Hough transform to detect arbitrary shapes”. In Readings in computer vision. Elsevier, pp. 714–725.
[21] Chaumette, F., 1998. “Potential problems of stability and convergence in image-based and position-based visual servoing”. In The confluence of vision and control. Springer, pp. 66–78.
[22] Santamaria-Navarro, À., and Andrade-Cetto, J., 2013. “Uncalibrated image-based visual servoing”. In Robotics and Automation (ICRA), 2013 IEEE International Conference on, IEEE, pp. 5247–5252.
[23] Corke, P. I., and Hutchinson, S. A., 2001. “A new partitioned approach to image-based visual servo control”. IEEE Transactions on Robotics and Automation, 17(4), pp. 507–515.
[24] Honegger, D., Meier, L., Tanskanen, P., and Pollefeys, M., 2013. “An open source and open hardware embedded metric optical flow CMOS camera for indoor and outdoor applications”. In Robotics and Automation (ICRA), 2013 IEEE International Conference on, IEEE, pp. 1736–1741.
[25] UpWiki, 2017. Hardware specification - UpWiki. [Online; accessed 1-April-2018].
[26] Kohlhoff, C., 2013. “Boost.Asio”. Online: http://www.boost.org/doc/libs/1, 48(0), pp. 2003–2013.
[27] Hintjens, P., 2013. ZeroMQ: messaging for many applications. O’Reilly Media, Inc.