Contents

The Parser Generator

Parsers and Grammars

What Does a Parser Do?

Lexical Analysis: The Scanner

The Components of a Parser

Grammars

How to specify grammars

BNF notational conventions

The Different Types of Parsers

The Parser Generator

Files containing the parser generator

How to Compile and Use the Parser Generator

A Simple Example Using the Parser Generator

Clarifying the underlying concepts

The Complications: Precedence, Associativity and Ambiguity

Input to Parser Generator

Creating a Simple Calculator

Converting to Parser Generator Syntax

Identifying User-Defined Domains/Productions

Substituting Parser Generator Syntax

Developing Priority Groups

Separating Productions into Priority Groups

Ordering the Priority Groups

Ordering Productions within Each Priority Group

Identifying Right-Associative Productions

Adding Separator Information to List Productions

Describing the Output Terms

Preserving Token Locations

Generating the Parser

Using the Generated Parser

Lexical Analysis

Parsing

Using the Parser's Output

Filling in Holes

Improving the User Interface

False Alarms

Changing syntax_error/2

Changing evaluate__expression/1

Additional Clauses for expect/3

Extra Tokens

The Upgraded Program

The Scanner Revisited

Calculator Wrap-up

Extending the Calculator Project

Bootstrapping the Parser Generator

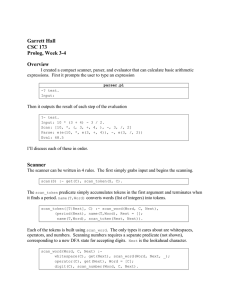

The Parser Generator

This document demonstrates how to use the parser generator to take a grammar that you specify and automatically create a parser for that grammar. The document is organized in five sections.

Section One gives an overview of parsers, grammars and parser generators, and how they work. It is intended to introduce these topics to those with a limited knowledge of parsing. Section Two discusses how to use the parser generator and illustrates the development process by means of a simple example. Section Three explains in detail how to define languages by grammars that are acceptable to the parser generator. Using that information, Section Four then takes you on a tour through the development of a small compiler, which takes advantage of the more advanced features of generated parsers, such as error handling. Finally, for the very hardy, Section Five discusses how to recreate the parser generator itself using "bootstrapping."

Parsers and Grammars

What Does a Parser Do?

A parser is a program that is able to recognize the underlying structure in a source

text. For example, a Pascal compiler uses a parser to recognize the if, while,

repeat and case statements and the procedures, functions and expressions in a

Pascal source file. Parsers are used not only with programming languages but also

with command interpreters to interpret user input for various types of programs—

expert system shells and natural-language interfaces, for example.

A parser translates source text into a format that is suitable for the next phase of a

compiler, interpreter or other program. For example, using the output from a

parser, the next phase of a compiler would be to generate the corresponding

object code.

Before the real process of parsing can begin, however, there is a preliminary stage

in which the source text must be broken up into tokens, or logical units that mean

something in the language under consideration. This stage is carried out by part of

the parser called a scanner (sometimes this is referred to as the lexical analyzer).

Lexical Analysis: The Scanner

The scanner is perhaps the simplest part of any compiler. It reads the source input

a character at a time, searching for the next token. For example, given:

NewX = OldY + 1;

the scanner in the PDC Prolog compiler would break this string up into the

meaningful tokens:

NewX, =, OldY, +, 1, ;

A scanner can also attach some attributes to each token. For example, the tokens

NewX and OldY in the above Prolog statement are represented as tokens of type

"identifier," with their names as attributes, id("NewX"), id("OldY").

The file XSCAN.PRO contains a simple scanner written in PDC Prolog. Note that

much of the work is done by the standard predicate fronttoken, which itself

represents a scanner, in that it breaks strings up into potential tokens; the rest of

the program serves to classify or remap those tokens into the ones defined for a

given language.

    DOMAINS
      TOK  = id(STRING);
             int(INTEGER);
             plus;
             minus;
             mult;
             if_;
             then;
             else;
             write_;
             lpar;
             rpar
      TOKL = TOK*

    PREDICATES
      tokl(STRING, TOKL)
      str_tok(STRING, TOK)

Visual Prolog 4.0, Beta 2.0, 6/17/2019

Page 3

    CLAUSES
      /* tokl: split the string into lexemes with fronttoken and classify each one */
      tokl("", []) :- !.
      tokl(STR, [TOK|TOKL]) :-
          fronttoken(STR, STRTOK, RESTSTR),
          str_tok(STRTOK, TOK),
          tokl(RESTSTR, TOKL).

      /* str_tok: map one lexeme to its token */
      str_tok("+", plus) :- !.
      str_tok("-", minus) :- !.
      str_tok("*", mult) :- !.
      str_tok("(", lpar) :- !.
      str_tok(")", rpar) :- !.
      str_tok("if", if_) :- !.
      str_tok("then", then) :- !.
      str_tok("else", else) :- !.
      str_tok("write", write_) :- !.
      str_tok(INTSTR, int(INTEGER)) :- str_int(INTSTR, INTEGER), !.
      str_tok(ID, id(ID)) :- isname(ID), !.

      /* maketok/4 appears in the original listing but is neither declared
         above nor called by tokl */
      maketok(STR, TOK, S, S) :- str_tok(STR, TOK), !.

To see what it does, supply the goal:

tokl("if a then write(6)", TOKL)

The result will be:

TOKL = [if_, id("a"), then, write_, lpar, int(6), rpar]

Notice that the scanner token for if is if_, and that write becomes write_. This is to avoid confusion between Prolog keywords and scanner tokens. Likewise, the tokenized forms of ( and ) are lpar and rpar, respectively. Also, integer values in the source text are given in terms of the functor int() and identifiers in terms of id().

More specialized requirements for a scanner might involve a different algorithm

for grouping sequences of characters into tokens than that embodied in

fronttoken, though fronttoken will suffice for the scanners illustrated in this

document.
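For readers who want to experiment outside PDC Prolog, the scanner above can be approximated in Python. This is a minimal sketch and not a translation of XSCAN.PRO: the regular expression stands in for fronttoken (an assumption about how lexemes are split), tokens with attributes are represented as tuples, and the names FIXED_TOKENS and LEXEME are hypothetical.

```python
import re

# Keyword and operator spellings mapped to token names, mirroring str_tok.
FIXED_TOKENS = {
    "+": "plus", "-": "minus", "*": "mult",
    "(": "lpar", ")": "rpar",
    "if": "if_", "then": "then", "else": "else", "write": "write_",
}

# A lexeme is an integer, a name, or any single non-space character.
# This stands in for fronttoken and is only an approximation of it.
LEXEME = re.compile(r"\d+|[A-Za-z_][A-Za-z0-9_]*|\S")

def tokl(text):
    """Return the token list for text, or raise ValueError on an unknown lexeme."""
    tokens = []
    for lexeme in LEXEME.findall(text):
        if lexeme in FIXED_TOKENS:
            tokens.append(FIXED_TOKENS[lexeme])
        elif lexeme.isdigit():
            tokens.append(("int", int(lexeme)))      # attribute: the value
        elif lexeme[0].isalpha() or lexeme[0] == "_":
            tokens.append(("id", lexeme))            # attribute: the name
        else:
            raise ValueError(f"unrecognized lexeme: {lexeme!r}")
    return tokens
```

Calling tokl("if a then write(6)") yields the same sequence as the Prolog goal above, with id("a") and int(6) written as the tuples ("id", "a") and ("int", 6).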

The Components of a Parser

In translating a source language, a parser usually performs the following sequence

of steps:

1. Asks the scanner for the next token in the input source.

2. Checks that the token corresponds to one of the allowed patterns as specified

by the language's grammar; that is, performs basic error checking.

3. Imposes on the token, in relation to previous and/or succeeding tokens, a

structure that can be used by subsequent phases of the compiler, interpreter or

other program.

Grammars

The structures to be recognized by a parser are normally described by a grammar.

The advantages of describing the input for a parser by a grammar include:

A grammar gives a precise, readily comprehensible specification of the input, that is, of the language to be parsed.

Creating an efficient parser for certain kinds of well-designed grammars can easily be automated.

A grammar helps determine the structure of the program that should handle the input for the parser. In our case, there is a close connection between the grammar and the domain definitions for the Prolog program that should handle the input.

We are all familiar with English grammar from school. Thus, "My dog eat meat"

is considered bad grammar because the form of the verb "to eat" that goes with

"my dog" should be "eats," not "eat." There are many different kinds of

grammars, including those for French, Swedish, German, Arabic and even PDC

Prolog. It is a language's grammar that specifies which tokens and which

sequences of tokens legally belong to that language. What follows is a brief

introduction to how grammars are specified in computing.

How to specify grammars

There are various ways of describing a grammar. For input to the parser generator, we use a type of grammar specification very close to what is known as BNF (Backus-Naur Form). The precise differences between BNF grammars and parser-generator grammars will be explained later. To understand a BNF grammar, let's first define some terms.

A grammar generally involves four entities: terminals, non-terminals, a start

symbol, and production rules.

Terminals are the basic symbols from which sentences are created in a language.

The word token, as used above, is a synonym for terminal. For example, in the

sentence mary likes big cars the terminals are mary, likes, big, and cars.

Non-terminals are symbols that describe the general types of object allowed in a

grammar. (In the English language, for example, these would correspond to the

types "verbs," "adjectives," "nouns," etc., as opposed to any actual words). Using

terminals and non-terminals, we then list the allowed production rules for forming

valid sentences in the language specified by the grammar.

Production rules define the ways in which grammatical structures can be built

from one another and from terminals. The syntax for a production rule is:

<name-of-non-terminal> ::= a sequence of non-terminals and/or terminals

where the ::= symbol is read as "is defined to be".

For example, given the following production rule:

<SENTENCE> ::= <SUBJECT> likes <OBJECT>

there is one terminal, likes, and the non-terminals are <SENTENCE>,

<SUBJECT> and <OBJECT>. If <SUBJECT> and <OBJECT> are now defined

as follows:

<SUBJECT> ::= mary | john

<OBJECT> ::= dogs | cats

(where the vertical bar ( | ) should be read as "or", so that a <SUBJECT> is one of

the words mary or john), then all possible <SENTENCE>s are as follows:

    mary likes dogs
    mary likes cats
    john likes dogs
    john likes cats

One non-terminal symbol in a grammar is always selected as the start symbol.

As its name implies, the start symbol is where a parser begins when determining

how to parse its source input. It is also frequently used to denote the language

being defined. If our example above:

<SENTENCE> ::= <SUBJECT> likes <OBJECT>

<SUBJECT> ::= mary | john

<OBJECT> ::= dogs | cats

was regarded as a complete grammar, then we would take <SENTENCE> to be

its start symbol and read this as a grammar for sentences.
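To make the idea of "all possible sentences" concrete, the three production rules can be written as a Python dictionary and expanded mechanically from the start symbol. This is only an illustrative sketch: the names GRAMMAR and expand are hypothetical, and the approach works only for grammars without recursion, such as this one.

```python
from itertools import product

# Each non-terminal maps to its alternatives; each alternative is a list of symbols.
GRAMMAR = {
    "SENTENCE": [["SUBJECT", "likes", "OBJECT"]],
    "SUBJECT": [["mary"], ["john"]],
    "OBJECT": [["dogs"], ["cats"]],
}

def expand(symbol):
    """Return every terminal string derivable from symbol (non-recursive grammars only)."""
    if symbol not in GRAMMAR:          # a terminal stands for itself
        return [symbol]
    results = []
    for alternative in GRAMMAR[symbol]:
        parts = [expand(s) for s in alternative]
        # Combine every choice for each symbol of the alternative.
        for combo in product(*parts):
            results.append(" ".join(combo))
    return results

sentences = expand("SENTENCE")
```

Starting from SENTENCE, the expansion produces exactly the four sentences listed earlier, which is what it means for SENTENCE to be the start symbol of this grammar.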

BNF notational conventions

Some notational conventions to keep in mind when using BNF are:

Non-terminals are surrounded by < and > to easily distinguish them from

terminal symbols. An example of a non-terminal is <STATEMENT>.

The asterisk (*) is used in production rules to indicate the possibility of zero

or more instances of the non-terminal or terminal symbol. For example, a

language could be defined as a series of zero or more statements:

<LANGUAGE> ::= <STATEMENT>*

The plus sign (+) is used in production rules to indicate the possibility of one or

more instances of the non-terminal or terminal symbol. For example, a number in

the above language could be defined as <NUMBER> ::= <DIGITS>+ , indicating

that a number is made up of one or more digits.

The | mark is used to indicate "or" in a production rule. For example,

<LETTER-OR-DIGIT> ::= <LETTER> | <DIGIT>

A non-terminal surrounded by [ and ] in a production rule may be used zero

or one times. That is, it is optional. For example,

<SIGNED-NUMBER> ::= [<SIGN>] <NUMBER>

Thus, the grammar

    <SENTENCE>  ::= <SUBJECT> likes <OBJECT>
                  | does <SUBJECT> like <OBJECT>
    <SUBJECT>   ::= john | mary
    <OBJECT>    ::= <ADJECTIVE> <NOUN>
    <ADJECTIVE> ::= big | medium | small
    <NOUN>      ::= books | cars

defines a total of five production rules: one defining the non-terminal <SENTENCE> and one for each of the four remaining non-terminals <SUBJECT>, <OBJECT>, <ADJECTIVE> and <NOUN>. (Notice that the non-terminal <OBJECT> is itself defined in terms of other non-terminals). The words likes, does, like, john, mary, big, medium, small, cars and books are the terminal symbols of the language. In other words, they are the symbols from which all valid sentences are made up according to the production rules.

It follows that:

mary likes big cars

is a valid sentence in the language described by this grammar because of the

following chain of productions:

1. A <SENTENCE> takes the form <SUBJECT> likes <OBJECT>.

2. mary is a <SUBJECT>.

3. An <OBJECT> takes the form <ADJECTIVE> <NOUN>.

4. big is an <ADJECTIVE>.

5. cars is a <NOUN>.

Hence, big cars is an <OBJECT> and mary likes big cars is a <SENTENCE>.

The Different Types of Parsers

There are two main classes of parsers: top-down and bottom-up. Given a sentence

to parse, top-down parsers start with the most general production in the grammar

(the start symbol) and try to produce a list of productions that generate that

sentence (exactly as we did when trying to prove that mary likes big cars was

valid in our introductory example).

On the other hand, bottom-up parsers start with a given sentence (i.e., a list of

terminal symbols) and try to work backwards to prove that it is an instance of one

of the grammar's production rules. Because of this, a bottom-up parser usually

requires access to a parsing table. A parsing table for a grammar is a table in

which the entries are production rules from that grammar and the columns and

rows are indexed by terminals and non-terminals. The table simply records which

production rule the parser should use when a given terminal symbol is next

considered in the parser's attempt to verify the input sentence.

When working through the given sentence, a bottom-up parser looks ahead a

certain amount. Otherwise, it wouldn't be able to determine, for example, whether

a sentence that begins with if is going to be of the form if...then... or of the form

if...then...else...

Most parser generators produce a bottom-up parser, because it's relatively easy to

generate a parsing table to drive a bottom-up parser for almost any reasonable

grammar.

On the other hand, if a top-down parser is to be constructed that is sufficiently

general so that each of the parsers produced by the parser generator can be a

special case of it, then the initial, general parser must be able to backtrack--a

feature that comes free with Prolog.

The parser generator produces top-down parsers for several reasons, the main one

being that it is then easier to build structures during the parsing process. Further,

the difference list technique in Prolog makes it relatively easy to construct a very

efficient top-down parser with an arbitrary look-ahead length combined with

backtracking. As you will discover, the resulting parsing speed of a generated

parser can be very fast, particularly if you code the accompanying scanner (lexical

analyzer) in C or assembler.

The Parser Generator

A parser generator is a program that generates a parser from the specification of a

grammar. Thus, from a BNF-like grammar, our parser generator produces a

Prolog program capable of recognizing sentences conforming to that grammar.

A parser generator makes it easier to write parsers. Even with Prolog, which was

originally designed with natural language parsing in mind, it is still a relatively

complicated task to write a parser on your own. Since not everyone has the time

to develop expertise in writing parsers (as well as in everything else they must

know about in computing), a parser generator allows even non-experts to

construct parsers.

Files containing the parser generator

The parser generator is built from the files, which are all placed in the VPI\PROGRAMS\PARSER subdirectory:

How to Compile and Use the Parser Generator

Before using the parser generator it is necessary to compile it to an .EXE file.

The first step is to load the file containing your prepared parser definition (or to

type it in directly using the edit option). Activating the main menu entry,

Generate parser, will then generate the required parser, which will consist of

two parts, each placed in a separate file. If the name of the file containing the

parser generator input is PASCAL.GRM, the generated parser's domain

declarations will be in the file PASCAL.DOM, and the predicate declarations and

clauses will be generated in the file PASCAL.PAR. The generated parser can then

be viewed by selecting Display parser from the main menu.

A Simple Example Using the Parser Generator

There are many small but important details to be explained before considering a

realistic example of parser generator use. These will be discussed in later sections,

but in this section we will give an extremely simple example just to familiarize

you with the mechanics of getting a parser generated: which files you should

create and which files are automatically generated.

There are two important things to keep in mind while reading the following

sections. First, for every suitable grammar given as input, the parser generator

produces a Prolog program that is a parser for that grammar. Second, in every

case you must then write the accompanying scanner that tokenizes the source

language input so that it is suitable for use by the automatically generated parser.

Since we have provided examples of such scanners in the files XCALC.SCA, XCALC2.SCA and XCALCULA.SCA, it should generally require only minor modifications to make (a copy of) one of these work with a different grammar.

Our previous example of a sentence grammar, recast in Parser Generator format,

might look like this:

    productions
      SENTENCE  = SUBJECT likes OBJECT     -> likes(SUBJECT, OBJECT),
                  does SUBJECT like OBJECT -> does_like(SUBJECT, OBJECT)
      SUBJECT   = john -> john,
                  mary -> mary
      OBJECT    = ADJECTIVE NOUN -> attribute(ADJECTIVE, NOUN)
      ADJECTIVE = big -> big,
                  red -> red
      NOUN      = books -> books,
                  cars  -> cars

which, if input as SENTENCE.GRM, would generate a file

SENTENCE.DOM that looks like:

    /****************************************************************
                         DOMAIN DEFINITIONS
    ****************************************************************/
    DOMAINS
      SENTENCE  = likes(SUBJECT,OBJECT);
                  does_like(SUBJECT,OBJECT)
      SUBJECT   = john( );
                  mary( )
      OBJECT    = attribute(ADJECTIVE,NOUN)
      ADJECTIVE = big( );
                  red( )
      NOUN      = books( );
                  cars( )
      TOK       = likes( );
                  does( );
                  like( );
                  john( );
                  mary( );
                  big( );
                  red( );
                  books( );
                  cars( );
                  nil

and a file SENTENCE.PAR, which looks like

    /***************************************************************
                         PARSING PREDICATES
    ***************************************************************/
    PREDICATES
      s_sentence(TOKL,TOKL,SENTENCE)
      s_subject(TOKL,TOKL,SUBJECT)
      s_object(TOKL,TOKL,OBJECT)
      s_adjective(TOKL,TOKL,ADJECTIVE)
      s_noun(TOKL,TOKL,NOUN)

    CLAUSES
      s_sentence(LL1,LL0,likes(SUBJECT,OBJECT)):-
          s_subject(LL1,LL2,SUBJECT),
          expect(t(likes,_),LL2,LL3),
          s_object(LL3,LL0,OBJECT),!.
      s_sentence([t(does,_)|LL1],LL0,does_like(SUBJECT,OBJECT)):-!,
          s_subject(LL1,LL2,SUBJECT),
          expect(t(like,_),LL2,LL3),
          s_object(LL3,LL0,OBJECT).
      s_sentence(LL,_,_):-syntax_error(sentence,LL),fail.

      s_subject([t(john,_)|LL],LL,john):-!.
      s_subject([t(mary,_)|LL],LL,mary):-!.
      s_subject(LL,_,_):-syntax_error(subject,LL),fail.

      s_object(LL1,LL0,attribute(ADJECTIVE,NOUN)):-
          s_adjective(LL1,LL2,ADJECTIVE),
          s_noun(LL2,LL0,NOUN),!.

      s_adjective([t(big,_)|LL],LL,big):-!.
      s_adjective([t(red,_)|LL],LL,red):-!.
      s_adjective(LL,_,_):-syntax_error(adjective,LL),fail.

      s_noun([t(books,_)|LL],LL,books):-!.
      s_noun([t(cars,_)|LL],LL,cars):-!.
      s_noun(LL,_,_):-syntax_error(noun,LL),fail.

If you then took a suitable scanner, defined to produce lists of the tokens defined above (see the TOK domain above), the following sequence, entered as a goal

    tokl("does john like red cars", TOKLIST),
    s_sentence(TOKLIST, [], RESULT)

would bind RESULT to does_like(john,attribute(red,cars)). The issues of

what happens from there on, and whether or not that is a correct or sufficient

"interpretation" of the parsed text, are best addressed in the context of a particular

application, though clearly the choices made in the grammar specification will

contribute to the structure of the overall solution.
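The shape of the generated parser can be mirrored in Python to see how each parsing predicate consumes tokens and builds a term. This is a hedged sketch, not output of the parser generator: each function takes a token list and returns the term it built plus the remaining tokens, which plays the role of the two TOKL arguments in the Prolog clauses. ParseError, one_of and parse are hypothetical helper names, tokens are plain strings without cursor information, and the sketch needs no backtracking because the first token always decides which production applies.

```python
class ParseError(Exception):
    """Raised when no production matches the remaining tokens."""

def expect(token, tokens):
    """Consume one specific token and return the remaining list."""
    if tokens and tokens[0] == token:
        return tokens[1:]
    raise ParseError(f"expected {token!r}, got {tokens[:1]}")

def one_of(name, choices, tokens):
    """Consume a single token that must be one of the given choices."""
    if tokens and tokens[0] in choices:
        return tokens[0], tokens[1:]
    raise ParseError(f"syntax error in {name}: {tokens[:1]}")

def s_subject(tokens):
    return one_of("subject", ("john", "mary"), tokens)

def s_adjective(tokens):
    return one_of("adjective", ("big", "red"), tokens)

def s_noun(tokens):
    return one_of("noun", ("books", "cars"), tokens)

def s_object(tokens):
    adjective, rest = s_adjective(tokens)
    noun, rest = s_noun(rest)
    return ("attribute", adjective, noun), rest

def s_sentence(tokens):
    if tokens and tokens[0] == "does":      # does SUBJECT like OBJECT
        subject, rest = s_subject(tokens[1:])
        rest = expect("like", rest)
        obj, rest = s_object(rest)
        return ("does_like", subject, obj), rest
    subject, rest = s_subject(tokens)       # SUBJECT likes OBJECT
    rest = expect("likes", rest)
    obj, rest = s_object(rest)
    return ("likes", subject, obj), rest

def parse(text):
    """Split on whitespace, parse a sentence, and insist that all input is used."""
    term, rest = s_sentence(text.split())
    if rest:
        raise ParseError(f"unexpected trailing tokens: {rest}")
    return term
```

With this sketch, parse("does john like red cars") returns ("does_like", "john", ("attribute", "red", "cars")), the tuple counterpart of the Prolog term bound to RESULT.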

Clarifying the underlying concepts

While reading the detailed description of the parser generator in subsequent

sections, it is important to remember that, for every grammar given as input, the

parser generator produces a program similar in basic structure to the above

sentence parser. The actual parser generated is more complicated only because of

considerations of priority, associativity, efficiency and the detection of errors.

The next section examines a more complete example embracing these concepts,

but, first, in this section we will discuss the grammar used to specify input for the

parser generator in more detail, relate it to BNF grammars and take a closer look

at the "environment" of the generated parser. With these concepts clarified, you

will be ready to expand the example programs and eventually design your own

parser.

The Complications: Precedence, Associativity and Ambiguity

There are three particular issues that have to be addressed when dealing with a

realistic grammar. These are:

Precedence: Addition, multiplication and exponentiation are normally given

different priorities so that multiplication binds tighter than addition. These

different priorities are sometimes reflected in the grammar.

Associativity: In an expression like 2+3+4, the addition operator is usually

assumed to be left associative, which means that the operation 2 + 3 should be

carried out first, before the operation 3+4. In the expression 2^3^4, however,

the operation 3^4 should be carried out first, i.e., ^ is right associative.

Ambiguity: There is not always just one way to combine the input to a parser

into a grammatical structure. The if...then...else sentence is the most often

cited example. In the context of the above grammar, consider the

construction:

if a then

if b then sent1

else sent2

The grammar does not specify whether this construction should be interpreted as:

if a then ( if b then sent1 else sent2)

or as

if a then ( if b then sent1) else sent2

In the parser generator, these problems have been solved by making the following

constraints on the way in which the source language grammar should be

specified:

Precedence: Productions for one production name should be separated into

groups having the same priority. Thus, plus (+) and minus (-) should share the

same group, and multiplication (*) and division (/) should both belong to

another group. Groups are separated by the symbol --.

Associativity: Operators are by default left associative. If they are to be right

associative, then the relevant productions must be preceded by the keyword

rightassoc.

Ambiguity: This is solved by considering the order of the productions in such

a way that a parser will choose productions appearing earlier in the

production list in preference to those appearing later. In the above example

this means that, should the second of the above two interpretations be the one

required, the if sentence with an else should come before the if sentence

without an else.
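The effect of priority groups and of rightassoc can be illustrated with a small Python parser. This sketch uses precedence climbing, a standard technique chosen here for brevity; it is not claimed to be the method the parser generator itself uses. The OPERATORS table, its priority numbers and the function names are assumptions made for the illustration.

```python
import re

# (priority, right_associative) per operator; higher priority binds tighter.
# Operators with the same priority correspond to one priority group.
OPERATORS = {"+": (1, False), "-": (1, False),
             "*": (2, False), "/": (2, False),
             "^": (3, True)}

def tokenize(text):
    """Split text into numbers, operators and parentheses."""
    return re.findall(r"\d+(?:\.\d+)?|[-+*/^()]", text)

def parse_expr(tokens, pos=0, min_priority=1):
    """Parse tokens[pos:] and return (tree, next_pos) using precedence climbing."""
    left, pos = parse_primary(tokens, pos)
    while pos < len(tokens) and tokens[pos] in OPERATORS:
        op = tokens[pos]
        priority, right_assoc = OPERATORS[op]
        if priority < min_priority:
            break
        # A right-associative operator lets the same priority recur on its right side.
        next_min = priority if right_assoc else priority + 1
        right, pos = parse_expr(tokens, pos + 1, next_min)
        left = (op, left, right)
    return left, pos

def parse_primary(tokens, pos):
    """Parse a number or a parenthesized expression."""
    if pos >= len(tokens):
        raise ValueError("unexpected end of input")
    token = tokens[pos]
    if token == "(":
        tree, pos = parse_expr(tokens, pos + 1)
        if pos >= len(tokens) or tokens[pos] != ")":
            raise ValueError("missing closing parenthesis")
        return tree, pos + 1
    if token in OPERATORS or token == ")":
        raise ValueError(f"unexpected token {token!r}")
    return float(token), pos + 1

def parse(text):
    """Return the expression tree for text as nested (operator, left, right) tuples."""
    tokens = tokenize(text)
    tree, pos = parse_expr(tokens)
    if pos != len(tokens):
        raise ValueError(f"unexpected token {tokens[pos]!r}")
    return tree
```

Here parse("2+3+4") groups to the left as ("+", ("+", 2.0, 3.0), 4.0), while parse("2^3^4") groups to the right as ("^", 2.0, ("^", 3.0, 4.0)), and parse("2+3*4") places the multiplication below the addition because it belongs to a higher priority group.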

Input to Parser Generator

A formal specification of the format you must use when inputting information to

the parser generator is given at the end of this section. The formal specification is

in BNF, so refer back to "How to specify grammars" if you have any

questions about how BNF works. Note the distinction between the format or

syntax of the parser generator's input and its formal description. BNF notation is

used to describe a language that itself closely conforms to BNF notation.

First, let's follow through an informal specification in words.

Input for the parser generator consists of three different kinds of sections, each of

which is optional:

1. Specification of user-defined predicates.

2. Specification of user-defined domains.

3. Production rules.

If you wish to manually code some parsing predicates, they should be declared to

the parser generator at the beginning of your grammar definition, after the

keywords userdefined predicates. Similarly, user-defined domains should be

declared after the keywords userdefined domains and before the predicates.

Following the userdefined sections, there can be any number of production rule

sections, but the keyword productions must precede each such section. A

production begins with the name of that production in upper-case letters. There

are two kinds of productions: list productions and general productions.

A list production is a production name followed by an asterisk or a plus sign. An

asterisk means zero or more occurrences of that grammatical object can appear in

a valid sentence; a plus means one or more. Then, a list production can contain an

optional separator specification. When no separator is given, the list simply

doesn't have any separator symbols. For example, you can declare that an

expression list is a possibly empty list of expressions separated by commas as

follows:

EXPLIST = EXP* separator comma

This specification generates the following PDC Prolog domain declaration in the

domain definition file of the parser generator output (we'll give more details about

the generated parser later):

DOMAINS

EXPLIST = EXP*

This domain declaration is independent of whether an asterisk or a plus is used

and whether a separator is used or not.
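The behavior of a list production can be sketched in Python. This is an illustration under stated assumptions rather than the code the parser generator emits: parse_list and parse_int are hypothetical names, allow_empty=True corresponds to the asterisk form and allow_empty=False to the plus form, and tokens are plain Python values. In every case the result is an ordinary list, which matches the point that the generated domain does not depend on the asterisk, plus or separator.

```python
def parse_list(tokens, parse_item, separator=None, allow_empty=True):
    """Parse a list production and return (items, remaining_tokens).

    parse_item(tokens) must return (item, remaining_tokens) or raise ValueError.
    """
    items = []
    try:
        item, rest = parse_item(tokens)
    except ValueError:
        if allow_empty:              # the '*' form accepts zero items
            return items, tokens
        raise                        # the '+' form needs at least one
    items.append(item)
    while True:
        if separator is not None:
            if not rest or rest[0] != separator:
                return items, rest
            item, rest = parse_item(rest[1:])   # an item must follow a separator
        else:
            try:
                item, rest = parse_item(rest)
            except ValueError:
                return items, rest
        items.append(item)

def parse_int(tokens):
    """Hypothetical item parser: accepts a single integer token."""
    if tokens and isinstance(tokens[0], int):
        return tokens[0], tokens[1:]
    raise ValueError("integer expected")
```

For example, parse_list([1, "comma", 2, "comma", 3], parse_int, separator="comma") returns ([1, 2, 3], []), and the same call on an empty token list returns ([], []).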

A general production consists of one or more groups of individual productions,

each with the same priority. The priority groups are separated by two minus signs

(--), while the productions in each group are separated by a comma (,).

An individual production can be preceded by the keyword rightassoc if the production is right-associative. Then comes a (possibly empty) list of grammatical tokens. These are either names of other productions or scanner tokens. A scanner token can have an optional parameter list for those cases where the scanner places some attributes in the token, for example, the value of an integer or the name of an identifier.

After the list of grammatical tokens comes an arrow (->), which is followed by a

specification of the Prolog term that should be built from this production. This

term can have zero or more arguments, the arguments being the names of

productions or PDC Prolog terms.

Productions may also specify the source-text locations of one or more tokens,

using the :CURSOR keyword.

The production names in the list of grammatical tokens must be in the same order

and are assumed to be the same as the production names used in the

corresponding Prolog term: the first production name in the grammatical token

list must correspond to the first name in the term, and so on. If two corresponding

names are not equal, a conversion between the two names is inserted in the parser.

For example:

MYPRODNAME = upper(STRING) -> MYPRODNAME

generates the following parse-predicate:

    s_myprodname([t(upper(STRING),_)|LL],LL,MYPRODNAME) :- !,
        STRING = MYPRODNAME.

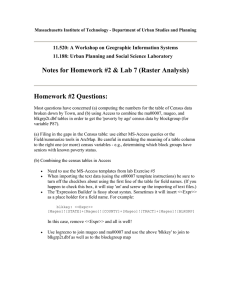

The BNF grammar in the following table shows how input for the parser

generator must be specified.

    <parser generator input> ::= userdefined productions <production names>
                               | userdefined domains <production names>
                               | productions <productions>

    <associativity>          ::= rightassoc

    <asterisk or plus>       ::= * | +

    <general production>     ::= <priority group> {-- <priority group>}*

    <grammatical tokens>     ::= <grammatical token>*

    <grammatical token>      ::= <production name>
                               | <scanner token> [:CURSOR]

    <individual production>  ::= [<associativity>] <grammatical tokens> -> <term>

    <lower case string>      ::= {a | b | c | d | e | f | g | h | i | j | k | l | m
                               | n | o | p | q | r | s | t | u | v | w | x | y | z}+

    <priority group>         ::= <individual production> {, <individual production>}*

    <production>             ::= <production name> = <production body>

    <productions>            ::= <production>+

    <production body>        ::= <general production>
                               | <production name> <asterisk or plus> [<separator>]

    <production name>        ::= <upper case string>

    <production names>       ::= <production name> {, <production name>}*

    <scanner token>          ::= <lower case string> (<production names>)
                               | <lower case string>

    <separator>              ::= <string>

    <term>                   ::= <lower case string>
                               | <lower case string> (<production names>)
                               | <production name>

    <upper case string>      ::= {A | B | C | D | E | F | G | H | I | J | K | L | M
                               | N | O | P | Q | R | S | T | U | V | W | X | Y | Z}+

Figure 1: BNF specification of the Grammar

Notice that there are several important differences between the standard BNF syntax described at the beginning of the document and the syntax to which the parser generator's input must conform:

The < and > characters that normally surround a non-terminal are not

allowed, and the non-terminals are capitalized.

Instead of the ::= operator to indicate the body of a production, the parser

generator uses =.

The parser generator provides support for priority groups and right

associativity.

The parser generator requires that each individual production be accompanied

with a description of the Prolog term that is to be constructed when that

production is recognized.

The next sections focus on two complete demonstrations using the parser

generator. Throughout these examples we'll be discussing issues generic to all

programs developed with the assistance of this tool.

Creating a Simple Calculator

Our first objective will be to build a program that accepts equations like

(20 * (12 + 14.5)) ^ 40

and returns the result. To start with, we'll need a grammar. Using BNF syntax,

let's describe what input to our "calculator" will look like. (Note that everything to

the right of each percent sign (%) is a comment and would not be part of

conventional BNF syntax).

<expr> ::= ( <expr> )          % parenthetical expressions
         | <expr> * <expr>     % multiplication
         | <expr> + <expr>     % addition
         | <expr> ^ <expr>     % exponentiation
         | <expr> - <expr>     % subtraction
         | <expr> / <expr>     % division
         | <number>            % floating-point/integer constants

Two things to note about this grammar:

The recursive use of <expr> provides support for expressions within an

expression.

Developing production rules for <number> is not necessary in our case

because PDC Prolog contains built-ins that recognize numeric constants.

Converting to Parser Generator Syntax

Our next task is to convert from the BNF representation to that of the parser

generator. This is a fairly straightforward process involving seven steps:

1. Identifying user-defined domains and productions.

2. Substituting parser generator syntax.

3. Developing the priority groups.

4. Identifying the right-associative productions (if any).

5. Adding optional separator info to list productions.

6. Describing, for each non-list production, the Prolog term that is to be

constructed when that production is found in the source text.

7. Identifying tokens whose location should be preserved in the output term.

As you'll see, not all of these steps are needed for our calculator example.

Identifying User-Defined Domains/Productions

In special situations, it might become necessary to define your own domains and

parsing predicates. For these situations, two special sections are supported:

userdefined domains and userdefined productions. After the appropriate section

heading, list the names (separate them with commas if there are more than one) of

the user-defined domains/ productions.

userdefined domains

DOMAIN1,DOMAIN2

userdefined productions

PRODUCTION1,PRODUCTION2

These sections must appear first in the parser generator input. Note that no user-defined sections are required for our calculator's grammar.

Substituting Parser Generator Syntax

The next step in converting a BNF to the syntax of the parser generator involves

making a few cosmetic changes to the current form of the grammar. This is a six-step process:

1. Remove the < and > characters that surround the non-terminals and shift the

name of each non-terminal to upper case.

2. Substitute a unique lower-case name for each of the terminals (+, -, ^, etc., in

our example).

3. Those non-terminals that you'd rather treat as terminals (perhaps because it

would be more convenient to recognize them at the lexical analysis stage)

should be represented as a standard domain surrounded by a functor.

<number> in our calculator's grammar is one such non-terminal.

4. Replace all ::= with =

5. Separate every production with a comma (,) instead of a bar ( | ).

6. Head the section with the word "productions".

For our calculator's BNF, the result of this step would be:

productions

EXPR = lpar EXPR rpar,

EXPR mult EXPR,

EXPR plus EXPR,

EXPR power EXPR,

EXPR minus EXPR,

EXPR div EXPR,

number (REAL)

Developing Priority Groups

This step involves grouping/ordering of the production rules and is performed in

three passes:

1. Separating the productions into priority groups.

2. Ordering the priority groups.

3. Ordering the productions within each priority group.

Separating Productions into Priority Groups

To be consistent with conventional rules of precedence, certain operators should

be given an identical evaluation priority (addition and subtraction are at one

priority, multiplication and division are at another, etc.). Production rules must

accordingly be grouped with others having the same priority.

For our calculator's grammar, four priority groups should be fairly apparent (the

double hyphen (--) is the parser generator's priority group separator, and

productions within each group are separated by a comma):

productions
   EXPR = lpar EXPR rpar,
          number(REAL)
       -- EXPR mult EXPR,
          EXPR div EXPR
       -- EXPR plus EXPR,
          EXPR minus EXPR
       -- EXPR power EXPR

Ordering the Priority Groups

Once the priority groups are established, they must be ordered so that the group

having the loosest binding appears first in the parser generator input. This

ordering will provide guidance when evaluating expressions, so that, instead of a

simple left-to-right evaluation, those operations that bind most tightly will be

performed before the others.

For example, in the expression 20+30*40-50, the multiplication (an operation that

binds more tightly than addition or subtraction) should be performed first.

The priority groups for our calculator's grammar should therefore be ordered as

follows:

productions
   EXPR = EXPR plus EXPR,
          EXPR minus EXPR
       -- EXPR mult EXPR,
          EXPR div EXPR
       -- EXPR power EXPR
       -- lpar EXPR rpar,
          number(REAL)
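The effect of binding strength on the expression 20+30*40-50 can be checked with a few lines of arithmetic. The sketch below is written in Python purely as an illustration (it is not parser generator input), and the variable names are made up for this example.

```python
# Evaluate 20 + 30 * 40 - 50 in two ways.
# Tightest binding first: the multiplication is performed before + and -.
by_precedence = (20 + (30 * 40)) - 50

# Naive left-to-right evaluation: operators are applied strictly in order.
left_to_right = ((20 + 30) * 40) - 50

print(by_precedence)   # 1170
print(left_to_right)   # 1950
```

Only the first result matches the conventional rules of precedence, which is what the ordering of the priority groups is meant to achieve.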

Ordering Productions within Each Priority Group

In situations where more than one production (within a given priority group)

could match the same source text, a parser produced by the parser generator will

assume that the first applicable production is the correct one.

For example, if we converted the following BNF (plus the productions for

recognizing a <condition>) into a valid parser generator grammar . . .

<statement> ::=

if <condition> then <statement>

| if <condition> then <statement>

else <statement>

then generated a parser from this specification, the parser would interpret

if A < 5 then
    if A > 1 then
        print "A < 5 and A > 1"
else
    print "A >= 5"

to mean

if A < 5 then ( if A > 1 then print "A < 5 and A > 1"
                else print "A >= 5" )

because the if/then production appears before the if/then/else production (in the

parser generator input). If the order of the two production rules were reversed, the

interpretation would be

if A < 5 then ( if A > 1 then print "A < 5 and A > 1")

else print "A >= 5"

Note that these issues do not apply to our calculator's grammar.

Identifying Right-Associative Productions

Normally, evaluations involving operators of like priority are performed on a left-to-right basis. For example, in the expression 20+30-40, the addition should be

carried out before the subtraction. Productions where evaluation begins on the left

are said to be "left associative" (the parser generator's default).

But for some operations, left associativity yields an incorrect result. For example,

evaluating the expression

20 ^ 30 ^ 40

should begin on the right, working towards the left. As you would suspect,

productions requiring this right-to-left evaluation are termed "right associative."

In our calculator's grammar, only the production that handles the exponentiation

is right associative.

productions
   EXPR = EXPR plus EXPR,
          EXPR minus EXPR
       -- EXPR mult EXPR,
          EXPR div EXPR
       -- rightassoc EXPR power EXPR
       -- lpar EXPR rpar,
          number(REAL)
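The difference between the two kinds of associativity can be made concrete with a small Python sketch. This is only an illustration of the grouping; the helper names fold_left and fold_right are hypothetical and have nothing to do with the parser generator itself.

```python
from functools import reduce


def subtract(a, b):
    return a - b


def power(a, b):
    return a ** b


def fold_left(op, operands):
    # Left associative grouping: ((a op b) op c) ...
    return reduce(op, operands)


def fold_right(op, operands):
    # Right associative grouping: a op (b op (c ...))
    result = operands[-1]
    for value in reversed(operands[:-1]):
        result = op(value, result)
    return result


print(fold_left(subtract, [20, 30, 40]))   # (20 - 30) - 40 = -50
print(fold_right(subtract, [20, 30, 40]))  # 20 - (30 - 40) = 30
print(fold_left(power, [2, 3, 2]))         # (2 ^ 3) ^ 2 = 64
print(fold_right(power, [2, 3, 2]))        # 2 ^ (3 ^ 2) = 512
```

Subtraction gives the conventional answer only with the left grouping, while exponentiation gives it only with the right grouping, which is why just the power production is marked rightassoc.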

Adding Separator Information to List Productions

In addition to the + (one or more) and * (zero or more) BNF operators, the parser

generator grammar provides for the specification of a separator token (optional).

FIELD_LIST = FIELD* separator semicolon

Note: In all list productions, the list element must be itself a production (a non-terminal) -- the only token that may appear in a list production is the separator

token.

Our calculator's grammar doesn't have any list productions.

Describing the Output Terms

It's during this step that we specify how the parser should identify which

production was applied. For example, if we provide our calculator with the

equation

5 + 7.3

we want our calculator's parser to respond with the term

add(number(5),number(7.3))

to indicate that it found the addition operator separating two numbers (and what

the actual values of the numbers were). The productions, and each one's

associated output term (those we've chosen to use), for our calculator program are

as follows:

productions
   EXPR = EXPR plus EXPR -> add(EXPR, EXPR),
          EXPR minus EXPR -> subtract(EXPR, EXPR)
       -- EXPR div EXPR -> divide(EXPR, EXPR),
          EXPR mult EXPR -> multiply(EXPR, EXPR)
       -- rightassoc EXPR power EXPR -> power(EXPR, EXPR)
       -- lpar EXPR rpar -> parenthetical(EXPR),
          number(REAL) -> number(REAL)

This is also the final form of our parser generator input (contained in

XCALC.GRM).

Preserving Token Locations

Some applications require references back to the source text after the

scanning/parsing stage. In these situations, the grammar can specify that one or

more token locations are stored in a production's output term. This is done with

the :CURSOR keyword

NUMBER = number (REAL):CURSOR -> number(REAL , CURSOR)

or

RANGE = from int (INTEGER) : CURSOR

to int (INTEGER) : CURSOR

-> range( INTEGER, CURSOR, INTEGER, CURSOR)

Our calculator grammar does not require use of the :CURSOR keyword.

Generating the Parser

If we have made no syntax errors in our grammar and we instruct the parser

generator to generate a parser from it, two files will automatically be produced:

one containing the parsing predicates (having a .PAR extension), and the other

containing some of the domains needed by the parsing predicates (having a .DOM

extension).

In the case of our calculator program, since the input grammar is contained in the

file XCALC.GRM, the generated parsing predicates will be placed in

XCALC.PAR and the domains in XCALC.DOM.

Once you've compiled the parser generator, run PARSER, load XCALC.GRM as

the input grammar, and instruct the program to generate the parser.

The following two files should be created. First, XCALC.PAR:

/***********************************************************

PARSING PREDICATES

***********************************************************/

PREDICATES

s_expr(TOKL,TOKL,EXPR)

s_expr1(TOKL,TOKL,EXPR)

s_expr5 ( TOKL, TOKL, EXPR, EXPR )

s_expr2( TOKL, TOKL, EXPR )

s_expr6( TOKL, TOKL, EXPR, EXPR )

s_expr3( TOKL, TOKL, EXPR )

s_expr7( TOKL,TOKL,EXPR,EXPR)

s_expr4 ( TOKL, TOKL, EXPR)

CLAUSES

s_expr(LL1,LLO,EXPR) :-
    s_expr1(LL1,LLO,EXPR).

s_expr1(LL1,LLO,EXPR_) :-
    s_expr2(LL1,LL2,EXPR),
    s_expr5(LL2,LLO,EXPR,EXPR_).

s_expr2(LL1,LLO,EXPR_) :-
    s_expr3(LL1,LL2,EXPR),
    s_expr6(LL2,LLO,EXPR,EXPR_).

s_expr3(LL1,LLO,EXPR_) :-
    s_expr4(LL1,LL2,EXPR),
    s_expr7(LL2,LLO,EXPR,EXPR_).

s_expr4([t(lpar,_)|LL1],LLO,parenthetical(EXPR)) :- !,
    s_expr(LL1,LL2,EXPR),
    expect(t(rpar,_),LL2,LLO).
s_expr4([t(number(REAL),_)|LL],LL,number(REAL)) :- !.
s_expr4(LL,_,_) :- syntax_error(expr4,LL), fail.

s_expr5([t(plus,_)|LL1],LLO,EXPR,EXPR_) :- !,
    s_expr2(LL1,LL2,EXPR1),
    s_expr5(LL2,LLO,add(EXPR,EXPR1),EXPR_).
s_expr5([t(minus,_)|LL1],LLO,EXPR,EXPR_) :- !,
    s_expr2(LL1,LL2,EXPR1),
    s_expr5(LL2,LLO,subtract(EXPR,EXPR1),EXPR_).
s_expr5(LL,LL,EXPR,EXPR).

s_expr6([t(mult,_)|LL1],LLO,EXPR,EXPR_) :- !,
    s_expr3(LL1,LL2,EXPR1),
    s_expr6(LL2,LLO,multiply(EXPR,EXPR1),EXPR_).
s_expr6([t(div,_)|LL1],LLO,EXPR,EXPR_) :- !,
    s_expr3(LL1,LL2,EXPR1),
    s_expr6(LL2,LLO,divide(EXPR,EXPR1),EXPR_).
s_expr6(LL,LL,EXPR,EXPR).

s_expr7([t(power,_)|LL1],LLO,EXPR,power(EXPR,EXPR1)) :- !,
    s_expr3(LL1,LLO,EXPR1).
s_expr7(LL,LL,EXPR,EXPR).

and XCALC.DOM:

/***********************************************************

DOMAIN DEFINITIONS

***********************************************************/

DOMAINS

EXPR = add(EXPR,EXPR);
       subtract(EXPR,EXPR);
       multiply(EXPR,EXPR);
       divide(EXPR,EXPR);
       power(EXPR,EXPR);
       parenthetical(EXPR);
       number(REAL)

TOK  = plus();
       minus();
       mult();
       div();
       power();
       lpar();
       rpar();
       number(REAL);
       nil

Using the Generated Parser

Now that we've generated the calculator's parser, it's time to consider the overall

structure of our program.

We want to read equations from the user (entered from the keyboard) and

evaluate each one, so we use the following predicate as our primary control

structure.

user_interface :-
    write("\n\n\n\nEnter expression (Esc to quit): "),
    readln(EXPR),               % Fails on esc.
    !,
    evaluate_expression(EXPR),
    user_interface.
user_interface.

Evaluating each expression turns out to be a three-step process:

1. Converting the expression into a list of tokens—the data structure required as

input to the parser.

2. Passing the list of tokens to the parser.

3. Performing the actual calculation, using the term returned from the parser.

We'll use the following as our evaluation predicate.

evaluate_expression(EXPR) :-
    tokenize(EXPR, TOKENS),
    write("\nTokens -> ", TOKENS),
    parse(TOKENS, TERM),
    write("\n\nTerm ---> ", TERM),
    !,
    calculate(TERM, RESULT),
    write("\n\nAnswer -> ", RESULT).
evaluate_expression(_) :-
    sound(30, 300),
    write("\n\n<<Illegal Expression>>").

It's important to note that tokenize/2 is designed to fail if the user entered

unrecognized tokens, and parse/2 will fail if the tokens don't comprise a valid

equation (per our calculator's grammar).

Lexical Analysis

Lexical analysis, or scanning, refers to the phase of identifying the tokens in the

input string. Our calculator source code employs tokenize/2 for this purpose:

tokenize(EXPRESSION, TOKENS) :- scan(0, EXPRESSION, TOKENS).

It calls scan/3 to actually convert the expression (a string) into the list of tokens.

To maintain compatibility with the parsing predicates, the returned list must be of

the domain TOKL, which is defined as:

DOMAINS

CURSOR = INTEGER

CURSORTOK = t ( TOK, CURSOR )

TOKL = CURSORTOK*

You may have noticed the TOK domain as defined in XCALC.DOM. It contains a

list of all of the tokens our scanner will need to recognize. CURSOR is used to

keep track of where each is found in the input expression.

As an example, if the input to our calculator was

20 * 30 ^ 40

the scanner should return the list

[t(number(20),0),t(mult,3),t(number(30),5),t(power,8),t(number(40),10)]
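To make the relationship between tokens and cursor positions concrete, here is a rough Python sketch of a scanner for the calculator's tokens. It is an illustration only and not the Prolog scanner used by the calculator: the names TOKEN_NAMES, NUMBER_RE and the Python scan function are assumptions made for this example, and tokens are represented as plain tuples rather than t(TOK, CURSOR) terms.

```python
import re

# Mapping from raw operator characters to token names (illustrative only).
TOKEN_NAMES = {"+": "plus", "-": "minus", "*": "mult", "/": "div",
               "^": "power", "(": "lpar", ")": "rpar"}
NUMBER_RE = re.compile(r"\d+(\.\d+)?")


def scan(source):
    """Return a list of (token, cursor) pairs, or None on an unknown token."""
    tokens = []
    pos = 0
    while pos < len(source):
        char = source[pos]
        if char.isspace():
            pos += 1                      # spaces only advance the cursor
            continue
        match = NUMBER_RE.match(source, pos)
        if match:
            tokens.append((("number", float(match.group())), pos))
            pos = match.end()
        elif char in TOKEN_NAMES:
            tokens.append((TOKEN_NAMES[char], pos))
            pos += 1
        else:
            return None                   # unrecognized token: scanning fails
    return tokens


print(scan("(1 + 2.5) * 3"))
```

Each cursor is the zero-based offset of the token's first character in the input string, so skipped spaces shift the cursor of every later token.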

The following definition of scan/3 (contained in XCALC.SCA) should suit our

needs nicely.

DOMAINS

NUMBER_OF_EXTRA_CHARACTERS = INTEGER

NUMBER_OF_SPACES = INTEGER

PREDICATES

is_a_space( CHAR)

scan( CURSOR, SOURCE, TOKL)

skip_spaces(SOURCE, SOURCE, NUMBER_OF_SPACES)

string_token(STRING, TOK)

CLAUSES

is_a_space(' ').

is_a_space('\t').

is_a_space('\n').

scan(STARTING_POSITION, SOURCE, [t(TOKEN, LOCATION_OF_TOKEN) | TAIL]) :-
    skip_spaces(SOURCE, NEW_SOURCE, NUMBER_OF_SPACES),
    LOCATION_OF_TOKEN = STARTING_POSITION + NUMBER_OF_SPACES,
    fronttoken(NEW_SOURCE, FRONTTOKEN, REST),
    !,
    % Make the case of each token unimportant.
    upper_lower(FRONTTOKEN, LOWER_CASE_FRONTTOKEN),
    string_token(LOWER_CASE_FRONTTOKEN, TOKEN),
    str_len(FRONTTOKEN, LENGTH_OF_FRONTTOKEN),
    NEW_STARTING_POSITION = LOCATION_OF_TOKEN + LENGTH_OF_FRONTTOKEN,
    scan(NEW_STARTING_POSITION, REST, TAIL).
scan(_, _, []).

skip_spaces(SOURCE, NEW_SOURCE, NUMBER_OF_SPACES) :-
    frontchar(SOURCE, CHAR, SOURCE1),
    is_a_space(CHAR),
    !,
    skip_spaces(SOURCE1, NEW_SOURCE, NUMBER_OF_SPACES_IN_SOURCE1),
    NUMBER_OF_SPACES = NUMBER_OF_SPACES_IN_SOURCE1 + 1.
skip_spaces(SOURCE, SOURCE, 0).

string_token("/", div) :- !.
string_token("(", lpar) :- !.
string_token("-", minus) :- !.
string_token("*", mult) :- !.
string_token(STRING, number(INTEGER)) :- str_int(STRING, INTEGER), !.
string_token(STRING, number(REAL)) :- str_real(STRING, REAL), !.
string_token("+", plus) :- !.
string_token("^", power) :- !.
string_token(")", rpar) :- !.

Parsing

Parsing basically involves calling the highest-level parsing predicate (the one

corresponding to the start production), handing it the tokens returned by the

lexical analysis pass. The predicate naming convention used by the parser

generator is simply the letter s, followed by an underscore, followed by the name

of a non-terminal.

The start production for our calculator grammar is EXPR (actually, EXPR is the

only non-terminal we defined), so the predicate we call to handle our parsing is

named s_expr.

parse(TOKENS, TERM) :-
    s_expr(TOKENS, UNUSED_TOKENS, TERM),
    UNUSED_TOKENS = [].

Notice that s_expr returns a list of unused tokens as its second parameter. This is

because the parsers produced by the parser generator perform their magic via a

technique known as "parsing with difference lists." In this strategy, each parsing

predicate takes something off the front of the input list and returns the remainder

of the list (the unused portion). This remainder is handed as input to the next

parsing predicate, which returns its remainder, etc. When the list is exhausted, a

successful parse is normally assumed to have occurred. Hence, parse/2's test to

ensure that UNUSED_TOKENS is empty--if there are unused tokens when

s_expr returns, the parse failed.
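The idea of each parsing predicate consuming the front of the list and handing back the remainder can be sketched in Python as follows. This is a loose illustration of the technique, not a translation of the generated Prolog: every function name below is hypothetical, tokens are plain strings or ("number", value) tuples without cursors, and a failed parse is represented by None.

```python
def parse_primary(tokens):
    # A number or a parenthesised expression; returns (tree, rest) or None.
    if not tokens:
        return None
    head, rest = tokens[0], tokens[1:]
    if isinstance(head, tuple) and head[0] == "number":
        return head, rest
    if head == "lpar":
        result = parse_sum(rest)
        if result is None:
            return None
        tree, rest = result
        if rest and rest[0] == "rpar":
            return ("parenthetical", tree), rest[1:]
    return None


def parse_power(tokens):
    # Right associative: the right operand is parsed by parse_power itself.
    result = parse_primary(tokens)
    if result is None:
        return None
    left, rest = result
    if rest and rest[0] == "power":
        result = parse_power(rest[1:])
        if result is None:
            return None
        right, rest = result
        return ("power", left, right), rest
    return left, rest


def parse_left_assoc(tokens, operand, operators):
    # Left associative: fold each new operand into the tree built so far.
    result = operand(tokens)
    if result is None:
        return None
    left, rest = result
    while rest and rest[0] in operators:
        name = operators[rest[0]]
        result = operand(rest[1:])
        if result is None:
            return None
        right, rest = result
        left = (name, left, right)
    return left, rest


def parse_product(tokens):
    return parse_left_assoc(tokens, parse_power,
                            {"mult": "multiply", "div": "divide"})


def parse_sum(tokens):
    return parse_left_assoc(tokens, parse_product,
                            {"plus": "add", "minus": "subtract"})


def parse(tokens):
    # The parse succeeds only if no tokens are left unused.
    result = parse_sum(tokens)
    if result is None:
        return None
    tree, unused = result
    return tree if unused == [] else None


print(parse([("number", 20.0), "mult", ("number", 30.0),
             "power", ("number", 40.0)]))
```

As in parse/2, the top-level function treats leftover tokens as a failed parse, so an input such as a number followed by a stray right parenthesis is rejected.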

Using the Parser's Output

As mentioned earlier, the parsers produced by the parser generator return a term

(a tree) that represents the structure of the input expression. For example, if the

input to our calculator's parser was the tokenized form of

20 * 30 ^ 40

then the returned term would be

multiply ( number(20) , power ( number(30),number(40)))

So to obtain the solution to the user's equation, we need a predicate that knows

how to traverse these trees, performing the appropriate calculations along the

way. The following seven clauses (one for each production in the calculator's

grammar) will do the job for us.

calculate(add(EXPR1, EXPR2), RESULT) :-
    calculate(EXPR1, RESULT1),
    calculate(EXPR2, RESULT2),
    RESULT = RESULT1 + RESULT2.
calculate(subtract(EXPR1, EXPR2), RESULT) :-
    calculate(EXPR1, RESULT1),
    calculate(EXPR2, RESULT2),
    RESULT = RESULT1 - RESULT2.
calculate(multiply(EXPR1, EXPR2), RESULT) :-
    calculate(EXPR1, RESULT1),
    calculate(EXPR2, RESULT2),
    RESULT = RESULT1 * RESULT2.
calculate(divide(EXPR1, EXPR2), RESULT) :-
    calculate(EXPR1, RESULT1),
    calculate(EXPR2, RESULT2),
    RESULT = RESULT1 / RESULT2.
calculate(power(EXPR1, EXPR2), RESULT) :-
    calculate(EXPR1, RESULT1),
    calculate(EXPR2, RESULT2),
    % x ^ y is computed as exp(y * ln(x)); this requires x > 0.
    RESULT = exp(ln(RESULT1) * RESULT2).
calculate(parenthetical(EXPR), RESULT) :-
    calculate(EXPR, RESULT).
calculate(number(RESULT), RESULT).
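The same tree walk can be sketched in Python, which may help readers who want to experiment outside Prolog. This is an illustrative sketch only: terms are represented as nested tuples such as ("add", left, right), an assumption made for this example, and exponentiation uses the identity x^y = exp(y * ln(x)), which is only valid for a positive base.

```python
import math


def calculate(term):
    """Evaluate a parse tree built from nested tuples."""
    tag = term[0]
    if tag == "number":
        return term[1]
    if tag == "parenthetical":
        return calculate(term[1])
    left = calculate(term[1])
    right = calculate(term[2])
    if tag == "add":
        return left + right
    if tag == "subtract":
        return left - right
    if tag == "multiply":
        return left * right
    if tag == "divide":
        return left / right
    if tag == "power":
        # x ^ y = exp(y * ln(x)); math.log raises ValueError for x <= 0.
        return math.exp(right * math.log(left))
    raise ValueError("unknown term: %r" % (tag,))


tree = ("multiply", ("number", 2.0),
        ("power", ("number", 3.0), ("number", 2.0)))
print(calculate(tree))   # approximately 18.0
```

Because the power case goes through logarithms, results are floating-point approximations, and a zero or negative base is rejected rather than evaluated.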

Filling in Holes

All of the various pieces of this program come together in the main program file

(XCALC.PRO).

check_determ

CONSTANTS

dialog_window = 1

DOMAINS

CURSOR = INTEGER

CURSORTOK = t(TOK, CURSOR)

MESSAGE = STRING

RESULT = REAL

SOURCE = STRING

TOKL = CURSORTOK*

include "xdemos\\xcalc.dom"

% Parser domains ( from parser generator).

PREDICATES

expect (CURSORTOK, TOKL, TOKL)

syntax_error(MESSAGE, TOKL)

% Contained Predicates
% --------------------
include "parser\\windows.pro"   % window_...
include "xdemos\\xcalc.sca"     % scan/3
include "xdemos\\xcalc.par"     % s_expr/3
include "xdemos\\xcalc.run"     % calculate/2
include "xdemos\\xcalc.ui"      % user_interface/0

CLAUSES
expect(TOK, [TOK | L], L).
syntax_error(_, _).

GOAL

window_Make(dialog_window,

white_on_blue,

white_on_black,

"Simple Calculator",

full),

window_ChangeFrame(bottom,center,

" Legal operators include *, /, + , -, ^, and parentheses. ",

blue_on_white),

user_interface,

removewindow.

The only important aspects of this program that we haven't yet discussed are the

predicates expect/3 and syntax_error/2. A version of these two must be supplied

by any application using a parser-generator-produced parser. The predicate

expect/3 is called when a grammatical entity should be followed by a token (of

the TOK domain). Its three parameters are the expected token (input), the list of

tokens currently being processed (input) and the tail of the above list (output).

The predicate expect/3 should fail if the expected token is not the first token in the

list (of tokens). Otherwise, it should return the (possibly empty) tail of the list.

The predicate syntax_error/2 is called when a production is determined not to

match the particular list of tokens. Its two parameters are the name of the

production that didn't match (input) and the list of tokens being processed (input).

Note that our calculator program uses the absolute minimum definitions for these

predicates. In the next section, we'll look at more elaborate versions of these two,

as used in a better error-reporting scheme. But before we go on, try compiling

XCALC.PRO, entering calculations and observing the output. It's important that

you have a good understanding of what we've done thus far before going on to the

more advanced topics.

Improving the User Interface

Currently, when the calculator program can't make sense out of the user's input,

only the message

"<<Illegal Expression>>"

is provided as feedback. It would be nice if the program could instead provide a

more descriptive error message and even go so far as "point" (visually) at the

problem.

As it turns out, making the changes necessary to accomplish this feat is a simple

and straightforward task. The next few sections detail this procedure.

False Alarms

As the parser works its way down through the Prolog clauses that implement the

production rules, some productions will naturally fail to match the input

expression. When this happens, the parser makes a call to syntax_error/2. But,

since the first production tried is not always the correct one, this may not actually

be an error (a real syntax error occurs only if no matching production can be

found). Therefore, in the event of an unsuccessful parse, we need a strategy for

deciding which of these "false alarms" to identify as the syntax error.

One such strategy is simply to keep track of the error that occurred at the deepest

level in the source text. Each time syntax_error/2 is called, compare the cursor

position of the current token with the cursor position of the last syntax error. If the

newest error occurred further into the source text than the previous error, save it

as the deepest level achieved. Then, should the parse fail, display the currently

saved error information as the syntax error. The declaration

DATABASE

determ error(MESSAGE, CURSOR)

identifies a good storage place for this information. We'll use error/2 in the

upcoming changes to the calculator program.
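The bookkeeping behind this "deepest error" strategy is small enough to sketch in a few lines of Python. The class and method names below are hypothetical and chosen only for this illustration; the single stored pair plays the role of the error/2 fact.

```python
class DeepestError:
    """Remember only the error reported furthest into the source text."""

    def __init__(self):
        self.error = None              # (message, cursor) or None

    def report(self, message, cursor):
        # Keep the new error only if it lies deeper than the stored one.
        if self.error is None or cursor > self.error[1]:
            self.error = (message, cursor)


tracker = DeepestError()
tracker.report("Syntax error in expr4", 3)
tracker.report("Expected rpar", 1)     # shallower, so it is ignored
tracker.report("Unexpected token", 7)  # deeper, so it replaces the first
print(tracker.error)                   # ('Unexpected token', 7)
```

Errors raised while the parser is merely trying an alternative tend to occur early in the text, so keeping the deepest one usually discards these false alarms.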

Changing syntax_error/2

So our first order of business is to replace our minimal version of syntax_error/2

syntax_error(_, _).

with one that really does something.

syntax_error(PROD, [t(_, CURSOR) | _]) :-
    is_a_deeper_error(CURSOR),
    format(MESSAGE, "Syntax error in %.", PROD),
    replace_error(MESSAGE, CURSOR).
syntax_error(PROD, []) :-
    format(MESSAGE, "Syntax error in % (premature end of text).", PROD),
    replace_error(MESSAGE, end_of_text).

The first clause implements our "deepest error" strategy with the assistance of a

couple of utility predicates.

is_a_deeper_error(CURSOR) :-
    error(_, OLD_CURSOR),
    !,
    CURSOR > OLD_CURSOR.
is_a_deeper_error(_).

replace_error(MESSAGE, CURSOR) :-
    retractall(error(_, _)),
    assert(error(MESSAGE, CURSOR)).

The second syntax_error clause handles an unexpected end of source text (The

identifier end_of_text is a special one we use to denote the cursor position of the

end of the input expression). Note that there is no need to check whether this is

the deepest error thus far; you can't get any deeper than the end of text!

Changing evaluate_expression/1

To report the deepest error found (upon an unsuccessful parse), we need to

change the second clause of evaluate_expression/1 from

evaluate_expression(_) :-
    sound(30, 300),
    write("\n\n<<Illegal Expression>>").

to one which will display this additional information.

evaluate_expression(EXPR) :-
    sound(30, 300),
    write("\n\n<<Illegal Expression>>"),
    retract(error(MESSAGE, CURSOR)),    % Get error info.
    nl,
    nl,
    write(MESSAGE),
    % Don't point if error is regarding end-of-text.
    point_to_error_if_appropriate(CURSOR, EXPR).

The actual pointing at the problem will be handled by

point_to_error_if_appropriate(end_of_text, _) :- !.    % Don't if end-of-text.
point_to_error_if_appropriate(CURSOR, EXPR) :-
    str_len(FILLER, CURSOR),
    writef("\n\n%s%c", FILLER, down_arrow),
    nl,
    write(EXPR).

where we've decided (arbitrarily) not to point if it's a problem with the end of text.
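The pointing itself amounts to printing a run of filler spaces as long as the cursor value, followed by a marker, on the line above the expression. A minimal Python sketch of that layout follows; the function name, the use of the letter v as the marker, and the negative cursor standing in for end_of_text are all assumptions made for this illustration.

```python
def point_to_error(expression, cursor):
    """Return the expression with a marker line above the offending column."""
    if cursor < 0:
        # Assumed stand-in for the end_of_text marker: do not point.
        return expression
    filler = " " * cursor              # one space per character before the error
    return filler + "v" + "\n" + expression


print(point_to_error("2 + * 3", 4))
```

With a cursor of 4, the marker lands directly above the second operator, the first token the parser could not accept.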

Additional Clauses for expect/3

Now that we've taken care of syntax errors, there are similar changes we could

make to expect/3 that will also help pinpoint the problem in the source text.

Namely, adding the following two alternative clauses

expect(t(TOKEN, _), [t(_, CURSOR) | _], _) :-
    is_a_deeper_error(CURSOR),
    string_token(STRING, TOKEN),
    format(MESSAGE, "Expected %.", STRING),
    replace_error(MESSAGE, CURSOR),
    fail.
expect(t(TOKEN, _), [], _) :-
    string_token(STRING, TOKEN),
    format(MESSAGE, "Expected % (premature end of text).", STRING),
    replace_error(MESSAGE, end_of_text),
    fail.

after the present one

expect(TOK, [TOK | L], L) :- !.

will flag an error when the expected token is not found where anticipated. Notice

the calling of string_token/2 (defined in XCALC2.SCA) to convert the expected

token to its raw form.

Extra Tokens

Instead of the parse simply failing when our top-level production predicate returns

a non-empty list of tokens

parse(TOKENS, TERM) :-
    s_expr(TOKENS, UNUSED_TOKENS, TERM),
    UNUSED_TOKENS = [].

it would be nice to specifically report the problem. This is easily handled by

rewriting this predicate as

parse(TOKENS, TERM) :-
    s_expr(TOKENS, UNUSED_TOKENS, TERM),
    insure_list_is_empty(UNUSED_TOKENS).

where insure_list_is_empty/1 is written as

insure_list_is_empty([]) :- !.
insure_list_is_empty([t(_, CURSOR) | _]) :-
    is_a_deeper_error(CURSOR),
    replace_error("Unexpected token.", CURSOR),
    fail.

The Upgraded Program

All of these changes are contained in the files XCALC2.PRO and XCALC2.UI.

First, XCALC2.PRO:

check_determ

CONSTANTS
  dialog_window = 1

DOMAINS
  CURSOR = INTEGER
  CURSORTOK = t(TOK, CURSOR)
  MESSAGE = STRING
  RESULT = REAL
  SOURCE = STRING
  TOKL = CURSORTOK*

include "xdemos\\xcalc.dom"     % Parser domains (from parser generator).

DATABASE
  determ error(MESSAGE, CURSOR)

PREDICATES
  expect(CURSORTOK, TOKL, TOKL)
  is_a_deeper_error(CURSOR)
  replace_error(MESSAGE, CURSOR)
  syntax_error(MESSAGE, TOKL)

% Contained Predicates
% --------------------
include "parser\\windows.pro"   % window_...
include "xdemos\\xcalc2.sca"    % scan/3
include "xdemos\\xcalc.par"     % s_expr/3
include "xdemos\\xcalc.run"     % calculate/2
include "xdemos\\xcalc2.ui"     % user_interface/0

CLAUSES
expect(TOK, [TOK | L], L) :- !.
expect(t(TOKEN, _), [t(_, CURSOR) | _], _) :-
    is_a_deeper_error(CURSOR),
    string_token(STRING, TOKEN),
    format(MESSAGE, "Expected %.", STRING),
    replace_error(MESSAGE, CURSOR),
    fail.
expect(t(TOKEN, _), [], _) :-
    string_token(STRING, TOKEN),
    format(MESSAGE, "Expected % (premature end of text).", STRING),
    replace_error(MESSAGE, end_of_text),
    fail.

is_a_deeper_error(CURSOR) :-
    error(_, OLD_CURSOR),
    !,
    CURSOR > OLD_CURSOR.
is_a_deeper_error(_).

replace_error(MESSAGE, CURSOR) :-
    retractall(error(_, _)),
    assert(error(MESSAGE, CURSOR)).

syntax_error(PROD, [t(_, CURSOR) | _]) :-
    is_a_deeper_error(CURSOR),
    format(MESSAGE, "Syntax error in %.", PROD),
    replace_error(MESSAGE, CURSOR).
syntax_error(PROD, []) :-
    format(MESSAGE, "Syntax error in % (premature end of text).", PROD),
    replace_error(MESSAGE, end_of_text).

GOAL

window_Make(dialog_window,

white_on_blue,

white_on_black,

" Simple Calculator (with improved error reporting)",

full),

window_ChangeFrame(bottom,

center,

" Legal operators include *, /, +, -, ^, and parentheses. ",

blue_on_white),

user_interface,

removewindow.

XCALC2.UI defines the user interface:

CONSTANTS
  down_arrow = 25
  end_of_text = -1

PREDICATES
  insure_list_is_empty(TOKL)
  evaluate_expression(STRING)
  parse(TOKL, EXPR)
  point_to_error_if_appropriate(INTEGER, STRING)
  tokenize(STRING, TOKL)
  user_interface

CLAUSES
evaluate_expression(EXPR) :-
    tokenize(EXPR, TOKENS),
    write("\nTokens -> ", TOKENS),
    parse(TOKENS, TERM),
    write("\n\nTerm ---> ", TERM),
    !,
    calculate(TERM, RESULT),
    write("\n\nAnswer -> ", RESULT).
evaluate_expression(EXPR) :-
    sound(30, 300),
    write("\n\n<<Illegal Expression>>"),
    retract(error(MESSAGE, CURSOR)),    % Get error info.
    nl,
    nl,
    write(MESSAGE),
    % Don't point if error is regarding end-of-text.
    point_to_error_if_appropriate(CURSOR, EXPR).

insure_list_is_empty([]) :- !.
insure_list_is_empty([t(_, CURSOR) | _]) :-
    is_a_deeper_error(CURSOR),
    replace_error("Unexpected token.", CURSOR),
    fail.

parse(TOKENS, TERM) :-
    s_expr(TOKENS, UNUSED_TOKENS, TERM),
    insure_list_is_empty(UNUSED_TOKENS).

point_to_error_if_appropriate(end_of_text, _) :- !.    % Don't if end-of-text.
point_to_error_if_appropriate(CURSOR, EXPR) :-
    str_len(FILLER, CURSOR),
    writef("\n\n%s%c", FILLER, down_arrow),
    nl,
    write(EXPR).

tokenize(EXPR, TOKENS) :- scan(0, EXPR, TOKENS).

user_interface :-
    write("\n\n\n\nEnter expression (Esc to quit): "),
    readln(EXPR),               % Fails on esc.
    !,
    evaluate_expression(EXPR),
    user_interface.
user_interface.

The Scanner Revisited

That's it for the parsing side of things, but a minor change to the scanner will also

allow us to identify errors which occur during lexical analysis.

Basically, what we want to do is to report unrecognized tokens. In the case of our

calculator, this involves identifying tokens that won't unify with any of the

string_token/2 clauses. The predicate get_token/3 will work for this purpose.

get_token(FRONTTOKEN, TOKEN, _) :-
    string_token(FRONTTOKEN, TOKEN), !.
get_token(_, _, CURSOR) :-
    is_a_deeper_error(CURSOR),
    replace_error("Illegal token.", CURSOR),
    fail.

We'll need to change the first scan/3 clause to call get_token instead of

string_token, making the new definition:

scan(STARTING_POSITION, SOURCE, [t(TOKEN, CURSOR) | TAIL]) :-
    skip_spaces(SOURCE, NEW_SOURCE, NUMBER_OF_SPACES),
    CURSOR = STARTING_POSITION + NUMBER_OF_SPACES,
    fronttoken(NEW_SOURCE, FRONTTOKEN, REST),
    !,
    % Make the case of each token unimportant.
    upper_lower(FRONTTOKEN, LOWER_CASE_FRONTTOKEN),
    get_token(LOWER_CASE_FRONTTOKEN, TOKEN, CURSOR),
    str_len(FRONTTOKEN, LENGTH_OF_FRONTTOKEN),
    NEW_STARTING_POSITION = CURSOR + LENGTH_OF_FRONTTOKEN,
    scan(NEW_STARTING_POSITION, REST, TAIL).
scan(_, _, []).

The new scanner is contained in XCALC2.SCA:

DOMAIN

NUMBER_OF_EXTRA_CHARACTERS = INTEGER

NUMBER_OF_SPACES = INTEGER

PREDICATES

get_token( STRING, ТОК, CURSOR)

is_a_space( CHAR)

scan( CURSOR, SOURCE, TOKL)

skip_spaces(SOURCE, SOURCE, NUMBER_OF SPACES)

string_token(STRING, ТОК)

CLAUSES

get_token(FRONTTOKEN, TOKEN, _) :-
    string_token(FRONTTOKEN, TOKEN), !.

get_token(_, _, CURSOR) :-
    is_a_deeper_error(CURSOR),
    replace_error("Illegal token.", CURSOR),
    fail.

is_a_space(' ').
is_a_space('\t').
is_a_space('\n').

scan(STARTING_POSITION, SOURCE, [t(TOKEN, CURSOR)|TAIL]) :-
    skip_spaces(SOURCE, NEW_SOURCE, NUMBER_OF_SPACES),
    CURSOR = STARTING_POSITION + NUMBER_OF_SPACES,
    fronttoken(NEW_SOURCE, FRONTTOKEN, REST),
    !,
    % Make the case of each token unimportant.
    upper_lower(FRONTTOKEN, LOWER_CASE_FRONTTOKEN),
    get_token(LOWER_CASE_FRONTTOKEN, TOKEN, CURSOR),
    str_len(FRONTTOKEN, LENGTH_OF_FRONTTOKEN),
    NEW_STARTING_POSITION = CURSOR + LENGTH_OF_FRONTTOKEN,
    scan(NEW_STARTING_POSITION, REST, TAIL).

scan(_, _, []).

skip_spaces(SOURCE, NEW_SOURCE, NUMBER_OF_SPACES) :-
    frontchar(SOURCE, CHAR, SOURCE1),
    is_a_space(CHAR),
    !,
    skip_spaces(SOURCE1, NEW_SOURCE, SPACES_IN_SOURCE1),
    NUMBER_OF_SPACES = SPACES_IN_SOURCE1 + 1.

skip_spaces(SOURCE, SOURCE, 0).

string_token("/", div) :- !.
string_token("(", lpar) :- !.
string_token("-", minus) :- !.
string_token("*", mult) :- !.
string_token(STRING, number(INTEGER)) :- str_int(STRING, INTEGER), !.
string_token(STRING, number(REAL)) :- str_real(STRING, REAL), !.
string_token("+", plus) :- !.
string_token("^", power) :- !.
string_token(")", rpar) :- !.
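To see the scanner's control flow outside Prolog, here is a minimal Python sketch of the same idea. It is an illustration only, not part of XCALC2: the regular expression is a rough stand-in for fronttoken/3, cursors are counted from 0, and the ErrorLog class is a hypothetical stand-in for whatever storage is_a_deeper_error and replace_error use. As in the Prolog version, an unrecognized token makes the whole scan fail (here, return None) after the error has been recorded.

```python
import re

# Fixed tokens, mirroring the string_token/2 clauses of the calculator.
FIXED_TOKENS = {"/": "div", "(": "lpar", "-": "minus", "*": "mult",
                "+": "plus", "^": "power", ")": "rpar"}

# Rough stand-in for fronttoken/3: a number, a word, or one other character.
TOKEN_PATTERN = re.compile(r"\d+(?:\.\d+)?|[A-Za-z_]\w*|\S")
NUMBER_PATTERN = re.compile(r"\d+(?:\.\d+)?")


class ErrorLog:
    """Hypothetical error store: keeps only the deepest error seen so far."""

    def __init__(self):
        self.message = None
        self.cursor = -1

    def is_a_deeper_error(self, cursor):
        return cursor > self.cursor

    def replace_error(self, message, cursor):
        self.message, self.cursor = message, cursor


def string_token(text):
    """Return a token tuple for recognized text, or None."""
    if text in FIXED_TOKENS:
        return (FIXED_TOKENS[text],)
    if NUMBER_PATTERN.fullmatch(text):
        return ("number", float(text))
    return None


def get_token(text, cursor, errors):
    """Like string_token, but record an error when the text is unrecognized."""
    token = string_token(text)
    if token is None and errors.is_a_deeper_error(cursor):
        errors.replace_error("Illegal token.", cursor)
    return token


def scan(source, errors):
    """Return a list of (token, cursor) pairs, or None on an illegal token."""
    tokens = []
    for match in TOKEN_PATTERN.finditer(source):
        cursor = match.start()
        token = get_token(match.group().lower(), cursor, errors)
        if token is None:
            return None
        tokens.append((token, cursor))
    return tokens
```

Calling scan("2 * x", ErrorLog()) returns None and leaves the message "Illegal token." with cursor 4 in the log, which is the position a caller would use to point at the offending text.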

Visual Prolog 4.0, Beta 2.0, 6/17/2019

Page 39

Calculator Wrap-up

Try running XCALC2.PRO and supplying it with your favorite poorly formed

expressions. You might be surprised at how well this "deeper error" strategy

works in this context.

In the next section, we'll take a huge leap forward as we consider the

implementation of an actual compiler for a simple programming language.

Extending the Calculator Project

Now let's expand our calculator grammar into that of an actual programming

language, build an interpreter for it, and even include the ability to compile and

link our language's source-code (producing executable files).

The language, which we'll call Calcula, will be designed to accept programs like the following:

clear
write "Process up to what number? "
read MAX_VALUE
nl write "How many exponents? "
read NUMBER_OF_EXPONENTS
for VALUE = 1 to MAX_VALUE do
    nl
    for EXPONENT = 1 to NUMBER_OF_EXPONENTS - 1 do
        write VALUE, "^", EXPONENT
        write " = ", VALUE^EXPONENT, ", "
    enddo
    write VALUE, "^", NUMBER_OF_EXPONENTS
    write " = ", VALUE^NUMBER_OF_EXPONENTS
enddo

So let's start with the following as our Calcula BNF:

<program> ::= <stmnt>+

% A program has one or more statements.

<cond> ::= <expr> <oper> <expr>

<expr> ::= ( <expr> )               % parenthetical expressions
         | <expr> * <expr>          % multiplication
         | <expr> + <expr>          % addition
         | <expr> ^ <expr>          % exponentiation
         | <expr> - <expr>          % subtraction
         | <expr> / <expr>          % division
         | <number>                 % floating-point/integer constants

<oper> ::= =                        % equal to
         | <>                       % not equal to
         | <                        % less than
         | >                        % greater than
         | <=                       % less than or equal to
         | >=                       % greater than or equal to

<output term> ::= <expr>
                | "<string>"

<output terms> ::= <output term> {, <output term> }
                                    % Output terms are an output term
                                    % possibly followed by a comma and
                                    % another output term.

<stmnt> ::= <var> = <expr>          % Bind a variable to an expression
          | clear                   % Clear screen
          | while <cond>
                do <program> enddo  % do while loop
          | for <var> = <expr> to <expr>
                do <program> enddo  % for loop
          | if <cond>
                then <program> endif    % if/then
          | if <cond>
                then <program>
                else <program> endif    % if/then/else
          | input <vars>            % Read from user
          | memory                  % Display memory info
          | nl                      % Start a new line
          | output <output terms>   % Write to screen
          | time                    % Display current time

<var> ::= <name>

<vars> ::= <var> {, <var> }         % Variables are a variable possibly
                                    % followed by a comma and another
                                    % variable.

Note that <number>, <string> and <name> are not defined; they are already

recognized by the PDC Prolog system.
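The braces in rules such as <vars> mean "zero or more repetitions" of what they enclose. As an informal illustration, the following Python sketch reads <var> {, <var> } from the front of a token list. The token shapes (("name", text) and ("comma",)) and the function name parse_vars are assumptions made for this sketch; they are not produced by the parser generator.

```python
def parse_vars(tokens):
    """Parse <var> {, <var> } from the front of a token list.

    Returns (names, remaining_tokens). Raises ValueError when a variable
    name is expected but missing.
    """
    def parse_var(toks):
        # <var> ::= <name>
        if toks and toks[0][0] == "name":
            return toks[0][1], toks[1:]
        raise ValueError("variable name expected")

    first, rest = parse_var(tokens)
    names = [first]
    # Each pass through the loop consumes one ", <var>" repetition.
    while rest and rest[0] == ("comma",):
        name, rest = parse_var(rest[1:])
        names.append(name)
    return names, rest
```

Given the tokens for "A, B" followed by an nl token, parse_vars returns the two names and hands back the nl token untouched, so the caller can continue with the next statement.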

After applying the previously outlined BNF conversion process, we might end up

with the following parser generator grammar:


userdefined domains
    OP

productions

PROGRAM      = STMNT+

COND         = EXPR OPER EXPR               -> condition(EXPR,OPER,EXPR)

EXPR         = EXPR plus EXPR               -> add(EXPR,EXPR),
               EXPR minus EXPR              -> subtract(EXPR,EXPR)
               --
               EXPR mult EXPR               -> multiply(EXPR,EXPR),
               EXPR div EXPR                -> divide(EXPR,EXPR)
               --
               rightassoc EXPR power EXPR   -> power(EXPR,EXPR)
               --
               lpar EXPR rpar               -> parenthetical(EXPR),
               number(REAL)                 -> number(REAL),
               VAR                          -> var(VAR)

OPER         = op(OP):CURSOR                -> operator(OP,CURSOR)

OUTPUT_TERM  = EXPR                         -> expr(EXPR),
               str(STRING)                  -> str(STRING)

OUTPUT_TERMS = OUTPUT_TERM+ separator comma

STMNT        = VAR OPER EXPR                -> bind(VAR,OPER,EXPR),
               clear                        -> clear,
               while COND
                   do PROGRAM enddo         -> do_while(COND,PROGRAM),
               for VAR OPER EXPR to_ EXPR
                   do PROGRAM enddo         -> for(VAR,OPER,EXPR,EXPR,PROGRAM),
               if_ COND then
                   PROGRAM endif            -> if_then(COND,PROGRAM),
               if_ COND then
                   PROGRAM else
                   PROGRAM endif            -> if_then_else(COND,PROGRAM,PROGRAM),
               input VARS                   -> input(VARS),
               memory                       -> memory,
               nl                           -> nl,
               output OUTPUT_TERMS          -> output(OUTPUT_TERMS),
               time                         -> time

VAR          = name(STRING):CURSOR          -> var(STRING,CURSOR)

VARS         = VAR+ separator comma

Of particular interest in this grammar are:

We'll be defining our own OP domain (used in the OPER production).

Unlike the calculator's grammar (where our start production was EXPR), Calcula's top-level production is PROGRAM. A PROGRAM is a list of STMNTs (one or more, with no separator between them), where a STMNT takes one of the 11 identified forms.

The terms produced when the productions OPER and VAR are found will contain the location of the identified token.

The token if_ is used (instead of if) to prevent confusion with Prolog syntax.
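The effect of the priority groups and the rightassoc keyword in the EXPR production can be illustrated with a short Python sketch. Note that this uses precedence climbing, a different technique from the recursive predicates the parser generator emits; it only shows which terms the grammar is meant to yield. The token shapes and the names PRIORITY, RIGHT_ASSOC, parse_expr and parse_primary are assumptions made for this sketch.

```python
# One number per priority group; a higher number binds tighter.
PRIORITY = {"plus": 1, "minus": 1, "mult": 2, "div": 2, "power": 3}
RIGHT_ASSOC = {"power"}
TERM_NAME = {"plus": "add", "minus": "subtract", "mult": "multiply",
             "div": "divide", "power": "power"}


def parse_expr(tokens, min_priority=1):
    """Return (term, remaining_tokens) for the expression at the front."""
    left, rest = parse_primary(tokens)
    while rest and PRIORITY.get(rest[0][0], 0) >= min_priority:
        op = rest[0][0]
        # A left-associative operator needs a strictly tighter right operand;
        # a right-associative one accepts its own priority again.
        next_min = PRIORITY[op] if op in RIGHT_ASSOC else PRIORITY[op] + 1
        right, rest = parse_expr(rest[1:], next_min)
        left = (TERM_NAME[op], left, right)
    return left, rest


def parse_primary(tokens):
    """Parse the tightest group: parentheses, numbers and variables."""
    if not tokens:
        raise ValueError("expression expected")
    head, rest = tokens[0], tokens[1:]
    if head[0] == "number":
        return ("number", head[1]), rest
    if head[0] == "name":
        return ("var", head[1]), rest
    if head[0] == "lpar":
        inner, rest = parse_expr(rest)
        if not rest or rest[0][0] != "rpar":
            raise ValueError("')' expected")
        return ("parenthetical", inner), rest[1:]
    raise ValueError("expression expected")
```

With these tables, the tokens for 2 - 3 - 4 group to the left as subtract(subtract(2,3),4), while 2 ^ 3 ^ 2 groups to the right as power(2,power(3,2)), which is the distinction rightassoc expresses in the grammar.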

After supplying the above grammar as input to the parser generator, the following two files result: XCALCULA.DOM and XCALCULA.PAR.

/***********************************************************
        XCALCULA.DOM -- DOMAIN DEFINITIONS
***********************************************************/

DOMAINS

PROGRAM      = STMNT*

COND         = condition(EXPR,OPER,EXPR)

EXPR         = add(EXPR,EXPR);
               subtract(EXPR,EXPR);
               multiply(EXPR,EXPR);
               divide(EXPR,EXPR);
               power(EXPR,EXPR);
               parenthetical(EXPR);
               number(REAL);
               var(VAR)

OPER         = operator(OP,CURSOR)

OUTPUT_TERM  = expr(EXPR);
               str(STRING)

OUTPUT_TERMS = OUTPUT_TERM*


STMNT        = bind(VAR,OPER,EXPR);
               clear();
               do_while(COND,PROGRAM);
               for(VAR,OPER,EXPR,EXPR,PROGRAM);
               if_then(COND,PROGRAM);
               if_then_else(COND,PROGRAM,PROGRAM);
               input(VARS);
               memory();
               nl();
               output(OUTPUT_TERMS);
               time()

VAR          = var(STRING,CURSOR)

VARS         = VAR*

TOK          = comma();
               plus();
               minus();
               mult();
               div();
               power();
               lpar();
               rpar();
               number(REAL);
               op(OP);
               str(STRING);
               clear();
               while();
               do();
               enddo();
               for();
               to_();
               if_();
               then();
               endif();
               else();
               input();
               memory();
               nl();
               output();
               time();
               name(STRING);
               nil
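To make the shape of these terms concrete, here is a small Python sketch that evaluates EXPR and COND terms written as nested tuples, with the functor name first. It is an illustration only and not the interpreter developed in this chapter. Since the OP domain is user-defined and not shown here, the sketch assumes that an OP value is simply the operator's text (for example "<="); the names BINARY, COMPARE, eval_expr and eval_cond are likewise hypothetical.

```python
import operator

# One Python function per binary EXPR functor in XCALCULA.DOM.
BINARY = {"add": operator.add, "subtract": operator.sub,
          "multiply": operator.mul, "divide": operator.truediv,
          "power": operator.pow}

# Assumption: OP values are the operator's text, as listed in the BNF.
COMPARE = {"=": operator.eq, "<>": operator.ne, "<": operator.lt,
           ">": operator.gt, "<=": operator.le, ">=": operator.ge}


def eval_expr(term, variables):
    """Evaluate an EXPR term against a dict of variable bindings."""
    functor, *args = term
    if functor == "number":
        return args[0]
    if functor == "var":
        # EXPR holds var(VAR), and VAR is itself var(STRING,CURSOR).
        _, name, _cursor = args[0]
        return variables[name]
    if functor == "parenthetical":
        return eval_expr(args[0], variables)
    left, right = (eval_expr(arg, variables) for arg in args)
    return BINARY[functor](left, right)


def eval_cond(term, variables):
    """Evaluate a condition(EXPR,OPER,EXPR) term to True or False."""
    _, left, (_, op, _cursor), right = term
    return COMPARE[op](eval_expr(left, variables),
                       eval_expr(right, variables))
```

For example, with X bound to 4.0, the term add(number(2.0), multiply(var(var("X",4)), number(3.0))) evaluates to 14.0. The CURSOR values are carried along unused here; they matter only when an error has to be reported at a source position.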

/***********************************************************
        XCALCULA.PAR -- PARSING PREDICATES
***********************************************************/

PREDICATES
    s_program(TOKL,TOKL,PROGRAM)
    s_program1(TOKL,TOKL,PROGRAM)
    s_output_terms(TOKL,TOKL,OUTPUT_TERMS)
    s_output_terms1(TOKL,TOKL,OUTPUT_TERMS)
    s_vars(TOKL,TOKL,VARS)
    s_vars1(TOKL,TOKL,VARS)
    s_cond(TOKL,TOKL,COND)
    s_expr(TOKL,TOKL,EXPR)
    s_expr1(TOKL,TOKL,EXPR)
    s_expr2(TOKL,TOKL,EXPR,EXPR)
    s_expr5(TOKL,TOKL,EXPR)
    s_expr6(TOKL,TOKL,EXPR,EXPR)
    s_expr3(TOKL,TOKL,EXPR)
    s_expr7(TOKL,TOKL,EXPR,EXPR)
    s_expr4(TOKL,TOKL,EXPR)
    s_oper(TOKL,TOKL,OPER)
    s_output_term(TOKL,TOKL,OUTPUT_TERM)
    s_stmnt(TOKL,TOKL,STMNT)
    s_var(TOKL,TOKL,VAR)

CLAUSES