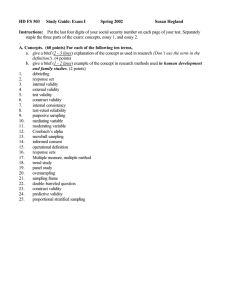

SUMMARY - RESEARCH METHODS IN SOCIAL RELATIONS
One to rule them all
FEBRUARY 7, 2015
UCR
Edited by Bree 19

Contents
Taxonomy
Chapter 1 – Ways of Knowing
Chapter 2 – Evaluating Social Science Theories and Research
Chapter 4 - Fundamentals of measurements
Chapter 5 – Modes of Measurement
Chapter 6 - Single-Item Measures in Questionnaires
Chapter 7 - Scaling and Multiple-Item Measures
Chapter 8 - Fundamentals of sampling
Chapter 9 - Probability Sampling Method
Chapter 11 - Randomized Experiments
Chapter 13 - Nonrandomized Designs

Taxonomy

| Quality of | Types | Description | Threats | How to improve |
|---|---|---|---|---|
| A Measure | Construct Validity: Face Validity, Convergent, Discriminant | Are we measuring what we think we are measuring? | | |
| | Reliability: Test-Retest, Split-Half, Inter-Rater | Can we do it again and get the same result? | | |
| A Sample | Sampling | Does the sample differ from the corresponding population? | Selection Bias, Measurement or Response Bias, Nonresponse Bias | Random Sampling |
| A Study (research design) | Internal Validity | Can we say that A causes B? | Selection, History, Maturation, Selection by Maturation, Mortality, Instrumentation Artifacts | Random Assignment, Control Groups, Other efforts |
| | External Validity: Particularistic, Universalistic | Does our sample represent the society? How broadly applicable is the theory? | | Random Sampling, Natural setting, Replication |

Modes of Measurement

Sampling: Probability / Non-Probability. Assignment: Between = randomly assigned to groups; Within = randomly assigned to order of treatment.

| Type | Subtype | Examples | Sampling | Assignment | Comments |
|---|---|---|---|---|---|
| Observational | Qualitative | Narrative Analysis | Any | None | Inductive research. Greater depth/breadth of knowledge. Potential for strong external validity. |
| | | Oral History | Any | None | |
| | | Participant Observation | Any/None | None | |
| | | Focus Groups | Any | None | |
| | Direct | Interviews (face to face, telephone) | Any | None | For some things the best way to find out is to ask. |
| | | Questionnaires (mailed, internet) | Any | None | |
| | | Experience Sampling | Any | None | |
| | Indirect | Physiological Monitoring | Any | None | For some things it's better not to ask directly. |
| | | Observation | Any | None | |
| | | Collateral Reports | Any | None | |
| Experimental | Randomized Experiments | Randomized Two Group | Any | Between | Best internal validity, can have low external validity. |
| | | Pretest-Posttest Two Group | Any | Between | |
| | | Solomon Four-Group | Any | Between | |
| | | Between-Participants Factorial Design | Any | Between | |
| | | Repeated Measures Design | Any | Within | |
| | Non-Random Experiments (Quasi-Experimental) | Cross-sectional Comparison | | | Internal validity not as strong as in true experiments, but external validity is improved. |
| | | Panel Study | Any | None | |
| | | Static Group Comparison | Any | None | |
| | | Pretest-Posttest nonequivalent | Any | None | |
| | | One Group Pretest-Posttest | Any | None | |
| | | Interrupted time-series Design | Any | None | |
| | | Replicated Interrupted time-series Design | Any | None | |

General
1. A construct is an abstract concept that we would like to measure (love, intelligence, aggression, self-esteem, success, taste perception).
2. The operational definition of a construct is the set of procedures we use to measure or manipulate it.
3. A social science hypothesis, naïve or not, is a falsifiable statement of the association between two or more constructs that have to do with human social behaviour.
4. Construct validity: to what extent are the constructs of theoretical interest successfully operationalised in the research? (Do you measure what you want to measure?)
5. To maximise construct validity, we need to measure each construct in more than one way – using multiple operational definitions and then comparing them to see whether they seem to be measuring the same things. (p.33)
6. Internal validity: to what extent does the research design permit us to reach causal conclusions about the effect of the independent variable on the dependent variable? (Can justified conclusions be drawn about causality?)
7. External validity: to what extent can we generalise from the research sample and setting to the populations and settings specified in the research hypothesis? (Can we generalise our conclusions to our population?)
8. Correlational fallacy: inappropriately inferring causality from a simple association between two variables (correlation does not imply causality!) (p.36)
9. Qualitative research: research with non-numerical data.
10. Quantitative research: research with numerical data.
11. Reliability: something is reliable if repetition of the research leads to the same results.
12. Achieve reliability: large sample, test-retest, multiple methods, split-half.
13. Results can be reliable but not valid – non-reliable results can never be valid!
14. When researchers create rather than measure levels of an independent variable, we call it a manipulated variable (with manipulated independent variables, the researcher must test the manipulation by subsequently measuring its effects to determine its construct validity).
15. Systematic error reflects influences from other constructs besides the desired one.
16. Random error reflects non-systematic, ever-changing influences on the score. (p.81)
17. The reliability of a measure is defined as the extent to which it is free from random error.
18. The validity is the extent to which a measure reflects only the desired construct without contamination from other systematically varying constructs.

Chapter 1 – Ways of Knowing

Place of Values in social science research
- Society's values may have both positive and negative effects on what should and can be investigated by social scientists (e.g. a study on how to increase the academic success of at-risk youth would be encouraged, whereas one on sexual behavior in teens would not).
- Furthermore, the results of some social science research have been publicly misinterpreted and condemned. An example is that of Rind et al., who published an article stating that sexual abuse in childhood does not lead to long-term negative outcomes for the individual.

Contestability in social and physical sciences
- In the general population, the results of social science research seem to be more contestable than those of the physical sciences. This is due to the seemingly ordinary quality of most methods of observation and the fact that most topics researched are identifiable with personal convictions or political interpretations.
- Even though it sometimes looks like casual observation, social science (SSC) research is not.

Casual Observation:
- The hypotheses derived from casual observation are useful to us: they give us ideas about how others will behave, so we can behave in a way that elicits the desired response.
- Note that they are not always correct, as they have a general character.
- Example: "Birds of a feather flock together" → similarity results in increased contact.

Construct – abstract concept that we would like to measure.
Operational definition of a construct – set of procedures we use to measure or manipulate it.
SSC Hypothesis – falsifiable statement of the association between 2 or more constructs that have to do with human behavior. Causal associations (construct causes construct).
Theory – set of interrelated hypotheses that are used to explain a phenomenon and make predictions about associations among constructs relevant to the phenomenon.

Sources of support for naïve theories (derived from casual observation):
1) Logical analysis (being unemployed → depression → divorce)
2) Authority (e.g. a doctor's opinion on how to deal with a difficult child)
3) Consensus (getting others' opinions)
4) Observation
5) Past experience

Toward a science of social behavior
Most important threat – a biased conclusion; also, we can never accept a hypothesis as absolutely true, beyond a doubt.
Empirical research – observation that is systematic in an attempt to avoid bias.
Operationism – the assumption that all constructs can be measured or observed.
Replication – empirical research that reaches the same conclusion as a study conducted previously.

Chapter 2 – Evaluating Social Science Theories and Research

A theory about social behavior has three features:
1. It contains constructs that are of theoretical interest and that it attempts to explicate or account for in some way.
2. It describes associations among these constructs.
3. It incorporates hypothesized links between the theoretical constructs and observable variables that can be used to measure the constructs.

A theory must generate testable hypotheses – a theory is comprised of hypotheses, which in turn are comprised of statements about associations among constructs.
A variable is any attribute that changes values across the people or things being studied.

A theory is made up of hypotheses of 2 types:
1. Hypothesized associations among constructs
2. Hypothesized associations between constructs and observable variables.

Falsifiable – we can conceive of a pattern of findings that would contradict the theory. This is the necessary and minimum requirement for a theory.
1. A productive theory is one that addresses some important phenomenon or social behavior that needs explication.
2. The theory must provide a plausible and empirically defensible explanation for that phenomenon.

Measurement Research – conducted by examining whether two or more ways of measuring the same construct give the same results.

Four different functions of empirical research:
1. Discovery – develop/generate a hypothesis; inductive.
2. Demonstration – showing that the hypothesis holds. Research designed to demonstrate a hypothesis is deductive rather than inductive: the hypothesis generates the research.
3. Refutation – we can never conclusively prove a hypothesis, but it is possible to refute it.
4. Replication – the only way to overcome biases is to replicate the research.
The variable used to measure the causal construct (e.g. crowdedness of classrooms) – independent variable.
The variable used to assess the affected construct (e.g. educational achievement) – dependent variable.
The degree to which both the independent and dependent variables accurately reflect or measure the constructs of interest – construct validity.
Internal validity concerns the extent to which conclusions can be drawn about the causal effects of one variable on another.
External validity – the validity of generalized (causal) inferences drawn from a study, usually an experiment, to other people and settings.

Variables measure not only the construct of interest but also what we might call constructs of disinterest – things we would rather not measure. Variables have three components:
1. The constructs of interest
2. Constructs of disinterest (other things that we do not want to measure)
3. Random errors

Construct validity – best examined by employing multiple operational definitions, or multiple ways of measuring, and then comparing them to see whether they seem to be measuring the same things.

Maximizing Internal Validity:
- Correlational fallacy – inappropriately inferring causality from a simple association between two variables ("correlation does not imply causality").
- "Hidden third variable" problem – hidden because the researcher might have measured only X and Y but not Z.
- Reciprocal causation.
- Selective placement is known as the selection threat to internal validity.
- Selection by maturation threat to internal validity.
- Research studies carried out with random assignment to levels of the independent variable – randomized experiments.
- When control is not possible, a quasi-experimental research design is used instead of a randomized experimental design. Quasi-experiments are those in which research participants are not randomly assigned to levels of all independent variables implicated in the hypothesis.
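The "hidden third variable" problem above can be illustrated with a small simulation. This is only a sketch under stated assumptions: the variables `z`, `x`, `y`, the sample size, and the helper names `pearson` and `partial_corr` are all hypothetical and not taken from the book. Z causes both X and Y, while X and Y have no causal link to each other.

```python
import random


def pearson(xs, ys):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (ss_x * ss_y) ** 0.5


def partial_corr(xs, ys, zs):
    """Correlation between X and Y after statistically controlling for Z."""
    r_xy = pearson(xs, ys)
    r_xz = pearson(xs, zs)
    r_yz = pearson(ys, zs)
    return (r_xy - r_xz * r_yz) / ((1 - r_xz ** 2) * (1 - r_yz ** 2)) ** 0.5


rng = random.Random(0)  # fixed seed so the illustration is repeatable

# Hypothetical data: Z influences both X and Y; X never influences Y.
z = [rng.gauss(0, 1) for _ in range(5000)]
x = [zi + rng.gauss(0, 1) for zi in z]
y = [zi + rng.gauss(0, 1) for zi in z]

r_xy = pearson(x, y)                # clearly positive although X does not cause Y
r_xy_given_z = partial_corr(x, y, z)  # close to zero once Z is taken into account
print(round(r_xy, 2), round(r_xy_given_z, 2))
```

A researcher who measured only X and Y would see a substantial association and might commit the correlational fallacy; the association disappears once Z is measured and controlled.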
Maximizing External Validity:
The only way we can be confident about generalizing from a sample to a population of interest is to draw a random sample.
- Theoretical basis

Deductive and inductive refer to two distinct logical processes.
Deductive reasoning is a logical process in which a conclusion drawn from a set of premises contains no more information than the premises taken collectively. "All dogs are animals; this is a dog; therefore, this is an animal": the truth of the conclusion is dependent only on the method. "All men are apes; this is a man; therefore, this is an ape": the conclusion is logically valid, although the premise is absurd.
Inductive reasoning is a logical process in which a conclusion is proposed that contains more information than the observations or experience on which it is based. "Every crow ever seen was black; all crows are black": the truth of the conclusion is verifiable only in terms of future experience, and certainty is attainable only if all possible instances have been examined. In the example, there is no certainty that a white crow will not be found tomorrow, although past experience would make such an occurrence seem unlikely.

Chapter 4 - Fundamentals of measurements

Constructs: Abstract concepts discussed in theories (social status, gender roles, intelligence). These can be measured in many different ways.
Variables: Concrete, measurable representations of constructs.
Operational definition: specifies how to measure a variable in order to assign a score (e.g. high, medium or low social power). It is the means by which we obtain the numbers/categories of variables: a sequence of steps or a procedure to be followed to obtain measurements. It can be easily repeated by anyone.
Definitional Operationism: the assumption that the operational definition is the construct, ignoring the fact that measurements can be affected by different internal and external factors.
To avoid these problems we advocate MULTIPLE operational definitions. Since each definition has some level of error and imperfection, there is always room for improvement.

"Observed score = true score + systematic error + random error."
True score: function of the construct we are attempting to measure.
Systematic error: influences from other constructs besides the desired one.
Random error: non-systematic, ever-changing influences on the score.

Reliability: extent to which a measure is free from random error.
"A measure can be totally reliable but invalid. Only when the results are reliable AND valid can they be used in research."
To increase reliability, researchers should provide clear instructions and optimal testing situations, and reduce people's tendency to make random errors and simple mistakes.

Types:
- Test-Retest reliability: correlation between scores on the same measure administered on two separate occasions. Provides an estimate of the measure's reliability. The two occasions should be far enough apart that subjects do not remember specific responses. This can be difficult to obtain: people might not want to spend their time on it or might remember the previous test.
- Other options:
  o Internal consistency: random error varies not only over time but also from one question/test item to another within the same measure.
  o Split-half: the set of items in the measure is split in half. The correlation between the two halves provides an estimate of reliability. However, it depends on the way the items were split.
  o Coefficient alpha (preferred): derives from the correlation of each item with every other item and does not rest on an arbitrary choice of how to divide the items. (Usually computed by software.)
- Inter-rater reliability: when there is a possibility of random errors, a strategy could be to use several observers to rate the responses. Reliability can be estimated with coefficient alpha.
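The split-half and coefficient alpha estimates described above can be sketched in a few lines. This is a minimal illustration, not the book's procedure: the function names, the odd/even split, and the tiny data set (4 items answered by 5 respondents) are hypothetical. Alpha is computed with the standard formula alpha = k/(k-1) * (1 - sum of item variances / variance of total scores), where k is the number of items.

```python
from statistics import variance


def pearson(xs, ys):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (ss_x * ss_y) ** 0.5


def split_half(items):
    """Correlate the summed odd-numbered items with the summed even-numbered items.

    The odd/even split is one arbitrary choice among many possible splits.
    """
    odd_half = [sum(vals) for vals in zip(*items[0::2])]
    even_half = [sum(vals) for vals in zip(*items[1::2])]
    return pearson(odd_half, even_half)


def cronbach_alpha(items):
    """Coefficient alpha for a list of items, each a list of respondent scores."""
    k = len(items)
    totals = [sum(vals) for vals in zip(*items)]  # total score per respondent
    item_variance = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))


# Hypothetical responses: each inner list is one item, each position one respondent.
items = [
    [2, 4, 3, 5, 1],
    [3, 5, 2, 4, 1],
    [2, 5, 3, 4, 2],
    [3, 4, 3, 5, 1],
]

half_r = split_half(items)
alpha = cronbach_alpha(items)
print(round(half_r, 3), round(alpha, 3))
```

Splitting the items differently (for example first half versus second half) would generally give a different split-half value, whereas alpha uses all items at once and does not depend on any particular split.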
Validity: extent to which a measure reflects only the desired construct without contamination from other systematically varying constructs. It requires reliability as a prerequisite.
- Face validity: evaluated by experts or judges who read/look at a measuring technique and decide whether or not it measures what its name suggests.
- Convergent validity: overlap of alternative measures which tap the same construct but have different sources of systematic error.
- Discriminant validity: measures that are supposed to tap different constructs should not overlap.
Validity is based on an assessment of how much one method of measuring a construct agrees with other measures of the same or similar constructs and disagrees with measures of dissimilar constructs.

The Multitrait-Multimethod Matrix (MTMM) is a table of correlation coefficients used to evaluate the convergent and discriminant validity of a construct. The table is based on the principle that the more features 2 measurements have in common, the higher their correlation. Measurements share 2 sets of features:
- TRAITS: underlying construct the measurement is supposed to tap.
- METHODS: mode of measurement.
Same trait + same method = reliability coefficient
Same trait + different method = convergent validity coefficient

Chapter 5 – Modes of Measurement

There are many ways of retrieving information (operationally defining constructs). In this chapter, we review modes of measurement commonly used in research on social behavior.

Direct Questioning:

Paper-and-pencil questionnaire:
Pros:
- Low costs, no interviewer bias, people can stay anonymous.
- Less pressure for an immediate response.
Cons:
- The sample quality is usually low because people do not respond very often.
- It is difficult to clarify certain questions (answers may not be reliable).
- You are very dependent on whether or not the person has enough knowledge to answer the questions.

Face-to-face interviews:
Pros:
- You can correct any misunderstandings either in the questions or the answers.
- You can add visual aids more easily.
- Quality of information is very high.
- Often a very high response rate.
Cons:
- Costs.
- Interviewer effects (they influence the interview).
- Possible for a small area but not for a very large geographic area.

Telephone interviews:
Pros:
- Often a high response rate.
- Do not impose strict limits on interview length.
- Same as face-to-face interviews.
- Motivate the respondent.
- Lower costs than face-to-face interviews.
- Speed (fast pace).
- Whoever is conducting the interview can do it easily from, for instance, a laptop.
Cons:
- Not everyone has a telephone.
- Not all numbers are listed in the directory. (This can be avoided by RANDOM DIGIT DIALING.)
- Interviewer effects are possible.
- No visual aids.
- People will hate you.

Direct Questioning via the Internet:
Pros:
- It can be global. You can reach many more people.
- You can stay more anonymous than you are with email.
- Low costs.
- You can reach people via groups that would normally be hard to reach.
Cons:
- Response rate is low.
- It is not always clear how many people have responded when you post it on a website (how many actually saw the questionnaire).
- Similar to mail surveys.
- You do not know if people are telling the truth.
- Hard to get an unbiased selection.

Experience Sampling:
Basically writing down your experiences, for instance in a diary. Usually an app or similar tool asks for input on random occasions during the day, and you then fill in a short questionnaire.
Pros:
- Detailed information about the experiences of the respondents.
- Relatively short lapse of time between the event of interest and participants' responses to it.
- Fewer participants are needed in order to meet the sample size.
Cons:
- You are very reliant on the participant to generate the data according to the sometimes strict rules of sampling required to test the hypothesis of interest.
- Costs are high.
- Low anonymity.

Indirect:

Collateral Reports:
Common when researching children. A third party (e.g. parents, teacher) responds to a questionnaire or interview. Mostly used in combination with information from the actual participant.
Pros:
- Potential to overcome biases inherent in self-reports of constructs of interest to social scientists.
Cons:
- It may not always be reliable. Parents perceive things differently than children.
- It can double the costs of the study.
- Not everyone is willing to be such an informant.

Observation:
Observing social constructs.
Pros:
- Relative objectivity of rating.
- Occasionally, by good fortune, a researcher can find video footage that is relevant but was taped for other purposes; this keeps the costs down.
- Can often be accomplished while the observed person is in their natural surroundings.
Cons:
- Many constructs are not amenable to this type of measurement.
- Emotions are not always visible on the outside.
- Hard to test causal propositions.

Physiological Monitoring:
(And psychophysiology: the study of the interplay of physiological systems and people's thoughts, emotions etc.)
Pros:
- People cannot control their own bodies (the outcome).
- Little concern about biases.
- Permits the introduction of time into hypotheses and research design.
Cons:
- You need to introduce control factors to make sure the research is not too overwhelming and the participant does not get distracted.
- A considerable amount of expertise is required.
- Costs are very high (equipment).

Chapter 6 - Single-Item Measures in Questionnaires

This chapter focuses on developing questions and questionnaires that are reliable and valid, specifically on writing individual items to operationally define constructs.
1. Items – the questions or statements to which participants must provide a response
2. Response
   o Numeric (on a 1 to 10 scale)
   o Binary (True/False)
   o Verbal reports ("describe in your own words…")

Steps to follow when planning and carrying out questionnaire research
1. Choose a mode of direct questioning. Consider costs and availability of information when deciding.
   o Paper-and-pencil questionnaire
   o Face-to-face/telephone interview
   o Questionnaire posted on the internet
   o Experience sampling
2. Choose specific content areas to be covered; related topics can be included.
3. Decide which questions require follow-up answers, which questions are most important, etc.
4. The writing process starts; both open-ended and closed-ended questions can be used.
5. Consider question sequence and transitions, and the balance of open-ended and closed-ended questions.
6. Circulate the draft to experts for suggestions for revision before pre-testing.
7. The questionnaire is pre-tested on the same population as the actual study.
   o Interviewers should be aware of the purpose of the study and the aim of each question so they can note whether it is effective.
   o Afterwards, experimenters must discuss the answers with the participants to see if changes in formulation are necessary.
   o Open-ended responses can be changed to closed-ended responses where possible.
8. Pre-test results are analyzed; if there are major changes, there should be more pre-testing.
9. Final training of personnel (can be done in conjunction with further pre-testing).
10. Actual administration of the questionnaire.
11. The resulting data are coded and analyzed.
All preceding steps are justified by their contribution to the validity of the conclusions.

Factual Questions
Error can arise from:
o Overstating or understating income
o Rounding off age
o Memory failures because events happened too long ago
o Memory telescoping – recalling events as more recent than their actual dates.
o Specificity in questions is important

Attitude and Belief Questions
Problems can arise because:
o It is possible that participants do not have an opinion on a topic because they have not thought about it – this can be detected through the time taken to answer a question
o Attitudes are multi-dimensional – can be measured using related questions discussed in chapter 7.
o Attitudes have a dimension of intensity

Sociometric Questions – a category of questions which measures interactions among members of a group.
- Sometimes questions are answered more honestly if the participants believe their answers can influence social arrangements, for example seating arrangements.
- Can provide information on the individual's position in the group.

Behavior Questions
- Questions should be specific.
- A shorter interval between the behavior and the answer usually increases accuracy.

General Issues
Number of questions:
- Avoid including too many unnecessary questions, but make sure there are enough to permit full understanding of the responses.
- Different questions for different subsets of respondents (answer yes, proceed to question number… etc.)
- Sensitivity related to a question posed needs to be considered.

Wording
- Improperly worded questions can result in biased or meaningless responses; terms must be exact and simple.

Structure
- Use short questions.
- Questions should simplify the respondent's task as much as possible.
- Alternatives should be made clear (do you think such and such policy should be implemented instead of this and that policy).
- Forced-choice format – when participants are forced to choose either one answer or the other.
- Double-barreled questions – inappropriately combining two separate ideas while asking for a single answer (e.g. use mother or father instead of 'parents').

Open-ended versus closed-ended questions
- Open-ended pros: allow more detailed answers, can be used when the full range of responses is unknown.
- Open-ended cons: cost; self-contradictory, incomprehensible, or irrelevant answers.
- Closed-ended pros: easily scored for analysis, can help clarify the type of response required.
- Often, both forms of questions are used.
- Responses can be suggested through interval scales.
- "No opinion" should be offered as an option to avoid unreliable responses.
- Filter questions – intended to screen out respondents who do not have any knowledge or opinion on the issue.
- Floaters – people who answer "don't know" to filtered questions but would give a good answer when there is only an unfiltered question.

Question Sequence
- Start with simple questions, follow with the main questions.
- Keep topically related questions together.
- Clear transitions.
- Funnel principle – general questions should come first, followed by increasingly specific and detailed questions.
- Split-ballot experiment – two or more versions of a questionnaire are used for different subsets of respondents; any differences in response can be attributed to the variation in wording or sequence.

Sensitive Content
- Request income using broad categories.
- Sometimes long questions are more useful in gaining reliable responses as they seem less threatening.
- Terminology in questions relating to race is important.
- Randomized response techniques – the interviewer does not know whether the answer pertains to the sensitive or the innocuous question, so the respondent's privacy is respected to some degree.

Interviewing
- A questionnaire eliminates biases from social desirability, conformity etc.
- A positive atmosphere should be created to elicit more accurate answers (e.g. introduce yourself).
- Each question should be asked in the exact way it is worded on paper.
- Explanation of questions causes a change in the frame of reference.
- Every question must be asked.
- Be careful not to suggest a possible reply.
- "Don't know" may mean a genuine lack of opinion OR the participant avoiding the answer – this can be helped through evaluative feedback ("thanks, we appreciate your frankness").
- Quote participants directly when recording answers to open-ended questions.
- Avoid bias:
  o Interviewers can influence the analysis of responses, e.g. if the interviewer is a socialist
  o Interviewers' perception of the situation.
Bias can never be overcome completely, but should be avoided as much as possible.

Chapter 7 - Scaling and Multiple-Item Measures

There are many possible ways to scale responses to a particular question:
Scaling – Assignment of scores to answers to a question to yield a measure of a construct.
Response Scale – The range of possible answers to a given question.
Multiple-Item Measures – When scaling methods obtain multiple observations or ratings and combine them into a single score.
e.g.: A congressional representative's positions on a number of votes can be combined to give a single score measuring the representative's liberalism/conservatism.

Advantages of Multiple-Item Measures:
- The complexity of a measure of a construct is reduced through the creation of a single score that summarizes several observed variables in a meaningful way.
- Allows researchers to test hypotheses about the nature of a construct: whether the construct constitutes a single dimension or several different dimensions.
  o If a series of variables all measure a single general characteristic of a construct, it is unidimensional.
  o Low associations among variables imply that several dimensions exist, meaning the construct is multidimensional.

Levels of Measurement:
Qualitative scales:
• Nominal (a.k.a. attributes) (e.g., sex, species, phylum, location):
  – Different categories.
  – Can have the option "other".
• Ordinal (e.g., social class, attitude scales):
  – Different categories.
  – Categories are rankable.
  – Does not provide information about the interval or degree of difference between values.
  – The Likert scale is also ordinal.
Quantitative scales:
• Interval (e.g., temperature in Celsius and Fahrenheit):
  – Different categories.
  – Rankable categories.
  – Constant equal-sized intervals (can be expressed numerically).
  – Numbers cannot be multiplied or divided because the scale does not have a true 0.
• Ratio (e.g., lengths, weights, volumes, capacities, rates):
  – Different categories.
  – Rankable categories.
  – Constant equal-sized intervals.
  – Absolute zero (physical significance, the zero actually signifies something) (e.g., temperature in Kelvin, time).

Rating Scales for Quantifying Individual Judgments
- The process of a judgment task involves forming a subjective judgment of the position of the stimulus object along the desired dimension and then translating that judgment into a rating using the scale provided.
- In order to prevent variation between judges and from time to time, a number of rating scales can be employed:
- Graphic Rating Scale
  o Judge indicates the rating by selecting a point on a line running from one extreme of the attribute to the other.
- Itemized Rating Scales/Specific Category/Numerical Scales
  o Require the rater to select one of a small number of categories ordered by scale position.
  o e.g.: Liberalism or conservatism is rated by selecting the category that best describes the political viewpoint. Categories can be clarified by brief verbal descriptions.
- Comparative Rating Scales
  o Require the judge to make comparisons.
  o e.g.: Is the applicant more capable than 10% of the candidates?
- Rank-Order Scale: Judges are required to rank individuals in relation to one another with regard to a characteristic.

Self-Rating:
- All of the above ratings can be used to secure individuals' ratings of themselves or someone else's rating of them.
- The assumption is that individuals are in a better position to report their own beliefs and feelings, as long as they are willing to reveal them.
- The concept of what constitutes a moderate or an extreme position can differ between individuals.
- In order to ensure reliable/valid self-ratings:
  o Individuals should be told what attribute is to be rated and be given the opportunity to recall their behaviors in past situations that are relevant to the judgment.
  o They must be motivated to give accurate rather than socially desirable ratings.
Cautions in the Construction/Use of Rating Scales:
- Halo Bias – The tendency for an overall positive/negative evaluation of the object being rated to influence ratings on specific dimensions.
- Generosity Error – Overestimation of desirable qualities in people who are liked by the rater.
- Contrast Error – The tendency for raters to see others as opposites of themselves on a trait. Arises from the rater’s belief about his or her own trait.
  o e.g.: Someone very orderly rates others as relatively disorderly.

Solutions:
- Multiple raters and computation of the mean rating for each rated object reduce the impact of random errors.
- Multiple raters and increasing the judges’ familiarity with the object or person being rated reduce halo bias.

Development of Multiple-Item Scales:
- Domain – The hypothetical population of all items relevant to the construct we wish to measure.
  o e.g.: Attitude statements
- Domain Sampling – A sample of items is drawn from the domain, and the person’s responses to those items estimate the desired construct as it would be measured by the entire population of items.
- Item construction must follow certain guidelines:
  o Items must be empirically related to the construct that is to be measured.
  o Items must differentiate among people who are at different points along the dimension being measured.
  o Ambiguous items must be avoided.
  o Items must be worded in both a positive and a negative direction in order to avoid the ‘acquiescent response style’, the general tendency to agree with statements regardless of their content.

Three Types of Multiple-Item Scales:

1. Differential Scales
- Include items that represent known positions on the attitude scale. Respondents are assumed to agree with only those items whose position is close to their own and to disagree with items that represent distant positions.
- Require items that have a definite position on the scale: an item will elicit agreement from people whose positions are near the item’s scale value, but disagreement from others whose attitudes are either more or less favorable.
- Such items are nonmonotone: unlike monotone items, which are either clearly favorable or clearly unfavorable to the object, they draw agreement only from respondents whose own position is close to the item’s scale value.
- Advantages:
  o Responses offer a check on the scale’s assumption.
  o Latitude of acceptance (the range of scale values that the subject agrees with) can be calculated.
- Disadvantages:
  o The construction procedure is lengthy and cumbersome.
  o The attitudes of judges influence their assignment of scale values to the items.
  o Lower reliabilities than other scales.

2. Cumulative Scales
- Made up of a series of items with which the respondent indicates agreement/disagreement.
- Designed so that a respondent who holds a particular attitude will agree with all items on one side of that position and disagree with the other items.
- Yields a scale score: the total number of items the person agrees with.
  o The Bogardus Social Distance Scale presents this cumulative pattern. (p. 169)
- Advantages:
  o A single number (the scale score) carries complete information about the exact pattern of responses to every item.
  o Provides a test for unidimensionality of the attitude: items that reflect more than one dimension will not form a cumulative response pattern.
- Disadvantages:
  o A simple random error in responses can distort the perfect cumulative response pattern.
  o Limited to unidimensional domains. Finding such domains is difficult.

3. Summated Scales
- Consist of a set of items to which the participant responds with agreement or disagreement.
- Use only monotone items: items are definitely favorable or unfavorable, with no reflection of a middle position.
- Respondents indicate a degree of agreement/disagreement with each item.
- The scale score is derived by summing or averaging the numerically coded agree and disagree responses to each item.
- Interpretation: The probability of agreeing with favorable items and disagreeing with unfavorable ones increases directly with the degree of favorability of the respondent’s attitude.

(I would read these last pages of the chapter on the Summated Scale because the examples and diagrams help to understand the text: p. 172 – 176)

Chapter 8 - Fundamentals of sampling

External validity
1. Its importance varies across types of research: in most survey research it is quite important (in psychology => internal validity is more important).
2. The nature of the desired generalization can take different forms for different types of research.
   - Some research aims specifically at drawing conclusions about a given target population => particularistic research goals.
   - Other research aims at testing theoretically hypothesized associations, with no specific population or setting as the focus of interest => universalistic research goals => no interest in the ability to extend the research findings from the sample to some population. Instead, the applicability of the theory itself outside the research context is the central question, and sampling is of little/no concern.

SO: research can serve different purposes. The relative priority of the various research validities depends on what the researcher is trying to accomplish!!

Some basic definitions and concepts
Population: the aggregate of all of the cases that conform to some designated set of specifications.
Stratum: defined by one or more specifications that divide a population into mutually exclusive segments.
Population element: a single member of a population.
Census: a count of all the elements in a population and/or a determination of the distributions of their characteristics, based on information obtained for each of the elements.
Sample: when we select some of the elements with the intention of finding out something about the population from which they are taken, we refer to that group of elements as a sample.
Sampling plans: carry the insurance that our estimates do not differ much from the corresponding true population values.
Margin of error: indicates how far the sample percentages are likely to be from the true population percentages.
Confidence level: the probability that a sample drawn in the same way would produce correct results, i.e. estimates within the margin of error.
Representative sampling plan: a sampling plan that carries this confidence-level insurance. It ensures that the odds are great enough that the selected sample is sufficiently representative of the population to justify running the risk of taking it as representative.
Sampling Error: The difference in the distribution of characteristics between a sample and the population as a whole.

SAMPLING
The basic distinction in modern sampling theory is between non-probability and probability sampling:

Non-probability sampling
Accidental samples: We simply take the cases that are at hand, continuing until the sample reaches a certain size. (Ex: take the first 100 people that we meet on the street who are willing to participate.) Disadvantage: Very low external validity.
Quota samples: add insurance to guarantee the inclusion of diverse elements of the population and to make sure that they are taken account of in the proportions in which they occur in the population.
- Almost accidental sampling, but forcing the inclusion of e.g. minorities (while trying to keep proportions).
Purposive samples: the basic assumption is that with good judgment and an appropriate strategy, we can handpick the cases to be included and thus develop samples that are satisfactory in relation to our needs.
- Common strategy: Pick cases that are judged to be typical of the population in which we are interested, assuming that errors of judgment in the selection will tend to counterbalance one another.
- Snowball Samples: Used when the research question concerns a special population whose members are difficult to locate (Ex: research into gang-affiliated people). The snowballing results from members of an initial sample from the target population enlisting other members of the population to participate in the study. If they bring more people in, the sample grows.

Probability sampling
Probability sampling provides the first kind of insurance against misleading results, and it can provide a guarantee that enough cases are selected from each relevant population stratum to provide an estimate for that stratum of the population.

Types of probability sampling:
Simple Random Samples: Selected by a process that not only gives each element in the population an equal chance of being included in the sample, but also makes the selection of every possible combination of the desired number of cases equally likely.
- Requires either a list or some other systematic enumeration of the population elements (sampling frame).
- Random number generator.
- Careful: don’t use systematic sampling (i.e. every tenth element).
Stratified Random Sampling: the population is first divided into two or more strata, then a simple random sample is taken from each stratum, and the subsamples are then joined to form the total sample.
Cluster Sampling: You divide your population into groups (e.g. states of America) and randomly pick some of the groups to (randomly) sample from.
- Multistage area sampling: You cluster sample from your cluster sample to create a smaller research area. (E.g. you take a random city from your random state.) This can have as many levels as you want. (Countries, states, cities, streets, houses, etc.)
Multilevel Samples: Combine any of the above methods.

Chapter 9 - Probability Sampling Method

Basic Probability Sampling Methods

Simple Random Sampling
- Requires a list or other systematic enumeration of the population elements => sampling frame. E.g.
currently enrolled students in a university. Each population element has an equal and independent probability of being sampled, and every possible combination of elements of a particular number has an equal probability of being drawn.
- Making the sample: as an example, take a list composed of 1,672 elements and a sample size of 160. Make a list of random numbers referring to the elements in the list; a high-quality computer random number generator could do that as well (see later). => Each population element has an equal and independent chance of being included in the sample.

Pitfalls of Simple Random Sampling:
1) The procedure of systematic sampling (a way of choosing elements that is not independent) can create important biases. E.g. noticing that the desired sample size of 160 is approximately 1 in 10 population elements, you might pick a random number between 1 and 10 (say, 6) and sample the sixth, sixteenth, twenty-sixth element and so on, that is, every tenth element after the random start. Even though every element has an equal chance of being chosen because of the random choice of starting point, this is not a simple random sample because the selection decisions are not independent.
2) The treatment of population elements that are ineligible for sampling. Lists can include both eligible and ineligible elements. It might seem appropriate simply to select the next element on the list when the sampling procedure comes up with an ineligible name. This method would introduce bias and violate the nature of a probability sample: names that follow ineligible ones would have double the usual probability of selection. The correct procedure is to draw a larger sample in the first place, large enough to compensate for the loss of ineligible elements. E.g. if a final sample of 160 is desired, and ineligible elements are estimated at 20% of the list, then the initial sample size would be 160 / 0.80 (that is, 160 x 1.25), or 200.
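A minimal Python sketch of these two points: drawing a simple random sample from a numbered frame, and inflating the draw to allow for ineligible elements. It assumes the 20% ineligible share applies to whatever is drawn; the frame of 1,672 numbered elements and the function names `initial_sample_size` and `draw_simple_random_sample` are illustrative, not taken from the book.

```python
import math
import random


def initial_sample_size(desired: int, ineligible_rate: float) -> int:
    """Number of elements to draw so that about `desired` eligible ones remain."""
    # round() guards against floating-point noise before taking the ceiling.
    return math.ceil(round(desired / (1.0 - ineligible_rate), 9))


def draw_simple_random_sample(frame, n, seed=None):
    """Draw n elements without replacement; every subset of size n is equally likely."""
    rng = random.Random(seed)
    return rng.sample(frame, n)


frame = list(range(1, 1673))                 # hypothetical frame: elements numbered 1..1672
n_initial = initial_sample_size(160, 0.20)   # 160 / 0.80
sample = draw_simple_random_sample(frame, n_initial, seed=42)
print(n_initial, len(sample))
```

Unlike taking every tenth element after a random start, `random.sample` makes each selection independent of an element's position in the list.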
After the expected 20% loss, the remaining sample would approximate the desired size and retain the property of being a statistically correct simple random sample.

Obtaining and Using Random Numbers
The practical question is how best to obtain a list of random numbers. There are two categories of strategies: published tables and random number generators (software or hardware based).

Stratified Random Sampling
The population is first divided into two or more strata. The strata can be based on a single criterion (e.g. gender) or a combination of two or more criteria (e.g. age and sex). From each stratum a simple random sample is taken, and the subsamples are then joined to form the total sample.
Table 9.2 compares simple random samples and stratified samples (one stratified by sex, the other by age). Observations and conclusions:
- There is a marked improvement over SRS (simple random sampling) when the sampling is based on a stratification of the population by sex. The result is a marked increase in the number of samples that give means very close to the population mean and a marked reduction in the number of sample means that deviate widely from the population mean.
- When stratified by age, however, there is no improvement in the efficiency of sampling.
Stratification contributes to the efficiency of sampling if it succeeds in establishing classes that are internally comparatively homogeneous with respect to the characteristics being studied (if the differences between classes are large in comparison with the variation within classes).

Sampling the various strata in different proportions
It is not necessary for the sample strata to reflect the composition of the population. Thus, in sampling from a population in which the number of males equals the number of females, it is permissible (and might be desirable) to sample for instance 5 females to every male.
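A rough Python sketch of disproportionate stratified sampling, and of why the stratum means then have to be weighted by population share. The population scores, stratum sizes, and the names `stratified_sample` and `weighted_mean` are made up for illustration; only the 5-to-1 sampling ratio and the equal population shares come from the example in the text.

```python
import random
from statistics import mean


def stratified_sample(strata, sizes, seed=None):
    """Draw a simple random sample of the requested size from each stratum."""
    rng = random.Random(seed)
    return {name: rng.sample(values, sizes[name]) for name, values in strata.items()}


def weighted_mean(samples, population_shares):
    """Combine stratum sample means, weighting each by its share of the population."""
    return sum(population_shares[name] * mean(vals) for name, vals in samples.items())


# Hypothetical population: equal numbers of females and males, invented scores.
rng = random.Random(0)
strata = {
    "female": [rng.gauss(60, 5) for _ in range(1000)],
    "male": [rng.gauss(40, 15) for _ in range(1000)],
}
shares = {"female": 0.5, "male": 0.5}

# Disproportionate plan: 5 females sampled for every male.
samples = stratified_sample(strata, {"female": 250, "male": 50}, seed=1)

unweighted = mean(samples["female"] + samples["male"])  # pulled toward the female mean
weighted = weighted_mean(samples, shares)               # estimate of the population mean
population_mean = mean(strata["female"] + strata["male"])
print(round(unweighted, 1), round(weighted, 1), round(population_mean, 1))
```

The unweighted mean over-represents the oversampled stratum; weighting each stratum mean by its population share removes that distortion.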
When strata are sampled in different proportions, however, it is necessary to make an adjustment to find the mean score of the sample that will be the best estimate of the mean score for the total population => “weighting”.

Reasons for sampling strata in different proportions:
- It is sometimes necessary to increase the proportion sampled from classes having small numbers of cases in order to guarantee that these classes are sampled at all.
- We might want to subdivide the cases within each stratum for further analysis.
- Mathematical reason. Consider two strata, one of which is more homogeneous with respect to the characteristics being studied than the other. For a given degree of precision, it will take a smaller number of cases to determine the state of affairs in the first stratum than in the second. E.g. if, with respect to certain types of opinion questions, men differ among themselves much more than women, we would accordingly plan our sample to include a larger proportion of men. If women are expected to be more alike than men in these matters, they do not have to be sampled as thoroughly as the men for a given degree of precision.
In general terms, we can expect the greatest precision if the various strata are sampled proportionately to their relative variabilities with respect to the characteristics under study rather than proportionately to their relative sizes in the population.

In summary, the reason for using a stratified rather than a simple random sampling plan is essentially a practical one: more precise estimates of population values can be obtained with the same sample size under the right conditions. The right conditions involve relative homogeneity of the key attributes within each stratum and easy identification of the stratifying variables.

Sampling Error
The difference in the distribution of characteristics between a sample and the population as a whole. Because we cannot measure the entire population, we can only estimate the extent of sampling error for a given sample.
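One common way to put a number on that estimate is the 95% margin of error for a sample proportion, 1.96 x sqrt(P(1 - P) / N). The sketch below is only an illustration under the usual idealized assumption of a truly random sample; the function name `margin_of_error` and the proportions and sample sizes are invented.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Margin of error for a sample proportion p based on n cases (z = 1.96 for 95%)."""
    return z * math.sqrt(p * (1.0 - p) / n)


# Quadrupling the sample size halves the margin of error.
for n in (100, 400, 1600):
    print(n, round(margin_of_error(0.5, n), 4))
```

Because N sits under the square root, precision improves more and more slowly as the sample grows.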
Calculating the degree of confidence: in SSC, the convention is to accept estimates for which there is 95% confidence that the estimate is correct. We make statements such as: “With 95% confidence we can say that there is X % sampling error given the size of our sample”.
Sampling error decreases when the size of a random sample increases. This property of random samples is easy to see in the formulas used to calculate the margin of error. When estimating with 95% confidence the margin of error associated with an estimate of a proportion, we can use the formula:

$1.96 \times \sqrt{P(1 - P)/N}$

where N = sample size and P = the proportion of the sample that displayed the behavior of interest to us. Because N is in the denominator, increasing N always reduces the estimate of sampling error.
NB: Sampling error and the oft-cited margin of error associated with public opinion polls are estimates based on a set of assumptions that are rarely met. Such estimates are idealized values that assume a truly random sample from the population and no extraneous influences associated with other aspects of the polling.

Chapter 11 - Randomized Experiments

Randomized experiments are highly specialized tools. They are ideally suited for the task of causal analysis. The main strength of randomized experiments is their internal validity, which is accomplished through the researcher’s assumption of control over the independent variables in the design. To use a randomized experiment, the researcher must be in a position to decide which participants are assigned to which level of the putative cause. The control needed for a randomized experiment is most easily achieved in a laboratory setting.
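In practice, deciding which participants are assigned to which level is left to chance. A minimal Python sketch of such random assignment, assuming two conditions of equal size; the participant IDs, condition labels, and the function name `randomly_assign` are hypothetical.

```python
import random


def randomly_assign(participants, conditions, seed=None):
    """Shuffle the participants, then deal them out to the conditions in turn."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for index, person in enumerate(shuffled):
        groups[conditions[index % len(conditions)]].append(person)
    return groups


participants = [f"P{i:02d}" for i in range(1, 21)]  # hypothetical participant IDs
groups = randomly_assign(participants, ["treatment", "control"], seed=7)
print({name: len(members) for name, members in groups.items()})
```

Every participant has the same chance of ending up in either group, so preexisting differences are balanced on average, though not necessarily in any single study.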
Controlling and Manipulating Variables
Independent variable: the variable that has a causal influence on our outcome variable.
Dependent variable: the outcome variable; its values depend on the independent variable.
Individual difference variables: all variables that people bring with them to a study and that are virtually impossible to manipulate (they are a type of independent variable). Ex: religion, income, education, etc.
Experimental variables: properties an experimenter can manipulate or expose people to.
Randomized experiment: individuals are randomly assigned to the various levels of the independent variable.
Random Assignment (randomization): a procedure we use after we have a sample of participants and before we expose them to a treatment. It is a way of assigning participants to the levels of the independent variable so that the groups do not differ as the study begins. All participants have an equal chance of being assigned to the various experimental conditions. (Maximizes the internal validity of research.) Works only on average.
Random Sampling: the procedure we use to select, in the first place, the participants we will study. Used to make sure the participant group we study is representative of a larger population. Also a ‘fair’ procedure in which all members of the population have an equal chance of being included in the study.
Repeated measures design: rather than some participants being in one condition and some in the other, all participants are in both conditions.
Counterbalancing: the practice of varying the order of experimental conditions across participants in a repeated measures design. It is important because it helps assure internal validity and helps control for possible contamination or carryover effects between experimental conditions.

Threats to internal validity:
Selection: refers to any preexisting differences between individuals in the different experimental conditions that can influence the dependent variable.
Selection is a threat to validity any time participants are not randomly assigned to a condition.
Maturation: involves any naturally occurring process within persons that could cause a change in their behavior. Ex: fatigue, boredom, growth, intellectual development.
History: refers to any event that coincides with the independent variable and could affect the dependent variable. Could be any historical event that occurs in the political, economic or cultural lives of the people we are studying.
Instrumentation: any change that occurs over time in measurement procedures or devices. Ex: the experimenter found a better way to collect data.
Mortality: refers to any attrition of participants from a study. Ex: if some participants do not return for a posttest, or if participants in a control group are more difficult to recruit than participants in a treatment group, these differential recruitment and attrition rates could create differences that are confused with effects of the independent variable. Mortality is a problem in longitudinal research. The greater the mortality, the less representative the final participant sample.
Differential mortality: when mortality rates are different for the various experimental groups. Also creates a threat to internal validity.
Selection by Maturation: occurs when there are differences between individuals in the treatment groups that produce changes in the groups at different times.
For examples of these threats to internal validity: p. 249-252

Construct Validity of Independent Variables in a Randomized Experiment
Operational Definition: the procedure used by the researcher to manipulate or measure the variables of the study.
Construct validity: making sure that our variables capture the construct we wish to measure.
Manipulated variable: when researchers create rather than measure levels of an independent variable.
Manipulation Checks: to demonstrate the validity of their manipulated variables, researchers generally obtain a measure of the independent variable construct after they have manipulated it.

Alternative Experimental Designs: see page 254-264 for graphs and examples.

Strengths & Weaknesses of Randomized Experiments:
By randomly assigning people to experimental conditions, experimenters can be confident that the subsequent differences on the dependent variable are caused, on average, by the treatments and are not preexisting differences among groups of people. Randomized experiments can rule out many alternative explanations.
Experimental Artifacts: unintended effects on the dependent variable that are caused by some feature of the experimental setting other than the independent variable. Ex: experimenters can unwittingly influence their participants to behave in ways that confirm the hypothesis, particularly if the participants want to please the experimenter.

External Validity
Experimental designs and procedures maximize the internal validity of research: they enable the researcher to rule out most alternative explanations or threats to internal validity. Experimenters might maximize internal validity at the expense of the external validity, or generalizability, of the results.
Laboratory experiments are criticized because they are poor representations of natural processes. Being artificial is not necessarily a disadvantage, however: some lab analogues are more effective than their realistic counterparts.
Another criticism of experiments concerns the representativeness of the research participants. A drawback of randomized experiments is that they rarely yield descriptive data about frequencies or the likelihood of certain behaviors that we can generalize to the rest of the population.
An important difference between how probability surveys and experiments are usually conducted is that probability surveys enlist a random sample of respondents who are representative of some larger population.
A survey provides descriptive data about the population. An experiment, on the other hand, usually does not make use of a representative or random sample because the purpose of the experiment is not to provide descriptive data about percentages of people in the population. The purpose of an experiment is to provide information about causes and effects.

Randomized Two-Group Design:
Participants are randomly assigned to the experimental treatment group or to a comparison group.
- No selection threat to internal validity (randomly assigned)
- No maturation threat (groups mature at the same rate)
- No instrumentation threat if groups were tested under similar conditions

Pretest-Posttest Two-Group Design:

Solomon Four-Group Design:
Combines the Randomized Two-Group Design with the Pretest-Posttest Two-Group Design. This way the experimenter can see whether the pretest has an influence on the outcome (test the test effect).

Between-Participants Factorial Design:
In a factorial design, two or more independent variables are always presented in combination. The entire design contains every possible combination of the independent variables. We can ask whether the effect of one of the independent variables is qualified by the other independent variable. If it is, the two independent variables are said to “interact” in producing the outcome (dependent variable).

Repeated Measures Design:
The same group of people is exposed to multiple (to be tested) treatments, instead of testing all the treatments on separate groups. Repeated measures designs are randomized experiments as long as we randomly assign participants to be exposed to the various conditions in different orders. Needs fewer participants.

Chapter 13 - Nonrandomized Designs

Science does not begin and end with the randomized experiment. Science is a process of discovery, in which researchers use the best tools available to answer their questions.
- In nonrandomized designs the research participants are not randomly assigned to levels of the independent variable.
- Instead, the comparisons between levels or between treatment and non-treatment conditions must always be made with the presumption that the groups are non-equivalent.
- As a result, the internal validity of these designs is threatened by the full range of threats discussed in chapter 11.
- These designs are sometimes preferred, however, because the relative sacrifice in internal validity can be well worth the cost, depending on the aspirations of the researcher and the context in which the research is conducted.

Examples of this type of research:
1. Sociologists gather information from a representative sample of male members of the U.S. labor force to study their training and occupational attainments.
2. Medical researchers survey the nation’s population to determine the incidence of disease-related factors.
3. Political sociologists survey a sample of students in large universities to determine whether they support or oppose a military draft in the United States.
- In each of these cases the researchers are not interested in establishing cause-and-effect conclusions.
- Rather, of central concern is measuring constructs well and gathering information from a representative sample of individuals.

Cross-sectional comparison
A class of research methods that involve observing some subset of a population all at the same time, so that groups of different ages can be compared with respect to variables such as IQ and memory.
- Panel Design: the same people are re-interviewed regularly.

Quasi-experimental designs
One or more independent variables are manipulated but participants are not randomly assigned to levels of the manipulated variables.
- Interrupted time-series design: “Time series” refers to the strategy of measuring a set of variables on a series of occasions (e.g. monthly) during a specified period of time. “Interrupted” refers to the strategy of introducing the stimulus or event during the period of assessment in order to evaluate its effect on the variables being measured.
- Static-group comparison design: This design has as many groups as there are levels of the independent variable. As such, the independent variable varies between participants.
- Alternative explanations: Other possible causes of the outcome of a study, arising from influences other than the independent variable.
- Pretest-Posttest Nonequivalent Control Group Design: An extension of the static-group comparison design that includes measures of the dependent variable at multiple points in time.
- One-Group Pretest-Posttest Design: Also known as the simple panel design in survey research; it is based on within-individual treatment comparisons.
- Regression toward the mean: or statistical regression, as it is also called, refers to the phenomenon that extreme scores are not likely to be as extreme on a second testing.
- Undermatching: Matching on variables known to be associated with the dependent variable always errs in the direction of undermatching and, therefore, fails, because we can never know when we have matched on enough variables to be sure the two groups represent the same population.

Chapter 16 - Qualitative research

In qualitative research the researcher does not impose structure or questions on the participant, but rather learns from listening to the participant discuss issues in his or her own voice.

Narrative Analysis
A narrative is an oral or written recitation of events in the past, in which people recount their own lives or that of someone else. Narrative analysis can even deal with fiction: for example, you could research fairy tales by asking people for their version.
Focus groups
A group of 6 – 10 individuals is brought together to discuss a topic chosen by the researcher. The researcher, also called the moderator, guides the discussion. The moderator also processes the information found through the discussion.

Oral history
Oral history is a method for recording extended life stories of individuals.

Participant Observation
Observing while participating, like those old-school anthropologists who went to live with some tribe in order to observe it. When they have reached a conclusion, that conclusion should hold true for every observation without exceptions.