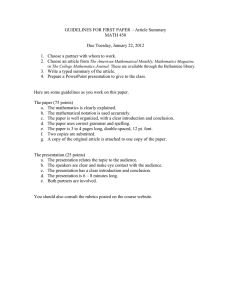

Men of Mathematics: The Lives and Achievements of the Great Mathematicians from Zeno to Poincaré
