2021 IEEE 16th International Conference on Industrial and Information Systems (ICIIS) | 978-1-6654-2637-4/21/$31.00 ©2021 IEEE | DOI: 10.1109/ICIIS53135.2021.9660698

Machine Learning Based Emotion Level Assessment

Lumini Wickremesinghe, Dakheela Madanayake, Anuradha Karunasena, and Pradeepa Samarasinghe

Faculty of Computing, Sri Lanka Institute of Information Technology, Sri Lanka

{luminiwickramasinghe@gmail.com, dpmadanayake@gmail.com, anuradha.k@sliit.lk, pradeepa.s@sliit.lk}

Abstract—With recent advancements of technology, identification of emotions of humans via facial recognition is

done with the application of numerous methods, including machine learning and deep learning. In this paper, machine learning techniques are applied to identify different levels of emotions of individuals in unannotated video clips using the Facial Action Coding System. To achieve this, two methods were first experimented with to obtain a labeled image data set for training classification models. In the first method, images were clustered using Action Units (AUs) identified from the literature, the emotion levels of the images were determined from the resulting clusters, and the images were labeled according to the cluster they belonged to. In the second method, the image set was analyzed explicitly to identify the AUs contributing to emotions rather than relying on those identified in the literature, and the image set was then clustered using those AUs to label the images as in the first method. The two labeled data sets were used to train classification models with the Random Forest, Support Vector Machine and K-Nearest Neighbour algorithms separately. The classification models showed better accuracy with the data set produced using the second method. An overall F1 score, accuracy, precision and recall of 87% was obtained for the best classification model, which was developed using the Random Forest algorithm to identify levels of emotions. Identifying the AU combinations related to emotions and developing a classification model for identifying levels of emotions are the major contributions of this paper. The results of this research would be especially useful for identifying the levels of emotions of individuals who have difficulties with verbal communication.

Index Terms—Facial Action Coding System, Action Units,

Emotion levels, Clustering, Classification

I. INTRODUCTION

The response of a person to an internal stimulus or an external phenomenon results in an expression of emotion. Identification and analysis of emotions would therefore help in understanding the reasons behind responses to the stimulus or the phenomenon. Human emotions are often expressed through a number of verbal and non-verbal cues. Non-verbal cues such as gestures and facial expressions are especially helpful for understanding the emotions of adults and small children who have difficulties in expressing their emotions through speech.

Facial expressions, speech, physiological signals, gestures and other multi-modal information are used with varying computational techniques to analyze personal emotions [1], [2]. Recently, much attention has been drawn to identifying emotions through facial expressions. One of the most popular tools employed in the context of human emotion recognition is the Facial Action Coding System (FACS) [3]. FACS is a human-observer-based system designed to describe subtle changes in facial features. Action Units (AUs) are the fundamental actions of individual muscles or groups of muscles, anatomically related to the contraction or relaxation of specific facial muscles. Facial AUs provide an important cue for facial expression recognition. FACS consists of 44 AUs, including those for head and eye positions. Recently, much emphasis has been placed on developing Facial Emotion Expression (FEE) analysis systems based on images [4]–[6] as well as videos [7]–[9], using different techniques such as machine learning and deep learning [10], [11]. Though much recent research has been on Deep Neural Network (DNN) based systems, FACS-based analysis is still preferred for developing FEE systems because such research cannot be mapped to clinical justification [12].

When considering AU-based FEE analysis systems, one of the most important and fundamental features of FACS-based emotion recognition is identifying and displaying the contribution of each activated AU to a particular emotion. In analyzing the relationships between AUs and facial emotions, different techniques have been used, such as human specialists [13], [14] and the Specific Affect Coding System (SPAFF) [15]. In SPAFF, observational research was performed to explore upper and lower facial AUs, which were then used to illustrate a set of frequently used facial expressions. For example, AUs 1, 2, 5, 6, 12, 23, 24 and 25 were used to indicate enthusiasm, while AUs 1, 6, 15 and 17 were used individually or in combination to differentiate sadness from other emotions. The study presented the mapping of AUs to a total of 5 positive and 12 negative facial expressions and provided a logical guide for the recognition of emotional facial expressions.

Moreover, classification techniques have also been widely used to map AUs to emotion expressions in the existing literature. For example, [16] used Support Vector Machine (SVM), Extreme Gradient Boosting (XGBoost) and DNN along with min-max normalization. Their work provided the mapping of AU combinations to seven emotions, where AUs 6, 7, 12 and 25 were the most prominent combination for the happy emotion, AUs 1, 4, 15 and 17 for the sad emotion, and AUs 1, 2, 5, 25 and 26 for the surprise emotion.

Apart from these mentioned techniques, statistical approaches were also used in deriving AU-Emotion relationships. In [17], relationships of AUs to expressions were

obtained using a form of relation matrix which was derived

using a concept called discriminative power statistical


Authorized licensed use limited to: SLIIT - Sri Lanka Institute of Information Technology. Downloaded on January 23,2022 at 15:11:50 UTC from IEEE Xplore. Restrictions apply.

analysis. Results of this research revealed positive and

negative relationships in relation to the expressions: as

an example, for happy emotion, AUs 6, 7, 12 and 26

showed positive associations and AUs 1, 2, 5 and 9 showed

negative associations. Similarly, positive associations of

AUs 1, 4, 10, 15, 17 and AUs 1, 2, 5, 26, 27 were shown

for sad and surprise emotions respectively. A supervised

neural network was then used to determine the emotion

type from the derived AUs.

Existing studies such as those given above classify only the emotion type of a given video or image. When videos are considered, most of the existing databases have categorized the videos by emotion type, though one emotion type may contain a set of images ranging from the neutral level to the highest level of emotion intensity. As an example, the CK+ database [18] has folders consisting of images from the lowest level to the highest level of the happy, sadness, surprise, angry, contempt, disgust and fear emotions. Though current research has not developed ways to consider the level, identifying the variation of the emotion intensity level is crucial for behaviour analysis, given that certain disorders such as autism are identified early by analyzing the level of emotion expressions [19]. To address this gap, we develop a novel technique in this research to identify both the emotion type and the level for a given image.

In carrying out this research, we first experimented with the mapping between AUs and emotions recommended in the literature. The review given above shows that, though many techniques have been used to map AUs to emotion types, there are still inconsistencies between them. Thus, for our first method, given in Section II-A, we considered the set of AUs that were common across the literature for a given emotion. As this method did not result in high accuracy, a novel technique, explained in Section II-B, was developed using Exploratory Factor Analysis (EFA), which achieved higher classification accuracy. EFA is a statistical technique that helps reduce a large number of indicator variables to a limited set of factors based on the correlations between the variables.

The rest of the paper is organized as follows. Section II details the two methodologies we adopted, and Section III presents and analyzes the results. The analysis is completed by summarizing the key outcomes in Section III-D.

II. METHODOLOGY

For the purpose of this research, two video databases were used, in which each video shows how the facial expression of an individual changes from neutral to an expression of a specific emotion such as happy, sad or surprise. The Extended Cohn-Kanade (CK+) data set contains 593 video sequences from a total of 123 different subjects, ranging from 18 to 50 years of age, with a variety of genders and heritage. Each video shows a facial shift from the neutral expression to a targeted peak expression, recorded at 30 frames per second (FPS). For example, Fig. 1 shows three different levels of the happy emotion expressed by an individual.

Fig. 1: Levels of Happy Emotions of an Individual

The second data set used in the research is the BAUM-1 data set, which contains 1184 multi-modal facial video clips collected from 31 subjects. The 1184 video clips contain spontaneous facial expressions and speech of 13 emotional and mental states [20].

Sections II-A and II-B explain the process followed to develop models for identifying the varying levels of emotions for a given video.

A. Method I

The first method, explained in this section, identifies the level of an emotion based on the AUs suggested for that emotion in the literature.

Fig. 2: Emotion Classification Process through Method I

As per the process shown in Fig. 2, the videos relating to the happy, sad and surprise emotions were first segmented into frames, resulting in three data sets containing images from the lowest to the highest emotion levels.

AU intensities were then generated for the three data sets using OpenFace 2.0 [21]. OpenFace is a facial behavior analysis toolkit capable of generating numerical data that indicate the presence of AUs as well as their respective intensities for facial expressions. Intensities were generated for seventeen AUs, numbered 1, 2, 4-7, 9, 10, 12, 14, 15, 17, 20, 23, 25, 26 and 45, representing inner brow raiser, outer brow raiser, brow lowerer, upper lid raiser, cheek raiser, lid tightener, nose wrinkler, upper lip raiser, lip corner puller, dimpler, lip corner depressor, chin raiser, lip stretcher, lip tightener, lips part, jaw drop and blink respectively.
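As a minimal sketch of how the seventeen AUs listed above might be handled in code, the mapping below pairs each AU number with its name and picks the intensities of a chosen AU subset from one frame. The column naming `AUxx_r` is an assumption about the OpenFace CSV output, and the `frame` values are made up for illustration.

```python
# AU numbers and names as listed in the text (seventeen AUs with intensities).
AU_NAMES = {
    1: "inner brow raiser", 2: "outer brow raiser", 4: "brow lowerer",
    5: "upper lid raiser", 6: "cheek raiser", 7: "lid tightener",
    9: "nose wrinkler", 10: "upper lip raiser", 12: "lip corner puller",
    14: "dimpler", 15: "lip corner depressor", 17: "chin raiser",
    20: "lip stretcher", 23: "lip tightener", 25: "lips part",
    26: "jaw drop", 45: "blink",
}


def intensity_column(au: int) -> str:
    """Assumed OpenFace-style intensity column name, e.g. 1 -> 'AU01_r'."""
    return f"AU{au:02d}_r"


def select_intensities(row: dict, aus) -> list:
    """Pick the intensity values for the given AUs from one parsed CSV row.

    Header names are stripped because CSV exports may pad them with spaces.
    """
    cleaned = {key.strip(): float(value) for key, value in row.items()}
    return [cleaned[intensity_column(au)] for au in aus]


# Hypothetical single frame with made-up intensity values.
frame = {" AU06_r": "1.8", " AU07_r": "0.9", " AU12_r": "2.4", " AU25_r": "1.1"}
happy_features = select_intensities(frame, [6, 7, 12, 25])
print(happy_features)
```

In practice the rows would come from a CSV reader over the per-frame OpenFace output rather than from a hand-written dictionary.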

As shown in Section I, the literature reports inconsistent results on the contribution of AUs to a specific emotion. To avoid these inconsistencies, a common set of AUs given in past studies was considered for each emotion. For example, the common set for the happy emotion consists of AUs {6, 7, 12, 25}, while the common AU sets for the sad and surprise emotions are {1, 15, 17} and {1, 5, 26} respectively. Considering these common AU sets, the three generated AU intensity data sets for the happy, sad and surprise emotions were clustered separately using the K-Means clustering algorithm.

The purpose of this clustering is to group image frames showing the same emotion level together and to label them with the level of the cluster they belong to, so that a labeled data set is available for the classification model. Manual analysis of the images belonging to the resulting clusters revealed that images showing the neutral, moderate and peak levels of an emotion had been grouped together. Therefore, each image was given the label 'neutral', 'moderate' or 'peak' depending on the cluster it belonged to. Labeling the images based on the results of the clustering was preferred over manual labeling of the images in a video clip. It is difficult to separate images manually when there are only subtle differences between them that can barely be distinguished by the human eye, whereas the current method enables a more systematic separation of images into groups based on their features.
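The clustering and labeling step can be sketched as follows, assuming scikit-learn's `KMeans` and synthetic AU intensities in place of the real frames. Ranking the clusters by mean intensity is an assumption made here for illustration; in the paper the clusters were inspected manually.

```python
import numpy as np
from sklearn.cluster import KMeans

rng = np.random.default_rng(0)

# Synthetic stand-in for per-frame intensities of three AUs (not real data).
neutral = rng.normal(0.2, 0.1, size=(40, 3))
moderate = rng.normal(1.5, 0.1, size=(40, 3))
peak = rng.normal(3.5, 0.1, size=(40, 3))
X = np.clip(np.vstack([neutral, moderate, peak]), 0, 5)

# Three clusters per emotion, as described in the text.
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0).fit(X)

# Assumption: order the clusters by the mean intensity of their centres and
# name them neutral < moderate < peak.
order = np.argsort(kmeans.cluster_centers_.mean(axis=1))
level_of_cluster = {
    int(cluster): name
    for cluster, name in zip(order, ["neutral", "moderate", "peak"])
}
labels = [level_of_cluster[int(cluster)] for cluster in kmeans.labels_]
print(labels[0], labels[40], labels[80])
```

Ordering by centre intensity only makes sense when every selected AU increases with the emotion level; otherwise manual inspection, as used in the paper, is the safer choice.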

Once the entries corresponding to all image frames were labeled through clustering, a classification model was trained and tested using the labeled data set to classify a given image into the relevant emotion type and level. Different classification algorithms, including Support Vector Machine (SVM), Random Forest (RF) and K-Nearest Neighbour (KNN), were used to develop the model, and the accuracy of each algorithm was obtained. However, as this method was built on recommendations from past research and low accuracy was observed in identifying the levels of emotions, another method, detailed in Section II-B, was followed.
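A hedged sketch of this comparison is given below, using scikit-learn implementations of the three algorithms and the 70:30 train:test split reported for the experiments. The features are synthetic and the hyperparameters are library defaults or illustrative choices, not the settings used by the authors.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC

rng = np.random.default_rng(1)

# Synthetic AU-intensity features for three emotion levels (not real data).
levels = ["neutral", "moderate", "peak"]
X = np.vstack([rng.normal(mean, 0.3, size=(100, 4)) for mean in (0.2, 1.5, 3.0)])
y = np.repeat(levels, 100)

# 70:30 split; stratification is an added assumption to keep classes balanced.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.30, stratify=y, random_state=0
)

models = {
    "RF": RandomForestClassifier(n_estimators=100, random_state=0),
    "SVM": SVC(kernel="rbf"),
    "KNN": KNeighborsClassifier(n_neighbors=5),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = accuracy_score(y_test, model.predict(X_test))

print(scores)
```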

B. Method II

The main difference in the method detailed here is the use of automated techniques to identify the contributing AUs and their level of contribution to emotions, rather than relying on the contributing AUs recommended in the literature.

Method II for clustering images to groups. In comparison

to Method I, performing EFA and t-tests were done in

Method II with the aim of improving the clustering of

images by selecting the most appropriate AUs to identify

a level of an emotion and thereby leading to develop

a more reliable labeled data set for classification which

could result in identifying levels of emotions of a given

image with better accuracy.

As discussed above, images showing happy, sad and

surprise emotions from the database were clustered using

K-Means clustering by only considering AU combinations

which have shown variations in intensities with the variations of emotions during the t-test. By using the above

method, the images related to each specific emotion were

grouped into three clusters and those clusters consisted of

images related to neutral (no emotion), peak and moderate

levels of that emotion. The images in each cluster were

then given the label, neutral, peak or moderate based on

the cluster the image belonged to. The above method was

used to label all the images of the data set into seven

classes, namely, neutral, moderately-happy, peak-happy,

moderately-sad, peak-sad, moderately-surprised and peaksurprised.

Finally, a classification model was developed using the

labeled data set created as above. The training, testing split

was 70% to 30%. Classification algorithms RF, SVM and

KNN were used on the data and accuracy obtained were

compared in Section III.

III. R ESULTS AND D ISCUSSION

A. Results of Method I

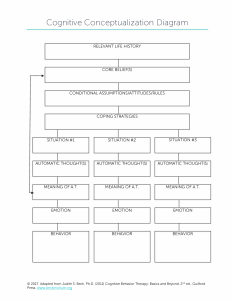

Fig. 3: Emotion Classification Process through Method II

Similar to Method 1, as shown in Fig. 3, first, the

video data were segmented into three sets of images and

AU intensities corresponding to those images were generated using the OpenFace. Exploratory Factor Analysis

(EFA) technique [22] is then used on the generated

AU intensities which resulted in three factors with a

clear demarcation of contributing AUs. The analysis of

factors with respect to the AUs revealed that the factors

corresponded to happy, sad and surprise emotions.

Furthermore to EFA, additional tests were performed to

explore whether intensities of AUs related to an emotion

as found in EFA shows any difference when there are

variations of emotion levels (e.g. moderately happy and

very happy) in images. This was carried out by performing

independent t-tests using image sets drawn from the image

data sets used in this research which shows variations of

an emotion. The results of the t-tests revealed that while

some AUs shows differences in terms of intensities when

the level of emotion varies, some others do not. Only

those AUs which shows differences were considered in

Fig. 4: Classification Report for Random Forest Classifier:

Method I

As mentioned in Section II-A, images related to an emotion were clustered considering the AU combinations reported in the literature to be related to that emotion. The images were labeled based on the cluster they belonged to, and the resulting labeled data were used to develop a classification model for identifying levels of emotions. The F1 score, which is the harmonic mean of precision and recall, was used to evaluate the proposed approach. Multiple machine learning (ML) algorithms were used to develop the model, and the RF classifier resulted in the highest F1-score, which is 76% with a train:test split of 70:30. The classification report and confusion matrix for the RF classifier are shown in Fig. 4 and Fig. 5. On further analysis of the confusion matrix, the diagonal has higher weights for the peak and neutral levels, while there are a few misclassifications in the moderate class of each emotion. Many of these samples were misclassified into the peak or neutral level of their own emotion.

Fig. 5: Confusion Matrix for Random Forest Classifier: Method I
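To make the evaluation metrics concrete, the following sketch computes a confusion matrix and per-class precision, recall and F1 with scikit-learn. The labels are a small hand-made example, not results from the paper; they only mimic the pattern of moderate samples leaking into the neutral and peak classes.

```python
import numpy as np
from sklearn.metrics import confusion_matrix, precision_recall_fscore_support

labels = ["neutral", "moderate", "peak"]

# Hand-made true and predicted levels (illustrative only).
y_true = ["neutral"] * 4 + ["moderate"] * 4 + ["peak"] * 4
y_pred = [
    "neutral", "neutral", "neutral", "moderate",
    "moderate", "moderate", "neutral", "peak",
    "peak", "peak", "peak", "moderate",
]

# Rows are true classes, columns are predicted classes.
cm = confusion_matrix(y_true, y_pred, labels=labels)

precision, recall, f1, support = precision_recall_fscore_support(
    y_true, y_pred, labels=labels
)

# F1 is the harmonic mean of precision and recall for each class.
f1_manual = 2 * precision * recall / (precision + recall)

print(cm)
print(dict(zip(labels, np.round(f1, 2))))
```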

B. Results of Method II

1) Results of EFA: In Method II, the AU intensity data generated for the image set were analyzed using EFA to explore the underlying factor structure and the AUs related to the factors. Prior to the extraction of factors using EFA, the Kaiser-Meyer-Olkin (KMO) Measure of Sampling Adequacy and Bartlett's test of sphericity were conducted to check the suitability of the data for factor analysis [23]. The results showed that the KMO value was 0.797 and the significance of Bartlett's test of sphericity was 0, which indicated that the data could be analysed using factor analysis.
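The two suitability checks can be sketched from their standard definitions, as below. This is an illustrative NumPy/SciPy implementation on synthetic data, not the software used by the authors; a significance reported as 0 usually indicates a p-value below the display precision of the statistics package.

```python
import numpy as np
from scipy.stats import chi2


def bartlett_sphericity(X):
    """Bartlett's test that the correlation matrix is an identity matrix."""
    n, p = X.shape
    R = np.corrcoef(X, rowvar=False)
    _, logdet = np.linalg.slogdet(R)
    statistic = -(n - 1 - (2 * p + 5) / 6.0) * logdet
    dof = p * (p - 1) / 2
    return statistic, chi2.sf(statistic, dof)


def kmo(X):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    R = np.corrcoef(X, rowvar=False)
    inv = np.linalg.inv(R)
    # Partial correlations obtained from the inverse correlation matrix.
    scale = np.sqrt(np.outer(np.diag(inv), np.diag(inv)))
    partial = -inv / scale
    off_diagonal = ~np.eye(R.shape[0], dtype=bool)
    r2 = np.sum(R[off_diagonal] ** 2)
    q2 = np.sum(partial[off_diagonal] ** 2)
    return r2 / (r2 + q2)


# Synthetic data: two latent factors, each driving three observed variables.
rng = np.random.default_rng(0)
latent = rng.normal(size=(300, 2))
X = latent[:, [0, 0, 0, 1, 1, 1]] + 0.5 * rng.normal(size=(300, 6))

statistic, p_value = bartlett_sphericity(X)
kmo_value = kmo(X)
print(round(kmo_value, 3), p_value < 0.05)
```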

EFA was then conducted on the data with Maximum Likelihood Estimation as the extraction technique. The scree plot confirmed that there are three factors within the data. Furthermore, the AUs related to the three factors were identified with a cut-off factor loading of 0.4. The factors and their respective AUs found during EFA are shown in Table I.

TABLE I: Results of EFA

| Factor | Related AUs |
|---|---|
| Factor 1 | AU6, AU10, AU12, AU14, AU25 |
| Factor 2 | AU1, AU2, AU5, AU26 |
| Factor 3 | AU4, AU15, AU17 |

By reviewing the AUs related to emotions in the literature and analyzing the AUs, it was found that Factor 1 in Table I could be related to the happy emotion. Although AU6, AU12 and AU25 are the AUs popularly associated with the happy emotion in the literature [15], [16], [24], exploring the images in the data set revealed that AU10 and AU14, which correspond to upper lip raiser and dimpler, are also visible in images showing the happy emotion. Factor 2 in the table could be related to the surprise emotion, whereas Factor 3 could be related to the sad emotion.
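The factor extraction and the 0.4 loading cut-off can be illustrated with scikit-learn's `FactorAnalysis`, which fits a maximum likelihood factor model. The sketch below uses synthetic variables with hypothetical names; the varimax rotation is an assumption, since the paper does not state which rotation or software was used.

```python
import numpy as np
from sklearn.decomposition import FactorAnalysis
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
n_samples = 500

# Synthetic data: three latent factors, each driving three observed variables.
names = ["a1", "a2", "a3", "b1", "b2", "b3", "c1", "c2", "c3"]  # hypothetical
group_of_variable = np.repeat([0, 1, 2], 3)
latent = rng.normal(size=(n_samples, 3))
X = latent[:, group_of_variable] + 0.5 * rng.normal(size=(n_samples, 9))

# Standardise so that the loadings are on a correlation-like scale.
Z = StandardScaler().fit_transform(X)
fa = FactorAnalysis(n_components=3, rotation="varimax", random_state=0).fit(Z)
loadings = fa.components_.T  # shape: (variables, factors)

CUTOFF = 0.4  # cut-off factor loading stated in the text
related = {
    f"Factor {j + 1}": [
        names[i] for i in range(len(names)) if abs(loadings[i, j]) >= CUTOFF
    ]
    for j in range(loadings.shape[1])
}
print(related)
```

The order of the extracted factors is arbitrary, so each factor still has to be interpreted by looking at which variables load on it, as done for Table I.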

2) Results of Independent t-test: Following the identification of AUs related to emotions as above, independent t-tests were performed to find whether the intensities of the AUs related to an emotion vary with the level of the emotion. The results of the t-tests revealed that not all AUs related to an emotion show significant variations with the level of the emotion. For example, even though EFA indicated that AUs 4, 15 and 17 were related to the sad emotion, the independent t-test revealed that only the intensities of AU15 and AU17 show significant variations between moderately sad and peak sad images. Similarly, AU1 and AU5 were found to show significant variations between moderately surprised and peak surprised images, whereas all AUs found to be related to the happy emotion (i.e. AU6, AU10, AU12, AU14 and AU25) showed significant variations between moderately happy and peak happy images.
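A minimal sketch of this selection step is shown below, using `scipy.stats.ttest_ind` on synthetic intensities. The 0.05 significance level and the Welch variant (`equal_var=False`) are assumptions, as the paper does not report them, and the numbers are constructed so that one AU differs between levels and the other does not.

```python
import numpy as np
from scipy.stats import ttest_ind

rng = np.random.default_rng(0)

# Synthetic intensities for moderately sad and peak sad images (made up).
au04_moderate = rng.normal(1.2, 0.3, 60)
moderate = {"AU15": rng.normal(1.0, 0.3, 60), "AU04": au04_moderate}
peak = {
    "AU15": rng.normal(2.5, 0.3, 60),
    # Same values reshuffled, so there is no difference by construction.
    "AU04": rng.permutation(au04_moderate),
}

ALPHA = 0.05  # assumed significance level
selected = []
for au in moderate:
    statistic, p_value = ttest_ind(moderate[au], peak[au], equal_var=False)
    if p_value < ALPHA:
        selected.append(au)

print(selected)
```

Only the AUs that end up in `selected` would be passed on to the clustering step.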

3) Cluster Analysis: The results of EFA and the t-tests were used to identify the AUs best suited for clustering images related to an emotion by their level of emotion. For example, images showing the sad emotion were clustered using only AU15 and AU17, since the t-test found that only those two AUs showed variations when the level of emotion varied. In this research, images were clustered using the K-Means clustering technique, which is a distance-based clustering method. Only AUs whose intensities vary with the emotion level were selected for clustering, so that only significant features are considered, thereby leading to better clustering of images belonging to the same level of emotion. Three clusters were generated for each emotion in this manner. The clusters resulting from grouping the images belonging to five individuals for the surprise emotion are shown in Fig. 6.

The content of the resulting clusters was analyzed manually, and it was found that images related to no specific emotion (neutral), the moderate level of an emotion and the peak level of an emotion were clustered together. The images belonging to these three clusters were then given the labels neutral, moderate or peak. For example, images showing the happy emotion were given the label 'neutral', 'moderately happy' or 'peak happy' depending on the cluster each image belonged to. Following this procedure, all images in the data set were labeled with seven class names: neutral, moderately-happy, peak-happy, moderately-sad, peak-sad, moderately-surprised and peak-surprised.
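The way three per-emotion cluster levels collapse into seven classes can be sketched with a small helper. The function name and the input spellings (`"happy"`, `"sad"`, `"surprise"`) are hypothetical; the point is only that the neutral level is shared across emotions.

```python
def seven_class_label(emotion: str, level: str) -> str:
    """Combine an emotion and a cluster level into one of the seven classes."""
    if level == "neutral":
        # Neutral frames share one class regardless of the source emotion.
        return "neutral"
    adjective = {"happy": "happy", "sad": "sad", "surprise": "surprised"}[emotion]
    prefix = {"moderate": "moderately", "peak": "peak"}[level]
    return f"{prefix}-{adjective}"


classes = sorted(
    {
        seven_class_label(emotion, level)
        for emotion in ("happy", "sad", "surprise")
        for level in ("neutral", "moderate", "peak")
    }
)
print(classes)
```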

Fig. 6: Clustering Visualization for Surprise Emotion

4) Classification Model: Once the image data in the data set were labeled following the clustering explained in Section III-B3, the resulting data set was used for classification. The SVM, KNN, Logistic Regression (LR) and RF classification algorithms were applied to the data set. Precision, recall and F1 score were used to compare the performance of the four classification algorithms. The classification report and confusion matrix obtained for the RF classifier are shown in Fig. 7 and Fig. 8 respectively.

Fig. 7: Classification Report for Random Forest Classifier: Method II

Fig. 8: Confusion Matrix for Random Forest Classifier: Method II

As seen in Fig. 7 and Fig. 8, the emotion levels were classified with adequate accuracy in Method II except for the moderately sad level, where misclassified entries were relatively more frequent than for the other levels. Furthermore, Method II classification resulted in an overall F1-score, accuracy, precision and recall of 87% and showed the best performance with the train:test split of 70:30.

C. Discussion

Comparing the results of Method I presented in Fig. 4 and Fig. 5 with the Method II results given in Fig. 7 and Fig. 8, it is evident that the classifier developed in Method II shows better accuracy in identifying the levels of emotions. The reason for this would be that the data set used in Method II is more systematically labeled, such that the classification model could learn better from it.

TABLE II: Comparison of Surprise Emotion Classification

| Image | Method I Label | Method II Label |
|---|---|---|
| Image 1 | Moderate | Neutral |
| Image 2 | Peak | Moderate |
| Image 3 | Peak | Peak |

TABLE III: Comparison of Happy Emotion Classification

| Image | Method I Label | Method II Label |
|---|---|---|
| Image 1 | Neutral | Neutral |
| Image 2 | Neutral | Moderate |
| Image 3 | Moderate | Peak |

Classification models learn to predict class labels by understanding the relationships between the features and the labels given for training. If such data are mislabeled, however, the model might not perform well in predicting classes. In Method II, two additional steps are used to improve the clustering, leading to better labeling of the data set: EFA is used to ensure that no important features related to an emotion are overlooked, whereas t-tests are used to identify only the AUs that are suitable for identifying varying emotion levels. Since all important and significant features are used for clustering, Method II has resulted in a more accurately labeled data set.

Manual analysis of the data sets resulting from Method I and Method II also revealed that the data in Method II are much more accurately clustered and, therefore, better labeled. For example, Table II, Table III and Table IV show how three sets of images corresponding to the surprise, happy and sad emotions respectively were labeled using Method I and Method II. As seen in Table II, Method I has mislabeled the neutral and moderately surprised emotion levels, while similar mislabeling can be seen in Table III and Table IV.

TABLE IV: Comparison of Sad Emotion Classification

| Image | Method I Label | Method II Label |
|---|---|---|
| Image 1 | Neutral | Neutral |
| Image 2 | Neutral | Moderate |
| Image 3 | Peak | Moderate |
| Image 4 | Peak | Peak |

D. Conclusion and Future Works

Existing research reveals numerous efforts to identify the different emotions shown by individuals, such as happy and sad, by analyzing videos and images. There is, however, limited research on identifying levels of emotion such as moderately happy and peak happy. In this research, two methods were experimented with to identify the levels of emotions for a given image or video.

The first method clustered the image set showing different levels of emotions using the action units recommended in the literature, labeled the images based on the clusters they belonged to, and used the labeled data to train and test a number of classification algorithms to develop a classification model for identifying levels of emotions. This method, however, did not result in an adequate level of accuracy. Therefore, an alternative method was used in which, before the clustering and classification steps, the AUs related to emotions in the data set were identified via EFA and the AUs whose intensities vary with the level of an emotion were identified via independent t-tests. The classification models in Method II performed better than those in Method I in terms of accuracy, precision and F1-score. The reason for this would be that in Method II additional steps are taken to improve the clustering and thereby label the image set better.

As the emotion type and level classifications were achieved in this research for the happy, sad and surprise emotions, this work will be further extended to other emotion types. Broadening this work, we plan to develop models to identify the emotion levels of growing children and to identify the patterns of AU contributions with age.

Acknowledgements - This research was supported

by the Accelerating Higher Education Expansion and

Development (AHEAD) Operation of the Ministry of

Higher Education of Sri Lanka funded by the World Bank

(https://ahead.lk/result-area-3/).

REFERENCES

[1] A. Saxena, A. Khanna, and D. Gupta, “Emotion recognition and detection methods: A comprehensive survey,” Journal of Artificial Intelligence and Systems, vol. 2, no. 1, pp. 53–79, 2020.

[2] C. Busso, Z. Deng, S. Yildirim, M. Bulut, C. M. Lee, A. Kazemzadeh, S. Lee, U. Neumann, and S. Narayanan, “Analysis of emotion recognition using facial expressions, speech and multimodal information,” in Proceedings of the 6th International Conference on Multimodal Interfaces, 2004, pp. 205–211.

[3] P. Ekman, “Are there basic emotions?” Psychological Review, vol. 99, pp. 550–553, 1992.

[4] V. Jacintha, J. Simon, S. Tamilarasu, R. Thamizhmani, J. Nagarajan et al., “A review on facial emotion recognition techniques,” in Proceedings of the International Conference on Communication and Signal Processing. IEEE, 2019, pp. 0517–0521.

[5] M. Xiaoxi, L. Weisi, H. Dongyan, D. Minghui, and H. Li, “Facial emotion recognition,” in Proceedings of the IEEE 2nd International Conference on Signal and Image Processing. IEEE, 2017, pp. 77–81.

[6] H. Siqueira, S. Magg, and S. Wermter, “Efficient facial feature learning with wide ensemble-based convolutional neural networks,” Proceedings of the Conference on Artificial Intelligence, vol. 34, no. 04, pp. 5800–5809, Apr. 2020. [Online]. Available: https://ojs.aaai.org/index.php/AAAI/article/view/6037

[7] Y. Fan, X. Lu, D. Li, and Y. Liu, “Video-based emotion recognition using CNN-RNN and C3D hybrid networks,” in Proceedings of the 18th ACM International Conference on Multimodal Interaction, 2016, pp. 445–450.

[8] D. Meng, X. Peng, K. Wang, and Y. Qiao, “Frame attention networks for facial expression recognition in videos,” in Proceedings of the IEEE International Conference on Image Processing. IEEE, 2019, pp. 3866–3870.

[9] S. J. Ahn, J. Bailenson, J. Fox, and M. Jabon, “Using automated facial expression analysis for emotion and behavior prediction,” The Routledge Handbook of Emotions and Mass Media, p. 349, 2010.

[10] E. Pranav, S. Kamal, C. S. Chandran, and M. Supriya, “Facial emotion recognition using deep convolutional neural network,” in Proceedings of the 6th International Conference on Advanced Computing and Communication Systems. IEEE, 2020, pp. 317–320.

[11] S. Li and W. Deng, “Deep facial expression recognition: A survey,” IEEE Transactions on Affective Computing, 2020.

[12] M. Nadeeshani, A. Jayaweera, and P. Samarasinghe, “Facial emotion prediction through action units and deep learning,” in Proceedings of the 2nd International Conference on Advancements in Computing, vol. 1. IEEE, 2020, pp. 293–298.

[13] A. A. Rizzo, U. Neumann, R. Enciso, D. Fidaleo, and J. Noh, “Performance-driven facial animation: basic research on human judgments of emotional state in facial avatars,” CyberPsychology & Behavior, vol. 4, no. 4, pp. 471–487, 2001.

[14] C. G. Kohler, E. A. Martin, N. Stolar, F. S. Barrett, R. Verma, C. Brensinger, W. Bilker, R. E. Gur, and R. C. Gur, “Static posed and evoked facial expressions of emotions in schizophrenia,” Schizophrenia Research, vol. 105, no. 1-3, pp. 49–60, 2008.

[15] J. A. Coan and J. M. Gottman, “The specific affect coding system (SPAFF),” Handbook of Emotion Elicitation and Assessment, vol. 267, 2007.

[16] J. Yang, F. Zhang, B. Chen, and S. U. Khan, “Facial expression recognition based on facial action unit,” in Proceedings of the Tenth International Green and Sustainable Computing Conference. IEEE, 2019, pp. 1–6.

[17] S. Velusamy, H. Kannan, B. Anand, A. Sharma, and B. Navathe, “A method to infer emotions from facial action units,” in Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing. IEEE, 2011, pp. 2028–2031.

[18] P. Lucey, J. F. Cohn, T. Kanade, J. Saragih, Z. Ambadar, and I. Matthews, “The extended Cohn-Kanade dataset (CK+): A complete dataset for action unit and emotion-specified expression,” in 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition-Workshops. IEEE, 2010, pp. 94–101.

[19] C. T. Keating and J. L. Cook, “Facial Expression Production and Recognition in Autism Spectrum Disorders: A Shifting Landscape,” Child and Adolescent Psychiatric Clinics, 2020.

[20] S. Zhalehpour, O. Onder, Z. Akhtar, and C. E. Erdem, “BAUM-1: A spontaneous audio-visual face database of affective and mental states,” IEEE Transactions on Affective Computing, vol. 8, no. 3, pp. 300–313, 2016.

[21] T. Baltrusaitis, A. Zadeh, Y. C. Lim, and L.-P. Morency, “OpenFace 2.0: Facial behavior analysis toolkit,” in Proceedings of the 13th IEEE International Conference on Automatic Face & Gesture Recognition. IEEE, 2018, pp. 59–66.

[22] B. Williams, A. Onsman, and T. Brown, “Exploratory factor analysis: A five-step guide for novices,” Australasian Journal of Paramedicine, vol. 8, no. 3, 2010.

[23] M. Effendi, E. M. Matore, M. F. M. Noh, M. A. Zainal, and E. R. M. Matore, “Establishing factorial validity in Raven Advanced Progressive Matrices (RAPM) in measuring IQ from polytechnic students’ ability using exploratory factor analysis (EFA),” Proceedings of Mechanical Engineering Research Day, vol. 2020, pp. 248–250, 2020.

[24] L. Zhang, A. Hossain, and M. Jiang, “Intelligent facial action and emotion recognition for humanoid robots,” in Proceedings of the International Joint Conference on Neural Networks. IEEE, 2014, pp. 739–746.
