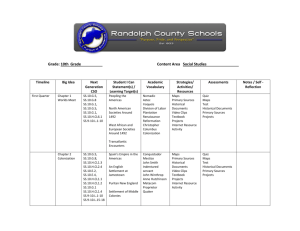

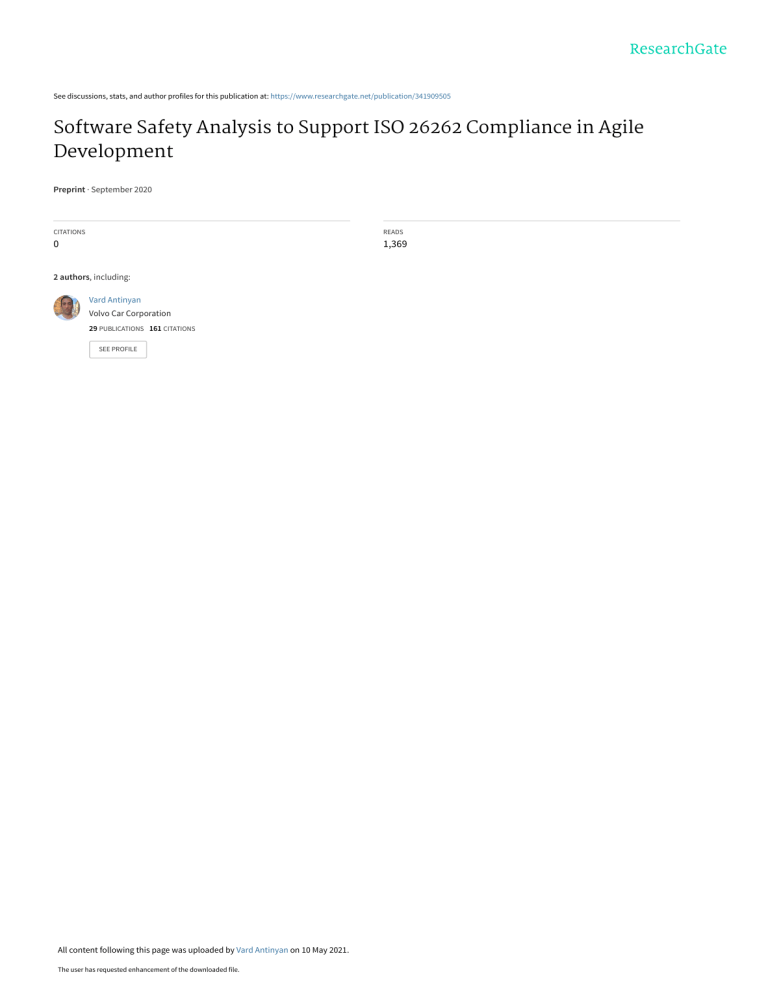

Preprint, September 2020. Available at: https://www.researchgate.net/publication/341909505

FOCUS: ON SOFTWARE QUALITY

Software Safety Analysis to Support ISO 26262-6 Compliance in Agile Development

Henrik Sandgren and Vard Antinyan, Volvo Car Group

// In the literature, there are clear-cut techniques for system safety analysis but little support for software-specific safety evaluation. This article provides a software safety analysis method to help practitioners comply with ISO 26262-6 in Agile software development. //

IEEE Software, May/June 2021, published by the IEEE Computer Society. Digital Object Identifier 10.1109/MS.2020.3026145. Date of current version: 16 April 2021. 0740-7459/21 © 2021 IEEE

SOFTWARE SAFETY ANALYSIS is a part of the overall automotive system safety review. Essentially, its goal is to mitigate the risks of injuries when using a vehicle. International Organization for Standardization (ISO) standard 26262-6 section 7 [1] requires that a specific safety-oriented analysis be conducted on a software architecture to fulfill the following four objectives:

1. Show evidence that software is suitable for providing specified safety-related functions and properties.
2. Identify and confirm the safety-related parts of the software.
3. Support the specification and verification of the safety mechanisms.
4. Detect failure modes that can violate the required freedom from interference.

Because of the exploding number of software states, the occurrence of errors becomes probabilistic. This means that it is no longer possible to analyze every state of a given software system and determine whether there is an associated error. Rather, by analyzing certain properties, such as complexity, code smells, and so on, one can estimate the likely number of errors in software. However, ISO 26262-6 section 7 also requires a comprehensive analysis for certain types of errors that surface at the architecture level (vehicle functions). The method presented in this article was developed by embracing this reality.

Crisis in Software Safety Analysis

Methods for conducting system safety analysis include the following (see "Glossary of Terms"):

• failure mode and effect analysis (FMEA) [2]
• fault tree analysis [3]
• event tree analysis [4]
• hazard and operability analysis [5].

The essential problem in conducting software safety analysis with these methods is that software, as a system, has several orders of magnitude more states than hardware. It is practically impossible to analyze all the states or to decide, in a plausible manner, which ones should be prioritized. That said, several concepts of system safety analysis methods, such as the "guide word" and the "failure mode," are used in our study for standard representations.

Alternatively, there have also been endeavors to develop software-focused safety analysis methods, such as the following:

• Petri net analysis [6]
• failure propagation and transformation notation (FPTN) [7]
• software hazard analysis and resolution in design (SHARD) [8]
• software criticality analysis [9]
• low-level interaction safety analysis [10]
• software FMEA [11]
• software failure modes, effects, and criticality analysis (SFMECA) [12].

FPTN is more of a notation technique, with which no exhaustive analysis can be conducted. Petri net analysis is suitable for BaseTech software (managing interrupts and so on) but is unsuitable for application software because of increased complexity.
Software FMEA and SFMECA are FMEA adaptations for software, but they require more effort as the software complexity increases. Software criticality analysis and SHARD focus on the data flow. The concept of data flow presented in SHARD is valuable in our context, and it is used in our method but in a different order and on a different abstraction layer. These approaches start the analysis on the level of the entire architecture, investigating output signals and corresponding failure modes from the top down. They assume that there is an intentional forefront architecture that does not significantly change through time. In modern software development, however, the architecture changes continually.

GLOSSARY OF TERMS

ASIL: automotive safety integrity level; there are four main ASIL levels, A, B, C, and D, and a secondary one, quality managed, which is not safety related.
BaseTech: an electronic control unit operating system that deals with scheduling, interrupts, hardware close calls, memory usage, and so forth
CL: criticality level
ECU: electronic control unit; one of the many computers that manages certain groups of functionalities in a car
ETA: event tree analysis
Failure mode: one possible way a system can fail
FMEA: failure mode and effect analysis
FPTN: failure propagation and transformation notation
Freedom from interference: In International Organization for Standardization (ISO) standard 26262-6, this means that a fault in a less-safety-critical software component will not lead to a fault in a more-safety-critical component.
FTA: fault tree analysis
HAZOP: hazard and operability analysis
HIL: hardware-in-the-loop test
Safety goal: In ISO 26262-6, a safety goal is a top-level requirement that is assigned to a system, with the purpose of reducing to a tolerable level the risk of one or more hazardous events.
SFMECA: software failure modes, effects, and criticality analysis
SHARD: software hazard analysis and resolution in design
Agile teams own architectural components [13], which are called software packages in the case of Volvo Cars (Figure 1). Architectural decisions inside software packages are emergent, as opposed to those at the level of the electronic control unit (ECU), which are relatively stable through time. The emergent architecture prevents conducting top-down analysis because the states of the signals can change in the middle of the process, invalidating the results. Also, in our case, the BaseTech software and part of the application software are sourced from a supplier, making top-down analysis impossible.

Application software complexity, responsiveness to customers, multisite development, and the emergent architecture urged us to develop a new software safety analysis method that could help in complying with ISO 26262-6 on a continual basis. This method, however, is designed for application software developed with Simulink models as software units. The method has not been evaluated for handwritten software or for BaseTech software. Handwritten code has a less restricted development (e.g., a nonobligatory compliance to clean coding standards) and more couplings with other files and functions compared with Simulink models. Thus, analyzing data flows that are less clear requires much more effort, which may be unaffordable in Agile development.

Research Method

A crucial decision during the research process was to identify all error types first because, due to the versatility of errors, it was impossible to decide which types should be subject to safety-oriented software analysis. These are errors that would hinder achieving the four objectives of software safety analysis. Action research [14] was employed to develop the method. A reference group of practitioners was formed to iterate across the action research cycles.
Its members included a technical leader in software engineering, a technical expert on software safety, a senior safety engineer, a principal engineer of software development, a software architect, a senior software developer, and a product owner whose team was responsible for developing safety-critical functionality for the vehicle. The tasks consisted of the following:

• identifying all types of errors that can occur in application software, with the given software development process and work products
• investigating and documenting the current mitigation methods for the identified types of errors
• specifying mitigation methods for error types that did not already have them
• reviewing the development of the safety-oriented software analysis method.

The authors proposed the initial mitigation methods for error types that were unresolved. They also designed the skeleton of the safety-oriented software analysis method. It was the reference group, however, that critically questioned the proposals and shaped the outcomes during the action research cycles. In approximately 1.5 years, a software safety analysis method was crystallized. Later, the method was employed for the in-house application software of a large ECU, and, as a result, more adjustments were made to the analysis method. The software to which the method was applied had roughly 200 Simulink models (producing some 800,000 lines of code) and was developed by 12 Agile teams.

FIGURE 1. An overview of electronic control unit (ECU) software at Volvo Cars. (Diagram labels: Team Software Package, Software Unit, Sourced Software Package, Sourced Base Technology Software, Target Software for Analysis, Emergent Architecture, In-House Software Package, Requirements Allocation, Intentional Architecture, Entire ECU Software.)
Software Safety Analysis Method

The analysis method is composed of two parts. The first is the evaluation of the software development process [15] and employment of a set of practically suitable and empirically valid methods to detect errors in the entire development chain. These approaches hold the best promise for eliminating software errors in all production phases. The process is called a general analysis of software safety, which explicitly embraces the probabilistic nature of software error detection; not all software states can be checked. However, this ensures that the likelihood of overall software errors is minimized through the available methods and tools. The second part is a safety-oriented software analysis at the architecture level to eliminate errors that can escape the general analysis and that have a tangible likelihood of preventing the achievement of the four safety objectives.

Aligned with continuous software engineering, the general analysis on all identified types of errors is conducted continuously. This is facilitated by methods such as automated checks, metrics-based corrections, peer reviews, and automated tests. The safety-oriented software analysis is conducted in workshops, and several steps can be automated.

General Analysis of Software Safety

The general analysis of software safety identifies errors in the development chain and indicates mitigation methods. The results of such an investigation in our product are documented in Table 1. If a type of error can affect any of the four safety objectives, it should be subjected to an additional safety-oriented analysis. Those error types appear in bold in the table. The mitigation methods can be changed for reasons that may or may not emanate from the safety analysis perspective. Therefore, they should be regularly evaluated and updated (e.g., once a year). The responsible software safety expert should ensure that the information of such a table is current.
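The bookkeeping described above (flagging error types that need the additional safety-oriented analysis and keeping mitigation methods current) could be held in a small registry. The following is a minimal Python sketch under stated assumptions: the class `ErrorTypeEntry`, the helper functions, the one-year threshold as a hard limit, and the review dates are all hypothetical illustrations, not part of the authors' tooling. The two example rows reuse error types and mitigation measures listed in Table 1.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class ErrorTypeEntry:
    """One row of a Table 1 style registry (hypothetical structure)."""
    error_type: str
    mitigations: List[str]
    affects_safety_objectives: bool  # True for the rows shown in bold
    last_reviewed: date              # hypothetical bookkeeping field


def needing_safety_oriented_analysis(entries: List[ErrorTypeEntry]) -> List[str]:
    """Error types that can affect any of the four safety objectives."""
    return [e.error_type for e in entries if e.affects_safety_objectives]


def overdue_for_review(entries: List[ErrorTypeEntry], today: date,
                       max_age: timedelta = timedelta(days=365)) -> List[str]:
    """Error types whose mitigation methods were last reviewed too long ago."""
    return [e.error_type for e in entries if today - e.last_reviewed > max_age]


# Example rows; the review dates are invented for illustration only.
registry = [
    ErrorTypeEntry(
        "Incorrect function calls",
        ["Automated build check", "Integration testing", "Safety-oriented analysis"],
        affects_safety_objectives=True,
        last_reviewed=date(2020, 1, 15),
    ),
    ErrorTypeEntry(
        "Counter rollover",
        ["Coding guidelines", "Automated build checks"],
        affects_safety_objectives=False,
        last_reviewed=date(2021, 3, 1),
    ),
]

flagged = needing_safety_oriented_analysis(registry)
overdue = overdue_for_review(registry, today=date(2021, 4, 16))
```

A registry like this only records decisions; which error types affect the safety objectives remains a judgment made by the team and the software safety expert.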
Table 1. Errors and mitigation methods identified for automotive software.

| Error category | Error type | Mitigation measures |
|---|---|---|
| Requirements specifications and software design | **Errors introduced during the requirements specification** | Requirements review process, safety-oriented analysis |
| | **Errors introduced during the requirements allocation** | Requirements reviews, requirements testing, safety-oriented analysis |
| | Errors introduced during the design | Model reviewing, clean coding compliance [16], complexity limitation, unit testing |
| Interfacing between software components | Incorrect interfacing to software models: incompatible input/output data types | Automated build check, interface manager check |
| | **Incorrect interfacing to software models: input/output value out of range** | Requirements testing, merge tests, HIL testing, safety-oriented analysis |
| | Missing input/output signal to/from internal software models | Warning in builds, integration testing, requirements testing, merge tests, HIL tests |
| | Missing input/output signal to/from supplier software models | Warning in builds, integration testing, merge tests, HIL tests |
| | **Incorrect function calls** | Automated build check, integration testing, safety-oriented analysis |
| Coding | Numerical overflow/underflow/saturation in software calculation | Warnings in builds, coding guidelines, check script at commit |
| | Counter rollover | Coding guidelines, automated build checks |
| | Infinite loop | Coding guidelines, automated build checks |
| | Loss of precision due to type-casting | Automated build checks, unit testing |
| | Invalid operation (e.g., divide by zero, logarithm of zero, square root of negative value) | Model reviews, static analysis for clean coding compliance, Simulink restricted code generation settings |
| Configuration | Wrong set of code switches leading to parts of models incorrectly activated/deactivated during code generation | Integration testing, requirements testing, merge tests, HIL tests |
| | Wrong set of code switches leading to parts of models incorrectly activated/deactivated during code compilation | Integration testing, requirements testing, merge tests, HIL tests |
| | Wrong set of models chosen in the raster files (for intended configuration) | Integration testing, requirements testing, merge tests, HIL tests |
| | Wrong raster times chosen for models in the raster files | Requirements testing, merge tests, HIL tests |
| | Wrong execution order chosen for models in the raster files | Requirements testing, merge tests, HIL tests |
| | Wrong commit tagged in Git (wrong version of software module used) | Integration testing, requirements testing, merge tests, HIL tests |
| | Wrong make environment (wrong software delivery from supplier was used) | Integration testing, HIL tests |
| Execution | Systematic failure to execute/no call of the software function under analysis | Program flow monitoring (specific analysis required only if there is BaseTech software) |
| | Systematic incorrect activation/unintended call of the software function under analysis | Program flow monitoring and prevention |
| | Incompletely executed software function under analysis | Program flow monitoring |
| | Systematic execution in wrong order | Program flow monitoring |
| | ECU/software reset | "Unintended reset" can be detected, "Intended reset" can detect the root cause |
| Hardware/software interfacing and configuration of software in hardware | Wrong nonvolatile random-access memory (RAM) parameter used by software | Automated parameters check and parameter allocation |
| | Wrong RAM addressing | Automated ASIL area check |

HIL: hardware in the loop; ASIL: automotive safety integrity level.

Safety-Oriented Software Analysis at the Architecture Level

The purpose of the safety-oriented analysis is to identify errors that are due to requirement misspecification, misallocation, incorrect signal interfacing between software models, and incorrect function calls (in bold in Table 1). The analysis has two phases. The entity of the analysis during phase 1 is the Simulink model. An Agile team that owns a set of Simulink models should select one model at a time and conduct the analysis with the support of a software safety expert. The entity of the analysis during phase 2 is the application software. Software architects should conduct the analysis with the support of a software safety expert and use the results of phase 1.

Phase 1 starts with the product owner organizing a workshop with the Agile team and software safety expert. The assumption is that the team has the correct information about the failure modes of the given model and the criticality levels (CLs) of the vehicle-level consequences per failure mode. In fact, one of the goals of this analysis is to test this assumption and correct it where it does not hold. The CLs are defined as follows:

• If a vehicle-level consequence does not violate a safety goal, it is classified as CL1. If the model has only CL1s, the model itself is classified as CL1.
• If a vehicle-level consequence violates a safety goal but has a safety mechanism to avoid this consequence, it is classified as CL2. If the model has only CL2s and CL1s, the model itself is classified as CL2.
• If the vehicle-level consequence violates a safety goal and does not have any safety mechanism, it is classified as CL3. The model itself, in this case, is classified as CL3.

An overview of phases 1 and 2 is presented in Figure 2. A stepwise detailed description follows.

Phase 1: Conducted by an Agile Team, With Safety Expert Support

1. Understand what function the model implements.
2. Identify failure modes per output signal and function call for the model.
3. Identify the vehicle-level consequences per failure mode to the best of the team's knowledge.
4. Classify the vehicle-level consequences per failure mode as CL1, CL2, or CL3.
   • Does the vehicle-level consequence violate a safety goal? If no, classify the consequence as CL1. If yes, go to the next point.
   • Is there a safety mechanism, external to the model, to avoid this consequence? If yes, classify the consequence as CL2 and refer to the safety mechanism. If no, go to the next point.
   • Was the corresponding output signal (function call) intended to be CL3? If yes, classify its vehicle-level consequence as CL3. If no, an inappropriate design is detected.
5. Confirm the CL3 parts of the software.
   • Does the model with CL3 failure modes have a corresponding automotive safety integrity level (ASIL) classification in the architecture description? If no, an inappropriate design is detected.
   • Does the model with CL3 failure modes have requirements that address the failure modes with a corresponding ASIL classification? If no, an inappropriate design is detected.
6. Document all failure modes with the respective output signals (function calls) for models that have at least one CL2 or CL3 vehicle-level consequence.
   • Document the names of signals (function calls), failure modes, vehicle-level consequences, CLs, and ASIL levels.
   • Document guide words for all output signals and function calls (e.g., "too high," "too low," and "other than").
7. Identify and document causes for CL3 failures to the best of the team's knowledge.
   • Classify causes as CL1, CL2, or CL3. Causes can be input signals, triggers, code switches, configurations, and other error types.
   • Specify guide words for causes.
8. During the exercise, document gaps in knowledge that the team thinks it needs to have to confidently complete phase 1.
9. If no CL2 or CL3 consequences are identified in the model, document it as CL1 with the most severe vehicle-level consequence.

If an output wasn't intended to be CL3, the appropriate safety requirement wasn't allocated or the team lacked knowledge about the CL3 model. This, in turn, means that the analysis found either a gap in the team's knowledge or a fault in the system design.
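The three classification questions in step 4 of phase 1, together with the rule that a model takes the highest CL among its vehicle-level consequences, can be sketched in code. This is a minimal Python sketch, not the authors' tooling: the names `CL`, `InappropriateDesign`, `classify_consequence`, and `classify_model` are hypothetical, and treating a model with no recorded consequences as CL1 is an assumption made here for illustration.

```python
from enum import IntEnum
from typing import Iterable


class CL(IntEnum):
    """Criticality levels as defined in the article."""
    CL1 = 1
    CL2 = 2
    CL3 = 3


class InappropriateDesign(Exception):
    """Signals the 'inappropriate design is detected' outcome of step 4."""


def classify_consequence(violates_safety_goal: bool,
                         has_external_safety_mechanism: bool,
                         intended_cl3: bool) -> CL:
    """Answer the three questions of step 4 in order."""
    if not violates_safety_goal:
        return CL.CL1
    if has_external_safety_mechanism:
        return CL.CL2
    if intended_cl3:
        return CL.CL3
    # A safety goal is violated, nothing mitigates it, and the output
    # was never intended to be CL3.
    raise InappropriateDesign(
        "safety goal violated without a safety mechanism or CL3 intent")


def classify_model(consequence_levels: Iterable[CL]) -> CL:
    """A model takes the highest CL among its consequences.

    Assumption: a model with no recorded consequences is treated as CL1.
    """
    return max(consequence_levels, default=CL.CL1)
```

Raising an exception for the last branch is one design choice; in a workshop tool, the same outcome could instead be recorded as a finding that becomes a work package for the team.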
After phase 1, all documented CL3 models should be shared with the system safety engineers to confirm the corresponding ASIL levels in the hazard analysis. ASIL levels should be documented along with the information presented in the first entry under point 5 in the preceding list.

Guide words are used to systematically present the possible deviations of a specific design intent and determine the likely consequences. Their use can generate questions for the examination of a specific functionality or property of the architecture during the analyses. Guide words thus can be used to identify weaknesses, faults, and failures. The selection of suitable guide words depends on the characteristics of the examined functions, behaviors, properties, interfaces, and data. The set of guide words that should be used in an analysis depends on the context, and therefore it should be chosen by the analyzing engineers, facilitated by the safety expert. An example set of guide words is: incorrect value, late value, early value, no value, too high value, and too small value. A sample of the phase 1 analysis results is provided in Table 2.

Table 2. Example results from the analysis of a Simulink model.

Failure mode identification

| Output signals | Guide word(s) | Failure mode | Software model consequences | ASIL | CL |
|---|---|---|---|---|---|
| sVcScBTrsm_D_TrsmParkLockd | Other than | Falsely set to engaged | Park falsely shown in DIM | ASIL-B | CL3 |
| sVcScBTrsm_B_AllFrntPrkRles | More | Falsely set to true | No vehicle consequence | QM | CL1 |
| sVcScBTrsm_B_SchedulingRespons | Other than | Wrong value | Safe state wrongly activated | QM | CL1 |
| sVcDepExt_D_Counter | Other than | Wrong value | Safe state wrongly activated | QM | CL1 |

Failure cause identification

| Input signal | Guide word(s) | Failure mode | Software model consequences | ASIL | CL |
|---|---|---|---|---|---|
| sVcEc_D_SchedulingCounter | Other than | Wrong value | TrsmParkLockd wrong value | QM | CL1 |
| sVcDepExt_D_Counter | Other than | Wrong value | TrsmParkLockd wrong value | QM | CL1 |
| yVcScBCoord_B_SbWVehicle | Less | Falsely set to false | TrsmParkLockd falsely engaged | ASIL-B | CL3 |
| yVcEc_B_KL15 | Less | Falsely set to false | TrsmParkLockd falsely disengaged | QM | CL1 |
| sVcTcm_D_TrsmActrPosnSafe | Other than | Falsely set to engage | TrsmParkLockd falsely engaged | ASIL-B | CL3 |
| yVcTCM_B_TrsmActrPosnOk | More | Falsely set to true | TrsmParkLockd falsely engaged | ASIL-B | CL3 |

QM: quality managed.

The second phase of the analysis uses the result from the first to confirm the safety mechanisms for CL2 models and evaluate the freedom from interference for safety-related software.

Phase 2: Conducted by the Software Architect, With Safety Expert Support

1. Confirm the CL2 parts of the software by examining all models classified as CL2 and their safety mechanisms from phase 1.
   • Is the safety mechanism included in the target software release? If no, an inappropriate design is detected. If yes, evaluate the adequacy of the safety mechanism.
   • Is there an appropriate software/system integration test specified for the safety mechanism? If no, specify one.
2. Confirm the allocations of software models in the right partitions by examining all models in the architectural description.
   • Is each model with a given ASIL classification allocated to a software partition that has the same or a higher ASIL classification? If no, an inappropriate allocation is detected.
   • If models of different ASILs are allocated to the same partition, confirm in the architectural description that these models have been devised according to the criteria of the highest ASIL development.
3. Detect failure mode propagation from a lower ASIL partition to a higher one.
   • Do all CL3 input signals originate from CL3 models? If no, an inconsistent specification between emergent and intentional architectures is detected.
4. Based on the phase 1 analysis, confirm that the interface signals of the application software have the correct ASIL classification.

FIGURE 2. An overview of (a) phase 1 and (b) phase 2. (Flowcharts. Panel (a) boxes: Identify Failure Modes Based on Outputs; Classify Failure Modes as CL1–3; All Failure Modes = CL1?; Document the Most Severe Failure Mode; Has Safety Mechanism?; Document as CL2; Document the Safety Mechanism; Intended as Safety Related?; Document as CL3; Has Respective ASIL in Architecture?; Document Failure Modes for All Outputs; Document Causes of Failure Modes; Inappropriate Design Is Detected. Panel (b) boxes: Select All Safety Mechanisms of CL2 From Phase 1; Included in Target Software Release?; Evaluate the Adequacy; Has Respective Integration Test?; Select ASIL Models From Architecture; Allocated to the Right ASIL Partition?; Select CL3 Input Signals; Originate From CL3 Models?; Based on Phase 1, Confirm ASIL of In-House Interface Signals; Inappropriate Design Is Detected.)

Teams are advised to conduct phase 1 during every program increment. In some Agile methods, each increment may be eight to 12 weeks. Phase 2 should be performed before every product release, when it is clear that no further functional changes will occur. In both phases, the detection of an inappropriate design should generate a new work package for the Agile teams. This package should be included in the team backlog, and a priority level should be assigned to it.
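Two of the phase 2 checks, the partition allocation check (step 2) and the origin check for CL3 input signals (step 3), lend themselves to automation. The following is a minimal Python sketch under stated assumptions: the `Model` structure, the function names, the model, partition, and signal names, and the ordering QM < A < B < C < D encoded in `ASIL_RANK` are illustrative choices, not the authors' implementation. Producers that are not in the analyzed set (for example, sourced software) are flagged, which is also an assumption.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Assumed ordering of integrity levels, lowest to highest.
ASIL_RANK = {"QM": 0, "A": 1, "B": 2, "C": 3, "D": 4}


@dataclass
class Model:
    """Hypothetical record combining phase 1 results and architecture data."""
    name: str
    asil: str        # ASIL classification from the architecture description
    partition: str   # software partition the model is allocated to
    cl: int          # model-level criticality level from phase 1 (1, 2, or 3)
    # CL3 input signal name -> name of the model producing that signal
    cl3_inputs: Dict[str, str] = field(default_factory=dict)


def misallocated_models(models: List[Model],
                        partition_asil: Dict[str, str]) -> List[str]:
    """Step 2: models placed in a partition with a lower ASIL than their own."""
    return [m.name for m in models
            if ASIL_RANK[partition_asil[m.partition]] < ASIL_RANK[m.asil]]


def cl3_inputs_not_from_cl3(models: List[Model]) -> List[Tuple[str, str, str]]:
    """Step 3: CL3 input signals whose producer is not a CL3 model.

    Producers missing from `models` get level 0 and are therefore flagged.
    """
    cl_by_name = {m.name: m.cl for m in models}
    return [(m.name, signal, producer)
            for m in models
            for signal, producer in m.cl3_inputs.items()
            if cl_by_name.get(producer, 0) < 3]


# Illustrative data only; none of these names come from the article.
models = [
    Model("ParkLock", "B", "P_ASIL_B", 3,
          {"sig_brake_ok": "Coord", "sig_position": "Actuator"}),
    Model("Coord", "B", "P_QM", 3),
    Model("Actuator", "QM", "P_QM", 1),
]
partitions = {"P_ASIL_B": "B", "P_QM": "QM"}

allocation_findings = misallocated_models(models, partitions)
propagation_findings = cl3_inputs_not_from_cl3(models)
```

In this example, `Coord` is reported as misallocated because an ASIL B model sits in a QM partition, and the `sig_position` input of `ParkLock` is reported because it is produced by a CL1 model. Each finding would correspond to a work package in the team backlog.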
If an inappropriate design is detected during the first phase, the product owner can create the work package. If it is detected during the second phase, the product owner should consult with the software architect to correctly define the work package. If no fault is found, the product can be released, and the teams can move to the next stage of development.

Benefits and Improvements

The method is deemed economically affordable for continuous safety analysis, for two reasons. First, the approaches for the general analysis of software safety are largely automated and integrated with the software development environment, and once these methods are registered (Table 1), it is easy to periodically review and update the information. Second, once a safety-oriented analysis has been conducted, teams will already have enough knowledge and documentation to update the evaluation based on the changes in outgoing signals and their corresponding information (failure modes, vehicle-level consequences, and so on). The documentation can be in the form of Excel spreadsheets and browser-based, semiautomated means.

The safety-oriented software analysis facilitates focusing on errors that directly violate the four safety objectives. This analysis enables going beyond automated checks and conducting essential intellectual exercises that focus on what functions the models implement, how these models interact with each other, and how congruent the models are with intentional architectural decisions. This helps to avoid accidental design decisions that can compromise functional safety. Generally, there is a need for awareness about the role of a model in vehicle-level functions. Safety-oriented analysis increases this awareness in the Agile team, empowering the members with knowledge to make wiser design decisions.

The organization where we used this method provided us with feedback about improvements, mainly raising the possibility of automating several steps and integrating them into the software development environment to reduce the administrative effort of using the technique. These improvements are under way. For example, one can automatically check that the models are allocated to the correct ASIL partitions and evaluate failure mode propagation.

ABOUT THE AUTHORS

HENRIK SANDGREN is a technical expert on dependability engineering at Volvo Car Group, Gothenburg, 418 78, Sweden. His research interests include software and system safety in Agile development. Sandgren received his M.S. degree from Chalmers University of Technology. Contact him at henrik.sandgren@volvocars.com.

VARD ANTINYAN is a technical expert on software quality at Volvo Car Group, Gothenburg, 418 78, Sweden. His research interests include software complexity, software quality, risk management, software process improvement, and software measurement. Antinyan received his Ph.D. degree in software engineering from the University of Gothenburg. Contact him at vard.antinyan@volvocars.com.

References

1. Road Vehicles, Functional Safety, International Standard ISO 26262, 2018.
2. D. H. Stamatis, Failure Mode and Effect Analysis: FMEA From Theory to Execution. Milwaukee, WI: ASQ Press, 2003.
3. B. Vesely, Fault Tree Analysis (FTA): Concepts and Applications. Washington, D.C.: NASA, 2002.
4. J. D. Andrews and S. J. Dunnett, "Event-tree analysis using binary decision diagrams," IEEE Trans. Rel., vol. 49, no. 2, pp. 230–238, 2000. doi: 10.1109/24.877343.
5. J. Dunjó, V. Fthenakis, J. A. Vílchez, and J. Arnaldos, "Hazard and operability (HAZOP) analysis. A literature review," J. Hazardous Mater., vol. 173, nos. 1–3, pp. 19–32, 2010. doi: 10.1016/j.jhazmat.2009.08.076.
6. K. Jensen and G. Rozenberg, High-Level Petri Nets: Theory and Application. New York: Springer-Verlag, 2012.
7. P. Fenelon and J. A. McDermid, "New directions in software safety: Causal modelling as an aid to integration," Workshop on Safety Case Construction, York, 1992 Art no. Y01 5DD. [Online]. Available: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.47.4673&rep=rep1&type=pdf
8. D. J. Pumfrey, "The principled design of computer system safety analyses," Ph.D. dissertation, Univ. of York, York, 1999.
9. P. Bishop, R. Bloomfield, T. Clement, and S. Guerra, "Software criticality analysis of COTS/SOUP," Rel. Eng. Syst. Saf., vol. 81, no. 3, pp. 291–301, 2003. doi: 10.1016/S0951-8320(03)00093-0.
10. P. Fenelon and J. A. McDermid, "An integrated tool set for software safety analysis," J. Syst. Softw., vol. 21, no. 3, pp. 279–290, 1993. doi: 10.1016/0164-1212(93)90029-W.
11. H. H. Kim, "SW FMEA for ISO-26262 software development," in Proc. Asia-Pacific Software Eng. Conf., 2014, pp. 19–22. doi: 10.1109/APSEC.2014.85.
12. Space Product Assurance: Methods and Techniques to Support the Assessment of Software Dependability and Safety, ECSS-Q80-03, European Cooperation for Space Standardization, Noordwijk, 2006.
13. D. Leffingwell, Scaling Software Agility: Best Practices for Large Enterprises. London: Pearson, 2007.
14. J. McNiff, Action Research: Principles and Practice. Evanston, IL: Routledge, 2013.
15. VDA QMC Working Group 13/Automotive SIG, "Automotive SPICE process assessment/reference model," 2017.
16. Motor Industry Software Reliability Association, Guidelines for the Use of the C Language in Critical Systems. Warwickshire, U.K.: MISRA, 2012.