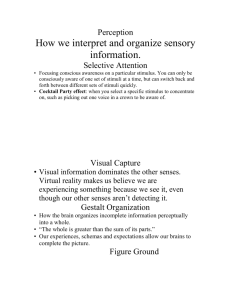

z Sensing the world around us z sound The movement of air molecules brought about by a source of vibration z eardrum The part of the ear that vibrates when sound waves hit it z cochlea A coiled tube in the ear filled with fluid that vibrates in response to sound z Basilar membrane A vibrating structure that runs through the center of the cochlea, dividing it into an upper chamber and a lower chamber and containing sense receptors for sound z Hair cells Tiny cells covering the basilar membrane that, when bent by vibrations entering the cochlea, transmit neural messages to the brain z The physical aspects of sound Sound is actually the physical movement of air molecules in regular, wavelike patterns caused by a vibrating source When the lowest notes are playing on ,the speaker moves in and out The speaker pushes air molecules into waves with the same pattern as its movement Strength of the wave pattern weakens considerably during their travels z All other sources that produce sound work in essentially the same fashion Air or some other medium, such as water is necessary to make the vibrations of the object reach us z Frequency Frequency is the number of wave cycles that occur in a second The audio speaker moving when low notes are played because of a primary characteristic of sound called frequency At very low frequencies there are relatively few wave cycles per second Low frequencies are translated into a sound that is very low in pitch z pitch Pitch is the characteristic that makes sound seem ‘high’ low. The lowest frequency that humans are capable of hearing is 20 cycles per second Higher frequencies are heard as sounds of higher pitch At the upper end of the sound spectrum, people can detect sounds with frequencies as high as 20,000 cycles per second z Amplitude Is a feature of wave patterns that allows us to distinguish between loud and soft sound Amplitude is the spread between the up-and down peaks and valleys of air pressure in a sound wave as it travels through the air Waves with small peaks and valleys produce soft sounds’ Those with relatively large peaks are valleys produce loud sounds We are sensitive to broad variations in sound amplitude The range is measured in decibels z Theories of sound Place theory of hearing Frequency theory of hearing z How are our brains able to sort out wavelengths of different frequencies and intensities? So, why do we hear the difference between notes in a song instead of just monotone notes? This is attributed to the frequency theory of hearing. The frequency theory of hearing states that the frequency of the auditory nerve's impulses corresponds to the frequency of a tone, which allows us to detect its pitch. z The way it works is that sound waves cause the entire basilar membrane to vibrate at different rates, which, in turn, causes the neural impulses to be transmitted at different rates. Basically, when we hear a musical note, it causes specific vibrations in our ears that lets us hear that specific pitch. Lower notes vibrate at slower speeds, while higher notes vibrate at higher speeds. As pitch increases, nerve impulses of the same frequency are sent to the auditory nerve. This means that a tone with a frequency of 700 hertz produces 700 nerve impulses per second. It is the speed in which the neural signals move along the brain that determine the pitch. z Place theory of hearing The place theory of hearing is used to explain how we distinguish high-pitched sounds that possess a frequency that exceeds 5,000 hertz. According to the place theory of hearing, we can hear different pitches due to specific sound frequencies causing vibrations in specific parts on the basilar membrane of the cochlea. In other words, different parts of the cochlea are activated by different frequencies. z Each location on the basilar membrane possesses a particular characteristic frequency. For example, a sound that measures 6,000 hertz would stimulate the spot along the basilar membrane that possesses a characteristic frequency of 6,000 hertz. The brain detects the pitch based on the position of the hair cells that transmitted the neural signal. z z Auditory cortex After an auditory message leave the ear, it is transmitted to the auditory cortex of the brain through a complex series of neural interconnections Neuron that respond to specific types of sounds Specific sort of sound features( clicks and whistles) About sound’s location Perceptual organization z Gestalt psychology was founded by German thinkers Max Wertheimer, Wolfgang Kohler, and Kurt Koffka and focused on how people interpret the world.2 The Gestalt perspective formed partially as a response to the structuralism of Wilhelm Wundt, who focused on breaking down mental events and experiences to the smallest elements. Max Wertheimer noted that rapid sequences of perceptual events, such as rows of flashing lights, create the illusion of motion even when there is none. This is known as the phi phenomenon. Motion pictures are based on this principle, with a series of still images appearing in rapid succession to form a seamless visual experience. z According to Gestalt psychology, the whole is different from the sum of its parts. Based upon this belief, Gestalt psychologists developed a set of principles to explain perceptual organization, or how smaller objects are grouped to form larger ones. z Have you noticed how a series of flashing lights often appears to be moving, such as neon signs or strands of lights? According to Gestalt psychology, this apparent movement happens because our minds fill in missing information. This belief that the whole is greater than the sum of the individual parts led to the discovery of several different phenomena that occur during perception z Some of the most basic perceptual processes can be described by a series of principles that focus on the ways we organize bits and pieces of information into meaningful wholes. Known as gestalt laws of organization, these principles were set forth in the Gestalt laws of organization: A series of principles that describe how we organize bits and pieces of information into meaningful wholes. z FIGURE 1 When the usual cues we use to distinguish figure from ground are absent, we may shift back and forth between different views of the same figure. If you look at each of these objects long enough, you’ll probably experience a shift in what you’re seeing. In (a), you can see either a vase or the profiles of two people. In (b), the shaded portion of the figure, called a Necker cube, can appear to be either the front or the back of the cube. z Gestalt law of organization Law of Proximity: This law describes elements that are closely placed together and thus perceived as a group. For example, placing a particular shape without proximity , just as the Adidas logo in figure 1, but still perceived as separate shapes. z proximity .. .. .. .. .. .. .. .. .. .. .. .. .. z z Law of Similarity: This law describes placing similar objects with one another. It could be perceived as a pattern or group. For example, placing two distinct shapes in vertical columns. z z similarity z similarity z Law of Continuation: This is described as the compelling movement of the eyes through an object to another object. For example, in the image letter below, the eyes are compelled to follow the curve in the letter to the leaf. z z Law of continuity z z Law of Closure: This is described as the incompleteness or a space unenclosed in an object. For example, the panda below shows some incompleteness in its figure. z Law of Simplicity: Any ambiguous stimuli have the tendency to determined to favor the simplest. An example is shown below in figure 7. Law of Pragnanz: This is described as the reduction and organization of the reality of an object to its simplest possible form. Example is shown below. z Figure and ground z Although gestalt psychology no longer plays a prominent role in contemporary psychology, its legacy endures. One fundamental gestalt principle that remains influential is that two objects considered together form a whole that is different from the simple combination of the objects. Gestalt psychologists argued that the perception of stimuli in our environment goes well beyond the individual elements that we sense. Instead, it represents an active, constructive process carried out within the brain z Bottom -up and top -down processing Ca- yo- re-d t-is -en-en-e, w-ic- ha- ev-ry -hi-d l-tt-r m-ss-ng? It probably won’t take you too long to figure out that it says, “ Can you read this sentence, which has every third letter missing?” If perception were based primarily on breaking down a stimulus into its most basic elements, understanding the sentence, as well as other ambiguous stimuli, would not be possible. The fact that you were probably able to recognize such an imprecise stimulus illustrates that perception proceeds along two different avenues, called top-down processing and bottom-up processing. z In top-down processing, perception is guided by higher-level knowledge, experience, expectations, and motivations. You were able to figure out the meaning of the sentence with the missing letters because of your prior reading experience, and because written English contains redundancies. Not every letter of each word is necessary to decode its meaning. Moreover, your expectations played a role in your being able to read the sentence. You were probably expecting a statement that had something to do with psychology, not the lyrics to a Fergie song z Top-down processing is illustrated by the importance of context in determining how we perceive objects. Look, for example, at Figure 4. Most of us perceive that the first row consists of the letters A through F, while the second contains the numbers 10 through 14. But take a more careful look and you’ll see that the “B” and the “13” are identical. Clearly, our perception is affected by our expectations about the two sequences—even though the two stimuli are exactly the same z However, top-down processing cannot occur on its own. Even though top-down processing allows us to fill in the gaps in ambiguous and out-of-context stimuli, we would be unable to perceive the meaning of such stimuli without bottom-up processing. Bottom-up processing consists of the progression of recognizing and processing information from individual components of a stimuli and moving to the perception of the whole. We would make no headway in our recognition of the sentence without being able to perceive the individual shapes that make up the letters. Some perception, then, occurs at the level of the patterns and features of each of the separate letters. z Top-down and bottom-up processing occur simultaneously, and interact with each other, in our perception of the world around us. Bottom-up processing permits us to process the fundamental characteristics of stimuli, whereas top-down processing FIGURE 3 Although at first it is difficult to distinguish anything in this drawing, keep looking, and eventually you’ll probably be able to see the figure of a dog (James, 1966). The dog represents a gestalt, or perceptual whole, which is something greater than the sum of the individual elements. Top-down processing: Perception that is guided by higher-level knowledge, experience, expectations, and motivations. FIGURE 4 The power of context is shown in this figure. Note how the B and the 13 ar z allows us to bring our experience to bear on perception. As we learn more about the complex processes involved in perception, we are developing a better understanding of how the brain continually interprets information from the senses and permits us to make responses appropriate to the environment (Buschman & Miller, 2007). z Perceptual constancy Consider what happens as you finish a conversation with a friend and he begins to walk away from you. As you watch him walk down the street, the image on your retina becomes smaller and smaller. Do you wonder why he is shrinking? Of course not. Despite the very real change in the size of the retinal image, you factor into your thinking the knowledge that your friend is moving farther away from you because of perceptual constancy. Perceptual constancy is a phenomenon in which physical objects are perceived as unvarying and consistent despite changes in their appearance or in the physical environment. z In some cases, though, our application of perceptual constancy can mislead us. One good example of this involves the rising moon. When the moon first appears at night, close to the horizon, it seems to be huge—much larger than when it is high in the sky later in the evening. You may have thought that the apparent change in the size of the moon was caused by the moon’s being physically closer to the earth when it first appears. In fact, though, this is not the case at all: the actual image of the moon on our retina is the same, whether it is low or high in the sky z Instead, the moon appears to be larger when it is close to the horizon primarily because of the phenomenon of perceptual constancy. When the moon is near the horizon, the perceptual cues of intervening terrain and objects such as trees on the horizon produce a misleading sense of distance. The phenomenon of perceptual constancy leads us to take that assumed distance into account when we view the moon, and it leads us to misperceive the moon as relatively large. z In contrast, when the moon is high in the sky, we see it by itself, and we don’t try to compensate for its distance from us. In this case, then, perceptual constancy leads us to perceive it as relatively small. To experience perceptual constancy, try looking at When the moon is near the horizon, we do not see it by itself and perceptual constancy leads us to take into account a misleading sense of distance. fel70207_ch03_088-129.indd 120 9/11/08 5:23:12 PM 121 z the moon when it is relatively low on the horizon through a paper-towel tube; the moon suddenly will appear to “shrink” back to normal size (Coren, 1992b; Ross & Plug, 2002; Imamura & Nakamizo, 2006). Perceptual constancy applies not just to size (as with the moon illusion) but to shape and color as well. For example, despite the varying images on the retina as an airplane approaches, flies overhead, and disappears, we do not perceive the airplane z Depth perception As sophisticated as the retina is, the images projected onto it are flat and two- dimensional. Yet the world around us is three-dimensional, and we perceive it that way. How do we make the transformation from 2-D to 3-D? The ability to view the world in three dimensions and to perceive distance—a skill known as depth perception—is due largely to the fact that we have two eyes. binocular disparity z Because there is a certain distance between the eyes, a slightly different image reaches each retina. The brain integrates the two images into one composite view, but it also recognizes the difference in images and uses it to estimate the distance of an object from us. The difference in the images seen by the left eye and the right eye is known as binocular disparity z binocular disparity To get a sense of binocular disparity for yourself, hold a pencil at arm’s length and look at it first with one eye and then with the other. There is little difference between the two views relative to the background. Now bring the pencil just six inches away from your face and try the same thing. This time you will perceive a greater difference between the two view z the distance of objects that we view provides us with a means of determining distance. If we view two objects and one is considerably closer to us than the other is, the retinal disparity will be relatively large and we will have a greater sense of depth between the two. However, if the two objects are a similar distance from us, the retinal disparity will be minor, and we will perceive them as being a similar distance from us z Binocular vision – seeing 3D with two eyes There are two main binocular cues that help us to judge distance: • Disparity – each eye see a slightly different image because they are about 6 cm apart (on average). Your brain puts the two images it receives together into a single three-dimensional image. Animals with greater eye separation, such as hammerhead sharks, can have a much greater depth perception (as long as the view from both eyes overlaps the same scene). This can be very useful when trying to catch fast-moving prey. z • Convergence – when looking at a close-up object, your eyes angle inwards towards each other (you become slightly cross-eyed). The extra effort used by the muscles on the outside of each eye gives a clue to the brain about how far away the object is. If you hold your finger 20 cm in front of your eyes, your muscles need to work a lot harder than when your finger is 50 cm away. These binocular cues are most effective for objects up to 6 m away. After this, the amount of eye separation does not give a great enough difference in images to be useful. z Monocular cues z In some cases, certain cues permit us to obtain a sense of depth and distance with just one eye. These cues are known as monocular cues. One monocular cue—motion parallax is the change in position of an object on the retina caused by movement of your body relative to the object. For example, suppose you are a passenger r in a moving car, and you focus your eye on a stable object such as a tree. Objects that are closer than the tree will appear to move backward, and the nearer the object is, the more quickly it will appear to move. In contrast, objects beyond the tree will seem to move at a slower speed, but in the same direction as you are. Your brain is able to use these cues to calculate the relative distances of the tree and other objects. z t makes a smaller image on the retina is farther away than is the one that provides a larger image— an example of the monocular cue of relative size. But it’s not just size of an object that provides information about distance; the quality of the image on the retina helps us judge distance. texture gradient The monocular cue of texture gradient provides information about distance because the details of things that are far away are less distinct z z linear perspective Finally, anyone who has ever seen railroad tracks that seem to join together in the distance knows that distant objects appear to be closer together than are nearer ones, a phenomenon called linear perspective. People use linear perspective as a monocular cue in estimating distance, allowing the two-dimensional image on the retina to record the three-dimensional world (Bruce, Green, & Georgeson, 1997; Dobbins et al., 1998; Shimono & Wade, 2002; Bruggeman, Yonas, & Konczak, 2007). z Linear perspective z Motion perception When a batter tries to hit a pitched ball, the most important factor is the motion of the ball. How is a batter able to judge the speed and location of a target that is moving at some 90 miles per hour? The answer rests in part on several cues that provide us with relevant information about the perception of motion. For one thing, the movement of an object across the retina is typically perceived relative to some stable, unmoving background. Moreover, . z if the stimulus is heading toward us, the image on the retina will expand in size, filling more and more of the visual field. In such cases, we assume that the stimulus is approaching—not that it is an expanding stimulus viewed at a constant distance. It is not, however, just the movement of images across the retina that brings about the perception of motion. If it were, we would perceive the world as moving every time we moved our heads. Instead, one of the critical things we learn about perception is to factor information about our own head and eye movements along with information about changes in the retinal image z