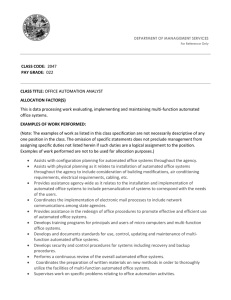

Downloaded from SAE International by Georgia Institute of Technology, Friday, September 17, 2021

2021-01-0858 Published 06 Apr 2021

A Study on Functional Safety, SOTIF and RSS from the Perspective of Human-Automation Interaction

You Zhang (SAIC Motor Corporation Limited); Gavan Lintern (Monash University); Liping Gao and Zhao Zhang (SAIC Motor Corporation Limited)

Citation: Zhang, Y., Lintern, G., Gao, L., and Zhang, Z., “A Study on Functional Safety, SOTIF and RSS from the Perspective of Human-Automation Interaction,” SAE Technical Paper 2021-01-0858, 2021, doi:10.4271/2021-01-0858.

Abstract

As perhaps the primary conduit of the physical expression of human freedom of movement, transportation in its various forms plays a critical role in human society. The availability of ready transport has shaped the fabric, infrastructure, and, to some degree, even the culture of human society as a whole. Now, automated driving, in various forms of Advanced Driver Assistance Systems (ADAS) and self-driving systems, is promising a safe, efficient, and productive life to humans by relieving the driver from active driving tasks in the context of road transportation. However, recent high-profile crashes, e.g., fatalities involving an Uber test autonomous vehicle or a Tesla car, have undermined the automated-driving promise of enhanced safety. On the other hand, in the automotive industry, there are safety approaches such as ISO 26262 and others in development, all of which are expected to mitigate the safety risk resulting from automated driving. This contribution first reviewed the emerging human-automation interaction issues relating to automated-driving safety.
Then, with respect to these issues, the relevant automotive safety approaches - ISO 26262, ISO 21448 (Safety of the Intended Functionality, SOTIF) and RSS (Responsibility-Sensitive Safety model) - were discussed to highlight the blind spots in them which may impair the safety promise of automated driving where machine learning mechanisms are now prevalent. Next, we briefly introduced the system-thinking tools in the human factors discipline which are expected to enhance automated-driving technology to address human-automation interaction issues. Finally, we summarized the findings and argued that automated-driving safety risks should be managed not only from a technological perspective but also from a human-factors perspective, and that the philosophy of human use of driving automation can benefit the development of a safe, reliable, and trustworthy Automated Driving System (ADS).

Keywords

Automated Driving, Human-Automation Interaction, Traffic Safety, Machine Learning, Human Factors

Introduction

Safety is at the core of automated driving, which promises to make our lives safer. Today, road transportation safety risk is still high - e.g., in 2018 road traffic accidents killed about 1.35 million people world-wide [1], and human errors, such as speeding, misjudgment of other drivers’ behaviors, alcohol impairment, and distraction, are frequently reported as responsible for these deadly accidents [2]. By eliminating many of the mistakes of human drivers, automated driving has the potential to dramatically reduce road fatalities [3]. Moreover, studies suggest that safety is among the best predictors of public intention to accept a self-driving car [4, 5] and safety-related topics are strongly associated with consumers’ level of trust in self-driving cars [6]. Therefore, it is unlikely that the introduction of automated driving will succeed without careful and comprehensive consideration of safety.

Nevertheless, Automated Driving Vehicles (ADVs) are already with us.
Some provide various forms of assistance to support the driver, who remains in ultimate control, while others are designed to put the vehicle under permanent computer control without any human input. The former employ numerous ADAS from all major players in the automobile market - e.g., in the 2020 Euro NCAP report for automated driving, there were ADAS branded under different names from more than 10 car makers [7]. For the latter, by Feb. 2020, 66 companies possessed permits to test self-driving vehicles on the roads of the State of California alone [8]. This reveals that self-driving vehicles are the focus of a fast-paced field of modern technology for emerging transportation capacity. Nevertheless, relatively few members of the traveling public have yet experienced trips in a self-driving vehicle. Moreover, the public may have difficulties in understanding the varying forms of automated driving technology - e.g., they may well assume that their ADV possesses more operational capability and intelligence than is the case [9].

In the automotive literature, the Society of Automotive Engineers (SAE) levels of automation [10] describe a hierarchy for categorizing the technology. It differentiates driver control versus automation control through six levels, from zero to five, progressing from completely manual (non-automation) to full automation. Critiques of the SAE levels claim that they limit discussion to the narrow technical capabilities of the ADS as assessed against the known human driving task. This benefits engineering development but not necessarily the public interest [11, 12].
Here we will not discuss the SAE levels in detail, which is beyond the scope of this paper (notably, the SAE standard was not intended as a specification to cover all constraints [10] that are critical to the safe operation of automated vehicles), but instead will focus on the real-world constraints on automated driving technology that are neglected within public discussion. For example, in 2018, a Tesla Model X in automated driving mode struck a fixed object in the area that divided the main lanes of a highway from an exit ramp, where the lane markings were worn and faded [13]. Although the Tesla’s automation was defined as partial automation [13], it was given the name of Autopilot, thereby giving the impression that it was a self-driving system [14]. It was, however, unable to react to the fixed object in the area and had limitations in visual processing that prevented it from maintaining the appropriate lane of travel [13]. The driver, who was killed in the crash, did not take any emergency action before the crash, most likely due to distraction caused by a cell phone game application and facilitated by the Tesla automation’s ineffective monitoring of driver engagement [13]. Evidently, the driver did not fully understand the constraints for safe operation of the driving automation. Nor was there effective mitigation of hazards from violation of these constraints.

Automated driving is enabled by digitalization; that is, penetration of digital technologies into systems is pervasive, with software playing a key role [15, 16]. A growing number of ADSs incorporate software of ever increasing size and complexity. Software-related issues have thereby challenged manufacturers because software now has life-critical control authority and must be considered a credible potential cause of severe mishaps [17]. In the automotive field, the development of safety-critical systems relies on stringent safety methodologies, designs, and analyses to prevent, mitigate, or contain hazards.
ISO 26262 and the in-development ISO/WD 21448 are the two main safety standards used to address the safety of electrical and electronic components, mandating methodologies for system, hardware, and software development. Specifically, the standardized process ensures traceability across system requirements, architectural and unit design, coding, verification, and validation. In cases of safety-critical systems with high complexity, Hazard Analysis and Risk Assessment (HARA) in iteration with system design is required to formally represent the design domain for operation.

The development of self-driving systems and safety-critical ADAS is boosted by recent advances in Machine Learning (ML) techniques as well as by rapid growth of computing power. ML enables these systems to perform intended driving tasks without intervention from system users and without being explicitly programmed by system designers. Unlike traditional software development, where a human programmer must specify input/output relationships, systems enabled by ML are designed to identify those relationships automatically from a training data set. Once the identification is completed, they can produce the intended output for data differing from those used in training. Further, Deep Learning (DL), a subfield of ML, intends to automate discovery of representations of useful information (i.e., features) in data and thus saves much of the effort of handcrafting features. DL makes it economically feasible to apply ML techniques in a wide range of applications [18]. However, it is challenging to demonstrate that ML-enabled systems can be used safely in safety-critical systems (e.g., an ADV) because it is difficult to reason about the correctness of the ML system behavior in the face of real-world data that always differs somehow from the data used in training and validation [18, 19].
On the other hand, the safe operation of ADVs addresses a challenge beyond the narrow technological aspect, as these vehicles will be integrated into the complex socio-technical system of modern human road traffic where accidents often result from interactions between a large number of elements (i.e., motor vehicles, other road users, road infrastructures) [20]. That is, simply removing the human driver from the driving task will change the mechanism of accident development and eliminate both the negative and positive effects that the human driver has on traffic as a participant in an accident as well as a potential accident avoidance and compensation element [21]. Further, as the ADS replaces the driver in the driving task, it may also introduce currently unknown effects into road traffic, where the technology may behave differently to the way designers think it will. For example, the most frequent type of crashes in a self-driving test in California were predominantly rear-ends where self-driving vehicles were struck by a human-driven vehicle. The causal factor emerged potentially from the differences between automated driving and human driving behavior [22, 23, 24]. Thus, it is likely that the interactions between driving automation and human users should be considered carefully in order to mitigate the safety risk of automated driving. Many relevant methodologies are available to advance ADS safety [25]. Here we will not review all available methodologies, but instead will review a list of selected methods in the light of human-automation interaction. The terms to be reviewed and their brief descriptions are listed in Table 1. In the following sections, we first addressed the emerging human-automation interaction issues when considering the effect of pervasive software content and the penetration of ML algorithms on ADV. 
Then, we evaluated ISO 26262, ISO/WD 21448 and RSS with respect to these issues, highlighting the blind spots in them that may impair the safety promise of automated driving where machine learning mechanisms are now prevalent. We argued that effective management of automated-driving safety risk needs consideration not only from a technological perspective but also from a human-factors perspective, both of which contribute to the development of a safe, reliable, and trustworthy ADS. We also briefly introduced two system-thinking tools from the human factors discipline which can be used to enhance automated driving technology by addressing human-automation interaction issues. Finally, we concluded this paper and provided future directions.

TABLE 1 Terms referring to methodologies contributing to automated driving safety

| No. | Term | Description |
|---|---|---|
| 1 | ISO 26262 | Addresses Functional Safety, the safety risk from systematic failures and random hardware failures in Electrical / Electronic (E/E) systems within road vehicles [26] |
| 2 | ISO/WD 21448 (Working Draft, WD) | Addresses Safety of the Intended Functionality (SOTIF), the safety risk from potential hazardous behaviors related to the intended functionality or performance limitation of a system [27] |
| 3 | Responsibility-Sensitive Safety (RSS) | Addresses a mathematical model formalizing an interpretation of the traffic law which is applicable to self-driving cars [28] |
| 4 | Systematic analysis method | Addresses a number of tools in the Human Factors / Ergonomics discipline for understanding complex socio-technical systems [29] |

© SAE International.
Emerging Human-Automation Interaction Issues in Automated Driving

From the perspective of human-automation interaction, automated driving involves the use of automation by a non-professional user (e.g., an average driver) in a time-sensitive (i.e., requiring a response within a finite, short time interval) and safety-critical context (i.e., where an incorrect action can have safety consequences) [30]. Further, non-professional drivers cannot rely on extensive training or experience with automated driving technology, and the technology might be used in a wider set of contexts than can be predicted by ADS designers. Bearing these points in mind, we review human-automation interaction issues relating to safety - automation brittleness, function allocation between driver and automation, and automation reasoning in the context of modern road traffic.

Automation Brittleness

If you have used a car that is equipped with some ADAS, such as a lane-keeping system, you have probably engaged with DL algorithms. In safety-critical settings like road traffic, computer vision is a common application of DL, where DL algorithms are often used to ‘perceive’ the surrounding environment for making decisions - e.g., identifying where lane markings are and thereby maintaining the appropriate lane of travel. While important advancements have been made in recent years in computer vision and in the DL algorithms that underpin these systems, such approaches to developing perceptual models of the real world suffer problems of brittleness. From a human-automation-interaction perspective, automation is defined as brittle when it operates well for situations it was designed to address but needs human intervention for situations its programming does not cover. This can challenge the human operator, who may not realize that the automation is acting incorrectly or may not understand the cause of incorrect automation behavior [31].
Further, DL-enabled systems have additional difficulties in that DL algorithms learn the target pattern through their training data and without a formal specification, making it difficult to define and pose specific safety constraints [32]. Brittleness occurs when DL algorithms cannot generalize or adapt to conditions outside the narrow set of assumptions implicitly expressed by their training data set. For example, a number of black and white stickers in precise positions on a standard octagonal red stop sign could trick a computer vision algorithm into seeing a 45-mph speed limit sign [33]. Notably, the modified stop sign is still discernible to humans [33]. Thus, humans may not be able to predict this edge case where the algorithm fails, with probable safety consequences.

The source of this perceptual brittleness is the fact that DL algorithms do not actually learn to perceive the world in a way that can generalize in the face of uncertainty [34]. Specifically, the term ‘deep’ in DL refers to the fact that DL is done across a chain of several layers stacked together, with higher-level feature representations (e.g., the combination of a horizontal and a vertical edge can form a corner or a cross) composed of simpler features (e.g., an edge at a particular orientation) detected earlier in the chain. A DL training algorithm does not need to specify what features are to be detected at each layer - i.e., the learning procedure adjusts the coefficients in all the layers so that the system automatically learns to detect the right features or combinations of features [18]. Thus, what the algorithm has actually “learned” from the training data set is that a particular set of mathematical relationships belong together as a label for a particular object; thereby, abnormal data which perturbs such a relationship can disrupt the algorithmic recognition.
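The point that a learned label is only a set of mathematical relationships, which a small perturbation can break, can be illustrated with a toy example. The sketch below is not the physical sticker attack of [33] and not a deep network; it assumes a hypothetical four-feature linear classifier with made-up weights, and shifts each input slightly against the sign of its weight to flip the predicted label.

```python
# Toy illustration only: a hypothetical linear "sign classifier" with
# made-up weights, not a real perception model.

def score(weights, features):
    """Weighted sum that the classifier thresholds at zero."""
    return sum(w * x for w, x in zip(weights, features))

def classify(weights, features):
    """Label is 'stop' when the score is positive, else 'speed_limit'."""
    return "stop" if score(weights, features) > 0 else "speed_limit"

def perturb(weights, features, eps):
    """Shift every feature by at most eps in the direction that lowers the score."""
    def sign(value):
        return 1 if value > 0 else -1 if value < 0 else 0
    return [x - eps * sign(w) for w, x in zip(weights, features)]

# Hypothetical learned weights and a clean input (assumed values).
weights = [0.5, -0.25, 0.75, -0.5]
clean = [0.6, 0.4, 0.5, 0.5]

# Each feature moves by no more than 0.2, yet the label changes.
adversarial = perturb(weights, clean, eps=0.2)

print(classify(weights, clean))
print(classify(weights, adversarial))
```

The perturbation is bounded per feature, which loosely mirrors the observation that the modified stop sign remains discernible to humans while the algorithmic recognition is disrupted.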
Function Allocation between Driver and Automation

Engineering system design usually relies on some form of functional or role assignment to bring together the functional requirements and human cognitive processes as a coordinated system [35]. For an ADV, SAE J3016 serves as a structure for classifying function allocation between driver and automation by a six-level taxonomy ranging from zero to full driving automation [10]. However, achieving such allocation in practice may result in problems, as designers of ADSs may differ in what they set as appropriate criteria for the function allocation. For example, the so-called ‘irony of automation’ states that the introduction of automation can radically change how people perceive or act in a specific context [36]. With automation, people do not merely reduce what they work on when (part of) a task is automated but use different strategies for working on that task [30]. For example, ADAS are designed to relieve the human driver from certain tasks (e.g., lane keep assist for steering, adaptive cruise control for pressing the gas), and can therefore comfort the driver, enabling him or her to switch attention to better monitoring of the traffic environment and the vehicle. However, extensive human factors research has indicated that these ADAS transform drivers from their traditional manual operation duty to the role of passive observers who need to monitor the automation, probably yielding new types of safety concerns - e.g., drivers may become more willing to divert their visual attention to off-road locations, compared to when they are in manual control of the vehicle [37], and their vigilance may progressively decrease as a drive extends [38].
Moreover, average drivers may underestimate risks or place too much trust in the system - e.g., in the deadly crash involving a Tesla Model X, the driver did not take any emergency action to prevent the crash. He was probably distracted by his cellphone, there being an active game application that had been the foremost open application on his cellphone just prior to the crash [13]. Thus, it is likely that the driver had misplaced his trust in the Tesla’s automation, diverting his attention to game playing during the trip. Also, average drivers may lack the training and experience to use an ADS. Until now, the need for drivers to know how cars work has been minimal. It is generally assumed that drivers can quickly master the basic control of the car, and there seems to be little need for them to understand the inner workings of any car component. However, the introduction of automated driving has changed that [39]. Advances in automated driving come rapidly and keeping up with them is becoming difficult for drivers.

Automation Reasoning in the Context of Current Road Traffic

Human cognition supports two basic reasoning approaches: bottom-up reasoning, which occurs when information is taken in at the sensor level (i.e., the eyes, the ears, the sense of touch) to build a model of the world we are in, and top-down reasoning, which occurs when perception is driven by cognitive expectations [34]. Usually, we mix these two for processing information - e.g., when bottom-up reasoning tells us that part of a lane line has disappeared, we can use top-down reasoning to infer where the line would be even if we cannot see all of it. Humans do not need perfect information because of our ability to fill in missing information from experience, which helps us to cope with the uncertainty in the real-world environment. However, this ability to anticipate is built over years of experience rooted in human society.
Specifically, the road traffic is a mutual social resource where the social harmony between all actors is preserved and mandated by the rules of the road (e.g., traffic-control devices, traffic laws and directions), and is augmented by common expectations about how other road users will behave in various driving contexts [9]. While the capability of self-driving cars will evolve during the coming years, the degree to which these cars will conform to intrinsic human reasoning concerning social behavior on the road is doubtful - e.g., driving automation was unable to properly respond to marginal corner cases such as a pedestrian, impaired by drugs, pushing a bicycle across a street where there was no crosswalk [40] and a truck driver who ignored a stationary autonomous vehicle and continued to reverse [41]. Notably, these accidents are unlikely to have occurred with human drivers who would normally anticipate the possibility of unknown objects ahead and would be able to avoid being hit by a slow-reversing truck. These scenarios seem simple for human drivers who intuit to use top-down reasoning to resolve uncertainty but such abstract reasoning and thereby the development of alternative action plans are beyond the current scope of ML enabled systems. Additionally, human drivers and ADSs have quite different sources for bottom-up reasoning - while human drivers (and pedestrians) rely overwhelmingly on vision, this is not necessarily true for ADSs, which are informed by light detection and ranging (e.g., Lidar), radio detection and ranging (e.g., Radar), and vision as well as other forms of sensors. These various sensors detect emissions in form and frequency different from that detected by human vision, and then the information from these sensors is fused and integrated to assemble the ‘perceived’ surrounding environment. 
Thus, humans and ADSs perceive a quite different world even though they are actually in the same traffic context, which may result in different behaviors - e.g., an automated car can ‘see’ far more things than a human driver can and thus becomes unnecessarily ‘timid’ on the road, often yielding to other objects. This eccentric behavior of an ADS may be unexpected by an average human driver, possibly leading to a safety consequence. The divergence of human and ADS perceptions, together with the absence of abstract reasoning in ML-enabled systems, may mean that human drivers and ADVs are far from achieving the full degree of integration in road traffic.

A Review of Safety Methodologies for Automated Driving

With respect to the above human-automation interaction issues, we review below methodologies contributing to automated driving safety:

- ISO 26262, the state-of-the-art safety standard in the road vehicle industry, which addresses random and systematic software and hardware failures relevant to any E/E component in ADVs, and
- The still in-development ISO/WD 21448, which addresses insufficiencies of the intended functionality and reasonably foreseeable misuse by human users, currently focusing on sensors and algorithms for the creation of situation awareness critical to safety, and
- RSS, which provides an approach for planning nominal ADV behaviors for ensuring safety

Notably, these methodologies can complement each other - they tackle different aspects of an ADV, which typically needs a perception-plan-control architecture to interact with other elements in road traffic [42].

ISO 26262

ISO 26262 is the state-of-the-art standard in the automobile industry. It is intended to achieve Functional Safety, the absence of unreasonable risk due to malfunctioning E/E components.
ISO 26262 seeks to ensure systems have the capability to mitigate failure risk sufficiently for hazards that are identified in the Hazard Analysis and Risk Assessment (HARA) process. Also, HARA determines the required amount of mitigation by evaluating the severity of a potential loss event, operational exposure to hazards, and human controllability of the loss event when failure occurs. These factors combine into an Automotive Safety Integrity Level (ASIL), which is assigned to safety goals and to the detailed requirements following from these goals. The safety goals and requirements guide the system development process, which is then decomposed into hardware and software development processes. Additionally, the assigned ASIL determines which technical and process mitigations should be applied, including specified design and analysis tasks that must be performed.

Indeed, ISO 26262 advocates a systematic approach whereby safety is achieved by one or more safety measures to be implemented throughout the system in question. These measures are together intended to prevent the occurrence of hazards that can cause harm to humans, whether by lowering the probability of an E/E function failure or by mitigating the hazards when the failure occurs. Therefore, it is likely that with appropriate safety measures, ISO 26262 can address the brittleness of an ML-enabled automation system - i.e., a mechanism can be designed based on an ISO 26262 process that is invoked whenever there is a failure of the primary ML module, permitting a less-than-perfect primary ML module so long as failures are detected quickly enough to invoke the safety mechanism [19]. The human driver can be relieved from the urgency to mitigate the risk from failed ML algorithms, as the safety mechanism will now be responsible for bringing the whole vehicle to a safe state, e.g., stopping within the lane.
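The way severity, exposure, and controllability combine into an ASIL can be sketched in a few lines. This is a minimal sketch under stated assumptions: the class numbering (S1-S3, E1-E4, C1-C3) and the sum-based shortcut are a commonly cited reading of the ASIL determination table in ISO 26262-3, not something given in this paper, and the function name `determine_asil` is hypothetical. The S0, E0, and C0 classes, which lead to no ASIL assignment, are deliberately left out.

```python
# Sketch of ASIL determination; assumes the widely used shortcut that the
# class indices of S, E and C sum to 7..10 for ASIL A..D, else QM.

ASIL_BY_SUM = {7: "A", 8: "B", 9: "C", 10: "D"}

def determine_asil(severity: int, exposure: int, controllability: int) -> str:
    """Return 'QM', 'A', 'B', 'C' or 'D' for classes S1-S3, E1-E4, C1-C3."""
    if severity not in (1, 2, 3):
        raise ValueError("severity class must be 1..3")
    if exposure not in (1, 2, 3, 4):
        raise ValueError("exposure class must be 1..4")
    if controllability not in (1, 2, 3):
        raise ValueError("controllability class must be 1..3")
    # Sums below 7 fall back to QM (quality management, no ASIL required).
    return ASIL_BY_SUM.get(severity + exposure + controllability, "QM")

# Hypothetical hazard: life-threatening (S3), high exposure (E4),
# difficult for the driver to control (C3).
print(determine_asil(3, 4, 3))
# Same hazard if the driver is assumed to control it more easily (C2).
print(determine_asil(3, 4, 2))
```

Because the controllability class directly shifts the result, optimistic assumptions about the human driver lower the assigned ASIL and hence the required mitigation effort.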
Also, relaxing safety requirements on a primary ML module while keeping the whole vehicle safe can save costs and reduce complexity for the overall system.

However, it is still difficult to realize these benefits within ISO 26262, since ISO 26262 traditionally assumes that the behavior of a system can be completely specified and verified prior to operation, which does not hold true for ML algorithms [43]. The difficulties in applying ISO 26262 to the mitigation of potential safety risks from ML modules concern interpretability, implementation transparency, stability, testability, etc. [43, 44] - e.g., due to the lack of logical structures and system specification, it is unclear how to extend ISO 26262 to test the correctness of software with DL components [45]. Moreover, novel approaches and techniques for improving ML algorithms do not always align with requirements from industrial standards like ISO 26262, and thus their applicability needs to be improved. One solution for this is to adapt them to the V-model development cycle of ISO 26262 [43].

Further, ISO 26262 itself does not include systematic techniques for evaluating human task performance during human use of driving automation, thereby possibly underestimating human-automation issues relevant to automated driving safety. For example, human Controllability assessed in HARA refers to the probability that a human in the traffic context (e.g., drivers, pedestrians, cyclists, or any humans who can influence the loss event) can sufficiently control the loss event when system failure happens, such that they can avoid the specific harm. During the evaluation of Controllability, ISO 26262 relies upon certain assumptions about humans - e.g., the driver is in an appropriate condition to drive (e.g., not tired), has the appropriate training, and is complying with applicable legal regulations - which may not hold true in real-world traffic.
Moreover, the decision on Controllability level usually depends upon input from subject matter experts, possibly lacking justification from appropriate theoretical or empirical evidence. Thus, safety measures vulnerable to deficient human performance in the real-world context are possibly developed within the ISO 26262 framework - e.g., an ADAS may well assume a human driver as the fallback role and thus, the system will alert the driver and then shut itself down whenever failure occurs. Evidently, this safety measure will not always work, since considerable research has demonstrated that a human driver may not fulfill his/her fallback role due to factors like distraction [37, 46], vigilance decrement [38] or mode confusion [46].

Another issue related to ISO 26262 HARA is that the process is normally built upon a completely specified system where the functional allocation between the human and the ADS is fully specified. However, this may not be possible, given ML-enabled automation and the fact that the HARA process starts in the early phase of ISO 26262 activities, where it is likely that the functional allocation remains immature and not yet assessed in any complex operational setting. On one hand, the automation behavior evolves as it is shaped by constraints implicitly established by the data fed into the automation. On the other hand, human behavioral adaptations to automation persist - e.g., a recent driving-automation study performed by the Insurance Institute for Highway Safety and the Massachusetts Institute of Technology on volunteers over a month found that after the period, drivers were substantially more likely to let their focus slip or take their hands off the wheel when using automation. The impact of an SAE Level 2 system was more dramatic than that of its Level 1 equivalent [47].
Techniques like FMEA (Failure Mode and Effects Analysis) and FTA (Fault Tree Analysis), both referred to by ISO 26262 in safety analysis, rely upon the chain model of failure development [48, 49]. They cannot adequately capture the interdependent and changeable safety-relevant constraints resulting from intensive ML content and human factors issues in the operation of an ADV.

SOTIF

Walter Huang, a 38-year-old Apple Inc. engineer, who died in the Tesla Model X crash [13], was probably unaware that his car would respond poorly to a fixed object, especially one revealed after the preceding car cut out. This sudden appearance of the object compressed to the limit the reaction time available for recognition by multiple sensors and algorithms. The driver was also probably unaware that diminished visibility of lane markings on one side significantly challenged the perception software in maintaining the appropriate lane of travel. Yet it seems unreasonable to require an ordinary driver (e.g., not a perception engineer) to maintain a robust mental model of the constraints that shape the safe domain for operating an ADV. Indeed, it is insufficient, inappropriate, and dangerous to depend on human supervision of automation in complex, safety-critical environments without making explicit the interdependent capabilities and needs of both the automation and the human [50].
However, designing a system that is good at knowing it has exited its safe operational domain can be a significant challenge whether that exit is initiated via human intervention or via automation - e.g., human-automation interface should be carefully designed to help the driver address unanticipated events; functional limitations of operating context should be thoroughly recognized, and thereby functional performance fully verified and validated. The ISO/WD 21448 or SOTIF standard describes an iterative development process for recognizing performance limitations of the intended function (including ML components) and reducing the risk due to scenarios/inputs that belong to unsafe-known (e.g., samples out of an operational design domain) and unsafe-unknown (e.g., samples out of a training distribution) situations to the acceptable extent [44]. The SOTIF process augments ISO 26262 with a SOTIF specific HARA and safety concept for hazards due to inadequate performance functionality, insufficient situational awareness, reasonably foreseeable misuse, or shortcomings of the human machine interface. In comparison, ISO 26262 only addresses hazards due to E/E failures. For reducing the SOTIF risk to the acceptable level, SOTIF measures are taken to improve the function or restrict the operational domain of the function. A verification and validation strategy is then developed to argue that the residual risk is within the acceptable level. However, the best practices for mitigation of SOTIF risk are not yet known and even the standard itself might change in the future. ISO/WD 21448 does not specify what technique is adequate - i.e., to increase ML performance and robustness in a safety-critical context can be particularly challenging, relying upon advances in scientific literature and engineering adaptations of these advances [44]. 
What is still intractable is the acceptability of the SOTIF risk, which is associated with the purpose, scope, and boundary of the ADS function and also with user acceptance of the function. ISO 26262 uses ASIL to guide the engineering effort needed to mitigate Functional Safety risk (e.g., an ASIL B safety goal dictates more mitigation work than an ASIL A goal), whereas the SOTIF standard requires that the target risk level of an accident associated with the ADS function be the same as or lower than the risk of the same type of accident already observed on public roads [27]. Thus, given that accidents result from the interactions between elements within road traffic, SOTIF practitioners can neither develop the ADS function with the appropriate risk level nor direct their effort toward achieving that level if they do not investigate human performance relating to interactions in the blend of traffic elements, e.g., automation, infrastructure, drivers, and other humans relevant to the driving context. Another issue troubling SOTIF practitioners is that an improved algorithm does not always lead to a safer human-automation system. On the contrary, perfect driving automation may impair drivers’ situation awareness and weaken their task performance in critical scenarios where human intervention is needed [31]. Careful consideration of function allocation between human and driving automation in the real-world setting is needed to ensure safety for the overall system. Also, the current draft version of the SOTIF standard is explicitly intended to cover only vehicles up to SAE Level 2. Additional measures are needed before the standard can be considered for higher levels of automation.

RSS

RSS is a rigorous mathematical model that formalizes an interpretation of the law in road traffic [28].
RSS is intended to provide a logic for a self-driving car to make safe decisions, given the assumption that the hardware and software of the ADS are operating error free (i.e., are functionally safe). Thus, by building and proving the safety logic inside the ADS, RSS contributes to the overall ADS safety where Functional Safety is a necessary but not sufficient measure - i.e., Functional Safety mitigates risks from failures, but a functionally safe self-driving car may still crash into an object on the road due to unsafe logic in the code. Indeed, RSS provides an approach to planning ADS behavior that circumvents the challenge of behavior prediction, where there is a vast multitude of unseen situations, by relying on the worst-case positions and actions of surrounding vehicles [51]. By and large, RSS is constructed by formalizing the following five “common sense” human rules in the context of road traffic [28]:

• Do not hit someone from behind.
• Do not cut in recklessly.
• Right-of-way is given, not taken.
• Be careful in areas with limited visibility.
• If you can avoid an accident without causing another one, you must do so.

To formalize the above rules, RSS uses Newtonian mechanics to specify behavioral constraints such as the safe following distance needed to avoid collisions even when other vehicles make extreme maneuvers such as hard braking. However, using RSS as the safety decision logic for self-driving cars requires not only knowledge of the physics of the situation, but also correct measurements to feed into the RSS equations [52]. The variables in the RSS equations are subject to complications that result from considering physical factors in real-world settings - e.g., vehicle status, road geometry and environmental parameters.
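To make the "safe following distance" constraint concrete, the following minimal sketch computes the minimum longitudinal gap in the form commonly given for the RSS model [28]: the rear car is assumed to accelerate at its maximum rate during its response time and then brake at its minimum guaranteed rate, while the front car brakes at its maximum rate. The function name, parameter names, and numeric values are illustrative assumptions, not part of the paper or of any RSS library.

```python
def rss_min_following_distance(v_rear, v_front, response_time,
                               a_max_accel, a_min_brake, a_max_brake):
    """Minimum safe longitudinal gap (m) between a rear and a front vehicle.

    Speeds are in m/s, accelerations in m/s^2 (all non-negative magnitudes),
    response_time in seconds. Names and units are assumptions of this sketch.
    """
    # Worst case: the rear car keeps accelerating during its response time.
    v_rear_after_response = v_rear + response_time * a_max_accel

    # Distance covered by the rear car while responding.
    d_response = v_rear * response_time + 0.5 * a_max_accel * response_time ** 2
    # Distance covered by the rear car while braking at its minimum rate.
    d_rear_brake = v_rear_after_response ** 2 / (2.0 * a_min_brake)
    # Distance covered by the front car while braking at its maximum rate.
    d_front_brake = v_front ** 2 / (2.0 * a_max_brake)

    # The required gap cannot be negative.
    return max(d_response + d_rear_brake - d_front_brake, 0.0)


if __name__ == "__main__":
    # Illustrative values only: both cars at 20 m/s, 1 s response time.
    nominal = rss_min_following_distance(20.0, 20.0, 1.0, 2.0, 4.0, 8.0)
    # Same scene, but the front car's speed is over-estimated by 2 m/s.
    mismeasured = rss_min_following_distance(20.0, 22.0, 1.0, 2.0, 4.0, 8.0)
    print(f"required gap with correct speeds: {nominal:.1f} m")
    print(f"required gap with over-estimated front speed: {mismeasured:.1f} m")
```

In this sketch, over-estimating the front vehicle's speed shrinks the computed gap, which illustrates why the correctness of the measurements fed into the equations matters as much as the physics [52].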
Notably, the five aforementioned human rules on traffic formalized by the RSS equations are still subject to different interpretations - e.g., what does ‘Do not cut in recklessly’ actually mean? Different societies and persons may interpret the term ‘recklessly’ differently. The first Google self-driving car crash offers an example of an arguably ‘reckless’ lane merge [53]:

The Google car prepared to make a right turn. It stopped when it detected the sandbags blocking its path. After a few cars had passed, the Google self-driving car began to proceed back into the center of the lane to pass the sandbags. A public transit bus was approaching from behind. The test driver in the Google self-driving car saw the bus approaching in the left side mirror but believed the bus would stop or slow to allow the Google car to continue. Approximately three seconds later, as the Google self-driving car was reentering the center of the lane, it contacted the side of the bus.

In fact, the Google self-driving car may not be regarded as ‘reckless’, since it had already waited for a few cars to pass and even the human test driver in the car expected the transit bus to give way. It is likely that another bus driver might have given right-of-way to the Google car, as it was ahead of the transit bus. Although Google acknowledged that the technology bore some responsibility in that ‘if our car hadn’t moved there wouldn’t have been a collision’ [53], it is evident that the human bus driver who kept the bus moving forward also contributed to the accident. Therefore, there are subtle judgements behind the term ‘reckless’ which may be hard to formalize precisely.
In contrast, a human driver in the same driving scenario (e.g., merging into a lane) will not only check that there is room for the maneuver, but also negotiate with other road users for that room, comprehend their spatiotemporal relationships to the ego car, project their future movements, and respond to the contingency if the negotiation fails. These activities describe how humans extract and process information during the driving task [54, 55], by which a human driver can take appropriate action to resolve potential conflicts on the road with respect to both safety (i.e., the trajectory of the ego car shall not conflict with that of another road user) and efficiency (i.e., not waiting too long for the merge). Thus, the abstract harmony between road users guides them to create the more concrete, crash-free physics. The worst-case planning approach promoted by RSS may not be feasible in some driving scenarios (e.g., lane merging) where there is a potential right-of-way conflict between road users. Combining theoretical models and empirical data, a recent study [51] showed that merging gaps observed in real-world traffic are considerably smaller than the corresponding safe merging gaps. Consequently, any attempt to guarantee safety by worst-case planning would prevent vehicles from performing basic maneuvers like merging in common traffic scenarios. The fundamental safety issue in the above merging example is that ensuring safety requires all the involved vehicles to agree. Therefore, successful interaction with surrounding traffic elements may be the only recourse for ADVs to operate safely and efficiently. This requires predicting human behavior and planning accordingly, possibly strengthened by connectivity between surrounding road users who compete for the same space on the road [51].
The Multi-Faceted Automated Driving Safety

Today, only the aspect of functional safety is well understood, supported by established methods, techniques and tools, and addressed by the existing ISO 26262. Only for this aspect, therefore, is it known what is considered necessary to claim sufficient coverage, i.e., to be able to argue a sufficient level of functional safety to release a (non-automated) car as a finished product. Besides the aforementioned ISO 26262 (i.e., Functional Safety), the in-development ISO/WD 21448 (i.e., SOTIF) and RSS (i.e., an approach for planning safe nominal behavior), there is UL 4600, a voluntary industry standard that aims to ensure ADS safety by taking a safety-case approach consisting of goal, argumentation, and evidence [56]. How these safety methodologies could be adopted into a framework for the minimum performance of an ADS, or for a minimum risk threshold an ADS must meet in road traffic, remains a challenge [57]. To enhance the safety of a finished ADV, this framework likely needs to address safety-related issues of designs (e.g., reliability and robustness) not only over the life of the vehicle, but also in “edge” cases, both of which are difficult or even impossible to verify through one-time testing on a finished vehicle.

System-Thinking Tools in Human Factors

Automated driving, as it is being integrated into current road traffic systems, is a complex socio-technical system. This integration will proceed with few adjustments to infrastructure, i.e., to change the world without changing the world [58]. Given the hazardous potential of automated driving, and the cost, the suffering, and the professional jeopardy associated with adverse events (e.g., the crashes associated with Tesla [13] and Uber [40]), the social aspect of automated driving technology should have a high profile.
With the technology still evolving fast, it is likely that uncertainties injected by trends in deregulation, competition, budgetary constraints, user adaptations and public awareness will continuously challenge the management of automated driving safety. Therefore, we argue that the safety framework of automated driving should handle these challenges proactively by treating the traffic environment as a complex socio-technical system whose evolving constraints shape the behavior of drivers and other humans [59]. Notably, many of the human factors tools already available to address complex systems can contribute to automated driving safety. These tools have been used to improve risk management by identifying the mechanisms underlying major industrial accidents and by building a comprehensive system design in support of risk management. In this section, we do not address all these tools but focus on two methods - STPA (System-Theoretic Process Analysis) and CWA (Cognitive Work Analysis). They have been widely used to address complex socio-technical systems (e.g., military operations, nuclear plants, and air traffic) and are already available in the automotive literature. We briefly review these tools and then discuss their potential contribution to automated driving safety.

STPA

Systems-Theoretic Accident Model and Processes (STAMP) is an accident causality model, based upon system theory, in which safety is viewed as a system property that arises when system components interact with each other within a specific environment. In STAMP, a set of safety constraints on the system components enforces safety. Accidents occur when interactions of system components violate the safety constraints.
Based upon STAMP, System-Theoretic Process Analysis (STPA) is a tool intended for proactive hazard analysis, a task that has become increasingly complicated in today’s complex systems because of software, component interactions and human-machine interactions. In the SOTIF standard, STPA is regarded as a suitable technique for systematic identification of triggering conditions that initiate a subsequent system reaction potentially leading to hazards [27]. Triggering conditions are specific conditions of a driving scenario, such as driver misuse of automation, sun glare on the camera, or the aforementioned graffiti on the stop sign which tricked the computer into seeing a 45-mph speed sign. STPA has already been applied to the design of ADSs - e.g., Abdulkhaleq, Lammering, Wagner et al. developed a dependable architecture with STPA for fully automated driving vehicles [60]; France applied STPA to an Automated Park Assist system [61]. Further, the graphical output of STPA is based upon a hierarchical control structure, which is particularly useful for specifying the roles of agents (e.g., hardware/software elements, human operators) in a safety incident [29].

CWA

Cognitive Work Analysis (CWA) is a multi-stage analytic framework for identifying the human-relevant work constraints in a socio-technical system and is thereby an effective tool for supporting the design of complex socio-technical systems [62, 63]. The underlying assumption of this framework is that the potential for action by workers in a complex system can be specified by the behavior-shaping constraints that define a field within which action can take place [64]. The analysis identifies and represents different types of constraints that are imposed on workers by a complex socio-technical system. CWA comprises several stages of analysis, of which Work Domain Analysis (WDA) is the most frequently used.
Within those stages, Cognitive Work Analysis promises to reveal how work can be done in a system, thereby promoting designs that help operators improvise creative and spontaneous solutions as they seek to adapt to unanticipated events and, subsequently, enhance safety, productivity, and operator health. CWA stages can be used as prospective tools to identify possible future designs [29, 64]. In particular, a context-independent WDA can facilitate the development of graphical representations that help the designer understand the design implications of future system functions and interactions. For example, Allison and Stanton have used CWA to identify a range of potential solutions and generate design recommendations for supporting fuel-efficient driving [65]; and Zhang, Lintern, Gao et al. have used WDA to analyze test reports and field data for supporting the design of ADSs [66].

Final Thoughts on System-Thinking Tools

Notably, there are considerable differences between STPA and CWA. CWA is a clean-slate design method, whereas STPA is a method of hazard analysis for a system concept (i.e., one already designed). The result of CWA is a robust system that takes account of both human and technological capabilities. CWA may guide system design toward innovative strategies for interactions between vehicles with different levels of automation, for example the problem the Google car had with the bus. By contrast, STPA assesses hazards due to interaction between subsystems and humans based upon a designed system [60, 61]. STPA and CWA both have limitations: their graphic outputs need considerable expertise to interpret, and significant amounts of detailed data are required to produce a meaningful understanding of the constraints derived from these tools. However, these limitations may not compromise the benefits STPA and CWA can provide.
There are still other tools, e.g., FRAM (Functional Resonance Analysis Method) [21], available for ensuring automated driving safety, and this paper is not intended to serve as a comprehensive review (one option for such a review can be found in [29]). Indeed, all these tools can be complementary, each contributing something important to the development of automated cars. The future direction can be an integrated approach which will result in a safer system that more effectively satisfies the needs of the driving public.

Conclusion

This contribution first addressed the emerging human-automation interaction issues that arise from the intensive software content and the penetration of ML algorithms in ADV development. Then, with respect to these issues, we evaluated ISO 26262, ISO/WD 21448 and RSS. It is likely that these methodologies lack support for understanding human-automation interactions relevant to automated driving safety. We finally promoted system-thinking tools from the human factors discipline and argued that these tools can enhance the existing automotive methodologies, thereby benefiting the overall safety framework of automated driving.

References

1. Global Status Report on Road Safety 2018, Geneva: World Health Organization, License: CC BY-NC-SA 3.0 IGO, https://www.who.int/violence_injury_prevention/road_safety_status/2018/en, accessed Oct. 2020.
2. National Highway Traffic Safety Administration (NHTSA)’s National Center for Statistics and Analysis, “2016 Fatal Motor Vehicle Crashes: Overview,” NHTSA Press Release Oct. 2017, http://www.nhtsa.gov/press-release/usdot-release2016-fatal-traffic-crash-data.
3. European Commission, “Roadmap on Highly Automated Vehicles,” rev1.1-11-06-2016, https://circabc.europa.eu/sd/a/3e06f7bf-2719-4be1-9f24-ba6b2975d7eb/Discussion%20Paper%20-%20rev.1%2004-05-2015.pdf, accessed Oct. 2020.
4. Gkartzonikas, C. and Gkritza, K., “What Have We Learned? A Review of Stated Preference and Choice Studies on Autonomous Vehicles,” Transportation Research Part C, 2019. https://doi.org/10.1016/j.trc.2018.12.003.
5. Zoellick, J., Kuhlmey, A., Schenk, L., Schindel, D., and Blüher, S., “Amused, Accepted, and Used? Attitudes and Emotions towards Automated Vehicles, Their Relationships, and Predictive Value for Usage Intention,” Transportation Research Part F, 2019. https://doi.org/10.1016/j.trf.2019.07.009.
6. Lee, J. and Kolodge, K., “Exploring Trust in Self-Driving Vehicles through Text Analysis,” Human Factors, 2019, doi:10.1177/0018720819872672.
7. Euro NCAP, “2020 Assisted Driving Tests,” https://www.euroncap.com/en/vehicle-safety/safety-campaigns/2020-assisted-driving-tests/, accessed Nov. 2020.
8. Hawkins, A., “Everyone Hates California’s Self-Driving Car Reports,” https://www.theverge.com/2020/2/26/21142685/california-dmv-self-driving-car-disengagement-report-data, The Verge, 2020, accessed Nov. 2020.
9. Hancock, P., Nourbakhsh, I., and Stewart, J., “On the Future of Transportation in an Era of Automated and Autonomous Vehicles,” Proceedings of the National Academy of Sciences of the United States of America (PNAS) 116(16), 2019. www.pnas.org/cgi/doi/10.1073/pnas.1805770115.
10. SAE International Surface Vehicle Recommended Practice, “Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles,” SAE Standard J3016, Rev. Sep. 2016.
11. Stayton, E. and Stilgoe, J., “It’s Time to Rethink Levels of Automation for Self-Driving Vehicles,” IEEE Technology and Society Magazine, Sep. 2020, doi:10.1109/MTS.2020.3012315.
12. Hancock, P., “Some Pitfalls in the Promises of Automated and Autonomous Vehicles,” Ergonomics 62(4):479-495, 2019, doi:10.1080/00140139.2018.1498136.
13. National Transportation Safety Board (NTSB), “Collision between a Sport Utility Vehicle Operating with Partial Driving Automation and a Crash Attenuator, Mountain View, California, March 23, 2018,” NTSB Accident Report, 2020, https://www.ntsb.gov/investigations/AccidentReports/Reports/HAR2001.pdf, accessed March 20, 2020.
14. Stilgoe, J., “Machine Learning, Social Learning and the Governance of Self-Driving Cars,” Social Studies of Science 48(1):25-26, 2018, doi:10.1177/0306312717741687.
15. Watzenig, D. and Horn, M., Automated Driving - Safer and More Efficient Future Driving (Switzerland: Springer International Publishing, 2017), doi:10.1007/978-3-319-31895-0.
16. Maurer, M., Christian Gerdes, J., Lenz, B., and Winner, H., “Autonomous Driving - Technical, Legal and Social Aspects,” Springer Open, doi:10.1007/978-3-662-48847-8.
17. Koopman, P., “Practical Experience Report: Automotive Safety Practices vs. Accepted Principles,” SAFECOMP preprint, 2018, available at https://users.ece.cmu.edu/~koopman/pubs/koopman18_safecomp.pdf, accessed Nov. 2020.
18. LeCun, Y., “The Power and Limits of Deep Learning,” Research-Technology Management 61(6):22-27, 2018, doi:10.1080/08956308.2018.1516928.
19. Koopman, P. and Wagner, M., “Autonomous Vehicle Safety: An Interdisciplinary Challenge,” IEEE Intelligent Transportation Systems Magazine, 2017, doi:10.1109/MITS.2016.2583491.
20. Salmon, P., McClure, R., and Stanton, N., “Road Transport in Drift? Applying Contemporary Systems Thinking to Road Safety,” Safety Science 50:1829-1838, 2012. http://dx.doi.org/10.1016/j.ssci.2012.04.011.
21. Grabbe, N., Kellnberger, A., Aydin, B., and Bengler, K., “Safety of Automated Driving: The Need for a Systems Approach and Application of the Functional Resonance Analysis Method,” Safety Science 126, 2020, doi:https://doi.org/10.1016/j.ssci.2020.104665.
22. Favarò, F., Nader, N., Eurich, S., Tripp, M., and Varadaraju, N., “Examining Accident Reports Involving Autonomous Vehicles in California,” PLOS One 12(9), 2017, doi:https://doi.org/10.1371/.
23. Biever, W., Angell, L., and Seaman, S., “Automated Driving System Collisions: Early Lessons,” Human Factors, 2019, doi:10.1177/0018720819872034.
24. Boggs, A., Wali, B., and Khattak, A., “Exploratory Analysis of Automated Vehicle Crashes in California: A Text Analytics & Hierarchical Bayesian Heterogeneity-Based Approach,” Accident Analysis & Prevention, 2020, doi:https://doi.org/10.1016/j.aap.2019.105354.
25. Ballingall, S., Sarvi, M., and Sweatman, P., “Safety Assurance Concepts for Automated Driving Systems,” SAE Technical Paper 2020-01-0727. https://doi.org/10.4271/2020-01-0727.
26. International Organization for Standardization (ISO), “Road Vehicles - Functional Safety,” ISO 26262, Second Edition, 2018, International Standard.
27. ISO, “Road Vehicles - Safety of the Intended Functionality,” ISO/WD 21448, Working Draft (WD), 2019.
28. Shalev-Shwartz, S., Shammah, S., and Shashua, A., “On a Formal Model of Safe and Scalable Self-Driving Cars,” 2018, available at https://arxiv.org/pdf/1708.06374.pdf, accessed Nov. 2020.
29. Thatcher, A., Nayak, R., and Waterson, P., “Human Factors and Ergonomics Systems-Based Tools for Understanding and Addressing Global Problems of the Twenty-First Century,” Ergonomics, 2019, doi:https://doi.org/10.1080/00140139.2019.1646925.
30. Janssen, C., Donker, S., Brumby, D., and Kun, A., “History and Future of Human-Automation Interaction,” International Journal of Human-Computer Studies 131:99-107, 2019. https://doi.org/10.1016/j.ijhcs.2019.05.006.
31. Endsley, M., “From Here to Autonomy: Lessons Learned from Human-Automation Research,” Human Factors 59(1), 2017, doi:10.1177/0018720816681350.
32. Mohseni, S., Pitale, M., Singh, V., and Wang, Z., “Practical Solutions for Machine Learning Safety in Autonomous Vehicles,” 2019, available at https://arxiv.org/pdf/1912.09630.pdf.
33. Evtimov, I., Eykholt, K., Fernandes, E., Kohno, T., Li, B., Prakash, A., Rahmati, A., and Song, D., “Robust Physical-World Attacks on Deep Learning,” 2017, available at https://arxiv.org/pdf/1707.08945.pdf, accessed Nov. 2020.
34. Cummings, M., “Rethinking the Maturity of Artificial Intelligence in Safety-Critical Settings,” 2020, http://hal.pratt.duke.edu/sites/hal.pratt.duke.edu/files/u39/2020-min.pdf, accessed Nov. 2020.
35. Lintern, G., “Work-Focused Analysis and Design,” Cognition, Technology & Work 14(1):71-81, 2017, doi:10.1007/s10111-010-0167-y.
36. Bainbridge, L., “Ironies of Automation,” Automatica 19(6):775-779, 1983.
37. Gaspar, J. and Carney, C., “The Effect of Partial Automation on Driver Attention: A Naturalistic Driving Study,” Human Factors, 2019, doi:10.1177/0018720819836310.
38. Greenlee, E., DeLucia, P., and Newton, D., “Driver Vigilance in Automated Vehicles: Effects of Demands on Hazard Detection Performance,” Human Factors, 2018, doi:10.1177/0018720818761711.
39. Casner, S. and Hutchins, E., “What Do We Tell the Drivers? Toward Minimum Driver Training Standards for Partially Automated Cars,” Journal of Cognitive Engineering and Decision Making, 2019, doi:10.1177/1555343419830901.
40. NTSB, “Collision between Vehicle Controlled by Developmental Automated Driving System and Pedestrian, Tempe, Arizona, March 18, 2018,” NTSB Accident Report, 2019.
41. NTSB, “Low-Speed Collision between Truck-Tractor and Autonomous Shuttle, Las Vegas, Nevada, November 8, 2017,” NTSB Report, 2019.
42. Feth, P., Adler, R., Fukuda, T., Ishigooka, T., Otsuka, S. et al., “Multi-Aspect Safety Engineering for Highly Automated Driving,” 2018, in: Gallina, B., Skavhaug, A., Bitsch, F. (eds), Computer Safety, Reliability, and Security. SAFECOMP 2018. Lecture Notes in Computer Science, Vol. 11093. Springer, Cham. https://doi.org/10.1007/978-3-319-99130-6_5.
43. Serna, J., Diemert, S., Millet, L., Debouk, R. et al., “Bridging the Gap between ISO 26262 and Machine Learning: A Survey of Techniques for Developing Confidence in Machine Learning Systems,” SAE Technical Paper 2020-01-0738, 2020. https://doi.org/10.4271/2020-01-0738.
44. Mohseni, S., Pitale, M., Singh, V., and Wang, Z., “Practical Solutions for Machine Learning Safety in Autonomous Vehicles,” 2019, https://arxiv.org/pdf/1912.09630.pdf, accessed Nov. 2020.
45. Huang, X., Kroening, D., Ruan, W., Sharp, J. et al., “A Survey of Safety and Trustworthiness of Deep Neural Networks: Verification, Testing, Adversarial Attack and Defence, and Interpretability,” Computer Science Review, 2020, doi:https://doi.org/10.1016/j.cosrev.2020.100270.
46. Endsley, M., “Autonomous Driving Systems: A Preliminary Naturalistic Study of the Tesla Model S,” Journal of Cognitive Engineering and Decision Making, 2017, doi:10.1177/1555343417695197.
47. Insurance Institute for Highway Safety (IIHS) News, “Drivers Let their Focus Slip as they Get Used to Partial Automation,” https://www.iihs.org/news/detail/drivers-let-their-focus-slip-as-they-get-used-to-partial-automation, accessed Nov. 2020.
48. Thomas, J., Sguelia, J., Suo, D., Leveson, N. et al., “An Integrated Approach to Requirements Development and Hazard Analysis,” SAE Technical Paper 2015-01-0274, 2015. https://doi.org/10.4271/2015-01-0274.
49. Leveson, N., Engineering a Safer World: Systems Thinking Applied to Safety (Cambridge, MA: MIT Press, 2011).
50. Canellas, M. and Haga, R., “Unsafe at Any Level: NHTSA’s Levels of Automation Are a Liability for Autonomous Vehicle Design,” 2020, https://arxiv.org/pdf/2003.00326.pdf, accessed Dec. 2020.
51. Shetty, A., Yu, M., Kurzhanskiy, A., Grembek, O., and Varaiya, P., “Safety Challenges for Autonomous Vehicles in the Absence of Connectivity,” 2020, https://arxiv.org/pdf/2006.03987.pdf.
52. Koopman, P., Osyk, B., and Weast, J., “Autonomous Vehicles Meet the Physical World: RSS, Variability, Uncertainty, and Proving Safety,” Preprint SafeComp 2019, http://users.ece.cmu.edu/~koopman/pubs/Koopman19_Safecomp_RSS.pdf, accessed Nov. 2020.
53. Davies, A., “Google’s Self-Driving Car Caused Its First Crash,” Wired, 2016, https://www.wired.com/2016/02/googles-self-driving-car-may-caused-first-crash/, accessed Nov. 2020.
54. Banks, V. and Stanton, N., Automobile Automation: Distributed Cognition on the Road (Taylor & Francis Group: CRC Press, 2017).
55. Shinar, D., Traffic Safety and Human Behavior, Second Edition (UK: Emerald Publishing Limited, 2017).
56. Edge Case Research, “UL 4600: Standard for Safety for the Evaluation of Autonomous Products,” https://edge-case-research.com/ul4600/, accessed Nov. 2020.
57. NHTSA, “Framework for Automated Driving System Safety,” NHTSA 49 CFR Part 571, Docket No. NHTSA-2020-0106, 2020, https://public-inspection.federalregister.gov/202025930.pdf, accessed Dec. 2020.
58. Stilgoe, J., “Self-Driving Cars Will Take a While to Get Right,” Nature Machine Intelligence, 2019, doi:10.1038/s42256-019-0046-z.
59. Lintern, G., “Jens Rasmussen's Risk Management Framework,” Theoretical Issues in Ergonomics Science, 2019, doi:10.1080/1463922X.2019.1630495.
60. Abdulkhaleq, A., Lammering, D., Wagner, S., Rober, J. et al., “A Systematic Approach Based on STPA for Developing a Dependable Architecture for Fully Automated Driving Vehicles,” 4th European STAMP Workshop 2016, Procedia Engineering 179:41-51, 2017, doi:10.1016/j.proeng.2017.03.094.
61. France, M., “Engineering for Humans: A New Extension to STPA,” Master Thesis, Massachusetts Institute of Technology, 2015, http://sunnyday.mit.edu/megan-thesis.pdf, accessed Oct. 2020.
62. Rasmussen, J., Pejtersen, A., and Goodstein, L., Cognitive Systems Engineering (New York: Wiley, 1994).
63. Vicente, K., Cognitive Work Analysis: Toward Safe, Productive, and Healthy Computer-Based Work (New Jersey: Lawrence Erlbaum Associates, Inc., 1999).
64. Lintern, G., “Joker One: A Tutorial in Cognitive Work Analysis,” 2013, retrieved from www.cognitivesystemsdesign.net.
65. Allison, C.K. and Stanton, N.A., “Using Cognitive Work Analysis to Inform Policy Recommendations to Support Fuel-Efficient Driving,” in: Stanton, N.A. (ed), Advances in Human Aspects of Transportation (Cham: Springer Nature, 2018), 376-385.
66. Zhang, Y., Lintern, G., Gao, L., and Zhang, Z., “Conceptualization of Human Factors in Automated Driving by Work Domain Analysis,” SAE Technical Paper 2020-01-1202, 2020. https://doi.org/10.4271/2020-01-1202.

Contact Information

You Zhang
Company: SAIC Motor Corporation Ltd.
Address: No.201, Anyan Rd., Jiading District, Shanghai, China
Postal Code: 201804
zhangyou@saicmotor.com; starz@yeah.net

Definitions/Abbreviations

ADS - Automated Driving System
ADV - Automated Driving Vehicle
ADAS - Advanced Driver Assistance Systems
E/E - Electrical / Electronic
NCAP - New Car Assessment Program
ASIL - Automotive Safety Integrity Level
HARA - Hazard Analysis and Risk Assessment
ML - Machine Learning
DL - Deep Learning
SOTIF - Safety of the Intended Functionality
RSS - Responsibility-Sensitive Safety
STAMP - Systems-Theoretic Accident Model and Processes
CWA - Cognitive Work Analysis
WDA - Work Domain Analysis
FRAM - Functional Resonance Analysis Method
NHTSA - National Highway Traffic Safety Administration
FMEA - Failure Mode and Effects Analysis
FTA - Fault Tree Analysis

© 2021 SAE International. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written permission of SAE International. Positions and opinions advanced in this work are those of the author(s) and not necessarily those of SAE International. Responsibility for the content of the work lies solely with the author(s). ISSN 0148-7191