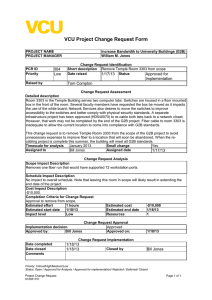

Evo Controller 8200/RNC IP Configuration
Ivan Guo

Agenda
• 1. Overview
• 2. IP transmission boards
• 3. IP Configuration
• 4. Learn by instance
• References

Overview

HW architecture
[Figure: one Main Subrack (1..1) and up to two Extension Subracks (0..2), each with CMXB, SCXB and EPB boards, powered from -48 V DC. External connections: Iu to the CN, Iur to other RNCs, Iub to RBSs, Mur to OSS-RC / external management system and thin client/console, IP/Ethernet traffic (e.g. Mub/Mut) through the TN infrastructure, and a synchronization reference.]

Evo Processor Board
• Evo Processor Board (EPB1), front-panel connector positions: 25 (GE), 22 (RS232)
– General processing
– 2 × 10 Gbps Ethernet ports in the backplane
– 2 × RS232 ports in one connector on the front panel (RS232)
– 2 × 1 Gbps Ethernet ports in one connector on the front panel (DBG)
• The EPB implements IP and Ethernet layer functions such as 10 Gigabit Ethernet termination, VLAN termination, IP layer termination, Performance Management (PM) and Fault Management (FM).
• The two 10 Gigabit Ethernet links (interfaces) at the back are accessed from the Ethernet switch board through the backplane.
• Local access to the node is supported only through the Gigabit Ethernet interface on the front.
• Ethernet interface redundancy (1+1) is handled by the embedded Ethernet forwarder, which requires no configuration.
• Each GigabitEthernet MO is configured to use 10 Gigabit Ethernet interface 1 or 10 Gigabit Ethernet interface 2 through the primaryLink attribute.

IP termination on EPB
[Figure: IP termination in 3810/3820 compared with EVO. In 3810/3820, Iub (IP) and Iu-PS (IP) terminate on external hosts on ET-IPG gateway boards and are carried over AAL2/AAL5 to the DC and PDR devices on the SPB. In EVO, external and internal IP hosts on the EPB carry UDP directly to the DC and PDR devices.]
• Advantages and disadvantages
– Increased call setup capacity and throughput
– Large increase in the number of external IP addresses
• There are three types of Software Allocation (SWA) for the EPB:
– EPB_C1: RNC Central Processor 1, placed only in the Main Subrack, in slots 3 and 25.
– EPB_C2: RNC Central Processor 2, placed only in the Main Subrack, in slots 4 and 26.
– EPB_BLADE_A: Module Controller (MC) functionality resides on the primary processor, and Device functionality on both the primary and the secondary processor.

RNC software deployment
[Figure:
C1 (1+1): 2 × SCTP Front End, O&M, RFN server (moved from TUB)
C2 (1+1): 2 × SCTP Front End, central device handling, UE register
"Blade" (2–68): primary processor with CPP + IP (bare metal), CC device, RNC Module + RNSAP, RNC Module + RANAP, RNC Module + PCAP and DC devices; secondary processor with CPP + IP (bare metal), PDR device and DC devices]

IP transmission boards

Cabinet
[Figure: EvoC 8200/RNC cabinet with the Main Subrack (MS), Extension Subracks ES-1 and ES-2, Power & Fan Modules, APP1 and APP2, and a cable shelf; Iu/Iur and Iub are cabled through the APPs.]

System Control Switch Board
• System Control Switch Board (SCXB3), front-panel connector positions: 46 (SYNC2), 42 (E-DBG), 40 (RS232), 37 (GPS), 35 (SYNC1), 32 (SC), 27 (RP-B), 25 (GE 3), 22 (GE 2), 20 (GE 1), 17 (E 4), 15 (E 3), 12 (E 2), 10 (E 1)
– Ethernet switch
– Timing unit
– 28 × 1 Gbps Ethernet ports in the backplane (one is looped back to the same board)
– 3 × clock synchronization reference input connectors (SYNC1, SYNC2, GPS)
– 10/100/1000BASE-T debug connector (E-DBG)
– RS232 debug connector (RS232)
– Serial Control connector (SC)
– Connector to the regional processor in AXE, not used in the RNC (RP-B)
– 3 × 1 Gbps Ethernet ports on the front panel (GE1, GE2, GE3)
– 4 × 10 Gbps Ethernet ports on the front panel (E1, E2, E3, E4)

Common Main Switch Board
• Common Main Switch Board (CMXB3), front-panel connector positions: 42 (DBG), 40 (GE 4), 37 (GE 3), 35 (GE 2), 32 (GE 1), 27 (E 8), 25 (E 7), 22 (E 6), 20 (E 5), 17 (E 4), 15 (E 3), 12 (E 2), 10 (E 1)
– Ethernet switch
– 24 × 10 Gbps Ethernet ports in the backplane
– 10/100/1000BASE-T debug connector on the front panel (DBG)
– 4 × 1 Gbps Ethernet ports on the front panel (GE1–GE4)
– 4 × 10 Gbps Ethernet ports on the front panel (E5–E8)
– 4 × 40 Gbps or 10 Gbps Ethernet ports on the front panel (E1–E4)

Level 2 Evo Controller
[Figure: SCXB and CMXB3 front panels, with white fronts and new LEDs; the boards provide 10G and 1G Ethernet to all slot positions.]
• SCXB provides 28 × 1G backplane ports and 4 × 10G + 3 × 1G front ports.
• CMXB3 allows 24 × 10GE backplane ports and 3 × 10GE + 5 × 40GE + 4 × 1GE front ports.

Active Patch Panel
• Active Patch Panel (APP)
– Each APP contains a row of connectors and slots for optoelectrical converters (SFPs). Two APPs are placed below the lowest subrack in the Evo RNC. Each row contains:
  – 4 SFP slots (pos. 18, 28, 32 and 42) for up to 10 Gbps Ethernet
  – 4 DensiShield connectors (pos. 21, 24, 35 and 38) connected to the SFP slots
  – 4 RJ-45 connectors (pos. 47, 51, 61 and 65) for Ethernet
  – 2 DensiShield connectors (pos. 55 and 68) connected to the RJ-45 connectors (two RJ-45 connectors per DensiShield connector)
  – 2 DensiShield connectors for supervision and control (pos. 74 and 77)
  – 1 connector for power supply (pos. 82)
• Note that the lower APP has position designators starting with "02-", while the higher APP has position designators starting with "03-".

Connections APP to CMXB3
Cables between the APPs and the Main Subrack:

| Type | Connector | Pos | APP pos |
|---|---|---|---|
| CMXB3 | E5 | 5-20 | 2-21 |
| CMXB3 | E6 | 5-22 | 2-24 |
| CMXB3 | E7 | 5-25 | 2-35 |
| CMXB3 | E8 | 5-27 | 2-38 |
| CMXB3 | E5 | 83-20 | 3-21 |
| CMXB3 | E6 | 83-22 | 3-24 |
| CMXB3 | E7 | 83-25 | 3-35 |
| CMXB3 | E8 | 83-27 | 3-38 |

Non-CMXB connections between MS and APPs
Cables for management and control:

| Unit | Connector | Pos | APP pos |
|---|---|---|---|
| SCXB3 | SC | 02-32 | 02-74 |
| SCXB3 | SC | 80-32 | 03-74 |
| EPB1 | GE | 08-25 | 02-68 |
| EPB1 | GE | 74-25 | 03-68 |

ESL & CISL
[Figure: ESL and CISL cabling between the SCXB3 and CMXB3 boards.]

IP Configuration

Ethernet Infrastructure
[Figure: each subrack contains SCXB, CMXB and EPB boards; the subracks are interconnected with 40G links, and 4 × 10G links connect the CMXBs to the APPs.]

IP Transport in Evo
IP Host User Plane Termination
• The EPB1 board is equipped with two processors with 8 cores each.
• Each processor that uses external or internal communication needs an IP host in order to communicate. Each processor supports up to 16 external and 1 internal IP host terminations.
• RNC user plane software (DC, CC and PDR) is located on both processors, so IP interfaces are needed on both processors.
• The external IP hosts are configured through the MOM in a similar way to IpAccessHostEt. However, the internal IP host is not shown in the MOM and cannot be configured.

IP Host Control Plane Termination
• The control plane (SCTP) can be terminated in two ways: via IpAccessSctp, or directly on the IpAccessHostEt.
• The main benefit of using the IpAccessSctp MO is that the CP and UP can share the same IpAccessHostEt (IP address).
• The SCTP functionality supports multihoming; see the MO structure for Iub CP below.
• The proposed default configuration is to configure the Iub CP without multihoming and to share the IP address with the Iub UP.

IP Addressing
• External and internal IP hosts
• The IP termination on the EPB consists of a number of external and internal IP hosts.
– Up to 16 external hosts and up to 64 internal hosts are supported for each processor.
– Each external host is connected to a separate IpInterface MO, and all the internal hosts are connected to the same IpInterface.
– The internal IP hosts require no configuration and are not visible in the MO structure.
– It is important to make sure that the internal VLAN ID does not collide with any planned external VLAN.
• The PIU processor contains the MCs, where the Iub CP is terminated. It also contains a CC device (terminating the Iub common channels) and a DC device (terminating the Iub and Iur UP for dedicated channels).
• The PiuDevice processor contains a PDR device (terminating the Iu-PS UP) and a DC device (terminating the Iub and Iur UP for dedicated channels).
• A typical configuration uses different VLANs/subnets for all the different interfaces:
– 3 IP hosts per blade on the PIU, for Iub UP/CP, Iu-CS UP and Iur UP
– 4 IP hosts per blade on the PiuDevice, for Iub UP, Iu-CS UP and Iur UP, plus Iu-PS UP
– For the central boards (C1, C2), one IP host is needed per board for termination of SCTP for the Iu-CS, Iu-PS and Iur CP, and for Iu-PC.
– In addition, IP hosts are needed on C1 for Mur and Iu-BC.
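The host counts in the typical configuration follow from which traffic functions each processor terminates and how those functions are grouped into VLANs. The sketch below reproduces that count. It assumes one IpAccessHostEt per processor for each distinct VLAN/subnet its functions use, and it applies the 16-external-host limit stated above; the function names and VLAN labels are illustrative strings, not MOM identifiers.

```python
# Limit stated for EPB processors: up to 16 external IP hosts each.
MAX_EXTERNAL_HOSTS = 16

# Traffic functions terminated on each processor of an EPB blade.
PROCESSOR_FUNCTIONS = {
    "PIU": ["Iub CP", "Iub UP", "IuCS UP", "Iur UP"],
    "PiuDevice": ["Iub UP", "IuCS UP", "Iur UP", "IuPS UP"],
}


def hosts_per_processor(vlan_of: dict[str, str]) -> dict[str, int]:
    """Count external IP hosts per processor.

    vlan_of maps each traffic function to its VLAN/subnet. Functions that
    share a VLAN on the same processor share one IpAccessHostEt (assumption).
    """
    counts = {}
    for processor, functions in PROCESSOR_FUNCTIONS.items():
        vlans = {vlan_of[function] for function in functions}
        if len(vlans) > MAX_EXTERNAL_HOSTS:
            raise ValueError(f"{processor}: {len(vlans)} hosts exceeds {MAX_EXTERNAL_HOSTS}")
        counts[processor] = len(vlans)
    return counts


# Typical configuration: different VLANs for all interfaces; Iub CP and UP share one host.
separate = {"Iub CP": "Iub", "Iub UP": "Iub", "IuCS UP": "IuCS", "Iur UP": "Iur", "IuPS UP": "IuPS"}
# Variant: Iu-CS and Iur user plane in one VLAN.
merged = {**separate, "IuCS UP": "IuCS/Iur", "Iur UP": "IuCS/Iur"}

print(hosts_per_processor(separate))  # {'PIU': 3, 'PiuDevice': 4}
print(hosts_per_processor(merged))    # {'PIU': 2, 'PiuDevice': 3}
```

Putting Iu-CS and Iur in one VLAN, as the later EPB blade examples do, lowers the count to two hosts on the PIU and three on the PiuDevice, well within the per-processor limit.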
IP Addressing
[Figure: subnet plan for up to 68 EPB blades: 2 C1, 2 C2 and 2–20 EPB blades in the Main Subrack, and up to 24 EPB blades in each Extension Subrack.]
• Iub UP/CP: two IpAccessHostEt per EPB blade, plus two for the NTP Server on the C1 device processor: 2 × 68 (EPB blades) + 2 (C1 EPBs) = 138 IP hosts; one /24 network with 256 addresses.
• Iu-CS/Iur UP: two IpAccessHostEt per EPB blade: 2 × 68 (EPB blades) = 136 IP hosts; one /24 network with 256 addresses.
• Iu-PS UP: one IP host per EPB blade, only on the secondary (PIU Device) processor: 68 IP hosts; one /25 network with 128 addresses.
• Iu/Iur CP: one (multihomed) IP host on each C1 and C2 EPB (two VLANs, CP-A and CP-B): 2 × 4 IP hosts; two /29 networks with 8 addresses each.

Vlan Configuration
• The configuration of the node Ethernet infrastructure (VLAN, CoS, RSTP, etc.) is unchanged compared to the baseline (3820).
• A number of subnetworks and VLANs should be planned, and Vlan MOs configured, before the configuration of the external interfaces starts.
• For single-homing, five subnetworks are created:
– One for the Iu and Iur control plane
– One for the Iub user plane and control plane
– One for the Iu-CS user plane
– One for the Iur user plane
– One for the Iu-PS user plane
• For multihoming, six subnetworks are created:
– Two for the Iu and Iur control plane
– One for the Iub user plane and control plane
– One for the Iu-CS user plane
– One for the Iur user plane
– One for the Iu-PS user plane

CMXB VLAN configuration
[Figure: VLANs on the CMXB ports: CP-A, CP-B (on the right-hand-side CMXB), Iu-PS, Iu-CS/Iur and Iub, with links to ES1 and ES2, the crosslink in the MS, and the external Iub and Iu/Iur connections. The IP host allocation is the same as on the IP Addressing slide.]

Node Internal VLAN
• A node internal VLAN needs to be defined on all backplane and ISL ports (not on external ports). This is done by creating an internal VLAN and setting vlanType = INTERNAL_IP_TRANSPORT in the Vlan MO.

IP Configuration on EPB Blade
• The IP configuration and the number of IP hosts needed on EPB blades can vary depending on traffic separation, but the IP configuration should be identical on all EPB blades in a node.
• A typical configuration (used in the following examples) would be:
– Iu-PS user plane in one VLAN
– Iu-CS user plane and Iur user plane in one VLAN
– Iub control plane and Iub user plane in the same VLAN
• There are two processors on each EPB, and IP termination is required on each processor for the functionality allocated to that processor.
This gives the following needs:
– Primary (PIU) processor: Iub CP, Iub UP, Iu-CS UP and Iur UP
– Secondary (PIU Device) processor: Iub UP, Iu-CS UP, Iur UP and Iu-PS UP

IP Configuration on EPB blade: Iub CP/UP, IuPS UP and IuCS/Iur UP
MOs and key attributes in the MO structure:
• RncFunction
– IubLink: controlPlaneTransportOption, remoteCpIpAddress1, sctpRef, userPlaneTransportOption, userPlaneIpResourceRef
– IurLink: userPlaneTransportOption, userPlaneIpResourceRef
– CnOperator, IuLink: userPlaneTransportOption, userPlaneIpResourceRef; Ranap: cnDomainInd=CS or cnDomainInd=PS
• Sctp: rpuId, ipAccessSctpRef
• ReliableProgramUniter: admActiveSlot, admPassiveSlot
• IpAccessSctp: ipAccessHostEtRef1, ipAccessHostEtRef2
• IpAccessHostPool: ipAccessHostRef (one pool each for Iub UP, Iu-CS/Iur UP and Iu-PS UP)
• IpAccessHostEt: ipAddress, ipInterfaceMoRef
• Primary processor: Slot → PlugInUnit → ExchangeTerminalIp → GigaBitEthernet → IpInterface (defaultRouter0, networkPrefixLength, mtu, vlanRef)
• Secondary processor: PiuDevice → ExchangeTerminalIp → GigaBitEthernet → IpInterface (defaultRouter0, networkPrefixLength, mtu, vlanRef)

IP Configuration on EPB Blade
[Figure: MO instances for EPB 1 … EPB n. Each EPB has IpInterface and IpAccessHostEt MOs on its PlugInUnit (primary processor) and PiuDevice (secondary processor), plus an Rpu, Sctp and IpAccessSctp for the Iub CP. The IpAccessHostEt MOs are grouped into three IpAccessHostPools (Iub UP, Iu-CS/Iur UP and Iu-PS UP), referenced by the IubLinks (Iub, 1..n), IurLinks (Iur, 1..n) and IuLinks (Iu-CS and Iu-PS).]

IP Configuration on C1 and C2 EPB
• The IP configuration and the number of IP hosts needed on C1 and C2 EPBs depend on the Sctp and NTP Server configuration.
• A typical configuration (used in the following examples) would be:
– Four SCTP Front Ends (FE)
  • One on each C1 or C2 EPB (non-robust)
– Multihoming with two IP addresses for each Sctp FE
  • Does not provide board redundancy; both IP terminations are on the same processor
  • Used only for remote-end redundancy and network path diversity
  • Two VLANs for the Iu/Iur signalling traffic
• NOTE: Other variants are also possible.
• IP termination for the NTP Server on C1 EPBs
– The NTP Server is located on the secondary (PIU Device) processor of each C1 EPB (two NTP Server instances provide redundancy).
– These two NTP Servers can be used by all RBS nodes connected to the EvoC node.
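The subnet sizes on the IP Addressing slide follow from the host counts, and the two /29 subnets carry the CP-A and CP-B legs of the four multihomed SCTP Front Ends described above. The sketch below recomputes the prefix lengths and then assigns CP addresses. It assumes that each subnet must also hold the network address, the broadcast address and the default router (defaultRouter0); the subnets 192.0.2.0/29 and 198.51.100.0/29 are documentation ranges, and the FE slot labels are hypothetical.

```python
import ipaddress

# Maximum EPB blade count: 20 in the Main Subrack plus 24 in each of two Extension Subracks.
BLADES = 20 + 24 + 24

# External IP hosts per subnet, as on the IP Addressing slide.
HOSTS = {
    "Iub UP/CP": 2 * BLADES + 2,  # two per blade plus two NTP Server hosts on C1
    "IuCS/Iur UP": 2 * BLADES,    # two per blade
    "IuPS UP": BLADES,            # one per blade, secondary processor only
    "Iu/Iur CP-A": 4,             # one per C1/C2 EPB
    "Iu/Iur CP-B": 4,
}

# Hypothetical labels for the four SCTP FE boards (C1, C2, C1, C2 in the Main Subrack).
FE_SLOTS = ["MS-3", "MS-4", "MS-25", "MS-26"]


def min_prefix(hosts: int, reserved: int = 3) -> int:
    """Longest IPv4 prefix whose subnet fits hosts plus reserved addresses.

    Assumption: reserved covers the network address, the broadcast
    address and the default router.
    """
    needed = hosts + reserved
    return 32 - (needed - 1).bit_length()


def assign_cp_addresses(subnet: str) -> dict[str, str]:
    """Give the first usable address to the router and the next ones to the FEs."""
    usable = list(ipaddress.ip_network(subnet).hosts())
    if len(usable) < len(FE_SLOTS) + 1:
        raise ValueError(f"{subnet} is too small for {len(FE_SLOTS)} FEs")
    plan = {"defaultRouter0": str(usable[0])}
    plan.update({slot: str(ip) for slot, ip in zip(FE_SLOTS, usable[1:])})
    return plan


if __name__ == "__main__":
    for name, count in HOSTS.items():
        print(f"{name}: {count} hosts -> /{min_prefix(count)}")
    cp_a = assign_cp_addresses("192.0.2.0/29")     # VLAN A
    cp_b = assign_cp_addresses("198.51.100.0/29")  # VLAN B
    for slot in FE_SLOTS:
        print(slot, cp_a[slot], cp_b[slot])  # two addresses per multihomed FE
```

Running it prints /24, /24, /25, /29 and /29, matching the slide, followed by one CP-A and one CP-B address per FE. Both addresses of an FE end up on the same board, which is why this multihoming gives path diversity but not board redundancy.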
Iu/Iur CP: one-legged RPUs, multihoming on the same EPB
[Figure: on each of the four C1/C2 EPBs (C1 in slot 3, C2 in slot 4, C1 in slot 25, C2 in slot 26), a Sctp MO (rpuId, ipAccessSctpRef) refers to a ReliableProgramUniter (admActiveSlot, admPassiveSlot) and to an IpAccessSctp (ipAccessHostEtRef1, ipAccessHostEtRef2). The two IpAccessHostEt MOs (ipAddress, ipInterfaceMoRef) refer to two IpInterface MOs (defaultRouter0, networkPrefixLength, mtu) on the same board, one with vlanRef=A and one with vlanRef=B, under Slot → PlugInUnit → ExchangeTerminalIp → GigaBitEthernet.]

Iu/Iur CP: one-legged RPUs, no multihoming (possible configuration)
[Figure: the same structure with a single IpAccessHostEt and a single IpInterface (vlanRef) per C1/C2 EPB.]

SS7/SCTP Configuration: four single SCTP Front Ends
• Four FEs are defined, one on each EPB with the C1 or C2 role (slots 3, 4, 25 and 26 in the MS).
• Redundancy and load sharing must be handled above the SCTP level.
• Iu links must use at least two SCTP FEs each:
– At least two M3uAssociations per CN node, for board redundancy handled on the SCCP/M3UA level
– Up to four M3uAssociations per CN node, for load sharing on all SCTP FEs
– Load is distributed evenly over all M3UA/SCTP in each signalling route set (Srs).
– An alternative is to use only some of the SCTP FEs for each CN node, but to spread the traffic for different CN nodes evenly over all SCTP FEs.
• Iur links must use two SCTP hosts each:
– At least two M3uAssociations per connected RNC node
– Spread the traffic for different RNCs evenly over all SCTP FEs.
– Using more than two SCTP FEs for Iur is possible but not needed.

Iu CP: Ranap/Sccp/Mtp3b
MOs and key attributes:
• RncFunction, CnOperator, IuLink
• Ranap: localSccpApRef, remoteSccpApRef
• SccpSp: mtp3bSpId; SccpScrc; SccpApLocal: ssN; SccpApRemote: ssN, mtp3bApId
• Mtp3bSpItu; Mtp3bAp: serviceIndicator, routeSetId; Mtp3bSrs: destPointCode; Mtp3bSr: linkSetM3UId, priority
• M3uAssociation: localIpMask, localPortNumber, mtp3bSrsId, remoteIpAddress1, remoteIpAddress2, sctpId
• Sctp: ipAccessSctpRef, rpuId
[Figure: one Mtp3bSrs with four Mtp3bSr/M3uAssociation pairs, each on its own Sctp FE.]

Iu CP: Ranap/Sccp/Mtp3b, full load sharing
[Figure: two IuLinks, each with a Ranap, its own SccpApRemote, Mtp3bAp and Mtp3bSrs. Each Mtp3bSrs has four Mtp3bSr/M3uAssociation pairs, one on each of the four Sctp FEs, so every Sctp carries one association per CN node.]

Iur CP: Rnsap/Sccp/Mtp3b, partial load sharing
[Figure: two IurLinks, each with a Rnsap, its own SccpApRemote, Mtp3bAp and Mtp3bSrs. Each Mtp3bSrs has two Mtp3bSr/M3uAssociation pairs on two different Sctp FEs, so the two Iur neighbours together use all four FEs.]

EvoC 8200/RNC: NTP Server for Network Synchronization (Iub)
[Figure: on both C1 boards (MS slot 3 and MS slot 25): Slot → PlugInUnit → ExchangeTerminalIp → GigaBitEthernet, and PiuDevice → ExchangeTerminalIp → GigaBitEthernet → IpInterface (defaultRouter0, networkPrefixLength, mtu, vlanRef). The IpAccessHostEt (ipAddress, ipInterfaceMoRef) on the PiuDevice has ntpServerMode=ENABLED.]
IP hosts are configured with the NTP server enabled on both C1 boards.

Learn by instance

IP Configuration Instance
• Contents
– Iub User and Control Plane
– Iu/Iur Control Plane
– Iu-PS User Plane
– Iu-CS/Iur User Plane

Iub User and Control Plane
• In this configuration procedure, the Iub control plane is configured by defining IpAccessSctp and IpAccessHostEt MOs for SCTP, so that the same IP hosts can also be used for the Iub user plane configuration.
• For configuration of the Iub control plane and user plane, the following data must be known:
– ipAddress: the IP address of the host for the Iub control plane and user plane. Two are needed for each EPB.
– routerIpAddress: the IP address of the default router. One mandatory address can be configured. If no router is used in the network, a fictitious address must be used, because this attribute is used to determine the subnet mask.
– networkPrefixLength: specifies which part of the routerIpAddress is the subnet address.

IUB User Plane
• When the Iub control plane is configured using IpAccessHostEt on the EPB, an additional IpInterface and IpAccessHostEt are required for the Iub user plane.
• The typical setup is one IpAccessHostPool for the Iub user plane. One IpAccessHostEt is defined on the primary processor for the Iub control plane and Iub user plane, and one IpAccessHostEt on the secondary processor is used only for the Iub user plane.

Iub User and Control Plane (procedure)
1. Create one IpInterface MO for the primary processor (PIU) and one for the secondary processor (PiuDevice).
2. Create two IpAccessHostEt MOs for each EPB, and set ipInterfaceMoRef to the interface created on that EPB.
3. Create one IpAccessHostPool for Iub that includes all the IpAccessHostEt MOs defined on EPB_BLADE_A in both processors.
4. Create one IpAccessSctp MO for each EPB, and set ipAccessHostEtRef1 to the IpAccessHostEt defined on the primary processor.
5. Create one Sctp MO for each EPB, and set ipAccessSctpRef to the IpAccessSctp defined in step 4.
6. In the IubLink MO, add a reference to the IpAccessHostPool MO by setting the userPlaneIpResourceRef attribute. Set the IP addresses for the Iub control plane, and set the attributes userPlaneTransportOption and controlPlaneTransportOption to IPv4.

Iu/Iur Control Plane
• The Horizontal Distribution (HD) stack is divided into two parts, Front End (FE) and Back End (BE). The FE handles the communication with the CN and contains the SCTP stack. The BE contains the SCC server.
• One SCTP FE executes on each C1 and C2 EPB (four EPBs in total).
• On each EPB used as an FE, the following MOs are needed:
– Single-homed configuration: one IpInterface, one IpAccessHostEt, one IpAccessSctp and one Sctp
– Multihomed configuration: two IpInterface, two IpAccessHostEt, one IpAccessSctp and one Sctp
• The Iu and Iur control plane is not affected by the introduction of Evo. There is, however, a conceptual difference: with multihoming, both IpAccessHostEt MOs are on the same processor. As a result, there is no ET board redundancy, but path redundancy is still supported.
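The per-FE MO lists above, together with the association rules on the SS7/SCTP Configuration slide (at least two and up to four M3uAssociations per CN node, two per Iur neighbour, traffic spread evenly over the four FEs), can be sketched as a small planning helper. The shared round-robin assignment, the FE labels and the peer names are assumptions for illustration only; this is not an RNC or OSS function.

```python
from collections import Counter
from itertools import cycle

# Hypothetical labels for the four SCTP FE boards (C1, C2, C1, C2 in the MS).
FES = ["MS-3", "MS-4", "MS-25", "MS-26"]


def fe_mos(multihomed: bool) -> dict[str, int]:
    """MOs needed on one FE board for a single- or multihomed configuration."""
    legs = 2 if multihomed else 1
    return {"IpInterface": legs, "IpAccessHostEt": legs, "IpAccessSctp": 1, "Sctp": 1}


def spread_associations(peers: list[str], per_peer: int) -> dict[str, list[str]]:
    """Assign per_peer M3uAssociations to each peer, each on a different FE.

    One shared round-robin over the FEs keeps the total load even, and any
    per_peer consecutive FEs are distinct because per_peer <= len(FES).
    """
    if not 2 <= per_peer <= len(FES):
        raise ValueError("use between two and four SCTP FEs per peer")
    fe_cycle = cycle(FES)
    return {peer: [next(fe_cycle) for _ in range(per_peer)] for peer in peers}


if __name__ == "__main__":
    total = Counter()
    for _ in FES:
        total.update(fe_mos(multihomed=True))
    print(dict(total))  # MOs for all four multihomed FEs
    iu = spread_associations(["CN-CS-1", "CN-PS-1"], per_peer=4)  # full load sharing
    iur = spread_associations(["RNC-A", "RNC-B"], per_peer=2)     # partial load sharing
    print(iu)
    print(iur)
    print(Counter(fe for fes in iur.values() for fe in fes))  # load per FE
```

With two Iur neighbours at two associations each, the round-robin places RNC-A on the first two FEs and RNC-B on the last two, which mirrors the partial load-sharing figure.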
RPU for the Iu/Iur control plane is, however, still supported for board redundancy, as in the baseline.

Iu/Iur Control Plane (procedure)
1. Create one IpInterface MO on each EPB with an FE for single-homing. For multihoming, create two IpInterface MOs on the same processor.
2. Create one IpAccessHostEt MO for each EPB with the C1 or C2 role for single-homing, and two IpAccessHostEt MOs on the same processor for multihoming.
3. Create one IpAccessSctp MO for each EPB. For single-homing, set the attribute ipAccessHostEtRef1 as a reference to the IpAccessHostEt MO. For multihoming, set both ipAccessHostEtRef1 and ipAccessHostEtRef2.
4. Find the RPU to be used when creating the Sctp MO. The RPU ID depends on the subrack and slot number. The principal rule for naming the RPUs of the SCTP FEs on the four C1 and C2 EPBs is reliableProgramLabel_<subrackName>-<slotId>-0.
5. Create one Sctp MO for each EPB with the C1 or C2 role.

IUPS User Plane
1. Configure one IpInterface MO on the PlugInUnit (primary processor) or PiuDevice (secondary processor) for each EPB blade.
2. Configure one IpAccessHostEt MO for each EPB blade.
3. Create one IpAccessHostPool for the Iu-PS user plane with references to all IpAccessHostEt MOs created in step 2.
4. Configure one IpEthPacketDataRouter instance for each PDR device on the EPB. Name IpEthPacketDataRouterId as "Pdr_<subrack>-<slot>-<1>".
5. For each CN (Core Network) node connected to the EvoC 8200/RNC with an IP PS connection, create the IuLink MO. Set userPlaneIpResourceRef to the IpAccessHostPool created in step 3.
6. Verify new Iu-PS connections by checking that the pmNoRoutedIpPacketsUl and pmNoRoutedIpPacketsDl counters are stepped in the new IpEthPacketDataRouter MOs.

Iu-CS and Iur User Plane
1. Create one IpInterface on the primary processor (PlugInUnit) and one on the secondary processor (PiuDevice). Set vlanRef to the Vlan MO created for the Iu-CS and Iur user plane.
2. Create two IpAccessHostEt MOs for each EPB board to use.
3. Create one IpAccessHostPool MO for Iu-CS and Iur, and name IpAccessHostPoolId "Iu_Iur_xx". One common IpAccessHostPool is used for both Iu and Iur.
4. Create the IuLink MO for Iu-CS. Set userPlaneIpResourceRef to the IpAccessHostPool created in step 3.
5. Create the IurLink MO. Set userPlaneIpResourceRef to the IpAccessHostPool created in step 3.

External Interface Improvement
1. Reconfiguring the RNC Ethernet external transmission speed
• The Evo Controller 8200/RNC can be configured to support 10G Ethernet transmission speed. For instructions on how to reconfigure the transmission speed, refer to Reconfiguring External Interfaces.
2. Configuring an additional external interface
• In the Evo Controller 8200/RNC, the Iu/Iur and Iub interfaces can be split into separate physical interfaces. For instructions on how to activate and configure an additional external Ethernet interface, refer to Reconfiguring External Interfaces.

References
1. Initial IP Configuration, 57/1543-AXD 105 03/1 Uen A
2. Hardware Configurations for Evo Controller 8200 for RNC, 2/155 18-COA 109 400 Uen
3. NDS IP Termination on EPB1 in Evo, 50/102 68-FCP 101 8201 Uen
4. IP EPB SW, IP Termination, 20/159 41-FCP 105 1096 Uen