AI, Discretion, and Bureaucracy in Public Administration

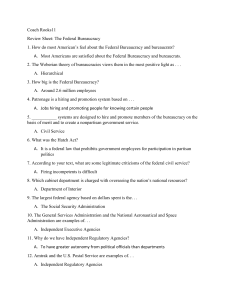

Artificial Intelligence, Discretion, and Bureaucracy

Justin B. Bullock

American Review of Public Administration 2019, Vol. 49(7) 751–761. © The Author(s) 2019. DOI: 10.1177/0275074019856123 (https://doi.org/10.1177/0275074019856123)

Abstract

This essay highlights the increasing use of artificial intelligence (AI) in governance and society and explores the relationship between AI, discretion, and bureaucracy. AI is an advanced information communication technology tool (ICT) that changes both the nature of human discretion within a bureaucracy and the structure of bureaucracies. To better understand this relationship, AI, discretion, and bureaucracy are explored in some detail. It is argued that discretion and decision-making are strongly influenced by intelligence, and that improvements in intelligence, such as those that can be found within the field of AI, can help improve the overall quality of administration. Furthermore, the characteristics, strengths, and weaknesses of both human discretion and AI are explored. Once these characteristics are laid out, a further exploration of the role AI may play in bureaucracies and bureaucratic structure is presented, followed by a specific focus on systems-level bureaucracies. In addition, it is argued that task distribution and task characteristics play a large role, along with the organizational and legal context, in whether a task favors human discretion or the use of AI. Complexity and uncertainty are presented as the major defining characteristics for categorizing tasks. Finally, a discussion is provided about the important cautions and concerns of utilizing AI in governance, in particular, with respect to existential risk and administrative evil.
Keywords: artificial intelligence, bureaucracy, discretion, administration, governance

Introduction

In 1980, Michael Lipsky codified the role of the individual decision maker, the “street-level” bureaucrat (Lipsky, 1980). Such bureaucrats, including teachers, doctors, case workers, police officers, fire fighters, garbage collectors, and information service workers, provided government services, benefits, and punishments directly to the public (often, quite literally, out on the street). As Lipsky noted, these street-level bureaucrats used their discretion—their decision-making latitude in implementing government policies in complex and uncertain problem spaces—to serve the public and solve problems. Lipsky’s work inspired much scholarly curiosity in the field of public administration (PA), into the motivations of these street-level bureaucrats (and their managers) who were implementing policy. Because bureaucrats exercise discretion when completing their job tasks, it would be helpful to understand how these decision-making processes work. Scholars examined differences between government bureaucrats and private sector employees across motivations and attitudes such as public service motivation (Perry, 1996; Perry & Wise, 1990), extrinsic motivation (Bullock, Hansen, & Houston, 2018), and organizational commitment (Bullock, Stritch, & Rainey, 2015). In addition to this work, PA gave rise to the field of public management, where the impacts of managerial decisions on performance were highlighted (Bullock, Greer, & O’Toole, 2019; Meier & O’Toole, 2001, 2002; O’Toole & Meier, 1999, 2003). This research empirically established the relationship between those working within government and the performance of government (O’Toole & Meier, 2011).
Author affiliation: The Bush School of Government & Public Service, Texas A&M University, College Station, USA. Corresponding author: Justin B. Bullock, The Bush School of Government & Public Service, Texas A&M University, 4220, TAMU College Station, TX, 77843-4220, USA. Email: jbull14@tamu.edu

In the midst of this wave of scholarly attention devoted to understanding the motivations, attitudes, and decision-making processes of street-level bureaucrats, Bovens and Zouridis (2002) observed a trend in large, executive, public agencies, from street-level bureaucracies, to screen-level bureaucracies, on to systems-level bureaucracies. No longer were bureaucrats simply “out on the street” providing services to the general public; now much of this work was being done behind a computer screen. Many decisions were becoming routinized and guided by computer software and databases (screen-level bureaucracy), which resulted in changes in the operation and scope of discretion itself. Decision-making tasks were guided by software for case management, performance tracking, and increases in the oversight and accountability of bureaucrats’ discretion (Bovens & Zouridis, 2002). Bovens and Zouridis (2002) maintain that the transition from street-level bureaucracy to screen-level bureaucracy was only the first for some bureaucracies. Screen-level bureaucracies began to give way to even more expansive uses of information communication technology (ICT) tools, such as computers, email, and other uses of the Internet and digital world. Meanwhile, the ICT tools themselves increased in their capacity to both augment and automate decision-making tasks (Kurzweil, 2005, 2013). In some instances, these increases began to give way to a new form of bureaucracy, the systems-level bureaucracy, characterized by the decisive role that ICT tools play in the bureaucratic process for some agencies.
No longer were bureaucrats out on the ground or sitting behind screens inputting data; instead, ICT tools themselves augmented and automated more and more tasks, in some cases even replacing the role of expert judgment by human bureaucrats (Bovens & Zouridis, 2002). In the 17 years since Bovens and Zouridis observed a burgeoning transition to systems-level bureaucracies, the rise in power of ICT tools, and in particular artificial intelligence (AI), brings to the forefront classic PA questions about discretion, bureaucracy, and administration. This essay seeks to explore the impacts of AI on discretion and the potential consequences for bureaucracy and governance. AI’s use as a tool of governance is growing and this trend is being discussed by the U.S. Federal Government and debated by scholars. For example, on June 29, 2018, SpaceX launched an artificially intelligent robot named CIMON to the International Space Station to aid NASA astronauts (Davenport, 2018). In addition, the U.S. General Services Administration has partnered with a number of U.S. federal agencies to explore the use of artificially intelligent personal assistants. The use of AI as part of predictive policing and facial recognition for surveillance is growing. In “The Rise of Big Data Policing,” Ferguson (2017) documents the growth of AI and digital surveillance in law enforcement and predictive policing. Furthermore, Virginia Eubanks (2018) argues that the automation of tasks once the purview of street-level bureaucrats has led to an automation of inequality, giving rise to 21st-century digital poorhouses. Eubanks highlights how states’ use of algorithms to make decisions about government benefits and punishments disproportionately harms the poor. Recently, the U.S. federal government has made AI a policy priority, issuing reports affecting both federal agencies and the private market. 
On December 20, 2016, the Executive Office of the President released “Artificial Intelligence, Automation, and the Economy,” signed by the Chair of the Council of Economic Advisers, the Directors of the Office of Science and Technology Policy, the Domestic Policy Council, and the National Economic Council, and the U.S. Chief Technology Officer (Executive Office of the President, 2016). This report focuses on the emergence of AI in the economy, but also can be applied to the types of tasks traditionally in the purview of PA and street-level bureaucrats. “Accelerating AI capabilities,” it states,

will enable automation of some tasks that have long required human labor. Rather than relying on closely-tailored rules explicitly crafted by programmers, modern AI programs can learn from patterns in whatever data they encounter and develop their own rules for how to interpret new information. This means that AI can solve problems and learn with very little human input . . . . This (the combination of AI & robotics) will permit automation of many tasks now performed by human workers and could change the shape of the labor market and human activity. (Executive Office of the President, 2016, p. 8)

Even more recently, Executive Order (EO) 13859, “Maintaining American Leadership in Artificial Intelligence,” was issued on February 14, 2019. This EO notes that AI will affect essentially all federal agencies, and that those agencies should pursue six strategic objectives in response, including promoting sustained investment in AI Research & Development, enhancing access to high-quality federal data, reducing barriers to the use of AI technologies, ensuring technical standards minimize vulnerabilities to attacks from malicious actors, training the next generation of AI researchers, and developing a strategic plan to protect the United States’ advantage in AI and technology critical to U.S. interests (Executive Office of the President, 2019). The U.S. federal government has begun to prioritize issues of AI and improving governance. PA scholars should do the same. This article seeks to provide an exploration of the role AI is playing in changing the nature of bureaucratic discretion. To better understand this changing nature of discretion, discretion itself needs to be better situated in a broader discussion of PA and intelligence. Thus, an argument is presented that decision-making, as Herbert Simon (1947) argued, is at the heart of administration, and that intelligence is the basic capacity for decision-making. In this context, human discretion is characterized as human bureaucrats engaging in decision-making (in which uncertainty is present) for a complex task about how best to deliver government services, benefits, and punishments. Once this argument is elucidated, then the human terrain for decision-making and quality of discretion are elaborated upon. Then, some of the basic characteristics of AI are presented. From here, arguments are made about what the rise of AI means for the future of discretion within bureaucracy broadly and more specifically about the impact AI has on discretion within systems-level bureaucracies. Finally, the article concludes with some concerns and caution about how AI should be used intelligently as a tool of governance.

Administration, Discretion, and Intelligence

Herbert Simon noted that decision-making is at the very heart of administration (Simon, 1947). Street-level public servants use their decision-making latitude—their discretion—to conduct the basic work of administration. Once legislation is passed, bureaucratic discretion occurs at all levels, from the details of policy implementation, to agenda- and priority-setting, to the design of public agencies and organizations, and to the actual delivery of services on the ground (Busch & Henriksen, 2018).
Quality of administration can be characterized, in part, by how effectively public administrators use their discretion to achieve policy goals. An understanding of the individual decision-making process is an important feature of the study of administration. But what drives decision-making capacity? Can some construct be used as a guide to the quality of decision-making, and thus the quality of discretion? While both motivation and goal alignment of the individual bureaucrat play large and important roles, psychologists, neuroscientists, and AI scholars argue that intelligence is the construct that helps to define the overall capacity for the quality of decision-making (Deary, Penke, & Johnson, 2010; Piaget, 2005; Russell & Norvig, 2016). This essay adopts Max Tegmark’s (2017) definition of intelligence, “the ability to accomplish complex goals.” Tegmark argues that this definition is broad enough to encompass many of the competing definitions of intelligence, which include “capacity for logic, understanding, planning, emotional knowledge, self-awareness, creativity, problem solving and learning” (Tegmark, 2017, loc 919). This definition also allows for many types of intelligence, in pursuit of many different types of goals and gives a common construct that can be assessed across agents that pursue these goals (with a bonus characterization of substrate independence, which is helpful in thinking about intelligence across organic and nonorganic entities; Bostrom, 2014; Tegmark, 2017).¹ Administration is the attempt to achieve complex organizational goals through a decision-making process; intelligence is the ability to accomplish complex goals. Thus, those interested in the study of administration should be concerned with advances in intelligence, and these advances abound, particularly in the realm of AI. However, before discussing AI, it is useful to consider the strengths and limitations inherent in human intelligence, and hence human discretion.
Human Discretion in Administration

Human discretion exists at the heart of administration. Humans created and maintained the administrative state, and they remain its designers, leaders, and implementers. Given this history of human discretion in administration, what is known about the decision-making process and how well humans perform as actors with discretion in the delivery of public services? To treat this topic fully, in all its nuances, would require a book. In fact, numerous books have been written, for example, on how street-level bureaucrats use their discretion (Lipsky, 1980); entire textbooks explain how humans design, manage, and run public organizations (Rainey, 2014). A recent wave of research in behavioral PA looks to incorporate what behavioral economists and psychologists have discovered about the systematic biases and limitations to human rationality, and how this plays out in the field.² A brief treatise must suffice. Ample empirical evidence suggests that human public administrators are quite capable of efficiently, effectively, and equitably completing a wide range of administrative tasks (Rainey, 2014). Early work in PA examined a core set of key administrative tasks known as POSDCORB: planning, organizing, staffing, directing, coordination, reporting, and budgeting (Gulick, 1937), and these tasks have been accomplished by humans throughout history. Administrative tasks rely on the ability to flexibly coordinate across a number of domains; such tasks are quite suitable to human strengths (Harari, 2016). Administrators engage in networking, politicking, and innovating (Borins, 1998, 2008; Dunn, 1997; Goldsmith & Eggers, 2004; Isett, Mergel, LeRoux, Mischen, & Rethemeyer, 2011; Linden, 1990), all forms of discretion.
They are often motivated to serve the public, find meaning in helping others, and work to help efficiently and effectively make use of public resources to provide public goods (Bullock et al., 2018; Bullock et al., 2015; Perry & Wise, 1990). They cleverly satisfice, coordinate, and compromise to handle the multitude of tasks required of them (Simon, 1947). But the human discretion picture is not always a rosy one. Humans are not rational automatons (Kahneman, 2011). They are riddled with a set of systematic cognitive biases and subjective probability weights (Bazerman, 2002; Kahneman & Tversky, 1972; Wilkinson, 2008) and succumb to planning fallacies and to loss aversion (Kahneman & Tversky, 1979). They are susceptible to corruption (Klitgaard, 1988). They become demotivated and do not perform their job tasks well. They award improper and fraudulent benefits and deny benefits to the deserving (Greer & Bullock, 2018; Wenger & Wilkins, 2008). They use their power and influence to cause harm to the weak. They are often overconfident in their own abilities and quickly run into limits in their capacity to process large amounts of data (Kahneman, 2011). While humans have certainly improved their administrative abilities over time to match the changing demands of modern society, there is no question that human administrators are limited in their capacities and deeply flawed in their pursuit of efficiency, effectiveness, and equitable administration of the law. Over time, ICT tools have aided humans in refining and improving their discretion, and thus the overall quality of administration. Early ICT included basic systems of written accounting, but these tools have progressed to complex technological systems in which AI systems do more and more of the tasks that were once in the domain of human bureaucrats. 
This march of improvement in the capacity of ICT tools allows for augmentation and automation of tasks and continues to encroach into the space of work once completed solely by human administrators (Busch & Henriksen, 2018). In addition, ICT progress presents its own challenges (Barth & Arnold, 1999; Bovens & Zouridis, 2002; Simon, 1997), which will be further elaborated upon later in this essay. While these challenges are not necessarily new (automation and advances in computing have made numerous administrative tasks obsolete over time), advances in AI appear especially dramatic. What began as the automation of physical efforts and relatively straightforward data entry is evolving to the domains of cognitive and analytical tasks (Brundage et al., 2018).

AI: Basic Characteristics and Opportunities for Administration

AI can be thought of as the ability of a nonorganic, mechanical entity to accomplish complex goals (Tegmark, 2017). As mentioned earlier, this implies that intelligence is substrate independent: It can exist as a biological process informing human actions or as a mechanical process guiding an automaton. AI literature distinguishes between “weak” or “narrow AI” and “strong” or “general AI.” Weak or narrow AI can learn to complete a small set of tasks or one particularly challenging goal. Strong or general AI can learn across a broad range of challenging complex tasks across almost any domain (Nilsson, 2009). There is much discussion about the rising abilities of deep neural networks and generative adversarial networks (Bostrom, 2014), but for the purposes of this article, this will suffice: Narrow AIs are being continuously improved and are increasingly able to accomplish tasks that were once most effectively, efficiently, and equitably completed by humans. As AI progresses, potential applications to the work of administration and governance will multiply, though governments often do lag in their adoption of new technologies.
In a resource-constrained environment, public organizations dare not invest in large updates to their computing hardware and software. However, as these costs fall, and AI gains in replicability, scalability, and efficiency (Brundage et al., 2018), the same pressures that delay government technological adoption may make these tools attractive investments in organizational efficiency. It seems that with current technologies, almost two decades after Bovens and Zouridis’ observation of the beginnings of systems-level bureaucracies, many tasks carried out by human actors may already be more efficiently and effectively carried out by AI. This suggests that tasks completed by human street-level bureaucrats, across many domains, may be augmented or automated by AI. AI may bring increasing levels of effectiveness and efficiency, but threats to governance remain, such as impacts on equity, accountability and democracy (Barth & Arnold, 1999), along with concerns of managerial capacity and political legitimacy (Salamon, 2002). Given competing governance goals and values, these opportunities and threats present important questions for how discretion should be employed within the context of public organizations. These questions permeate all organizational levels of governance tasks and are directly addressed in the following sections (Busch & Henriksen, 2018).

The rise of AI’s capabilities will result in the augmentation and automation of cognitive tasks. Market analysts estimate that advances in AI and robotics are likely to lead to further automation in at least 10 different task areas including: personalized products, targeted marketing, fraud detection, supply chain and logistics, credit analysis, construction, food preparation and service, autonomous vehicles, automated checkout, and health care provision (Helfstein & Manley, 2018).
Consider a few examples of these task areas and their relationship to administration and governance. A wave of disruption is already cresting, and unless the field addresses these challenges preemptively, it is easy to imagine a violent crash. Personalized products could dramatically change how taxes are collected through automation of data collection from employer and state databases. These same products could help identify those who are legally entitled to sets of social services. Targeted marketing could certainly be used to help ensure that citizens receive all relevant public service announcements. Fraud detection could be improved dramatically, particularly in entitlement and social insurance programs, eliminating street-level bureaucrat positions in fraud management. Supply chain and logistics could be streamlined across a host of government business practices, thus potentially improving governance efficiency. Credit analysis could be improved for governments of all sizes, with the creation of more effective tools to accurately price credit and determine creditworthiness. Automated food preparation and service could modernize cafeterias for public organizations throughout the country. Autonomous vehicles will likely replace bus drivers, or at least change the nature of their jobs, and revolutionize transportation across government agencies. The public information officers mentioned by Bovens and Zouridis may become obsolete, as the design of automated checkout systems improves and the public’s comfort with them increases. The health care provider industry (another category of nonroutine, nonlegal, and complex work mentioned by Bovens and Zouridis) seems to be in the midst of its own AI revolution; this could have dramatic implications for the delivery of public health services (Topol, 2019). These are just some examples of the breadth of PA tasks that could be automated or augmented by AI. 
The expansiveness of this task terrain presents a question for scholars of PA: What is the future of discretion for street-level bureaucrats?

The Future of Street-Level Bureaucrats’ Discretion in Bureaucracy

Bovens and Zouridis (2002) noted the changing character of large, executive agencies, stating that many of these “public agencies of the welfare state appear to be quietly undergoing a fundamental change of character internally. Information communication and technology (ICT) is one of the driving forces behind this transformation” (p. 175). They note that a number of large executive organizations made a shift from traditional street-level bureaucracy to screen-level and, for at least two agencies in the Netherlands, there was a shift toward systems-level bureaucracies. Bovens and Zouridis (2002) characterize systems-level bureaucracies as those in which

ICT has come to play a decisive role in the organizations’ operations. It is not only used to register and store data, as in the early days of automation, but also to execute and control the whole production process. Routine cases are handled without human interference. Expert systems have replaced professional workers. Apart from the occasional public information officer and the help desk staff, there are no other street-level bureaucrats as Lipsky defines them. (p. 180)

There are no other street-level bureaucrats. While Bovens and Zouridis are quite cautious in their suggestion of the expanse of systems-level bureaucracies (“It remains to be seen whether similar transformations can be observed in non-legal, non-routine, street-level interactions such as teaching, nursing, and policing,” p. 180), they argue that bureaucracies that do transition to systems-level will only employ three groups of people: (a) those active in the data processing process, (b) managers of the production process, and (c) those that help clients interface with the information system.
This shift to systems-level bureaucracies may greatly reduce the scope of administrative work that would be completed by humans, leaving very little, if any, work left for the traditional street-level bureaucrat. Building from Bovens and Zouridis’ argument, and the work of other scholars, there is a growing debate in PA about whether ICT has a curtailing or enabling influence on the discretion of human street-level bureaucrats. Buffat (2015) highlights this debate:

On the one hand, initial research considered that street-level discretion decreases or disappears in the case of large bureaucratic informatisation. Since an insistence is put on the negative impact of ICT over discretion, the label “curtailment thesis” is relevant. On the other hand, other studies point out more nuanced effects of ICT. These studies indicate that new technologies constitute only one factor among others shaping street-level discretion and that they provide both frontline agents and citizens with action resources. This why we label this orientation the “enablement thesis.” (p. 151)

Buffat (2015) notes that Snellen (1998, 2002), Zuurmond (1998), and Bovens and Zouridis (2002) make versions of the curtailment argument. Buffat summarizes these curtailment arguments by stating that the scholars believe that “ICT has a negative and curtailing effect on frontline discretion. In computerized public service delivery, street-level bureaucrats partially or totally lose their discretionary power” (p. 153). Buffat (2015) notes four weaknesses to the curtailment argument: (a) it implies technological determinism, (b) discretion is too narrowly defined, (c) empirical limitations, and (d) an insufficient interest in the concrete uses of technologies by frontline workers.
He offers a different hypothesis, which is labeled the “enablement thesis.” Buffat notes evidence from several studies including a subsidy allocation process in the Netherlands (Jorna & Wagenaar, 2007), ICT and policy discretion in a Swiss unemployment fund (Buffat, 2011), implementation of French agricultural policy (Weller, 2006), and the use of a French social aid website (Vitalis & Duhaut, 2004). These studies lead Buffat to claim

discretion is not suppressed at the frontline despite ICT tools and continues to exist in the daily street-level activity. This result is linked to contextual factors such as the inability of ICT tools to capture the whole picture of frontline work and choices, limited resources for managers to control time and attention, and work organization or the skills possessed by street-level agents. Analytically speaking, this means that technology (and its use) is only one of the factors influencing the discretion of frontline agents and that a variety of non-technological factors shape it as well. This is why no unilateral effects of technology can be assumed. (Buffat, 2015, pp. 156-157)

Busch and Henriksen (2018) come to a somewhat different conclusion. They use Buffat’s constructs of enablement and curtailment and find evidence for both in their literature review on digital discretion. Building from Buffat’s call for a better understanding of the contextual factors contributing to the diffusion of digital discretion, and informed by their own literature review, Busch and Henriksen identify 10 contextual factors nestled within four levels of analysis.
These factors include: at the macro-level policy maker: formulation of rules; at the meso-level street-level bureaucracy: formulation of organizational goals, formalization of routines, and interagency dependency; at the micro-level street-level bureaucracy: professionalization, computer literacy, decision consequences, information richness, and relational negotiations; and at the technology level: features. Busch and Henriksen also identify and discuss six technologies from empirical studies, including telephone, multifunctional computer, database, website, case management system, and an automated system. From their literature review, Busch and Henriksen question Lipsky’s claim that “the nature of service provision calls for human judgement that cannot be programmed and for which machines cannot substitute” (Lipsky, 1980, p. 161). Their response addresses two questions: (a) can digital discretion (defined by the authors as “increased use of technologies that can assist street-level bureaucrats in handling cases by providing easy access to information about clients through channels such as online forms, and automating parts of or whole work processes”) cause a value shift in street-level bureaucracy and (b) under what conditions can this shift occur? They find that there is an increase in diffusion of digital discretion in governance. They argue that the empirical evidence to date (which they note is sparse) suggests digital discretion “seems to be more suitable for strengthening ethical and democratic values, and in general fails in its attempts to strengthen professional and relational values” (Busch & Henriksen, 2018, p. 18). The authors argue that digital discretion is diffusing, it is influencing street-level bureaucrats, and ICT is sometimes substituting for human judgment. Finally, the authors note that the overall scope of street-level bureaucracy is decreasing.
The authors conclude that

while certain types of street-level work seem to avoid extensive changes due to ICT, it makes more and more sense to talk about digital bureaucracy and digital discretion, since an increasing number of street-level bureaucracies are characterized by digital bureaucrats operating computers instead of interacting face-to-face with their clients. (Busch & Henriksen, 2018)

However, they do note that one technology in particular has the potential to automate even nonroutine street-level work such as nursing, teaching, and policing. They state that

artificial intelligence is a technology that has developed rapidly in recent decades describing computers that can act as autonomous agents and approximate the human brain. For example, IBM’s Watson can now better identify symptoms of diseases than experienced physicians, and AI has proved to conduct better assessments of English essays than teachers. (Busch & Henriksen, 2018)

What do these arguments suggest for the future of street-level bureaucrats’ discretion within bureaucracy? The first observation is that AI enables human discretion across some tasks and curtails discretion across others. In this way, as Buffat (2015) and Busch and Henriksen (2018) highlight, the context of the task greatly matters. Busch and Henriksen provide a number of contextual organizational factors. The second observation is that not only does the context matter, but the type of task itself matters. Here, Perrow (1967) provides some useful insight by classifying work tasks along two important dimensions: the frequency with which the task requires deviations from normal procedures and the degree to which the task is analyzable or can be broken down into a rational systematic search process.
While the organizational contextual factors provide guidance of where to look for discretionary tasks within bureaucracy, this task classification can be very helpful if two assumptions are added: (a) tasks that rarely deviate from normal procedures are less complex and more routine, and are therefore more likely to be automated or augmented by AI, compared with those that often deviate from normal procedures and are less routine, and (b) the more analyzable a task is, the less uncertainty there is around that task and the more likely it is to be automated or augmented by AI, and the less analyzable a task, the less likely it is to be automated or augmented by AI. The greater the deviations from normal procedures, the more complex the task, which makes it more difficult to automate. The less analyzable a task, the greater the uncertainty, which also makes a task difficult to automate. Thus, tasks that are high in complexity (more deviations from the norm) and high in uncertainty (less analyzable) should most likely remain as a discretionary task to be completed by humans. Furthermore, tasks that are lower in complexity (fewer deviations from the norm) and lower in uncertainty (more analyzable) are more likely to be completed by a machine. While human discretion remains most important in tasks where complexity and uncertainty are high, given humans’ weaknesses in situations of uncertainty, AI also maintains a comparative advantage over humans in dealing with uncertainty. Thus, AI likely has a relative advantage for tasks that are high in uncertainty but low in complexity. Conversely, when a task is low in uncertainty but high in complexity, humans can identify highly complex and abstract patterns across task sets (a more general intelligence that still escapes AI), so humans likely retain a relative advantage in this domain. Figure 1 illustrates this relationship in a 2 × 2 matrix.
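The classification argued for above can be read as a lookup over two task attributes. The following is a minimal Python sketch, assuming each task can be coded as simply low or high on complexity and on uncertainty (a simplification, since the essay treats both as matters of degree). The names `Level`, `QUADRANTS`, and `classify_task` are illustrative and do not come from the essay.

```python
from enum import Enum


class Level(Enum):
    """Coarse two-level coding of a task attribute (an assumption of this sketch)."""
    LOW = "low"
    HIGH = "high"


# Keys are (complexity, uncertainty). Complexity tracks how often a task
# deviates from normal procedures; uncertainty tracks how hard the task is
# to analyze. The labels follow the essay's 2 x 2 matrix.
QUADRANTS = {
    (Level.LOW, Level.LOW): "AI dominates",
    (Level.LOW, Level.HIGH): "Leaning AI",
    (Level.HIGH, Level.LOW): "Leaning human",
    (Level.HIGH, Level.HIGH): "Human dominates",
}


def classify_task(complexity: Level, uncertainty: Level) -> str:
    """Return the relative-strength label for a task's quadrant."""
    return QUADRANTS[(complexity, uncertainty)]
```

For instance, `classify_task(Level.LOW, Level.HIGH)` returns `"Leaning AI"`. How a real task would be coded as low or high is an empirical question that this sketch does not address.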
It is important to consider the task itself in the diffusion of digital discretion and AI. Organizational context matters as well, but the complexity and uncertainty around any given task will also influence whether the task likely remains in the domain of human discretion. A final observation is that the complexity and uncertainty of a task are likely to vary along the contextual factors highlighted by Busch and Henriksen and across different types of governance organizations and organizational units within a governance entity. For example, imagine the structure of a local government of a mid-size city. This city may have a transportation department, a police department, a fire department, an accounting department, a legal department, a human resources department, a library services department, and a parks and recreation department, among many others. While some tasks and task distributions will be similar across these departments, others will vary significantly based upon the work of the particular unit. For example, fire fighters and police officers encounter complex and uncertain task situations in their interactions with the citizenry; such complex and uncertain encounters may result in extreme deviations from normal procedures and limits in the ability to fully analyze the situation. By contrast, the task distribution for work tasks in an accounting office, a human resources department, and a library services department may consist of more tasks with fewer deviations from normal procedures (less complex) and that are more analyzable (less uncertain). It is clear that the scope of street-level bureaucrats’ discretion is decreasing in some domains, and the overall role human discretion plays in administration is changing. Discretion is curtailed and automated across some tasks and enabled and augmented across others. 
This is evident in the contextual analysis provided by Busch and Henriksen (2018) and in the argument provided here on task complexity and uncertainty. In addition, the distribution of types of tasks completed by a governance organization contributes to the degree to which the organization is likely to shift from being a street-level bureaucracy to a screen-level bureaucracy and on to a systems-level bureaucracy. Systems-level bureaucracies are likely composed of relatively more tasks that are analyzable (less uncertain) and that do not deviate from normal procedures (less complex). Without using Perrow’s (1967) framework, Bovens and Zouridis (2002) maintain that factors such as legal and organizational contexts may aid in the creation of systems-level bureaucracies. Bovens and Zouridis pose two examples of systems-level bureaucracies that fit this mold: the monitoring of traffic light violations and the processing of student loan applications. These two tasks are more analyzable (less uncertain) and do not deviate from normal procedures (less complex). It is in this task environment that systems-level bureaucracies have likely evolved, and that AI may play the largest role in impacting human discretion. 

Figure 1. Task complexity, task uncertainty, and discretion.
Low complexity, low uncertainty (few deviations, high analyzability): AI dominates.
Low complexity, high uncertainty (few deviations, low analyzability): leaning AI.
High complexity, low uncertainty (many deviations, high analyzability): leaning human.
High complexity, high uncertainty (many deviations, low analyzability): human dominates.
Note. AI = artificial intelligence.
AI Influence on Systems-Level Bureaucracies and Discretion

Systems-level bureaucracies are characterized by ICT tools that decisively serve the roles of execution, control, and external communication; typical cases require no human interference. This means that many tasks have been fully automated. Systems-level bureaucracies, furthermore, operate within a detailed legal regime where there is no executive discretion. AI, it should be noted, is an advanced ICT tool that is efficient and scalable, diffuses rapidly, and increases anonymity and psychological distance from the task (Brundage et al., 2018). It is high in connectivity and updateability (Harari, 2018), and its capabilities often exceed those of human actors. Thus, while discretion is both curtailed and enabled across bureaucracies (with its overall scope likely decreasing), the legal and organizational contexts in which systems-level bureaucracies arise are contexts that are likely dominated by tasks that are analyzable (low in uncertainty) and do not regularly deviate from the normal procedure (low in complexity), which happen to be the same types of tasks for which AI is likely able to replace human discretion. This suggests that while AI is likely to play a strong role in limiting human discretion across different types of tasks and levels of bureaucracies, systems-level bureaucracies seem poised to first experience both its significant opportunities and its threats to governance and discretion. Three articles provide important arguments concerning this relationship. Bovens and Zouridis (2002) list two particular concerns for these new bureaucracies: (a) new discretionary powers that are given to the designers of the systems that run the systems-level bureaucracy and (b) the potential rigidity of the systems to individual circumstances. 
Busch and Henriksen (2018) assert that the growth in digital discretion seems likely to improve ethical and democratic values for discretionary administrative tasks but presents challenges for professional and relational values. Finally, Barth and Arnold (1999) highlight three potential impacts of AI on administration, which include potential improvements in democratic governance, concerns about the role of ethics and professional competence in discretion, and accountability across the power balance between legislators and administrators and across control of the AI. These arguments are explored in further detail below. Given their overlap, Barth and Arnold (1999) and Busch and Henriksen (2018) are discussed together, with a focus on Barth and Arnold’s (1999) argument. According to Bovens and Zouridis (2002), the transition from street-level bureaucracy to systems-level bureaucracy introduces two concerns for a constitutional state: new discretionary powers granted to the system designers and the digital rigidity to individual circumstances. AI is likely to directly impact both of these. First, AI promises significant improvements in the digital rigidity of ICT systems. AI systems may be able to both improve upon the “zenith of rational and legal authority” as discussed by Bovens and Zouridis and allow for that legal authority to be more personalized, all the while minimizing typical human biases against groups of people.3 However, advanced AI systems present an even tougher challenge on the dimensions of supervision and public accessibility. Many AI systems are described as black box processes, or systems viewed in terms of inputs and outputs without any knowledge of their internal workings, which makes supervision and public accessibility challenging. However, recent work by AI researchers and legal scholars has begun to provide some potential remedies to this lack of transparency (Kleinberg, Ludwig, Mullainathan, & Sunstein, 2019). 
These concerns also pose long-term questions about how best to ensure value alignment (matching AI goals with the values and goals of its designer) and transparent decision-making, a preoccupation of a number of AI scholars, including Nick Bostrom (2014). Barth and Arnold (1999) argue that advances in AI will eventually reach a level at which “machines have the capability to go beyond initial programming and learn to learn” (p. 334). They state that implicit to this ability is the “ability to exercise judgment as the situation changes” (p. 335). At this level of AI, Barth and Arnold believe three themes would be important to consider: First, the ability to program machines with values and motives suggests the potential to improve the rationality of decisions through tools that can apply a known or specified range of values or biases (theme of responsiveness). Second, the ability to develop machines that can sense subtle aspects or changes in the environment suggests tools that can make political or situational assessments (theme of judgment). Finally, machines that can learn to learn independently suggest a tool without precedence that may exceed the capacity of humans to scan the environment, assess situations, and make decisions in a timely manner without human supervision (theme of accountability). (Barth & Arnold, 1999) Barth and Arnold further argue that AI could improve democratic governance, in that it could be programmed to efficiently and effectively carry out the values or policies of political actors. However, the “programmable administrator” (the term used by Barth & Arnold) eliminates the chance for the personal ethics or professional competence of human bureaucrats to curb the excesses of these same politicians. On the theme of judgment, Barth and Arnold reiterate the need to better understand what role professional judgment should play in the administrative discretion function. 
Finally, on the theme of accountability, Barth and Arnold pose two questions: First, would AI systems lessen the reliance of legislators on the bureaucracy, or vice versa (and if so, is this desirable?); and second, does the nature of AI (i.e., the ability to think independently) suggest systems that are potentially beyond the control of those responsible for them (i.e., public officials)? (p. 342) In response to the first question the authors find that it does not seem that the increased technical capacity potentially represented by an AI system would affect the balance of control between legislators or administrators other than the potential to reduce the number of analysts on both sides of the fence. (p. 342) And as for the second question, along with many leading AI thinkers, they state that “there is the distinct danger of AI systems becoming used so much that they become impossible to monitor either because they simply exceed the capacity of humans or the humans lose master of their subject through lack of use” (p. 342). Discretion is growing for system designers within systems-level bureaucracies, who can be both human and AI designers. This discretion, while dependent upon individual circumstances, may still lead to more equitable, ethical, and democratic implementation overall, by allowing for responses that do not overweight individual circumstances (in the direction of either favoritism or discrimination). AI is likely to weaken discretion for professional competence and relational values, which could affect human bureaucrats’ relationships with citizens and the citizens’ relationships with the state. Finally, AI systems can be opaque black box processes, with capacities in some task areas that outpace those of humans, raising concerns of control and accountability. Given these concerns, it is important to proceed carefully with the deployment of AI to replace human discretion. 
As Figure 1 suggests, for tasks that are lower in both complexity and uncertainty, these concerns may be minimized and the use of AI is encouraged, but for tasks that are characterized by more complexity and more uncertainty, the use of AI should be approached with caution. AI has been increasing in capacity and thus in overall intelligence and ability to accomplish discretionary tasks. As AI capacity exceeds that of humans in many task domains, this increase in intelligence could increase the overall quality of discretionary tasks, and thus the overall quality of administration. But even if the overall quality of administration can be improved, AI changes the nature of risks to good governance in significant and important ways. 

Caution and Concern With the Rise of AI

The power of AI should not be underestimated. The Future of Humanity Institute, OpenAI, and other partners published a report noting the potential malicious uses of AI (Brundage et al., 2018). They agree that AI expands existing threats to governance actors, introduces new threats, and changes the typical character of threats. Furthermore, they note six security-relevant properties of AI that contribute to its potential malicious use: AI exhibits a dual-use nature (it has the potential to be used to further both beneficial and nefarious objectives), AI systems are commonly both efficient and scalable, AI systems can exceed human capabilities, AI systems can increase anonymity and psychological distance (from the victim), AI developments lend themselves to rapid diffusion, and AI systems often contain a number of unresolved vulnerabilities (Brundage et al., 2018). These properties lead many to consider AI an existential risk to humanity. An existential risk is “one where an adverse outcome would either annihilate Earth-originating intelligent life or permanently and drastically curtail its potential” (Bostrom, 2002, p. 2). 
Organizations such as OpenAI and the Future of Humanity Institute make it their mission to ensure that AI is used for the benefit of humanity and not its detriment. With these risks in mind, it is important to consider which aims and goals AI should be attempting. Unfortunately, we lack a complete public ethics framework by which to order the values that we would direct AI to maximize. Without such a tool to guide good governance, and given AI’s power for good and evil, it may be appropriate for public administrators to act in a way that minimizes its potential for administrative evil, or unnecessary harm and suffering inflicted upon a population (Adams, 2011). We can appreciate the value of minimizing administrative evil while also realizing that AI will continue to advance. To stay ahead of the risks and abreast of the opportunities, we should take stock, as begun here, of where this technology can viably take us, and its broader implications for the field of PA. As technology continues to progress, the number of tasks at the street level that are too uncertain or too complex for AI will continue to shrink. This suggests a decreased scope of tasks to be performed by human bureaucrats, leaving open the question of what this automation means for organizational outcomes and performance.4 The corollary to this is an overall rise in digital discretion and digital bureaucracy (Busch & Henriksen, 2018). While it is not the purpose of this article to fully describe the nature of the transition to digital bureaucracies, it seems obvious that a clear assessment of public values is needed to complete this analysis. PA has often relied on the professional norms of different groups of its practitioners to address values-centered questions, but both these professionals and their influence are likely to decline in the face of rising digital discretion and digital bureaucracy (Busch & Henriksen, 2018). 
So how do we prioritize these values, across multiple types of service delivery? Should people receive equal treatment under the law? Or should circumstances be taken into account? What about the relative importance of effectiveness, efficiency, and equity? Numerous questions remain about how to maximize the common good while mitigating the possibility of administrative evil, particularly in the presence of such a powerful tool of governance. As more and more tasks continue to be automated within systems-level bureaucracies, what is the overall impact on both discretion and performance? The literature on digital discretion is split, and while evidence suggests there is likely to be a narrowing of discretion, more theoretical and empirical research is needed to better understand the full effects of this constriction. In particular, scholars must better understand the competing roles between human systems designers and their AI counterparts in developing bureaucratic systems that are effective, efficient, and equitable. One theoretical suggestion from this essay is that tasks that are low in complexity and uncertainty are the likeliest candidates for automation by AI, whereas tasks high in complexity and uncertainty should remain under the purview of human discretion. AI should be employed carefully, as it potentially presents an existential risk to humanity. This article also suggests that scholars should consider the effect of AI on the structure of bureaucracy. Bovens and Zouridis (2002) tracked the shift from street-level to screen-level to systems-level for certain executive decision-making organizations. AI is likely to positively impact the performance of organizations that have already become systems-level bureaucracies, although the extent to which this is true should be further examined empirically. However, the impact on bureaucracies that do not fit this characterization is less clear. 
The size, resources, and task distribution of these public organizations all affect the likelihood of AI’s influence, but much more research is needed in this area as well. Finally, the power and versatility of AI beg the question: What goals should it be directed to accomplish? In this way, AI has been described as a dual-use technology: It can be used toward a wide variety of ends, both good and evil. Given this, we need a better understanding of which values of governance should be maximized and the optimal balance among traditional priorities such as effectiveness, efficiency, and equity. The changing character of discretion prompted by the rise of AI is likely to have important ramifications throughout society and the economy, but it is just as likely to vitally influence administration and governance. While this article identifies and addresses many of the theoretical and empirical questions of importance, much more research is needed to understand the influence of AI on discretion and bureaucratic performance and to plan a cohesive and effective response. 

Conclusion

Given the intimate relationship between intelligence and administration, it should not be surprising that AI is encroaching upon the human space of administration and raising a lot of questions, opportunities, and concerns. AI will continue to progress in its capabilities, and it will be up to public administrators and PA scholars to discern how best to manage the rising influence of AI and the waning discretion of street-level bureaucrats. 

Author’s Note

Justin B. Bullock is also affiliated with Taiwan Institute for Governance and Communication Research. 

Declaration of Conflicting Interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article. 

Funding

The author(s) received no financial support for the research, authorship, and/or publication of this article. 

Notes

1. Given the definition of intelligence, it should be clear that it can be used to distinguish between human intelligence and artificial intelligence (AI). Within the fields of computer science, AI, and physics, it is commonly asserted that intelligence is substrate independent. Substrate independence suggests that intelligence, or the ability to accomplish a complex task, can be encoded not just in biological format, as with organic brains, but in nonbiological format, as with computers. 
2. One striking example of this at a systematic level has been found by Mogen Pedersen, Justin Stritch, and Frederik Thuesen (2018), where they find “Overall, our studies support the likelihood that ethnic minority clients will be punished more often for policy infractions than ethnic majority clients—and that caseworker work experience mitigates part of this bias.” 
3. As noted by multiple scholars, this potential is not always realized in practice. Virginia Eubanks (2018) makes a compelling argument for how automation of some tasks has led to decisions that reinforce oppression toward the poor. Andrew Ferguson (2017) has also highlighted a number of challenges with predictive policing and “black” data, in which he highlights challenges for discrimination in predictive policing. 
4. For example, Wenger and Wilkins (2008) highlight how automation of the application for unemployment insurance led to improved outcomes for women. 

ORCID iD

Justin B. Bullock https://orcid.org/0000-0001-6263-2343 

References

Adams, G. B. (2011). The Problem of Administrative Evil in a Culture of Technical Rationality. Public Integrity, 13, 275-286. Barth, T. J., & Arnold, E. (1999). Artificial intelligence and administrative discretion: Implications for public administration. The American Review of Public Administration, 29, 332-351. Bazerman, M. (2002). Judgment in managerial decision making (5th ed.). Hoboken, NJ: John Wiley. Borins, S. (1998). 
Innovating with integrity: How local heroes are transforming American government. Washington, DC: Georgetown University Press. Borins, S. (2008). Innovations in government: Research, recognition, and replication. Washington, DC: Brookings Institution. Bostrom, N. (2002). Existential risks: Analyzing human extinction scenarios and related hazards. Journal of Evolution and Technology, 9, 1-30. Bostrom, N. (2014). Superintelligence. New York, NY: Oxford University Press. Bovens, M., & Zouridis, S. (2002). From street-level to system-level bureaucracies: How information and communication technology is transforming administrative discretion and constitutional control. Public Administration Review, 62, 174-184. Brundage, M., Avin, S., Clark, J., Toner, H., Eckersley, P., Garfinkel, B., . . . Anderson, H. (2018). The malicious use of artificial intelligence: Forecasting, prevention, and mitigation. arXiv preprint arXiv:1802.07228. Buffat, A. (2011). Discretionary Power and Accountability of the Office Bureaucracy: The Taxators of a Unemployment Fund as Implementing Actors (Unpublished doctoral thesis). University of Lausanne, Switzerland. Buffat, A. (2015). Street-level bureaucracy and e-government. Public Management Review, 17, 149-161. Bullock, J. B., Greer, R. A., & O’Toole, L. J. (2019). Managing risks in public organizations: A conceptual foundation and research agenda. Perspectives on Public Management and Governance, 2, 75-87. Bullock, J. B., Hansen, J. R., & Houston, D. J. (2018). Sector differences in employee’s perceived importance of income and job security: Can these be found across the contexts of countries, cultures, and occupations. International Public Management Journal, 21, 243-271. Bullock, J. B., Stritch, J. M., & Rainey, H. G. (2015). International comparisons of public and private employees’ work motives, attitudes, and perceived rewards. Public Administration Review, 73, 479-489. Busch, P. A., & Henriksen, H. Z. (2018). 
Digital discretion: A systematic literature review of ICT and street-level discretion. Information Polity, 23, 3-28. Davenport, C. (2018, June 29). SpaceX is flying an artificially intelligent robot named CIMON to the International Space Station. The Washington Post. Retrieved from https://www.washingtonpost.com/news/the-switch/wp/2018/06/29/spacex-is-flying-an-artificially-intelligent-robot-named-cimon-to-the-international-space-station/?noredirect=on&utm_term=.66652e44fdf8 Dreary, I. J., Penke, L., & Johnson, W. (2010). The neuroscience of human intelligence differences. Nature Reviews Neuroscience, 11, 201-211. Dunn, D. D. (1997). Politics and administration at the top: Lessons from down under. Pittsburgh, PA: University of Pittsburgh Press. Eubanks, V. (2018). Automating inequality: How high-tech tools profile, police, and punish the poor. New York, NY: St. Martin’s Press. Executive Office of the President. (2016). Artificial intelligence, automation, and the economy. Retrieved from https://obamawhitehouse.archives.gov/sites/whitehouse.gov/files/documents/Artificial-Intelligence-Automation-Economy.PDF Executive Office of the President. (2019, February 11). Maintaining American leadership in artificial intelligence (E.O. 13859). Retrieved from https://www.federalregister.gov/documents/2019/02/14/2019-02544/maintaining-american-leadership-in-artificial-intelligence Ferguson, A. (2017). The rise of big data policing: Surveillance, race, and the future of law enforcement. New York: New York University Press. Goldsmith, S., & Eggers, W. D. (2004). Governing by network. Washington, DC: Brookings Institution. Greer, R. A., & Bullock, J. B. (2018). Decreasing improper payments in a complex federal program. Public Administration Review, 78(1), 14-23. Gulick, L. (1937). Notes on the theory of organization. In L. Gulick & L. Urwick (Eds.), Papers on the science of administration (pp. 1-49). New York, NY: Institute of Public Administration. Harari, Y. N. (2016). 
Homo deus: A brief history of tomorrow. New York, NY: Random House. Harari, Y. N. (2018, October). Why technology favors tyranny. The Atlantic. Retrieved from https://www.theatlantic.com/magazine/archive/2018/10/yuval-noah-harari-technology-tyranny/568330/ Helfstein, S., & Manley, I. P. (2018, February). Alpha Currents On the Markets. Morgan Stanley Wealth Management, Global Investment Committee. Retrieved from https://advisor.morganstanley.com/richard.linhart/documents/field/l/li/linhart-richard-steven/227_AlphaCurrents___Artifical_Intelligence_and_Automation.pdf Isett, K., Mergel, I., LeRoux, K., Mischen, P., & Rethemeyer, K. (2011). Networks in public administration scholarship: Understanding where we are and where we need to go. Journal of Public Administration Research and Theory, 21, i157-i173. Jorna, F., & Wagenaar, P. (2007). The “iron cage” strengthened? Discretion and digital discipline. Public Administration, 85, 189-214. Kahneman, D. (2011). Thinking, fast and slow. New York, NY: Farrar, Straus and Giroux. Kahneman, D., & Tversky, A. (1972). Subjective probability: A judgment of representativeness. Cognitive Psychology, 3, 430-454. Kahneman, D., & Tversky, A. (1979). Intuitive prediction: Biases and corrective procedures. Management Science, 12, 313-327. Kleinberg, J., Ludwig, J., Mullainathan, S., & Sunstein, C. R. (2019). Discrimination in the age of algorithms. arXiv:1902.03731. Klitgaard, R. (1988). Controlling corruption. Berkeley: University of California Press. Kurzweil, R. (2005). The singularity is near: When humans transcend biology. New York, NY: Penguin Books. Kurzweil, R. (2013). How to create a mind: The secret of human thought revealed. New York, NY: Penguin Books. Linden, R. M. (1990). From vision to reality: Strategies of successful innovators in government. Charlottesville, VA: LEL Enterprise. Lipsky, M. (1980). Street-level bureaucracy: Dilemmas of the individual in public service. New York, NY: Russell Sage Foundation. 
Meier, K. J., & O’Toole, L. J. (2001). Managerial strategies and behavior in networks: A model with evidence from U.S. public education. Journal of Public Administration Research and Theory, 11, 271-293. Meier, K. J., & O’Toole, L. J. (2002). Public management and organizational performance: The impact of managerial quality. Journal of Public Administration Research and Theory, 21, 629-643. Nilsson, N. J. (2009). The quest for artificial intelligence: A history of ideas and achievements. New York, NY: Cambridge University Press. O’Toole, L. J., & Meier, K. J. (1999). Modeling the impact of public management: Implications of structural context. Journal of Public Administration Research and Theory, 9, 505-526. O’Toole, L. J., & Meier, K. J. (2003). Plus ça change: Public management, personnel stability, and organizational performance. Journal of Public Administration Research and Theory, 13, 43-64. O’Toole, L. J., & Meier, K. J. (2011). Public management: Organizations, governance, and performance. Cambridge, UK: Cambridge University Press. Pedersen, M. J., Stritch, J. M., & Thuesen, F. (2018). Punishment on the frontlines of public service delivery: Client ethnicity and caseworker sanctioning decisions in a Scandinavian welfare state. Journal of Public Administration Research and Theory, 28, 339-354. Perrow, C. (1967). A framework for the comparative analysis of organizations. American Sociological Review, 32, 194-208. Perry, J. L. (1996). Measuring public service motivation: An assessment of construct reliability and validity. Journal of Public Administration Research and Theory, 6, 5-24. Perry, J. L., & Wise, L. R. (1990). The motivational bases of public service. Public Administration Review, 50, 367-373. Piaget, J. (2005). The psychology of intelligence. London, England: Routledge. Rainey, H. G. (2014). Understanding and managing public organizations. San Francisco, CA: Jossey-Bass. Russell, S. J., & Norvig, P. (2016). Artificial intelligence: A modern approach. 
Kuala Lumpur, Malaysia: Pearson. Salamon, L. M. (2002). The tools of Government: A guide to the New Governance. New York: Oxford University Press. Simon, H. A. (1947). Administrative behavior: A study of decision-making processes in administrative organizations. Oxford, UK: Palgrave Macmillan. Simon, H. A. (1997). Administrative behavior: A study of decision-making processes in administrative organizations (4th ed.). New York, NY: Simon & Schuster. Snellen, I. (1998). Street level bureaucracy in an information age. In I. Snellen & W. van de Donk (Eds.), Public administration in an information age: A handbook (pp. 497-508). Amsterdam, The Netherlands: IOS Press. Snellen, I. (2002). Electronic governance: Implications for citizens, politicians and public servants. International Review of Administrative Sciences, 68, 183-198. Tegmark, M. (2017). Life 3.0: Being human in the age of artificial intelligence. New York, NY: Alfred A. Knopf. Topol, E. (2019). Deep medicine: How artificial intelligence can make healthcare human again. New York, NY: Basic Books. Vitalis, A., & Duhaut, N. (2004). NTIC and administrative relationship: from the window relationship to the network relationship. French Review of Public Administration, 110, 315-326. Weller, J.-M. (2006). Poularde farmer must be saved from remote sensing: public concern over administrative work. Policies and Public Management, 24, 109-122. Wenger, J. B., & Wilkins, V. M. (2008). At the discretion of rogue agents: How automation improves women’s outcomes in unemployment insurance. Journal of Public Administration Research and Theory, 19, 313-333. Wilkinson, N. (2008). An introduction to behavioral economics. New York, NY: Palgrave Macmillan. Zuurmond, A. (1998). From bureaucracy to infocracy: Are democratic institutions lagging behind? In I. T. M. W. Snellen & B. H. J. van de Donk (Eds.), Public administration in an information age: A handbook (pp. 259-270). Amsterdam, The Netherlands: IOS Press. 
Author Biography Justin B. Bullock is an assistant professor of Public Service & Administration at The Bush School of Government & Public Service, Texas A&M University. His research interests include public administration, governance, artificial intelligence, decision making, discretion, and motivation.