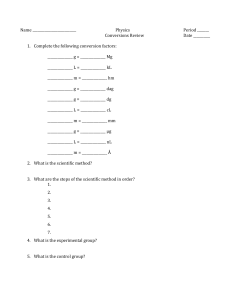

1) Read from the oplog: configure a Kafka source that consumes change messages from the MongoDB oplog and publishes them to a Kafka topic. This topic supplies the messages consumed by the parser.

2) The parser parses each message.

3) The parsed message can optionally undergo the required transformation.

4) The JdbcOutput operator generates the DML and applies it to the database.
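Steps 1 and 4 imply routing each oplog entry to the right kind of DML. The sketch below assumes the Kafka messages still carry MongoDB's oplog fields `op` (`i` insert, `u` update, `d` delete, `c` command, `n` no-op) and `ns` (`<database>.<collection>`). The `OplogRouting` class and `DmlKind` enum are hypothetical, and the envelope that actually reaches Kafka depends on the source connector used.

```java
// Hypothetical routing step for MongoDB oplog entries before DML generation.
final class OplogRouting {
  enum DmlKind { INSERT, UPDATE, DELETE, SKIP }

  private OplogRouting() {}

  // Maps the oplog "op" code to the kind of DML the JdbcOutput stage must apply.
  static DmlKind kindOf(String op) {
    switch (op) {
      case "i": return DmlKind.INSERT;
      case "u": return DmlKind.UPDATE;
      case "d": return DmlKind.DELETE;
      // "c" (command, e.g. DDL) and "n" (no-op) are not row changes, so this sketch skips them.
      default: return DmlKind.SKIP;
    }
  }

  // Extracts the collection from the oplog "ns" field ("<database>.<collection>").
  // Database names cannot contain '.', so the first dot separates the two parts;
  // collection names may themselves contain dots.
  static String collectionOf(String ns) {
    int dot = ns.indexOf('.');
    if (dot <= 0 || dot == ns.length() - 1) {
      throw new IllegalArgumentException("Not a <database>.<collection> namespace: " + ns);
    }
    return ns.substring(dot + 1);
  }
}
```

A real pipeline would also need the `o`/`o2` documents to build the column values and the key of an update or delete; this sketch only shows the routing decision.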

Figure: Replicate MongoDB to Oracle (Kafka Source → Kafka Input → Parser (Jackson, CSV, etc.) → Transform (Encrypt/Modify/Filter) → JdbcOutput).

The Kafka-to-Database Sync application ingests comma-separated string messages from the configured Kafka topic and writes each message as a record to an Oracle database. It uses PoJoEvent as an example schema, which can be replaced with a custom schema to suit specific needs.
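Conceptually, the CSV parsing step turns each comma-separated Kafka message into one POJO. The plain-Java sketch below assumes the field order `accountNumber,name,amount` (taken from the column mapping in `addFieldInfos`). `CsvEvent` is a hypothetical stand-in for PoJoEvent; the actual `CsvParser` operator is driven by its configured schema rather than hand-written code like this.

```java
// Hypothetical stand-in for PoJoEvent; field names follow the JdbcOutput field mapping.
final class CsvEvent {
  final int accountNumber;
  final String name;
  final int amount;

  CsvEvent(int accountNumber, String name, int amount) {
    this.accountNumber = accountNumber;
    this.name = name;
    this.amount = amount;
  }

  // Parses one message such as "1001,alice,250" (assumed field order: accountNumber,name,amount).
  // A plain split does not handle quoted fields that contain commas.
  static CsvEvent fromCsv(String line) {
    String[] parts = line.split(",", -1); // limit -1 keeps trailing empty fields
    if (parts.length != 3) {
      throw new IllegalArgumentException("Expected 3 fields but got " + parts.length + ": " + line);
    }
    return new CsvEvent(
        Integer.parseInt(parts[0].trim()),
        parts[1].trim(),
        Integer.parseInt(parts[2].trim()));
  }
}
```

Rejecting messages with the wrong field count up front keeps malformed records from reaching the database writer.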

```java
import static java.sql.Types.INTEGER;
import static java.sql.Types.VARCHAR;

import java.util.ArrayList;
import java.util.List;

import org.apache.apex.malhar.kafka.KafkaSinglePortInputOperator;
import org.apache.hadoop.conf.Configuration;

import com.datatorrent.api.Context;
import com.datatorrent.api.DAG;
import com.datatorrent.api.StreamingApplication;
import com.datatorrent.api.annotation.ApplicationAnnotation;
import com.datatorrent.contrib.parser.CsvParser;
import com.datatorrent.lib.db.jdbc.JdbcFieldInfo;
import com.datatorrent.lib.db.jdbc.JdbcPOJOInsertOutputOperator;
import com.datatorrent.lib.db.jdbc.JdbcTransactionalStore;
import com.datatorrent.lib.util.FieldInfo;

@ApplicationAnnotation(name = "Kafka-to-Database-Sync")
public class Application implements StreamingApplication
{
  @Override
  public void populateDAG(DAG dag, Configuration conf)
  {
    KafkaSinglePortInputOperator kafkaInputOperator = dag.addOperator("kafkaInput",
        KafkaSinglePortInputOperator.class);
    CsvParser csvParser = dag.addOperator("csvParser", CsvParser.class);
    JdbcPOJOInsertOutputOperator jdbcOutputOperator = dag.addOperator("JdbcOutput",
        new JdbcPOJOInsertOutputOperator());

    /*
     * Custom field mapping (DB column name -> POJO field expression) provided to the JdbcOutput operator.
     */
    JdbcTransactionalStore outputStore = new JdbcTransactionalStore();
    jdbcOutputOperator.setStore(outputStore);
    jdbcOutputOperator.setFieldInfos(addFieldInfos());

    /*
     * Connect the operators and use parallel partitioning on the input ports, so each
     * kafkaInput partition feeds its own csvParser and JdbcOutput partition.
     */
    dag.addStream("record", kafkaInputOperator.outputPort, csvParser.in);
    dag.addStream("pojo", csvParser.out, jdbcOutputOperator.input);
    dag.setInputPortAttribute(csvParser.in, Context.PortContext.PARTITION_PARALLEL, true);
    dag.setInputPortAttribute(jdbcOutputOperator.input, Context.PortContext.PARTITION_PARALLEL, true);

    /*
     * To add custom logic to the DAG, add your operator with dag.addOperator and
     * connect it with dag.addStream.
     *
     * For example, to insert a transformation operator
     * (kafkaInput --> csvParser --> transform --> JdbcOutput):
     *
     *   TransformOperator transform = dag.addOperator("transform", new TransformOperator());
     *   Map<String, String> expMap = Maps.newHashMap();
     *   expMap.put("name", "{$.name}.toUpperCase()");
     *   transform.setExpressionMap(expMap);
     *
     * and replace the "pojo" stream above with:
     *
     *   dag.addStream("pojo", csvParser.out, transform.input);
     *   dag.addStream("transformed", transform.output, jdbcOutputOperator.input);
     *   dag.setInputPortAttribute(transform.input, Context.PortContext.PARTITION_PARALLEL, true);
     *
     * The TUPLE_CLASS port attributes (the POJO class on each port) are set in the
     * XML configuration files; see also ApplicationTest.java.
     */
  }

  /**
   * Maps database columns to POJO fields; adjust this for a custom schema.
   * The last argument is the column's java.sql.Types constant.
   */
  private List<JdbcFieldInfo> addFieldInfos()
  {
    List<JdbcFieldInfo> fieldInfos = new ArrayList<>();
    fieldInfos.add(new JdbcFieldInfo("account_no", "accountNumber", FieldInfo.SupportType.INTEGER, INTEGER));
    fieldInfos.add(new JdbcFieldInfo("name", "name", FieldInfo.SupportType.STRING, VARCHAR));
    fieldInfos.add(new JdbcFieldInfo("amount", "amount", FieldInfo.SupportType.INTEGER, INTEGER));
    return fieldInfos;
  }
}
```
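The field mapping pairs each database column with a POJO field. As a rough illustration of what such a mapping implies, the sketch below builds the parameterized INSERT for the three mappings used in the application and reads the field values in binding order. `MappedInsert`, its nested `Row` class, and the `accounts` table name are hypothetical; this is not the operator's internal code.

```java
import java.lang.reflect.Field;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration of how a "DB column -> POJO field" mapping drives a JDBC insert.
final class MappedInsert {
  // Hypothetical POJO with the fields used by the application's field mapping.
  static final class Row {
    final int accountNumber;
    final String name;
    final int amount;

    Row(int accountNumber, String name, int amount) {
      this.accountNumber = accountNumber;
      this.name = name;
      this.amount = amount;
    }
  }

  private MappedInsert() {}

  // Same column -> field pairs as the application; LinkedHashMap keeps the order stable.
  static Map<String, String> exampleMapping() {
    Map<String, String> m = new LinkedHashMap<>();
    m.put("account_no", "accountNumber");
    m.put("name", "name");
    m.put("amount", "amount");
    return m;
  }

  // Builds "INSERT INTO <table> (c1, c2, ...) VALUES (?, ?, ...)" in mapping order.
  static String sql(String table, Map<String, String> columnToField) {
    String cols = String.join(", ", columnToField.keySet());
    List<String> marks = new ArrayList<>();
    for (int i = 0; i < columnToField.size(); i++) {
      marks.add("?");
    }
    return "INSERT INTO " + table + " (" + cols + ") VALUES (" + String.join(", ", marks) + ")";
  }

  // Reads each mapped field by name; the i-th value binds to the i-th '?'.
  static List<Object> values(Object pojo, Map<String, String> columnToField) {
    List<Object> result = new ArrayList<>();
    for (String fieldName : columnToField.values()) {
      try {
        Field f = pojo.getClass().getDeclaredField(fieldName);
        f.setAccessible(true);
        result.add(f.get(pojo));
      } catch (ReflectiveOperationException ex) {
        throw new IllegalArgumentException("Cannot read field " + fieldName, ex);
      }
    }
    return result;
  }
}
```

Because column order and value order come from the same ordered map, a column can never be bound to the wrong field.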

A proof of concept (PoC) is needed to verify the following:

Does the application scale linearly with the number of poller partitions?

Is the application fault tolerant, i.e. can it withstand node and cluster outages without data loss?

Is the application highly performant, i.e. can it process data as fast as the network allows?
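For the scaling question, one bound is worth keeping in mind: within a Kafka consumer group each topic partition is read by at most one consumer, so input partitions beyond the topic's partition count receive no data, and with PARTITION_PARALLEL each input partition brings its own parser and writer partition. The round-robin assignment below is a hypothetical illustration of that bound, not the Apex Kafka operator's actual partitioning logic.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical round-robin assignment of Kafka topic partitions to operator partitions.
final class PartitionAssignment {
  private PartitionAssignment() {}

  // Returns, for each operator partition, the Kafka partition ids it reads.
  static List<List<Integer>> assign(int kafkaPartitions, int operatorPartitions) {
    if (kafkaPartitions < 0 || operatorPartitions <= 0) {
      throw new IllegalArgumentException("invalid partition counts");
    }
    List<List<Integer>> result = new ArrayList<>();
    for (int i = 0; i < operatorPartitions; i++) {
      result.add(new ArrayList<>());
    }
    for (int p = 0; p < kafkaPartitions; p++) {
      result.get(p % operatorPartitions).add(p);
    }
    return result;
  }

  // Counts operator partitions that actually receive data; the rest stay idle.
  static int busyPartitions(int kafkaPartitions, int operatorPartitions) {
    int busy = 0;
    for (List<Integer> owned : assign(kafkaPartitions, operatorPartitions)) {
      if (!owned.isEmpty()) {
        busy++;
      }
    }
    return busy;
  }
}
```

In a PoC, throughput measured at 1, 2, 4, ... input partitions can only be expected to grow roughly in proportion while the partition count stays at or below the topic's partition count.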

Custom logic that delivers business value can be added as an operator in the DAG, without having to handle database connectivity or the operational details of the database poller and the database writer.
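The Transform step (Encrypt/Modify/Filter) is where such custom logic goes. The plain-Java sketch below mirrors the commented-out `TransformOperator` example, which upper-cases `name` with `{$.name}.toUpperCase()`, and adds a filter. The `NameTransform` and `Event` classes and the negative-amount rule are hypothetical; in the application this logic would live in an operator connected with `dag.addStream`.

```java
import java.util.Locale;
import java.util.Optional;

// Hypothetical transform step combining a filter and a modification.
final class NameTransform {
  // Hypothetical record shape; field names follow the JdbcOutput field mapping.
  static final class Event {
    final int accountNumber;
    final String name;
    final int amount;

    Event(int accountNumber, String name, int amount) {
      this.accountNumber = accountNumber;
      this.name = name;
      this.amount = amount;
    }
  }

  private NameTransform() {}

  // Filter: drops records with a negative amount (an illustrative rule, not from the source).
  // Modify: upper-cases name, like {$.name}.toUpperCase(); Locale.ROOT keeps casing
  // independent of the JVM's default locale.
  static Optional<Event> apply(Event e) {
    if (e.amount < 0) {
      return Optional.empty();
    }
    return Optional.of(new Event(e.accountNumber, e.name.toUpperCase(Locale.ROOT), e.amount));
  }
}
```

Returning an empty `Optional` for filtered records keeps "drop" and "modify" in one function, which maps naturally onto an operator that emits zero or one tuple per input.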

The only configuration the user needs to provide is the source Kafka broker list, the database connection details, and the target table.
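A small settings check makes that minimal configuration concrete. The sketch below collects the broker list, the JDBC URL, and the table from `java.util.Properties` and fails fast if any is missing. The property keys and the `SyncSettings` class are hypothetical; in the Apex application these values are supplied as operator properties in the XML configuration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Hypothetical holder for the minimal settings named in the text.
final class SyncSettings {
  // Hypothetical keys; the real application sets operator properties in XML.
  static final String BROKERS = "kafka.brokers";
  static final String JDBC_URL = "jdbc.url";
  static final String TABLE = "jdbc.table";

  final List<String> brokers;
  final String jdbcUrl;
  final String table;

  private SyncSettings(List<String> brokers, String jdbcUrl, String table) {
    this.brokers = brokers;
    this.jdbcUrl = jdbcUrl;
    this.table = table;
  }

  // Fails fast, reporting every missing key in a single message.
  static SyncSettings from(Properties props) {
    List<String> missing = new ArrayList<>();
    for (String key : new String[] {BROKERS, JDBC_URL, TABLE}) {
      String value = props.getProperty(key);
      if (value == null || value.trim().isEmpty()) {
        missing.add(key);
      }
    }
    if (!missing.isEmpty()) {
      throw new IllegalArgumentException("Missing required settings: " + missing);
    }
    // Comma-separated host:port list, e.g. "host1:9092,host2:9092".
    List<String> brokers = new ArrayList<>();
    for (String b : props.getProperty(BROKERS).split(",")) {
      if (!b.trim().isEmpty()) {
        brokers.add(b.trim());
      }
    }
    if (brokers.isEmpty()) {
      throw new IllegalArgumentException("No Kafka brokers listed in " + BROKERS);
    }
    return new SyncSettings(brokers, props.getProperty(JDBC_URL).trim(), props.getProperty(TABLE).trim());
  }
}
```

For example, `kafka.brokers=host1:9092,host2:9092`, `jdbc.url=jdbc:oracle:thin:@//dbhost:1521/ORCL`, and `jdbc.table=ACCOUNTS` would pass the check; omitting the URL and table reports both keys at once.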

This enterprise-grade application template is intended to significantly reduce time to market and operating costs.