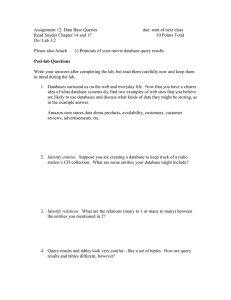

Relational Queries to Data Mining

Most people have Data from which they want information.

So, most people need DBMSs whether they know it or not.

A major component of any DBMS is the query processor.

Queries can range from structured to unstructured:

Relational querying: simple searching and aggregating (SELECT FROM WHERE); complex queries (nested, EXISTS, ...)

FUZZY queries (e.g., BLAST searches, ...)

OLAP (rollup, drilldown, slice/dice, ...)

Machine Learning: supervised (classification, regression); unsupervised (clustering)

Data Mining: association rule mining

Although we looked fairly closely at the structured end of this spectrum, much research is yet to be done on that end to solve the problem of delivering standard workload answers with low response times and high throughput (D. DeWitt, ACM SIGMOD’02 plenary symposium).

On the Data Mining end, we have barely scratched the surface. (But those scratches have made the difference between becoming the world’s biggest corporation and filing for bankruptcy: Walmart vs. KMart.)

Some Vertical DBMS approaches

BSM: A Bit Level Decomposition Storage Model

A model of query optimization of all types

• Vertical partitioning has been studied within the context of both centralized database systems and distributed ones. It is a good strategy when most queries retrieve a small number of columns. The decomposition of a relation also permits a number of transactions to execute concurrently. Copeland et al. presented an attribute-level decomposition storage model (DSM) [CK85] that stores each column of a relational table in a separate binary table. The DSM showed comparable performance.

• Beyond attribute-level decomposition, Wong et al. further took advantage of encoding attribute values using a small number of bits to reduce the storage space [WLO+85]. In this paper, we decompose the attributes of relational tables to the bit-position level, apply an SPJ query optimization strategy to them, store the query results in one relational table, and finally mine the data using our P-tree methods.

• Our method offers these advantages:

– (1) With vertical partitioning, we read only what we need. This makes hardware caching work well and greatly increases the effectiveness of the I/O device.

– (2) We encode attribute values in bit-vector format, which makes compression easy.

– (3) SPJ queries can be formulated as Boolean expressions, which facilitates fast implementation in hardware.

– (4) Our model fits not only query processing but data mining as well.
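The bit-position decomposition described above can be sketched in a few lines. This is only an illustrative sketch using plain Python lists as uncompressed bit vectors; the helper names `bit_slices` and `reassemble` are hypothetical and are not part of any P-tree library.

```python
def bit_slices(values, width):
    """Decompose an integer column into `width` vertical bit slices,
    most significant bit first."""
    return [[(v >> b) & 1 for v in values] for b in range(width - 1, -1, -1)]


def reassemble(slices):
    """Rebuild the original integers from their bit slices (the encoding is lossless)."""
    width = len(slices)
    rows = len(slices[0])
    return [
        sum(slices[i][row] << (width - 1 - i) for i in range(width))
        for row in range(rows)
    ]


# A 2-bit key column with the values 0..3 (illustrative data).
course_ids = [0, 1, 2, 3]
slices = bit_slices(course_ids, 2)   # slices[0] is the high-order slice
restored = reassemble(slices)
```

Each slice is a separate vertical bit vector, so a query that touches one bit position reads only that slice.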

• [CK85] G. Copeland and S. Khoshafian. A Decomposition Storage Model. Proc. ACM Int. Conf. on Management of Data (SIGMOD’85), pp. 268-279, Austin, TX, May 1985.

• [WLO+85] H. K. T. Wong, H.-F. Liu, F. Olken, D. Rotem, and L. Wong. Bit Transposed Files. Proc. Int. Conf. on Very Large Data Bases (VLDB’85), pp. 448-457, Stockholm, Sweden, 1985.

SPJ Query Optimization Strategies: One-table Selections

• There are two categories of queries in one-table selections: equality queries and range queries. Most techniques [WLO+85, OQ97, CI98] used to optimize them employ encoding schemes: equality encoding and range encoding. Chan and Ioannidis [CI99] defined a more general query format called the interval query. An interval query on attribute A is a query of the form “x≤A≤y” or “NOT (x≤A≤y)”. It reduces to an equality query when x=y and to a range query when x or y is the minimum or maximum of the domain of A.

• We defined interval P-trees in previous work [DKR+02]; each is equivalent to the bit vector of the corresponding interval. So for each restriction of the form above, we have one corresponding interval P-tree. The result of ANDing all the corresponding interval P-trees represents all the rows that satisfy the conjunction of all the restrictions in the WHERE clause.
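A minimal sketch of the idea above, assuming uncompressed bit vectors stand in for interval P-trees. The interval mask is built naively as the OR of equality masks, which is not the encoding scheme of [CI99]; all function names and the sample data are hypothetical.

```python
def bit_slices(values, width):
    """Vertical bit slices of an integer column, most significant bit first."""
    return [[(v >> b) & 1 for v in values] for b in range(width - 1, -1, -1)]


def equality_mask(slices, value):
    """Rows where A == value: AND each slice, or its complement, per bit of value."""
    width = len(slices)
    mask = [1] * len(slices[0])
    for i, sl in enumerate(slices):
        want = (value >> (width - 1 - i)) & 1
        mask = [m & int(bit == want) for m, bit in zip(mask, sl)]
    return mask


def interval_mask(slices, lo, hi):
    """Rows where lo <= A <= hi, built naively as the OR of equality masks."""
    mask = [0] * len(slices[0])
    for v in range(lo, hi + 1):
        mask = [m | e for m, e in zip(mask, equality_mask(slices, v))]
    return mask


def and_masks(*masks):
    """Conjunction of several restrictions: AND the masks position by position."""
    return [int(all(bits)) for bits in zip(*masks)]


# Hypothetical data: a 3-bit attribute A and a 1-bit attribute B over six rows.
A = bit_slices([5, 2, 7, 3, 0, 6], 3)
B = bit_slices([1, 0, 1, 1, 0, 0], 1)

in_range = interval_mask(A, 2, 5)      # restriction 2 <= A <= 5
b_is_one = equality_mask(B, 1)         # restriction B = 1
result = and_masks(in_range, b_is_one)  # rows satisfying both restrictions
```

The final mask marks exactly the rows that satisfy the whole WHERE clause, one bit per row.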

• [CI98] C.Y. Chan and Y.E. Ioannidis. Bitmap Index Design and Evaluation. Proc. ACM Intl. Conf. on Management of Data (SIGMOD’98), pp. 355-366, Seattle, WA, June 1998.

• [CI99] C.Y. Chan and Y.E. Ioannidis. An Efficient Bitmap Encoding Scheme for Selection Queries. Proc. ACM Intl. Conf. on Management of Data (SIGMOD’99), pp. 216-226, Philadelphia, PA, 1999.

• [DKR+02] Q. Ding, M. Khan, A. Roy, and W. Perrizo. The P-tree algebra. Proc. ACM Symposium on Applied Computing (SAC 2002), pp. 426-431, Madrid, Spain, 2002.

• [OQ97] P. O’Neil and D. Quass. Improved Query Performance with Variant Indexes. Proc. ACM Int. Conf. on Management of Data (SIGMOD’97), pp. 38-49, Tucson, AZ, May 1997.

Vertical Select-Project-Join (SPJ) Queries

A Select-Project-Join query has joins, selections and projections. Typically there is a central fact relation (e.g., Enrollments

or E below) to which several dimension relations are to be joined (e.g., Student(S), Course(C) below).

A bit encoding is shown in reduced font italics for certain attributes, e.g., gen=gender, s=Student#, etc.

S|s____|name_|gen| C|c____|name|st|term|
 |0 000|CLAY |M 0|  |0 000|BI |ND|F 0|
 |1 001|THAIS|M 0|  |1 001|DB |ND|S 1|
 |2 010|GOOD |F 1|  |2 010|DM |NJ|S 1|
 |3 011|BAID |F 1|  |3 011|DS |ND|F 0|
 |4 100|PERRY|M 0|  |4 100|SE |NJ|S 1|
 |5 101|JOAN |F 1|  |5 101|AI |ND|F 0|

E|s____|c____|grade|
 |0 000|1 001|B 10|
 |0 000|0 000|A 11|
 |3 011|1 001|A 11|
 |3 011|3 011|D 00|
 |1 001|3 011|D 00|
 |1 001|0 000|B 10|
 |2 010|2 010|B 10|
 |2 010|3 011|A 11|
 |4 100|4 100|B 10|
 |5 101|5 101|B 10|

Vertical bit sliced (uncompressed P-trees) attributes stored as:

S.s2: 0 0 1 1 0 0
S.s1: 0 0 0 0 1 1
S.s0: 0 1 0 1 0 1
S.g:  0 0 0 1 1 1
C.c2: 0 0 1 1 0 0
C.c1: 0 0 0 0 1 1
C.c0: 0 1 0 1 0 1
C.t:  0 1 1 0 1 0
E.s2: 0 0 0 0 0 0 0 0 1 1
E.s1: 0 0 0 0 1 1 1 1 0 0
E.s0: 0 0 1 1 1 1 0 0 0 1
E.c2: 0 0 0 0 0 0 0 0 1 1
E.c1: 0 0 1 0 1 1 1 0 0 0
E.c0: 1 0 1 0 1 1 0 1 0 1
E.g1: 1 1 0 1 1 0 1 1 1 1
E.g0: 0 1 0 0 1 0 0 1 0 0

Vertical (un-bit-sliced) attributes are stored:

S.name: |CLAY |THAIS|GOOD |BAID |PERRY|JOAN |
C.name: |BI |DB |DM |DS |SE |AI |
C.st:   |ND|ND|NJ|ND|NJ|ND|

When one or more joins are required and there is more than one join attribute, e.g., the following SPJ query on the Student (S), Course (C), Offerings (O), Rooms (R), and Enrollments (E) files (next 5 slides):

SELECT S.n, C.n
FROM S, C, O, R, E
WHERE S.s=E.s & C.c=O.c & O.o=E.o & O.r=R.r
& S.g=M & C.r=2
& E.g=A
& R.c=20;

S|s____|n|gen|
 |0 000|A|M|
 |1 001|T|M|
 |2 010|S|F|
 |3 011|B|F|
 |4 100|C|M|
 |5 101|J|F|

C|c___|n|cred|
 |0 00|B|1 01|
 |1 01|D|3 11|
 |2 10|M|3 11|
 |3 11|S|2 10|

E|s____|o____|grade|
 |0 000|1 001|2 10|
 |0 000|0 000|3 11|
 |3 011|1 001|3 11|
 |3 011|3 011|0 00|
 |1 001|3 011|0 00|
 |1 001|0 000|2 10|
 |2 010|2 010|2 10|
 |2 010|7 111|3 11|
 |4 100|4 100|2 10|
 |5 101|5 101|2 10|

O|o____|c___|r___|
 |0 000|0 00|1 01|
 |1 001|0 00|1 01|
 |2 010|1 01|0 00|
 |3 011|1 01|1 01|
 |4 100|2 10|0 00|
 |5 101|2 10|2 10|
 |6 110|2 10|3 11|
 |7 111|3 11|2 10|

R|r___|cap__|
 |0 00|30 11|
 |1 01|20 10|
 |2 10|30 11|
 |3 11|10 01|

Vertical attributes (in the query, S.g is gen, C.r is cred, E.g is grade, and R.c is cap):

S.s2: 0 0 1 1 0 0
S.s1: 0 0 0 0 1 1
S.s0: 0 1 0 1 0 1
S.n:  A T S B C J
S.g:  M M F F M F

C.c1: 0 0 1 1
C.c0: 0 1 0 1
C.n:  B D M S
C.r1: 0 1 1 1
C.r0: 1 1 1 0

E.s2: 0 0 0 0 0 0 0 0 1 1
E.s1: 0 0 1 1 0 0 1 1 0 0
E.s0: 0 0 1 1 1 1 0 0 0 1
E.o2: 0 0 0 0 0 0 0 1 1 1
E.o1: 0 0 0 1 1 0 1 1 0 0
E.o0: 1 0 1 1 1 0 0 1 0 1
E.g1: 1 1 1 0 0 1 1 1 1 1
E.g0: 0 1 1 0 0 0 0 1 0 0

O.o2: 0 0 0 0 1 1 1 1
O.o1: 0 0 1 1 0 0 1 1
O.o0: 0 1 0 1 0 1 0 1
O.c1: 0 0 0 0 1 1 1 1
O.c0: 0 0 1 1 0 0 0 1
O.r1: 0 0 0 0 0 1 1 1
O.r0: 1 1 0 1 0 0 1 0

R.r1: 0 0 1 1
R.r0: 0 1 0 1
R.c1: 1 1 1 0
R.c0: 1 0 1 1

For selections, S.g=M, C.r=2, E.g=A, and R.c=20, create selection masks (note that C.r=2 is coded in binary as 10b).

S.s2: 0 0 1 1 0 0
S.s1: 0 0 0 0 1 1
S.s0: 0 1 0 1 0 1
S.n:  A T S B C J
S.g:  M M F F M F
SM:   1 1 0 0 1 0

C.c1:  0 0 1 1
C.c0:  0 1 0 1
C.n:   B D M S
C.r1:  0 1 1 1
C.r0:  1 1 1 0
C'.r0: 0 0 0 1
Cr2:   0 0 0 1

O.o2: 0 0 0 0 1 1 1 1
O.o1: 0 0 1 1 0 0 1 1
O.o0: 0 1 0 1 0 1 0 1
O.c1: 0 0 0 0 1 1 1 1
O.c0: 0 0 1 1 0 0 0 1
O.r1: 0 0 0 0 0 1 1 1
O.r0: 1 1 0 1 0 0 1 0

R.r1:  0 0 1 1
R.r0:  0 1 0 1
R.c1:  1 1 1 0
R.c0:  1 0 1 1
R'.c0: 0 1 0 0
Rc20:  0 1 0 0

E.s2: 0 0 0 0 0 0 0 0 1 1
E.s1: 0 0 1 1 0 0 1 1 0 0
E.s0: 0 0 1 1 1 1 0 0 0 1
E.o2: 0 0 0 0 0 0 0 1 1 1
E.o1: 0 0 0 1 1 0 1 1 0 0
E.o0: 1 0 1 1 1 0 0 1 0 1
EgA:  0 1 1 0 0 0 0 1 0 0
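The four selection masks can be reproduced with plain bitwise operations. The sketch below assumes uncompressed bit vectors as Python lists, that grade A is coded 11b and capacity 20 is coded 10b (as in the tables), and uses hypothetical helper names `AND` and `NOT`.

```python
def AND(a, b):
    """Position-wise AND of two bit vectors."""
    return [x & y for x, y in zip(a, b)]


def NOT(a):
    """Position-wise complement of a bit vector."""
    return [1 - x for x in a]


# Vertical attributes copied from the slides.
S_g = ["M", "M", "F", "F", "M", "F"]
C_r1, C_r0 = [0, 1, 1, 1], [1, 1, 1, 0]
E_g1 = [1, 1, 1, 0, 0, 1, 1, 1, 1, 1]
E_g0 = [0, 1, 1, 0, 0, 0, 0, 1, 0, 0]
R_c1, R_c0 = [1, 1, 1, 0], [1, 0, 1, 1]

SM = [1 if g == "M" else 0 for g in S_g]   # S.g = M
Cr2 = AND(C_r1, NOT(C_r0))                 # C.r = 2  (10b)
EgA = AND(E_g1, E_g0)                      # E.g = A  (11b)
Rc20 = AND(R_c1, NOT(R_c0))                # R.c = 20 (10b)
```

A bit that must be 1 contributes the slice itself and a bit that must be 0 contributes its complement, so each equality predicate costs one multiway AND.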

Apply selection masks (numeric values are zeroed out; other values are blanked out, shown here as -).

S.s2: 0 0 0 0 0 0
S.s1: 0 0 0 0 1 0
S.s0: 0 1 0 0 0 0
S.n:  A T - - C -

C.c1: 0 0 0 1
C.c0: 0 0 0 1
C.n:  - - - S

O.o2: 0 0 0 0 1 1 1 1
O.o1: 0 0 1 1 0 0 1 1
O.o0: 0 1 0 1 0 1 0 1
O.c1: 0 0 0 0 1 1 1 1
O.c0: 0 0 1 1 0 0 0 1
O.r1: 0 0 0 0 0 1 1 1
O.r0: 1 1 0 1 0 0 1 0

R.r1: 0 0 0 0
R.r0: 0 1 0 0

E.g1: 1 1 1 0 0 1 1 1 1 1
E.g0: 0 1 1 0 0 0 0 1 0 0
E.s2: 0 0 0 0 0 0 0 0 0 0
E.s1: 0 0 1 0 0 0 0 1 0 0
E.s0: 0 0 1 0 0 0 0 0 0 0
E.o2: 0 0 0 0 0 0 0 1 0 0
E.o1: 0 0 0 0 0 0 0 1 0 0
E.o0: 0 0 1 0 0 0 0 1 0 0

For the joins S.s=E.s, C.c=O.c, O.o=E.o, and O.r=R.r, one approach is to follow an indexed nested loop like method (note that the P-trees themselves are self-indexing).

The join O.r=R.r is simply part of a selection on O (R neither contributes output nor participates in any further operations). Use the Rc20-masked R as the inner relation and O as the r-indexed outer relation to produce a further selection mask for O.

Rc20: 0 1 0 0
R.r1: 0 0 0 0
R.r0: 0 1 0 0

O.o2:  0 0 0 0 1 1 1 1
O.o1:  0 0 1 1 0 0 1 1
O.o0:  0 1 0 1 0 1 0 1
O.c1:  0 0 0 0 1 1 1 1
O.c0:  0 0 1 1 0 0 0 1
O.r1:  0 0 0 0 0 1 1 1
O.r0:  1 1 0 1 0 0 1 0
O'.r0: 0 0 1 0 1 1 0 1

Get 1st R.r value, 01b. Mask the corresponding O tuples, P_O.r1 ^ P'_O.r0:

OM: 0 0 0 0 0 1 0 1

This is the only R.r value (if there were more, one would do the same for each, then OR those masks to get the final O-mask).

Next, we apply the O-mask, OM, to O:

O.o2: 0 0 0 0 0 1 0 1
O.o1: 0 0 0 0 0 0 0 1
O.o0: 0 0 0 0 0 1 0 1
O.c1: 0 0 0 0 0 1 0 1
O.c0: 0 0 0 0 0 0 0 1
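The "one mask per inner value, then OR" step can be sketched as follows. This is an illustrative sketch with hypothetical function names; the demonstration uses the bit pattern (1, 0), which is the pattern that the expression P_O.r1 ^ P'_O.r0 corresponds to, so that the result can be compared with the OM vector shown.

```python
def value_mask(slices, pattern):
    """AND the slices (complemented where the pattern bit is 0) for one value."""
    mask = [1] * len(slices[0])
    for sl, want in zip(slices, pattern):
        mask = [m & (b if want else 1 - b) for m, b in zip(mask, sl)]
    return mask


def join_mask(slices, patterns):
    """OR together the value masks of every surviving inner-relation value."""
    out = [0] * len(slices[0])
    for pattern in patterns:
        out = [o | m for o, m in zip(out, value_mask(slices, pattern))]
    return out


# O.r bit slices copied from the slides.
O_r1 = [0, 0, 0, 0, 0, 1, 1, 1]
O_r0 = [1, 1, 0, 1, 0, 0, 1, 0]

OM = join_mask([O_r1, O_r0], [(1, 0)])               # a single inner value
OM_two = join_mask([O_r1, O_r0], [(1, 0), (0, 0)])   # two values, masks ORed
```

With several surviving inner values the loop simply accumulates their masks, which is the OR described above.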

For the final 3 joins, C.c=O.c, O.o=E.o, and E.s=S.s, the same indexed nested loop like method can be used (- marks a blanked-out value).

C.c1: 0 0 0 1
C.c0: 0 0 0 1
C.n:  - - - S

Get 1st masked C.c value, 11b. Mask corresponding O tuples: P_O.c1 ^ P_O.c0

O.o2: 0 0 0 0 0 1 0 1
O.o1: 0 0 0 0 0 0 0 1
O.o0: 0 0 0 0 0 1 0 1
O.c1: 0 0 0 0 0 1 0 1
O.c0: 0 0 0 0 0 0 0 1
OM:   0 0 0 0 0 0 0 1

Get 1st masked O.o value, 111b. Mask corresponding E tuples: P_E.o2 ^ P_E.o1 ^ P_E.o0

E.s2: 0 0 0 0 0 0 0 0 0 0
E.s1: 0 0 1 0 0 0 0 1 0 0
E.s0: 0 0 1 0 0 0 0 0 0 0
E.o2: 0 0 0 0 0 0 0 1 0 0
E.o1: 0 0 0 0 0 0 0 1 0 0
E.o0: 0 0 1 0 0 0 0 1 0 0
EM:   0 0 0 0 0 0 0 1 0 0

Get 1st masked E.s value, 010b. Mask corresponding S tuples: P'_S.s2 ^ P_S.s1 ^ P'_S.s0

S.s2:  0 0 0 0 0 0
S'.s2: 1 1 - - 1 -
S.s1:  0 0 0 0 1 0
S.s0:  0 1 0 0 0 0
S'.s0: 1 0 - - 1 -
S.n:   A T - - C -
SM:    0 0 0 0 1 0

Get S.n-value(s), C, pair it with C.n-value(s), S, and output the concatenation C.n S.n:

S C

There was just one masked tuple at each stage in this example. In general, one would loop through the masked portion of the extant domain at each level (thus, Indexed Horizontal Nested Loop or IHNL).

Having done the query tree sequentially (selections first, then joins and projections), it appears that the entire query tree could be done in one combined step by

- looping through the masked C tuples,
- for each C.n value, determining by logical operations whether there is an S.n value that should be paired with it,
- outputting those S.n, C.n pair(s), if any, else going to the next masked C.n value.

Does this lead to a one-pass vertical query optimizer?!?!?!

Can the indexed nested loop like algorithm be modified to loop horizontally (across bit positions, rather than down tuples)?

DISTINCT Keyword, GROUP BY Clause, ORDER BY Clause, HAVING Clause and Aggregate Operations

• Duplicate elimination after a projection (SQL DISTINCT keyword) is one of the most expensive operations in query optimisation. In general, it is as expensive as the join operation. However, in our approach, it can be done automatically while forming the output tuples (since that is done in order). While forming all output records for a particular value of the ORDER BY attribute, duplicates can be easily eliminated without the need for an expensive algorithm.

• The ORDER BY and GROUP BY clauses are very commonly used in queries and can require sorting the output relation. However, in our approach, if the central relation is chosen to be the one with the sort attribute and the surrogation is according to the attribute order (typically the case; always the case for numeric attributes), then the final output records can be put together and aggregated in the requested order without a separate sort step, at no additional cost. Aggregation operators such as COUNT, SUM, AVG, MAX, and MIN can be implemented without additional cost during the output formation step, and any HAVING decision can be made as output records are being composed as well. (See Yue Cui’s Master’s thesis in the NDSU library for vertical aggregation computations using P-trees.)

• If the COUNT aggregate is requested by itself, we note that P-trees automatically provide the full counts for any predicate with just one multiway AND operation.
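As a rough illustration of the COUNT remark, the sketch below counts the rows satisfying a predicate by ANDing bit vectors and counting the 1-bits. It assumes uncompressed bit vectors in place of P-trees, and `root_count` is a hypothetical helper name.

```python
def root_count(*slices):
    """Count the rows in which every given bit vector holds a 1
    (one multiway AND followed by a count of 1-bits)."""
    return sum(int(all(bits)) for bits in zip(*slices))


# E.g1 and E.g0 copied from the five-relation example; grade A is coded 11b.
E_g1 = [1, 1, 1, 0, 0, 1, 1, 1, 1, 1]
E_g0 = [0, 1, 1, 0, 0, 0, 0, 1, 0, 0]

count_grade_a = root_count(E_g1, E_g0)   # SELECT COUNT(*) FROM E WHERE g = A
count_high_bit = root_count(E_g1)        # rows whose high grade bit is set
```

No tuples are materialized: the count falls out of the AND itself.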

Combining Data Mining and Query Processing

• Many data mining requests involve pre-selection, pre-join, and pre-projection on a database to isolate the specific data subset to which the data mining algorithm is to be applied. For example, in the above database, one might be interested in all Association Rules of a given support threshold and confidence threshold, but only on the result relation of the complex SPJ query shown. The brute force way to do this is to first join all relations into one universal relation and then to mine that gigantic relation. This is not a feasible solution in most cases due to the size of the resulting universal relation. Furthermore, often some selection on that universal relation is desirable prior to the mining step.

• Our approach accommodates combinations of querying and data mining without necessitating the creation of a massive universal relation as an intermediate step. Essentially, the full vertical partitioning and P-trees provide a selection and join path which can be combined with the data mining algorithm to produce the desired solution without extensive processing and massive space requirements. The collection of P-trees and BSQ files constitutes a lossless, compressed version of the universal relation. Therefore the above techniques, when combined with the required data mining algorithm, can produce the combination result very efficiently and directly.

Horizontal Indexed Nested Loop Join???

SELECT *
FROM S,E
WHERE S.s=E.s

S|s____|n|gen|
 |0 000|A|M|
 |1 001|T|M|
 |2 010|S|F|
 |3 011|B|F|
 |4 100|C|M|
 |5 101|J|F|

C|c___|n|cred|
 |0 00|B|1 01|
 |1 01|D|3 11|
 |2 10|M|3 11|
 |3 11|S|2 10|

E|s____|o____|grade|
 |0 000|1 001|2 10|
 |0 000|0 000|3 11|
 |3 011|1 001|3 11|
 |3 011|3 011|0 00|
 |1 001|3 011|0 00|
 |1 001|0 000|2 10|
 |2 010|2 010|2 10|
 |2 010|7 111|3 11|
 |4 100|4 100|2 10|
 |5 101|5 101|2 10|

O|o____|c___|r___|
 |0 000|0 00|1 01|
 |1 001|0 00|1 01|
 |2 010|1 01|0 00|
 |3 011|1 01|1 01|
 |4 100|2 10|0 00|
 |5 101|2 10|2 10|
 |6 110|2 10|3 11|
 |7 111|3 11|2 10|

R|r___|cap__|
 |0 00|30 11|
 |1 01|20 10|
 |2 10|20 10|
 |3 11|10 01|

1st: if 0<rc(S.s2) then if rc(S.s2)<|S| then if 0<rc(S.s1) then if rc(S.s1)<|S| then if 0<rc(S.s0) then if rc(S.s0)<|S| then …

So this is a depth-first traversal down the bit-slice tree for S.s, skipping all values that are not present; for each S.s value that is present, one AND gives that value's P-tree in E (an index into E), so optimal retrieval can be done.
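The traversal just described can be sketched as a recursion over bit positions that descends only into branches whose count is nonzero. This is an assumption-laden sketch: bit vectors are uncompressed Python lists, the slices are derived from the decimal S.s and E.s values in the tables, and the function names are hypothetical.

```python
def bit_slices(values, width):
    """Vertical bit slices of an integer column, most significant bit first."""
    return [[(v >> b) & 1 for v in values] for b in range(width - 1, -1, -1)]


def present_values(slices, mask, prefix=()):
    """Depth-first walk over bit positions, yielding (bit pattern, row mask)
    only for values that actually occur among the rows selected by `mask`."""
    if not slices:
        yield prefix, mask
        return
    head, rest = slices[0], slices[1:]
    ones = [m & b for m, b in zip(mask, head)]
    zeros = [m & (1 - b) for m, b in zip(mask, head)]
    if sum(zeros) > 0:    # some remaining row has a 0 in this position
        yield from present_values(rest, zeros, prefix + (0,))
    if sum(ones) > 0:     # some remaining row has a 1 in this position
        yield from present_values(rest, ones, prefix + (1,))


def value_mask(slices, pattern):
    """One multiway AND: rows whose bits match `pattern` exactly."""
    mask = [1] * len(slices[0])
    for sl, want in zip(slices, pattern):
        mask = [m & (b if want else 1 - b) for m, b in zip(mask, sl)]
    return mask


S_s = bit_slices([0, 1, 2, 3, 4, 5], 3)               # S.s column
E_s = bit_slices([0, 0, 3, 3, 1, 1, 2, 2, 4, 5], 3)   # E.s column

# For every S.s value that is present, one AND yields its row mask in E.
join_index = {
    pattern: value_mask(E_s, pattern)
    for pattern, _ in present_values(S_s, [1] * 6)
}
```

The patterns 110 and 111 never occur in S.s, so the recursion never reaches them and no AND is spent on them.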

If the P-trees are organized according to physical boundaries as below, is there a P-tree based Hybrid Hash join that allows us to avoid excessive rereads of extents?

It seems clear that compressing bit vectors into P-trees based not on 1/2^d boundaries but on page and extent boundaries is important.

Use the Dr. Md Masum Serazi approach, but with the following levels (possibly collapsing levels 0 and 1 together):

The level-0 fanout is the bfr (blocking factor) of the page blocks.

The level-1 fanout is the extent size (# of blocks per extent).

The level-2 fanout is the (maximum) number of extents per file.

The level-3 fanout is the number of files in the DB.

The real advantage of this approach may be to apply it to join algorithms where the location of join attribute values is known (see V. Goli’s thesis), since we know the location of all values through ANDs.

Graph G=(N,E) is (T,I)-bipartite iff N = T ∪ I (disjoint union) and ∀ e={e1,e2} ∈ E, if e1 ∈ T [I] then e2 ∈ I [T]. WLOG write e={e_T,e_I} (if E is directed from T to I, e=(e_T,e_I)).

E = { {e_k,T, e_k,I} | k=1..|E| }, or the edge relationship can be expressed as

tIset, E_T = { (t, Iset(t)) | t ∈ T and Iset(t) = {i | {t,i} ∈ E} }

iTset, E_I = { (i, Tset(i)) | i ∈ I and Tset(i) = {t | {t,i} ∈ E} }

tImap, E_Tb = { (t, b_1,...,b_|I|) | where b_k=1 iff e_k,T = t }

iTmap, E_Ib = { (i, b_1,...,b_|T|) | where b_k=1 iff e_k,I = i }

Given a star schema with fact E and dimensions S and C, E is an ER-relationship between entities S and C and is therefore a bipartite graph, G=(N,E), where N is the disjoint union of S and C.

Given a join of S.s with E.s, the JoinIndex (JI) is a relationship between S and E, giving a bipartite graph, G=(S ∪ E, JI). The sEmap of this relationship is the association matrix of Qiang Ding's thesis.
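The four edge representations can be illustrated on a tiny bipartite graph. This sketch reads the bitmap forms as one bit per node on the opposite side (bit k is 1 iff the k-th node there is adjacent), which is an interpretation rather than something the slide states outright; the node names and the edge set are made up for the example.

```python
# Hypothetical bipartite graph: T-nodes are students, I-nodes are courses.
T = ["s1", "s2", "s3"]
I = ["c1", "c2"]
E = {("s1", "c1"), ("s1", "c2"), ("s3", "c2")}   # edges written as (t, i)

# tIset: each t paired with the set of i it is adjacent to.
tIset = {t: {i for (tt, i) in E if tt == t} for t in T}

# iTset: each i paired with the set of t it is adjacent to.
iTset = {i: {t for (t, ii) in E if ii == i} for i in I}

# tImap: each t paired with a bitmap over I.
tImap = {t: [1 if (t, i) in E else 0 for i in I] for t in T}

# iTmap: each i paired with a bitmap over T.
iTmap = {i: [1 if (t, i) in E else 0 for t in T] for i in I}
```

Stacking the tImap rows gives a |T| by |I| 0/1 matrix, and the iTmap rows give its transpose.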

C.c1: 0 0 1 1
C.c0: 0 1 0 1
C.n:  1 0 0 1

S.a:  1 1 0 0 1 0 0 0
S.s2: 0 0 0 0 1 1 1 1
S.s1: 0 0 1 1 0 0 1 1
S.s0: 0 1 0 1 0 1 0 1

E.s2: 1 0 0 0 0 0 0 0 1 1 0 0 0 0 1 1 0 0 0 0 0 0 1
E.s1: 0 0 1 1 0 0 1 1 0 0 0 0 0 0 0 0 1 1 1 0 0 0 0
E.s0: 0 0 0 1 1 0 1 1 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0
E.g:  0 0 0 1 1 0 1 1 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0
E.c1: 0 0 0 1 0 0 0 1 0 0 0 0 0 0 0 0 0 1 1 0 0 1 0
E.c0: 1 0 0 0 1 0 1 1 0 1 0 0 0 0 0 0 0 1 0 1 1 0 0

QUERY PROCESSING in Distributed DBMSs (DDBMSs)

Desirable Features of a Distributed DBMS:

LOCATION TRANSPARENCY is achieved if a user can access needed data without having to know which site has that data.
- simplifies logic of programs
- allows data movement as usage patterns change

A data object (typically a file) is FRAGMENTED if it is divided into multiple pieces for storage and/or placement purposes at different sites (e.g., accounts files: Fargo customer accounts can be stored in Fargo, Grand Forks customer accounts can be stored in Grand Forks, ...).

FRAGMENTATION TRANSPARENCY is achieved if users can access needed data without having to know whether it is fragmented.

A data object (typically a record or file) is REPLICATED if it has more than 1 physical copy.
- advantages of distributed replication include availability
- disadvantages include increased update overhead

REPLICATION TRANSPARENCY is achieved if users can access needed data without knowing whether or not it is replicated.

Additional desirable DDBMS features include:

LOCAL AUTONOMY is achieved if the system is distributed consistent with the logical and physical distribution of the enterprise. It allows local control over local data, local accountability, and less dependency on remote data processing.

Support for INCREMENTAL GROWTH, AVAILABILITY, and RELIABILITY.

Distributed systems can more easily allow for graceful (and unlimited) growth simply by adding additional sites. The DDBMS software should allow for adding sites easily. Reliability can be provided by replicating data. The DDBMS should allow for replication to enhance reliability and availability in the presence of failures of sites or links.

DISTRIBUTED QUERIES: Query optimization methods can be

STATIC: the strategy of transmissions and local processing activities is fully determined before execution begins (at compile time).

DYNAMIC: each step is decided after seeing the results of previous steps.

Response time usually is dominated by transmission costs (i.e., local processing times are negligible by comparison; assumed 0?).

One model is to take RESPONSE time to be linear in the number of bytes, X, sent: R(X) = AX + B

B is the fixed (setup?) cost of the transmission and

AX is the variable cost (depending on message size only, not distance).

What assumptions does this make? (next slide)

Bandwidth = the number of bits per second that can be sent.

Delay = the time to send a message from point A to point B.

Components of delay = Propagation + Transmit + Queue (= delays in send and demultiplexing queues)

Transmit = Size / Bandwidth: the time between when the 1st bit enters and the last bit enters the link.

Propagation = Distance / SpeedOfLight: the time between when the last bit enters and the last bit leaves the link.

Propagation versus Transmit delay

• If you’re sending 1 byte, propagation delay dominates.

• If you’re sending 500 MB, transmit delay dominates.
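The two bullets can be checked with a small calculation. The link parameters below (a 1000 km link at 100 Mbit/s, signal speed taken as 3.0e8 m/s, queueing ignored) are assumptions chosen only for illustration, and the function names are hypothetical.

```python
SPEED_OF_LIGHT_MPS = 3.0e8   # assumed signal speed in metres per second


def transmit_delay(size_bytes, bandwidth_bps):
    """Transmit = Size / Bandwidth, with the size converted to bits."""
    return size_bytes * 8 / bandwidth_bps


def propagation_delay(distance_m, speed_mps=SPEED_OF_LIGHT_MPS):
    """Propagation = Distance / SpeedOfLight."""
    return distance_m / speed_mps


# Assumed link: 1000 km long, 100 Mbit/s.
distance_m = 1_000_000
bandwidth_bps = 100_000_000

prop = propagation_delay(distance_m)                # same for any message size
small = transmit_delay(1, bandwidth_bps)            # 1 byte
large = transmit_delay(500_000_000, bandwidth_bps)  # 500 MB
```

Under these assumptions the propagation delay is a few milliseconds, far above the 1-byte transmit delay and far below the 500 MB transmit delay, which is also why a purely size-based model R(X) = AX + B fits large transfers better than small ones.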


A STATIC QUERY PROCESSING ALGORITHM usually
- takes as input: database statistics such as relation sizes, attribute sizes, and projected sizes of attributes;
- produces as output: a strategy for answering the query (a pattern of what transmissions to make, when and where, and what local processing to do, when and where).

It usually involves 4 phases:

LOCAL PROCESSING phase: do all processing that can be done initially at each site without data interchange between sites (e.g., local selections, joins and projections). The result of this phase is that there will be one participating relation at each participating site.

REDUCTION phase: selected "semijoins" are done to reduce the size of participating relations by eliminating tuples that are not needed in answering the query.

TRANSPORT phase: send one relation from each participating site (the result of the reduction phase) to the querying site.

COMPLETION phase: finish up processing using those relations to get the final answer (e.g., final projections, selections, joins).

See http://www.cs.ndsu.nodak.edu/~perrizo/classes/765/09query.html for more details, if you desire them.

What is the SEMIJOIN of R1(A,B) to R2(A,C) on A? (written: R1:A→ R2)

1. Project R1 onto A (the result is written R1[A]).

2. R1[A]:A→ R2 (which selects those tuples of R2 that will participate in the join).

The result of R1[A]:A→ R2 is the sub-relation of R2 consisting of only those R2-tuples which will participate in the full join of R1 and R2 on A (it eliminates non-participants at the cost of generating, and sending if R2 is located at a different site than R1, the R1 join-attribute values).

A semijoin can also be viewed as a special SELECTION operator, since it selects those tuples of R2 that have a matching A-value in R1.

Thus the semijoin is well suited to reducing the size of relations before they are sent to the querying site.

But note that semijoins don't always end up reducing the size of a relation.

See http://www.cs.ndsu.nodak.edu/~perrizo/classes/765/09query.html if more details are desired.

STATIC QUERY PROCESSING Example in DDBMSs

For example, ENROLL S#→ STUDENT:

STUDENT-FILE
S#|SNAME |LCODE
25|CLAY  |NJ5101
32|THAISZ|NJ5102
38|GOOD  |FL6321
17|BAID  |NY2091
57|BROWN |NY2092

ENROLL FILE
S#|C#|GRADE
32|8 | 89
32|7 | 91
32|6 | 62
38|6 | 98

1. Project ENROLL onto the S# attribute, resulting in:

S#
|32|
|38|

2. Join the two relations (the projection and STUDENT-FILE) on S#, resulting in:

S#|SNAME |LCODE
32|THAISZ|NJ5102
38|GOOD  |FL6321

Semijoins don't always end up reducing the size of a relation. Consider STUDENT S#→ ENROLL.

Project STUDENT onto the S# attribute and join it with ENROLL:

S#
25
32
38
17
57

join

S#|C#|GRADE
32|8 | 89
32|7 | 91
32|6 | 62
38|6 | 98

resulting in the entirety of ENROLL again (no tuples eliminated)!

So let's make it a rule:

- never semijoin the primary key to a foreign key, because it will always result in no reduction.
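Both semijoin examples can be reproduced with a short sketch. Relations are modelled as lists of dictionaries, and the helpers `project` and `semijoin` are hypothetical names written for this illustration.

```python
def project(relation, attr):
    """R[attr]: the set of attr values present in the relation."""
    return {row[attr] for row in relation}


def semijoin(r1, r2, attr):
    """R1 :attr-> R2, i.e. keep the R2 tuples whose attr value occurs in R1[attr]."""
    keys = project(r1, attr)
    return [row for row in r2 if row[attr] in keys]


STUDENT = [
    {"S#": 25, "SNAME": "CLAY", "LCODE": "NJ5101"},
    {"S#": 32, "SNAME": "THAISZ", "LCODE": "NJ5102"},
    {"S#": 38, "SNAME": "GOOD", "LCODE": "FL6321"},
    {"S#": 17, "SNAME": "BAID", "LCODE": "NY2091"},
    {"S#": 57, "SNAME": "BROWN", "LCODE": "NY2092"},
]
ENROLL = [
    {"S#": 32, "C#": 8, "GRADE": 89},
    {"S#": 32, "C#": 7, "GRADE": 91},
    {"S#": 32, "C#": 6, "GRADE": 62},
    {"S#": 38, "C#": 6, "GRADE": 98},
]

reduced_student = semijoin(ENROLL, STUDENT, "S#")   # foreign key to primary key
reduced_enroll = semijoin(STUDENT, ENROLL, "S#")    # primary key to foreign key
```

The first semijoin keeps 2 of the 5 STUDENT tuples, while the second keeps every ENROLL tuple, since each foreign-key value in ENROLL appears among the primary keys of STUDENT.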

Distributed Semijoin of R1 at site1 to R2 at site2 along A

1. Projection R1[A].

2. Transmission of R1[A] to site2.

3. R1[A] A-join R2 (select the R2-tuples that participate in the join).

Consider the following distributed query:

Assume SELECT R1.A2, R2.A2 FROM R1, R2 WHERE R1.A1 = R2.A1 arrives at site3.

At site1: R1

A1 A2 A3 A4 A5 A6 A7 A8 A9
a  A  A  B  C  C  E  A  F
a  C  D  D  E  A  A  B  B
b  A  B  C  D  B  A  B  A
c  D  D  B  B  A  C  A  C
e  E  B  A  A  C  C  D  D

At site2: R2

A1 A2
d  1
e  2
g  3

Assume the response time for transmission of X bytes between any 2 sites is R(X) = X + 10 time units.

STRATEGY 1 (no reduction phase)

1. Send R1 to site3: 45 bytes sent. Cost is R(45) = 45+10 = 55

2. Send R2 to site3: 6 bytes sent. Cost is R(6) = 6+10 = 16

3. Final join at site3 (cost = 0). Result: eEBAACCDD2

Response time = 71

Strategy 1': If 1. and 2. are done in parallel, the response time = 55

STRATEGY 2

1. Send R2[A] to site1; do R2[A]:A→ R1. 3 bytes sent. Cost = R(3) = 13

2. Send R2[A]:A→ R1 to site3. 9 bytes sent. Cost = R(9) = 19

3. Send R2 to site3. 6 bytes sent. Cost = R(6) = 16

4. JOIN R2[A]:A→ R1 and R2 on A1 at site3. Cost = 0

Result: eEBAACCDD2

Response time = 48

Strategy 2': If 1. and 3. are (can be?) done in parallel, the response time = 32

STRATEGY 3

1. Send R1[A] to site2; do R1[A]:A→ R2. 45 bytes sent. Cost = R(45) = 55

2. Send R1[A]:A→ R2 to site3. 2 bytes sent. Cost = R(2) = 12

3. Send R1 to site3. 45 bytes sent. Cost = R(45) = 55

4. JOIN R1[A]:A→ R2 and R1 on A1 at site3. Cost = 0

Result: eEBAACCDD2

Response time = 122

Strategy 3': If 1. and 3. are (can be?) done in parallel, the response time = 67

The Distributed Query Processor (DQP) must pick the strategy!

For static algorithms, the hardest job of the Distributed Query Processor (which is at site3, where the query came in and must be processed) is to pick among these 6 alternatives (if other transmission and local processing costs are used, there would be a vastly different set of alternative strategies).

The DQP at site3 must pick a strategy without seeing the data at sites 1 and 2. E.g., if the DQP decides that a "one semijoin" strategy is best, should it be 2 or 3 (or 2' versus 3' if the network accommodates parallel transmissions from a given send site)? Note the vast difference in cost: 2 costs 48 and 3 costs 122, though both are one-semijoin strategies! Strategy 3 even costs more than the no-semijoin strategy, 1, which costs 71 (55 with parallel transmissions).

The DQP needs estimates of the two semijoin result sizes, since the actual results are not known in advance at site3. That estimation method is important, but difficult, since the situation can be very different from the above.
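The response-time arithmetic for Strategies 1 and 2 can be sketched as follows, using the model R(X) = X + 10 and the byte counts quoted on the slides. The variable names are hypothetical; parallel variants are modelled by taking the maximum over independent transmission chains.

```python
def R(x, a=1, b=10):
    """Response time for sending x bytes: R(X) = A*X + B, here with A=1 and B=10."""
    return a * x + b


# Strategy 1: ship R1 (45 bytes) and R2 (6 bytes) to site3, no reduction.
s1_sequential = R(45) + R(6)
s1_parallel = max(R(45), R(6))

# Strategy 2: ship R2[A] (3 bytes) to site1, the reduced R1 (9 bytes) to site3,
# and R2 (6 bytes) to site3.
s2_sequential = R(3) + R(9) + R(6)
s2_parallel = max(R(3) + R(9), R(6))   # steps 1-2 form a chain; step 3 is independent

# Strategy 2 on the second database state: 6, 45 and 12 bytes sent.
s2_second_state = R(6) + R(45) + R(12)
```

The same strategy goes from the cheapest option to a costly one purely because the semijoin stopped eliminating tuples, which is why the size estimate matters so much.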

STRATEGY 2 with different R1 and R2 data

At site1: R1

A1 A2 A3 A4 A5 A6 A7 A8 A9
d  A  A  B  C  C  E  A  F
d  C  D  D  E  A  A  B  B
e  A  B  C  D  B  A  B  A
g  D  D  B  B  A  C  A  C
e  E  B  A  A  C  C  D  D

At site2: R2

A1 A2
d  1
e  2
g  3
q  4
q  5
v  7

1. Send R2[A] to site1; do R2[A]:A→ R1. 6 bytes sent. Cost = R(6) = 16

2. Send R2[A]:A→ R1 to site3. 45 bytes sent. Cost = R(45) = 55

3. Send R2 to site3. 12 bytes sent. Cost = R(12) = 22

4. JOIN R2[A]:A→ R1 and R2 on A1 at site3. Cost = 0

Result at site3:

dEBAACCDD1
dCDDEAABB1
eABCDBABA2
gDDBBACAC3
eEBAACCDD2

Response time = 93

Strategy 1 has the same cost (71), so Strategy 1 is better!

How should semijoin results be estimated?

Selectivity Theory for estimating semijoin results.

The work of Hevner and Yao assumes data values are uniformly distributed and attribute distributions are independent of each other.

Results are estimated as follows (assuming A1 has domain {a,b,...,z}).

The selectivity of an attribute is the ratio of the number of values present (size of the extant domain) over the number of values possible (size of the full domain).

Therefore the selectivity of R2.A1 is 3/26. Using selectivity theory, we estimate the size of the semijoin of R2 to R1 on A1 as:

(original size of R1) * (selectivity of incoming R2.A1): 45 * 3/26 = 5.2

Selectivity theory estimates that 5.2 bytes of R1 survive the semijoin.

This is close for the first example database state, and the algorithm proposed by Hevner & Yao (ALGORITHM-GENERAL) would correctly select method 1.

However, it is way off in the second database state, but ALGORITHM-GENERAL would still select strategy-1 (not best for this DB state).
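The estimate can be written out as a short calculation. The sketch assumes a 26-letter domain for A1 and takes the relation size and the incoming values from the example data (R1 is 45 bytes; the values d, e, g arrive from R2); the function name is hypothetical.

```python
def selectivity(extant_values, domain_size):
    """Number of distinct values present divided by the number of possible values."""
    return len(set(extant_values)) / domain_size


DOMAIN_SIZE = 26                  # A1 ranges over {a, ..., z}
r1_size_bytes = 45                # 5 tuples of 9 one-byte attributes
incoming = ["d", "e", "g"]        # join-attribute values arriving from R2

estimated_survivors = r1_size_bytes * selectivity(incoming, DOMAIN_SIZE)

# Actual sizes of the reduced R1 in the two database states shown earlier.
actual_first_state = 9            # one tuple (A1 = e) survives
actual_second_state = 45          # every tuple survives
```

The estimate of about 5.2 bytes is near the 9 bytes that survive in the first state and far from the 45 bytes that survive in the second, which illustrates how badly the uniformity assumption can miss.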

UPDATE PROPAGATION IN DISTRIBUTED DATABASES

UPDATE PROPAGATION: To update any replicated data item, the DDBMS must propagate the new value consistently to all copies.

IMMEDIATE method: update all copies (the update fails if even 1 copy is unavailable).

PRIMARY method: designate 1 copy as primary for each item.

The update is deemed complete (COMMITTED) when the primary copy is updated.

The primary copy site is responsible for broadcasting the update to the other sites.

The broadcast can be done in parallel while the transaction is continuing; however, that runs counter to the local autonomy theme.

Thank

you.