Recognizing Soldier Activities in the Field

advertisement

Recognizing Soldier Activities in the Field

David Minnen1 , Tracy Westeyn1 , Daniel Ashbrook1 , Peter Presti2 , and Thad Starner1

1

Georgia Institute of Technology, College of Computing, GVU, Atlanta, Georgia, USA. {dminn, turtle, anjiro, thad}@cc.gatech.edu

2

Georgia Institute of Technology, Interactive Media Technology Center, Atlanta, Georgia, USA. peter.presti@imtc.gatech.edu

Abstract— We describe the activity recognition component

of the Soldier Assist System (SAS), which was built to meet

the goals of DARPA’s Advanced Soldier Sensor Information

System and Technology (ASSIST) program. As a whole, SAS

provides an integrated solution that includes on-body data

capture, automatic recognition of soldier activity, and a multimedia interface that combines data search and exploration.

The recognition component analyzes readings from six on-body

accelerometers to identify activity. The activities are modeled

by boosted 1D classifiers, which allows efficient selection of

the most useful features within the learning algorithm. We

present empirical results based on data collected at Georgia

Tech and at the Army’s Aberdeen Proving Grounds during

official testing by a DARPA appointed NIST evaluation team.

Our approach achieves 78.7% for continuous event recognition

and 70.3% frame level accuracy. The accuracy increases to

90.3% and 90.3% respectively when considering only the

modeled activities. In addition to standard error metrics, we

discuss error division diagrams (EDDs) for several Aberdeen

data sequences to provide a rich visual representation of the

performance of our system.

Keywords— activity recognition, machine learning, distributed sensors

I. I NTRODUCTION

The Soldier Assist System (SAS) was created for

DARPA’s Advanced Soldier Sensor Information System

and Technology (ASSIST) program. The stated goal of

the ASSIST program is to “enhance battlefield awareness

via exploitation of information collected by soldier-worn

sensors.” The task was to develop an “integrated system

and advanced technologies for processing, digitizing and

reporting key observational and experiential data captured

by warfighters” [1]. SAS allows soldiers to utilize on-body

sensors with an intelligent desktop interface to augment their

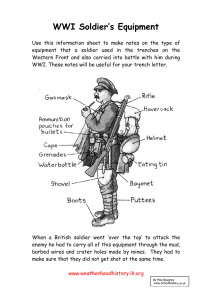

post-patrol recall and reporting capability (see Figure 1).

These post-patrol reports, known as after action reports

(AARs) are essential for communicating information to

superiors and future patrols. For example, a soldier may

return from a five hour patrol and his superiors may ask for

a report on all suspicious situations encountered on patrol.

In generating his report, the soldier can use the SAS desktop

interface to view all of the images taken around the time of

Fig. 1.

Screenshot of the SAS desktop interface

a particular activity such as taking a knee or raising his field

weapon. These situations are likely to contain faces, places,

or other events of interest to the soldier. These images can

be used to jog the soldier’s memory, provide details that

may have gone unnoticed during patrol, and provide relevant

data to be directly included in the soldier’s AAR for further

analysis by superiors.

The automatic recognition of salient activities may be a

key part of reducing the amount of time spent manually

searching through data. Working with NIST, DARPA, and

subject matter experts, we identified 14 activities important for generating AARs: running, crawling, lying on the

ground, a weapon up state, kneeling, walking, driving,

sitting, walking up stairs, walking down stairs, situation

assessment, shaking hands, opening a door, and standing

still. During interviews, soldiers identified the first five

activities as the most important.

Given these specific requirements, our sensing hardware

was tailored to these activities and for the collection of rich

multimedia data. The on-body sensors include six threeaxis accelerometers, two microphones, a high resolution still

camera, a video camera, a GPS, an altimeter, and a digital

compass (see Figure 2). The soldier activities are recognized

by analyzing the accelerometer readings, while the other

sensors provide content for the desktop interface.

Given the number of sensors and the magnitude of the

data, we used boosting to efficiently select features and learn

accurate classifiers. Our empirical results show that many

of the automatically selected features are intuitive and help

justify the use of distributed sensors. For example, driving

can be detected by identifying engine vibration detected by

the hip sensor while in a moving vehicle.

In Section V, we present cross-validated accuracy measurements for several system variations. Standard accuracy

metrics do not always account for the impact that different

error types have on continuous activity recognition applications, however. For example, a trade-off could exist between

identifying all instances of a raised weapon (potentially with

imprecise boundaries) and obtaining precise boundaries for

each identified event but failing to find all of the instances.

To better characterize the performance of our approach,

we discuss error division diagrams (EDDs), a recently

introduced performance visualization that allows quick, visual comparisons between different continuous recognition

systems [2]. The SAS system favors higher recall rates rather

than higher precision rates because the activity recognition

is used to highlight salient information for a soldier. Failing

to identify events can cause important information to be

overlooked, while a soldier can easily cope with potential

boundary errors by scanning the surrounding media.

II. R ELATED W ORK

Many applications in ubiquitous and wearable computing

support the collection and automatic identification of daily

activities using on-body sensing [3], [4], [5]. Westeyn and

Vadas et al. proposed the use of three on-body accelerometers to support the identification and recording of autistic

self-stimulatory behaviors for later evaluation by autism

specialists [6]. In 2004, Bao and Intille showed that the use

of two accelerometers positioned at the waist and upper arm

were sufficient to recognize 20 distinct activities using the

C4.5 decision tree learning algorithm with overall accuracy

rates of 84% [7]. Lester et al. reduced the number of

sensor locations to one position by incorporating multiple

sensor modalities into a single device [8]. Using a hybrid

of both discriminative and generative machine learning

methods (modified AdaBoost and HMMs) they recognized

10 activities with an overall accuracy of 95%. Finally,

Raj et al. simultaneously estimated location and activity

using a dynamic Bayesian network to model sensor activity

and location dependencies while relying on a particle filter

for efficient inference [9]. Their approach performs similarly

to that of Lester et al. and elegantly combines activity

recognition and location estimation.

III. S OLDIER A SSIST S YSTEM O N -B ODY S ENSORS

The SAS on-body system is designed to be unobtrusive

and allow for all-day collection of rich data. The low power,

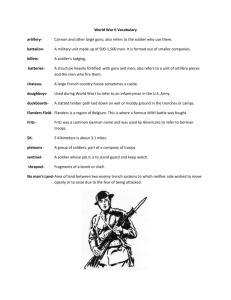

Fig. 2. Left: Soldier wearing the SAS sensor rig at the Aberdeen Proving

Grounds. Right: Open Camelbak showing OQO computer and connectors.

lightweight system consists of sensors spanning several

modalities along with a wireless on-body data collection

device. Importantly, the sensors are distributed at key locations on the body, and all but two are affixed to standard

issue equipment already worn by a soldier.

The major sensing components used for soldier activity

recognition are the six three-axis bluetooth accelerometers

positioned on the right thigh sidearm holster, in the left

vest chest pocket, on the barrel of the field weapon, on a

belt over the right hip, and on each wrist via velcro straps.

Each accelerometer component consists of two perpendicularly mounted dual-axis Analog Devices ADXL202JE

accelerometers capable of sensing ±2G static and dynamic

acceleration. Each accelerometer is sampled at 60Hz by a

PIC microcontroller (PIC 16F876). The Bluetooth radio is a

Taiyo Yuden serial port replacement module that handles the

transport and protocol details of the Bluetooth connection.

Each sensor is powered by a single 3.6V 700mA lithium

ion battery providing 15-20 hours of run-time.

Data from the wireless accelerometers (and other streaming sensors) are collected by an OQO Model 01+ palmtop

computer. The OQO and supporting equipment is carried

inside the soldier’s Camelbak water backpack. The OQO

sits against the Camelbak water reservoir to both cushion the

OQO against sudden shock and to to help avoid overheating.

Two 40 watt-hour camcorder batteries provide additional

power to the OQO and supply main power to other components in the system. These batteries provide the system with

a run time of over 4 hours.

Our system also contains off-the-shelf sensing components for location, video, and audio data. Affixed to the

shoulder of the vest is a Garmin GPSMAP 60CSx. The unit

also houses a barometric altimeter and a digital compass.

Two off-the-shelf cameras are mounted on the soldier’s

helmet. The first is a five megapixel Canon S500 attached

to the front of the helmet on an adjustable mount. A power

cable and USB cable run from the helmet to the soldier’s

backpack. The OQO automatically triggers the camera’s

shutter approximately every 5 seconds and stores the images

on its internal hard drive. A Fisher FVD-C1 mounted on the

side of the helmet. This camera records MPEG-4 video at

640x480 to an internal SD memory card.

Our system has two microphones for recording the soldier’s speech and ambient audio in the environment. One

microphone is attached to the helmet and positioned in front

of the soldier’s mouth by a boom. The second microphone

is attached to the arm strap of the Camelbak near the chest

of the soldier. Both microphones have foam wind shields

and are powered by a USB hub attached to the OQO.

The various sensors are not hardware synchronized. To

account for asynchronous sensor readings, we ran experiments to estimate the latency associated with each sensor.

The collection and recording software running on the OQO

is responsible for storing a timestamp with each sensor reading and applying the appropriate temporal offset to account

for the hardware latency. This provides rough synchronization across the different sensors and frees later processes,

such as the data query interface and the recognition system,

from needing to adjust the timestamps individually.

IV. L EARNING ACTIVITY M ODELS

Two fundamental issues must be addressed in order to

automatically distinguish between the 14 soldier activities

that our system must identify. The first is how to incorporate

sensor readings through time, and the second concerns

choosing a sufficiently rich hypothesis space that allows

accurate discrimination and efficient learning. Although

several models have been proposed that explicitly deal with

temporal data (e.g., hidden Markov models (HMMs) and

switching linear dynamic systems (SLDSs)), these models

can be very complex and typically expend a great deal of

representational power modeling aspects of a signal that

are not important to our system. Instead, we have adopted

an approach that classifies activities based on aggregate

features computed over a short temporal window similar to

the approach of Lester et al. [8].

differential descriptors for each dimension. We also compute

aggregate features based on each three-axis accelerometer.

Originally our feature list was augmented with GPS derivatives, heading, and barometer readings. However, preliminary experiments showed that this did not significantly

improve performance and these features were not included

in the experiments discussed in this paper. This finding is in

line with that of Raj et al. who also determined that GPSderived features did not aid activity recognition in a similar

task using a different sensor package [9].

Effectively, we have transformed a difficult temporal

pattern recognition problem into a simpler spatial classification task by computing aggregate features. One potential

difficulty is determining which features are important for

distinguishing each activity. Rather than explicitly choosing

relevant features as a preprocessing step (either manually or

through an automatic feature selection algorithm), we build

models using an AdaBoost framework [10] by selecting the

dimension and corresponding 1D classifier that minimize

classification error at each iteration. This framework automatically selects the best features for discrimination and is

robust to unimportant or otherwise distracting features.

B. Boosted 1D Classifiers

AdaBoost is an iterative framework for combining binary

classifiers such that a more accurate ensemble classifier

results [10]. We use a variant of the original formulation

that includes feature selection and support for unequal class

sizes [11]. The ensemble classifier is based on the sign of

a value derived from a weighted combination of the weak

classifiers called the margin. The magnitude of the margin

gives an indication of the confidence of the classifier in

the result. Margins may not be comparable across different

classifiers however, but we can use a method developed by

Platt to convert the margin into a pseudo-probability [12].

This method works by learning the parameters of a sigmoid

function (f (x) = 1/(1+eA(x+B) )) that directly maps a margin to a probability. We fit the sigmoid to margin/probability

pairs derived from the training points and only s ave the

sigmoid parameters (A and B) for use during inference.

A. Data Preprocessing

Several steps are needed to prepare the raw accelerometer

readings for analysis. First, we resample each sensor stream

at 60Hz to estimate the instantaneous reading of every

sensor at the same fixed intervals. Next, we slide a three

second window along the 18D synchronized time series

in one second steps. For each window, we compute 378

features based on the 18 sensor readings including mean,

variance, RMS, energy in various frequency bands, and

C. 1D Classifiers

During each round of boosting, the algorithm selects

the feature and 1D classifier that minimizes the weighted

training error. Typically, decision stumps are used as the

1D classifier due to their simplicity and efficient, globally

optimal learning algorithm. Decision stumps divide the

feature range into two regions, one labeled as the positive

class and the other as the negative class. In our experiments,

we supplement decision stumps with a Gaussian classifier

that models each class with a Gaussian distribution and

allows for one, two, or three decision regions depending

on the model parameters.

Learning the Gaussian classifier is straightforward. The

parameters for the two Gaussians ((µ1 , σ1 ) and (µ2 , σ2 )) can

be estimated directly from the weighted data. The decision

boundaries are then found by equating the two Gaussian

formulas and solving the resulting quadratic equation for

x: (σ12 − σ22 )x2 − 2(σ12 µ2 − σ22 µ1 )x + [(σ12 µ22 − σ22 µ21 ) −

2σ12 σ22 log( σσ21 )] = 0.

D. Multiclass Classification

The boosting framework can be used to learn accurate

binary classifiers but direct generalization to the multiclass

case is difficult. Two common methods for combining multiple binary classifiers into a single multiclass classifier are

the one-vs-all (OVA) and the one-vs-one (OVO) approaches.

In the one-vs-all case, for C classes, C different binary

classifiers are learned. For each classifier, one of the classes,

call it ωi , is taken as the positive class while the (C − 1)

others are combined to form the negative class. When a new

feature vector must be classified, each of the C classifiers is

applied and the one with the largest margin (corresponding

to the most confident positive classifier) is taken as the final

classification. Alternatively, we can base the decision on the

pseudo-probability of each class computed from the learned

sigmoid functions.

In the one-vs-one approach, C(C − 1)/2 classifiers are

learned, one for each pair of (distinct) classes. To classify a

new feature vector, the margin is calculated for each class

pair (mij is the margin for the classifier trained for ωi vs.

ωj ). Within this framework many methods may be used to

combine the individual classification results:

• vote[ms,ps,pp] - each classifier votes for the class with

the largest margin; ties are broken by using msum,

psum, or pprod.

• msum - each class is scored as the sum of the individual

class margins; the final !

classification is based on the

C

highest score: argmax

j=1 mij (x)

computing features over three second windows spaced at one

second intervals. This means that typically there are three

different windows that overlap for each second of data. To

produce a single classification for each one second interval,

we tested three methods:

• vote - each window votes for a single class and the

class with the most votes is selected

• prob - each window submits a probability for

each class,

"3and the most likely class is selected:

argmax j=1 p(ωi |windowj )

i

•

probsum - each window submits a probability for each

class, and the class !

with the highest probability sum is

3

selected: argmax

j=1 p(ωi |windowj )

i

V. E XPERIMENTAL R ESULTS

To evaluate our approach to recognizing soldier activity,

we collected data in three situations. In each case, the subject

wore the full SAS rig and completed an obstacle course

designed to exercise all of the activities. We collected 14

sequences totaling roughly 3.3 hours of activity data and

then manually labeled each sequence based on the video

and audio captured automatically by the system. The first

round of data collection took place in a parking deck near

our lab. We created an obstacle course based on feedback

from the NIST evaluation team in order to collect an initial

data set for development and testing. The second data

set was collected at the Aberdeen Proving Ground under

more realistic conditions to supplement our initial round

of training data. Finally, we have data collected while the

subject shadowed an experienced soldier as he traversed an

obstacle course set up by the NIST evaluation team.

Figure 3 shows a graph of performance versus the number

of rounds of boosting used to build the ensemble classifier.

We learned the ensemble classifier with 100 rounds and

used a leave-one-out cross validation scheme to evaluate

performance. In this case, we learned a classifier based on 13

of the 14 sequences and evaluated it on the 14th sequence.

As you can see in the graph, performance does vary with

i

•

psum - each class is scored as the sum of the individual

class probabilities; the final !

classification is based on

C

the highest score: argmax

j=1 p(ωi |mij (x))

i

•

pprod - the classification

is based on the most likely

"C

class: argmax j=1 p(ωi |mij (x))

i

E. Combining Classifications from Overlapping Windows

As discussed in Section IV-A, we transform a temporal

recognition problem into a spatial classification task by

Fig. 3. Event and frame-based accuracy vs. number of rounds of boosting

Fig. 4. Comparison of different binary classifier merge methods to handle

multiclass discrimination

additional rounds of boosting but basically levels off after

20 rounds. Thus we only used 20 rounds of boosting in

subsequent experiments.

Although all of the features used by the activity recognition system are selected automatically, manual inspection

reveals that they are often easily interpreted. For example,

one of the primary features used to classify ”shaking hands”

is the frequency of the accelerometer mounted on the right

wrist. Interestingly, the learning algorithm selected high

frequency energy in the chest and thigh sensors as useful

features for detecting the ”driving” activity. We believe this

is due to engine vibration detected by the sensor when the

soldier was seated in the humvee. This cue highlights the

strength of automatic feature selection during learning since

high-frequency vibration is quite discriminative but might

not have been obvious to a system engineer if features had

to be manually specified.

The official performance metric established by the NIST

evaluation team measured recognition performance during

those times when one of the 14 identified soldier activities

occurred. We call the unlabeled time the “null activity”

to indicate that it constitutes a mix of behaviors related

only by being outside the set of pre-identified activities.

Importantly, the null activity may constitute a relatively

large percentage of time and contain activity that is similar

to the identified activities. It is therefore very difficult to

model the null class or to detect its presence implicitly as

low confidence classifications for the identified activities.

Performance measures for our approach in both cases (i.e.,

when the null activity is included and when it is ignored)

are given in figure 4.

As discussed in Section IV-D, there are two common

approaches for combining binary classifiers into a multiclass

discrimination framework. Figure 4 displays the perfor-

Fig. 5. Error division diagrams comparing three multiclass methods both

with and without the null class. The bold line separates “serious errors”

from boundary and thresholding errors.

mance using each of the methods. The performance is

fairly stable across the different methods, though the inferior

accuracy achieved using the msum method validates the idea

that while the margin does indicate class membership, its

value is not generally commensurate across different classifiers. Interestingly, although the recognition performance

is comparable, the average OVO training time was less

than half that of the OVA methods (11.9 vs. 29.9 minutes

without a null model and 23.9 vs. 47.3 minutes including

the null activity). Thus, selecting an OVO method can either

significantly reduce training time or allow the use of more

sophisticated weak learners or additional rounds of boosting

without increasing the run time for model learning.

We can visualize and compare the trade-offs between

various event level errors and frame level errors using

Error Division Diagrams (EDDs). EDDs allow us to view

the nuances of the system’s performance not completely

described by the standard accuracy metric involving substitution, deletions, and insertions. For example, EDDs allow

comparisons of errors caused by boundary discrepancies.

These discrepancies include both underfilling (falling short

of the boundary) and overflowing (surpassing the boundary).

EDDs also simultaniously compare the fragmentation of

an event into multiple smaller events and the merging of

multiple events into a single event. The different error types

and a more detailed explanation of EDDs can be found in

Minnen et al. [13]. Figure 5 shows EDDs for three different

multiclass methods. The EDDs show that the majority of

the errors are substitution or insertion errors.

Finally, Figure 6 shows a color-coded activity timeline

Fig. 6. Activity timeline showing the results of four system variations on one of the parking deck sequences. The top row (GT) shows the manually

specified ground truth labels. System A was trained without any knowledge of the null class, while System C learned an explicit model of the null activity.

Systems B and D are temporally smoothed versions of Systems A and C, respectively. In the smoothed systems, there is less fluctuation in the class labels.

Also note that Systems A and B never detect the null activity, and so their performance would be quite low if the null class was included in the evaluation.

Systems C and D, however, perform well over the entire sequence.

that provides a high-level overview of the results of four system variations. Each row, labeled A-D, represents the results

of a different system. These results can be compared to the

top row, which shows the manually specified ground truth

activities. The diagram depicts the effects of two system

choices: explicit modeling of the null activity (Systems C

and D) and temporal smoothing (Systems B and D).

As expected, Systems A and B perform well over the

identified activities but erroneously label all null activity

as a known class. Many of the mistakes seem reasonable

and potentially indicative of ground truth labeling inconsistencies. For example, walking before raising a weapon,

crawling before and after lying, and opening a door between

driving and walking are all quite plausible. Systems C and

D, however, provide much better overall performance by

explicitly modeling and labeling null activity. In particular,

System D, which also includes temporal smoothing, performs very well and fixes several mistakes in System C by

removing short insertion and deletion errors.

VI. C ONCLUSION

The activity recognition component of the Soldier Assist

System can accurately detect and recognize many lowlevel activities considered important by soldiers and other

subject matter experts. By computing aggregate features

over a window of sensor readings, we can transform a

difficult temporal recognition problem into a simpler spatial

modeling task. Using a boosting framework over 1D classifiers provides an efficient and robust method for selecting

discriminating features. This is very important for an onbody, distributed sensor network in which some sensors may

be uninformative or even distracting for detecting certain

activities. Finally, we show that performance is relatively

unaffected by the choice of multiclass aggregation method,

though one-vs-one methods lead to a considerable reduction

in training time.

VII. ACKNOWLEDGMENTS

This research was supported and funded by DARPA’s

ASSIST project, contract number NBCH-C-05-0097.

R EFERENCES

[1] DARPA, “ASSIST BAA #04-38, http://www.darpa.mil/ipto/

solicitations/open/04-38 PIP.htm,” November 2006.

[2] J. Ward, P. Lukowicz, and G. Tröster, “Evaluating performance in

continuous context recognition using event-driven error characterisation,” in Proceedings of LoCA 2006, May 2006, pp. 239–255.

[3] N. Kern, S. Antifakos, B. Schiele, and A. Schwaninger, “A model

for human interruptability: Experimental evaluation and automatic

estimation from wearable sensors.” in ISWC.

IEEE Computer

Society, 2004, pp. 158–165.

[4] M. Stäger, P. Lukowicz, N. Perera, T. von Büren, G. Tröster, and

T. Starner, “Soundbutton: Design of a low power wearable audio

classification system.” in ISWC. IEEE Computer Society, 2003, pp.

12–17.

[5] P. Lukowicz, J. Ward, H. Junker, M. Stäger, G. Tröster, A. Atrash,

and T. Starner, “Recognizing workshop activity using body worn

microphones and accelerometers,” in Pervasive Computing, 2004, pp.

18–22.

[6] T. Westeyn, K. Vadas, X. Bian, T. Starner, and G. D. Abowd, “Recognizing mimicked autistic self-stimulatory behaviors using hmms.” in

Ninth IEEE Int. Symp. on Wearable Computers, October 18-21 2005,

pp. 164–169.

[7] L. Bao and S. S. Intille, “Activity recognition from user-annotated

acceleration data,” in Pervasive. Berlin Heidelberg: Springer-Verlag,

2004, pp. 1–17.

[8] J. Lester, T. Choudhury, N. Kern, G. Borriello, and B. Hannaford,

“A hybrid discriminative/generative approach for modeling human

activities,” in Nineteenth International Joint Conference on Artificial

Intelligence, July 30 - August 5 2005, pp. 766–772.

[9] A. Raj, A. Subramanya, D. Fox, and J. Bilmes, “Rao-blackwellized

particle filters for recognizing activities and spatial context from

wearable sensors,” in Experimental Robotics: The 10th International

Symposium, 2006.

[10] R. E. Schapire, “A brief introduction to boosting,” in IJCAI, 1999,

pp. 1401–1406.

[11] P. Viola and M. Jones, “Rapid object detection using a boosted cascade of simple features,” in Computer Vision and Pattern Recognition,

2001.

[12] J. Platt, “Probabilistic outputs for support vector machines and

comparisons to regularized likelihood methods,” in Advances in Large

Margin Classifiers. MIT Press, 1999.

[13] D. Minnen, T. Westeyn, T. Starner, J. Ward, and P. Lukowicz,

“Performance metrics and evaluation issues for continuous activity

recognition,” in Performance Metrics for Intelligent Systems, 2006.