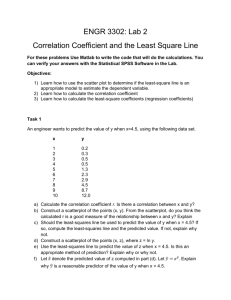

Document

advertisement

Different Nonlinear Least Squares

Some slides are from J.P. Lewis, who was at that time at Stanford University.

Setup

• Let $f$ be a function such that $a \in \mathbb{R}^n \mapsto f(a, x) \in \mathbb{R}$, where $x$ is a vector of parameters

• Let $\{a_k, b_k\}$ be a set of measurements/constraints.

We fit $f$ to the data by solving:

$$\min_x \; \frac{1}{2} \sum_k \big(b_k - f(a_k, x)\big)^2$$

Regularized Least-Squares

or

$$\min_x \sum_k r_k^2, \qquad \text{with } r_k = b_k - f(a_k, x)$$
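To make the setup concrete, here is a minimal Python sketch that evaluates the residuals $r_k$ and the objective. The exponential model `model(a, x) = x[0] * exp(x[1] * a)`, the parameter values and the noise-free synthetic data are hypothetical choices for illustration, not taken from the slides. The objective uses the $\frac{1}{2}$ factor of the first formulation, which does not change the minimizer.

```python
import math

# Hypothetical model for illustration only: f(a, x) = x[0] * exp(x[1] * a).
def model(a, x):
    return x[0] * math.exp(x[1] * a)

def residuals(x, data):
    """r_k = b_k - f(a_k, x) for every measurement (a_k, b_k)."""
    return [b - model(a, x) for a, b in data]

def error(x, data):
    """E(x) = 1/2 * sum_k r_k^2."""
    return 0.5 * sum(r * r for r in residuals(x, data))

# Synthetic, noise-free measurements generated from a hypothetical x_true.
x_true = (2.0, -0.5)
data = [(a, model(a, x_true)) for a in (0.0, 0.5, 1.0, 1.5, 2.0)]

print(error(x_true, data))      # 0.0: the generating parameters fit exactly
print(error((1.0, 0.0), data))  # positive for a different parameter guess
```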

Approximation using Taylor series

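$$E(x + h) = E(x) + \nabla E(x)^T h + \frac{1}{2} h^T H_E(x)\, h + O(\|h\|^3)$$

(note the transpose on $\nabla E(x)$), with

$$E(x) = \frac{1}{2} \sum_{j=1}^{m} r_j^2, \qquad J_{ji} = \frac{\partial r_j}{\partial x_i}, \qquad (H_{r_j})_{ik} = \frac{\partial^2 r_j}{\partial x_i\, \partial x_k}$$

$$\nabla E = \sum_{j=1}^{m} r_j \nabla r_j = J^T r$$

$$H_E = \sum_{j=1}^{m} \nabla r_j \nabla r_j^T + \sum_{j=1}^{m} r_j H_{r_j} = J^T J + \sum_{j=1}^{m} r_j H_{r_j}$$

$H_E$ is a symmetric matrix.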
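The identity $\nabla E = J^T r$ is easy to check numerically. The sketch below reuses the hypothetical exponential model $f(a, x) = x_0 e^{x_1 a}$ (an assumption, not from the slides), builds $J$ analytically, and compares $J^T r$ with a central finite-difference gradient of $E$. Note the sign: because $r_j = b_j - f(a_j, x)$, the Jacobian of the residuals is minus the Jacobian of $f$.

```python
import math

# Same hypothetical model as in the setup: f(a, x) = x[0] * exp(x[1] * a).
def model(a, x):
    return x[0] * math.exp(x[1] * a)

def residuals(x, data):
    return [b - model(a, x) for a, b in data]

def error(x, data):
    return 0.5 * sum(r * r for r in residuals(x, data))

def jacobian(x, data):
    """J[j][i] = d r_j / d x_i; since r_j = b_j - f(a_j, x), J = -df/dx."""
    rows = []
    for a, _ in data:
        e = math.exp(x[1] * a)
        rows.append([-e, -x[0] * a * e])
    return rows

def grad_jtr(x, data):
    """Gradient of E computed as J^T r."""
    J, r = jacobian(x, data), residuals(x, data)
    return [sum(J[j][i] * r[j] for j in range(len(r))) for i in range(len(x))]

def grad_fd(x, data, eps=1e-6):
    """Central finite-difference gradient of E, used only as a check."""
    g = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += eps
        xm[i] -= eps
        g.append((error(xp, data) - error(xm, data)) / (2 * eps))
    return g

data = [(a, model(a, (2.0, -0.5))) for a in (0.0, 0.5, 1.0, 1.5, 2.0)]
x = [1.0, 0.0]
print(grad_jtr(x, data))
print(grad_fd(x, data))  # should agree closely with the line above
```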


Existence of minimum

In general, the methods below converge to a local minimum.

A local minimum is characterized by:

1. $\nabla E(x_{\min}) = 0$

2. $h^T H_E(x_{\min})\, h \ge 0$ for all $h$ small enough (i.e. $H_E(x_{\min})$ is positive semi-definite)
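As a small sketch, the code below checks the two conditions for the Rosenbrock function used in the examples that follow, with its hand-derived gradient and Hessian. The helper names are hypothetical, and the positive semi-definiteness test (all principal minors nonnegative) is valid only for symmetric 2×2 matrices. Both conditions are necessary; at (1, 1) the Hessian is in fact positive definite, which also makes the point a strict local minimum.

```python
def rosen_grad(x, y):
    """Gradient of f(x, y) = (1 - x)^2 + 100 (y - x^2)^2."""
    return (-2 * (1 - x) - 400 * x * (y - x * x), 200 * (y - x * x))

def rosen_hess(x, y):
    """Entries (f_xx, f_xy, f_yy) of the symmetric Hessian of the same f."""
    return (2 - 400 * (y - x * x) + 800 * x * x, -400 * x, 200.0)

def is_psd_2x2(a, b, c):
    """[[a, b], [b, c]] is PSD iff a >= 0, c >= 0 and a*c - b^2 >= 0."""
    return a >= 0 and c >= 0 and a * c - b * b >= 0

def is_local_min_candidate(x, y, tol=1e-9):
    """Condition 1 (zero gradient) and condition 2 (PSD Hessian)."""
    gx, gy = rosen_grad(x, y)
    return abs(gx) < tol and abs(gy) < tol and is_psd_2x2(*rosen_hess(x, y))

print(is_local_min_candidate(1.0, 1.0))   # True: gradient 0, Hessian (802, -400, 200)
print(is_local_min_candidate(0.0, 0.01))  # False: nonzero gradient, indefinite Hessian
```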

Near $x_{\min}$ the gradient term vanishes, so

$$E(x_{\min} + h) \approx E(x_{\min}) + \frac{1}{2} h^T H_E(x_{\min})\, h$$

[Figure: $E(x)$ plotted around $x_{\min}$]
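A quick numerical sketch of this approximation, on the Rosenbrock function used in the slides that follow: at $x_{\min} = (1, 1)$ the function value is 0, so the ratio between the true increase and the quadratic term $\frac{1}{2} h^T H h$ should approach 1 as $h$ shrinks. The step direction (1, 1) and the step sizes are arbitrary choices; the Hessian entries (802, -400, 200) are those of the Rosenbrock function at (1, 1).

```python
def rosen(x, y):
    return (1 - x) ** 2 + 100 * (y - x * x) ** 2

# Hessian of the Rosenbrock function at x_min = (1, 1).
H = ((802.0, -400.0), (-400.0, 200.0))

def quadratic_term(u, v):
    """1/2 h^T H h for h = (u, v)."""
    return 0.5 * (H[0][0] * u * u + 2 * H[0][1] * u * v + H[1][1] * v * v)

ratios = []
for t in (1e-1, 1e-2, 1e-3):
    actual = rosen(1 + t, 1 + t) - rosen(1, 1)  # increase over the minimum
    ratios.append(actual / quadratic_term(t, t))
print(ratios)  # approaches 1 as the step shrinks
```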


A nonlinear function for the examples: the Rosenbrock function

$$z = f(x, y) = (1 - x)^2 + 100\,(y - x^2)^2$$

with its global minimum at (1, 1).
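To use the Rosenbrock function with the least-squares machinery, it can be written as a sum of two squared residuals, $r_1 = 1 - x$ and $r_2 = 10\,(y - x^2)$, so that $f = r_1^2 + r_2^2 = 2E$. The Python sketch below (helper names are hypothetical) encodes these residuals and their Jacobian. At the minimum both residuals vanish, so the $\sum_j r_j H_{r_j}$ term drops out and $J^T J$ equals $H_E$, which is half the Hessian of $f$.

```python
def rosen(x, y):
    return (1 - x) ** 2 + 100 * (y - x * x) ** 2

def rosen_residuals(x, y):
    """r = (1 - x, 10 (y - x^2)); the sum of their squares is rosen(x, y)."""
    return (1 - x, 10 * (y - x * x))

def rosen_jacobian(x, y):
    """J[j][i] = d r_j / d x_i with variables ordered (x, y)."""
    return ((-1.0, 0.0), (-20 * x, 10.0))

def jtj(J):
    """Entries (m00, m01, m11) of the symmetric 2x2 matrix J^T J."""
    (a, b), (c, d) = J
    return (a * a + c * c, a * b + c * d, b * b + d * d)

r = rosen_residuals(0.3, -0.7)
print(rosen(0.3, -0.7), r[0] ** 2 + r[1] ** 2)  # equal up to rounding
print(jtj(rosen_jacobian(1.0, 1.0)))            # (401.0, -200.0, 100.0)
```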


Overview of different LS-type algorithms

• Steepest (gradient) descent

• Newton's method

• Gauss-Newton method

• Levenberg-Marquardt method


Gradient descent algorithm

• Start at an initial position $x_0$

• Until convergence:

– Find the step $dx_k$ that minimizes a local approximation of $E$

– $x_{k+1} = x_k + dx_k$

This produces a sequence $x_0, x_1, \dots, x_n$ such that $E(x_0) > E(x_1) > \dots > E(x_n)$.


Steepest (gradient) descent

Step:

$$x_{k+1} = x_k - \alpha \nabla E(x_k)$$

where $\alpha$ is chosen such that $E(x_{k+1}) < E(x_k)$, using a line search algorithm:

$$\min_\alpha E\big(x_k - \alpha \nabla E(x_k)\big)$$
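A minimal Python sketch of steepest descent on the Rosenbrock function follows. As an assumption, the exact line search over $\alpha$ is replaced by a simple backtracking (Armijo) search, which only guarantees a sufficient decrease. The constants (`shrink=0.5`, `c=1e-4`), the starting point (-1.2, 1) and the 1000-iteration budget are illustrative choices, so the path will not match the slide's plot. The sketch works on $f$ directly, which has the same minimizer and descent directions as $E = f/2$.

```python
def rosen(p):
    """Rosenbrock function f(x, y) = (1 - x)^2 + 100 (y - x^2)^2."""
    x, y = p
    return (1 - x) ** 2 + 100 * (y - x * x) ** 2

def rosen_grad(p):
    x, y = p
    return (-2 * (1 - x) - 400 * x * (y - x * x), 200 * (y - x * x))

def backtracking_alpha(f, p, g, alpha=1.0, shrink=0.5, c=1e-4):
    """Armijo backtracking along -g: shrink alpha until
    f(p - alpha g) <= f(p) - c * alpha * |g|^2. Returns 0.0 if none is found."""
    fp = f(p)
    gg = g[0] * g[0] + g[1] * g[1]
    while alpha > 1e-12:
        q = (p[0] - alpha * g[0], p[1] - alpha * g[1])
        if f(q) <= fp - c * alpha * gg:
            return alpha
        alpha *= shrink
    return 0.0

def steepest_descent(f, grad, p0, iters=1000):
    """x_{k+1} = x_k - alpha_k grad f(x_k); returns final point and f history."""
    p, history = p0, [f(p0)]
    for _ in range(iters):
        g = grad(p)
        alpha = backtracking_alpha(f, p, g)
        if alpha == 0.0:
            break  # no acceptable step: treat as converged
        p = (p[0] - alpha * g[0], p[1] - alpha * g[1])
        history.append(f(p))
    return p, history

p, history = steepest_descent(rosen, rosen_grad, (-1.2, 1.0))
print(history[0], history[-1], p)
```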

[Figure: steepest descent iterates $x_1, x_2, \dots, x_k, x_{k+1}$ approaching $x_{\min}$, each step taken along $-\nabla E(x_k)$; a second panel shows the line search in the plane of the steepest descent direction.]

Rosenbrock function (1000 iterations)


Newton’s method

• Step: second-order approximation

$$E(x_k + h) \approx N(h) = E(x_k) + \nabla E(x_k)^T h + \frac{1}{2} h^T H_E(x_k)\, h$$

At the minimum of $N$:

$$\nabla N(h) = 0 \;\Rightarrow\; \nabla E(x_k) + H_E(x_k)\, h = 0 \;\Rightarrow\; h = -H_E(x_k)^{-1} \nabla E(x_k)$$

$$x_{k+1} = x_k - H_E(x_k)^{-1} \nabla E(x_k)$$
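The Newton update can be sketched in Python for the two-variable Rosenbrock function, solving $H(x_k)\, h = -\nabla(x_k)$ with Cramer's rule instead of forming the inverse. This is a pure Newton iteration with no line search or safeguard, and the helper names are hypothetical. It applies Newton to $f = 2E$, which gives the same step as for $E$ because the factor 2 cancels.

```python
def rosen_grad(x, y):
    """Gradient of f(x, y) = (1 - x)^2 + 100 (y - x^2)^2."""
    return (-2 * (1 - x) - 400 * x * (y - x * x), 200 * (y - x * x))

def rosen_hess(x, y):
    """Symmetric Hessian of f as (f_xx, f_xy, f_yy)."""
    return (2 - 400 * (y - x * x) + 800 * x * x, -400 * x, 200.0)

def solve_2x2(a, b, c, r0, r1):
    """Solve [[a, b], [b, c]] h = (r0, r1) by Cramer's rule."""
    det = a * c - b * b
    if det == 0:
        raise ValueError("singular Hessian: Newton step undefined")
    return ((c * r0 - b * r1) / det, (a * r1 - b * r0) / det)

def newton(x, y, iters=50, gtol=1e-12):
    """Pure Newton: x_{k+1} = x_k - H(x_k)^{-1} grad(x_k), no safeguards."""
    path = [(x, y)]
    for _ in range(iters):
        gx, gy = rosen_grad(x, y)
        if gx * gx + gy * gy < gtol * gtol:
            break
        hx, hy = solve_2x2(*rosen_hess(x, y), -gx, -gy)  # H h = -grad
        x, y = x + hx, y + hy
        path.append((x, y))
    return path

for point in newton(-1.2, 1.0):
    print(point)
```

Nothing in this pure iteration enforces $f(x_{k+1}) < f(x_k)$, so the $f$ values along `path` are not guaranteed to decrease.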

[Figure: Newton iterates $x_1, x_2, \dots, x_k$ approaching $x_{\min}$; from $x_k$ the step $-H_E(x_k)^{-1} \nabla E(x_k)$ goes to the minimum of the quadratic model $E(x_k) + \nabla E(x_k)^T h + \frac{1}{2} h^T H_E(x_k)\, h$, giving $x_{k+1}$.]

Problem

• If $H_E(x_k)$ is not positive definite, then $-H_E(x_k)^{-1} \nabla E(x_k)$ may not be a descent direction: the step can increase the error function

• Remedy: use a positive semi-definite approximation of the Hessian based on the Jacobian (quasi-Newton methods)
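The following sketch makes the problem concrete on the Rosenbrock function. At the point (0, 0.01), chosen for illustration, the Hessian has $f_{xx} = -2$ and $f_{yy} = 200$, so it is indefinite. The Newton direction then has a positive slope $\nabla f^T h$, and the full step increases the error, while the Gauss-Newton direction built from $J^T J$ (with $r = (1 - x,\ 10\,(y - x^2))$) goes downhill. Helper names are hypothetical.

```python
def rosen(x, y):
    return (1 - x) ** 2 + 100 * (y - x * x) ** 2

def rosen_grad(x, y):
    return (-2 * (1 - x) - 400 * x * (y - x * x), 200 * (y - x * x))

def rosen_hess(x, y):
    return (2 - 400 * (y - x * x) + 800 * x * x, -400 * x, 200.0)

def solve_2x2(a, b, c, r0, r1):
    """Solve [[a, b], [b, c]] h = (r0, r1) by Cramer's rule."""
    det = a * c - b * b
    return ((c * r0 - b * r1) / det, (a * r1 - b * r0) / det)

x, y = 0.0, 0.01
gx, gy = rosen_grad(x, y)                        # (-2, 2)

# Newton direction from the full, here indefinite, Hessian.
nx, ny = solve_2x2(*rosen_hess(x, y), -gx, -gy)
newton_slope = gx * nx + gy * ny                 # > 0: uphill direction

# Gauss-Newton direction: J = [[-1, 0], [j10, 10]], solve (J^T J) h = -J^T r.
r0, r1 = 1 - x, 10 * (y - x * x)
j10 = -20 * x
g0, g1 = -r0 + j10 * r1, 10 * r1                 # J^T r
gnx, gny = solve_2x2(1 + j10 * j10, 10 * j10, 100.0, -g0, -g1)
gn_slope = gx * gnx + gy * gny                   # < 0: downhill direction

print(newton_slope, rosen(x + nx, y + ny) > rosen(x, y))  # positive, True
print(gn_slope)                                           # negative
```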


Gauss-Newton method

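Step: use

$$x_{k+1} = x_k - H_E(x_k)^{-1} \nabla E(x_k)$$

with the approximate Hessian

$$H_E = J^T J + \sum_{j=1}^{m} r_j H_{r_j} \approx J^T J$$

Since $\nabla E = J^T r$, the step becomes $x_{k+1} = x_k - (J^T J)^{-1} J^T r$.

Advantages:

• No second-order derivatives

• $J^T J$ is positive semi-definite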
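A minimal Gauss-Newton sketch for the Rosenbrock residuals $r = (1 - x,\ 10\,(y - x^2))$ is shown below. It forms $J^T r$ and $J^T J$ explicitly and solves the 2×2 system with Cramer's rule; helper names are hypothetical. It takes full steps with no line search, so its iteration count is not comparable to the 48 evaluations quoted for the slide's run. For this problem $\det J = -10$ everywhere, which keeps $J^T J$ nonsingular.

```python
def rosen_residuals(x, y):
    return (1 - x, 10 * (y - x * x))

def rosen_jacobian(x, y):
    """J[j][i] = d r_j / d x_i with variables ordered (x, y)."""
    return ((-1.0, 0.0), (-20 * x, 10.0))

def solve_2x2(a, b, c, r0, r1):
    """Solve [[a, b], [b, c]] h = (r0, r1) by Cramer's rule."""
    det = a * c - b * b
    return ((c * r0 - b * r1) / det, (a * r1 - b * r0) / det)

def gauss_newton(x, y, iters=5):
    path = [(x, y)]
    for _ in range(iters):
        r, J = rosen_residuals(x, y), rosen_jacobian(x, y)
        # Gradient of E = 1/2 * sum r_j^2 is J^T r.
        g0 = J[0][0] * r[0] + J[1][0] * r[1]
        g1 = J[0][1] * r[0] + J[1][1] * r[1]
        # Approximate Hessian J^T J = [[a, b], [b, c]].
        a = J[0][0] ** 2 + J[1][0] ** 2
        b = J[0][0] * J[0][1] + J[1][0] * J[1][1]
        c = J[0][1] ** 2 + J[1][1] ** 2
        hx, hy = solve_2x2(a, b, c, -g0, -g1)  # (J^T J) h = -J^T r
        x, y = x + hx, y + hy
        path.append((x, y))
    return path

for point in gauss_newton(-1.2, 1.0):
    print(point)
```

Because $r_1 = 1 - x$ is linear, the first full step already sets $x = 1$ (up to rounding), and the second step then brings $y$ to 1.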


Rosenbrock function (48 evaluations)


Levenberg-Marquardt algorithm

• Blends steepest descent and Gauss-Newton

• At each step, solve for the descent direction $h$:

$$(J^T J + \lambda I)\, h = -\nabla E(x_k)$$

If $\lambda$ is large: $h \propto -\nabla E(x_k)$ (steepest descent)

If $\lambda$ is small: $h \approx -(J^T J)^{-1} \nabla E(x_k)$ (Gauss-Newton)
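The two limits can be checked numerically. The sketch below (hypothetical helper names) solves $(J^T J + \lambda I)\, h = -J^T r$ for the Rosenbrock residuals at (-1.2, 1), using $\nabla E = J^T r$. It compares a very large $\lambda$ with the scaled steepest-descent step $-\nabla E / \lambda$, and a very small $\lambda$ with the Gauss-Newton step ($\lambda = 0$). The values $10^{8}$ and $10^{-8}$ are arbitrary.

```python
def rosen_residuals(x, y):
    return (1 - x, 10 * (y - x * x))

def rosen_jacobian(x, y):
    return ((-1.0, 0.0), (-20 * x, 10.0))

def lm_direction(x, y, lam):
    """Solve (J^T J + lam * I) h = -J^T r; return (h, grad E)."""
    r, J = rosen_residuals(x, y), rosen_jacobian(x, y)
    g0 = J[0][0] * r[0] + J[1][0] * r[1]          # grad E = J^T r
    g1 = J[0][1] * r[0] + J[1][1] * r[1]
    a = J[0][0] ** 2 + J[1][0] ** 2 + lam         # J^T J + lam * I
    b = J[0][0] * J[0][1] + J[1][0] * J[1][1]
    c = J[0][1] ** 2 + J[1][1] ** 2 + lam
    det = a * c - b * b
    h = ((c * -g0 - b * -g1) / det, (a * -g1 - b * -g0) / det)
    return h, (g0, g1)

h_gn, g = lm_direction(-1.2, 1.0, 0.0)       # Gauss-Newton step
h_small, _ = lm_direction(-1.2, 1.0, 1e-8)   # tiny damping
h_big, _ = lm_direction(-1.2, 1.0, 1e8)      # heavy damping

print(h_gn, h_small)                                    # nearly identical
print([hi * 1e8 for hi in h_big], [-gi for gi in g])    # nearly identical
```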

Managing the damping parameter λ

• General approach:

– If a step fails, increase the damping until the step is successful

– If a step succeeds, decrease the damping to take larger steps

• Improved damping:

$$\big(J^T J + \lambda\, \mathrm{diag}(J^T J)\big)\, h = -\nabla E(x_k)$$
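Putting the pieces together, here is a minimal Levenberg-Marquardt sketch for the Rosenbrock residuals. The damping schedule (divide $\lambda$ by 10 after a successful step, multiply by 10 after a failed one), the initial $\lambda = 10^{-3}$ and the stopping tolerances are common heuristics assumed here, not values from the slides, so the run is not meant to reproduce the 90 evaluations quoted below. `marquardt=True` switches from $\lambda I$ to the improved $\lambda\, \mathrm{diag}(J^T J)$ damping.

```python
def rosen_residuals(x, y):
    return (1 - x, 10 * (y - x * x))

def rosen_jacobian(x, y):
    return ((-1.0, 0.0), (-20 * x, 10.0))

def half_sq(r):
    """E = 1/2 * sum r_j^2."""
    return 0.5 * (r[0] * r[0] + r[1] * r[1])

def levenberg_marquardt(x, y, lam=1e-3, marquardt=False, iters=500, gtol=1e-10):
    e = half_sq(rosen_residuals(x, y))
    for _ in range(iters):
        r, J = rosen_residuals(x, y), rosen_jacobian(x, y)
        g0 = J[0][0] * r[0] + J[1][0] * r[1]           # grad E = J^T r
        g1 = J[0][1] * r[0] + J[1][1] * r[1]
        if g0 * g0 + g1 * g1 < gtol * gtol or lam > 1e16:
            break                                      # converged or stuck
        a = J[0][0] ** 2 + J[1][0] ** 2                # J^T J = [[a, b], [b, c]]
        b = J[0][0] * J[0][1] + J[1][0] * J[1][1]
        c = J[0][1] ** 2 + J[1][1] ** 2
        da, dc = (a, c) if marquardt else (1.0, 1.0)   # diag(J^T J) or I
        A, C = a + lam * da, c + lam * dc
        det = A * C - b * b
        hx = (C * -g0 - b * -g1) / det                 # (J^T J + lam D) h = -grad E
        hy = (A * -g1 - b * -g0) / det
        e_new = half_sq(rosen_residuals(x + hx, y + hy))
        if e_new < e:        # success: accept the step, reduce damping
            x, y, e = x + hx, y + hy, e_new
            lam /= 10
        else:                # failure: reject the step, increase damping
            lam *= 10
    return x, y

print(levenberg_marquardt(-1.2, 1.0))
print(levenberg_marquardt(-1.2, 1.0, marquardt=True))
```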


Rosenbrock function (90 evaluations)
