WHITE PAPER

DATA CENTER

www.brocade.com

World-Class Data Center Realizes Goals of Investment Protection, Energy Efficiency, and Scalability

In March of 2008, Brocade had a vision to build a world-class campus, including a new data center to demonstrate

the Brocade One concepts of unmatched simplicity, nonstop networking, investment protection, and optimized

applications. In September of 2010, the vision was realized

and we officially dedicated the new campus and data

center.

The new Brocade® campus in San Jose, California, was built to

provide the business with flexibility needed to satisfy growth for our

employees and for R&D and solution labs. Design principles of both

the campus and the data centers were flexibility, scalability, energy

efficiency, and sustainability. And the results were nothing short of

extraordinary. Three buildings provide 562,000 square feet to house

Brocade’s 2,500 employees, labs, data center, café, fitness center,

and so on. An overarching goal was thoughtful investment—and

that guided our decisions with regard to our employees, partners,

customers, and vendors as well.

Prior to the move, we had three data centers and R&D labs in five

locations with a total of about 2000 racks. On the new campus,

labs were consolidated in one building. The highly efficient electrical

and mechanical design of the Brocade data center and labs will save

over 10 million kilowatt-hours of energy annually compared with

the previous year and offset over 4500 tons of carbon dioxide emissions

annually. This paper describes

how we designed and built the data center and labs to achieve these

spectacular results.

Introduction

Looking back at the planning phase, a number of goals were mandated by the executive staff:

• Energy efficiency and sustainability

• Consolidation of footprint, while maximizing real estate investment

• Investment protection, that is, use as much of the existing equipment as possible

• Create a baseline for future growth and enhancements

The following sections describe how we accomplished these goals—followed by some best

practices and lessons learned about planning and building or refreshing a corporate data

center.

Energy Efficiency and Sustainability

Both industry best practices and innovative design played a part in delivering on the “green”

promise in the new data center.

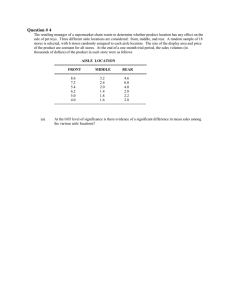

Hot Aisle/Cold Aisle

As is common in most organizations, the IT equipment load is the number one consumer of

electricity at a building level. One of the best ways to handle the heat generated by the many

devices in the data center is by using a hot aisle/cold aisle equipment floor plan. Servers and

network equipment are typically configured in standard 19” (wide) racks and rack enclosures,

in turn, are arranged for accessibility for cabling and servicing. Increasingly, however, the floor

plan for data center equipment distribution must also accommodate air flow for equipment

cooling. This requires that individual units be mounted in a rack for consistent air flow

direction (all exhaust to the rear or all exhaust to the front) and that the rows of racks be

arranged to exhaust into a common space, called a hot aisle/cold aisle plan, as shown in

Figure 1.

Figure 1. Hot aisle/cold aisle plan for consistent air flow. (Diagram: equipment rows alternate with COLD and HOT aisles; arrows show air flow.)

A hot aisle/cold aisle floor plan provides greater cooling efficiency by directing cold to hot

air flow for each equipment row into a common aisle. Each cold aisle feeds cool air for two

equipment rows while each hot aisle allows exhaust for two equipment rows, thus enabling

maximum benefit for the hot/cold circulation infrastructure. Even greater efficiency is

achieved by deploying equipment with variable-speed fans. Hot row containment also provides

a better working environment for IT personnel, with more comfortable temperatures. In the

Brocade data center and labs, we can control temperature down to the level of a pod if we need

to (two rows of equipment back to back make up a pod). The Solutions Center lab also uses

both high-end doors and more economical curtain systems as heat containment methods.
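
The alternating layout described above can be sketched in a few lines of Python. This is only an illustration of the pattern in Figure 1; the function name `aisle_plan` and the text labels are hypothetical and do not come from any Brocade tool.

```python
def aisle_plan(num_rows: int) -> list[str]:
    """Return a front-to-back floor plan for `num_rows` equipment rows.

    Aisles alternate COLD, HOT, COLD, ... so that every row draws air
    from a cold aisle on one side and exhausts into a hot aisle on the
    other, and each interior aisle is shared by two rows.
    """
    if num_rows < 1:
        raise ValueError("need at least one equipment row")
    plan = []
    for i in range(num_rows):
        plan.append("COLD aisle" if i % 2 == 0 else "HOT aisle")
        plan.append("Equipment row")
    # Close the plan with the aisle behind the last row.
    plan.append("COLD aisle" if num_rows % 2 == 0 else "HOT aisle")
    return plan


if __name__ == "__main__":
    for line in aisle_plan(3):
        print(line)
```

With three rows, the sketch reproduces the sequence of labels in Figure 1: cold aisle, row, hot aisle, row, cold aisle, row, hot aisle.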


Variable Speed Fans

Variable speed fans increase or decrease their spin rate in response to changes in equipment

temperature. As shown in Figure 2, cold air flow into equipment racks with constant speed

fans favors the hardware mounted in the lower equipment slots and thus nearer to the cold

air feed. Equipment mounted in the upper slots is heated by its own power draw as well

as the heat exhaust from the lower tiers. Use of variable speed fans in equipment racks

enables each unit to selectively apply cooling as needed, with more even utilization of cooling

throughout the equipment rack.

Figure 2. Variable speed fans in equipment racks enable more efficient distribution of cooling. (Panels: server rack with constant speed fans, where equipment at the bottom is cooler; server rack with variable speed fans, with more even cooling.)
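
As a rough illustration of how a variable speed fan responds to temperature, the sketch below maps a unit's temperature to a fan speed by linear interpolation between two set points. All thresholds and speed limits are hypothetical values chosen for the example; real equipment firmware uses vendor-specific curves.

```python
def fan_speed_percent(temp_c: float,
                      low_c: float = 25.0,     # hypothetical: at or below this, run at minimum
                      high_c: float = 40.0,    # hypothetical: at or above this, run at maximum
                      min_pct: float = 30.0,   # hypothetical minimum fan speed
                      max_pct: float = 100.0) -> float:
    """Map a unit's temperature to a fan speed by linear interpolation."""
    if high_c <= low_c:
        raise ValueError("high_c must exceed low_c")
    if temp_c <= low_c:
        return min_pct
    if temp_c >= high_c:
        return max_pct
    fraction = (temp_c - low_c) / (high_c - low_c)
    return min_pct + fraction * (max_pct - min_pct)


if __name__ == "__main__":
    # A cooler unit low in the rack and a warmer unit near the top.
    for label, temp in [("lower slot", 24.0), ("upper slot", 36.0)]:
        print(f"{label}: {fan_speed_percent(temp):.0f}% fan speed")
```

Under these assumed set points, the unit in the lower slot idles at minimum speed while the warmer unit in the upper slot spins faster, which is the more even use of cooling that Figure 2 depicts.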

Power Distribution Units

High-voltage power is fed into the data center and labs to Power Distribution Units (PDUs),

which physically sit inside these labs, minimizing the energy and line loss to the end-point.

The PDUs have shadow metering capabilities to monitor and measure energy consumption

with utility-grade precision. Green-labeled PDUs are backed up by UPS and emergency power,

while red-labeled PDUs are backed up by emergency power only. Inside the data center,

equipment is fed from both A and B power sources for redundancy, with A power having UPS

and emergency generator backup.

Figure 3. PDUs minimize energy and line loss to the end-point.

In addition to known best practices in this area, Brocade worked with a trusted vendor to

design and build a custom In-Row Cooling (IRC) system. To quote the vendor, “This may well

be the best system on the market today that’s not on the market … but will be ….” This IRC

unit provides the ultimate solution in flexibility due to its unique custom design. It can fit

into any rack space in the data center because of its similar physical footprint to the rack.

Brocade’s custom IRC is 80 percent more energy efficient than a conventional Computer Room

Air Conditioning (CRAC) unit and 70 percent more efficient than current alternative IRC units

on the market.


The IRC unit provides added reliability by utilizing three high-efficiency fans directly driven

by three EMC motors. The motors themselves are the most efficient on the market,

consuming about 70 percent less energy than the nearest competitor. Each motor operates

at 33 percent, and if a fan or motor fails, the unit automatically ramps up the remaining fans

to 66 Hz to provide the full cooling capacity of the unit.

Automated Controls with Energy Monitoring

Because vendor wattage and BTU specifications may assume maximum load conditions,

using data sheet specifications or equipment label declarations does not provide an accurate

basis for calculating equipment power draw or heat dissipation. An objective multi-point

monitoring system for measuring heat throughout the data center is really the only means to

observe and proactively respond to changes in the environment.

A number of monitoring options are available today. For example, some vendors are

incorporating temperature probes into their equipment design to provide continuous

reporting of heat levels via management software. Some solutions provide rack-mountable

systems that include both temperature and humidity probes and monitoring through a Web

interface. In addition, new monitoring software products can render a three-dimensional view

of temperature distribution across the entire data center, analogous to an infrared photo of a

heat source.

Starline Busway System

Brocade was an early adopter of the Starline Busway System, an electrical power distribution
system for mission-critical facilities. It is a simple, versatile, fast, and economical solution
for supplying power to electrical loads. It is unique in that the busway can be tapped instantly
at any location without losing uptime, so floor plans can be changed or extended quickly and
easily. Although the Starline Busway System has been on the market for a number of years, it is
still winning awards, most recently the Tech 50 award from the 2009 Pittsburgh Technology Council
in the Advanced Manufacturing category.

Although monitoring systems add cost to data center design, they are invaluable diagnostic

tools for fine-tuning airflow and equipment placement to maximize cooling and keep power

and cooling costs to a minimum. An example of a three-dimensional view of temperature in

the Brocade data center is shown in Figure 4.

Figure 4. A 6 Sigma application generates heat topologies at different heights from the floor.
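
To make the idea of multi-point monitoring concrete, here is a minimal sketch that flags sensors reading well above the room average. The sensor names, readings, and the 5-degree margin are all hypothetical; this is not the monitoring software used in the Brocade data center.

```python
from statistics import mean


def find_hot_spots(readings: dict[str, float], margin_c: float = 5.0) -> list[str]:
    """Return the names of sensors reading more than `margin_c` above the mean.

    `readings` maps a sensor name to its temperature in degrees Celsius.
    """
    if not readings:
        return []
    average = mean(readings.values())
    return sorted(name for name, temp in readings.items()
                  if temp - average > margin_c)


if __name__ == "__main__":
    # Hypothetical probe readings at two heights in two pods.
    sample = {
        "pod1-bottom": 21.0,
        "pod1-top": 24.0,
        "pod2-bottom": 22.0,
        "pod2-top": 33.0,
    }
    print(find_hot_spots(sample))
```

Comparing each probe against the room average, rather than against a data sheet figure, reflects the point made above: measured conditions, not label declarations, drive the response.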

Cooling System

The number two consumers of electricity at the building level are the chillers, followed by the
pumps that circulate cooling water to the HVAC units in the lab. Brocade selected chillers with
the highest efficiency at partial loads and cooling towers with variable frequency drives.
As a general rule, chillers never reach 100 percent load, the point at which their
high-efficiency features are triggered. However, the high-efficiency features of our chillers are
triggered at partial loads below 50 percent load, which helps conserve energy at Day One
loads.

Our chilled water system utilizes a water-side heat exchanger, which is passive and has

no electrical pumps and is capable of providing 2000 tons of free cooling without the use

of a compressor. This water-side heat exchanger provides free cooling when the outside

temperature permits. Despite what you may have heard, in Silicon Valley the sun doesn’t

shine all the time.
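
The decision between free cooling and compressor-based cooling can be sketched as a simple rule. The 2000-ton capacity comes from the text above; the wet-bulb threshold is a hypothetical placeholder, since the actual changeover conditions depend on the plant design and are not stated here.

```python
def cooling_mode(outdoor_wet_bulb_c: float,
                 load_tons: float,
                 max_wet_bulb_c: float = 10.0,  # hypothetical changeover threshold
                 free_cooling_capacity_tons: float = 2000.0) -> str:
    """Pick a cooling mode for the chilled water system.

    Free cooling is chosen only when the outside air is cool enough and
    the load fits within the heat exchanger's capacity; otherwise the
    chillers (compressors) carry the load.
    """
    if load_tons < 0:
        raise ValueError("load_tons must be non-negative")
    cool_enough = outdoor_wet_bulb_c <= max_wet_bulb_c
    within_capacity = load_tons <= free_cooling_capacity_tons
    return "free-cooling" if cool_enough and within_capacity else "chiller"


if __name__ == "__main__":
    print(cooling_mode(outdoor_wet_bulb_c=8.0, load_tons=1500.0))
    print(cooling_mode(outdoor_wet_bulb_c=18.0, load_tons=1500.0))
```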


Finally, we have a primary system only and no secondary pumping system. Before the 1980s,
primary-only systems were the norm. With the growth of high-tech and critical labs,
primary/secondary systems became prevalent and remained so until very recently. Organizations
were reluctant to do away with primary/secondary systems because they felt that doing so made
them vulnerable to the differential pressure fluctuations that might occur in a primary-only
system. In Brocade’s case, the design and selection of the correct equipment, automation, and
controls, together with the constant load, mean that there is no need for a primary/secondary
system to regulate pressure. We simply don’t need it and have avoided the initial capital
investment cost and the associated inefficiencies.

Figure 5. Main cooling system substations.

Consolidation

By consolidating three data centers into one, we decreased our physical footprint by
30 percent and data center energy consumption by 37 percent.

Racks

One of the decisions that we made was to use taller racks—8’ tall instead of 6’—with standard

19” width. We were able to consolidate 2011 racks into the 1860-rack capacity for all labs.

In 2011, it is projected that we will be occupying 1587 of these racks, with 300 targeted for

growth.

With these taller racks and greater rack count, a question that comes to mind is how to fit
them into the data center and labs. We used a flat floor design rather than a raised floor.
A flat floor allows more vertical rack space and, because it does not require ramps and
stairs for access, it allowed 10 percent more racks than a conventional raised floor system.

Cabling

One of the most critical areas for consolidation is the proliferation of cables in
high-density racks with high-port-count devices. In both the data center and other labs, two
separate conveyance systems were deployed: one for structured and horizontal cabling
(Systimax) and one for ad hoc cabling, both in the data center (Systimax, Tyco) and in
the solutions and proof-of-concept labs (Corning, Splice, Tyco, and Systimax). This lab also
features a flexible two-tier cable conveyance design that provides both a structured cable tray
conveyance system for the backbone network and a high-capacity open cable conveyance
system for ad hoc cabling.

In the Proof-of-Concept (PoC) lab, Brocade products are physically connected to an optical

switching matrix that lets us rapidly build out and store network topologies for each customer.

This allows us to share equipment and minimize set up time.


Investment Protection

The new data center is initially Tier 1 (no N+1 power or cooling), but the groundwork is
in place for a Tier 2 infrastructure (generator pads, UPS, additional chillers) on which N+1
systems can be placed when the business requirements exist.

The data center has installed the latest in fire suppression technology. This includes a
double-action, pre-action dry pipe system that prevents water leaks and false alarms from
interrupting business-critical applications. Fire and smoke are monitored by a very early
smoke detection system, which senses the smallest amount of combustion or smoke particles and
activates a dry green agent fire suppression system that stops fire from spreading by removing
the oxygen in the space.

Campus Sustainability

To summarize, by meeting sustainability challenges head on:

• This campus uses 40 percent less water than a standard office or campus building. This

is achieved by the collection of rain and run-off water recycled, filtered, and reused for

irrigation. The selection of sustainable trees and landscaping features, and the selection of

no-water and low-flow water fixtures throughout the campus help us accomplish this.

• The campus utilizes a 550 kW photovoltaic (PV) system attached to our garage structure

that harnesses the sun’s energy to offset power consumption and the carbon footprint. The

power from the PV system is fed into Building 2. This system is capable of offsetting the

total amount of power consumed by our data center.

• By strategically implementing best practices at the building and lab level, we achieved a

calculated Power Usage Effectiveness (PUE) of 1.3, one of the lowest in data center design.

The design has also allowed us to exceed California energy code requirements by 16 percent.

• Due to the selection of a sustainable site, water efficiency, energy efficiency, selection and

reuse of renewable materials, indoor air quality achievements, and innovation of design,

the campus is scheduled to receive U.S. Green Building Council

Leadership in Energy and Environmental Design (LEED) certification: Silver for core and

shell and Gold for corporate interiors.

Figure 6. Power Usage Effectiveness (PUE): a measure of data center energy efficiency calculated by dividing the total data center energy consumption by the energy consumption of the IT computing equipment.
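
The PUE definition above translates directly into a short calculation. In the sketch below, the function names and the sample energy figures are hypothetical and chosen only so that the result matches the calculated PUE of 1.3 cited in this paper; they are not measured values from the Brocade data center.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_fraction(pue_value: float) -> float:
    """Share of total energy spent on cooling, power loss, and other non-IT loads."""
    if pue_value < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return 1.0 - 1.0 / pue_value


if __name__ == "__main__":
    # Hypothetical annual figures: 1,000 MWh of IT load, 1,300 MWh in total.
    value = pue(total_facility_kwh=1_300_000, it_equipment_kwh=1_000_000)
    print(f"PUE = {value:.2f}")
    print(f"Non-IT overhead = {overhead_fraction(value):.1%} of total energy")
```

A PUE of 1.0 would mean that every kilowatt-hour goes to IT equipment. At 1.3, roughly 23 percent of total energy goes to cooling, power distribution, and other overhead.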

About Brocade

Founded in 1995, Brocade is an industry leader in providing reliable, high-performance

network solutions that help the world’s leading organizations transition smoothly to a

virtualized world where applications and information reside anywhere.

Today, Brocade is extending its proven data center expertise across the entire network with

future-proofed solutions built for consolidation, network convergence, virtualization, and

cloud computing.

Headquartered in San Jose, California, the company has approximately 4000 employees

worldwide and serves a wide range of industries and customers in more than 160 countries.

With a complete family of Ethernet, storage, and converged networking solutions, Brocade

helps organizations achieve their most critical business objectives through:

• Unmatched simplicity to overcome today’s complexity

• Non-stop networking to maximize business uptime

• Optimized applications to increase business agility and gain a competitive advantage

• Investment protection to provide a smooth transition to new technologies while leveraging

existing infrastructure

Brocade combines a proven track record of expertise, innovation, and new technology

development with open standards leadership and strategic partnerships with the world’s

leading IT companies. This extensive partner ecosystem enables truly best-in-class business

solutions. To find out more about Brocade, visit www.brocade.com.

Corporate Headquarters

San Jose, CA USA

T: +1-408-333-8000

info@brocade.com

European Headquarters

Geneva, Switzerland

T: +41-22-799-56-40

emea-info@brocade.com

Asia Pacific Headquarters

Singapore

T: +65-6538-4700

apac-info@brocade.com

© 2010 Brocade Communications Systems, Inc. All Rights Reserved. 10/10 GA-WP-1537-00

Brocade, the B-wing symbol, BigIron, DCFM, DCX, Fabric OS, FastIron, IronView, NetIron, SAN Health, ServerIron,

TurboIron, and Wingspan are registered trademarks, and Brocade Assurance, Brocade NET Health, Brocade One,

Extraordinary Networks, MyBrocade, and VCS are trademarks of Brocade Communications Systems, Inc., in the United

States and/or in other countries. Other brands, products, or service names mentioned are or may be trademarks or

service marks of their respective owners.

Notice: This document is for informational purposes only and does not set forth any warranty, expressed or implied,

concerning any equipment, equipment feature, or service offered or to be offered by Brocade. Brocade reserves the

right to make changes to this document at any time, without notice, and assumes no responsibility for its use. This

informational document describes features that may not be currently available. Contact a Brocade sales office for

information on feature and product availability. Export of technical data contained in this document may require an

export license from the United States government.