Human response to sound

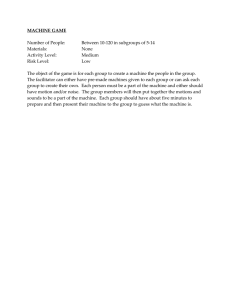
