All Sessions - Acoustical Society of America

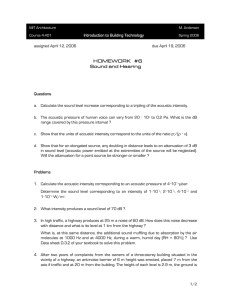

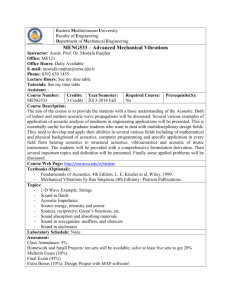
