UCSD Blueprint for the Digital University

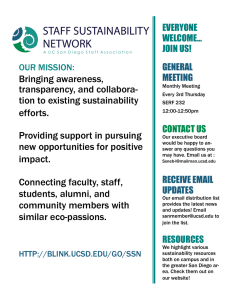

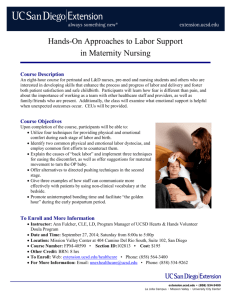
