Experimental and Quasi-Experimental Designs for Generalized Causal Inference

William R. Shadish
The University of Memphis

Thomas D. Cook
Northwestern University

Donald T. Campbell

Houghton Mifflin Company
2002
Boston New York

1. Experiments and Generalized Causal Inference

Ex·per·i·ment (ĭk-spĕr′ə-mənt): [Middle English from Old French from Latin experimentum, from experiri, to try; see per- in Indo-European Roots.] n. Abbr. exp., expt. 1. a. A test under controlled conditions that is made to demonstrate a known truth, examine the validity of a hypothesis, or determine the efficacy of something previously untried. b. The process of conducting such a test; experimentation. 2. An innovative act or procedure: "Democracy is only an experiment in government" (William Ralph Inge).

Cause (kôz): [Middle English from Old French from Latin causa, reason, purpose.] n. 1. a. The producer of an effect, result, or consequence. b. The one, such as a person, an event, or a condition, that is responsible for an action or a result. v. 1. To be the cause of or reason for; result in. 2. To bring about or compel by authority or force.

To many historians and philosophers, the increased emphasis on experimentation in the 15th and 17th centuries marked the emergence of modern science from its roots in natural philosophy (Hacking, 1983). Drake (1981) cites Galileo's 1612 treatise Bodies That Stay Atop Water, or Move in It as ushering in modern experimental science, but earlier claims can be made favoring William Gilbert's 1600 study On the Loadstone and Magnetic Bodies, Leonardo da Vinci's (1452-1519) many investigations, and perhaps even the 5th-century B.C. philosopher Empedocles, who used various empirical demonstrations to argue against Parmenides (Jones, 1969a, 1969b). In the everyday sense of the term, humans have been experimenting with different ways of doing things from the earliest moments of their history. Such experimenting is as natural a part of our life as trying a new recipe or a different way of starting campfires.


However, the scientific revolution of the 17th century departed in three ways from the common use of observation in natural philosophy at that time. First, it increasingly used observation to correct errors in theory. Throughout history, natural philosophers often used observation in their theories, usually to win philosophical arguments by finding observations that supported their theories. However, they still subordinated the use of observation to the practice of deriving theories from "first principles," starting points that humans know to be true by our nature or by divine revelation (e.g., the assumed properties of the four basic elements of fire, water, earth, and air in Aristotelian natural philosophy). According to some accounts, this subordination of evidence to theory degenerated in the 17th century: "The Aristotelian principle of appealing to experience had degenerated among philosophers into dependence on reasoning supported by casual examples and the refutation of opponents by pointing to apparent exceptions not carefully examined" (Drake, 1981, p. xxi). When some 17th-century scholars then began to use observation to correct apparent errors in theoretical and religious first principles, they came into conflict with religious or philosophical authorities, as in the case of the Inquisition's demands that Galileo recant his account of the earth revolving around the sun. Given such hazards, the fact that the new experimental science tipped the balance toward observation and away from dogma is remarkable. By the time Galileo died, the role of systematic observation was firmly entrenched as a central feature of science, and it has remained so ever since (Harré, 1981).

Second, before the 17th century, appeals to experience were usually based on passive observation of ongoing systems rather than on observation of what happens after a system is deliberately changed. After the scientific revolution in the 17th century, the word experiment (terms in boldface in this book are defined in the Glossary) came to connote taking a deliberate action followed by systematic observation of what occurred afterward. As Hacking (1983) noted of Francis Bacon: "He taught that not only must we observe nature in the raw, but that we must also 'twist the lion's tail', that is, manipulate our world in order to learn its secrets" (p. 149). Although passive observation reveals much about the world, active manipulation is required to discover some of the world's regularities and possibilities (Greenwood, 1989). As a mundane example, stainless steel does not occur naturally; humans must manipulate it into existence. Experimental science came to be concerned with observing the effects of such manipulations.

Third, early experimenters realized the desirability of controlling extraneous influences that might limit or bias observation. So telescopes were carried to higher points at which the air was clearer, the glass for microscopes was ground ever more accurately, and scientists constructed laboratories in which it was possible to use walls to keep out potentially biasing ether waves and to use (eventually sterilized) test tubes to keep out dust or bacteria. At first, these controls were developed for astronomy, chemistry, and physics, the natural sciences in which interest in science first bloomed. But when scientists started to use experiments in areas such as public health or education, in which extraneous influences are harder to control (e.g., Lind, 1753), they found that the controls used in natural science in the laboratory worked poorly in these new applications. So they developed new methods of dealing with extraneous influence, such as random assignment (Fisher, 1925) or adding a nonrandomized control group (Coover & Angell, 1907). As theoretical and observational experience accumulated across these settings and topics, more sources of bias were identified and more methods were developed to cope with them (Dehue, 2000).

Today, the key feature common to all experiments is still to deliberately vary something so as to discover what happens to something else later, that is, to discover the effects of presumed causes. As laypersons we do this, for example, to assess what happens to our blood pressure if we exercise more, to our weight if we diet less, or to our behavior if we read a self-help book. However, scientific experimentation has developed increasingly specialized substance, language, and tools, including the practice of field experimentation in the social sciences that is the primary focus of this book. This chapter begins to explore these matters by (1) discussing the nature of causation that experiments test, (2) explaining the specialized terminology (e.g., randomized experiments, quasi-experiments) that describes social experiments, (3) introducing the problem of how to generalize causal connections from individual experiments, and (4) briefly situating the experiment within a larger literature on the nature of science.

EXPERIMENTS AND CAUSATION

A sensible discussion of experiments requires both a vocabulary for talking about causation and an understanding of key concepts that underlie that vocabulary.

Defining Cause, Effect, and Causal Relationships

Most people intuitively recognize causal relationships in their daily lives. For instance, you may say that another automobile's hitting yours was a cause of the damage to your car; that the number of hours you spent studying was a cause of your test grades; or that the amount of food a friend eats was a cause of his weight. You may even point to more complicated causal relationships, noting that a low test grade was demoralizing, which reduced subsequent studying, which caused even lower grades. Here the same variable (low grade) can be both a cause and an effect, and there can be a reciprocal relationship between two variables (low grades and not studying) that cause each other.

Despite this intuitive familiarity with causal relationships, a precise definition of cause and effect has eluded philosophers for centuries.¹ Indeed, the definitions of terms such as cause and effect depend partly on each other and on the causal relationship in which both are embedded. So the 17th-century philosopher John Locke said: "That which produces any simple or complex idea, we denote by the general name cause, and that which is produced, effect" (1975, p. 324) and also: "A cause is that which makes any other thing, either simple idea, substance, or mode, begin to be; and an effect is that, which had its beginning from some other thing" (p. 325). Since then, other philosophers and scientists have given us useful definitions of the three key ideas (cause, effect, and causal relationship) that are more specific and that better illuminate how experiments work. We would not defend any of these as the true or correct definition, given that the latter has eluded philosophers for millennia; but we do claim that these ideas help to clarify the scientific practice of probing causes.

1. Our analysis reflects the use of the word causation in ordinary language, not the more detailed discussions of cause by philosophers. Readers interested in such detail may consult a host of works that we reference in this chapter, including Cook and Campbell (1979).

Cause

Consider the cause of a forest fire. We know that fires start in different ways: a match tossed from a car, a lightning strike, or a smoldering campfire, for example. None of these causes is necessary because a forest fire can start even when, say, a match is not present. Also, none of them is sufficient to start the fire. After all, a match must stay "hot" long enough to start combustion; it must contact combustible material such as dry leaves; there must be oxygen for combustion to occur; and the weather must be dry enough so that the leaves are dry and the match is not doused by rain. So the match is part of a constellation of conditions without which a fire will not result, although some of these conditions can usually be taken for granted, such as the availability of oxygen. A lighted match is, therefore, what Mackie (1974) called an inus condition, "an insufficient but nonredundant part of an unnecessary but sufficient condition" (p. 62; italics in original). It is insufficient because a match cannot start a fire without the other conditions. It is nonredundant only if it adds something fire-promoting that is uniquely different from what the other factors in the constellation (e.g., oxygen, dry leaves) contribute to starting a fire; after all, it would be harder to say whether the match caused the fire if someone else simultaneously tried starting it with a cigarette lighter. It is part of a sufficient condition to start a fire in combination with the full constellation of factors. But that condition is not necessary because there are other sets of conditions that can also start fires.
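Mackie's definition can be made concrete with a toy boolean model. The sketch below is a minimal illustration, assuming a hypothetical list of minimal sufficient sets for the forest-fire example (the condition names and the sets themselves are invented for illustration, not taken from fire science). It checks each of the four clauses of the inus definition for one factor within one set.

```python
# Hypothetical minimal sufficient sets for the forest-fire example.
# Each frozenset is one constellation of conditions that, taken together,
# produces a fire; the names are illustrative assumptions only.
SUFFICIENT_SETS = [
    frozenset({"match", "dry_leaves", "oxygen", "no_rain"}),
    frozenset({"lightning", "dry_leaves", "oxygen"}),
    frozenset({"campfire", "dry_leaves", "oxygen", "wind"}),
]


def fire(conditions, sets=SUFFICIENT_SETS):
    """A fire occurs if at least one sufficient set is fully present."""
    return any(s <= conditions for s in sets)


def is_inus(factor, sufficient_set, sets=SUFFICIENT_SETS):
    """Check Mackie's four clauses for `factor` as part of `sufficient_set`."""
    rest = sufficient_set - {factor}
    # Insufficient: the factor alone does not produce the effect.
    insufficient = not fire(frozenset({factor}), sets)
    # Nonredundant: the factor belongs to the set, and the set minus the
    # factor no longer produces the effect.
    nonredundant = factor in sufficient_set and not fire(rest, sets)
    # Unnecessary: the effect can also occur via some sufficient set that
    # does not contain this whole constellation.
    unnecessary = any(not sufficient_set <= s for s in sets)
    # Sufficient: the whole constellation does produce the effect.
    sufficient = fire(sufficient_set, sets)
    return insufficient and nonredundant and unnecessary and sufficient


match_set = SUFFICIENT_SETS[0]
print(is_inus("match", match_set))      # True
print(is_inus("oxygen", match_set))     # True
print(is_inus("lightning", match_set))  # False: not part of this constellation
```

Oxygen also passes the check in this model, which fits the point above that some members of the constellation are simply taken for granted. If the match set were the only route to fire (a `sets` list containing just that one set), the "unnecessary" clause would fail and the match would no longer count as an inus condition.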

A research example of an inus condition concerns a new potential treatment for cancer. In the late 1990s, a team of researchers in Boston headed by Dr. Judah Folkman reported that a new drug called Endostatin shrank tumors by limiting their blood supply (Folkman, 1996). Other respected researchers could not replicate the effect even when using drugs shipped to them from Folkman's lab. Scientists eventually replicated the results after they had traveled to Folkman's lab to learn how to properly manufacture, transport, store, and handle the drug and how to inject it in the right location at the right depth and angle. One observer labeled these contingencies the "in-our-hands" phenomenon, meaning "even we don't know which details are important, so it might take you some time to work it out" (Rowe, 1999, p. 732). Endostatin was an inus condition. It was an insufficient cause by itself, and its effectiveness required it to be embedded in a larger set of conditions that were not even fully understood by the original investigators.

Most causes are more accurately called inus conditions. Many factors are usually required for an effect to occur, but we rarely know all of them and how they relate to each other. This is one reason that the causal relationships we discuss in this book are not deterministic but only increase the probability that an effect will occur (Eells, 1991; Holland, 1994). It also explains why a given causal relationship will occur under some conditions but not universally across time, space, human populations, or other kinds of treatments and outcomes that are more or less related to those studied. To different degrees, all causal relationships are context dependent, so the generalization of experimental effects is always at issue. That is why we return to such generalizations throughout this book.
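The link between inus conditions and probabilistic causation can be illustrated with a small simulation. The sketch assumes a hypothetical world in which the treatment produces the outcome for a unit only when an unmeasured "helper" condition is also present, and the outcome can also arise from an unrelated background cause. Every unit behaves deterministically, yet the difference in outcome rates between treated and untreated units depends on how common the helper is in the population studied. The parameter names and values are illustrative assumptions.

```python
import random


def expected_risk_difference(p_helper, p_background):
    """P(outcome | treated) - P(outcome | untreated), assuming the helper and
    the background cause occur independently of each other and of treatment."""
    p_treated = 1 - (1 - p_helper) * (1 - p_background)
    return p_treated - p_background


def simulated_risk_difference(n, p_helper, p_background, seed=0):
    """Simulate n units; each unit's outcome is fully determined by its conditions."""
    rng = random.Random(seed)
    outcomes = {True: 0, False: 0}  # treated? -> number of units with the outcome
    units = {True: 0, False: 0}     # treated? -> number of units
    for _ in range(n):
        helper = rng.random() < p_helper
        background = rng.random() < p_background
        treated = rng.random() < 0.5  # coin-flip assignment
        outcome = (treated and helper) or background
        outcomes[treated] += outcome
        units[treated] += 1
    return outcomes[True] / units[True] - outcomes[False] / units[False]


# The same treatment, studied in two contexts that differ only in how
# common the unmeasured helper condition is:
for p_helper in (0.8, 0.2):
    print(p_helper,
          round(expected_risk_difference(p_helper, 0.1), 3),
          round(simulated_risk_difference(100_000, p_helper, 0.1), 3))
```

The unit-level mechanism is identical in both contexts; only the prevalence of the helper differs. That is one way to see why an effect estimated in one population need not transfer unchanged to another.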

Effect

We can better understand what an effect is through a counterfactual model that goes back at least to the 18th-century philosopher David Hume (Lewis, 1973, p. 556). A counterfactual is something that is contrary to fact. In an experiment, we observe what did happen when people received a treatment. The counterfactual is knowledge of what would have happened to those same people if they simultaneously had not received treatment. An effect is the difference between what did happen and what would have happened.
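This definition is often written in terms of "potential outcomes," one per unit under treatment and one under no treatment. The sketch below uses invented numbers for three hypothetical units. It can compute individual effects only because, unlike reality, the simulation knows both outcomes for every unit; the `observe` function (a hypothetical helper) shows what data actually reveal: one outcome per unit, with the counterfactual missing.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Unit:
    y_treated: float  # outcome if this unit receives the treatment
    y_control: float  # outcome if this same unit, at the same time, does not


def individual_effects(units):
    """Effect = what did happen minus what would have happened (per unit)."""
    return [u.y_treated - u.y_control for u in units]


def observe(units, treated):
    """Reality reveals only the outcome under the condition each unit actually
    received; the other potential outcome is the counterfactual (None)."""
    return [
        (u.y_treated, None) if t else (None, u.y_control)
        for u, t in zip(units, treated)
    ]


# Invented outcomes for three hypothetical units.
units = [Unit(12, 10), Unit(9, 9), Unit(15, 11)]
print(individual_effects(units))            # [2, 0, 4]
print(mean(individual_effects(units)))      # 2
print(observe(units, [True, False, True]))  # [(12, None), (None, 9), (15, None)]
```

Any real design has to fill the `None` entries with some approximation, which is exactly the difficulty the PKU example raises.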

We cannot actually observe a counterfactual. Consider phenylketonuria (PKU), a genetically-based metabolic disease that causes mental retardation unless treated during the first few weeks of life. PKU is the absence of an enzyme that would otherwise prevent a buildup of phenylalanine, a substance toxic to the nervous system. When a restricted phenylalanine diet is begun early and maintained, retardation is prevented. In this example, the cause could be thought of as the underlying genetic defect, as the enzymatic disorder, or as the diet. Each implies a different counterfactual. For example, if we say that a restricted phenylalanine diet caused a decrease in PKU-based mental retardation in infants who are phenylketonuric at birth, the counterfactual is whatever would have happened had these same infants not received a restricted phenylalanine diet. The same logic applies to the genetic or enzymatic version of the cause. But it is impossible for these very same infants simultaneously to both have and not have the diet, the genetic disorder, or the enzyme deficiency.

So a central task for all cause-probing research is to create reasonable approximations to this physically impossible counterfactual. For instance, if it were ethical to do so, we might contrast phenylketonuric infants who were given the diet with other phenylketonuric infants who were not given the diet but who were similar in many ways to those who were (e.g., similar race, gender, age, socioeconomic status, health status). Or we might (if it were ethical) contrast infants who were not on the diet for the first 3 months of their lives with those same infants after they were put on the diet starting in the 4th month. Neither of these approximations is a true counterfactual. In the first case, the individual infants in the treatment condition are different from those in the comparison condition; in the second case, the identities are the same, but time has passed and many changes other than the treatment have occurred to the infants (including permanent damage done by phenylalanine during the first 3 months of life). So two central tasks in experimental design are creating a high-quality but necessarily imperfect source of counterfactual inference and understanding how this source differs from the treatment condition.
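The two approximations just described can be compared in a toy simulation. The sketch assumes a hypothetical outcome score with a fixed true diet effect of 5 points. The nonequivalent-comparison version builds in a baseline gap between the groups; the before-after version builds in other changes over time (the hypothetical parameter `maturation`, standing in for everything else that happens to the infants). Both numbers are invented. For contrast it also includes random assignment of a single pool of infants, one of the methods for dealing with extraneous influence named earlier in this chapter; with a large sample, only that estimate lands near the true effect.

```python
import random
from statistics import fmean

TRUE_EFFECT = 5.0  # hypothetical effect of the diet on an outcome score


def baseline(rng):
    """Outcome an infant would show without the diet (illustrative numbers)."""
    return rng.gauss(100, 15)


def nonequivalent_groups(rng, n, selection_gap):
    """Different infants in each condition; treated infants start `selection_gap` higher."""
    treated = [baseline(rng) + selection_gap + TRUE_EFFECT for _ in range(n)]
    comparison = [baseline(rng) for _ in range(n)]
    return fmean(treated) - fmean(comparison)


def before_after(rng, n, maturation):
    """Same infants measured twice; other changes (`maturation`) accrue in between."""
    before = [baseline(rng) for _ in range(n)]
    after = [b + maturation + TRUE_EFFECT for b in before]
    return fmean(after) - fmean(before)


def randomized(rng, n):
    """One pool of infants split at random, so groups differ only by chance."""
    pool = [baseline(rng) for _ in range(2 * n)]
    rng.shuffle(pool)
    treated = [b + TRUE_EFFECT for b in pool[:n]]
    control = pool[n:]
    return fmean(treated) - fmean(control)


rng = random.Random(42)
print(round(nonequivalent_groups(rng, 50_000, selection_gap=10.0), 1))  # near 15: effect + gap
print(round(before_after(rng, 50_000, maturation=3.0), 1))             # 8.0: effect + maturation
print(round(randomized(rng, 50_000), 1))                               # near 5: the true effect
```

In each flawed design the bias equals exactly the way the comparison differs from the true counterfactual, which is why understanding that difference is listed as a central task.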

This counterfactual reasoning is fundamentally qualitative because causal inference, even in experiments, is fundamentally qualitative (Campbell, 1975; Shadish, 1995a; Shadish & Cook, 1999). However, some of these points have been formalized by statisticians into a special case that is sometimes called Rubin's Causal Model (Holland, 1986; Rubin, 1974, 1977, 1978, 1986). This book is not about statistics, so we do not describe that model in detail (West, Biesanz, & Pitts [2000] do so and relate it to the Campbell tradition). A primary emphasis of Rubin's model is the analysis of cause in experiments, and its basic premises are consistent with those of this book.² Rubin's model has also been widely used to analyze causal inference in case-control studies in public health and medicine (Holland & Rubin, 1988), in path analysis in sociology (Holland, 1986), and in a paradox that Lord (1967) introduced into psychology (Holland & Rubin, 1983); and it has generated many statistical innovations that we cover later in this book. It is new enough that critiques of it are just now beginning to appear (e.g., Dawid, 2000; Pearl, 2000). What is clear, however, is that Rubin's is a very general model with obvious and subtle implications. Both it and the critiques of it are required material for advanced students and scholars of cause-probing methods.

Causal Relationship

How do we know if cause and effect are related? In a classic analysis formalized

by the 19th-century philosopher John Stuart Mill, a causal relationship exists if

(1) the cause preceded the effect, (2) the cause was related to the effect, and (3) we

can find no plausible alternative explanation for the effect other than the cause.

These three characteristics mirror what happens in experiments in which (1) we

manipulate the presumed cause and observe an outcome afterward; (2) we see

whether variation in the cause is related to variation in the effect; and (3) we use

various methods during the experiment to reduce the plausibility of other explanations for the effect, along with ancillary methods to explore the plausibility of

those we cannot rule out (most of this book is about methods for doing this).

2. However, Rubin's model is not intended to say much about the matters of causal generalization that we address in this book.


Hence experiments are well-suited to studying causal relationships. No other scientific method regularly matches the characteristics of causal relationships so well. Mill's analysis also points to the weakness of other methods. In many correlational studies, for example, it is impossible to know which of two variables came first, so defending a causal relationship between them is precarious. Understanding this logic of causal relationships and how its key terms, such as cause and effect, are defined helps researchers to critique cause-probing studies.

Correlation, Causation, and Confounds

A well-known maxim in research is: Correlation does not prove causation. This is so because we may not know which variable came first nor whether alternative explanations for the presumed effect exist. For example, suppose income and education are correlated. Do you have to have a high income before you can afford to pay for education, or do you first have to get a good education before you can get a better paying job? Each possibility may be true, and so both need investigation. But until those investigations are completed and evaluated by the scholarly community, a simple correlation does not indicate which variable came first. Correlations also do little to rule out alternative explanations for a relationship between two variables such as education and income. That relationship may not be causal at all but rather due to a third variable (often called a confound), such as intelligence or family socioeconomic status, that causes both high education and high income. For example, if high intelligence causes success in education and on the job, then intelligent people would have correlated education and incomes, not because education causes income (or vice versa) but because both would be caused by intelligence. Thus a central task in the study of experiments is identifying the different kinds of confounds that can operate in a particular research area and understanding the strengths and weaknesses associated with various ways of dealing with them.
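The intelligence example can be simulated directly. The sketch below assumes a hypothetical linear world (coefficients invented) in which a confound drives both education and income while neither affects the other. The raw correlation is substantial; after removing the linear influence of the measured confound from both variables, the remaining (partial) correlation is near zero. This adjustment works here only because the simulation measures its single confound perfectly, an assumption rarely met in practice, which is one of the weaknesses the paragraph above alludes to.

```python
import random
from statistics import fmean


def corr(x, y):
    """Pearson correlation."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def residualize(y, z):
    """Subtract the least-squares linear prediction of y from z."""
    mz, my = fmean(z), fmean(y)
    slope = (sum((a - mz) * (b - my) for a, b in zip(z, y))
             / sum((a - mz) ** 2 for a in z))
    return [b - my - slope * (a - mz) for a, b in zip(z, y)]


def simulate(n=20_000, seed=0):
    rng = random.Random(seed)
    intelligence = [rng.gauss(0, 1) for _ in range(n)]
    # Each variable depends on the confound plus its own independent noise;
    # by construction, education has no effect on income and vice versa.
    education = [0.8 * q + rng.gauss(0, 0.6) for q in intelligence]
    income = [0.8 * q + rng.gauss(0, 0.6) for q in intelligence]
    return intelligence, education, income


iq, edu, inc = simulate()
print(round(corr(edu, inc), 2))  # close to 0.64, although neither causes the other
print(round(corr(residualize(edu, iq), residualize(inc, iq)), 2))  # close to 0
```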

Manipulable and Nonmanipulable Causes

In the intuitive understanding of experimentation that most people have, it makes sense to say, "Let's see what happens if we require welfare recipients to work"; but it makes no sense to say, "Let's see what happens if I change this adult male into a three-year-old girl." And so it is also in scientific experiments. Experiments explore the effects of things that can be manipulated, such as the dose of a medicine, the amount of a welfare check, the kind or amount of psychotherapy, or the number of children in a classroom. Nonmanipulable events (e.g., the explosion of a supernova) or attributes (e.g., people's ages, their raw genetic material, or their biological sex) cannot be causes in experiments because we cannot deliberately vary them to see what then happens. Consequently, most scientists and philosophers agree that it is much harder to discover the effects of nonmanipulable causes.


To be clear, we are not arguing that all causes must be manipulable, only that experimental causes must be so. Many variables that we correctly think of as causes are not directly manipulable. Thus it is well established that a genetic defect causes PKU even though that defect is not directly manipulable. We can investigate such causes indirectly in nonexperimental studies or even in experiments by manipulating biological processes that prevent the gene from exerting its influence, as through the use of diet to inhibit the gene's biological consequences. Both the nonmanipulable gene and the manipulable diet can be viewed as causes: both covary with PKU-based retardation, both precede the retardation, and it is possible to explore other explanations for the gene's and the diet's effects on cognitive functioning. However, investigating the manipulable diet as a cause has two important advantages over considering the nonmanipulable genetic problem as a cause. First, only the diet provides a direct action to solve the problem; and second, we will see that studying manipulable agents allows a higher quality source of counterfactual inference through such methods as random assignment. When individuals with the nonmanipulable genetic problem are compared with persons without it, the latter are likely to be different from the former in many ways other than the genetic defect. So the counterfactual inference about what would have happened to those with the PKU genetic defect is much more difficult to make.

Nonetheless, nonmanipulable causes should be studied using whatever means are available and seem useful. This is true because such causes eventually help us to find manipulable agents that can then be used to ameliorate the problem at hand. The PKU example illustrates this. Medical researchers did not discover how to treat PKU effectively by first trying different diets with retarded children. They first discovered the nonmanipulable biological features of retarded children affected with PKU, finding abnormally high levels of phenylalanine and its associated metabolic and genetic problems in those children. Those findings pointed in certain ameliorative directions and away from others, leading scientists to experiment with treatments they thought might be effective and practical. Thus the new diet resulted from a sequence of studies with different immediate purposes, with different forms, and with varying degrees of uncertainty reduction. Some were experimental, but others were not.

Further, analogue experiments can sometimes be done on nonmanipulable causes, that is, experiments that manipulate an agent that is similar to the cause of interest. Thus we cannot change a person's race, but we can chemically induce skin pigmentation changes in volunteer individuals, though such analogues do not match the reality of being Black every day and everywhere for an entire life. Similarly, past events, which are normally nonmanipulable, sometimes constitute a natural experiment that may even have been randomized, as when the 1970 Vietnam-era draft lottery was used to investigate a variety of outcomes (e.g., Angrist, Imbens, & Rubin, 1996a; Notz, Staw, & Cook, 1971).

Although experimenting on manipulable causes makes the job of discovering their effects easier, experiments are far from perfect means of investigating causes. Sometimes experiments modify the conditions in which testing occurs in a way that reduces the fit between those conditions and the situation to which the results are to be generalized. Also, knowledge of the effects of manipulable causes tells us nothing about how and why those effects occur. Nor do experiments answer many other questions relevant to the real world, for example, which questions are worth asking, how strong the need for treatment is, how a cause is distributed through society, whether the treatment is implemented with theoretical fidelity, and what value should be attached to the experimental results.

In addition, in experiments, we first manipulate a treatment and only then observe its effects; but in some other studies we first observe an effect, such as AIDS, and then search for its cause, whether manipulable or not. Experiments cannot help us with that search. Scriven (1976) likens such searches to detective work in which a crime has been committed (e.g., a robbery), the detectives observe a particular pattern of evidence surrounding the crime (e.g., the robber wore a baseball cap and a distinct jacket and used a certain kind of gun), and then the detectives search for criminals whose known method of operating (their modus operandi or m.o.) includes this pattern. A criminal whose m.o. fits that pattern of evidence then becomes a suspect to be investigated further. Epidemiologists use a similar method, the case-control design (Ahlbom & Norell, 1990), in which they observe a particular health outcome (e.g., an increase in brain tumors) that is not seen in another group and then attempt to identify associated causes (e.g., increased cell phone use). Experiments do not aspire to answer all the kinds of questions, not even all the types of causal questions, that social scientists ask.

Causal Description and Causal Explanation

The unique strength of experimentation is in describing the consequences attributable to deliberately varying a treatment. We call this causal description. In contrast, experiments do less well in clarifying the mechanisms through which and the conditions under which that causal relationship holds, which we call causal explanation. For example, most children very quickly learn the descriptive causal relationship between flicking a light switch and obtaining illumination in a room. However, few children (or even adults) can fully explain why that light goes on. To do so, they would have to decompose the treatment (the act of flicking a light switch) into its causally efficacious features (e.g., closing an insulated circuit) and its nonessential features (e.g., whether the switch is thrown by hand or a motion detector). They would have to do the same for the effect (either incandescent or fluorescent light can be produced, but light will still be produced whether the light fixture is recessed or not). For full explanation, they would then have to show how the causally efficacious parts of the treatment influence the causally affected parts of the outcome through identified mediating processes (e.g., the passage of electricity through the circuit, the excitation of photons).³ Clearly, the cause of the light going on is a complex cluster of many factors. For those philosophers who equate cause with identifying that constellation of variables that necessarily, inevitably, and infallibly results in the effect (Beauchamp, 1974), talk of cause is not warranted until everything of relevance is known. For them, there is no causal description without causal explanation. Whatever the philosophic merits of their position, though, it is not practical to expect much current social science to achieve such complete explanation.

The practical importance of causal explanation is brought home when the switch fails to make the light go on and when replacing the light bulb (another easily learned manipulation) fails to solve the problem. Explanatory knowledge then offers clues about how to fix the problem, for example, by detecting and repairing a short circuit. Or if we wanted to create illumination in a place without lights and we had explanatory knowledge, we would know exactly which features of the cause-and-effect relationship are essential to create light and which are irrelevant. Our explanation might tell us that there must be a source of electricity but that that source could take several different molar forms, such as a battery, a generator, a windmill, or a solar array. There must also be a switch mechanism to close a circuit, but this could also take many forms, including the touching of two bare wires or even a motion detector that trips the switch when someone enters the room. So causal explanation is an important route to the generalization of causal descriptions because it tells us which features of the causal relationship are essential to transfer to other situations.

This benefit of causal explanation helps elucidate its priority and prestige in all sciences and helps explain why, once a novel and important causal relationship is discovered, the bulk of basic scientific effort turns toward explaining why and how it happens. Usually, this involves decomposing the cause into its causally effective parts, decomposing the effect into its causally affected parts, and identifying the processes through which the effective causal parts influence the causally affected outcome parts.

These examples also show the close parallel between descriptive and explanatory causation and molar and molecular causation.⁴ Descriptive causation usually concerns simple bivariate relationships between molar treatments and molar outcomes, molar here referring to a package that consists of many different parts. For instance, we may find that psychotherapy decreases depression, a simple descriptive causal relationship between a molar treatment package and a molar outcome. However, psychotherapy consists of such parts as verbal interactions, placebo-generating procedures, setting characteristics, time constraints, and payment for services. Similarly, many depression measures consist of items pertaining to the physiological, cognitive, and affective aspects of depression. Explanatory causation breaks these molar causes and effects into their molecular parts so as to learn, say, that the verbal interactions and the placebo features of therapy both cause changes in the cognitive symptoms of depression, but that payment for services does not do so even though it is part of the molar treatment package.

3. However, the full explanation a physicist would offer might be quite different from this electrician's explanation, perhaps invoking the behavior of subparticles. This difference indicates just how complicated the notion of explanation is and how it can quickly become quite complex once one shifts levels of analysis.

4. By molar, we mean something taken as a whole rather than in parts. An analogy is to physics, in which molar might refer to the properties or motions of masses, as distinguished from those of molecules or atoms that make up those masses.

If experiments are less able to provide this highly prized explanatory causal knowledge, why are experiments so central to science, especially to basic social science, in which theory and explanation are often the coin of the realm? The answer is that the dichotomy between descriptive and explanatory causation is less clear in scientific practice than in abstract discussions about causation. First, many causal explanations consist of chains of descriptive causal links in which one event causes the next. Experiments help to test the links in each chain. Second, experiments help distinguish between the validity of competing explanatory theories, for example, by testing competing mediating links proposed by those theories. Third, some experiments test whether a descriptive causal relationship varies in strength or direction under Condition A versus Condition B (then the condition is a moderator variable that explains the conditions under which the effect holds). Fourth, some experiments add quantitative or qualitative observations of the links in the explanatory chain (mediator variables) to generate and study explanations for the descriptive causal effect.

Experiments are also prized in applied areas of social science, in which the identification of practical solutions to social problems has as great or even greater priority than explanations of those solutions. After all, explanation is not always required for identifying practical solutions. Lewontin (1997) makes this point about the Human Genome Project, a coordinated multibillion-dollar research program to map the human genome that it is hoped eventually will clarify the genetic causes of diseases. Lewontin is skeptical about aspects of this search:

What is involved here is the difference between explanation and intervention. Many disorders can be explained by the failure of the organism to make a normal protein, a failure that is the consequence of a gene mutation. But intervention requires that the normal protein be provided at the right place in the right cells, at the right time and in the right amount, or else that an alternative way be found to provide normal cellular function. What is worse, it might even be necessary to keep the abnormal protein away from the cells at critical moments. None of these objectives is served by knowing the DNA sequence of the defective gene. (Lewontin, 1997, p. 29)

Practical applications are not immediately revealed by theoretical advance. Instead, to reveal them may take decades of follow-up work, including tests of simple descriptive causal relationships. The same point is illustrated by the cancer drug Endostatin, discussed earlier. Scientists knew the action of the drug occurred through cutting off tumor blood supplies; but to successfully use the drug to treat cancers in mice required administering it at the right place, angle, and depth, and those details were not part of the usual scientific explanation of the drug's effects.


In the end, then, causal descriptions and causal explanations are in delicate balance in experiments. What experiments do best is to improve causal descriptions; they do less well at explaining causal relationships. But most experiments can be designed to provide better explanations than is typically the case today. Further, in focusing on causal descriptions, experiments often investigate molar events that may be less strongly related to outcomes than are more molecular mediating processes, especially those processes that are closer to the outcome in the explanatory chain. However, many causal descriptions are still dependable and strong enough to be useful, to be worth making the building blocks around which important policies and theories are created. Just consider the dependability of such causal statements as that school desegregation causes white flight, or that outgroup threat causes ingroup cohesion, or that psychotherapy improves mental health, or that diet reduces the retardation due to PKU. Such dependable causal relationships are useful to policymakers, practitioners, and scientists alike.

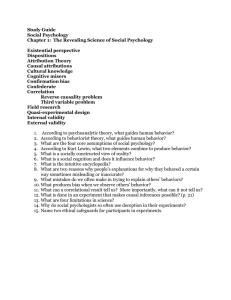

MODERN DESCRIPTIONS OF EXPERIMENTS

Some of the terms used in describing modern experimentation (see Table 1.1) are unique, clearly defined, and consistently used; others are blurred and inconsistently used. The common attribute in all experiments is control of treatment (though control can take many different forms). So Mosteller (1990, p. 225) writes, "In an experiment the investigator controls the application of the treatment"; and Yaremko, Harari, Harrison, and Lynn (1986, p. 72) write, "one or more independent variables are manipulated to observe their effects on one or more dependent variables." However, over time many different experimental subtypes have developed in response to the needs and histories of different sciences (Winston, 1990; Winston & Blais, 1996).

TABLE 1.1 The Vocabulary of Experiments

Experiment: A study in which an intervention is deliberately introduced to observe its effects.

Randomized Experiment: An experiment in which units are assigned to receive the treatment or an alternative condition by a random process such as the toss of a coin or a table of random numbers.

Quasi-Experiment: An experiment in which units are not assigned to conditions randomly.

Natural Experiment: Not really an experiment because the cause usually cannot be manipulated; a study that contrasts a naturally occurring event such as an earthquake with a comparison condition.

Correlational Study: Usually synonymous with nonexperimental or observational study; a study that simply observes the size and direction of a relationship among variables.


Randomized Experiment

The most clearly described variant is the randomized experiment, widely credited to Sir Ronald Fisher (1925, 1926). It was first used in agriculture but later spread to other topic areas because it promised control over extraneous sources of variation without requiring the physical isolation of the laboratory. Its distinguishing feature is clear and important: the various treatments being contrasted (including no treatment at all) are assigned to experimental units⁵ by chance, for example, by coin toss or use of a table of random numbers. If implemented correctly, random assignment creates two or more groups of units that are probabilistically similar to each other on the average.⁶ Hence, any outcome differences that are observed between those groups at the end of a study are likely to be due to treatment, not to differences between the groups that already existed at the start of the study. Further, when certain assumptions are met, the randomized experiment yields an estimate of the size of a treatment effect that has desirable statistical properties, along with estimates of the probability that the true effect falls within a defined confidence interval. These features of experiments are so highly prized that in a research area such as medicine the randomized experiment is often referred to as the gold standard for treatment outcome research.⁷
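To make the idea of probabilistic similarity concrete, the following Python sketch simulates random assignment many times. It is an illustration under stated assumptions, not a procedure from the text: the baseline trait, its distribution (mean 100, SD 15), the sample size, and the helper names `randomly_assign` and `baseline_gap_summary` are all hypothetical. Any single randomization leaves some chance difference between groups, but averaged over many randomizations the treatment-minus-control gap in the pre-existing trait centers on zero.

```python
import random
import statistics


def randomly_assign(units, rng):
    """Split units into two groups by a random process (a shuffle),
    the software analogue of a coin toss or a table of random numbers."""
    shuffled = list(units)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def baseline_gap_summary(n_units=200, n_replications=1000, seed=0):
    """Return the mean and standard deviation, across many hypothetical
    randomizations, of the treatment-minus-control difference in a
    pre-existing trait measured before any treatment is given."""
    rng = random.Random(seed)
    # Hypothetical baseline trait (e.g., a pretest score) for each unit.
    baseline = [rng.gauss(100, 15) for _ in range(n_units)]
    gaps = []
    for _ in range(n_replications):
        treatment, control = randomly_assign(range(n_units), rng)
        gap = (statistics.mean(baseline[i] for i in treatment)
               - statistics.mean(baseline[i] for i in control))
        gaps.append(gap)
    return statistics.mean(gaps), statistics.stdev(gaps)


if __name__ == "__main__":
    mean_gap, sd_gap = baseline_gap_summary()
    # The average gap is close to zero; any single randomization can
    # still differ by a few points, which is why the similarity is
    # only probabilistic.
    print(f"mean gap = {mean_gap:.3f}, SD of gap = {sd_gap:.3f}")
```

The spread of the gaps is the point of the word "probabilistically": random assignment does not guarantee equal groups in any one study, only groups that do not differ systematically.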

Closely related to the randomized experiment is a more ambiguous and inconsistently used term, true experiment. Some authors use it synonymously with randomized experiment (Rosenthal & Rosnow, 1991). Others use it more generally to refer to any study in which an independent variable is deliberately manipulated (Yaremko et al., 1986) and a dependent variable is assessed. We shall not use the term at all given its ambiguity and given that the modifier true seems to imply restricted claims to a single correct experimental method.

Quasi-Experiment

Much of this book focuses on a class of designs that Campbell and Stanley (1963) popularized as quasi-experiments.⁸ Quasi-experiments share with all other experiments a similar purpose (to test descriptive causal hypotheses about manipulable causes) as well as many structural details, such as the frequent presence of control groups and pretest measures, to support a counterfactual inference about what would have happened in the absence of treatment. But, by definition, quasi-experiments lack random assignment. Assignment to conditions is by means of self-selection, by which units choose treatment for themselves, or by means of administrator selection, by which teachers, bureaucrats, legislators, therapists, physicians, or others decide which persons should get which treatment. However, researchers who use quasi-experiments may still have considerable control over selecting and scheduling measures, over how nonrandom assignment is executed, over the kinds of comparison groups with which treatment groups are compared, and over some aspects of how treatment is scheduled. As Campbell and Stanley note:

There are many natural social settings in which the research person can introduce something like experimental design into his scheduling of data collection procedures (e.g., the when and to whom of measurement), even though he lacks the full control over the scheduling of experimental stimuli (the when and to whom of exposure and the ability to randomize exposures) which makes a true experiment possible. Collectively, such situations can be regarded as quasi-experimental designs. (Campbell & Stanley, 1963, p. 34)

5. Units can be people, animals, time periods, institutions, or almost anything else. Typically in field experimentation they are people or some aggregate of people, such as classrooms or work sites. In addition, a little thought shows that random assignment of units to treatments is the same as assignment of treatments to units, so these phrases are frequently used interchangeably.

6. The word probabilistically is crucial, as is explained in more detail in Chapter 8.

7. Although the term randomized experiment is used this way consistently across many fields and in this book, statisticians sometimes use the closely related term random experiment in a different way to indicate experiments for which the outcome cannot be predicted with certainty (e.g., Hogg & Tanis, 1988).

8. Campbell (1957) first called these compromise designs but changed terminology very quickly; Rosenbaum (1995a) and Cochran (1965) refer to these as observational studies, a term we avoid because many people use it to refer to correlational or nonexperimental studies, as well. Greenberg and Shroder (1997) use quasi-experiment to refer to studies that randomly assign groups (e.g., communities) to conditions, but we would consider these group-randomized experiments (Murray, 1998).

In quasi-experiments, the cause is manipulable and occurs before the effect is measured. However, quasi-experimental design features usually create less compelling support for counterfactual inferences. For example, quasi-experimental control groups may differ from the treatment condition in many systematic (nonrandom) ways other than the presence of the treatment. Many of these ways could be alternative explanations for the observed effect, and so researchers have to worry about ruling them out in order to get a more valid estimate of the treatment effect. By contrast, with random assignment the researcher does not have to think as much about all these alternative explanations. If correctly done, random assignment makes most of the alternatives less likely as causes of the observed treatment effect at the start of the study.

In quasi-experiments, the researcher has to enumerate alternative explanations one by one, decide which are plausible, and then use logic, design, and measurement to assess whether each one is operating in a way that might explain any observed effect. The difficulties are that these alternative explanations are never completely enumerable in advance, that some of them are particular to the context being studied, and that the methods needed to eliminate them from contention will vary from alternative to alternative and from study to study. For example, suppose two nonrandomly formed groups of children are studied, a volunteer treatment group that gets a new reading program and a control group of nonvolunteers who do not get it. If the treatment group does better, is it because of treatment or because the cognitive development of the volunteers was increasing more rapidly even before treatment began? (In a randomized experiment, maturation rates would have been probabilistically equal in both groups.) To assess this alternative, the researcher might add multiple pretests to reveal maturational trend before the treatment, and then compare that trend with the trend after treatment.
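The multiple-pretest check described above can be sketched in Python as follows. This is a deliberately simplified illustration, not the book's analysis: the scores, the time points, and the helper names `ols_trend` and `gain_beyond_trend` are hypothetical, and a straight-line maturation trend is assumed. Each group's pretests are used to fit a trend by ordinary least squares, the trend is projected to the posttest occasion, and the observed posttest is compared with that projection.

```python
import statistics


def ols_trend(times, scores):
    """Ordinary least-squares slope and intercept of scores on time."""
    t_bar = statistics.mean(times)
    y_bar = statistics.mean(scores)
    sxx = sum((t - t_bar) ** 2 for t in times)
    sxy = sum((t - t_bar) * (y - y_bar) for t, y in zip(times, scores))
    slope = sxy / sxx
    return slope, y_bar - slope * t_bar


def gain_beyond_trend(pretest_times, pretest_scores, post_time, post_score):
    """Observed posttest minus the score projected from the pretest trend."""
    slope, intercept = ols_trend(pretest_times, pretest_scores)
    projected = intercept + slope * post_time
    return post_score - projected


if __name__ == "__main__":
    times = [0, 1, 2]  # three hypothetical pretest occasions
    # Hypothetical volunteers (treated) were already improving 4 points per occasion.
    volunteers = gain_beyond_trend(times, [50, 54, 58], 3, 62)
    # Hypothetical nonvolunteers (control) improved 1 point per occasion.
    controls = gain_beyond_trend(times, [50, 51, 52], 3, 53)
    # The raw posttest gap is 62 - 53 = 9 points, yet neither group departs
    # from its own pretest trend, so faster maturation could explain the gap.
    print(volunteers, controls)  # 0.0 0.0
```

In this hypothetical case the volunteers' 9-point posttest advantage is exactly what their pretest trend predicts. A real analysis would also have to allow for measurement error, sampling variability, and trends that are not linear.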

Another alternative explanation might be that the nonrandom control group included more disadvantaged children who had less access to books in their homes or who had parents who read to them less often. (In a randomized experiment, both groups would have had similar proportions of such children.) To assess this alternative, the experimenter may measure the number of books at home, parental time spent reading to children, and perhaps trips to libraries. Then the researcher would see if these variables differed across treatment and control groups in the hypothesized direction that could explain the observed treatment effect. Obviously, as the number of plausible alternative explanations increases, the design of the quasi-experiment becomes more intellectually demanding and complex, especially because we are never certain we have identified all the alternative explanations. The efforts of the quasi-experimenter start to look like attempts to bandage a wound that would have been less severe if random assignment had been used initially.

The ruling out of alternative hypotheses is closely related to a falsificationist logic popularized by Popper (1959). Popper noted how hard it is to be sure that a general conclusion (e.g., all swans are white) is correct based on a limited set of observations (e.g., all the swans I've seen were white). After all, future observations may change (e.g., someday I may see a black swan). So confirmation is logically difficult. By contrast, observing a disconfirming instance (e.g., a black swan) is sufficient, in Popper's view, to falsify the general conclusion that all swans are white. Accordingly, Popper urged scientists to try deliberately to falsify the conclusions they wish to draw rather than only to seek information corroborating them. Conclusions that withstand falsification are retained in scientific books or journals and treated as plausible until better evidence comes along. Quasi-experimentation is falsificationist in that it requires experimenters to identify a causal claim and then to generate and examine plausible alternative explanations that might falsify the claim.

However, such falsification can never be as definitive as Popper hoped. Kuhn (1962) pointed out that falsification depends on two assumptions that can never be fully tested. The first is that the causal claim is perfectly specified. But that is never the case. So many features of both the claim and the test of the claim are debatable: for example, which outcome is of interest, how it is measured, the conditions of treatment, who needs treatment, and all the many other decisions that researchers must make in testing causal relationships. As a result, disconfirmation often leads theorists to respecify part of their causal theories. For example, they might now specify novel conditions that must hold for their theory to be true and that were derived from the apparently disconfirming observations. Second, falsification requires measures that are perfectly valid reflections of the theory being tested. However, most philosophers maintain that all observation is theory-laden. It is laden both with intellectual nuances specific to the partially unique scientific understandings of the theory held by the individual or group devising the test and also with the experimenters' extrascientific wishes, hopes, aspirations, and broadly shared cultural assumptions and understandings. If measures are not independent of theories, how can they provide independent theory tests, including tests of causal theories? If the possibility of theory-neutral observations is denied, with them disappears the possibility of definitive knowledge both of what seems to confirm a causal claim and of what seems to disconfirm it.

Nonetheless, a fallibilist version of falsification is possible. It argues that studies of causal hypotheses can still usefully improve understanding of general trends despite ignorance of all the contingencies that might pertain to those trends. It argues that causal studies are useful even if we have to respecify the initial hypothesis repeatedly to accommodate new contingencies and new understandings. After all, those respecifications are usually minor in scope; they rarely involve wholesale overthrowing of general trends in favor of completely opposite trends. Fallibilist falsification also assumes that theory-neutral observation is impossible but that observations can approach a more factlike status when they have been repeatedly made across different theoretical conceptions of a construct, across multiple kinds of measurements, and at multiple times. It also assumes that observations are imbued with multiple theories, not just one, and that different operational procedures do not share the same multiple theories. As a result, observations that repeatedly occur despite different theories being built into them have a special factlike status even if they can never be fully justified as completely theory-neutral facts. In summary, then, fallible falsification is more than just seeing whether observations disconfirm a prediction. It involves discovering and judging the worth of ancillary assumptions about the restricted specificity of the causal hypothesis under test and also about the heterogeneity of theories, viewpoints, settings, and times built into the measures of the cause and effect and of any contingencies modifying their relationship.

It is neither feasible nor desirable to rule out all possible alternative interpretations of a causal relationship. Instead, only plausible alternatives constitute the major focus. This serves partly to keep matters tractable because the number of possible alternatives is endless. It also recognizes that many alternatives have no serious empirical or experiential support and so do not warrant special attention. However, the lack of support can sometimes be deceiving. For example, the cause of stomach ulcers was long thought to be a combination of lifestyle (e.g., stress) and excess acid production. Few scientists seriously thought that ulcers were caused by a pathogen (e.g., virus, germ, bacteria) because it was assumed that an acid-filled stomach would destroy all living organisms. However, in 1982 Australian researchers Barry Marshall and Robin Warren discovered spiral-shaped bacteria, later named Helicobacter pylori (H. pylori), in ulcer patients' stomachs. With this discovery, the previously possible but implausible became plausible. By 1994, a U.S. National Institutes of Health Consensus Development Conference concluded that H. pylori was the major cause of most peptic ulcers. So labeling rival hypotheses as plausible depends not just on what is logically possible but on social consensus, shared experience, and empirical data.

Because such factors are often context specific, different substantive areas develop their own lore about which alternatives are important enough to need to be controlled, even developing their own methods for doing so. In early psychology, for example, a control group with pretest observations was invented to control for the plausible alternative explanation that, by giving practice in answering test content, pretests would produce gains in performance even in the absence of a treatment effect (Coover & Angell, 1907). Thus the focus on plausibility is a two-edged sword: it reduces the range of alternatives to be considered in quasi-experimental work, yet it also leaves the resulting causal inference vulnerable to the discovery that an implausible-seeming alternative may later emerge as a likely causal agent.

Natural Experiment

The term natural experiment describes a naturally occurring contrast between a treatment and a comparison condition (Fagan, 1990; Meyer, 1995; Zeisel, 1973). Often the treatments are not even potentially manipulable, as when researchers retrospectively examined whether earthquakes in California caused drops in property values (Brunette, 1995; Murdoch, Singh, & Thayer, 1993). Yet plausible causal inferences about the effects of earthquakes are easy to construct and defend. After all, the earthquakes occurred before the observations on property values, and it is easy to see whether earthquakes are related to property values. A useful source of counterfactual inference can be constructed by examining property values in the same locale before the earthquake or by studying similar locales that did not experience an earthquake during the same time. If property values dropped right after the earthquake in the earthquake condition but not in the comparison condition, it is difficult to find an alternative explanation for that drop.
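The before/after comparison against unaffected locales described above has the form of what is often called a difference-in-differences contrast. The following Python sketch computes it under stated assumptions: the property values (in thousands of dollars), the localities, and the function name `difference_in_differences` are hypothetical, and the comparison locales are assumed to show what would have happened to the earthquake locales had there been no earthquake.

```python
import statistics


def difference_in_differences(treated_before, treated_after,
                              comparison_before, comparison_after):
    """Change in the treated condition minus change in the comparison
    condition, using the mean value for each group and period."""
    treated_change = statistics.mean(treated_after) - statistics.mean(treated_before)
    comparison_change = statistics.mean(comparison_after) - statistics.mean(comparison_before)
    return treated_change - comparison_change


if __name__ == "__main__":
    # Hypothetical mean property values (thousands of dollars) in two
    # earthquake-affected localities and two similar unaffected localities.
    effect = difference_in_differences(
        treated_before=[310, 290], treated_after=[275, 265],
        comparison_before=[280, 300], comparison_after=[290, 300],
    )
    print(effect)  # -35: a 30-point drop versus a 5-point rise elsewhere
```

Subtracting the comparison locales' change removes any shift that affected both conditions alike; the estimate is only as credible as the assumption that the two conditions would otherwise have changed in parallel.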

Natural experiments have recently gained a high profile in economics. Before the 1990s economists had great faith in their ability to produce valid causal inferences through statistical adjustments for initial nonequivalence between treatment and control groups. But two studies on the effects of job training programs showed that those adjustments produced estimates that were not close to those generated from a randomized experiment and were unstable across tests of the model's sensitivity (Fraker & Maynard, 1987; Lalonde, 1986). Hence, in their search for alternative methods, many economists came to do natural experiments, such as the economic study of the effects that occurred in the Miami job market when many prisoners were released from Cuban jails and allowed to come to the United States (Card, 1990). They assume that the release of prisoners (or the timing of an earthquake) is independent of the ongoing processes that usually affect unemployment rates (or housing values). Later we explore the validity of this assumption; of its desirability there can be little question.


Nonexperimental Designs

The terms correlational design, passive observational design, and nonexperimental design refer to situations in which a presumed cause and effect are identified and measured but in which other structural features of experiments are missing. Random assignment is not part of the design, nor are such design elements as pretests and control groups from which researchers might construct a useful counterfactual inference. Instead, reliance is placed on measuring alternative explanations individually and then statistically controlling for them. In cross-sectional studies in which all the data are gathered on the respondents at one time, the researcher may not even know if the cause precedes the effect. When these studies are used for causal purposes, the missing design features can be problematic unless much is already known about which alternative interpretations are plausible, unless those that are plausible can be validly measured, and unless the substantive model used for statistical adjustment is well specified. These are difficult conditions to meet in the real world of research practice, and therefore many commentators doubt the potential of such designs to support strong causal inferences in most cases.

EXPERIMENTS AND THE GENERALIZATION OF CAUSAL CONNECTIONS

The strength of experimentation is its ability to illuminate causal inference. The weakness of experimentation is doubt about the extent to which that causal relationship generalizes. We hope that an innovative feature of this book is its focus on generalization. Here we introduce the general issues that are expanded in later chapters.

Most Experiments Are Highly Local But Have General Aspirations

Most experiments are highly localized and particularistic. They are almost always conducted in a restricted range of settings, often just one, with a particular version of one type of treatment rather than, say, a sample of all possible versions. Usually they have several measures (each with theoretical assumptions that are different from those present in other measures) but far from a complete set of all possible measures. Each experiment nearly always uses a convenient sample of people rather than one that reflects a well-described population; and it will inevitably be conducted at a particular point in time that rapidly becomes history.

Yet readers of experimental results are rarely concerned with what happened in that particular, past, local study. Rather, they usually aim to learn either about theoretical constructs of interest or about a larger policy. Theorists often want to connect experimental results to theories with broad conceptual applicability, which requires generalization at the linguistic level of constructs rather than at the level of the operations used to represent these constructs in a given experiment. They nearly always want to generalize to more people and settings than are represented in a single experiment. Indeed, the value assigned to a substantive theory usually depends on how broad a range of phenomena the theory covers. Similarly, policymakers may be interested in whether a causal relationship would hold (probabilistically) across the many sites at which it would be implemented as a policy, an inference that requires generalization beyond the original experimental study context. Indeed, all human beings probably value the perceptual and cognitive stability that is fostered by generalizations. Otherwise, the world might appear as a buzzing cacophony of isolated instances requiring constant cognitive processing that would overwhelm our limited capacities.

In defining generalization as a problem, we do not assume that more broadly applicable results are always more desirable (Greenwood, 1989). For example, physicists who use particle accelerators to discover new elements may not expect that it would be desirable to introduce such elements into the world. Similarly, social scientists sometimes aim to demonstrate that an effect is possible and to understand its mechanisms without expecting that the effect can be produced more generally. For instance, when a "sleeper effect" occurs in an attitude change study involving persuasive communications, the implication is that change is manifest after a time delay but not immediately so. The circumstances under which this effect occurs turn out to be quite limited and unlikely to be of any general interest other than to show that the theory predicting it (and many other ancillary theories) may not be wrong (Cook, Gruder, Hennigan, & Flay, 1979). Experiments that demonstrate limited generalization may be just as valuable as those that demonstrate broad generalization.

Nonetheless, a conflict seems to exist between the localized nature of the causal knowledge that individual experiments provide and the more generalized causal goals that research aspires to attain. Cronbach and his colleagues (Cronbach et al., 1980; Cronbach, 1982) have made this argument most forcefully, and their works have contributed much to our thinking about causal generalization. Cronbach noted that each experiment consists of units that receive the experiences being contrasted, of the treatments themselves, of observations made on the units, and of the settings in which the study is conducted. Taking the first letter from each of these four words, he defined the acronym utos to refer to the "instances on which data are collected" (Cronbach, 1982, p. 78), that is, to the actual people, treatments, measures, and settings that were sampled in the experiment. He then defined two problems of generalization: (1) generalizing to the "domain about which [the] question is asked" (p. 79), which he called UTOS; and (2) generalizing to "units, treatments, variables, and settings not directly observed" (p. 83), which he called *UTOS.⁹

9. We oversimplify Cronbach's presentation here for pedagogical reasons. For example, Cronbach only used capital S, not small s, so that his system referred only to utoS, not utos. He offered diverse and not always consistent definitions of UTOS and *UTOS, in particular. And he does not use the word generalization in the same broad way we do here.


Our theory of causal generalization, outlined below and presented in more detail in Chapters 11 through 13, melds Cronbach's thinking with our own ideas about generalization from previous works (Cook, 1990, 1991; Cook & Campbell, 1979), creating a theory that is different in modest ways from both of these predecessors.

Our theory is influenced by Cronbach's work in two ways. First, we follow him by describing experiments consistently throughout this book as consisting of the elements of units, treatments, observations, and settings,¹⁰ although we frequently substitute persons for units given that most field experimentation is conducted with humans as participants. We also often substitute outcome for observations given the centrality of observations about outcome when examining causal relationships. Second, we acknowledge that researchers are often interested in two kinds of generalization about each of these five elements, and that these two types are inspired by, but not identical to, the two kinds of generalization that Cronbach defined. We call these construct validity generalizations (inferences about the constructs that research operations represent) and external validity generalizations (inferences about whether the causal relationship holds over variation in persons, settings, treatment, and measurement variables).

Construct Validity: Causal Generalization as Representation

The first causal generalization problem concerns how to go from the particular units, treatments, observations, and settings on which data are collected to the higher order constructs these instances represent. These constructs are almost always couched in terms that are more abstract than the particular instances sampled in an experiment. The labels may pertain to the individual elements of the experiment (e.g., is the outcome measured by a given test best described as intelligence or as achievement?). Or the labels may pertain to the nature of relationships among elements, including causal relationships, as when cancer treatments are classified as cytotoxic or cytostatic depending on whether they kill tumor cells directly or delay tumor growth by modulating their environment.

Consider a randomized experiment by Fortin and Kirouac (1976). The treatment was a brief educational course administered by several nurses, who gave a tour of their hospital and covered some basic facts about surgery with individuals who were to have elective abdominal or thoracic surgery 15 to 20 days later in a single Montreal hospital. Ten specific outcome measures were used after the surgery, such as an activities of daily living scale and a count of the analgesics used to control pain. Now compare this study with its likely target constructs: whether patient education (the target cause) promotes physical recovery (the target effect) among surgical patients (the target population of units) in hospitals (the target universe of settings). Another example occurs in basic research, in which the question frequently arises as to whether the actual manipulations and measures used in an experiment really tap into the specific cause and effect constructs specified by the theory. One way to dismiss an empirical challenge to a theory is simply to make the case that the data do not really represent the concepts as they are specified in the theory.

10. We occasionally refer to time as a separate feature of experiments, following Campbell (1957) and Cook and Campbell (1979), because time can cut across the other factors independently. Cronbach did not include time in his notational system, instead incorporating time into treatment (e.g., the scheduling of treatment), observations (e.g., when measures are administered), or setting (e.g., the historical context of the experiment).

Empirical results often force researchers to change their initial understanding of what the domain under study is. Sometimes the reconceptualization leads to a more restricted inference about what has been studied. Thus the planned causal agent in the Fortin and Kirouac (1976) study, patient education, might need to be respecified as informational patient education if the information component of the treatment proved to be causally related to recovery from surgery but the tour of the hospital did not. Conversely, data can sometimes lead researchers to think in terms of larger constructs and categories that are more general than those with which they began a research program. Thus the creative analyst of patient education studies might surmise that the treatment is a subclass of interventions that function by increasing "perceived control" or that recovery from surgery can be treated as a subclass of "personal coping." Subsequent readers of the study can even add their own interpretations, perhaps claiming that perceived control is really just a special case of the even more general self-efficacy construct. There is a subtle interplay over time among the original categories the researcher intended to represent, the study as it was actually conducted, the study results, and subsequent interpretations. This interplay can change the researcher's thinking about what the study particulars actually achieved at a more conceptual level, as can feedback from readers. But whatever reconceptualizations occur, the first problem of causal generalization is always the same: How can we generalize from a sample of instances and the data patterns associated with them to the particular target constructs they represent?

External Validity: Causal Generalization as Extrapolation

The second problem of generalization is to infer whether a causal relationship holds over variations in persons, settings, treatments, and outcomes. For example, someone reading the results of an experiment on the effects of a kindergarten Head Start program on the subsequent grammar school reading test scores of poor African American children in Memphis during the 1980s may want to know if a program with partially overlapping cognitive and social development goals would be as effective in improving the mathematics test scores of poor Hispanic children in Dallas if this program were to be implemented tomorrow.

This example again reminds us that generalization is not a synonym for broader application. Here, generalization is from one city to another city and


from one kind of clientele to another kind, but there is no presumption that Dallas is somehow broader than Memphis or that Hispanic children constitute a broader population than African American children. Of course, some generalizations are from narrow to broad. For example, a researcher who randomly samples experimental participants from a national population may generalize (probabilistically) from the sample to all the other unstudied members of that same population. Indeed, that is the rationale for choosing random selection in the first place. Similarly, when policymakers consider whether Head Start should be continued on a national basis, they are not so interested in what happened in Memphis. They are more interested in what would happen on the average across the United States, as its many local programs still differ from each other despite efforts in the 1990s to standardize much of what happens to Head Start children and parents. But generalization can also go from the broad to the narrow. Cronbach (1982) gives the example of an experiment that studied differences between the performances of groups of students attending private and public schools. In this case, the concern of individual parents is to know which type of school is better for their particular child, not for the whole group. Whether from narrow to broad, broad to narrow, or across units at about the same level of aggregation, all these examples of external validity questions share the same need: to infer the extent to which the effect holds over variations in persons, settings, treatments, or outcomes.
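To make the narrow-to-broad case concrete, here is a minimal Python sketch of probabilistic generalization from a simple random sample to the unstudied members of the same population. It rests on stated assumptions: the population of outcome scores is simulated and hypothetical, the helper name `estimate_population_mean` is ours, and the interval uses a normal approximation (the 1.96 multiplier) with a finite population correction. It illustrates only why random selection licenses an estimate with known sampling error; it says nothing about generalizing to other cities, clienteles, or treatments.

```python
import math
import random
import statistics


def estimate_population_mean(population, n, seed=0):
    """Draw a simple random sample of size n (without replacement) and return
    the sample mean with an approximate 95% confidence interval.

    Hypothetical helper for illustration; assumes n >= 2.
    """
    rng = random.Random(seed)
    sample = rng.sample(population, n)
    mean = statistics.mean(sample)
    # Finite population correction, because sampling is without replacement.
    fpc = math.sqrt((len(population) - n) / (len(population) - 1))
    se = statistics.stdev(sample) / math.sqrt(n) * fpc
    # Normal approximation: mean +/- 1.96 standard errors.
    return mean, (mean - 1.96 * se, mean + 1.96 * se)


# Hypothetical population of 10,000 outcome scores (simulated, not real data).
rng = random.Random(42)
population = [rng.gauss(100, 15) for _ in range(10_000)]

mean, (low, high) = estimate_population_mean(population, n=400)
print(f"sample mean {mean:.1f}, 95% CI ({low:.1f}, {high:.1f})")
```

If the whole population is "sampled," the correction factor falls to zero and the interval collapses to the population mean, which is the limiting case of a generalization that needs no inference at all.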

Approaches to Making Causal Generalizations

Whichever way the causal generalization issue is framed, experiments do not seem at first glance to be very useful. Almost invariably, a given experiment uses a limited set of operations to represent units, treatments, outcomes, and settings. This high degree of localization is not unique to the experiment; it also characterizes case studies, performance monitoring systems, and opportunistically administered marketing questionnaires given to, say, a haphazard sample of respondents at local shopping centers (Shadish, 1995b). Even when questionnaires are administered to nationally representative samples, they are ideal for representing that particular population of persons but have little relevance to citizens outside of that nation. Moreover, responses may also vary by the setting in which the interview took place (a doorstep, a living room, or a work site), by the time of day at which it was administered, by how each question was framed, or by the particular race, age, and gender combination of interviewers. But the fact that the experiment is not alone in its vulnerability to generalization issues does not make it any less a problem. So what is it that justifies any belief that an experiment can achieve a better fit between the sampling particulars of a study and more general inferences to constructs or over variations in persons, settings, treatments, and outcomes?


Sampling and Causal Generalization

The method most often recommended for achieving this close fit is the use of formal probability sampling of instances of units, treatments, observations, or settings (Rossi, Wright, & Anderson, 1983). This presupposes that we have clearly delineated populations of each and that we can sample with known probability from within each of these populations. In effect, this entails the random selection of instances, to be carefully distinguished from random assignment discussed earlier in this chapter. Random selection involves selecting cases by chance to represent that population, whereas random assignment involves assigning cases to multiple conditions.
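The distinction between the two procedures can be sketched in a few lines of Python. This is an illustration only: the sampling frame of 1,000 hypothetical people, the function names, and the round-robin dealing of shuffled cases into conditions are our own assumptions, not a procedure prescribed in this chapter. Random selection bears on whether the sample represents the population; random assignment bears on whether the conditions being compared are equivalent in expectation.

```python
import random


def random_selection(population, n, rng):
    """Random selection: draw n cases by chance so the sample represents the population."""
    return rng.sample(population, n)


def random_assignment(cases, conditions, rng):
    """Random assignment: shuffle the cases, then deal them out to the conditions
    in turn so that each condition receives a near-equal share."""
    shuffled = list(cases)
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for i, case in enumerate(shuffled):
        groups[conditions[i % len(conditions)]].append(case)
    return groups


rng = random.Random(2002)
population = [f"person_{i}" for i in range(1000)]  # hypothetical sampling frame
sample = random_selection(population, 40, rng)  # bears on generalizing to the population
groups = random_assignment(sample, ["treatment", "control"], rng)  # bears on causal inference
print({condition: len(members) for condition, members in groups.items()})  # {'treatment': 20, 'control': 20}
```

Chaining the two steps, as in the last lines, mirrors the uncommon design in which random selection from a broad population is followed by random assignment within the selected sample.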

In cause-probing research that is not experimental, random samples of individuals are often used. Large-scale longitudinal surveys such as the Panel Study of Income Dynamics or the National Longitudinal Survey are used to represent the population of the United States (or certain age brackets within it), and measures of potential causes and effects are then related to each other using time lags in measurement and statistical controls for group nonequivalence. All this is done in hopes of approximating what a randomized experiment achieves. However, cases of random selection from a broad population followed by random assignment from within this population are much rarer (see Chapter 12 for examples). Also rare are studies of random selection followed by a quality quasi-experiment. Such experiments require a high level of resources and a degree of logistical control that is rarely feasible, so many researchers prefer to rely on an implicit set of nonstatistical heuristics for generalization that we hope to make more explicit and systematic in this book.

Random selection occurs even more rarely with treatments, outcomes, and settings than with people. Consider the outcomes observed in an experiment. How often are they randomly sampled? We grant that the domain sampling model of classical test theory (Nunnally & Bernstein, 1994) assumes that the items used to measure a construct have been randomly sampled from a domain of all possible items. However, in actual experimental practice few researchers ever randomly sample items when constructing measures. Nor do they do so when choosing manipulations or settings. For instance, many settings will not agree to be sampled, and some of the settings that agree to be randomly sampled will almost certainly not agree to be randomly assigned to conditions. For treatments, no definitive list of possible treatments usually exists, as is most obvious in areas in which treatments are being discovered and developed rapidly, such as in AIDS research. In general, then, random sampling is always desirable, but it is only rarely and contingently feasible.

However, formal sampling methods are not the only option. Two informal, purposive sampling methods are sometimes useful: purposive sampling of heterogeneous instances and purposive sampling of typical instances. In the former case, the aim is to include instances chosen deliberately to reflect diversity on presumptively important dimensions, even though the sample is not formally random. In the latter


case, the aim is to explicate the kinds of units, treatments, observations, and settings to which one most wants to generalize and then to select at least one instance of each class that is impressionistically similar to the class mode. Although these purposive sampling methods are more practical than formal probability sampling, they are not backed by a statistical logic that justifies formal generalizations. Nonetheless, they are probably the most commonly used of all sampling methods for facilitating generalizations. A task we set ourselves in this book is to explicate such methods and to describe how they can be used more often than is the case today.
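The two purposive strategies can be contrasted with a toy Python sketch. Everything in it is hypothetical: the six candidate sites, the two dimensions treated as presumptively important, and the rule of keeping the first site listed for each profile. In practice these choices are deliberate and impressionistic rather than mechanical; the code only shows how sampling for diversity differs from sampling for typicality.

```python
from collections import Counter

# Hypothetical candidate sites described on two presumptively important dimensions.
SITES = [
    {"name": "A", "region": "urban", "size": "large"},
    {"name": "B", "region": "urban", "size": "small"},
    {"name": "C", "region": "rural", "size": "small"},
    {"name": "D", "region": "urban", "size": "large"},
    {"name": "E", "region": "rural", "size": "large"},
    {"name": "F", "region": "urban", "size": "large"},
]
DIMENSIONS = ("region", "size")


def profile(site):
    """The site's combination of values on the chosen dimensions."""
    return tuple(site[d] for d in DIMENSIONS)


def heterogeneous_instances(sites):
    """Purposive sampling of heterogeneous instances: deliberately keep one site
    per distinct profile so the sample spans the observed diversity."""
    chosen = {}
    for site in sites:
        chosen.setdefault(profile(site), site)
    return list(chosen.values())


def typical_instance(sites):
    """Purposive sampling of typical instances: pick a site from the modal profile."""
    modal_profile, _count = Counter(profile(s) for s in sites).most_common(1)[0]
    return next(s for s in sites if profile(s) == modal_profile)


print([s["name"] for s in heterogeneous_instances(SITES)])  # ['A', 'B', 'C', 'E']
print(typical_instance(SITES)["name"])  # A (urban, large is the most common profile)
```

Neither selection rule involves chance, which is why, as noted above, neither carries the statistical warrant of formal probability sampling.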

However, sampling methods of any kind are insufficient to solve either problem of generalization. Formal probability sampling requires specifying a target population from which sampling then takes place, but defining such populations is difficult for some targets of generalization, such as treatments. Purposive sampling of heterogeneous instances is differentially feasible for different elements in a study; it is often more feasible to make measures diverse than it is to obtain diverse settings, for example. Purposive sampling of typical instances is often feasible when target modes, medians, or means are known, but it leaves open questions about generalizations to a wider range than is typical. Besides, as Cronbach points out, most challenges to the causal generalization of an experiment emerge after a study is done. In such cases, sampling is relevant only if the instances in the original study were sampled diversely enough to permit responsible reanalyses of the data to see if a treatment effect holds across most or all of the targets about which generalization has been challenged. But packing so many sources of variation into a single experimental study is rarely practical and will almost certainly conflict with other goals of the experiment. Formal sampling methods usually offer only a limited solution to causal generalization problems. A theory of generalized causal inference needs additional tools.

A Grounded Theory of Causal Generalization

Practicing scientists routinely make causal generalizations in their research, and

do. In this book, we

they