An online dereverberation algorithm for hearing aids with binaural

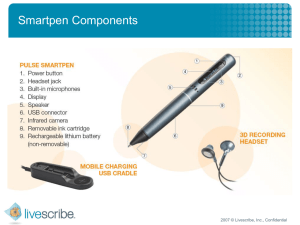

advertisement

An online dereverberation algorithm for hearing aids with binaural cues preservation

Boaz Schwartz¹, Sharon Gannot¹, and Emanuël A.P. Habets²

¹ Faculty of Engineering, Bar-Ilan University, Ramat-Gan, Israel

² International Audio Laboratories Erlangen, University of Erlangen-Nuremberg & Fraunhofer IIS, Erlangen, Germany

boazsh0@gmail.com, sharon.gannot@biu.ac.il, Emanuel.Habets@audiolabs-erlangen.de

Experimental Study

Experiments took place in the Speech and Acoustic Lab at Bar-Ilan University:

• Starkey hearing aids mounted on B&K HATS.

• RIRs were recorded.

• 3 different reverberation times, 3 positions, 5 angles.

Motivation

Previous work [1-3]:

✓ Significant dereverberation was achieved.

✓ Online algorithm tested on moving speakers.

✗ Single-channel output → binaural required.

✗ Early signal estimated → dry output.

In this work we present:

✓ Online algorithm for binaural dereverberation.

✓ Preserving the desired part of the RIR.

Fig. 1: Left and right RIRs

Algorithm outline - RKEMD

Statistical model

Fig. 3: Experimental setup - hearing aid (left), lab setup (right)

In the STFT domain, the model for the speech signal in the t-th time frame and k-th frequency bin is

$$s(t,k) \sim \mathcal{N}_C\{0, \phi_s(t,k)\}, \qquad \phi_s(t,k) = \phi_w(t) \cdot \left| 1 - \mathbf{a}_t^T \mathbf{e}_k \right|^{-2}.$$
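As a minimal sketch of the speech variance model above, the following evaluates $\phi_s$ for all frequency bins of one frame from a set of AR coefficients. The function name `ar_speech_psd` is hypothetical, and the definition of $\mathbf{e}_k$ as a vector of complex exponentials at lags 1..P is an assumption, since the poster does not spell it out.

```python
import numpy as np

def ar_speech_psd(a, phi_w, n_bins):
    """Evaluate phi_s(k) = phi_w * |1 - a^T e_k|^(-2) for k = 0..n_bins-1.

    Assumption (not stated in the poster): e_k stacks the complex
    exponentials exp(-2j*pi*k*p/n_bins) for the AR lags p = 1..P.
    """
    a = np.asarray(a, dtype=float)
    lags = np.arange(1, a.size + 1)               # AR lags 1..P
    k = np.arange(n_bins)[:, None]                # frequency-bin index (column)
    e = np.exp(-2j * np.pi * k * lags / n_bins)   # row k holds e_k
    return phi_w / np.abs(1.0 - e @ a) ** 2

# Hypothetical first-order example: one coefficient 0.9 gives a low-pass spectrum.
psd = ar_speech_psd([0.9], phi_w=1.0, n_bins=8)
```

With a single positive coefficient the variance is largest at bin 0 and smallest at the middle bin, which is the expected low-pass shape.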

Using the convolutive transfer function (CTF) model for reverberation, the j-th input signal is

$$z_j(t,k) \approx \sum_{l=0}^{L-1} h_j(l,k)\, s(t-l,k) + v_j(t,k), \qquad v_j(t,k) \sim \mathcal{N}_C\{0, \phi_{v_j}(t,k)\}, \qquad j = 0, \ldots, J-1.$$
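The CTF model can be illustrated for a single frequency bin as a convolution along the frame index plus complex Gaussian noise. This is only a sketch: `ctf_observation` is a hypothetical helper, the taps and signal values are made up, and the noise variance is held constant over time for simplicity.

```python
import numpy as np

def ctf_observation(h_j, s, phi_v=0.0, rng=None):
    """Per-bin CTF model: z_j(t) = sum_l h_j(l) s(t-l) + v_j(t).

    h_j   : (L,) complex CTF taps of microphone j in one frequency bin
    s     : (T,) complex STFT coefficients of the speech in that bin
    phi_v : noise variance (assumed constant over frames in this sketch)
    """
    rng = np.random.default_rng() if rng is None else rng
    T = s.shape[0]
    reverberant = np.convolve(s, h_j)[:T]   # convolution along the frame index
    noise = np.sqrt(phi_v / 2) * (rng.standard_normal(T) + 1j * rng.standard_normal(T))
    return reverberant + noise

h = np.array([1.0, 0.5, 0.25], dtype=complex)      # hypothetical taps, L = 3
s = np.array([1.0, 0.0, 0.0, 2.0], dtype=complex)  # hypothetical speech frames
z = ctf_observation(h, s)                          # noise-free because phi_v = 0
```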

We use the state-vector representation (k omitted),

$$z_j(t) = \mathbf{h}_j^T \mathbf{s}_t + v_j(t), \qquad \mathbf{s}_t^T \equiv [s(t-L+1), \ldots, s(t)], \qquad \mathbf{h}_j^T \equiv [h_j(L-1), \ldots, h_j(0)],$$

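and for the multi-channel signal we use matrix form,

$$\mathbf{z}_t \equiv [z_0(t), \ldots, z_{J-1}(t)]^T, \qquad \mathbf{v}_t \equiv [v_0(t), \ldots, v_{J-1}(t)]^T, \qquad \mathbf{z}_t = \mathbf{H}\mathbf{s}_t + \mathbf{v}_t,$$

with $\mathbf{H} = (\mathbf{h}_0, \ldots, \mathbf{h}_{J-1})^T$ comprising all J CTFs.

Fig. 2: General scheme of the proposed algorithm

Fig. 4: ITD and ILD distribution for the different reverberation levels (rows) and different values of α (columns). These plots relate to the farthest speaker (denoted by speaker 3 in the above setup)

Binaural problem formulation

Define the following signal as the reference,

$$x(t) = h_\ell(0)\, s(t),$$

where ℓ denotes the index of the reference microphone of the left device. Now, we re-write the state-vector representation as

$$z_j(t) = \tilde{\mathbf{h}}_j^T \mathbf{x}_t + v_j(t) \quad \text{with} \quad \tilde{\mathbf{h}}_j = h_\ell^{-1}(0) \cdot \mathbf{h}_j,$$

and $\mathbf{x}_t$ defined similarly to $\mathbf{s}_t$. The matrix form is now

$$\mathbf{z}_t = \tilde{\mathbf{H}} \mathbf{x}_t + \mathbf{v}_t \quad \text{with} \quad \tilde{\mathbf{H}} = [\tilde{\mathbf{h}}_0, \ldots, \tilde{\mathbf{h}}_{J-1}]^T.$$

The target signals are defined by

$$y_j(t) = [\mathbf{W} \cdot \tilde{\mathbf{h}}_j]^T \mathbf{x}_t,$$

where W is a weighting matrix. An intuitive choice is

$$\mathbf{W}_\alpha \equiv \mathrm{diag}\{e^{0}, e^{-\alpha}, \ldots, e^{-(M-1)\alpha}\},$$

and the two extremes would be

$$\mathbf{W}_\infty = \mathrm{diag}\{1, 0, \ldots, 0\}, \qquad \mathbf{W}_0 = \mathrm{diag}\{1, 1, \ldots, 1\}.$$

Finally, the binaural target signal is

$$\mathbf{y}_B(t) = [\mathbf{W} \cdot \tilde{\mathbf{h}}_\ell,\ \mathbf{W} \cdot \tilde{\mathbf{h}}_r]^T \mathbf{x}_t,$$

where r is the index of the reference microphone on the right device.

Recursive-EM algorithm

In order to apply MMSE estimates, define the following matrices,

$$\mathbf{\Phi} = \begin{pmatrix} \mathbf{0} & \mathbf{I} \\ 0 & \mathbf{0}^T \end{pmatrix}, \qquad \mathbf{G} = \begin{pmatrix} \phi_{v_0} & \cdots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \cdots & \phi_{v_{J-1}} \end{pmatrix}, \qquad \mathbf{F}_t = \begin{pmatrix} 0 & \cdots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \cdots & \phi_x(t) \end{pmatrix},$$

and the state-space model of the desired source,

$$\mathbf{x}_t = \mathbf{\Phi} \mathbf{x}_{t-1} + \mathbf{u}_t, \qquad \mathbf{u}_t \equiv [0, \ldots, x(t)]^T.$$

E-step: Kalman filter

Predict:

$$\hat{\mathbf{x}}_{t|t-1} = \mathbf{\Phi}\, \hat{\mathbf{x}}_{t-1|t-1}$$

$$\mathbf{P}_{t|t-1} = \mathbf{\Phi}\, \mathbf{P}_{t-1|t-1} \mathbf{\Phi}^T + \mathbf{F}_t$$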

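Update:

$$\mathbf{K}_t = \mathbf{P}_{t|t-1} \tilde{\mathbf{H}}_t^H \left[ \tilde{\mathbf{H}}_t \mathbf{P}_{t|t-1} \tilde{\mathbf{H}}_t^H + \mathbf{G}_t \right]^{-1}$$

$$\mathbf{e}_t = \mathbf{z}_t - \tilde{\mathbf{H}}_t \hat{\mathbf{x}}_{t|t-1}$$

$$\hat{\mathbf{x}}_{t|t} = \hat{\mathbf{x}}_{t|t-1} + \mathbf{K}_t \mathbf{e}_t$$

$$\mathbf{P}_{t|t} = \left[ \mathbf{I}_M - \mathbf{K}_t \tilde{\mathbf{H}}_t \right] \mathbf{P}_{t|t-1}$$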
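One predict/update cycle of the Kalman filter for a single frequency bin might be sketched as follows. The names `shift_matrix` and `kalman_step` are hypothetical, and the current estimates of the normalized CTF matrix, the noise covariance and the variance of the new sample are simply passed in as arguments. How those are obtained is outside this sketch.

```python
import numpy as np

def shift_matrix(M):
    """Phi: shifts the state [x(t-M+1), ..., x(t)] by one frame."""
    return np.eye(M, k=1)

def kalman_step(x_prev, P_prev, z, H, G, phi_x):
    """One predict/update cycle for a single frequency bin.

    x_prev : (M,)   previous state estimate
    P_prev : (M, M) previous error covariance
    z      : (J,)   microphone observations at frame t
    H      : (J, M) current estimate of the normalized CTF matrix
    G      : (J, J) current noise covariance (diagonal in the poster)
    phi_x  : variance of the new sample x(t)
    """
    M = x_prev.shape[0]
    Phi = shift_matrix(M)
    F = np.zeros((M, M))
    F[-1, -1] = phi_x                        # innovation enters the last state entry

    # Predict
    x_pred = Phi @ x_prev
    P_pred = Phi @ P_prev @ Phi.T + F

    # Update
    S = H @ P_pred @ H.conj().T + G          # innovation covariance
    K = P_pred @ H.conj().T @ np.linalg.inv(S)
    e = z - H @ x_pred
    x_new = x_pred + K @ e
    P_new = (np.eye(M) - K @ H) @ P_pred
    return x_new, P_new

# Hypothetical toy call: two-tap state, one microphone observing the newest sample.
x_new, P_new = kalman_step(
    x_prev=np.array([0.0, 1.0]), P_prev=np.eye(2),
    z=np.array([2.0]), H=np.array([[0.0, 1.0]]),
    G=np.array([[1.0]]), phi_x=1.0,
)
```

In an online system this step would run once per frame and per frequency bin, with the returned state and covariance fed back as the next call's inputs.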

Statistics and M-step

Sufficient statistics:

$$\mathbf{R}_{xx}^{(t)} = \beta\, \mathbf{R}_{xx}^{(t-1)} + (1-\beta) \cdot \left[ \hat{\mathbf{x}}_{t|t} \hat{\mathbf{x}}_{t|t}^H + \mathbf{P}_{t|t} \right]$$

$$\mathbf{r}_{xz_j}^{(t)} = \beta\, \mathbf{r}_{xz_j}^{(t-1)} + (1-\beta) \cdot \hat{\mathbf{x}}_{t|t}\, z_j^*(t)$$

$$r_{z_j z_j}^{(t)} = \beta\, r_{z_j z_j}^{(t-1)} + (1-\beta) \cdot |z_j(t)|^2$$

Parameters:

$\tilde{\mathbf{h}}_j(t)$ ← linear fit of $\mathbf{x}_t$ and $z_j(t)$ (least-squares).

$\phi_{v_j}(t)$ ← residual of the linear fit.
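The recursive statistics and the parameter update can be sketched as below. The poster only says "linear fit (least-squares)", so the closed form used in `m_step` is an assumption: it solves the normal equations of the model $z_j = \tilde{\mathbf{h}}_j^T \mathbf{x} + v_j$ and takes the remaining power as the noise variance. Both function names are hypothetical.

```python
import numpy as np

def update_statistics(R_xx, r_xz, r_zz, x_hat, P, z_j, beta):
    """Recursive smoothing of the sufficient statistics for microphone j."""
    R_xx = beta * R_xx + (1 - beta) * (np.outer(x_hat, x_hat.conj()) + P)
    r_xz = beta * r_xz + (1 - beta) * x_hat * np.conj(z_j)
    r_zz = beta * r_zz + (1 - beta) * abs(z_j) ** 2
    return R_xx, r_xz, r_zz

def m_step(R_xx, r_xz, r_zz):
    """Least-squares fit of z_j = h^T x + v from the smoothed statistics.

    Assumption: minimizing E|z_j - h^T x|^2 gives the normal equations
    conj(R_xx) h = conj(r_xz); phi_v is the residual power of that fit.
    """
    h = np.linalg.solve(R_xx.conj(), r_xz.conj())
    phi_v = np.real(r_zz - h @ r_xz)
    return h, phi_v

# Hypothetical statistics generated by h = [0.5, -1] with noise variance 0.1.
h_est, phi_v_est = m_step(np.eye(2), np.array([0.5, -1.0]), 1.35)
```

The forgetting factor β trades tracking speed against estimation variance, since values closer to 1 average over more frames.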

Output signal:

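$$\mathbf{y}_B(t) = \left[ \mathbf{W} \cdot \tilde{\mathbf{h}}_\ell(t),\ \mathbf{W} \cdot \tilde{\mathbf{h}}_r(t) \right]^T \hat{\mathbf{x}}_{t|t}$$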
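The output step, with the exponential weighting $\mathbf{W}_\alpha$, might look like the following sketch. The helper names are hypothetical. It assumes every vector is ordered so that index 0 holds the direct-path tap and the current sample, so the weight $e^0 = 1$ applies to it. The case α = ∞ is handled explicitly because evaluating the exponential there would give an undefined 0·∞ for the first entry.

```python
import numpy as np

def exp_weights(M, alpha):
    """Diagonal of W_alpha = diag{e^0, e^-alpha, ..., e^-(M-1)alpha}."""
    if np.isinf(alpha):
        w = np.zeros(M)
        w[0] = 1.0                       # W_inf = diag{1, 0, ..., 0}
        return w
    return np.exp(-alpha * np.arange(M))

def binaural_output(h_left, h_right, x_hat, alpha):
    """y_B(t) = [W h_left, W h_right]^T x_hat for one frequency bin.

    Assumption: index 0 of each vector is the direct-path tap / current
    sample, so later (more reverberant) taps receive smaller weights.
    """
    w = exp_weights(len(x_hat), alpha)
    return np.array([(w * h_left) @ x_hat, (w * h_right) @ x_hat])

# Hypothetical two-tap CTF estimates for the left and right reference microphones.
h_l = np.array([1.0, 0.5])
h_r = np.array([0.8, 0.4])
x_hat = np.array([2.0, 1.0])
y_all = binaural_output(h_l, h_r, x_hat, alpha=0.0)       # W_0: keeps every tap
y_dry = binaural_output(h_l, h_r, x_hat, alpha=np.inf)    # W_inf: first tap only
```

Intermediate values of α keep part of the early response in both ears, which is how the weighting preserves the desired part of the RIR in each channel.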

Fig. 5: WSNR improvement (w.r.t. the direct speech) for different values of α. Axes: DRR range (dB), with bins [-10,-6], [-6,-4], [-4,-2.3], [-2.3,0.5] and [0.5,4]; WSNR improvement (dB). Series: α = 0.1, 0.35, 0.7, ∞.

Conclusions

• Speech quality is improved as α increases in the range [0, 0.7]: more reverberation is removed.

• Above 0.7, there is a slight degradation, due to estimation errors. This degradation is more pronounced in subjective evaluation.

• As α increases, the signal becomes more directional, as can be deduced from the more concentrated cue scattering.

References

[1] B. Schwartz, S. Gannot, and E. A. P. Habets, “Online speech dereverberation using Kalman filter and EM algorithm,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 23, no. 2, February 2015.

[2] B. Schwartz, S. Gannot, and E. A. P. Habets, “Multi-microphone speech dereverberation using expectation-maximization and Kalman smoothing,” European Signal Processing Conference (EUSIPCO), Marrakech, Morocco, Sept. 2013.

[3] B. Schwartz, S. Gannot, and E. A. P. Habets, “LPC-based speech dereverberation using Kalman-EM algorithm,” International Workshop on Acoustic Echo and Noise Control (IWAENC), Antibes – Juan les Pins, France, Sept. 2014.