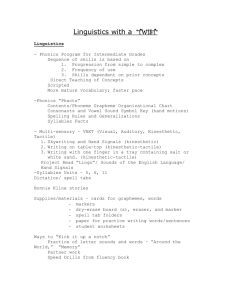

The effect of phonetic production training with visual feedback on the
