Solving the Optimal PWM Problem for Single-Phase Inverters


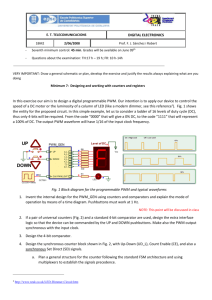

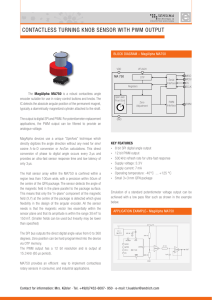

IEEE TRANSACTIONS ON CIRCUITS AND SYSTEMS—I: FUNDAMENTAL THEORY AND APPLICATIONS, VOL. 49, NO. 4, APRIL 2002, p. 465

Solving the Optimal PWM Problem for Single-Phase Inverters

Dariusz Czarkowski, Member, IEEE, David V. Chudnovsky, Member, IEEE, Gregory V. Chudnovsky, and Ivan W. Selesnick, Member, IEEE

Abstract—In this paper, the basic algebraic properties of the optimal PWM problem for single-phase inverters are revealed. Specifically, it is shown that the nonlinear design equations given by the standard mathematical formulation of the problem can be reformulated, and that the sought solution can be found by computing the roots of a single univariate polynomial $P(x)$, for which algorithms are readily available. Moreover, it is shown that the polynomials $P(x)$ associated with the optimal PWM problem are orthogonal and can therefore be obtained via simple recursions. The reformulation draws upon the Newton identities, Padé approximation theory, and properties of symmetric functions. As a result, fast $O(n \log^2 n)$ algorithms are derived that provide the exact solution to the optimal PWM problem. For the PWM harmonic elimination problem, explicit formulas are derived that further simplify the algorithm.

Index Terms—Harmonic elimination, Newton identities, orthogonal polynomials, Padé approximation, pulsewidth modulation (PWM), single-phase inverters, symmetric functions.

I. INTRODUCTION

THE PROBLEM of the optimal design of pulsewidth modulated (PWM) waveforms for single-phase inverters [1], [2] is examined in this paper. PWM signals are used in power electronics, motor control and solid-state electric energy conversion [2], [3]. The best voltage signal for these purposes is one with a periodic time variation in which amplitudes of selected nonfundamental components of the signal have been controlled to increase efficiency and reduce damaging vibrations. How can the waveform be designed to most effectively accomplish this?
This paper describes a contribution to the theory and practice of optimal PWM waveforms that addresses this question. A PWM waveform consists of a series of positive and negative pulses of constant amplitude but with variable switching instances as depicted in Fig. 1 (as in a power electronic PWM full-bridge inverter). A typical goal is to generate a train of pulses such that the fundamental component of the resulting waveform has a specified frequency and amplitude (e.g., for a speed control of an induction motor). Some of the proposed methods for PWM waveform design are: modulating-function techniques, space-vector techniques, and feedback methods [2]. These methods suffer, however, from high residual harmonics that are difficult to control and from limitations in their applicability. A method that theoretically offers the highest quality of the output waveform is the so-called programmed or optimal PWM [4].

Manuscript received December 13, 1999; revised January 20, 2001. This work was supported in part by the National Science Foundation under CAREER Grant CCR-987452. This paper was recommended by Associate Editor H. S. H. Chung. D. Czarkowski and I. W. Selesnick are with the Department of Electrical and Computer Engineering, Polytechnic University, Brooklyn, NY 11201 USA (e-mail: dcz@pl.poly.edu; selesi@taco.poly.edu). D. V. Chudnovsky and G. V. Chudnovsky are with the Department of Mathematics, Polytechnic University, Brooklyn, NY 11201 USA. Publisher Item Identifier S 1057-7122(02)03137-9.

Fig. 1. A three-level PWM waveform.

Owing to the symmetries in the PWM waveform of Fig. 1, only the odd harmonics exist. Assuming that the PWM waveform is chopped $n$ times per half a cycle, the Fourier coefficients of odd harmonics are given by

$$b_k = \frac{4E}{k\pi} \sum_{i=1}^{n} (-1)^{i+1} \cos(k\alpha_i) \qquad (1)$$

where $0 < \alpha_1 < \alpha_2 < \cdots < \alpha_n < \pi/2$ and $E$ is the amplitude of the square wave. Amplitudes of any $n$ harmonics can be set by solving a system of nonlinear equations obtained from setting (1) equal to prespecified values.
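As a rough illustration of (1), the sketch below evaluates the odd-harmonic amplitudes of a quarter-wave-symmetric three-level waveform from its switching angles and cross-checks the closed form against a direct numerical integration. The function names, the symbols `alphas` and `E`, and the convention that the first switching angle turns the output on are assumptions made for this example; the extracted text does not preserve the paper's own notation.

```python
import math

def harmonic_amplitude(alphas, k, E=1.0):
    """Closed-form amplitude of the k-th harmonic (assumed notation).

    alphas: increasing switching angles in [0, pi/2]; the output is taken
    to be 0 before alphas[0] and to toggle between 0 and E at each angle.
    """
    if k % 2 == 0:
        return 0.0  # even harmonics vanish by half-wave symmetry
    total = sum((-1) ** i * math.cos(k * a) for i, a in enumerate(alphas))
    return 4.0 * E / (k * math.pi) * total

def numeric_amplitude(alphas, k, E=1.0, steps=20000):
    """Cross-check: (4/pi) * integral over [0, pi/2] of f(t) sin(k t) dt,
    evaluated with the midpoint rule."""
    h = (math.pi / 2) / steps
    acc = 0.0
    for m in range(steps):
        t = (m + 0.5) * h
        on = sum(1 for a in alphas if a <= t) % 2  # 1 while a pulse is on
        acc += on * E * math.sin(k * t) * h
    return 4.0 / math.pi * acc

if __name__ == "__main__":
    angles = [0.2, 0.5, 0.9]  # arbitrary illustrative angles (radians)
    for k in (1, 3, 5):
        print(k, harmonic_amplitude(angles, k), numeric_amplitude(angles, k))
```

With a single angle at zero the waveform is a plain square wave, and the formula reduces to the familiar fundamental amplitude $4E/\pi$.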
In the harmonic elimination programmed PWM method, the fundamental component is set to a required amplitude and $n-1$ low-order harmonics are set to zero. This is the most common approach in electric drives since low-order harmonics are the most detrimental to motor performance. In other applications, like active harmonic filters or control of electromechanical systems, harmonics are set to nonzero values. The task of designing a PWM waveform whose first $n$ Fourier series coefficients match those of a desired waveform has been the subject of many papers [1], [5]–[37]. Often, the Newton iteration method [5] or an unconstrained optimization approach [38] is used to solve the system of nonlinear equations (1). Those methods are computationally intensive for on-line calculations, and the storage of off-line calculations leads to high memory requirements. Recent results from the research community [39], [40] show two approaches to real-time implementation of an approximate optimal PWM. One approach is to fine-tune conventional PWM techniques like regular-sampled [39] or space-vector methods [41], [40] to approximate programmed PWM switching patterns. Another approach is to simplify the nonlinear harmonic elimination equations [26] in order to obtain real-time approximate solutions using modern digital signal processors.

This paper develops $O(n \log^2 n)$ algorithms for solving the PWM harmonic elimination problem without any approximations in the problem statement. Since many PWM applications allow for a computational time frame of a few milliseconds, the developed algorithms will allow for real-time generation of switching patterns with $n$ of the order of hundreds.

1057-7122/02$17.00 © 2002 IEEE

Note that $\cos(k\alpha) = T_k(\cos\alpha)$, where $T_k$ is the $k$th Chebyshev polynomial. Let $x = \cos\alpha$, then

$$\cos\alpha = x, \qquad \cos 3\alpha = 4x^3 - 3x, \qquad \cos 5\alpha = 16x^5 - 20x^3 + 5x, \qquad \ldots$$

As the odd-indexed Chebyshev polynomials are odd polynomials, we can write

II.
CONVERTING THE PWM PROBLEM For the scope of this paper, a PWM waveform is a -periodic that is binary-valued for and has the function and as shown symmetries can be written with the Fourier series as in Fig. 1. As such, With this notation, the PWM equations become or with (6) where The optimal PWM problem, as it is considered here, is the deso that its first Fourier coeffisign of a PWM waveform cients are equal to prescribed values. For a PWM waveform, as shown in Fig. 1, we have Therefore, the optimal PWM problem gives rise to the following design equations [4]: are the sums of powers of . Equation (6) forms a set of linear equations for , . Once the values are obtained by solving the linear system (6), one has the fol, find the solution lowing problem. Given to the following system of nonlinear equations (7) (2) .. . .. . (8) (3) , we have equations Given the values unknowns; we would like to find the unknowns and , with . We can first simplify the equations as is done for example in [22]. for odd , and let for even . Then Let (4) .. . (5) Once are obtained, the original variables can be found by , for odd , and for letting even . Due to the symmetry with respect to , any permutation is also a solution set; likewise for . Yet it of a solution set is necessary to order appropriately such that . Note that for odd , gives . For even , gives or . This indicates how to obtain with the desired let ; ordering from : for those values of let . and for those values of However, the design equations (7) and (8) are nonlinear, so is not so straightforward. In obtaining the desired solution the following sections, this nonlinear system of equations will be closely examined and in Section III, a systematic procedure is given to obtain the solutions. CZARKOWSKI et al.: SOLVING THE OPTIMAL PWM PROBLEM FOR SINGLE-PHASE INVERTERS A. 
Specialization to the Harmonic Elimination Problem For the harmonic elimination PWM problem, which we will focus on below, the Fourier coefficients of the PWM waveform should match the Fourier coefficients of a pure sine wave. appearing in (2) and (3) are given by That is, the values , and for . For this case, the values depend on only and are given by 467 special cases this problem can be reduced to a manageable and “exactly solvable” one. The classical cases of sum of powers (Newton), Wronski and elementary symmetric functions are the ones well described in the literature (see, e.g., [42] on representation of symmetric groups and Frobenius and Schur functions). In Section III, we present a procedure to determine the polynomial for the case where one is given the “sums of odd powers,” as it appears in the optimal PWM problem. III. GENERALIZING NEWTON’S IDENTITY B. A Simpler Problem It is useful to consider first the related, simpler problem where contiguous sums of powers are known. Given the values ; find satisfying the following equations: To develop a procedure to solve the optimal PWM problem, it will be useful to examine more closely Newton’s identities. The simple derivation of Newton’s identities will serve as our as starting point. First write the polynomial (9) .. . then the logarithmic derivative is given by (10) It turns out that are the roots of the polynomial (11) Expanding each term in the sum, one gets where (13) where etc. The coefficients can be found as are the sums of the root powers, with Integrating (13) gives .. . . (12) etc. These are Newton’s Identities. When contiguous values are known one can easily obtain through these simple recan be obtained by taking the roots cursive relations. Then of (11) for which numerical algorithms are readily available. these identities involve coefficients beyond the (For highest power, which are implicitly defined as 0.) For the PWM problem outlined above, we know only the first odd , for . 
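The recursion behind Newton's identities for the simpler, contiguous problem can be sketched as follows. The sketch assumes the sign convention $P(x) = x^n + c_1 x^{n-1} + \cdots + c_n$ with roots $x_1, \ldots, x_n$ and power sums $s_k = x_1^k + \cdots + x_n^k$, for which $s_k + c_1 s_{k-1} + \cdots + c_{k-1} s_1 + k c_k = 0$ for $k = 1, \ldots, n$. The function name and the use of exact rational arithmetic are choices made for this illustration, not taken from the paper.

```python
from fractions import Fraction

def poly_from_power_sums(s):
    """Return [1, c_1, ..., c_n] of the monic polynomial whose roots have
    power sums s = [s_1, ..., s_n] (contiguous sums, assumed convention)."""
    n = len(s)
    c = [Fraction(1)]
    for k in range(1, n + 1):
        # Newton's identity: k*c_k = -(s_k + c_1*s_{k-1} + ... + c_{k-1}*s_1)
        acc = Fraction(s[k - 1])
        for j in range(1, k):
            acc += c[j] * s[k - j - 1]
        c.append(-acc / k)
    return c

if __name__ == "__main__":
    # Roots 1, 2, 3 give s_1 = 6, s_2 = 14, s_3 = 36.
    print(poly_from_power_sums([6, 14, 36]))
```

For the roots 1, 2, 3 this recovers $x^3 - 6x^2 + 11x - 6$; the roots themselves would then come from any standard polynomial root finder. The recursion needs every $s_k$ up to $k = n$, which is exactly what is unavailable when only odd-indexed sums are known.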
We do not know contiguous values ; therefore Newton’s identities cannot be used. Our question is: Does any such simple procedure exist for the harmonic elimination problem for PWM, where one is given only the odd , ? for . The Note that (7)–(10) are symmetric functions of with given values problem of finding the set of elements in , is in of arbitrary symmetric functions general a very complicated one because of nontrivial nature of relations between symmetric functions of high degrees. Only in Raising gives to the power of and using the last equation (14) In order to generalize Newton’s procedure, we will obtain an expression similar to (14), but having only odd . To this end, note that 468 IEEE TRANSACTIONS ON CIRCUITS AND SYSTEMS—I: FUNDAMENTAL THEORY AND APPLICATIONS, VOL. 49, NO. 4, APRIL 2002 and that is given by The ? indicates unknown coefficients. Then, using (17) and (18), can be determined up to and including or Writing out (16) gives Then Matching like powers of gives where With the notation (15) one has (16) is the monic polynomial related to by negating the . roots of Equation (16) is the counter-part to (14). Likewise, by setting like powers of equal, we can obtain equations that relate and . However, in order to do this, we need to expand into a power series of . Such a power series for can be obtained using the following algorithm, described in [43, Sec. 4.7]. Let and If for are known, then the values of are given by for (17) (18) . for When the first known for odd values of are known, then are . [ for and for .] Therefore, using the , and conserelations (17) and (18), we obtain for quently, we can write out Equation (16), matching like powers can be obtained. of , to obtain linear equations from which . That is, For example, we write out the expressions for we are given , , and , and our goal is to find the corre, sponding monic third degree polynomial Matching further like powers involves coefficients and higher, which are unknown. 
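The power-series step can be illustrated with a small sketch. It works in the variable $z = 1/x$ and expands $F(z) = \exp\bigl(2 \sum_{k\ \mathrm{odd}} s_k z^k / k\bigr)$, which equals $\prod_i (1 + x_i z)/(1 - x_i z)$, using the standard recurrence for the exponential of a power series: if $F = e^{G}$ then $j f_j = \sum_{k=1}^{j} k\, g_k f_{j-k}$. The variable $z$, the normalization $f_0 = 1$, and the function name are assumptions for this example and may differ from the indexing used in (17) and (18).

```python
from fractions import Fraction

def odd_exp_series(odd_sums, terms):
    """Coefficients f_0 .. f_{terms-1} of exp(2 * sum_{k odd} s_k z^k / k).

    odd_sums = [s_1, s_3, s_5, ...]. With n odd sums only f_0 .. f_{2n}
    are determined; unknown higher s_k are treated as 0 here, so entries
    beyond f_{2n} should not be relied on.
    """
    s = {2 * i + 1: Fraction(v) for i, v in enumerate(odd_sums)}
    f = [Fraction(1)]
    for j in range(1, terms):
        acc = Fraction(0)
        for k in range(1, j + 1, 2):      # only odd k contribute
            acc += 2 * s.get(k, 0) * f[j - k]
        f.append(acc / j)
    return f

if __name__ == "__main__":
    # Single root a = 1/2: F(z) = (1 + z/2) / (1 - z/2).
    a = Fraction(1, 2)
    print(odd_exp_series([a, a**3, a**5], 6))
```

For a single root $a$ the series is $1 + 2az + 2a^2z^2 + \cdots$, which gives a quick sanity check of the recurrence.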
Note that the last three equations, , , and , can be written as the corresponding to following linear system of equations: This system is almost Toeplitz—only the signs of the odd columns must be negated. The true Toeplitz system where , , gives the solution for . Note that are the coefficients of defined in (15). As this system is Toeplitz (the diagonals of the system matrix are constant), the Levinson algorithm can be used to solve it instead of ] [44]. efficiently [ The same procedure for general gives rise to a Toeplitz system and comprises a generalization of Newton’s identities to the case where only the first odd values are known. The procedure for general follows likewise. Algorithm ODDSOL: Given the sums of odd powers for , the polynomial can be obtained as follows. for . Set 1) Set for . using Equations (17) and 2) Compute for (18). 3) Solve the Toeplitz system for .. . .. . .. . .. . .. . (19) . and set Algorithm HESOL: Given and , the steps to solve the harmonic elimination problem (2) and (3), are summarized as follows. CZARKOWSKI et al.: SOLVING THE OPTIMAL PWM PROBLEM FOR SINGLE-PHASE INVERTERS 469 TABLE I PARAMETER VALUES COMPUTED IN ALGORITHMS ODDSOL AND HESOL FOR THE PWM HARMONIC-ELIMINATION PROBLEM WITH n = 4 AND A = 0:6 Fig. 2. The PWM harmonic-elimination waveform obtained, with A = 0:6 and n = 15. 1) Set for . , , obtain the degree polyno2) From for which the roots satisfy the equations (7) mial and (8). (Use algorithm ODDSOL.) Find the roots of . , , with . 3) Set , set . For , set For . Sort the angles . can be For the general optimal PWM problem, the values obtained by solving the linear system (6). , . Example 1 (Harmonic Elimination): Let Then and orthogonal. Numerically stable algorithms using properties of orthogonal polynomials can therefore be developed and will be presented in future publications. IV. A RECURRENCE FOR The fast recursive algorithms for solving Toeplitz systems compute a solution using the solutions for smaller . 
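A minimal end-to-end sketch in the spirit of algorithm ODDSOL is given below, under stated assumptions. With $Q(z) = \prod_i (1 - x_i z) = 1 + c_1 z + \cdots + c_n z^n$ and $F(z)$ the exponential series of the odd power sums, the relation $Q(-z) = F(z)\,Q(z)$ has no terms of degree $n+1, \ldots, 2n$ on the left, which yields the Toeplitz system $\sum_{j=1}^{n} c_j f_{m-j} = -f_m$ for $m = n+1, \ldots, 2n$. This indexing and sign convention is one consistent choice and is not claimed to match (19) exactly. Plain Gaussian elimination over exact fractions is swapped in for the Levinson algorithm to keep the example short; the helper names are hypothetical.

```python
from fractions import Fraction

def exp_series(odd_sums):
    """f_0 .. f_{2n} of exp(2 * sum_{k odd} s_k z^k / k), odd_sums = [s_1, s_3, ...]."""
    n = len(odd_sums)
    f = [Fraction(1)]
    for j in range(1, 2 * n + 1):
        acc = sum(2 * Fraction(odd_sums[(k - 1) // 2]) * f[j - k]
                  for k in range(1, min(j, 2 * n - 1) + 1, 2))
        f.append(acc / j)
    return f

def solve_linear(a, b):
    """Gauss-Jordan elimination over Fractions (stand-in for Levinson).
    Raises StopIteration if the matrix is singular."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if m[r][col] != 0)
        m[col], m[pivot] = m[pivot], m[col]
        m[col] = [v / m[col][col] for v in m[col]]
        for r in range(n):
            if r != col and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[col])]
    return [row[n] for row in m]

def oddsol(odd_sums):
    """Return [1, c_1, ..., c_n] with P(x) = x^n + c_1 x^(n-1) + ... + c_n."""
    n = len(odd_sums)
    f = exp_series(odd_sums)
    # Row m, column j holds f_{m-j}: constant along diagonals (Toeplitz).
    a = [[f[m - j] for j in range(1, n + 1)] for m in range(n + 1, 2 * n + 1)]
    b = [-f[m] for m in range(n + 1, 2 * n + 1)]
    return [Fraction(1)] + solve_linear(a, b)

if __name__ == "__main__":
    # Roots 1/2 and -1/3: s_1 = 1/6, s_3 = 1/8 - 1/27 = 19/216.
    print(oddsol([Fraction(1, 6), Fraction(19, 216)]))
```

For the roots $1/2$ and $-1/3$ the sketch returns the coefficients of $x^2 - x/6 - 1/6$. Exact fractions sidestep the ill-conditioning that the text warns about for larger $n$; a floating-point version would need the extended precision or the orthogonal-polynomial recursions discussed in the paper.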
The nowill be used to emphasize the dependence of tation on . Specifically, denotes the monic degree- polynomial associated with the harmonic elimination problem, with coefficients With this notation, a recurrence relation (20) Then, the parameters computed in algorithms ODDSOL and and , HESOL are shown in Table I. As , the shown in the table are obtained by letting , , , , and then reordering . It can be verified numerically that the harmonic elimination equations (2) and (3) are satisfied. Example 2: Fig. 2 illustrates the PWM signal obtained with and . It should be noted that for the optimal PWM problem, the grows Toeplitz matrix in (19) becomes ill-conditioned as larger. By using extended precision arithmetic, the range of for which this basic algorithm is useful can be extended. However, in the following sections it will be shown that the solution can also be expressed as the solution to a Padé approxare imation problem, and consequently the polynomials . For the harmonic elimination can be used to compute problem, the initial conditions can be taken to be and . The coefficients in the recursion can be computed using the following formula: (21) This recurrence exists because of the Toeplitz structure, see [45] are then determined for further details. The coefficients recursively as [this implements (20)] (22) . 470 IEEE TRANSACTIONS ON CIRCUITS AND SYSTEMS—I: FUNDAMENTAL THEORY AND APPLICATIONS, VOL. 49, NO. 4, APRIL 2002 In order for this algorithm to work, one still needs the coeffi. While can be computed cients in the expansion of using (17) and (18), in Section V, a more direct recursion will be presented for computing the values themselves. From (23) and (24) we have for the harmonic elimination and for so that problem, that (25) V. A RECURRENCE FOR The algorithms presented above for the PWM harmonic elimination problem do not fully utilize the fact that the parameters depend only on the single parameter . 
In this section we will show a relationship between the original (transcendental) ] formulation of the PWM problem [in terms of ). and the converted (algebraic) formulation (in terms of As a result, we obtain an expression for the function that is important for developing a recursion for generating the in the recurcoefficients that are needed for computing . sion In this section, we show how the transcendental case can be explicitly expressed using the introduced notations of and . The basic transformation is and the new transforma- Now, using tion one gets Therefore with one has . With the previous transformation . Solving for gives Note that, as Combining this with (25) and the identity (16) gives from which we determine that where is given by , one gets so that the equations (4) and (5) can now be written as (23) .. . The algorithm for computing is of complexity only. This algorithm follows the general power series algorithms described in [46], [47]. The key to this algorithm is to notice that satisfies a second order linear differential equation and an apparent singularity (with singularities at ): at (24) Therefore, if we define If we look at the expansion of then, then we get the fourth-order recurrence on the product has the roots and as such the key identity (16) can be applied directly to this problem—as it is of the “sums of odd powers” form. One gets The initial conditions are at for and where From these values and the equation for one derives the factors in the three-term recurrence for orthogonal polynomials . The first few are CZARKOWSKI et al.: SOLVING THE OPTIMAL PWM PROBLEM FOR SINGLE-PHASE INVERTERS All are rational functions in of rather special structure. Since the case of the continued fraction expansion of is not “explicitly solvable,” is not a “known” function and ; it belongs to the category of “higher Painleve” of transendencies (see examples of such functions in [48]). 
An is the growth important consequence of the “insolubility” of as rational functions in with integer of coefficients of coefficients. According to standard conjectures about explicit and nonexplicit continued fraction expansions (cf., [49]), the as a rational function in over grow as coefficients of for large . In fact, already for , the coefficients in are large integers. This makes it impractical of for large . It is also to precompute with full accuracy explicitly, unnecessary to analytically determine only in the range of that is since we need to know significant for applications—this is the range where the weight is positive. In this case a of the orthogonal polynomials much simpler best fit polynomial or rational approximation in to suffices. can be summaThe recursive algorithm for computing rized as follows. Algorithm RECSOL: Given and , the polynomials for be recursively computed as follows. , , and for to 1) Set let 2) Set let and and for to Find the roots of . 3) Set , , with . , set . For , set For . Sort the angles . Step 3 of algorithm RECSOL is the same as step 3 of algorithm HESOL. Using a computer algebra system, such as Maple or Mathematica, this recursive algorithm allows one to obtain as an explicit function of . A Maple program that implements algorithm RECSOL is given in the Appendix. VI. COMPLEXITY OF THE PWM PROBLEM What is the complexity (in the simplest definition, an operation count) of the problem of “sums of odd powers”—of the optimal PWM problem discussed above? In the algebraic formulation one can first ask the same question about Newton’s identities. If one uses Newton identities directly, the complexity , but a significantly faster scheme can be found. The key is 471 to this is the representation (14). Indeed, according to Brent’s theorem, terms of the power series expansion of can steps from the power series be computed in only (see [43, Sec. 4.7, Example 4]). 
This algoexpansion of rithm requires only FFT-techniques for fast convolution [50]. Similar complexity considerations can be applied to the that give problem of fast computation of polynomials the solution to the PWM problem of consecutive odd power sums. A naive method of computation of Padé approximants by solving dense systems of linear equations give a very high . A classical Levinson algorithm complexity bound of of solving systems of Toeplitz linear equations, or algorithms , based on three-term recurrence relations satisfied by give the complexity of computations of (all coefficients of) at . These algorithms are, perhaps, the best for moderate because of their simple nature and the fact that algorithm they use almost no additional space. Also, the but all for . yields not only the single However, for large these algorithms became impractical and fast algorithms are needed. A fast algorithm for computing (all coefficients of) with total complexity of works as follows. First, terms of the one applies Brent’s theorem to compute power series expansion (at infinity) of from the first terms with the complexity of only . Then, one uses fast Padé approximation algorithms (or equivalently, fast polynomial gcd algorithms). There is a variety of such algorithms (for the earliest of Knuth, see [51]), with the most popular belonging to Brent, Gustavson, and complexity. Thus, we can compute Yun [52] with in at most operations. Of course, this method should be used only for a large (with additional precision of calculations) since it relies on a variety of extensions of FFT methods that become advantageous only for very long arrays. We wish to note also that in [22] Sun and Grotstollen present a Newton’s iteration directly on the transformed variables , and furthermore they note that the Jacobian in that case is a algorithms are Vandermonde matrix, and that efficient algorithms are available, which available. [In fact become efficient for large ]. 
However, Newton’s iteration can be very sensitive to initial values. VII. CONCLUSIONS This paper presents a contribution to the theory of optimal pulse-width modulation and gives algorithms for efficient on-line calculation of PWM switching patterns. Some specific results for the harmonic elimination problem are also presented. A number of new results regarding the PWM problem are derived in this paper. It is shown that: • by a transformation of variables the solution to the optimal PWM problem is given by the roots of a polynomial that are appropriately sorted; 472 IEEE TRANSACTIONS ON CIRCUITS AND SYSTEMS—I: FUNDAMENTAL THEORY AND APPLICATIONS, VOL. 49, NO. 4, APRIL 2002 • the polynomials APPENDIX A FIRST FEW satisfy Using the recursive algorithm HESOL, the polynomials as explicit functions of can be obtained for the harmonic elimination problem using computer algebra software. for Here are the first few where and are the roots of -degree monic polynomial ; can be found by solving a Toeplitz • the polynomials system of linear equations; give also the solution to a Padé • the polynomials approximation problem and, therefore, constitute a set of orthogonal polynomials; are obtained through a simple re• the polynomials currence APPENDIX B MAPLE PROGRAM where an expression for is given in (21); • the complexity of a fast algorithm for the optimal PWM . problem is An important consequence of the solution of the transcendental “sums of odd cosines” problem in PWM applications lies in the ability to construct high-quality digital (step-function) approximations to arbitrary harmonic series. In this paper, we have paid particular attention to the PWM harmonic eliminain (6) depend only on tion problem in which coefficients . In general, optimal PWM the fundamental component depend on the amplitudes of other harmonics coefficients which do not add to the complexity of the problem since system (6) is linear. 
Since the proposed algorithms can be executed quite fast on any processor with high-performance DSP capabilities, it opens a possibility of better on-the-fly construction of arbitrary (analog) waveforms using simple digital logic. Areas of application include not only power electronic converters but also, for instance, control of microelectromechanical systems and digital audio amplification. It should be noted that the design equations corresponding to optimal PWM with bi-level waveforms [1] have the same structure as (2) and (3), therefore the results presented in this paper are directly applicable. While applying these basic algorithms, one should be aware of the well-known inherent ill-conditioning of the corresponding Toeplitz matrix and Padé approximation calculations. Techniques for the regularization of the basic algorithms presented here will be dealt with in detail in future publications. Future work will also focus on such practical applications as three-phase inverters which eliminates harmonics with orders divisible by three in (2) and (3). A more detailed description of this work is given in the technical report [45]. The following Maple program implements algorithm RECSOL for solving the PWM harmonic elimination problem. Other programs are available from the authors. A 2 2 2 2 n i i CZARKOWSKI et al.: SOLVING THE OPTIMAL PWM PROBLEM FOR SINGLE-PHASE INVERTERS 473 Now, if we write the normalized (monic) polynomials and in terms of their roots we get an identification of symmetric functions in and with the sequence of in the definition of . Namely, we get m APPENDIX C RELATION TO THE THEORY OF PADÉ APPROXIMATION The solution to the modified Newton problem, where one has the first sums of odd powers, can also be obtained using the theory of Padé approximation. Moreover, using the theory of are orPadé approximation, we find that the polynomials thogonal polynomials with respect to a specific weighting function that depends on only. 
Consequently, we can draw upon methods developed for orthogonal polynomials to develop numerically stable fast algorithms for computing both the polynofor and their roots. mials We start with the general relation between Padé approximations and the generalization of Newton relations between power and elementary symmetric functions. Let us look at the Padé to the series at . approximation of the order is defined via the generating function Here the function : . The Padé apof the sequence to at (the neighborhood of) proximation of the order is a rational function with a polya polynomial of degree —such nomial of degree and matches the expansion of that the expansion of at up to the maximal order. This means that or After taking the logarithmic derivative of this definition, we obtain the following representation of this definition: for (where ). , one simply recovers Newton’s identities. In the case (the “diagonal” Padé approximations) is the The case case that solves the problem of “sums of odd powers”: Consider for and the “anti-symmetric” case when . In this case, in the notations above, for . to We, therefore, obtain the Padé approximation of order the following function: The Padé approximants are related in this case by . This relation between the numerator must and the denominator of the Padé approximants to satisfies the simple functional identity: hold because . This gives the main result: ( ) to the problem Theorem 1: The solution of “sums of odd powers” is given by the roots of the numerator of the Padé approximation of order to the function Another way to verify this approximation without specialization from the case of general sequence , is to take the identity (16). Proof of Theorem 1: First of all, the Padé approxiof order is mation rational function unique. [Indeed, if there would be two rational approximations and that approximate up to , then , and since degrees of are bounded by , then .] 
Then, is a Padé approximation of order to if , we assume that this representation of the rational and are relatively function is irreducible [i.e., that is a Padé approximation of order prime]. Then, to , and is a Padé approxito . Because of the functional mation of order , and the uniqueness of the Padé equation . approximations, we get . Moreover, since This equation means that at starts at 1 [i.e., the expansion of as ], we have as . This 474 IEEE TRANSACTIONS ON CIRCUITS AND SYSTEMS—I: FUNDAMENTAL THEORY AND APPLICATIONS, VOL. 49, NO. 4, APRIL 2002 means that and into account the above-mentioned “main” identity . Taking we see that the right-hand side of this identity and the expanat must agree up to (but not including) sion of . This means we have for . , the value(s) of Notice that in the definition of for have no impact on the definition of because only at for they enter the expansion of . This is obvious because . This completes the proof of Theorem 1. Since we identified the solution to the “sums of odd powers” problem with numerator (or denominator) in the (diagonal) Padé approximation problem, we infer from the standard theory of continued fraction expansions that the rational functions are partial fractions in the continued fraction at . This expansion of the generating function also means (see Szego for these and other facts of the theory of continued fraction expansions and orthogonal polynomials is the sequence [53]) that the sequence of polynomials of orthogonal polynomials [with respect to the weight that is ], and that the sequence of polyHilbert transform of satisfies three-term linear recurrence relation. nomials Since the same recurrence is satisfied both by numerators and denominators of the partial fractions, the recurrence is satisfied and . 
With the by two sequences— is 1, one obtains the particularly leading coefficient of simple three-term recurrence relation among for , which was introduced above where it was developed using the matrix notation. ACKNOWLEDGMENT The authors wish to thank H. Huang for helpful comments on the manuscript. REFERENCES [1] P. Enjeti, P. D. Ziogas, and J. F. Lindsay, “Programmed PWM techniques to eliminate harmonics: A critical evaluation,” IEEE Trans. Ind. Applicat., vol. 26, pp. 302–316, Mar. 1990. [2] A. M. Trzynadlowski, “An overview of modern PWM techniques for three-phase, voltage-controlled, voltage-source inverters,” in Proc. IEEE Symp. Industrial Electronics ISIE’96, 1996, pp. 25–39. [3] J. Holtz, “Pulsewidth modulation—A survey,” IEEE Trans. Ind. Electron., vol. 39, pp. 410–420, Oct. 1992. [4] H. S. Patel and R. G. Hoft, “Generalized technique of harmonic elimination and voltage control in thyristor inverters: Part I harmonic elimination,” IEEE Trans. Ind. Applicat., pp. 310–317, May 1973. [5] P. Enjeti and J. F. Lindsay, “Solving nonlinear equation of harmonic elimination PWM in power control,” Electron. Lett., vol. 23, no. 12, pp. 656–657, 1987. [6] S. R. Bowes and R. I. Bullough, “Optimal PWM microprocessor-controlled current-source inverter drives,” Proc. Inst. Elect. Eng. B, vol. 135, no. 2, pp. 59–75, 1988. [7] J. Richardson and S. V. S. Mohan-Ram, “An auto-adjusting PWM waveform generation technique,” in Proc. Conf. Power Electronics and Variable-Speed Drives, 1988, pp. 366–369. [8] S. R. Bowes and R. I. Bullough, “Novel PWM controlled series-connected current-source inverter drive,” Proc. Inst. Elect. Eng. B, vol. 136, no. 2, pp. 69–82, 1989. [9] K. E. Addoweesh and A. L. Mohamadein, “Microprocessor based harmonic elimination in chopper type AC voltage regulators,” IEEE Trans. Power Electron., vol. 5, pp. 191–200, Apr. 1990. [10] Q. Jiang, D. G. Holmes, and D. B. 
Giesner, “A method for linearising optimal PWM switching strategies to enable their computation on-line in real-time,” in Proc. IEEE Industry Applications Soc. Annu. Meeting, vol. 1, 1991, pp. 819–825. [11] T. Xinyuan and B. Jingming, “An algebraic algorithm for generating optimal PWM waveforms for AC drives—Part I: Selected harmonic elimination,” in Rec. IEEE Power Electronics Specialists Conf. (PESC’91), 1991, pp. 402–408. [12] S. R. Bowes and P. R. Clark, “Simple microprocessor implementation of new regular-sampled harmonic elimination PWM techniques,” IEEE Trans. Ind. Applicat., vol. 28, pp. 89–95, Jan. 1992. [13] P. N. Enjeti and W. Shireen, “A new technique to reject DC-link voltage ripple for inverters operating on programmed PWM waveforms,” IEEE Trans. Power Electron., vol. 7, pp. 171–180, Jan. 1992. [14] A. J. Frazier and M. K. Kazimierczuk, “Dc–dc power inversion using sigma–delta modulation,” IEEE Trans. Circuits Syst. I, vol. 46, pp. 79–82, Jan. 2000. [15] A. Wang and S. R. Sanders, “On optimal programmed PWM waveforms for dc–dc converters,” in Rec. IEEE Power Electronics Specialists Conference (PESC’92), 1992, pp. 571–578. [16] S. R. Bowes and P. R. Clark, “Transputer-based harmonic-elimination PWM control of inverter drives,” IEEE Trans. Ind. Applicat., vol. 28, pp. 72–80, Jan. 1992. [17] E. Destobbeleer and D. Dubois, “Solving a nonlinear and incomplete equation system for a programmed PWM: Application to electric traction,” in Proc. IEE Colloq. Variable Speed Drives and Motion Control, 1992, pp. 5/1–5/5. [18] J. Sun and H. Grotstollen, “Solving nonlinear equations for selective harmonic eliminated PWM using predicted initial values,” in Proc. Int. Conf. Industrial Electr., Control and Instr., 1992, pp. 259–264. [19] F. Swift and A. Kamberis, “Walsh domain technique of harmonic elimination and voltage control in pulse-width modulated inverters,” IEEE Trans. Power Electron., vol. 8, pp. 170–185, Apr. 1993. [20] U. S. Sapre, N. K. Ashar, and S. A. 
Joshi, “A new approach to programmed PWM implementation,” in Proc. Conf. Appl. Power Electronics Conf. and Expos. (APEC’93), 1993, pp. 793–798.
[21] J. Sun, S. Beineke, and H. Grotstollen, “DSP-based real-time harmonic elimination of PWM inverters,” in Rec. IEEE Power Electronics Specialists Conf. (PESC’94), 1994, pp. 679–685.
[22] J. Sun and H. Grotstollen, “Pulsewidth modulation based on real-time solution of algebraic harmonic elimination equations,” in Proc. Int. Conf. Industrial Electronics, Control and Instrumentation (IECON’94), 1994, pp. 79–84.
[23] H. R. Karshenas, H. A. Kojori, and S. B. Dewan, “Generalized techniques of selective harmonic elimination and current control in current source inverters/converters,” IEEE Trans. Power Electron., vol. 10, pp. 566–573, Sept. 1995.
[24] J. X. Lee and W. J. Bonwick, “Solving nonlinear cost function for optimal PWM strategy,” in Proc. Int. Conf. Power Electronics and Drive Systems, vol. 1, 1995, pp. 395–400.
[25] H. L. Liu, G. H. Cho, and S. S. Park, “Optimal PWM design for high power three-level inverter through comparative studies,” IEEE Trans. Power Electron., vol. 10, pp. 38–47, Jan. 1995.
[26] J. Sun, S. Beineke, and H. Grotstollen, “Optimal PWM based on real-time solution of harmonic elimination equations,” IEEE Trans. Power Electron., vol. 11, pp. 612–621, July 1996.
[27] J. Richardson, G. J. Bezanov, and G. M. Hashem, “Optimal PWM waveform modeling for on-line ac drive control,” in Proc. Conf. Power Electronics and Variable Speed Drives, 1996, pp. 496–501.
[28] G. Bezanov and J. Richardson, “Algorithmic modeling of PWM solution sets for online control of inverters,” in Proc. Power Electronics Specialists Conf. (PESC ’92), 1996, pp. 595–600.
[29] T.-J. Liang, R. M. O’Connell, and R. G. Hoft, “Inverter harmonic reduction using Walsh function harmonic elimination method,” IEEE Trans. Power Electron., vol. 12, pp. 971–982, Nov. 1997.
[30] J. W. Chen and T. J.
Liang, “A novel algorithm in solving nonlinear equations for programmed PWM inverter to eliminate harmonics,” in Proc. Int. Conf. Industrial Electronics, Control and Instrumentation (IECON’97), vol. 2, 1997, pp. 698–703.
[31] H. Han, Z. Hui, H. Yongxuan, and J. Yanchao, “A new optimal programmed PWM technique for power convertors,” in Proc. Int. Conf. Power Electronics and Drive Systems, vol. 2, 1997, pp. 740–743.
[32] R. Pindado, C. Jaen, and J. Pou, “Robust method for optimal PWM harmonic elimination based on the Chebyshev functions,” in Proc. 8th Int. Conf. on Harmonics and Quality of Power, vol. 2, 1998, pp. 976–981.
[33] J. Singh, “Performance analysis of microcomputer based PWM strategies for induction motor drive,” in Proc. IEEE Int. Symp. Industrial Electronics (ISIE’98), vol. 1, 1998, pp. 277–282.
[34] A. I. A. Maswood and L. Jian, “Optimal online algorithm derivation for PWM-SHE switching,” Electron. Lett., vol. 34, no. 8, pp. 821–823, 1998.
[35] G.-H. Choe and M.-H. Park, “A new injection method for AC harmonic elimination by active power filter,” IEEE Trans. Ind. Electron., vol. 35, pp. 141–147, Feb. 1988.
[36] T. Kato, “Sequential homotopy-based computation of multiple solutions for selected harmonic elimination in PWM inverters,” IEEE Trans. Circuits Syst. I, vol. 46, pp. 586–593, May 1999.
[37] J. W. Dixon, J. M. Contardo, and L. A. Moran, “A fuzzy-controlled active front-end rectifier with current harmonic filtering characteristics and minimum sensing variables,” IEEE Trans. Power Electron., vol. 14, pp. 724–729, July 1999.
[38] L. Li, D. Czarkowski, Y. Liu, and P. Pillay, “Suppression of harmonics in multilevel series-connected PWM inverters,” in Proc. IEEE IAS Annual Meeting, 1998, pp. 1454–1461.
[39] S. R. Bowes and P. R. Clark, “Regular-sampled harmonic-elimination PWM control of inverter drives,” IEEE Trans. Power Electron., vol. 10, pp. 521–531, Sept. 1995.
[40] S.
R. Bowes and S. Grewal, “A novel harmonic elimination PWM strategy,” in Proc. Conf. Power Electronics and Variable Speed Drives, Sept. 1998, pp. 426–432.
[41] S. R. Bowes and S. Grewal, “Simplified harmonic elimination PWM control strategy,” Electron. Lett., vol. 34, no. 4, pp. 325–326, 1998.
[42] W. Ledermann, Introduction to Group Characters. Cambridge, U.K.: Cambridge Univ. Press, 1977.
[43] D. E. Knuth, The Art of Computer Programming. New York: Addison-Wesley, 1981, vol. 2.
[44] S. Zohar, “The solution of a Toeplitz set of linear equations,” J. Assoc. Comput. Mach., vol. 21, no. 2, pp. 272–276, Apr. 1974.
[45] D. Czarkowski, D. Chudnovsky, G. Chudnovsky, and I. Selesnick, “Solving the optimal PWM problem for single-phase inverters,” Polytechnic Univ., Brooklyn, NY, Tech. Rep., 1999.
[46] D. V. Chudnovsky and G. V. Chudnovsky, “On expansion of algebraic functions in power and Puiseux series, I,” J. Complexity, vol. 2, no. 4, pp. 271–294, 1986.
[47] D. V. Chudnovsky and G. V. Chudnovsky, “On expansion of algebraic functions in power and Puiseux series, II,” J. Complexity, vol. 3, no. 1, pp. 1–25, 1987.
[48] D. V. Chudnovsky and G. V. Chudnovsky, “Explicit continued fractions and quantum gravity,” Acta Applic. Math., vol. 36, pp. 167–185, 1994.
[49] D. V. Chudnovsky and G. V. Chudnovsky, “Transcendental methods and theta-functions,” in Proc. Symp. Pure Math., vol. 2, 1989, pp. 167–232.
[50] A. B. Borodin and I. Munro, The Computational Complexity of Algebraic and Numeric Problems. New York: American Elsevier, 1975.
[51] D. Knuth, “The analysis of algorithms,” Actes Congrès. Intern. Math., vol. 3, pp. 269–274, 1970.
[52] R. P. Brent, F. G. Gustavson, and D. Y. Yun, “Fast solution of Toeplitz systems of equations and computation of Padé approximants,” J. Algorithms, vol. 1, no. 3, pp. 259–295, Sept. 1980.
[53] G. Szego, Orthogonal Polynomials. Providence, RI: AMS, 1978.

Dariusz Czarkowski (M’97) received the M.S.
degrees in electronics and electrical engineering from the University of Mining and Metallurgy, Cracow, Poland, and from Wright State University, Dayton, OH, in 1989 and 1993, respectively, and the Ph.D. degree in electrical engineering from the University of Florida, Gainesville, FL, in 1996. He joined the Polytechnic University, Brooklyn, NY, as an Assistant Professor of electrical engineering in 1996. His research interests are in the areas of power electronics, electric drives, and power quality. He coauthored a book entitled Resonant Power Converters (New York: Wiley Interscience, 1995).

David V. Chudnovsky (M’00) received the Ph.D. degree from the Institute of Mathematics of the Ukrainian Academy of Science, Kiev, Ukraine, in 1972. He was a Senior Research Scientist in the Department of Mathematics at Columbia University, New York, from 1978 to 1995, and a Research Scientist with CNRS and Ecole Polytechnique, Paris, France, from 1977 to 1981. Since 1996, he has been a Distinguished Industry Professor of Mathematics, Department of Mathematics, Polytechnic University, Brooklyn, New York, and the Director of the Institute for Mathematics and Advanced Supercomputing (IMAS), Brooklyn, New York. His research interests include partial differential equations and Hamiltonian systems, quantum systems, computer algebra and complexity, large-scale numerical mathematics, parallel computing, and digital signal processing. Dr. Chudnovsky is a member of ACM and APS.

Gregory V. Chudnovsky received the Ph.D. degree from the Institute of Mathematics of the Ukrainian Academy of Science, Kiev, Ukraine, in 1975. He was a Senior Research Scientist in the Department of Mathematics at Columbia University, New York, from 1978 to 1995 and a Research Scientist with CNRS at IHES, Paris, France, from 1977 to 1981.
Since 1996, he has been a Distinguished Industry Professor of Mathematics, Department of Mathematics, Polytechnic University, and Director of the Institute for Mathematics and Advanced Supercomputing (IMAS), Brooklyn, NY. His research interests include number theory, mathematical physics, computer algebra and complexity, large-scale numerical mathematics, parallel computing, and digital signal processing. Dr. Chudnovsky is a member of APS.

Ivan W. Selesnick (S’91–M’95) received the B.S., M.E.E., and Ph.D. degrees in electrical engineering from Rice University, Houston, TX, in 1990, 1991, and 1996, respectively. In 1997, he was a visiting professor at the University of Erlangen, Nurnberg, Germany. He is currently an Assistant Professor in the Department of Electrical and Computer Engineering at Polytechnic University, Brooklyn, NY. His current research interests are in the area of digital signal processing and wavelet-based signal processing. As a Ph.D. student, Dr. Selesnick received a DARPA-NDSEG fellowship in 1991, and his Ph.D. dissertation received the Budd Award for Best Engineering Thesis at Rice University in 1996 and an award from the Rice-TMC chapter of Sigma Xi. He received an Alexander von Humboldt Award in 1997 and a National Science Foundation Career award in 1999. He is a member of Eta Kappa Nu, Phi Beta Kappa, and Tau Beta Pi.