Solution to Chapter 5 Analytical Exercises

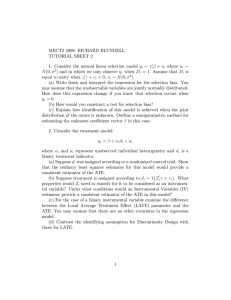

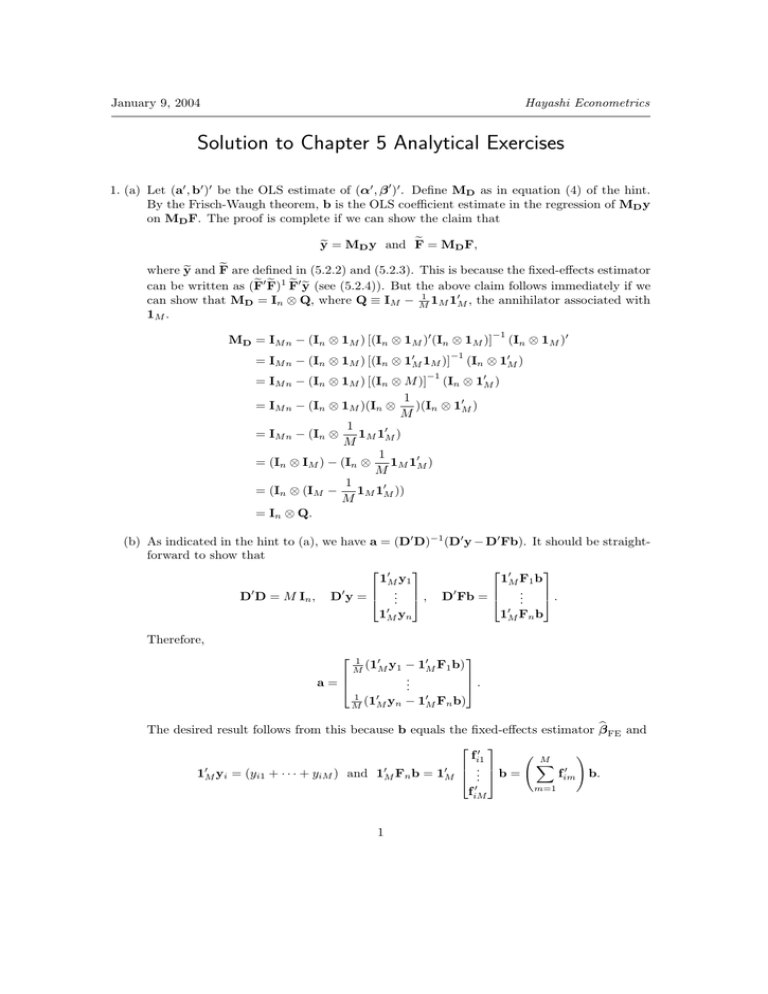

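January 9, 2004. Hayashi Econometrics.

1. (a) Let $(a', b')'$ be the OLS estimate of $(\alpha', \beta')'$. Define $M_D$ as in equation (4) of the hint. By the Frisch-Waugh theorem, $b$ is the OLS coefficient estimate in the regression of $M_D y$ on $M_D F$. The proof is complete if we can show the claim that $\tilde{y} = M_D y$ and $\tilde{F} = M_D F$, where $\tilde{y}$ and $\tilde{F}$ are defined in (5.2.2) and (5.2.3). This is because the fixed-effects estimator can be written as $(\tilde{F}'\tilde{F})^{-1}\tilde{F}'\tilde{y}$ (see (5.2.4)). But the above claim follows immediately if we can show that $M_D = I_n \otimes Q$, where $Q \equiv I_M - \frac{1}{M}\mathbf{1}_M\mathbf{1}_M'$, the annihilator associated with $\mathbf{1}_M$.

$$
\begin{aligned}
M_D &= I_{Mn} - (I_n \otimes \mathbf{1}_M)\left[(I_n \otimes \mathbf{1}_M)'(I_n \otimes \mathbf{1}_M)\right]^{-1}(I_n \otimes \mathbf{1}_M)' \\
&= I_{Mn} - (I_n \otimes \mathbf{1}_M)\left[I_n \otimes \mathbf{1}_M'\mathbf{1}_M\right]^{-1}(I_n \otimes \mathbf{1}_M') \\
&= I_{Mn} - (I_n \otimes \mathbf{1}_M)\left[I_n \otimes M\right]^{-1}(I_n \otimes \mathbf{1}_M') \\
&= I_{Mn} - (I_n \otimes \mathbf{1}_M)\left(I_n \otimes \tfrac{1}{M}\right)(I_n \otimes \mathbf{1}_M') \\
&= I_{Mn} - \left(I_n \otimes \tfrac{1}{M}\mathbf{1}_M\mathbf{1}_M'\right) \\
&= (I_n \otimes I_M) - \left(I_n \otimes \tfrac{1}{M}\mathbf{1}_M\mathbf{1}_M'\right) \\
&= I_n \otimes \left(I_M - \tfrac{1}{M}\mathbf{1}_M\mathbf{1}_M'\right) \\
&= I_n \otimes Q.
\end{aligned}
$$

(b) As indicated in the hint to (a), we have $a = (D'D)^{-1}(D'y - D'Fb)$. It should be straightforward to show that

$$
D'D = M\,I_n, \qquad
D'y = \begin{bmatrix} \mathbf{1}_M' y_1 \\ \vdots \\ \mathbf{1}_M' y_n \end{bmatrix}, \qquad
D'Fb = \begin{bmatrix} \mathbf{1}_M' F_1 b \\ \vdots \\ \mathbf{1}_M' F_n b \end{bmatrix}.
$$

Therefore,

$$
a = \begin{bmatrix} \frac{1}{M}(\mathbf{1}_M' y_1 - \mathbf{1}_M' F_1 b) \\ \vdots \\ \frac{1}{M}(\mathbf{1}_M' y_n - \mathbf{1}_M' F_n b) \end{bmatrix}.
$$

The desired result follows from this because $b$ equals the fixed-effects estimator $\hat{\beta}_{FE}$ and

$$
\mathbf{1}_M' y_i = (y_{i1} + \cdots + y_{iM}) \quad \text{and} \quad
\mathbf{1}_M' F_i b = \mathbf{1}_M' \begin{bmatrix} f_{i1}' \\ \vdots \\ f_{iM}' \end{bmatrix} b = \left(\sum_{m=1}^{M} f_{im}'\right) b.
$$

(c) What needs to be shown is that (3) and conditions (i)-(iv) listed in the question together imply Assumptions 1.1-1.4. Assumption 1.1 (linearity) is none other than (3). Assumption 1.3 is a restatement of (iv). This leaves Assumption 1.2 (strict exogeneity) and Assumption 1.4 (spherical error term) to be verified. The following is an amplification of the answer to 1.(c) on p. 363.

$$
\begin{aligned}
\mathrm{E}(\eta_i \mid W) &= \mathrm{E}(\eta_i \mid F) && \text{(since $D$ is a matrix of constants)} \\
&= \mathrm{E}(\eta_i \mid F_1, \ldots, F_n) \\
&= \mathrm{E}(\eta_i \mid F_i) && \text{(since $(\eta_i, F_i)$ is indep. of $F_j$ for $j \neq i$ by (i))} \\
&= \mathbf{0} && \text{(by (ii)).}
\end{aligned}
$$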
Therefore, the regressors are strictly exogenous (Assumption 1.2). Also,

$$
\begin{aligned}
\mathrm{E}(\eta_i \eta_i' \mid W) &= \mathrm{E}(\eta_i \eta_i' \mid F) \\
&= \mathrm{E}(\eta_i \eta_i' \mid F_i) \\
&= \sigma_\eta^2 I_M && \text{(by the spherical error assumption (iii)).}
\end{aligned}
$$

For $i \neq j$,

$$
\begin{aligned}
\mathrm{E}(\eta_i \eta_j' \mid W) &= \mathrm{E}(\eta_i \eta_j' \mid F) \\
&= \mathrm{E}(\eta_i \eta_j' \mid F_1, \ldots, F_n) \\
&= \mathrm{E}(\eta_i \eta_j' \mid F_i, F_j) && \text{(since $(\eta_i, F_i, \eta_j, F_j)$ is indep. of $F_k$ for $k \neq i, j$ by (i))} \\
&= \mathrm{E}[\mathrm{E}(\eta_i \eta_j' \mid F_i, F_j, \eta_i) \mid F_i, F_j] \\
&= \mathrm{E}[\eta_i\, \mathrm{E}(\eta_j' \mid F_i, F_j, \eta_i) \mid F_i, F_j] \\
&= \mathrm{E}[\eta_i\, \mathrm{E}(\eta_j' \mid F_j) \mid F_i, F_j] && \text{(since $(\eta_j, F_j)$ is independent of $(\eta_i, F_i)$ by (i))} \\
&= \mathbf{0} && \text{(since $\mathrm{E}(\eta_j' \mid F_j) = \mathbf{0}$ by (ii)).}
\end{aligned}
$$

So $\mathrm{E}(\eta\eta' \mid W) = \sigma_\eta^2 I_{Mn}$ (Assumption 1.4).

Since the assumptions of the classical regression model are satisfied, Proposition 1.1 holds for the OLS estimator $(a, b)$. The estimator is unbiased and the Gauss-Markov theorem holds.

As shown in Analytical Exercise 4.(f) in Chapter 1, the residual vector from the original regression (3) (which is to regress $y$ on $D$ and $F$) is numerically the same as the residual vector from the regression of $\tilde{y}$ ($= M_D y$) on $\tilde{F}$ ($= M_D F$). So the two SSR's are the same.

2. (a) It is evident that $C'\mathbf{1}_M = \mathbf{0}$ if $C$ is what is referred to in the question as the matrix of first differences. Next, to see that $C'\mathbf{1}_M = \mathbf{0}$ if $C$ is an $M \times (M-1)$ matrix created by dropping one column from $Q$, first note that by construction of $Q$, we have:

$$
\underset{(M \times M)}{Q}\,\mathbf{1}_M = \underset{(M \times 1)}{\mathbf{0}},
$$

which is a set of $M$ equations. Drop one row from $Q$ and call it $C'$ and drop the corresponding element from the $\mathbf{0}$ vector on the RHS. Then

$$
\underset{((M-1) \times M)}{C'}\,\mathbf{1}_M = \underset{((M-1) \times 1)}{\mathbf{0}}.
$$

(b) By multiplying both sides of (5.1.1'') on p. 329 by $C'$, we eliminate $\mathbf{1}_M \cdot b_i'\gamma$ and $\mathbf{1}_M \cdot \alpha_i$.

(c) Below we verify the five conditions.

• The random sample condition is immediate from (5.1.2).

• Regarding the orthogonality conditions, as mentioned in the hint, (5.1.8b) can be written as $\mathrm{E}(\eta_i \otimes x_i) = \mathbf{0}$. This implies the orthogonality conditions because

$$
\mathrm{E}(\hat{\eta}_i \otimes x_i) = \mathrm{E}[(C' \otimes I_K)(\eta_i \otimes x_i)] = (C' \otimes I_K)\,\mathrm{E}(\eta_i \otimes x_i).
$$

• As shown on pp.
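363-364, the identification condition to be verified is equivalent to (5.1.15) (that $\mathrm{E}(QF_i \otimes x_i)$ be of full column rank).

• Since $\varepsilon_i = \mathbf{1}_M \cdot \alpha_i + \eta_i$, we have $\hat{\eta}_i \equiv C'\eta_i = C'\varepsilon_i$. So $\hat{\eta}_i\hat{\eta}_i' = C'\varepsilon_i\varepsilon_i'C$ and

$$
\mathrm{E}(\hat{\eta}_i\hat{\eta}_i' \mid x_i) = \mathrm{E}(C'\varepsilon_i\varepsilon_i'C \mid x_i) = C'\,\mathrm{E}(\varepsilon_i\varepsilon_i' \mid x_i)\,C = C'\Sigma C.
$$

(The last equality is by (5.1.5).)

• By the definition of $\hat{g}_i$, we have: $\hat{g}_i\hat{g}_i' = \hat{\eta}_i\hat{\eta}_i' \otimes x_i x_i'$. But as just shown above, $\hat{\eta}_i\hat{\eta}_i' = C'\varepsilon_i\varepsilon_i'C$. So

$$
\hat{g}_i\hat{g}_i' = C'\varepsilon_i\varepsilon_i'C \otimes x_i x_i' = (C' \otimes I_K)(\varepsilon_i\varepsilon_i' \otimes x_i x_i')(C \otimes I_K).
$$

Thus

$$
\begin{aligned}
\mathrm{E}(\hat{g}_i\hat{g}_i') &= (C' \otimes I_K)\,\mathrm{E}[(\varepsilon_i\varepsilon_i' \otimes x_i x_i')]\,(C \otimes I_K) \\
&= (C' \otimes I_K)\,\mathrm{E}(g_i g_i')\,(C \otimes I_K) && \text{(since $g_i \equiv \varepsilon_i \otimes x_i$).}
\end{aligned}
$$

Since $\mathrm{E}(g_i g_i')$ is non-singular by (5.1.6) and since $C$ is of full column rank, $\mathrm{E}(\hat{g}_i\hat{g}_i')$ is non-singular.

(d) Since $\hat{F}_i \equiv C'F_i$, we can rewrite $S_{xz}$ and $s_{xy}$ as

$$
S_{xz} = (C' \otimes I_K)\left(\frac{1}{n}\sum_{i=1}^{n} F_i \otimes x_i\right), \qquad
s_{xy} = (C' \otimes I_K)\left(\frac{1}{n}\sum_{i=1}^{n} y_i \otimes x_i\right).
$$

So

$$
\begin{aligned}
S_{xz}'\widehat{W}S_{xz}
&= \left(\frac{1}{n}\sum_{i=1}^{n} F_i' \otimes x_i'\right)(C \otimes I_K)\left[(C'C)^{-1} \otimes \left(\frac{1}{n}\sum_{i=1}^{n} x_i x_i'\right)^{-1}\right](C' \otimes I_K)\left(\frac{1}{n}\sum_{i=1}^{n} F_i \otimes x_i\right) \\
&= \left(\frac{1}{n}\sum_{i=1}^{n} F_i' \otimes x_i'\right)\left[C(C'C)^{-1}C' \otimes \left(\frac{1}{n}\sum_{i=1}^{n} x_i x_i'\right)^{-1}\right]\left(\frac{1}{n}\sum_{i=1}^{n} F_i \otimes x_i\right) \\
&= \left(\frac{1}{n}\sum_{i=1}^{n} F_i' \otimes x_i'\right)\left[Q \otimes \left(\frac{1}{n}\sum_{i=1}^{n} x_i x_i'\right)^{-1}\right]\left(\frac{1}{n}\sum_{i=1}^{n} F_i \otimes x_i\right)
\end{aligned}
$$

(since $C(C'C)^{-1}C' = Q$, as mentioned in the hint). Similarly,

$$
S_{xz}'\widehat{W}s_{xy} = \left(\frac{1}{n}\sum_{i=1}^{n} F_i' \otimes x_i'\right)\left[Q \otimes \left(\frac{1}{n}\sum_{i=1}^{n} x_i x_i'\right)^{-1}\right]\left(\frac{1}{n}\sum_{i=1}^{n} y_i \otimes x_i\right).
$$

Noting that $f_{im}'$ is the $m$-th row of $F_i$ and writing out the Kronecker products in full, we obtain

$$
\begin{aligned}
S_{xz}'\widehat{W}S_{xz} &= \sum_{m=1}^{M}\sum_{h=1}^{M} q_{mh}\left\{\left(\frac{1}{n}\sum_{i=1}^{n} f_{im}x_i'\right)\left(\frac{1}{n}\sum_{i=1}^{n} x_i x_i'\right)^{-1}\left(\frac{1}{n}\sum_{i=1}^{n} x_i f_{ih}'\right)\right\}, \\
S_{xz}'\widehat{W}s_{xy} &= \sum_{m=1}^{M}\sum_{h=1}^{M} q_{mh}\left\{\left(\frac{1}{n}\sum_{i=1}^{n} f_{im}x_i'\right)\left(\frac{1}{n}\sum_{i=1}^{n} x_i x_i'\right)^{-1}\left(\frac{1}{n}\sum_{i=1}^{n} x_i \cdot y_{ih}\right)\right\},
\end{aligned}
$$

where $q_{mh}$ is the $(m, h)$ element of $Q$. (This is just (4.6.6) with $x_{im} = x_i$, $z_{im} = f_{im}$, $\widehat{W} = Q \otimes \left(\frac{1}{n}\sum_{i=1}^{n} x_i x_i'\right)^{-1}$.) Since $x_i$ includes all the elements of $F_i$, as noted in the hint, $x_i$ "disappears".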
So

$$
\begin{aligned}
S_{xz}'\widehat{W}S_{xz} &= \sum_{m=1}^{M}\sum_{h=1}^{M} q_{mh}\,\frac{1}{n}\sum_{i=1}^{n} f_{im}f_{ih}' = \frac{1}{n}\sum_{i=1}^{n}\sum_{m=1}^{M}\sum_{h=1}^{M} q_{mh}\,f_{im}f_{ih}', \\
S_{xz}'\widehat{W}s_{xy} &= \sum_{m=1}^{M}\sum_{h=1}^{M} q_{mh}\,\frac{1}{n}\sum_{i=1}^{n} f_{im}\cdot y_{ih} = \frac{1}{n}\sum_{i=1}^{n}\sum_{m=1}^{M}\sum_{h=1}^{M} q_{mh}\,f_{im}\cdot y_{ih}.
\end{aligned}
$$

Using the "beautifying" formula (4.6.16b), this expression can be simplified as

$$
S_{xz}'\widehat{W}S_{xz} = \frac{1}{n}\sum_{i=1}^{n} F_i'QF_i, \qquad
S_{xz}'\widehat{W}s_{xy} = \frac{1}{n}\sum_{i=1}^{n} F_i'Qy_i.
$$

So $\left(S_{xz}'\widehat{W}S_{xz}\right)^{-1}S_{xz}'\widehat{W}s_{xy}$ is the fixed-effects estimator.

(e) The previous part shows that the fixed-effects estimator is not efficient because the $\widehat{W}$ in (10) does not satisfy the efficiency condition that $\operatorname{plim}\widehat{W} = S^{-1}$. Under conditional homoskedasticity, $S = \mathrm{E}(\hat{\eta}_i\hat{\eta}_i') \otimes \mathrm{E}(x_i x_i')$. Thus, with $\hat{\Psi}$ being a consistent estimator of $\mathrm{E}(\hat{\eta}_i\hat{\eta}_i')$, the efficient GMM estimator is given by setting

$$
\widehat{W} = \hat{\Psi}^{-1} \otimes \left(\frac{1}{n}\sum_{i=1}^{n} x_i x_i'\right)^{-1}.
$$

This is none other than the random-effects estimator applied to the system of $M-1$ equations (9). By setting $Z_i = \hat{F}_i$, $\hat{\Sigma} = \hat{\Psi}$, $y_i = \hat{y}_i$ in (4.6.8') and (4.6.9') on p. 293, we obtain (12) and (13) in the question. It is shown on pp. 292-293 that these "beautified" formulas are numerically equivalent versions of (4.6.8) and (4.6.9). By Proposition 4.7, the random-effects estimator (4.6.8) is consistent and asymptotically normal and the asymptotic variance is given by (4.6.9). As noted on p. 324, it should be routine to show that those conditions verified in (c) above are sufficient for the hypothesis of Proposition 4.7. In particular, the $\Sigma_{xz}$ referred to in Assumption 4.4' can be written as $\mathrm{E}(\hat{F}_i \otimes x_i)$. In (c), we've verified that this matrix is of full column rank.

(f) Proposition 4.1, which is about the estimation of error cross moments for the multiple-equation model of Section 4.1, can easily be adapted to the common-coefficient model of Section 4.6.
Besides linearity, the required assumptions are (i) that the coefficient estimate (here $\hat{\beta}_{FE}$) used for calculating the residual vector be consistent and (ii) that the cross moment between the vector of regressors from one equation (a row from $\hat{F}_i$) and those from another (another row from $\hat{F}_i$) exist and be finite. As seen in (d), the fixed-effects estimator $\hat{\beta}_{FE}$ is a GMM estimator. So it is consistent. As noted in (c), $\mathrm{E}(x_i x_i')$ is non-singular. Since $x_i$ contains all the elements of $F_i$, the cross moment assumption is satisfied.

(g) As noted in (e), the assumptions of Proposition 4.7 hold for the model in question. It has been verified in (f) that $\hat{\Psi}$ defined in (14) is consistent. Therefore, Proposition 4.7(c) holds for the present model.

(h) Since $\hat{\eta}_i \equiv C'\eta_i$, we have $\mathrm{E}(\hat{\eta}_i\hat{\eta}_i') = \mathrm{E}(C'\eta_i\eta_i'C) = \sigma_\eta^2 C'C$ (the last equality is by (15)). By setting $\hat{\Psi} = \hat{\sigma}_\eta^2 C'C$ in the expression for $\widehat{W}$ in the answer to (e) (thus setting $\widehat{W} = \left(\hat{\sigma}_\eta^2 C'C \otimes \frac{1}{n}\sum_{i=1}^{n} x_i x_i'\right)^{-1}$), the estimator can be written as a GMM estimator $\left(S_{xz}'\widehat{W}S_{xz}\right)^{-1}S_{xz}'\widehat{W}s_{xy}$. Clearly, it is numerically equal to the GMM estimator with $\widehat{W} = \left(C'C \otimes \frac{1}{n}\sum_{i=1}^{n} x_i x_i'\right)^{-1}$, which, as was verified in (d), is the fixed-effects estimator.

(i) Evidently, replacing $C$ by $B \equiv CA$ in (11) does not change $Q$. So the fixed-effects estimator is invariant to the choice of $C$. To see that the numerical values of (12) and (13) are invariant to the choice of $C$, let $\check{F}_i \equiv B'F_i$ and $\check{y}_i \equiv B'y_i$. That is, the original $M$ equations (5.1.1'') are transformed into $M-1$ equations by $B = CA$, not by $C$. Then $\check{F}_i = A'\hat{F}_i$ and $\check{y}_i = A'\hat{y}_i$. If $\check{\Psi}$ is the estimated error cross moment matrix when (14) is used with $\check{y}_i$ replacing $\hat{y}_i$ and $\check{F}_i$ replacing $\hat{F}_i$, then we have: $\check{\Psi} = A'\hat{\Psi}A$. So

$$
\begin{aligned}
\check{F}_i'\check{\Psi}^{-1}\check{F}_i &= \hat{F}_i'A(A'\hat{\Psi}A)^{-1}A'\hat{F}_i \\
&= \hat{F}_i'AA^{-1}\hat{\Psi}^{-1}(A')^{-1}A'\hat{F}_i \\
&= \hat{F}_i'\hat{\Psi}^{-1}\hat{F}_i.
\end{aligned}
$$

Similarly, $\check{F}_i'\check{\Psi}^{-1}\check{y}_i = \hat{F}_i'\hat{\Psi}^{-1}\hat{y}_i$.

3.
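From (5.1.1''), $v_i = C'(y_i - F_i\beta) = C'\eta_i$. So $\mathrm{E}(v_i v_i') = \mathrm{E}(C'\eta_i\eta_i'C) = C'\,\mathrm{E}(\eta_i\eta_i')\,C = \sigma_\eta^2 C'C$. By the hint,

$$
\operatorname{plim}\frac{SSR}{n} = \operatorname{trace}\left[(C'C)^{-1}\sigma_\eta^2 C'C\right] = \sigma_\eta^2\operatorname{trace}[I_{M-1}] = \sigma_\eta^2 \cdot (M-1).
$$

4. (a) $b_i$ is absent from the system of $M$ equations (or $b_i$ is a zero vector).

$$
y_i = \begin{bmatrix} y_{i1} \\ \vdots \\ y_{iM} \end{bmatrix}, \qquad
F_i = \begin{bmatrix} y_{i0} \\ \vdots \\ y_{i,M-1} \end{bmatrix}.
$$

(b) Recursive substitution (starting with a substitution of the first equation of the system into the second) yields the equation in the hint. Multiply both sides of the equation by $\eta_{ih}$ and take expectations to obtain

$$
\begin{aligned}
\mathrm{E}(y_{im}\cdot\eta_{ih}) &= \mathrm{E}(\eta_{im}\cdot\eta_{ih}) + \rho\,\mathrm{E}(\eta_{i,m-1}\cdot\eta_{ih}) + \cdots + \rho^{m-1}\,\mathrm{E}(\eta_{i1}\cdot\eta_{ih}) \\
&\qquad + \frac{1-\rho^m}{1-\rho}\,\mathrm{E}(\alpha_i\cdot\eta_{ih}) + \rho^m\,\mathrm{E}(y_{i0}\cdot\eta_{ih}) \\
&= \mathrm{E}(\eta_{im}\cdot\eta_{ih}) + \rho\,\mathrm{E}(\eta_{i,m-1}\cdot\eta_{ih}) + \cdots + \rho^{m-1}\,\mathrm{E}(\eta_{i1}\cdot\eta_{ih}) \\
&\qquad \text{(since $\mathrm{E}(\alpha_i\cdot\eta_{ih}) = 0$ and $\mathrm{E}(y_{i0}\cdot\eta_{ih}) = 0$)} \\
&= \begin{cases} \rho^{m-h}\sigma_\eta^2 & \text{if } h = 1, 2, \ldots, m, \\ 0 & \text{if } h = m+1, m+2, \ldots. \end{cases}
\end{aligned}
$$

(c) That $\mathrm{E}(y_{im}\cdot\eta_{ih}) = \rho^{m-h}\sigma_\eta^2$ for $m \geq h$ is shown in (b). Noting that $F_i$ here is a vector, not a matrix, we have:

$$
\begin{aligned}
\mathrm{E}(F_i'Q\eta_i) &= \mathrm{E}[\operatorname{trace}(F_i'Q\eta_i)] \\
&= \mathrm{E}[\operatorname{trace}(\eta_i F_i'Q)] \\
&= \operatorname{trace}[\mathrm{E}(\eta_i F_i')Q] \\
&= \operatorname{trace}\left[\mathrm{E}(\eta_i F_i')\left(I_M - \tfrac{1}{M}\mathbf{1}\mathbf{1}'\right)\right] \\
&= \operatorname{trace}[\mathrm{E}(\eta_i F_i')] - \tfrac{1}{M}\operatorname{trace}[\mathrm{E}(\eta_i F_i')\mathbf{1}\mathbf{1}'] \\
&= \operatorname{trace}[\mathrm{E}(\eta_i F_i')] - \tfrac{1}{M}\mathbf{1}'\,\mathrm{E}(\eta_i F_i')\,\mathbf{1}.
\end{aligned}
$$

By the results shown in (b), $\mathrm{E}(\eta_i F_i')$ can be written as

$$
\mathrm{E}(\eta_i F_i') = \sigma_\eta^2
\begin{bmatrix}
0 & 1 & \rho & \rho^2 & \cdots & \rho^{M-2} \\
0 & 0 & 1 & \rho & \cdots & \rho^{M-3} \\
\vdots & \vdots & & \ddots & \ddots & \vdots \\
0 & \cdots & \cdots & 0 & 1 & \rho \\
0 & \cdots & \cdots & \cdots & 0 & 1 \\
0 & \cdots & \cdots & \cdots & \cdots & 0
\end{bmatrix}.
$$

So, in the above expression for $\mathrm{E}(F_i'Q\eta_i)$, $\operatorname{trace}[\mathrm{E}(\eta_i F_i')] = 0$ and

$$
\begin{aligned}
\mathbf{1}'\,\mathrm{E}(\eta_i F_i')\,\mathbf{1} &= \text{sum of the elements of } \mathrm{E}(\eta_i F_i') \\
&= \text{sum of the first row} + \cdots + \text{sum of the last row} \\
&= \sigma_\eta^2\left[\frac{1-\rho^{M-1}}{1-\rho} + \frac{1-\rho^{M-2}}{1-\rho} + \cdots + \frac{1-\rho}{1-\rho}\right] \\
&= \sigma_\eta^2\,\frac{M - 1 - M\rho + \rho^M}{(1-\rho)^2}.
\end{aligned}
$$

(d) (5.2.6) is violated because $\mathrm{E}(f_{im}\cdot\eta_{ih}) = \mathrm{E}(y_{i,m-1}\cdot\eta_{ih}) \neq 0$ for $h \leq m-1$.

5. (a) The hint shows that

$$
\mathrm{E}(\tilde{F}_i'\tilde{F}_i) = \mathrm{E}(QF_i \otimes x_i)'\left[I_M \otimes \mathrm{E}(x_i x_i')\right]^{-1}\mathrm{E}(QF_i \otimes x_i).
$$

By (5.1.15), $\mathrm{E}(QF_i \otimes x_i)$ is of full column rank. So the matrix product above is non-singular.

(b) By (5.1.5) and (5.1.6'), $\mathrm{E}(\varepsilon_i\varepsilon_i')$ is non-singular.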
(c) By the same sort of argument used in (a) and (b) and noting that $\hat{F}_i \equiv C'F_i$, we have

$$
\mathrm{E}(\hat{F}_i'\Psi^{-1}\hat{F}_i) = \mathrm{E}(C'F_i \otimes x_i)'\left[\Psi^{-1} \otimes \mathrm{E}(x_i x_i')^{-1}\right]\mathrm{E}(C'F_i \otimes x_i).
$$

We've verified in 2(c) that $\mathrm{E}(C'F_i \otimes x_i)$ is of full column rank.

6. This question presumes that

$$
x_i = \begin{bmatrix} f_{i1} \\ \vdots \\ f_{iM} \\ b_i \end{bmatrix} \quad \text{and} \quad f_{im} = A_m'x_i.
$$

(a) The $m$-th row of $F_i$ is $f_{im}'$ and $f_{im}' = x_i'A_m$.

(b) The rank condition (5.1.15) is that $\mathrm{E}(\tilde{F}_i \otimes x_i)$ be of full column rank (where $\tilde{F}_i \equiv QF_i$). By the hint, $\mathrm{E}(\tilde{F}_i \otimes x_i) = [I_M \otimes \mathrm{E}(x_i x_i')](Q \otimes I_K)A$. Since $\mathrm{E}(x_i x_i')$ is non-singular, $I_M \otimes \mathrm{E}(x_i x_i')$ is non-singular. Multiplication by a non-singular matrix does not alter rank.

7. The hint is the answer.
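
Several of the matrix identities used in Exercises 1 to 4 can be checked numerically. The sketch below is only an illustration and not part of the original solutions. It assumes NumPy is available, takes $D = I_n \otimes \mathbf{1}_M$ as in the derivation of 1(a), and assumes the first-difference matrix $C$ has $-1$ and $1$ in adjacent rows of each column. The helper names (`annihilator`, `first_difference_matrix`, `sum_eta_F`) and the values of `n`, `M`, and `rho` are hypothetical choices made for this check.

```python
import numpy as np


def annihilator(M):
    """Return Q = I_M - (1/M) 1_M 1_M'."""
    ones = np.ones((M, 1))
    return np.eye(M) - ones @ ones.T / M


def first_difference_matrix(M):
    """Return an M x (M-1) matrix C (assumed form) so that C'y stacks y_{m+1} - y_m."""
    C = np.zeros((M, M - 1))
    for m in range(M - 1):
        C[m, m] = -1.0
        C[m + 1, m] = 1.0
    return C


def sum_eta_F(M, rho, sigma2=1.0):
    """Sum all elements of E(eta_i F_i') from Exercise 4(c), entry by entry."""
    total = 0.0
    for h in range(1, M + 1):      # row index: eta_ih
        for m in range(1, M + 1):  # column index: f_im = y_{i,m-1}
            if h <= m - 1:
                total += sigma2 * rho ** (m - 1 - h)
    return total


n, M, rho = 4, 5, 0.6  # illustrative sizes only
Q = annihilator(M)

# Exercise 1(a): M_D = I_n kron Q, with D = I_n kron 1_M.
D = np.kron(np.eye(n), np.ones((M, 1)))
M_D = np.eye(n * M) - D @ np.linalg.inv(D.T @ D) @ D.T
check_1a = np.allclose(M_D, np.kron(np.eye(n), Q))

# Exercise 2(a) and 2(d): C'1_M = 0 and C(C'C)^{-1}C' = Q for both choices of C.
C_diff = first_difference_matrix(M)
C_drop = Q[:, :-1]  # Q with its last column dropped
check_2a = all(np.allclose(C.T @ np.ones(M), 0.0) for C in (C_diff, C_drop))
check_2d = all(
    np.allclose(C @ np.linalg.inv(C.T @ C) @ C.T, Q) for C in (C_diff, C_drop)
)

# Exercise 3: trace[(C'C)^{-1} C'C] = M - 1.
CtC = C_diff.T @ C_diff
check_3 = np.isclose(np.trace(np.linalg.inv(CtC) @ CtC), M - 1)

# Exercise 4(c): the row sums add up to the closed form (with sigma_eta^2 = 1).
closed_form = (M - 1 - M * rho + rho ** M) / (1 - rho) ** 2
check_4c = np.isclose(sum_eta_F(M, rho), closed_form)

print(check_1a, check_2a, check_2d, check_3, check_4c)
```

A check of this kind confirms the algebra only for the particular dimensions tried; the proofs above remain the actual argument.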