NVMe over Fabrics

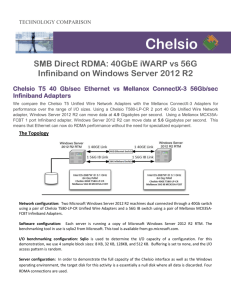


Wael Noureddine, Ph.D.
Chelsio Communications Inc.

2015 Storage Developer Conference. © Chelsio Communications Inc. All Rights Reserved.

Overview

- Introduction
- NVMe/Fabrics and iWARP
- RDMA Block Device and results
- Closing notes

NVMe Devices

- Accelerating pace of progress
  - Capacity
  - Reliability
  - Efficiency
  - Performance
    - Latency
    - Bandwidth
    - IOPS – Random Access

(Figure source: Samsung Keynote, Flash Memory Summit, Santa Clara, August 2015)

A Decade of NIC Evolution

| | 2003 | 2007 | 2013 |
|---|---|---|---|
| Throughput | 7Gbps aggregate | 11Gbps bidirectional | 55Gbps bidirectional |
| Host bus | PCI-X | PCIe Gen1 | PCIe Gen3 |
| Physical Functions | 1 | 1 | 8 |
| DMA Queues | 1 | 8 | 64K |
| Interrupt | Line | MSI | MSI-X |
| Logical NICs | 1 | 8 | 128 |
| Concurrency | Lock-based synchronization | Up to 8 cores | Lock-free user space I/O |

NVMe – Non-Volatile Memory Express

- Modern PCIe interface architecture
  - Benefit from experience with other high performance devices
  - Scale with exponential speed increases
- Standardized for enhanced plug-and-play
  - Register set
  - Feature set
  - Command set
- Parallels to high performance NIC interface
  - 64K x deep queues
  - MSI-X
  - Lock-free operation
  - Security
  - Data integrity protection
iWARP RDMA over Ethernet

[Diagram: two hosts, each with memory, a completion queue (CQ) and a Unified Wire RNIC running RDMA/TCP/IP/ETH, exchange payload and notifications as TCP/IP/Ethernet frames with end-to-end CRC, through any switches/routers, across LAN/data center or WAN/long haul.]

- Open, transparent and mature
  - IETF Standard (2007)
- Plug-and-play
  - Regular switches
  - Same network appliances
  - Works with DCB but NOT required
  - No need for lossless configuration
- No network restrictions
  - Architecture, scale, distance, RTT, link speeds
- Standard and proven TCP/IP
  - Built-in reliability: congestion control, flow control
  - Natively routable
  - Cost effective
- Hardware performance
  - TCP/IP in hardware
  - Exception processing in HW
  - Ultra low latency
  - High packet rate and bandwidth

NVMe over Fabrics

- Address PCI reach and scalability limits
- Extend native NVMe over large fabric
- iWARP RDMA over Ethernet first proof point
  - Intel NVMe drives
  - Chelsio 40GbE iWARP
- Compared to local disk
  - Less than 8usec additional RTT latency
  - Same IOPS performance

NVMe/Ethernet Fabric with iWARP

[Diagram labels: Windows clients (SMB3), Linux clients (iSCSI, NVMe/Fabrics), NVMe optimized client, Ethernet front-end fabric, ANY switch/router, NVMe/Fabrics appliance frontend with T5 Unified Adapters (iSCSI, SMBv3, NVMe/Fabrics), Ethernet back-end fabric, NVM storage, remote replication.]

T5 supports iSCSI, SMBDirect and NVMe/Fabrics offload simultaneously.
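The "less than 8usec additional RTT latency" and "same IOPS performance" figures can be related through Little's Law (requests in flight = IOPS x latency). The following is a rough sketch of that arithmetic, not a measurement: only the 8 usec bound comes from the slide, while `LOCAL_LATENCY_US` and `DEVICE_PEAK_IOPS` are hypothetical values chosen purely for illustration.

```python
# Sketch: relate added fabric latency to IOPS using Little's Law
# (requests in flight = IOPS x latency). Only ADDED_FABRIC_US comes from
# the slide; the other two constants are hypothetical placeholders.

ADDED_FABRIC_US = 8          # "less than 8usec additional RTT latency" (upper bound)
LOCAL_LATENCY_US = 100       # ASSUMPTION: illustrative local read latency
DEVICE_PEAK_IOPS = 400_000   # ASSUMPTION: illustrative device IOPS ceiling


def qd1_iops(latency_us: int) -> float:
    """IOPS with exactly one request in flight: one completion per round trip."""
    return 1_000_000 / latency_us


def depth_for_iops(target_iops: int, latency_us: int) -> int:
    """Smallest queue depth that can sustain target_iops at the given latency."""
    # Integer ceiling of target_iops * latency_us / 1e6, avoiding float rounding.
    return -(-target_iops * latency_us // 1_000_000)


if __name__ == "__main__":
    remote_latency_us = LOCAL_LATENCY_US + ADDED_FABRIC_US
    print(f"QD1 local : {qd1_iops(LOCAL_LATENCY_US):.0f} IOPS")
    print(f"QD1 remote: {qd1_iops(remote_latency_us):.0f} IOPS")
    print("Depth to reach device peak, local :",
          depth_for_iops(DEVICE_PEAK_IOPS, LOCAL_LATENCY_US))
    print("Depth to reach device peak, remote:",
          depth_for_iops(DEVICE_PEAK_IOPS, remote_latency_us))
```

Under these assumed numbers, the added latency is visible at queue depth 1, but a few extra outstanding requests are enough to reach the same device ceiling. That is one plausible reading of how remote access can show the same IOPS as a local disk.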
T5 Storage Protocol Support

[Stack diagram labels: SMB, NBD, NFS, RPC, Network Driver, SMB Direct, NDK, OS Block IO, NVMe/Fabrics and RBD, iSER, iSCSI, FCoE, RDMA Driver, iSCSI Driver, FCoE Driver, Lower Layer Driver, T10-DIX, NIC, RDMA Offload, iSCSI Offload, FCoE Offload, TCP Offload, T5 Hardware.]

NVMe/Fabrics Layering

[Layer diagram]

- Initiator: Linux File System, Linux Block IO, kernel NVMe Device Driver, NVMe/Fabrics, RDMA Verbs, RDMA Driver, T5 NIC (RDMA Offload, TCP Offload)
- Target: T5 NIC (RDMA Offload, TCP Offload), RDMA Driver, RDMA Verbs, kernel NVMe/Fabrics, NVMe Device Driver, NVMe Drive

RBD Layering

[Layer diagram]

- Initiator: Linux File System, Linux Block IO, kernel RDMA Block Driver, RDMA Verbs, RDMA Driver, T5 NIC (RDMA Offload, TCP Offload)
- Target: T5 NIC (RDMA Offload, TCP Offload), RDMA Driver, RDMA Verbs, kernel RDMA Block Driver, Linux Block IO, Block Device Driver, NVMe Drive, SAS Drive, ...
Generic RDMA Block Device (RBD)

- Open Source Chelsio Linux driver
  - Part of Chelsio's Unified Wire SW
  - Virtualizes block devices over an RDMA connection
- Target
  - Claims the physical device using blkdev_get_by_path()
  - Submits IOs from the Initiator via submit_bio()
- Initiator
  - Registers as a BIO device driver
  - Registers connected Target devices as a BIO device using add_disk()
- Current RBD Protocol Limits
  - Max RBD IO size is 128KB
  - 256 max outstanding IOs in flight
  - Initiator physical/logical sector size is 4KB
- Management via rbdctl command
  - Initiator provides character device command interface for adding/removing devices
  - Simple command, rbdctl, used to connect, login, and register the target devices
- Examples
  - Target
    - Load rbdt module
  - Initiator
    - Load rbdi module
    - Add a new target: `rbdctl -n -a <ipaddr> -d /dev/nvme0n1`
    - Remove an RBD device: `rbdctl -r -d /dev/rbdi0`
    - List connected devices: `rbdctl -l`

RBD vs. NBD Throughput – 40GbE

[Chart: throughput (Gbps) vs. I/O size (4k to 1M bytes); series: RBD_Read, NBD_Read, RBD_Write, NBD_Write.]

Test setup: Intel Xeon CPU E5-1660 v2 6-core processor clocked at 3.70GHz (HT enabled) with 64 GB of RAM, Chelsio T580-CR, RHEL 6.5 (Kernel 3.18.14), standard MTU of 1500B, using RAMdisks on target (4).

`fio --name=<test_type> --iodepth=32 --rw=<test_type> --size=800m --direct=1 --invalidate=1 --fsync_on_close=1 --norandommap --group_reporting --ioengine=libaio --numjobs=4 --bs=<block_size> --runtime=30 --time_based --filename=/dev/rbdi0`

RBD vs. NBD Latency – 40GbE

[Chart: latency (us) vs. I/O size (4k to 128k bytes); series: RBD_Read, NBD_Read, RBD_Write, NBD_Write.]

Test setup: Intel Xeon CPU E5-1660 v2 6-core processor clocked at 3.70GHz (HT enabled) with 64 GB of RAM, Chelsio T580-CR, RHEL 6.5 (Kernel 3.18.14), standard MTU of 1500B, using RAMdisk on target (1).

`fio --name=<test_type> --iodepth=1 --rw=<test_type> --size=800m --direct=1 --invalidate=1 --fsync_on_close=1 --norandommap --group_reporting --ioengine=libaio --numjobs=1 --bs=<block_size> --runtime=30 --time_based --filename=/dev/rbdi0`

SSD Latency Results

[Chart: Random Read Latency; latency (usec) vs. I/O size (4 to 256 KB); series: Local, RBD.]

Test setup:

- Supermicro MB, 16 cores - Intel(R) Xeon(R) CPU E5-2687W 0 @ 3.10GHz, 64GB memory
- Chelsio T580-CR, RHEL6.5, linux-3.18.14 kernel, standard MTU of 1500B, through Ethernet switch, NVMe SSD target
- fio-2.1.10, 1 thread, direct IO, 30 second runs, libaio IO engine, IO depth = 1, random read, write, and read-write

SSD Bandwidth Results

[Chart: Random Read Throughput (1 Thread); throughput (Gbps) vs. I/O size (4 to 256 KB); series: Local, RBD.]

Test setup:

- Supermicro MB, 16 cores - Intel(R) Xeon(R) CPU E5-2687W 0 @ 3.10GHz, 64GB memory
- Chelsio T580-CR, RHEL6.5, linux-3.18.14 kernel, standard MTU of 1500B, through Ethernet switch, SSD target
- fio-2.1.10, 1 thread, direct IO, 30 second runs, libaio IO engine, IO depth = 32, random read, write, and read-write

Local vs. RBD Bandwidth – 2 SSD

[Charts: two panels, Write and Read; throughput (Gbps) vs. I/O size (4 to 256 KB); series: Local, RBD.]

Test setup: Intel(R) Xeon(R) CPU E5-2687W 0 @ 3.10GHz, 2x6 cores, 64GB RAM, 2xNVMe SSD, Chelsio T580-CR, CentOS Linux release 7.1.1503, Kernel 3.17.8, standard MTU of 1500B, through 40Gb switch.

`fio --direct=1 --invalidate=1 --fsync_on_close=1 --norandommap --group_reporting --name=foo --bs=$2 --size=800m --numjobs=8 --ioengine=libaio --iodepth=16 --rw=randwrite --runtime=30 --time_based --filename_format=/dev/rbdi\$jobnum --directory=/`

RDMA over Fabrics Evolution

[Diagram: three configurations of CPU, memory, PCIe, RNIC and SSDs.]

- Yesterday
  - DMA to/from host memory
  - CPU handles command transfer
- Today
  - Peer-to-peer DMA through PCI bus
  - No host memory use
- Tomorrow
  - Network integrated NVMe storage
  - No CPU or memory involvement

Conclusions

- NVMe over Fabrics for scalable high performance and high efficiency fabrics that leverage disruptive solid-state storage
- Standard interfaces and middleware
  - Ease of use and widespread support
- iWARP RDMA ideal for NVMe/Fabrics
  - Familiar and proven foundation
  - Plug-and-play
  - Works with DCB but does not require it
    - No DCB tax and complexity
  - Scalability, reliability, routability
  - Any switches and any routers over any distance
  - Scales from 40 to 100Gbps and beyond
- NVMe/Fabrics devices with built-in processing power
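The fio invocations quoted for the RBD vs. NBD throughput and latency runs differ only in `--iodepth`, `--numjobs`, the test type, and the block size. As a hedged sketch of how such a sweep might be scripted, the helper below assembles the same flag set for each block size on the throughput chart's axis. The function names and the `randread` test type are illustrative assumptions (the slides leave `<test_type>` unspecified), and the script only builds command lines; it does not run fio.

```python
import shlex

# Block sizes from the throughput chart's x-axis (4k to 1M).
BLOCK_SIZES = ["4k", "8k", "16k", "32k", "64k", "128k", "256k", "512k", "1M"]


def fio_command(test_type, block_size, iodepth=32, numjobs=4,
                filename="/dev/rbdi0"):
    """Build the fio argument list quoted on the slides.

    Defaults (iodepth=32, numjobs=4) match the throughput run; the latency
    run on the slides uses iodepth=1 and numjobs=1.
    """
    return [
        "fio",
        f"--name={test_type}",
        f"--iodepth={iodepth}",
        f"--rw={test_type}",
        "--size=800m",
        "--direct=1",
        "--invalidate=1",
        "--fsync_on_close=1",
        "--norandommap",
        "--group_reporting",
        "--ioengine=libaio",
        f"--numjobs={numjobs}",
        f"--bs={block_size}",
        "--runtime=30",
        "--time_based",
        f"--filename={filename}",
    ]


def sweep(test_type, **kwargs):
    """One command per block size (hypothetical helper, not from the slides)."""
    return [fio_command(test_type, bs, **kwargs) for bs in BLOCK_SIZES]


if __name__ == "__main__":
    # ASSUMPTION: "randread" stands in for the unspecified <test_type>.
    for cmd in sweep("randread"):
        print(shlex.join(cmd))
```

Passing `iodepth=1, numjobs=1` to `sweep` yields the latency-run variant. Each printed line could then be executed on an initiator where `/dev/rbdi0` exists.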