DISTORTION-CLASS WEIGHTED ACOUSTIC MODELING FOR ROBUST SPEECH RECOGNITION UNDER GSM RPE-LTP CODING

Juan M. Huerta and Richard M. Stern

Carnegie Mellon University

Department of Electrical and Computer Engineering

Email: {juan, rms}@speech.cs.cmu.edu

separate the set of phonemes into cluster

their relative spectral distortion. In Sec

to weight two sets of acoustic mo

We present a method to reduce the effect a

ofmethod

full-rate

the

distortion

categories introduced in S

GSM RPE-LTP coding by combining two sets of acoustic

describe

the

results

of recognition experi

models during recognition, one set trained on GSMtechniques

in

Section

6.

distorted speech and the other trained on clean uncoded

ABSTRACT

speech. During recognition,

a posteriori

the

probabilities

THE RPE-LTP

CODEC AS A SOURCE

of an utterance are calculated as a sum of the 2.

posteriors

of

the individual models, weighted according to OF

theACOUSTIC DEGRADATION

distortion class each state in the model represents. We

The

full-rate

GSM codec decomposes the sp

analyze the origin of spectral distortion to

the

quantized

into

a

set

of

LAR coefficients and a shor

long-term residual introduced by the RPE-LTP codec and

signal

[1].

The

LAR coefficients are qu

discuss how this distortion varies according to phonetic

transmitted

to the decoder while the shortclass.

For the Research Management corpus,

the

proposed method reduces the degradation in segmented

frame-by- into subframes and coded by an

coder

In this section we discuss

frame phonetic recognition accuracy introduced by [3].

GSM

degradation

that

exist in the RPE-LTP code

coding by more than 20 percent.

Figures 1 and 2, a simplified version of

LTP codec and a simplified version of a r

1.INTRODUCTION

codec respectively.

Speech coding reduces the accuracy of Figure

speech1 depicts an ideal codec able to

recognition systems [4]. As speech recognition

signal that is identical to the original

application in cellular and mobile environments

Even become

though this codec would not achieve a

more ubiquitous, robust recognition in these

of conditions

the bit rate of the input sequence it h

becomes crucial. Even though the speech codec

is only

sources

and nature of the distortion of t

one of the several factors that contribute

to the

introduced

by the actual RPE-LTP codec.

degradation in recognition accuracy, it is necessary to

The in

ideal

RPE-LTP codec works as follow

understand and compensate for this degradation

order

sequence

(the short-term residual) enter

to achieve a system’s full potential. We focus

on x[n]

the

and GSM

is codec

compared to a predicted sequ

acoustic distortion introduced by the full-rate

reconstructedof short-term residual) prod

[1]. This distortion can be traced to the quantization

predictorandblock (the LTP section). The

the log-area ratio (LAR) and to the quantization

between

downsampling performed in the RPE-LTP process.

The these two signals is what the pred

unable

to predict, which we will refe

distortion introduced in the residual signal affects

“innovation

sequence”. In the absence of

recognition to a larger extent than the quantization that

acoustic

degradation,

our ideal codec wi

the LAR coefficients undergo [2].

generate a reconstructed time sequence

In Section 2 of this paper, we discuss the together

origin ofthe

theinnovation sequence and th

distortion in the RPE-LTP codec. We observe sequence.

that based This reconstructed sequence wil

on the “predictability” of the short-term residual

signal, time sequence

to the original

by definition.

x[n]

the RPE-LTP will be able to minimize the error

in the

reality,

the RPE-LTP coder, does not t

quantized long-term residual. This predictability

is later

necessary

information for the decoder t

shown to be related to phonetic characteristics

of

innovation the

sequence exactly.

subsampled

It sends and

signal. In Section 3 we show that the relative

spectral

quantized

information that will result in

distortion introduced in the quantized long-term

residual

innovation

sequence (called the reconstruc

tends to be concentrated around three levels,

and that that

this will approximate the origi

residual)

amount of relative spectral distortion can

be loosely

sequence

to the extent possible.

The

related to the relative degradation introduced

to the frame

degradation

in the reconstructed signal wi

recognition accuracy by GSM coding. In Section

we

the4 energy

of the innovation signal which

on how good the predictor module (RPE)

We observ

“follow” or predict the next subframe ofdistortion

the time observed in a frame.

sequence based on previous reconstructed subframes.

counts are roughly clustered in 3 regions:

and high distortion, separated by breakpoint

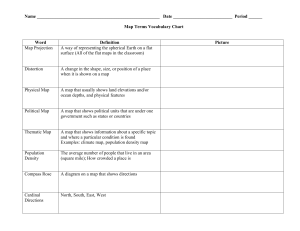

Input

33 and 67 percent. We also observe that the

sequence

the frames suffer only a small amount of dist

Innovation

most of the time the LTP section of the code

do a reasonably good job of predicting the

residual signal.

Predicted sequence

Predictor

.

6

Reconstructed

sequence

5

Input

sequence

Innovation

Subsampling

and Coding

Log Counts

4 coder.

Figure 1. Simplified block diagram of an ideal RPE-LTP

Reconstructed

Innovation

2

Predicted sequence

Predictor

3

1

0 0

Reconstructed

sequence

0.2

0.4

0.6

0.8

Relative Log Spectral Distortion

1

Figure 3.

Histogram of the log distribution o

spectral

Figure 2. Simplified block diagram of an actual

RPE-LTPdistortion introduced to a large samp

coder.

1.3.Impact of relative log spectral distor

on phonetic recognition

Percentage Increase in Frame Error Rate

3.THE RPE-LTP INDUCED

SPECTRAL DISTORTION

140

3.1.Relative

log spectral distortion introduced

120

by the RPE-LTP coder

We use the relative log spectral distance 100below to

measure the dissimilarity between the reconstructed and

80

the original innovation sequence.

ω ) is theE( power

spectrum of the innovation sequence

ω ) isand

theER ( 60

power spectrum of the quantized innovation sequence.

We integrate the absolute value of the difference

between

40

both log power spectra and normalize it by the integral

of the log of the power spectrum of the 20original

innovation signal.

π

L1 = ∫0 log( E(ω ) − log( E R (ω ))) dω

π

∫0 log( E(ω )) dω

0

0

0.17

0.33

0.5

0.67

0.88

1.0

Relative Spectral Distortion

Figure 4. Relative degradation in frame-ba

phonetic accuracy as a function of the rel

1.2.Distribution of the relative log spectral

spectral distortion introduced by the RTEdistortion

coder.

We computed the relative log spectral distortion

order to analyze the relation that exi

introduced by the RPE-LTP codec to the RM In

corpus.

degradation

in recognition performance and t

Figure 3 is a histogram that shows the log of

the relative

of

relative

log

spectral distortion introduce

frequency of observing various levels of log spectral

LTP

block,

we

performed

two phonetic reco

distortion. The horizontal axis is the amount of relative

experiments: one training and testing

i.e

.,using

A plot of the normalized histograms of the

non-GSM coded) speech data and a second experiment

below. These five classes exhibit

using speech that underwent GSM coding. We shown

computed

pattern

of distortion in each of the three

the frame accuracy for each amount of relative

spectral

defined

in Section 3.2. Classes 1 and 3

distortion both for when GSM was present and

when it

percentage of counts in the high distort

was absent. Figure 4 depicts how much the frame-based

phonetic recognition error rate increased presence

due to in

thethe middle area, while Classes

introduction of the GSM coding as a function

the in the middle area. Class 5

more of

counts

relative spectral distortion.

We observe that,

almostgenerally

completely concentrated in the lo

speaking, the phonemes that produced a greater

amount

region.

of distortion due to GSM coding also suffered greater

amounts of frame error rate.

0.4

Class 1

0.2

4.RELATING RELATIVE LOG SPECTRAL

DISTORSION PATTERNS TO PHONETIC

CLASSES

0

0

1

0.4

Class 2

0.2

0

0

1

0.4

4.1.Clustering Phonetic-classes using the

0.2

relative log-spectral distortion

0

Class 3

0

1

We grouped the 52 phonetic units used by our 0.4recognition

Class 4

0.2

system into phonetic clusters by incrementally

clustering

the closest histograms of the counts of the0 relative log

0

1

spectral distortion for the frames associated

with each

0.4

Class 5

phonetic unit in the corpus. The distance 0.2between each

pair of histograms was calculated using normalized-area

0

0

0.4

0.6

0.8

1

histograms. The clustering yielded five classes,

as 0.2shown

Relative Log Spectral Distortion

in the table below.

Figure 5.Histograms of the relative spectral dist

five phonetic classes of Table 1.
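The distortion measure of Section 3.1 and the histogram clustering of Section 4.1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure actually used in the experiments: the integrals over [0, π] are approximated by sums over FFT bins, the distance between normalized-area histograms is assumed to be the sum of absolute bin differences (the text does not name the metric), and merged clusters are assumed to pool their counts. The function names `relative_log_spectral_distortion` and `cluster_histograms` are hypothetical.

```python
import numpy as np

def relative_log_spectral_distortion(innovation, reconstructed, n_fft=256, eps=1e-10):
    """Discrete approximation of L1: sum |log E - log E_R| / sum log E.

    Assumes the signal scale is such that the log power spectrum of the
    original innovation is positive, so the normalizer is positive.
    """
    E = np.abs(np.fft.rfft(innovation, n_fft)) ** 2 + eps
    E_r = np.abs(np.fft.rfft(reconstructed, n_fft)) ** 2 + eps
    numerator = np.sum(np.abs(np.log(E) - np.log(E_r)))
    denominator = np.sum(np.log(E))
    return numerator / denominator

def cluster_histograms(counts_by_phone, n_clusters):
    """Greedy agglomerative clustering of per-phone distortion histograms.

    counts_by_phone maps a phone label to its histogram counts (non-empty).
    At each step the two clusters whose normalized-area histograms are
    closest (assumed L1 distance) are merged by pooling their counts.
    """
    clusters = [([phone], np.asarray(c, dtype=float))
                for phone, c in counts_by_phone.items()]
    while len(clusters) > n_clusters:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                h_a = clusters[a][1] / clusters[a][1].sum()
                h_b = clusters[b][1] / clusters[b][1].sum()
                dist = np.abs(h_a - h_b).sum()
                if best is None or dist < best[0]:
                    best = (dist, a, b)
        _, a, b = best
        merged = (clusters[a][0] + clusters[b][0], clusters[a][1] + clusters[b][1])
        clusters = [c for i, c in enumerate(clusters) if i not in (a, b)] + [merged]
    return [sorted(members) for members, _ in clusters]

# Toy usage with made-up counts in three distortion regions (low, medium, high).
toy_counts = {
    "AA": [90, 8, 2],
    "IY": [85, 10, 5],
    "S": [20, 60, 20],
    "Z": [30, 50, 20],
    "PD": [30, 10, 60],
}
toy_classes = cluster_histograms(toy_counts, n_clusters=3)
```

The toy counts are invented for illustration only; with real data each phone would contribute a histogram of per-frame distortion values over many bins, and the number of clusters would be chosen by inspection, as with the five classes reported here.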

| Class | Phonemes in class | Frame Acc. | Frame Acc., GSM | % Degradation |
|---|---|---|---|---|
| 1 | PD KD | 46.76% | 44.10% | 5% |
| 2 | IX B BD DD DH M N JH NG V W Y DX ZH AX SH | 68.28% | 58.34% | 31.34% |
| 3 | D G K P T TD CH | 70.88% | 65.94% | 16.96% |
| 4 | F HH S Z TH TS | 72.39% | 57.63% | 53.43% |
| 5 | IY OW OY UW L R AA AE AH AXR EH AO IH ER AW AY UH EY | 74.37% | 67.90% | 25.24% |

Table 1. Phonetic classes generated by automatically clustering the phonemes' distortion histograms.

Classes 1, 3, and 4 encompass the majority of the consonants, Class 4 being mostly fricatives. Class 2 includes the remaining consonants and a couple of vowels, while Class 5 encompasses the vowels, diphthongs and semivowels. Silence units were omitted from this analysis. We can see that even though the classes do not exactly correspond to phonetic classes, they achieve a reasonable partition between broad classes of sounds. The pattern of distributions of relative log spectral distortion introduced by the RPE-LTP is strongly related to phonetic properties of the signal.

5. ACOUSTIC MODEL WEIGHTING

Acoustic modeling for HMM-based speech recognition commonly makes use of mixtures of Gaussian distributions representing a set of tied states. The probability that an observed vector has been produced by a certain state is thus expressed by

$$b_j(o_t) = \sum_{k=1}^{M_j} c[j,k] \, N(o_t, \mu_{jk}, C_{jk})$$

The term c[j,k] expresses the prior probability of the kth Gaussian component of the jth state of the HMM. For a given state j, the sum of c[j,k] over all k is equal to 1.

We can consider the amount of distortion that a phonetic class undergoes while evaluating the probability using several models that [...] distortion regions. We can express this by introducing a function f that weights the probability of the pth model depending on the distortion d_t of the observed frame t, and on j, which indicates the model representing a given phonetic [...]. The function f also depends on the prior probability of the model, c_p[j,k]. This function can also be a weighted version of c_p[j,k]. The resulting posterior probability becomes

$$b_{j,d}(o_t) = \sum_{p=1}^{2} \sum_{k=1}^{M_j} f(c_p[j,k], d_t, j) \, N_p(o_t, \mu_{jk}, C_{jk})$$

The function f can also be dependent on the distortion class the model represents. This way, f will give more weight to the clean models for states that model phonemes that suffer small average GSM distortion. Alternatively, one can make the function f depend on knowledge of the instantaneous relative distortion d_t of each frame if this information is available. This function should give more weight to the distorted models when the relative frame distortion is greater.

6. SPEECH RECOGNITION EXPERIMENTS

Phonetic recognition experiments were performed using the Resource Management corpus and the SPHINX-3 system. Our basic acoustic models consisted of 2500 tied states, each modeled by a mixture of two Gaussians. One set of models was trained on clean speech while the other set of models was trained with speech that underwent GSM coding. We obtained our reference transcription by performing automatic forced alignment on the 1600 test utterances using their pronunciations. We computed both the phone accuracy and the frame accuracy. Phone accuracy is calculated considering insertions and deletions of phones in the hypothesis. We evaluated the frame accuracy by making a frame-by-frame comparison of the output of the phonetic recognizer with the alignment. Table 2 summarizes recognition accuracy for clean and GSM speech, using clean and GSM models. We also show the results when models are trained using both GSM-coded speech and clean speech (multi-style trained models).

| Testing data | Training data | Phone Accuracy | Frame Accuracy |
|---|---|---|---|
| Clean | Clean | 63.3% | 69.86% |
| GSM | Clean | 55.7% | 61.20% |
| GSM | GSM | 60.1% | 64.50% |
| GSM | GSM+Clean (multi-style) | 60.1% | 64.64% |

Table 2. Baseline frame accuracy and phoneme recognition accuracy in Resource Management.

From Table 2 we can see that having GSM codec distortion during training and recognition reduces frame accuracy by 5.36 points relative to clean training and testing (from 69.86% to 64.50%). Recognition accuracy can be improved somewhat by using multi-style trained models.

6.1. Experiments using weighted acoustic models

We performed recognition experiments using weighted models by considering different mixing factors for two acoustic models, trained on GSM-coded and clean speech. Table 3 shows results weighting both models equally. We also made these weights dependent on the phonetic class, using three automatically clustered phonetic classes. While there is a modest advantage in weighting the models differently, either of these two weighting schemes provides better results than using multi-style trained models. The 3-class optimized weights reduce the degradation in frame error rate introduced by GSM coding by 27%, from 5.36% to 3.87%. The weighting improves frame recognition accuracy approximately equally for all three phonetic classes.

| Weighted modeling | Phone Accuracy | Frame Accuracy |
|---|---|---|
| Equal weights | 61.2% | 65.77% |
| 3-class optimized weights | 61.2% | 65.99% |

Table 3. Frame accuracy obtained when using equally weighted models and when using phonetic-class based weighting.

7. CONCLUSIONS

In this paper we examined the distortion introduced by the RPE-LTP GSM codec to the short-term residual signal. We related this distortion to the relative spectral distortion produced by the quantized long-term residual. We observed that this distortion influences recognition performance, and that it can be related to phonetic properties of the speech signal. We introduced a method to mix two acoustic models that had been trained on clean and distorted speech by taking into consideration the amount of distortion suffered by the phonetic classes.

8. REFERENCES

[1] European Telecommunication Standards Institute, “European digital telecommunications system (Phase [...]); [...] rate speech processing functions (GSM 06.01)”, 1994.

[2] Huerta, J. M. and Stern, R. M., “Speech Recognition from GSM Codec Parameters”, Proc. ICSLP-98, 1998.

[3] Kroon, P., Deprettere, E. F., and Sluyter, R. F., “Regular-Pulse Excitation - A Novel Approach to Effective and Efficient Multi-pulse Coding of Speech”, IEEE Trans. on Acoustics, Speech and Signal Processing, 34:1054-1063, 1986.

[4] Lilly, B. T. and Paliwal, K. K., “Effect of Speech Coders on Speech Recognition Performance”, Proc. ICSLP-96, 1996.
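As a supplementary illustration of the weighting in Section 5, the sketch below evaluates a state's weighted output probability from a clean-trained and a GSM-trained mixture. It is a hedged example, not the SPHINX-3 implementation: it assumes diagonal covariances, takes f to be a class-dependent weight multiplied by the prior c_p[j,k] (the "weighted version" mentioned in Section 5), and uses hypothetical names (`diag_gaussian`, `weighted_state_likelihood`, and the dictionary layout of each model).

```python
import numpy as np

def diag_gaussian(o, mean, var):
    """Density of a diagonal-covariance Gaussian evaluated at observation o."""
    o, mean, var = (np.asarray(a, dtype=float) for a in (o, mean, var))
    norm = np.prod(2.0 * np.pi * var) ** -0.5
    return norm * np.exp(-0.5 * np.sum((o - mean) ** 2 / var))

def weighted_state_likelihood(o, models, class_weights, distortion_class):
    """Sum over models p and components k of w_p * c_p[j,k] * N_p(o).

    models: list of per-state mixtures, one per training condition
            (assumed order: clean, GSM), each a dict with keys
            "c" (priors), "mean" and "var" (one entry per component).
    class_weights: maps a distortion class to one weight per model;
            the weights are assumed to sum to 1.
    """
    total = 0.0
    for p, model in enumerate(models):
        w = class_weights[distortion_class][p]
        for c, mean, var in zip(model["c"], model["mean"], model["var"]):
            total += w * c * diag_gaussian(o, mean, var)
    return total

# Toy one-dimensional state: the clean model is centered at 0, the GSM model at 1.
clean_state = {"c": [1.0], "mean": [[0.0]], "var": [[1.0]]}
gsm_state = {"c": [1.0], "mean": [[1.0]], "var": [[1.0]]}
toy_weights = {"low": (1.0, 0.0), "high": (0.5, 0.5)}
likelihood_low = weighted_state_likelihood([0.0], [clean_state, gsm_state], toy_weights, "low")
likelihood_high = weighted_state_likelihood([0.0], [clean_state, gsm_state], toy_weights, "high")
```

With the weights (0.5, 0.5) for every class this reduces to the equal-weight condition of Table 3; class-dependent weights correspond to the optimized condition, although the weight values used in the experiments are not given in the text and the numbers above are placeholders.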