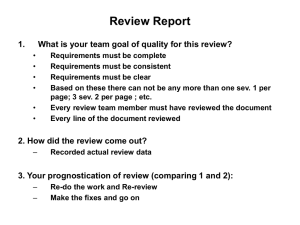


Quality Assurance Test Plan Responses

8.14.14

Dear ISAB members,

Thank you for taking the time to review the BikeHike Mobile App Software Quality Assurance Plan. Please find responses to your questions below.

The Cary Bike and Hike mobile application project budget was contractually established for under $30,000, excluding long-term technical support. This was negotiated after a Town solicitation and vendor proposal, price review, vendor vetting, and procurement process. This budget is generally insufficient for the larger-scale simultaneous-user stress testing typically conducted on mission-critical application systems, but it is believed that the vendor's technical solution, software development approaches, risk management philosophy, and application fault tolerances will be sufficient to avoid most potential problems associated with application release.

Robert Campbell's comments (from email):

1) No indication on scale testing, what is the scale target or means to test that.

The app data will reside on Microsoft Windows Azure cloud services with server capabilities for high traffic demand. The app is engineered to accommodate approximately 300 simultaneous active users for long periods of time with average response times of 3-4 seconds, and peak loads of 600 active users for short durations with average response times of 8-9 seconds.

2) No exit criteria given. What is the criteria that the app will be considered released and generally available?

The app's testing exit criteria can be summarized as follows:

1. All planned test scripts have been executed and 98% of test cases have passed,
2. All test cases marked n/a will be reviewed and approved prior to testing completion,
3. No priority or severity level 1-3 bugs open or pending; any pri/sev 4 defects can be deferred only if reviewed and approved by the main project team acceptance test users,
4. All known defects are closed, deferred and approved by the main Project Manager,
5. A Test closure report will be provided to the Cary PM for review and sign-off,
6. Apple completes its own mobile application QA review of the application and code before releasing any app to the public via their iTunes app store.

Fillmore Bowen's comments (from minutes):

- What is considered an acceptable number of errors? No numbers included in test plan that are related to what's quality and what's not quality.

The Cary project team and vendor both believe that the only acceptable number of errors is zero, because of the relatively small number of mobile application features, functionalities and potential user scenarios.

- Disappointed in the generic nature of the test plan. The test plan has no value when testing unique applications.

- What's the definition of an error? Examples: sev 1: Application dies every time the app is opened; sev 2: Third time the app is used, the system hangs; sev 3: not working the way the user would like, but it works; sev 4: future features in next release.

| Level | Severity Term | Severity Description |
|---|---|---|
| 1 | Blocker/Critical | Prevents function from being used, no work-around, blocking user progress. Examples include application stability problems, performance aspects not meeting software requirements, memory corruptions, and non-functioning or incorrectly implemented features. |
| 2 | Major | Prevents function from being used, but a work-around is possible. Examples include temporary (recoverable) error conditions and erroneous status indicators. |
| 3 | Normal | A problem making a function difficult to use but no special work-around is required. Examples include text or format errors, poor user interface design, minor rendering errors (browser), or malformed display icons or text. |
| 4 | Minor | A problem not affecting the actual function, but the behavior is not natural. Examples include requirement clarifications, test case clarifications, issues regarding documentation, user interface improvement suggestions, and industrial design or mechanical improvement suggestions. |
| 5 | Trivial | A problem not affecting the actual function, including typos, grammar, color mismatches, etc. |

- How do we track errors?

The vendor uses Bugzilla, a Web-based general-purpose bug tracker and testing tool originally developed and used by the Mozilla project, creators of the Firefox browser. The Town project team acceptance test users are logging their bugs and other requests in Microsoft Excel and Word.

- How many errors do we expect?

Zero.

- All the sev 1 and 2 should be closed before releasing. A determined number of Sev 3 and 4 could be allowed (i.e. allow 5 - sev 3 and allow 20 - sev 4). Is there any kind of calculation on how many errors we can find before putting the app in production?

The app's testing exit criteria can be summarized as follows:

1. All planned test scripts have been executed and 98% of test cases have passed,
2. All test cases marked n/a will be reviewed and approved prior to testing completion,
3. No priority or severity level 1-3 bugs open or pending; any pri/sev 4 defects can be deferred only if reviewed and approved by the main project team acceptance test users,
4. All known defects are closed, deferred and approved by the main Project Manager,
5. A Test closure report will be provided to the Cary PM for review and sign-off,
6. Apple completes its own mobile application QA review of the application and code before releasing any app to the public via their iTunes app store.
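
For illustration only, the measurable exit criteria above (items 1, 3 and 4) could be expressed as a small check. This is a minimal sketch, not the vendor's tooling: the names `Severity`, `Defect` and `unmet_exit_criteria`, and the status labels, are hypothetical. It assumes the 98% pass rate is computed over executed test cases, that severity 1-3 defects must be closed rather than deferred, and that severity 5 defects are handled like severity 4. The sign-off steps (items 2, 5 and 6) are procedural and are not modeled.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Severity levels as listed in the severity table above."""
    BLOCKER_CRITICAL = 1
    MAJOR = 2
    NORMAL = 3
    MINOR = 4
    TRIVIAL = 5


@dataclass
class Defect:
    severity: Severity
    status: str                      # hypothetical labels: "open", "pending", "deferred", "closed"
    deferral_approved: bool = False  # True once a deferral has been reviewed and approved


def unmet_exit_criteria(planned, executed, passed, defects):
    """Return the reasons release is blocked; an empty list means items 1, 3 and 4 hold."""
    reasons = []
    if executed < planned:
        reasons.append("not all planned test scripts executed")
    # Integer comparison avoids float rounding: passed / executed >= 98%.
    if executed == 0 or passed * 100 < 98 * executed:
        reasons.append("pass rate below 98%")
    for d in defects:
        if d.status == "closed":
            continue
        if d.severity <= Severity.NORMAL:
            # Assumption: severity 1-3 defects may not be deferred.
            reasons.append(f"severity {int(d.severity)} defect not closed")
        elif d.status != "deferred" or not d.deferral_approved:
            reasons.append(f"severity {int(d.severity)} defect open or deferred without approval")
    return reasons
```

For example, a run with 100 planned and executed cases, 98 passes, and one approved severity 4 deferral yields an empty list, while a single open severity 2 defect blocks release regardless of the pass rate.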