8. Linear least-squares

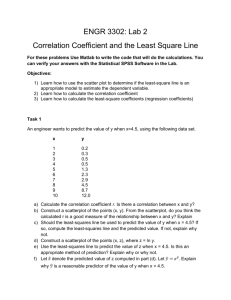

EE103 (Fall 2011-12)

8. Linear least-squares

• definition
• examples and applications
• solution of a least-squares problem, normal equations

8-1

Definition

overdetermined linear equations

$$
Ax = b \qquad (A \text{ is } m \times n \text{ with } m > n)
$$

if $b \notin \mathrm{range}(A)$, cannot solve for $x$

least-squares formulation

$$
\text{minimize} \quad \|Ax - b\| = \left( \sum_{i=1}^{m} \Big( \sum_{j=1}^{n} a_{ij} x_j - b_i \Big)^2 \right)^{1/2}
$$

• $r = Ax - b$ is called the residual or error
• $x$ with smallest residual norm $\|r\|$ is called the least-squares solution
• equivalent to minimizing $\|Ax - b\|^2$

Linear least-squares 8-2

Example

$$
A = \begin{bmatrix} 2 & 0 \\ -1 & 1 \\ 0 & 2 \end{bmatrix}, \qquad
b = \begin{bmatrix} 1 \\ 0 \\ -1 \end{bmatrix}
$$

least-squares solution

$$
\text{minimize} \quad (2x_1 - 1)^2 + (-x_1 + x_2)^2 + (2x_2 + 1)^2
$$

to find optimal $x_1$, $x_2$, set derivatives w.r.t. $x_1$ and $x_2$ equal to zero:

$$
10x_1 - 2x_2 - 4 = 0, \qquad -2x_1 + 10x_2 + 4 = 0
$$

solution $x_1 = 1/3$, $x_2 = -1/3$

(much more on practical algorithms for LS problems later)

8-3

(figure: four surface plots over $(x_1, x_2)$: $r_1^2 = (2x_1 - 1)^2$, $r_2^2 = (-x_1 + x_2)^2$, $r_3^2 = (2x_2 + 1)^2$, and $r_1^2 + r_2^2 + r_3^2$)

8-4

Outline

• definition
• examples and applications
• solution of a least-squares problem, normal equations

Data fitting

fit a function

$$
g(t) = x_1 g_1(t) + x_2 g_2(t) + \cdots + x_n g_n(t)
$$

to data $(t_1, y_1), \ldots, (t_m, y_m)$, i.e., choose coefficients $x_1, \ldots, x_n$ so that

$$
g(t_1) \approx y_1, \quad g(t_2) \approx y_2, \quad \ldots, \quad g(t_m) \approx y_m
$$

• $g_i(t) : \mathbf{R} \to \mathbf{R}$ are given functions (basis functions)
• problem variables: the coefficients $x_1, x_2, \ldots, x_n$
• usually $m \gg n$, hence no exact solution with $g(t_i) = y_i$ for all $i$
• applications: developing simple, approximate model of observed data

8-5

Least-squares data fitting

compute $x$ by minimizing

$$
\sum_{i=1}^{m} (g(t_i) - y_i)^2 = \sum_{i=1}^{m} \big( x_1 g_1(t_i) + x_2 g_2(t_i) + \cdots + x_n g_n(t_i) - y_i \big)^2
$$

in matrix notation: minimize $\|Ax - b\|^2$ where

$$
A = \begin{bmatrix}
g_1(t_1) & g_2(t_1) & g_3(t_1) & \cdots & g_n(t_1) \\
g_1(t_2) & g_2(t_2) & g_3(t_2) & \cdots & g_n(t_2) \\
\vdots & \vdots & \vdots & & \vdots \\
g_1(t_m) & g_2(t_m) & g_3(t_m) & \cdots & g_n(t_m)
\end{bmatrix}, \qquad
b = \begin{bmatrix} y_1 \\ y_2 \\ \vdots \\ y_m \end{bmatrix}
$$

8-6

Example: data fitting with polynomials

$$
g(t) = x_1 + x_2 t + x_3 t^2 + \cdots + x_n t^{n-1}
$$

basis functions are $g_k(t) = t^{k-1}$, $k = 1, \ldots, n$

$$
A = \begin{bmatrix}
1 & t_1 & t_1^2 & \cdots & t_1^{n-1} \\
1 & t_2 & t_2^2 & \cdots & t_2^{n-1} \\
\vdots & \vdots & \vdots & & \vdots \\
1 & t_m & t_m^2 & \cdots & t_m^{n-1}
\end{bmatrix}, \qquad
b = \begin{bmatrix} y_1 \\ y_2 \\ \vdots \\ y_m \end{bmatrix}
$$

interpolation ($m = n$): can satisfy $g(t_i) = y_i$ exactly by solving $Ax = b$

approximation ($m > n$): make error small by minimizing $\|Ax - b\|$

8-7

example. fit a polynomial to $f(t) = 1/(1 + 25t^2)$ on $[-1, 1]$

• pick $m = n$ points $t_i$ in $[-1, 1]$, and calculate $y_i = 1/(1 + 25t_i^2)$
• interpolate by solving $Ax = b$

(figure: two panels, $n = 5$ and $n = 15$; dashed line: $f$; solid line: polynomial $g$; circles: the points $(t_i, y_i)$)

increasing $n$ does not improve the overall quality of the fit

8-8

same example by approximation

• pick $m = 50$ points $t_i$ in $[-1, 1]$
• fit polynomial by minimizing $\|Ax - b\|$

(figure: two panels, $n = 5$ and $n = 15$; dashed line: $f$; solid line: polynomial $g$; circles: the points $(t_i, y_i)$)

much better fit overall

8-9

Least-squares estimation

$$
y = Ax + w
$$

• $x$ is what we want to estimate or reconstruct
• $y$ is our measurement(s)
• $w$ is an unknown noise or measurement error (assumed small)
• $i$th row of $A$ characterizes $i$th sensor or $i$th measurement

least-squares estimation

choose as estimate the vector $\hat{x}$ that minimizes

$$
\|A\hat{x} - y\|
$$

i.e., minimize the deviation between what we actually observed ($y$), and what we would observe if $x = \hat{x}$ and there were no noise ($w = 0$)

8-10

Navigation by range measurements

find position $(u, v)$ in a plane from distances to beacons at positions $(p_i, q_i)$

(figure: unknown position $(u, v)$ and four beacons $(p_1, q_1), \ldots, (p_4, q_4)$ at distances $\rho_1, \ldots, \rho_4$)

four nonlinear equations in two variables $u$, $v$:

$$
\sqrt{(u - p_i)^2 + (v - q_i)^2} = \rho_i \quad \text{for } i = 1, 2, 3, 4
$$

$\rho_i$ is the measured distance from unknown position $(u, v)$ to beacon $i$

8-11

linearized distance function:
assume $u = u_0 + \Delta u$, $v = v_0 + \Delta v$ where

• $u_0$, $v_0$ are known (e.g., position a short time ago)
• $\Delta u$, $\Delta v$ are small (compared to $\rho_i$'s)

$$
\sqrt{(u_0 + \Delta u - p_i)^2 + (v_0 + \Delta v - q_i)^2}
\approx \sqrt{(u_0 - p_i)^2 + (v_0 - q_i)^2}
+ \frac{(u_0 - p_i)\Delta u + (v_0 - q_i)\Delta v}{\sqrt{(u_0 - p_i)^2 + (v_0 - q_i)^2}}
$$

gives four linear equations in the variables $\Delta u$, $\Delta v$:

$$
\frac{(u_0 - p_i)\Delta u + (v_0 - q_i)\Delta v}{\sqrt{(u_0 - p_i)^2 + (v_0 - q_i)^2}}
\approx \rho_i - \sqrt{(u_0 - p_i)^2 + (v_0 - q_i)^2}
\quad \text{for } i = 1, 2, 3, 4
$$

8-12

linearized equations

$$
Ax \approx b
$$

where $x = (\Delta u, \Delta v)$ and $A$ is $4 \times 2$ with

$$
b_i = \rho_i - \sqrt{(u_0 - p_i)^2 + (v_0 - q_i)^2}
$$

$$
a_{i1} = \frac{u_0 - p_i}{\sqrt{(u_0 - p_i)^2 + (v_0 - q_i)^2}}, \qquad
a_{i2} = \frac{v_0 - q_i}{\sqrt{(u_0 - p_i)^2 + (v_0 - q_i)^2}}
$$

• due to linearization and measurement error, we do not expect an exact solution ($Ax = b$)
• we can try to find $\Delta u$ and $\Delta v$ that 'almost' satisfy the equations

8-13

numerical example

• beacons at positions $(10, 0)$, $(-10, 2)$, $(3, 9)$, $(10, 10)$
• measured distances $\rho = (8.22, 11.9, 7.08, 11.33)$
• (unknown) actual position is $(2, 2)$

linearized range equations (linearized around $(u_0, v_0) = (0, 0)$)

$$
\begin{bmatrix}
-1.00 & 0.00 \\
0.98 & -0.20 \\
-0.32 & -0.95 \\
-0.71 & -0.71
\end{bmatrix}
\begin{bmatrix} \Delta u \\ \Delta v \end{bmatrix}
\approx
\begin{bmatrix} -1.77 \\ 1.72 \\ -2.41 \\ -2.81 \end{bmatrix}
$$

least-squares solution: $(\Delta u, \Delta v) = (1.97, 1.90)$ (norm of error is 0.10)

8-14

Least-squares system identification

measure input $u(t)$ and output $y(t)$ for $t = 0, \ldots, N$ of an unknown system

(figure: block diagram, input $u(t)$ → unknown system → output $y(t)$)

example ($N = 70$):

(figure: plots of $u(t)$ and $y(t)$ versus $t$)

system identification problem: find reasonable model for system based on measured I/O data $u$, $y$

8-15

moving average model

$$
y_{\mathrm{model}}(t) = h_0 u(t) + h_1 u(t-1) + h_2 u(t-2) + \cdots + h_n u(t-n)
$$

where $y_{\mathrm{model}}(t)$ is the model output

• a simple and widely used model
• predicted output is a linear combination of current and $n$ previous inputs
• $h_0, \ldots, h_n$ are parameters of the model
• called a moving average (MA) model with $n$ delays

least-squares identification: choose the model that minimizes the error

$$
E = \left( \sum_{t=n}^{N} \big( y_{\mathrm{model}}(t) - y(t) \big)^2 \right)^{1/2}
$$

8-16

formulation as a linear least-squares problem:

$$
E = \left( \sum_{t=n}^{N} \big( h_0 u(t) + h_1 u(t-1) + \cdots + h_n u(t-n) - y(t) \big)^2 \right)^{1/2} = \|Ax - b\|
$$

$$
A = \begin{bmatrix}
u(n) & u(n-1) & u(n-2) & \cdots & u(0) \\
u(n+1) & u(n) & u(n-1) & \cdots & u(1) \\
u(n+2) & u(n+1) & u(n) & \cdots & u(2) \\
\vdots & \vdots & \vdots & & \vdots \\
u(N) & u(N-1) & u(N-2) & \cdots & u(N-n)
\end{bmatrix}
$$

$$
x = \begin{bmatrix} h_0 \\ h_1 \\ h_2 \\ \vdots \\ h_n \end{bmatrix}, \qquad
b = \begin{bmatrix} y(n) \\ y(n+1) \\ y(n+2) \\ \vdots \\ y(N) \end{bmatrix}
$$

8-17

example (I/O data of page 8-15) with $n = 7$: least-squares solution is

$$
\begin{array}{ll}
h_0 = 0.0240, & h_4 = 0.2425, \\
h_1 = 0.2819, & h_5 = 0.4873, \\
h_2 = 0.4176, & h_6 = 0.2084, \\
h_3 = 0.3536, & h_7 = 0.4412
\end{array}
$$

(figure: outputs versus $t$; solid: $y(t)$, actual output; dashed: $y_{\mathrm{model}}(t)$)

8-18

model order selection: how large should $n$ be?

(figure: relative error $E/\|y\|$ versus $n$)

• suggests using largest possible $n$ for smallest error
• much more important question: how good is the model at predicting new data (i.e., not used to calculate the model)?

8-19

model validation: test model on a new data set (from the same system)

(figure: plots of validation input $\bar{u}(t)$ and output $\bar{y}(t)$ versus $t$)

(figure: relative prediction error versus $n$, one curve for validation data and one for modeling data)

• for $n$ too large the predictive ability of the model becomes worse!
• validation data suggest $n = 10$

8-20

for $n = 50$ the actual and predicted outputs on system identification and model validation data are:

(figure: two panels versus $t$; left: I/O set used to compute model, solid: $y(t)$, dashed: $y_{\mathrm{model}}(t)$; right: model validation I/O set, solid: $\bar{y}(t)$, dashed: $\bar{y}_{\mathrm{model}}(t)$)

loss of predictive ability when $n$ is too large is called overfitting or overmodeling

8-21

Outline

• definition
• examples and applications
• solution of a least-squares problem, normal equations

Geometric interpretation of a LS problem

$$
\text{minimize} \quad \|Ax - b\|^2
$$

$A$ is $m \times n$ with columns $a_1, \ldots, a_n$

• $\|Ax - b\|$ is the distance of $b$ to the vector $Ax = x_1 a_1 + x_2 a_2 + \cdots + x_n a_n$
• solution $x_{\mathrm{ls}}$ gives the linear combination of the columns of $A$ closest to $b$
• $Ax_{\mathrm{ls}}$ is the projection of $b$ on the range of $A$

8-22

example

$$
A = \begin{bmatrix} 1 & -1 \\ 1 & 2 \\ 0 & 0 \end{bmatrix}, \qquad
b = \begin{bmatrix} 1 \\ 4 \\ 2 \end{bmatrix}
$$

(figure: vectors $a_1$, $a_2$, $b$ and the projection $Ax_{\mathrm{ls}} = 2a_1 + a_2$)

least-squares solution $x_{\mathrm{ls}}$

$$
Ax_{\mathrm{ls}} = \begin{bmatrix} 1 \\ 4 \\ 0 \end{bmatrix}, \qquad
x_{\mathrm{ls}} = \begin{bmatrix} 2 \\ 1 \end{bmatrix}
$$

8-23

The solution of a least-squares problem

if $A$ is left-invertible, then

$$
x_{\mathrm{ls}} = (A^T A)^{-1} A^T b
$$

is the unique solution of the least-squares problem

$$
\text{minimize} \quad \|Ax - b\|^2
$$

• in other words, if $x \neq x_{\mathrm{ls}}$, then $\|Ax - b\|^2 > \|Ax_{\mathrm{ls}} - b\|^2$
• recall from page 4-25 that $A^T A$ is positive definite and that $(A^T A)^{-1} A^T$ is a left-inverse of $A$

8-24

proof

we show that $\|Ax - b\|^2 > \|Ax_{\mathrm{ls}} - b\|^2$ for $x \neq x_{\mathrm{ls}}$:

$$
\begin{aligned}
\|Ax - b\|^2 &= \|A(x - x_{\mathrm{ls}}) + (Ax_{\mathrm{ls}} - b)\|^2 \\
&= \|A(x - x_{\mathrm{ls}})\|^2 + \|Ax_{\mathrm{ls}} - b\|^2 \\
&> \|Ax_{\mathrm{ls}} - b\|^2
\end{aligned}
$$

• 2nd step follows from $A(x - x_{\mathrm{ls}}) \perp (Ax_{\mathrm{ls}} - b)$:

$$
(A(x - x_{\mathrm{ls}}))^T (Ax_{\mathrm{ls}} - b) = (x - x_{\mathrm{ls}})^T (A^T A x_{\mathrm{ls}} - A^T b) = 0
$$

• 3rd step follows from zero nullspace property of $A$:

$$
x \neq x_{\mathrm{ls}} \quad \Longrightarrow \quad A(x - x_{\mathrm{ls}}) \neq 0
$$

8-25

The normal equations

$$
(A^T A) x = A^T b
$$

if $A$ is left-invertible:

• least-squares solution can be found by solving the normal equations
• n equations in n variables with a positive definite coefficient matrix
• can be solved using Cholesky factorization
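
A minimal sketch of the Cholesky route to the normal equations, in plain Python with no libraries, applied to the small example of page 8-3. The function names (`normal_equations`, `cholesky`, `solve_least_squares`) are hypothetical and chosen only for illustration; this is one straightforward way to organize the computation, not necessarily the algorithm the course develops later.

```python
import math

def normal_equations(A, b):
    """Form G = A^T A and c = A^T b; A is a list of m rows of length n."""
    m, n = len(A), len(A[0])
    G = [[sum(A[k][i] * A[k][j] for k in range(m)) for j in range(n)]
         for i in range(n)]
    c = [sum(A[k][i] * b[k] for k in range(m)) for i in range(n)]
    return G, c

def cholesky(G):
    """Return lower-triangular L with G = L L^T (G must be positive definite)."""
    n = len(G)
    L = [[0.0] * n for _ in range(n)]
    for j in range(n):
        d = G[j][j] - sum(L[j][k] ** 2 for k in range(j))
        if d <= 0.0:
            raise ValueError("matrix is not positive definite")
        L[j][j] = math.sqrt(d)
        for i in range(j + 1, n):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = (G[i][j] - s) / L[j][j]
    return L

def solve_least_squares(A, b):
    """Solve (A^T A) x = A^T b via Cholesky plus two triangular solves."""
    G, c = normal_equations(A, b)
    L = cholesky(G)
    n = len(c)
    # forward substitution: L z = c
    z = [0.0] * n
    for i in range(n):
        z[i] = (c[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i]
    # back substitution: L^T x = z
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (z[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

# Example of page 8-3: A is 3 x 2, b has 3 entries
A = [[2.0, 0.0], [-1.0, 1.0], [0.0, 2.0]]
b = [1.0, 0.0, -1.0]
x = solve_least_squares(A, b)
print(x)  # close to (1/3, -1/3), the solution stated on page 8-3
```

For this example the normal equations are $5x_1 - x_2 = 2$, $-x_1 + 5x_2 = -2$, which are the two derivative conditions of page 8-3 divided by two.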
8-26
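
The navigation example of page 8-14 can be checked the same way. The sketch below builds the linearized range equations around $(u_0, v_0) = (0, 0)$ from the beacon positions and measured distances given on that page, then solves the resulting $2 \times 2$ normal equations in closed form. The helper names (`linearized_range_system`, `solve_two_unknowns`) are hypothetical. The listed distances appear to be rounded, so the computed entries of $b$ and the estimate may differ from the page in the last digit.

```python
import math

def linearized_range_system(beacons, rho, u0, v0):
    """Build rows (a_i1, a_i2) and entries b_i of the linearized range equations."""
    A, b = [], []
    for (p, q), r in zip(beacons, rho):
        d = math.hypot(u0 - p, v0 - q)  # distance from (u0, v0) to beacon i
        A.append(((u0 - p) / d, (v0 - q) / d))
        b.append(r - d)
    return A, b

def solve_two_unknowns(A, b):
    """Least-squares solution for two unknowns via the 2 x 2 normal equations."""
    g11 = sum(a1 * a1 for a1, _ in A)
    g12 = sum(a1 * a2 for a1, a2 in A)
    g22 = sum(a2 * a2 for _, a2 in A)
    c1 = sum(a1 * bi for (a1, _), bi in zip(A, b))
    c2 = sum(a2 * bi for (_, a2), bi in zip(A, b))
    det = g11 * g22 - g12 * g12  # positive when the columns of A are independent
    return (g22 * c1 - g12 * c2) / det, (g11 * c2 - g12 * c1) / det

# Data of page 8-14
beacons = [(10, 0), (-10, 2), (3, 9), (10, 10)]
rho = [8.22, 11.9, 7.08, 11.33]

A, b = linearized_range_system(beacons, rho, 0.0, 0.0)
du, dv = solve_two_unknowns(A, b)
print(du, dv)  # should land near the (1.97, 1.90) reported on page 8-14
```

Since the linearization point is the origin, the estimated position is simply $(u_0 + \Delta u, v_0 + \Delta v) = (\Delta u, \Delta v)$, which can be compared with the actual position $(2, 2)$.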