University of Massachusetts-Dartmouth College of Nursing Final

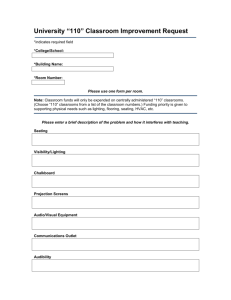


University of Massachusetts-Dartmouth College of Nursing Final Project Report, July 31, 2015

Project Title: Establishing preliminary psychometric analysis of a new instrument: Nurse Competency Assessment Tool (NCAT) based on the MA Nurse of the Future Nursing Core Competencies Model.

Partnership Members and Support Staff: UMASS-Dartmouth (UMD), Saint Anne’s Hospital-Steward (SAH), and Saint Vincent Hospital (SVH). Support staff: Eileen O’Neill, PhD, RN, Nurse Researcher, and Amruta Meshram, computer science graduate student.

Report Completed by: Kerry Fater, PhD, RN, CNE, Project Coordinator and Professor of Nursing at UMASS-Dartmouth College of Nursing.

This report is submitted in compliance with the guidelines identified in the 2014 request for proposals (RFP) and is based on the guidelines provided. Data collection for the study was completed on August 28, 2014, ahead of the projected timeline. Data analysis was completed in spring 2015.

Executive summary/overview of project accomplishments

UMASS-Dartmouth and several healthcare systems in the state collaborated in the study to gather psychometric data on the revised Nurse Competency Assessment Tool (NCAT). Originally, the practice partners were Saint Anne’s Hospital-Steward and St. Vincent Hospital in Worcester. When recruitment of subjects proved difficult, additional healthcare agencies agreed to participate: MetroWest Medical Center and Southcoast Health Systems. Study participants were Registered Nurses at all educational levels, with varying levels of experience, who work in acute care settings. A total of 180 nurses participated: 20 in phase 1 (test-retest of the NCAT for reliability) and 160 in phase 2 (to gather validity data). The NCAT was refined from a 50-item, 4-response multiple-choice test to a 30-item, 3-response multiple-choice test.
A panel of expert reviewers helped identify test items that adequately reflected NOFNCC core competency knowledge, attitudes, and skills. The psychometrics for the large sample (N=160) in phase 2 were as follows: a mean score of 80%, a median score of 80%, a range of 30% to 96.67%, and a Cronbach’s alpha of .550. The low score of 30% was an outlier. The correlation between NCAT score and educational attainment was significant at the .05 level. Most of the study participants were baccalaureate-prepared in nursing, but educational preparation ranged from ADN to doctoral level (DNP). While the overall statistics appear adequate, test analysis revealed unacceptable difficulty and discrimination indices for 5 of the 30 test items. This finding indicates that further instrument refinement is needed to eliminate ambiguous or misleading items and thereby strengthen the overall test construction. Based on these findings, the NCAT is not yet a valid and reliable tool for measuring Registered Nurse competency attainment related to NOFNCC.

Research findings to date

As reported in the October interim report, most of the psychometric analyses of the NCAT had been completed by the fall; additional measures were done after that time. All of the analyses performed on the NCAT are briefly described below.

1. Content validity of the NCAT was determined through several sequential steps. Seven experts on the MA Nurse of the Future Nursing Core Competencies served as expert reviewers of the original 50-item NCAT. They evaluated each of the 50 test items (questions and response options) for clarity and relevance to the competencies. The percentage of agreement to retain each question was determined, and the item-level content validity index (I-CVI) was calculated for each item based on the expert reviewers’ evaluations.
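The report does not spell out how the content validity indices were computed. A common convention, assumed here and not confirmed by the report, is that each expert rates each item's relevance on a 4-point scale; the I-CVI is the proportion of experts rating the item 3 or 4, the mean of the I-CVIs is the averaged scale index (S-CVI/Ave), and the proportion of items with an I-CVI of 1.0 is the universal-agreement index (S-CVI/UA). These would appear to correspond to the mean I-CVI and the 72% universal-agreement figure reported below. The ratings in this Python sketch are hypothetical; note that with seven raters, an item judged relevant by five of them scores 5/7, or about .71.

```python
"""Sketch: item- and scale-level content validity indices.

Assumption (not stated in the report): experts rate relevance on a 4-point
scale and a rating of 3 or 4 counts as "relevant". Ratings are hypothetical.
"""
from statistics import mean


def i_cvi(ratings, relevant_min=3):
    """Item-level CVI: share of experts who rated the item as relevant."""
    return sum(r >= relevant_min for r in ratings) / len(ratings)


def s_cvi(item_ratings, relevant_min=3):
    """Scale-level CVI as (S-CVI/Ave, S-CVI/UA).

    S-CVI/Ave is the mean of the item I-CVIs; S-CVI/UA is the proportion of
    items on which every expert rated the item relevant (I-CVI == 1.0).
    """
    icvis = [i_cvi(r, relevant_min) for r in item_ratings]
    ave = mean(icvis)
    ua = sum(v == 1.0 for v in icvis) / len(icvis)
    return ave, ua


# Hypothetical relevance ratings from 7 experts for 4 items.
ratings = [
    [4, 4, 3, 4, 4, 3, 4],  # 7 of 7 relevant
    [4, 3, 4, 4, 2, 4, 4],  # 6 of 7 relevant
    [3, 4, 2, 4, 4, 2, 4],  # 5 of 7 relevant
    [4, 4, 4, 4, 4, 4, 4],  # 7 of 7 relevant
]

for n, item in enumerate(ratings, start=1):
    print(f"item {n}: I-CVI = {i_cvi(item):.2f}")
ave, ua = s_cvi(ratings)
print(f"S-CVI/Ave = {ave:.3f}, S-CVI/UA = {ua:.2f}")  # -> 0.893, 0.50
```

Reporting both scale indices is useful because they answer different questions: the average can stay high even when only a minority of items reach full expert agreement.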
The mean I-CVI was .9416, indicating acceptable content validity (.90 is the acceptable threshold). The overall scale content validity index (S-CVI) was 72%, indicating universal agreement on 72% of the items; this is acceptable but pointed to some areas in need of revision. These findings, along with previous reviews, contributed to reducing the NCAT from 50 items to 30 to maximize its usefulness and ease of administration. Relevancy scores (RS) were then calculated for each question: 28 test items had 100% relevancy, and two had 71% relevancy. Based on these findings, items were revised, some extensively, and all references to age, gender, and time were removed.

In addition to reducing the NCAT from 50 items, the research team considered ways to improve ease of administration and economy of time without sacrificing the validity of the tool. To this end, the nurse researcher reviewed several authoritative sources on test construction; based on this review, the team decided to delete one distractor (response option) from each question (Haladyna & Rodrigues, 2012), leaving 3 options per question. Expert panel reviewers’ ratings and comments were used to determine which NCAT items could or should be removed.

A computer science graduate student was hired to develop the online platform for the NCAT and the participant demographic data. The revised NCAT was loaded onto the electronic platform, which study participants accessed through a link provided in the online consent form. After phases 1 and 2, the graduate student manually entered the data into Statistical Package for the Social Sciences (SPSS) files for analysis.

2. The reliability of the NCAT was determined using the test-retest method during phase 1 of the study. The principal investigators at St. Vincent Hospital and Saint Anne’s Hospital recruited 10 nurses from each site.
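Two reliability statistics are used in this study: test-retest reliability and Cronbach's alpha. The Python sketch below is an illustrative re-implementation with hypothetical data, not the study's SPSS procedure. It assumes test-retest reliability is the Pearson correlation between each nurse's first and second NCAT scores, and computes alpha over 0/1 item scores as alpha = k/(k - 1) * (1 - sum of item variances / variance of total scores), where k is the number of items.

```python
"""Sketch: test-retest reliability (Pearson r) and Cronbach's alpha.

Illustrative re-implementation with hypothetical data; the study itself
used SPSS.
"""
from math import sqrt
from statistics import mean, pvariance


def pearson_r(x, y):
    """Pearson correlation between paired scores (e.g. test vs. retest)."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def cronbach_alpha(item_matrix):
    """Cronbach's alpha; rows are test-takers, columns are 0/1 item scores.

    Population variances are used throughout; the variance ratio is the same
    with sample variances as long as one kind is used consistently.
    Requires at least 2 items and non-zero variance in total scores.
    """
    k = len(item_matrix[0])
    item_var_sum = sum(pvariance(col) for col in zip(*item_matrix))
    total_var = pvariance([sum(row) for row in item_matrix])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical percent scores for six nurses, two administrations ~2 weeks apart.
first = [80.0, 73.3, 90.0, 66.7, 83.3, 76.7]
retest = [83.3, 70.0, 86.7, 70.0, 86.7, 73.3]
print(f"test-retest r = {pearson_r(first, retest):.2f}")

# Hypothetical 0/1 item scores: 5 test-takers x 3 items.
items = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
    [1, 1, 1],
]
print(f"Cronbach's alpha = {cronbach_alpha(items):.2f}")  # -> 0.79
```

Test-retest r measures stability across administrations, while alpha measures how consistently items within one administration hang together, so the two can diverge for the same instrument.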
Data collection began on May 15th and ended on July 8th. The nurses recruited were described as a “fresh sample,” meaning that they had not participated in the 2012 NCAT study. Participants were staff nurses who worked in acute care and were willing to take the NCAT twice, approximately two weeks apart. The computer science graduate student emailed each participant a reminder to complete the NCAT again approximately 2 weeks after the first administration. The test-retest reliability was r = .76, indicating that the tool is stable over repeated testing (DeVellis, 2011).

3. Although the number of study participants in phase 1 was small (N=20), the Cronbach’s alpha was .63, respectable for a newly created tool. Following a few minor revisions to the NCAT, phase 2 began for the purpose of establishing internal consistency. A fresh sample of at least 150 staff nurses was sought, and 160 participants completed the instrument. The data analysis provided some strong indicators of the soundness of the test: the average score was 80% and the high score was 97%, although there was an outlier at 30%. The sample consisted of 160 staff RNs, 149 female and 11 male. The majority held baccalaureate degrees in nursing, but the range was from ADN to doctoral degree (one nurse held a DNP). Years of experience practicing as an RN ranged from 1 to 46, with a mean of 14 years.

Table 1. A comparison of phase 1 and phase 2 psychometrics

| Statistics | Mean | Median | Minimum | Maximum | Cronbach’s alpha | Pearson correlations |
|---|---|---|---|---|---|---|
| Phase 1 (N=20) | 81% | 80% | 60% | 96.67% | .600 | No demographic variables were significantly correlated with NCAT score |
| Phase 2 (N=160) | 79.625% | 80% | 30% | 96.67% | .550 | Level of educational attainment and NCAT score significant at the .05 level |

4. To determine whether test items overlapped conceptually, extensive factor analysis was done on all 30 items.
No correlations were noted, which is consistent with what one might expect of a test rather than a survey. Consistent with standard test analysis, difficulty indices and discrimination indices were determined. The difficulty index indicates the overall difficulty of a test item based on the performance of all test-takers (study participants); for a three-response multiple-choice item, the ideal difficulty is about 77%. The discrimination index (DI) for each item compares the performance of the top 25% of test-takers with that of the bottom 25%. A value above .30 indicates a well-constructed test item that discriminates high performers from poor performers. Table 2 presents these analyses for the NCAT items with unsatisfactory findings.

Table 2. Comparison of NCAT phase 1 and phase 2 test analyses

| Competency domain | Item | Phase 1 (N=20, posttest) difficulty index | Phase 1 discrimination index | Phase 2 (N=160) difficulty index | Phase 2 discrimination index |
|---|---|---|---|---|---|
| Info/Tech | #15 | 60% | .50 | 64% | .21* |
| QI | #25 | 50% | .33 | 65% | .02* |
| QI | #26 | 70% | .33 | 58% | .21* |
| QI | #27 | 95%* | 0.0* | 49% | .14* |
| EBP | #30 | 65% | .17* | 45% | .29* |

*Items with unacceptable difficulty or discrimination indices.

Test analysis of individual items in phase 1 and phase 2 yielded some interesting but concerning findings: none of the difficulty indices above met the acceptable level of about 77%. In phase 1, items 15, 25, and 26 had acceptable discrimination indices. In the larger sample of 160, the discrimination indices shifted: 3 were poorer in phase 2, while the other 2 improved slightly. All 5 items listed reflect unacceptable levels on both the difficulty index and the discrimination index in phase 2. These phase 2 discrimination indices suggest that high scorers and low scorers performed little differently on these items.
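The item analysis described above can be sketched in Python. Here the difficulty index is the proportion of all test-takers answering an item correctly, and the discrimination index is the proportion correct in the top 25% of test-takers (ranked by total score) minus the proportion correct in the bottom 25%. The response matrix is hypothetical, breaking ties at the group boundary by original order is an assumption, and the .77 and .30 reference values are the ones stated in the report.

```python
"""Sketch: classical item analysis (difficulty and discrimination indices).

Hypothetical example data; responses are scored 1 (correct) or 0 (incorrect).
"""

IDEAL_P = 0.77  # ideal difficulty for a 3-response item, as stated in the report
MIN_D = 0.30    # discrimination threshold stated in the report


def difficulty_index(item_scores):
    """Proportion of all test-takers who answered the item correctly."""
    return sum(item_scores) / len(item_scores)


def discrimination_index(score_matrix, item, fraction=0.25):
    """Upper-group minus lower-group proportion correct for one item.

    score_matrix: rows are test-takers, columns are items (0/1 scores).
    Groups are the top and bottom `fraction` of test-takers by total score;
    ties keep their original order (Python's sort is stable).
    """
    n_group = max(1, int(len(score_matrix) * fraction))
    ranked = sorted(score_matrix, key=sum, reverse=True)  # highest totals first
    upper, lower = ranked[:n_group], ranked[-n_group:]
    p_upper = sum(row[item] for row in upper) / n_group
    p_lower = sum(row[item] for row in lower) / n_group
    return p_upper - p_lower


# Hypothetical responses: 8 test-takers x 4 items.
responses = [
    [1, 1, 1, 0],
    [1, 1, 1, 0],
    [1, 1, 0, 1],
    [1, 0, 1, 0],
    [1, 0, 0, 1],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 0, 1],
]

for item in range(len(responses[0])):
    p = difficulty_index([row[item] for row in responses])
    d = discrimination_index(responses, item)
    status = "acceptable" if d > MIN_D else "needs review"
    print(f"item {item + 1}: difficulty = {p:.3f} (ideal ~{IDEAL_P}), "
          f"D = {d:+.2f} -> {status}")
```

In this made-up data, item 4 is answered correctly only by low scorers, so its discrimination index is negative, the kind of pattern that would flag an item as ambiguous or misleading. With 160 test-takers, as in phase 2, each comparison group would contain 40 nurses.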
It is clear that these NCAT items (#15, 25, 26, 27, 30) need to be further refined and tested with a fresh pool of staff nurses.

Changes implemented and plan for sustainability

Some improvements in item psychometrics were noted with each iteration of the NCAT. However, several NCAT test items need further refinement to raise their discrimination indices above .30. Notably, the 2014 findings on the NCAT revealed the lowest scores in the domains of quality improvement and evidence-based practice (Fater, Weatherford, Ready, & Finn, 2014). Similar findings were noted in this study, along with one item from information and technology, which may suggest that these NCAT items shared the same weaknesses in both iterations. At all phases of NCAT refinement, feedback from expert volunteers and study participants was analyzed, and NCAT items were changed in an attempt to clarify language and eliminate ambiguity. Despite our best attempts, the NCAT is not yet the valid and reliable test of competency attainment among acute care staff nurses that was planned.

Barriers encountered, addressed/worked around

The only barrier, not uncommon in nursing research, was finding willing study participants. The $25 gift card incentive clearly contributed to our success in recruiting nearly 200 study participants overall. However, in the latter stage of phase 2, it became clear that our target number would likely not be met using only the 2 agencies originally planned (St. Anne’s Hospital-Steward and St. Vincent Hospital). Following IRB approvals, staff nurses from MetroWest Medical Center and then Southcoast Hospitals Group were recruited, which quickly enabled us to meet and ultimately exceed our target number of study participants.

Ongoing project dissemination

The Project Coordinator, Kerry Fater, presented a paper on this project at a regional meeting of the Eastern Nursing Research Society in Washington, DC, in April.
She and Robert Ready, PI from St. Vincent Hospital, will present a poster at the National League for Nursing Education Summit in September in Las Vegas. The team plans to submit a manuscript for publication by early fall.

Opportunities for scale-up projects/replication

The NCAT needs continued refinement to establish the validity and reliability of the tool. These findings are consistent with the overall approach to new instrument development, a multistage process in which validity and reliability are established, including with a larger sample. A great deal has been accomplished to date, but the work needs to continue.

Key lessons learned

Instrument development is a multistage process requiring multiple iterations.

Recommendations for DHE

The research team is disappointed that it has not achieved the outcome it set out to achieve. Offering continued funding opportunities may facilitate the work needed to achieve the goal of a valid and reliable tool that can become part of the toolbox.

An accounting of the grant expenses compared to budget using Form 1A is attached. The final grant expenses mirror the proposed grant budget. Although there was a small cost overrun for 10 additional study participants in phase 2, that additional cost was covered by the Grant Coordinator.

I. FORM 1A Proposed Budget/Actual Budget

Please complete the table below with a breakdown of the requested funding from the Nursing and Allied Health Initiative. Please provide an additional Budget Narrative explaining any unusual or unexpected costs or activities.
Project name: Psychometric Analysis of New Instrument NCAT
Project Manager: Kerry Fater

| Categories | Total Grant Funds Requested | Total Funds Used | Difference |
|---|---|---|---|
| Total Salaries | $10,460 | $10,453 | $7 |
| Administrators (3 agency co-PIs) | $2,160 | $2,153 | $7 |
| Support staff (online manager & NCAT researcher) | $8,300 | $8,300 | $0 |
| Other | $0 | $0 | $0 |
| Fringe Benefits | $0 | $6 | -$6 |
| Travel | $0 | $0 | $0 |
| Contractual services (Human subjects) | $4,750 | $4,750 | $0 |
| Curriculum | $0 | $0 | $0 |
| Equipment | $0 | $0 | $0 |
| Other | $0 | $0 | $0 |
| Transportation | $0 | $0 | $0 |
| Training | $0 | $0 | $0 |
| Tuition & Stipends | $0 | $0 | $0 |
| Other | $0 | $0 | $0 |
| Evaluation | $0 | $0 | $0 |
| Total Supplies & Materials | $0 | $0 | $0 |
| Indirect Costs (10% Max.) | $1,520 | $1,521 | -$1 |
| Total | $16,730 | $16,730 | $0 |
| Plus Private Matching Funds | $0 | $0 | $0 |
| Grand Total | $16,730 | $16,730 | $0 |