EECS 470 Lecture 16 Prefetching Prefetching

advertisement

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

EECS 470

Lecture 16

Prefetching

Fall 2007

Fall 2007

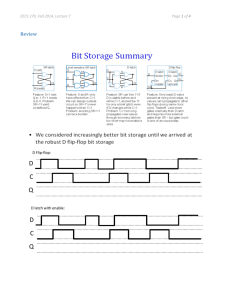

History Table

Correlating Prediction Table

Latest

A0

A0,A1

A3 11

A1

Prof. Thomas Wenisch

Prefetch A3

http://www.eecs.umich.edu/courses/eecs470

Slides developed in part by Profs. Austin, Brehob, Falsafi, Hill, Hoe, Lipasti,

Shen, Smith, Sohi, Tyson, and Vijaykumar of Carnegie Mellon University,

Purdue University, University of Michigan, and University of Wisconsin.

EECS 470

Lecture 16

Slide 1

Announcements

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

HW # 5 (due 11/16)

HW # 5 (due 11/16)

Will be posted by Wednesday

Milestone 2 (due 11/14)

EECS 470

Lecture 16

Slide 2

Readings

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

For Wednesday:

EECS 470

H&P Appendix C.4‐C.6.

Jacob & Mudge. Virtual Memory in Contemporary Processors

Lecture 16

Slide 3

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Latency vs. Bandwidth

Latency can be handled by

Latency can be handled by

hiding/tolerating techniques

e.g., parallelism may increase bandwidth demand

may increase bandwidth demand

reducing techniques

Ultimately limited by physics

EECS 470

Lecture 16

Slide 4

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Latency vs. Bandwidth (Cont.)

Bandwidth can be handled by

Bandwidth can be handled by

banking/interleaving/multiporting wider buses

hi

hierarchies (multiple levels)

hi ( lti l l l )

Wh t h

What happens if average demand not supplied?

if

d

d t

li d?

bursts are smoothed by queues

EECS 470

if burst is much larger than average => long queue

eventually increases delay to unacceptable levels

Lecture 16

Slide 5

The memory wall

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Perforrmance

10000

1000

Processor

100

10

Memory

1

1985

1990

1995

2000

2005

Source: Hennessy & Patterson, Computer Architecture: A Quantitative Approach, 4thh

2010

ed.

Today: 1 mem access ≈ 500 arithmetic ops

How to reduce memory stalls for existing SW?

EECS 470

Lecture 16

Slide66

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Conventional approach #1:

Avoid main memory accesses

C h hi

Cache hierarchies:

hi

Trade off capacity for speed

Write data

Write data

CPU

CPU

2 clk

64K

data

20 lk

20 clk

Add more cache levels?

4M

Diminishing locality returns

200 clk

No help for shared data in MPs

Main memory

Main memory

EECS 470

Lecture 16

Slide77

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Conventional approach #2:

Hide memory latency

Overlap compute & mem stalls

In order

In order

execcution

O

Out‐of‐order execution:

f d

i

OoO

compute

mem stall

Expand OoO instruction window?

Issue & load‐store logic hard to scale

No help for dependent instructions

EECS 470

Lecture 16

Slide88

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Challenges of server apps

Defy conventional approaches:

Frequent sharing

Many linked‐data structures

+ E.g., linked list, B tree, …

Lead to dependent miss chains

Lead to dependent miss chains

50‐66%

50

66% time stalled on memory

time stalled on memory

[Trancoso 97][Barroso 98][Ailamaki 99] … …

compute

mem stall

Read stalls Æ this talk

Write stalls Æ ISCA 07

Write stalls Æ

ISCA 07

Our goal: Fetch data earlier & in parallel

EECS 470

Goal

exeecution

Today

Lecture 16

Slide99

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

What is Prefetching?

•

Fetch memory ahead of time

•

Targets compulsory, capacity, & coherence misses

Targets compulsory, capacity, & coherence misses Big challenges:

Big challenges:

knowing “what” to fetch

1.

•

Fetching useless info wastes valuable resources

“when” to fetch it

2.

•

•

EECS 470

Fetching too early clutters storage

Fetching too late defeats the purpose of “pre”‐fetching

h

l

d f

h

f“ ” f h

Lecture 16

Slide 10

Prefetching Data Cache

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Branch

Predictor

I-cache

Decode Buffer

Decode

Dispatch Buffer

Dispatch

p

Memory

Reference

Prediction

Reservation

Stations

branch integer

g

integer

g

floating

g

point

i t

Complete

Store Bufffer

Completion

p

Buffer

store

Prefetch

Queue

load

Data Cache

Main Memory

EECS 470

Lecture 16

Slide 11

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Software Prefetching

Compiler/programmer places prefetch instructions

Compiler/programmer places prefetch instructions

requires ISA support

why not use regular loads?

f

found in recent ISA’s such as SPARC V‐9

di

t ISA’

h SPARC V 9

P f t hi t

Prefetch into

EECS 470

register (binding)

caches (non‐binding): preferred in multiprocessors

Lecture 16

Slide 12

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Software Prefetching (Cont.)

e.g., e.g.,

for (I = 1; I < rows; I++)

for (I 1; I < rows; I++)

for (J = 1; J < columns; J++)

{ prefetch(&x[I+1,J]);

sum = sum + x[I J];

sum = sum + x[I,J];

} EECS 470

Lecture 16

Slide 13

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Software Prefetching Support

PowerPC Data Cache Block Touch Instruction (dcbt EA)

“a hint that performance will probably be improved if the block containing the p f

p

y

p

f

g

byte addressed by EA is fetched into the data cache”

A correct implementation of dcbt is to do nothing

Or, as a load instruction with no destination register except it should not trigger page or protection faults

Wh

Where should compilers insert dcbt?

h ld

il i

t d bt?

in front of every load: wastes I‐cache and D‐cache bandwidth

where are loads likely to miss

where load misses would really hurt performance

EECS 470

When traversing large data sets (arrays in scientific code)

pointer arguments to functions

linked‐list traversal ‐ find loads whose data address is itself the result of a previous load

Lecture 16

Slide 14

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Hardware Prefetching

What to prefetch?

What to prefetch?

one block spatially ahead?

use address predictors Æ work well for regular patterns (e.g., x, x+8, x+16 )

x+16,.)

When to prefetch?

on every reference

y

on every miss

when prior prefetched data is referenced

upon last processor reference

upon last processor reference

Where to put prefetched data?

auxiliary buffers

y

caches

EECS 470

Lecture 16

Slide 15

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Spatial Locality and Sequential

P f t hin

Prefetching

Works well for I‐cache

Instruction fetching tend to access memory sequentially

Doesn’t work very well for D‐cache

More irregular access pattern

regular patterns may have non‐unit stride (e.g. matrix code)

l

tt

h

it t id (

ti

d )

Relatively easy to implement

Large cache block size already have the effect of prefetching

Large

cache block size already have the effect of prefetching

After loading one‐cache line, start loading the next line automatically if the line is not in cache and the bus is not busy

What if you fetch at the wrong time ….

h f

f h

h

Imagine if you started sequential prefetching of a long cache line and so happens you get a load miss to the middle of that line?

A ii l

A critical‐word‐first reload triggered by the load miss itself may d fi

l d i

d b h l d i i lf

actually have restarted computation sooner!!

EECS 470

Lecture 16

Slide 16

Stride Prefetchers

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Access pattern for a particular static load is more predictable

Access pattern for a particular static load is more predictable

Reference Prediction Table

Load

Inst

PC

Load Inst.

Load Inst.

Last Address

Last Address

Last

PC (tag)

Referenced

Stride

…….

…….

……

Flags

Remembers previously executed loads, their PC, the last address Remembers

previously executed loads their PC the last address

referenced, stride between the last two references When executing a load, look up in RPT and compute the distance between the current data addr and the last addr

‐ if the new distance matches the old stride p

,g

p

⇒ found a pattern, go ahead and prefetch “current addr+stride”

‐ update “last addr” and “last stride” for next lookup

EECS 470

Lecture 16

Slide 17

Stream Buffers

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Each stream buffer holds one stream of Each

stream buffer holds one stream of

sequentially prefetched cache lines No cache pollution

On a load miss check the head of all stream buffers for an address match

FIFO

if hit, pop the entry from FIFO, update the cache if

hit pop the entry from FIFO update the cache

with data if not, allocate a new stream buffer to the new miss address (may have to recycle a stream

miss address (may have to recycle a stream buffer following LRU policy)

DCache

Stream buffer FIFOs are continuously topped‐off with subsequent cache lines whenever there is ith b

t

h li

h

th

i

room and the bus is not busy

FIFO

Stream buffers can incorporate stride prediction mechanisms to support non‐unit‐stride streams

FIFO

EECS 470

Indirect array accesses (e.g., A[B[i]])?

M

Memory

interfacce

FIFO

Lecture 16

Slide 18

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Generalized Access Pattern Prefetchers

How do you prefetch 1

1.

Heap data structures?

Heap data structures? 2.

Indirect array accesses? 3

3.

Generalized memory access patterns?

Generalized memory access patterns?

Current proposals:

Current proposals:

•

Precomputation prefetchers

•

Address correlating prefetchers

Address correlating prefetchers

EECS 470

Lecture 16

Slide 19

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Runahead Prefetchers

M i

Main Prefetch

P f h

Proposed for I/O prefetching first (Gibson et al.)

Duplicate the program

Duplicate the program

Thread Thread

• Only execute the address generating stream

• Let it run ahead

Let it run ahead

May run as a thread on

May run as a thread on

• A separate processor

• The same multithreaded processor

The same multithreaded processor

Or custom address generation logic

Or custom address generation logic

Many names: slipstream, precomp., runahead, …

EECS 470

Lecture 16

Slide 20

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Runahead Prefetcher

To get ahead:

•

Must avoid waiting

Must avoid waiting

•

Must compute less

Predict

1.

Control flow thru branch prediction

2

2.

Data flow thru value prediction

Data flow thru value prediction

3.

Address generation computation only

+

Prefetch any pattern (need not be repetitive) ―

Prediction only as good as branch + value prediction

Prediction only as good as branch + value prediction

EECS 470

How much prefetch lookahead?

Lecture 16

Slide 21

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Correlation-Based Prefetching

Consider the following history of Load addresses emitted by a processor

A, B, C, D, C, E, A, C, F, F, E, A, A, B, C, D, E, A ,B C, D, C

, , , , , , , , , , , , , , , , , , , ,

After referencing a particular address (say A or E), are some addresses more likely to be referenced next

.2

.2

.6

A

B

1.0

C

.67

Markov

Model

.2

.6

.2

D

.33

E

.5

F

5

.5

1.0

EECS 470

Lecture 16

Slide 22

Correlation-Based Prefetching

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Load

Data

Add

Addr

Load Data Addr

Prefetch

(tag)

Candidate 1

…….

…….

Confidence

……

….

Prefetch

….

Candidate N

.…

…….

Confidence

……

….

Track the likely next addresses after seeing a particular addr

Track the likely next addresses after seeing a particular addr.

Prefetch accuracy is generally low so prefetch up to N next addresses to increase coverage (but this wastes bandwidth)

Prefetch accuracy can be improved by using longer history

Decide which address to prefetch next by looking at the last K load addresses instead of just the current one

addresses instead of just the current one e.g. index with the XOR of the data addresses from the last K loads

Using history of a couple loads can increase accuracy dramatically

This technique can also be applied to just the load miss stream

EECS 470

Lecture 16

Slide 23

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Example Address Correlating:

M k Prefetchers

Markov

P f t h

Markov Prefetchers (Joseph & Grunwald, ISCA’97) • Correlate subsequent cache misses

• Trigger prefetch on miss

• Predict & prefetch 4 candidates: predicting 1 results in low coverage!

• Prefetch into a buffer

EECS 470

Lecture 16

Slide 24

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Problems with Markov

Either low coverage or low accuracy

•

P f t h several addresses to try to eliminate one miss Prefetches

l dd

t t t li i t

i

Insufficicient Lookahead

•

Di t

Distance between two misses is usually small

b t

t

i

i

ll

ll

load/store A1 (miss)

load/store A1 (hit)

...

l d/ t

load/store

C3 (miss)

( i )

lookahead

load/store A3 (miss)

Fetch on miss

EECS 470

Lecture 16

Slide 25

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Spatio-Temporal

p

p

Memory

y Streaming

g

[ISCA 05][ISCA 06]

M

Memory

accesses repeatt

CPU

patterns

Ld Q

Ld W

Ld E

sequences

stream

tim

me

spaace

Ld Q

Ld W

Ld E

• Observe patterns/sequences

• Stream data to CPU ahead of requests

EECS 470

Q

W

E

..

L1

L2

Memoryy or

other CPUs

26

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

My thesis:

Temporall M

T

Memory St

Streaming

i

(TMS)

HW records & replays

p y recurring

g miss sequences

q

Baseline

TMS

A

time

CPU

A

B

B

CPU

C

Must wait to follow pointers

C

F t h in

Fetch

i parallel

ll l

• Stream data to CPU

In advance – Ahead of CPU requests

In parallel – Even for dependent accesses

In order – Flow control to manage storage/BW

6-21% speedup in Web & OLTP apps.

EECS 470

27

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Miss addresses are correlated

• Intuition: Miss sequences

q

repeat

p

Because code/data traversals repeat

Miss seq. Q W A B C D E R … T A B C D E Y

• Temporal Address Correlation

Prior evidence: [[Joseph

p 97][Luk

][

99][Chilimbi

][

01][Lai

][

01]]

Contrast: temporal locality

stream = ordered address sequence

EECS 470

28

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Recent streams recur

• Intuition: Streams exhibit temporal locality

Because working set exhibits temporal locality

MP: repetition often across CPUs

CPU 1 Q W A B C D E R

CPU 2

T A B C D E Y

• Temporal Stream Locality

Streams evolve as structures change

Track streams at run-time, not statically

EECS 470

29

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

TMS – 10,000

,

feet

c Log

L

CPU

Load A

Load B

Load C

TMS

{A,B,C,…}

d Lookup

L k

e Stream

St

Load A

CPU

TMS

{A,B,C,…}

CPU

Prefetch B

Prefetch C

TMS

{A,B,C,…}

K

Key

HW design

d i

challenge:

h ll

L

Lookup

k

mechanism

h i

EECS 470

30

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

System

y

models & applications

pp

16-node DSM

CPU

4-core CMP

CPU

8MB L2

8MB L2

Core

& L1

Core

& L1

Shared 8MB L2

Directory

Directory

Memory

Memory

Coherence misses

• Web: SPECweb99

Apache, Zeus

• OLTP: TPC-C

DB2, Oracle

EECS 470

Core

& L1

Mem.

Core

& L1

Capacity/conflict misses

• DSS: DB2

Qry 1, 2, 17

• Scientific

em3d, moldyn, ocean

31

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

TMS opportunity

pp

y

%O

Off-chip m

misses

Recurrence:

System-wide

New/Heads

100%

Same CPU only

80%

60%

40%

20%

0%

DSM

CMP

Web

DSM

CMP

OLTP

DSM

CMP

DSS

DSM

CMP

Sci

Avg. 75% misses in repetitive streams

Streams recur across processors (esp. DSM)

EECS 470

32

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Strea

amed blo

ocks

Stream length

g

100%

Web DSM

Web CMP

OLTP DSM

OLTP CMP

DSS DSM

DSS CMP

80%

60%

median length ≈ 10

40%

20%

0%

1

10

100

1000

10000

Stream length

• Long streams (esp. CMP) Æ need flow control

• Contrast: “Depth” prefetching [Solihin 03][Nesbit 04]

EECS 470

Short sequences (~4 misses)

Variable length Æ log in circular buffer

33

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Stream reuse

Web DSM

Web CMP

OLTP DSM

OLTP CMP

DSS DSM

DSS CMP

Off-chiip misse

es

20%

15%

10%

5%

0%

1

10

102

103

104

105

106

107

Stream reuse distance

• Coherence: reuse = F( sharing behavior )

• Capacity: reuse = F( L2 size )

Reuse distance ≥ 105 misses Æ log off-chip

EECS 470

34

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Correlated & strided misses

Web

OLTP

Temporally-correlated

DSS

Strided

Sci

Non-correlated

TMS & stride target different access patterns

DSS N

DSS:

New TMS opportunity

t it < 20%

EECS 470

35

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Sources of repetitive

p

streams

Top contributors to TMS opportunity

Web

Dynamic content generation in PERL (21%)

System calls: poll, read, write, stat (15%)

Kernel STREAMS sub-system (13%)

OLTP

(DB2)

Index, tuple & page accesses (23%)

SQL requestt control

t l & runtime

ti

interpreter

i t

t (14%)

Kernel MMU & register window traps (13%)

DSS

B lk memory copies

Bulk

i (55%)

Can extrapolate to new workloads

via source breakdown

EECS 470

36

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Coverage

g & timeliness

Opportunity

% misses

100%

Fully Hidden

Partially Hidden

80%

60%

40%

20%

em3d

moldyn

ocean

Apache

DB2

Oracle

Timing

T

Trace

T

Timing

T

Trace

T

Timing

T

Trace

T

Timing

T

Trace

T

Timing

T

Trace

T

Timing

T

Trace

T

Timing

T

Trace

T

0%

Zeus

• Stream lookup latency ≈ miss latency

~1 miss/stream untimely (partial or no overlap)

TMS-DSM achieves avg. 75% of opportunity

EECS 470

37

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Performance impact

p

Time Breakdown

Speedup

Busy

1.0

0.8

0.6

0.4

0.2

0.0

Other Stalls

Off-chip Read Stalls

1.3

95% CI

12

1.2

base

e

TMS

S

Oracle Zeus

Zeus

Oracle

1.0

em3d

moldyn

m

ocean

Apache

A

DB2

Oracle

Zeus

em3d moldyn

moldyn ocean

ocean Apache

Apache DB2

DB2

em3d

base

e

TMS

S

base

e

TMS

S

base

e

TMS

S

base

e

TMS

S

base

e

TMS

S

1.1

base

e

TMS

S

Normaliz ed Time

33

3.3

• TMS-DSM eliminates 25%-95% of read stalls

Commercial apps: 6% to 21% improvement

EECS 470

38

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Memory

y wall summary

y

Main memory accesses cost 100s cycles

Solution: Temporal Memory Streaming

Record & replay recurring miss sequences

Breaks pointer

pointer-chasing

chasing dependence

Performance improvement:

7

7-230%

230% in scientific apps.

apps

6-21% in commercial Web & OLTP apps.

EECS 470

39

© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar

Improving Cache Performance: Summary

Miss rate

large block size

higher associativity

victim caches

skewed‐/pseudo‐associativity

skewed

/pseudo associativity

hardware/software prefetching

compiler optimizations

Miss penalty

Hit time (difficult?)

small and simple caches

p

avoiding translation during L1 indexing (later)

pipelining writes for fast pipelining writes for fast

write hits

subblock placement for fast write hits in write through g

caches

give priority to read misses over writes/writebacks

subblock placement

early restart and critical word first

non‐blocking caches

non blocking caches

multi‐level caches

EECS 470

Lecture 16

Slide 40