EECS 470 Lecture 1 Computer Architecture


© Wenisch 2007 -- Portions © Austin, Brehob, Falsafi,

Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar


Fall 2007

Prof. Thomas Wenisch

http://www.eecs.umich.edu/courses/eecs470/

Slides developed in part by Profs. Austin, Brehob, Falsafi, Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, Vijaykumar, and Wenisch of Carnegie Mellon University, Purdue University, University of Michigan, and University of Wisconsin.

Announcements

HW # 1 due Friday 9/14

Programming assignment #1 due Friday 9/14

More details in discussion this Friday

Readings

For Monday:

Task of the Referee. A.J. Smith

(on readings web page, username and p/w in printed syllabus)

H & P Chapter 1

H & P Appendix A

H & P Appendix B


What Is Computer Architecture?

“The term architecture is used here to describe the attributes of a system as seen by the programmer, i.e., the conceptual structure and functional behavior as distinct from the organization of the dataflow and controls, the logic design, and the physical implementation.”

Gene Amdahl, IBM Journal of R&D, April 1964


Architecture, Organization, Implementation

Computer architecture: SW/HW interface

instruction set

memory management and protection

interrupts and traps

floating-point standard (IEEE)

Organization: also called microarchitecture

number/location of functional units

pipeline/cache configuration

datapath connections

Implementation:

low-level circuits

What Is 470 All About?

High-level understanding of issues in modern architecture:

Dynamic out-of-order processing

Memory architecture

I/O architecture

Multicore / multiprocessor issues

Lectures, HW & Reading

Low-level understanding of critical components:

Microarchitecture of out-of-order machines

Caches & memory sub-system

Project & Reading

EECS 470 Class Info

Instructor: Professor Thomas Wenisch

URL: http://www.eecs.umich.edu/~twenisch

Research interests:

Multicore / multiprocessor arch. & programmability

Data center architecture

Tools and methods for design evaluation

GSI:

Ian Kountanis (ikountan@umich.edu)

Class info:

URL: http://www.eecs.umich.edu/courses/eecs470/

Wiki for discussing HW & projects

Meeting Times

Lecture

MW 3:00pm – 4:30pm (1303 EECS)

Discussion (attendance mandatory!)

F 3:30pm – 4:30pm (1010 Dow)

Office Hours

Office hours: W 1:00-2:00 & F 2:00-3:00 pm (4620 CSE)

Ian’s office hours: TBD

Who Should Take 470?

Seniors & Graduate Students

1. Computer architects to be

2. Computer system designers

3. Those interested in computer systems

Required Background

Basic digital logic (EECS 270)

Basic architecture (EECS 370)

Verilog/VHDL experience helpful (covered in discussion)

Grading

Grade breakdown

Midterm: 25%

Final: 25%

Homework: 10% (total of 6, drop lowest grade)

Verilog assignments: 10% (total of 3: 2%, 2%, 6%)

Project: 30%

Open-ended (group) processor design in Verilog

Median will be ~80% (so it is a distinguisher!)

Participation + discussion count toward the Project grade

Grading (Cont.)

No late homeworks, no kidding

Group studies are encouraged

Group discussions are encouraged

All homeworks must be results of individual work

There is no tolerance for academic dishonesty. Please refer to the University Policy on cheating and plagiarism.

Discussion and group studies are encouraged, but all submitted material must be the student's individual work (or in case of the project, individual group work).

Hours (and hours) of work

Attend class & discussion: ~4 hrs / week

Read book & handouts: ~2-3 hrs / week

Do homework: 6 assignments @ ~4 hrs each / 15 weeks = ~1-2 hrs / week

3 Programming assignments: 6, 6, 20 hrs = 32 hrs / 15 weeks = ~2 hrs / week

Project: ~100 hrs / 15 weeks = ~7 hrs / week

Studying, etc.: ~2 hrs / week

Expect to spend ~20 hours / week on this class!!

Fundamental concepts

Amdahl’s Law

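$$\text{Speedup} = \frac{\text{time}_{\text{without enhancement}}}{\text{time}_{\text{with enhancement}}}$$

Suppose an enhancement speeds up a fraction f of a task by a factor of S

$$\text{time}_{\text{new}} = \text{time}_{\text{orig}} \cdot \left( (1-f) + \frac{f}{S} \right)$$

$$S_{\text{overall}} = \frac{1}{(1-f) + \frac{f}{S}}$$

[Figure: two bars. time_orig is split into (1 - f) and f; time_new is split into (1 - f) and f/S.]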
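The speedup formula can be checked numerically. The following is a minimal Python sketch; the function name `amdahl_speedup` and the sample values of f and S are illustrative assumptions, not taken from the slides.

```python
def amdahl_speedup(f: float, s: float) -> float:
    """Overall speedup when a fraction f of a task is sped up by a factor s."""
    if not 0.0 <= f <= 1.0:
        raise ValueError("f must be between 0 and 1")
    if s <= 0.0:
        raise ValueError("s must be positive")
    return 1.0 / ((1.0 - f) + f / s)


# Hypothetical example: 80% of the task is made 4x faster.
overall = amdahl_speedup(0.8, 4.0)

# Even an unbounded speedup of that 80% is capped by the untouched 20%.
upper_bound = 1.0 / (1.0 - 0.8)

print(f"S_overall = {overall:.2f}, upper bound = {upper_bound:.2f}")
```

The second number illustrates why the unenhanced fraction (1 - f) dominates once S grows large.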


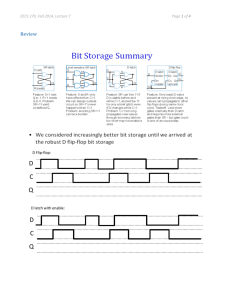

Parallelism: Work and Critical Path

Parallelism - the amount of independent sub-tasks available

Work = $T_1$ - time to complete a computation on a sequential system

Critical Path = $T_\infty$ - time to complete the same computation on an infinitely-parallel system

x = a + b;

y = b * 2

z = (x-y) * (x+y)

[Figure: dataflow graph of the computation. a and b feed + (producing x) and *2 (producing y); x and y feed - and +, whose results feed the final *.]

Average Parallelism

$P_{avg} = T_1 / T_\infty$

For a p wide system

$T_p \ge \max\{ T_1/p,\ T_\infty \}$

$P_{avg} \gg p \Rightarrow T_p \approx T_1/p$
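Work and critical path for the small example can be computed directly from its dependency graph. This is a rough sketch that assumes every operation takes one time unit and that the inputs a and b are available at time zero; the node names (`diff`, `total`) and helper functions are hypothetical.

```python
from functools import lru_cache

# Dataflow graph for: x = a + b; y = b * 2; z = (x - y) * (x + y)
# Each key is one operation; its value lists the operations it waits on.
DEPS = {
    "x": [],                  # a + b
    "y": [],                  # b * 2
    "diff": ["x", "y"],       # x - y
    "total": ["x", "y"],      # x + y
    "z": ["diff", "total"],   # diff * total
}


def work(graph):
    """T1: unit-time operations executed one after another."""
    return len(graph)


def critical_path(graph):
    """T_inf: length of the longest chain of dependent operations."""
    @lru_cache(maxsize=None)
    def depth(node):
        return 1 + max((depth(dep) for dep in graph[node]), default=0)

    return max(depth(node) for node in graph)


def time_lower_bound(t1, t_inf, p):
    """Lower bound on the run time of a p-wide system: max{T1/p, T_inf}."""
    return max(t1 / p, t_inf)


t1 = work(DEPS)
t_inf = critical_path(DEPS)
print("T1 =", t1, "T_inf =", t_inf, "P_avg =", t1 / t_inf)
print("bound for p = 2:", time_lower_bound(t1, t_inf, 2))
```

Under the unit-latency assumption the example has five operations and a three-step critical path, so a 2-wide machine is already limited by the critical path rather than by T1/p.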


Locality Principle

One’s recent past is a good indication of near future.

Temporal Locality: If you looked something up, it is very likely that you will look it up again soon

Spatial Locality: If you looked something up, it is very likely you will look up something nearby next

Locality == Patterns == Predictability

Converse:

Anti-locality: If you haven’t done something for a very long time, it is very likely you won’t do it in the near future either

Memoization

Dual of temporal locality but for computation

If something is expensive to compute, you might want to remember the answer for a while, just in case you will need the same answer again

Why does memoization work?

Real life examples:

whatever results of work you will soon reuse

Examples

Trace caches
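A software analogue of the idea, as a minimal Python sketch: `slow_square` is a hypothetical stand-in for an expensive computation, and the request stream is made up to show repeated inputs. The standard-library `functools.lru_cache` decorator remembers earlier answers.

```python
from functools import lru_cache

call_count = 0  # how many times the "expensive" body actually runs


@lru_cache(maxsize=None)
def slow_square(n: int) -> int:
    """Stand-in for an expensive computation."""
    global call_count
    call_count += 1
    return n * n


# A request stream with temporal locality: the same inputs recur.
requests = [3, 4, 3, 3, 4, 5]
results = [slow_square(n) for n in requests]

print(results)     # answers for all six requests
print(call_count)  # only the distinct inputs were actually computed
```

Memoization pays off here for the same reason caches do: the recent past (inputs 3 and 4) predicts the near future.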

Amortization

overhead cost: one-time cost to set something up

per-unit cost: cost per unit of operation

total cost = overhead + per-unit cost × N

It is often okay to have a high overhead cost if the cost can be distributed over a large number of units

⇒ lower the average cost

average cost = total cost / N

= ( overhead / N ) + per-unit cost
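A short Python sketch of the average-cost formula; the overhead of 100 and per-unit cost of 2 are made-up numbers chosen only to show the trend as N grows.

```python
def average_cost(overhead: float, per_unit: float, n: int) -> float:
    """Average cost per unit: (overhead / N) + per-unit cost."""
    if n <= 0:
        raise ValueError("n must be a positive number of units")
    return overhead / n + per_unit


# Hypothetical costs: the one-time overhead fades as N grows.
for n in (1, 10, 100, 1000):
    print(n, average_cost(100.0, 2.0, n))
```

As N grows the average cost approaches the per-unit cost, which is why a large one-time setup can be acceptable.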


Measuring performance


Metrics of Performance

Time (latency)

elapsed time vs. processor time

Rate (bandwidth or throughput)

performance = rate = work per time

Distinction is sometimes blurred

consider batched vs. interactive processing

consider overlapped vs. non-overlapped processing

may require conflicting optimizations

What is the most popular metric of performance?

(Hint: AMD Athlon numbering scheme)

The “Iron Law” of Processor Performance

$$\text{Processor Performance} = \frac{\text{Time}}{\text{Program}} = \frac{\text{Instructions}}{\text{Program}} \times \frac{\text{Cycles}}{\text{Instruction}} \times \frac{\text{Time}}{\text{Cycle}}$$

Instructions/Program: (code size)

Cycles/Instruction: (CPI)

Time/Cycle: (cycle time)

Architecture --> Implementation --> Realization

Compiler Designer, Processor Designer, Chip Designer
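The three factors multiply, so a change that helps one term can be undone by another. A minimal Python sketch, with made-up instruction counts, CPI values, and a 0.5 ns cycle time chosen purely for illustration:

```python
def execution_time(instructions: float, cpi: float, cycle_time_s: float) -> float:
    """Time/Program = Instructions/Program x Cycles/Instruction x Time/Cycle."""
    return instructions * cpi * cycle_time_s


# Hypothetical baseline: 1e9 instructions, CPI of 1.5, 0.5 ns cycle (2 GHz).
baseline = execution_time(1e9, 1.5, 0.5e-9)

# Hypothetical change: 10% fewer instructions, but CPI rises to 1.6.
changed = execution_time(0.9e9, 1.6, 0.5e-9)

print(f"baseline = {baseline:.3f} s, changed = {changed:.3f} s")
```

Here the instruction-count saving outweighs the CPI increase, but only slightly; judging a design by one factor alone would miss that.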

MIPS

MIPS - Millions of Instructions per Second

$$\text{MIPS} = \frac{\text{benchmark \# of instructions}}{\text{benchmark total run time} \times 1{,}000{,}000}$$

When comparing two machines (A, B) with the same instruction set, MIPS is a fair comparison (sometimes…)

But, MIPS can be a “meaningless indicator of performance…”

• instruction sets are not equivalent

• different programs use a different instruction mix

• instruction count is not a reliable indicator of work

some optimizations add instructions

instructions have varying work

MIPS (Cont.)

Example:

Machine A has a special instruction for performing square root

It takes 100 cycles to execute

Machine B doesn’t have the special instruction

must perform square root in software using simple instructions

e.g., Add, Mult, Shift each take 1 cycle to execute

Machine A: 1/100 MIPS = 0.01 MIPS

Machine B: 1 MIPS

How about $\text{Relative MIPS} = (\text{time}_{\text{reference}} / \text{time}_{\text{new}}) \times \text{MIPS}_{\text{reference}}$ ?
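The square-root example can be made concrete with a small Python sketch. Two things here are assumptions that the slide does not state: a 1 MHz clock (which is what makes one-cycle instructions come out at 1 MIPS) and a software square root of 200 simple instructions on Machine B.

```python
CLOCK_HZ = 1_000_000  # assumed clock rate for both machines


def run_time(cycles: int) -> float:
    """Seconds needed to execute the given number of cycles."""
    return cycles / CLOCK_HZ


def mips(instructions: int, cycles: int) -> float:
    """Millions of instructions per second over one run."""
    return instructions / run_time(cycles) / 1_000_000


# Machine A: one special square-root instruction that takes 100 cycles.
a_instructions, a_cycles = 1, 100

# Machine B: hypothetical software routine, 200 one-cycle instructions.
b_instructions, b_cycles = 200, 200

print("A:", mips(a_instructions, a_cycles), "MIPS,", run_time(a_cycles), "s")
print("B:", mips(b_instructions, b_cycles), "MIPS,", run_time(b_cycles), "s")
```

Under these assumptions Machine B reports a MIPS rating 100 times higher while taking twice as long to compute the square root, which is the sense in which MIPS can mislead.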

MFLOPS

$\text{MFLOPS} = (\text{FP ops}/\text{program}) \times (\text{program}/\text{time}) \times 10^{-6}$

Popular in scientific computing

There was a time when FP ops were much much slower than regular instructions (i.e., off-chip, sequential execution)

Not great for “predicting” performance because it

ignores other instructions (e.g., load/store)

not all FP ops are created equally

depends on how FP-intensive program is

Beware of “peak” MFLOPS!

Comparing Performance

Often, we want to compare the performance of different machines or different programs. Why?

help architects understand which is “better”

give marketing a “silver bullet” for the press release

help customers understand why they should buy <my machine>

Comparing Performance (Cont.)

If machine A is 50% slower than B, and timeA = 1.0s, does that mean

case 1: timeB = 0.5s, since timeB/timeA = 0.5

case 2: timeB = 0.666s, since timeA/timeB = 1.5

What if I say machine B is 50% faster than A?

If machine A is 1000% faster than B, and timeA = 1.0s, does that mean

case 1: timeB = 10.0s, A is 10 times faster

case 2: timeB = 11.0s, A is 11 times faster

By Definition

Machine A is n times faster than machine B iff

perf(A)/perf(B) = time(B)/time(A) = n

Machine A is x% faster than machine B iff

perf(A)/perf(B) = time(B)/time(A) = 1 + x/100

E.g., A 10s, B 15s

15/10 = 1.5 → A is 1.5 times faster than B

15/10 = 1 + 50/100 → A is 50% faster than B

Remember to compare “performance”
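The two definitions translate directly into code. A minimal Python sketch; the function names are illustrative, and the last call simply applies the "x% faster" definition to a 1000% figure with time(A) = 1.0 s.

```python
def times_faster(time_a: float, time_b: float) -> float:
    """n such that A is n times faster than B: time(B) / time(A)."""
    return time_b / time_a


def percent_faster(time_a: float, time_b: float) -> float:
    """x such that A is x% faster than B: time(B) / time(A) = 1 + x/100."""
    return (time_b / time_a - 1.0) * 100.0


def time_of_slower(time_a: float, percent: float) -> float:
    """time(B) when A is `percent`% faster than B."""
    return time_a * (1.0 + percent / 100.0)


print(times_faster(10.0, 15.0))     # A 10s, B 15s
print(percent_faster(10.0, 15.0))
print(time_of_slower(1.0, 1000.0))  # 1000% faster, time(A) = 1.0s
```

Applying the definition consistently, "1000% faster" corresponds to a ratio of 11, not 10.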


Performance vs. Execution Time

Often, we use the phrase “X is faster than Y”

Means the response time or execution time is lower on X than on Y

Mathematically, “X is N times faster than Y” means

$$N = \frac{\text{Execution Time}_Y}{\text{Execution Time}_X} = \frac{1/\text{Performance}_Y}{1/\text{Performance}_X} = \frac{\text{Performance}_X}{\text{Performance}_Y}$$

Performance and Execution time are reciprocals

Increasing performance decreases execution time

Let’s Try a Simpler Example

Two machines timed on two benchmarks

            Machine A     Machine B
Program 1   2 seconds     4 seconds
Program 2   12 seconds    8 seconds

How much faster is Machine A than Machine B?

Attempt 1: ratio of run times, normalized to Machine A times

program 1: 4/2

program 2: 8/12

Machine A ran 2 times faster on program 1, 2/3 times faster on program 2

On average, Machine A is (2 + 2/3) / 2 = 4/3 times faster than Machine B

It turns out this “averaging” stuff can fool us; watch…

Example (con’t)

Two machines timed on two benchmarks

            Machine A     Machine B
Program 1   2 seconds     4 seconds
Program 2   12 seconds    8 seconds

How much faster is Machine A than B?

Attempt 2: ratio of run times, normalized to Machine B times

program 1: 2/4

program 2: 12/8

Machine A ran program 1 in 1/2 the time and program 2 in 3/2 the time

On average, (1/2 + 3/2) / 2 = 1

Put another way, Machine A is 1.0 times faster than Machine B

Example (con’t)

Two machines timed on two benchmarks

            Machine A     Machine B
Program 1   2 seconds     4 seconds
Program 2   12 seconds    8 seconds

How much faster is Machine A than B?

Attempt 3: ratio of run times, aggregate (total sum) times, norm. to A

Machine A took 14 seconds for both programs

Machine B took 12 seconds for both programs

Therefore, Machine A takes 14/12 of the time of Machine B

Put another way, Machine A is 6/7 times as fast as Machine B
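The three attempts can be reproduced side by side. A small Python sketch using exact fractions; the dictionary layout and variable names are illustrative only, while the run times are those in the table.

```python
from fractions import Fraction

# Run times in seconds for each machine on each program.
times = {
    "A": {"p1": Fraction(2), "p2": Fraction(12)},
    "B": {"p1": Fraction(4), "p2": Fraction(8)},
}
programs = ["p1", "p2"]

# Attempt 1: per-program ratio time(B)/time(A), then arithmetic mean.
attempt1 = sum(times["B"][p] / times["A"][p] for p in programs) / len(programs)

# Attempt 2: per-program ratio time(A)/time(B), then arithmetic mean.
attempt2 = sum(times["A"][p] / times["B"][p] for p in programs) / len(programs)

# Attempt 3: ratio of total run times, total(B)/total(A).
attempt3 = sum(times["B"].values()) / sum(times["A"].values())

print(attempt1, attempt2, attempt3)
```

The same four measurements give 4/3, 1, and 6/7, depending only on how they are normalized and combined.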

Which is Right?

Question:

How can we get three different answers?

Solution

Because, while they are all reasonable calculations…

…each answers a different question

We need to be more precise in understanding and posing these performance & metric questions

Arithmetic and Harmonic Mean

Average of the execution time that tracks total execution time is the arithmetic mean

$$\frac{1}{n} \sum_{i=1}^{n} \text{Time}_i$$

This is the defn for “average” you are most familiar with

If performance is expressed as a rate, then the average that tracks total execution time is the harmonic mean

$$\frac{n}{\sum_{i=1}^{n} \frac{1}{\text{Rate}_i}}$$

This is a different defn for “average” you are prob. less familiar with

Problems with Arithmetic Mean

Applications do not have the same probability of being run

Longer programs weigh more heavily in the average

For example, two machines timed on two benchmarks

            Machine A           Machine B
Program 1   2 seconds (20%)     4 seconds (20%)
Program 2   12 seconds (80%)    8 seconds (80%)

If we do arithmetic mean, Program 2 “counts more” than Program 1

an improvement in Program 2 changes the average more than a proportional improvement in Program 1

But perhaps Program 2 is 4 times more likely to run than Program 1

Weighted Execution Time

Often, one runs some programs more often than others. Therefore, we should weight the more frequently used programs’ execution time

$$\sum_{i=1}^{n} \text{Weight}_i \times \text{Time}_i$$

Weighted Harmonic Mean

$$\frac{1}{\sum_{i=1}^{n} \frac{\text{Weight}_i}{\text{Rate}_i}}$$

Using a Weighted Sum (or weighted average)

            Machine A           Machine B
Program 1   2 seconds (20%)     4 seconds (20%)
Program 2   12 seconds (80%)    8 seconds (80%)
Total       10 seconds          7.2 seconds

Allows us to determine relative performance: 10/7.2 ≈ 1.39

--> Machine B is 1.39 times faster than Machine A
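The weighted totals for the two machines can be recomputed with a few lines of Python. This is a sketch; `weighted_time` is a hypothetical helper, and the weights are the 20% / 80% figures from the table.

```python
def weighted_time(times, weights):
    """Weighted execution time: sum of Weight_i x Time_i (weights sum to 1)."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(w * t for w, t in zip(weights, times))


weights = [0.2, 0.8]                              # Program 1, Program 2
machine_a = weighted_time([2.0, 12.0], weights)
machine_b = weighted_time([4.0, 8.0], weights)

print(machine_a, machine_b)
print(machine_a / machine_b)                      # relative performance of B over A
```

With these weights Program 2 dominates, so Machine B comes out ahead even though it is slower on Program 1.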

Quiz

E.g., 30 mph for first 10 miles, 90 mph for next 10 miles

What is the average speed?

Average speed = (30 + 90)/2 = 60 mph (Wrong!)

The correct answer:

Average speed = total distance / total time

= 20 / (10/30 + 10/90)

= 45 mph

For rates use Harmonic Mean!
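The quiz can be verified two ways: from total distance over total time, and with the standard-library `statistics.harmonic_mean`. A minimal Python sketch; `average_speed` is an illustrative helper name.

```python
from statistics import harmonic_mean


def average_speed(distances, speeds):
    """Total distance divided by total time over all legs of the trip."""
    total_distance = sum(distances)
    total_time = sum(d / s for d, s in zip(distances, speeds))
    return total_distance / total_time


# 30 mph for the first 10 miles, 90 mph for the next 10 miles.
print(average_speed([10, 10], [30, 90]))
print(harmonic_mean([30, 90]))
```

The two agree because the legs cover equal distances; with unequal distances the weighted harmonic mean would be needed instead.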


Another Solution

Normalize runtime of each program to a reference

            Machine A (ref)    Machine B
Program 1   2 seconds          4 seconds
Program 2   12 seconds         8 seconds
Total       10 seconds         7.2 seconds

            Machine A (norm to B)    Machine B (norm to A)
Program 1   0.5                      2.0
Program 2   1.5                      0.666
Average?    1.0                      1.333

So when we normalize A to B, and average, it looks like A & B are the same.

But when we normalize B to A, it looks like A is better!

Geometric Mean

Used for relative rate or performance numbers

$$\text{Relative\_Rate} = \frac{\text{Rate}}{\text{Rate}_{\text{ref}}} = \frac{\text{Time}_{\text{ref}}}{\text{Time}}$$

Geometric mean

$$\sqrt[n]{\prod_{i=1}^{n} \text{Relative\_Rate}_i} = \frac{\sqrt[n]{\prod_{i=1}^{n} \text{Rate}_i}}{\text{Rate}_{\text{ref}}}$$

Using Geometric Mean

                 Machine A (norm to B)    Machine B (norm to A)
Program 1        0.5                      2.0
Program 2        1.5                      0.666
Geometric Mean   0.866                    1.155

1.155 = 1/0.866!

Drawbacks:

• Does not predict runtime because it normalizes

• Each application now counts equally
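The reciprocal property of the geometric mean is easy to confirm. A short Python sketch using `math.prod` (Python 3.8 or later); the `geometric_mean` helper is written out here for clarity, and the run times are those of the two-machine example.

```python
import math


def geometric_mean(values):
    """n-th root of the product of n values."""
    return math.prod(values) ** (1.0 / len(values))


time_a = [2.0, 12.0]  # Machine A: Program 1, Program 2
time_b = [4.0, 8.0]   # Machine B: Program 1, Program 2

a_norm_to_b = [a / b for a, b in zip(time_a, time_b)]
b_norm_to_a = [b / a for a, b in zip(time_a, time_b)]

gm_a = geometric_mean(a_norm_to_b)
gm_b = geometric_mean(b_norm_to_a)

print(round(gm_a, 3), round(gm_b, 3), round(gm_a * gm_b, 3))
```

Whichever machine is chosen as the reference, the two geometric means are reciprocals, so the comparison no longer depends on the normalization.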

Well…..

Geometric mean of ratios is not proportional to total time

Arithmetic mean in example says machine B is 9.1 times faster

Geometric mean says they are equal

If we took total execution time, A and B are equal only if

program 1 is run 100 times more than program 2

Rule of thumb: Use AM for times, HM for rates, GM for ratios

Summary

Performance is important to measure

For architects comparing different deep mechanisms

For developers of software trying to optimize code, applications

For users, trying to decide which machine to use, or to buy

Performance metrics are subtle

Easy to mess up the “machine A is XXX times faster than machine B” numerical performance comparison

You need to know exactly what you are measuring: time, rate, throughput, CPI, cycles, etc.

You need to know how combining these to give aggregate numbers does different kinds of “distortions” to the individual numbers

No metric is perfect, so lots of emphasis on standard benchmarks today