
HG

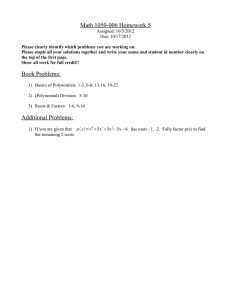

Second lecture - 22. Jan. 2014

1. Dynamic Multipliers.

Suppose $Y_t \sim$ AR(1), which is a dynamic model for the time series $\{Y_t\}$. The dynamics are specified by a stochastic difference equation:

$$Y_t = \varphi_0 + \varphi_1 Y_{t-1} + \varepsilon_t, \quad \text{where } \varepsilon_t \sim WN(0, \sigma^2) \tag{1}$$

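Remember:

i. If $|\varphi_1| < 1$, (1) has a causal stationary solution

$$Y_t = \frac{\varphi_0}{1-\varphi_1} + \varepsilon_t + \varphi_1 \varepsilon_{t-1} + \varphi_1^2 \varepsilon_{t-2} + \cdots = \frac{\varphi_0}{1-\varphi_1} + \sum_{j=0}^{\infty} \varphi_1^j \varepsilon_{t-j} \tag{2}$$

ii. If $|\varphi_1| > 1$, (1) has a non-causal stationary solution

$$Y_t = \frac{\varphi_0}{1-\varphi_1} + \eta_{t+1} + b_1 \eta_{t+2} + b_1^2 \eta_{t+3} + \cdots = \frac{\varphi_0}{1-\varphi_1} + \sum_{j=0}^{\infty} b_1^j \eta_{t+j+1}$$

where $b_1 = 1/\varphi_1$ and $\eta_{t+1} = -\varepsilon_{t+1}/\varphi_1 \sim WN(0, \sigma^2/\varphi_1^2)$. Any causal solution is non-stationary and explosive.

iii. If $|\varphi_1| = 1$, (1) has no stationary solution. Its solution is a random walk plus (if $\varphi_0 \neq 0$) a deterministic trend.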

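A general stationary solution for a dynamically modelled univariate time series $\{Y_t\}$, with only one white noise error term $\varepsilon_t$, has the form

$$Y_t = \mu + Z_t, \quad \text{where } Z_t = \sum_{j=-\infty}^{\infty} \psi_j \varepsilon_{t-j} = \cdots + \psi_{-2}\varepsilon_{t+2} + \psi_{-1}\varepsilon_{t+1} + \psi_0 \varepsilon_t + \psi_1 \varepsilon_{t-1} + \psi_2 \varepsilon_{t-2} + \cdots \tag{3}$$

Here $\varepsilon_t \sim WN(0, \sigma^2)$, and $\{\psi_j\}_{j=-\infty}^{\infty}$ is a sequence of numbers satisfying

$$\sum_{j=-\infty}^{\infty} |\psi_j| < \infty \tag{4}$$

Condition (4) ensures two things. First, $Z_t$ is a meaningful random variable with expectation 0 (so $\mu = E(Y_t)$). Second, $Z_t$ is covariance stationary with autocovariance function

$$\gamma(h) = \gamma(-h) = \sigma^2 \sum_{j=-\infty}^{\infty} \psi_j \psi_{j+h} \tag{5}$$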

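A solution is causal (the case we will concentrate on) when $\psi_{-1}, \psi_{-2}, \ldots$ are all 0, so that

$$Y_t = \mu + Z_t, \quad \text{where } Z_t = \sum_{j=0}^{\infty} \psi_j \varepsilon_{t-j} = \psi_0 \varepsilon_t + \psi_1 \varepsilon_{t-1} + \psi_2 \varepsilon_{t-2} + \cdots \tag{6}$$

Here $Z_t$ is covariance stationary with $E(Z_t) = 0$ and

$$\gamma(h) = \gamma(-h) = \sigma^2 \sum_{j=0}^{\infty} \psi_j \psi_{j+h} \tag{7}$$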

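E.g., in the (stable) AR(1) case, $\psi_j = \varphi_1^j$ (with $\psi_0 = 1$ and $|\varphi_1| < 1$). The covariance function for $Z_t$ (and for $Y_t$ as well) is therefore

$$\gamma(h) = \gamma(-h) = \sigma^2 \sum_{j=0}^{\infty} \varphi_1^j \varphi_1^{j+h} = \sigma^2 \varphi_1^h \sum_{j=0}^{\infty} \varphi_1^{2j} = \sigma^2 \varphi_1^h \left(1 + \varphi_1^2 + (\varphi_1^2)^2 + (\varphi_1^2)^3 + \cdots\right) = \frac{\sigma^2 \varphi_1^h}{1 - \varphi_1^2}, \quad h \ge 0$$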
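A quick numerical sanity check of this closed form follows. The sketch truncates the infinite sum in (7) with $\psi_j = \varphi_1^j$ and compares the result with $\sigma^2\varphi_1^h/(1-\varphi_1^2)$. The function names, the parameter values and the truncation length are illustrative choices, not part of the lecture.

```python
def gamma_truncated(phi1, sigma2, h, n_terms=500):
    """Approximate gamma(h) = sigma^2 * sum_{j>=0} psi_j * psi_{j+h}, h >= 0,
    for the causal AR(1) filter psi_j = phi1**j, keeping n_terms terms."""
    return sigma2 * sum(phi1**j * phi1**(j + h) for j in range(n_terms))


def gamma_closed_form(phi1, sigma2, h):
    """Closed form sigma^2 * phi1^|h| / (1 - phi1^2); requires |phi1| < 1."""
    if abs(phi1) >= 1:
        raise ValueError("the closed form needs |phi1| < 1")
    return sigma2 * phi1**abs(h) / (1 - phi1**2)


for h in range(4):
    print(h, gamma_truncated(0.8, 2.0, h), gamma_closed_form(0.8, 2.0, h))
```

With $\varphi_1 = 0.8$ the neglected tail is of order $0.8^{1000}$, so the two columns agree to many decimals.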

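Interpretation of the $\psi_j$'s

The $\psi_j$'s in the linear process solution (6) are called the dynamic multipliers of the time series $\{Y_t\}$. They measure the effect of a unit change in $\varepsilon_t$ on $\{Y_{t+j}\}_{j \ge 0}$ when the other $\varepsilon$'s do not change. Because the structure is linear, this effect is given by the derivative $\partial Y_{t+j}/\partial \varepsilon_t$:

$$\frac{\partial Y_{t+j}}{\partial \varepsilon_t} = \frac{\partial}{\partial \varepsilon_t}\left(\mu + \psi_0 \varepsilon_{t+j} + \psi_1 \varepsilon_{t+j-1} + \psi_2 \varepsilon_{t+j-2} + \cdots\right) = \psi_j, \quad j = 0, 1, 2, \ldots$$

The immediate effect on $Y_t$ is $\psi_0 = 1$ (usually).

The effect on $Y_{t+1}$ is $\psi_1$.

…

The effect on $Y_{t+j}$ is $\psi_j$, which $\to 0$ as $j \to \infty$ since $\sum_j |\psi_j| < \infty$.

The total effect on $\{Y_t\}$ of a unit change in $\varepsilon_t$ (i.e., “the long-run effect”) is then

$$\sum_{j=0}^{\infty} \frac{\partial Y_{t+j}}{\partial \varepsilon_t} = \sum_{j=0}^{\infty} \psi_j \tag{8}$$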

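In the stable AR(1) case, the long-run effect becomes

$$\sum_{j=0}^{\infty} \frac{\partial Y_{t+j}}{\partial \varepsilon_t} = \sum_{j=0}^{\infty} \varphi_1^j = \frac{1}{1-\varphi_1}$$

When $Y_t$ is measured in money terms, one may instead be interested in the discounted long-run effect (discount factor $\beta$), defined by

$$\sum_{j=0}^{\infty} \beta^j \frac{\partial Y_{t+j}}{\partial \varepsilon_t} = \sum_{j=0}^{\infty} \beta^j \psi_j \overset{\text{stable AR(1)}}{=} \sum_{j=0}^{\infty} \beta^j \varphi_1^j = \frac{1}{1 - \beta\varphi_1}$$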

Similar simple expressions can be derived in the general stable AR(p) case.
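The long-run and discounted long-run effects can be checked numerically by summing a truncated list of multipliers. The sketch below assumes the stable AR(1) case, $\psi_j = \varphi_1^j$. The function names and the values $\varphi_1 = 0.6$, $\beta = 0.95$ are illustrative assumptions.

```python
def ar1_multipliers(phi1, n):
    """Dynamic multipliers psi_j = phi1**j, j = 0, ..., n-1, of a stable AR(1)."""
    return [phi1**j for j in range(n)]


def long_run_effect(psi):
    """(Truncated) long-run effect: psi_0 + psi_1 + ..."""
    return sum(psi)


def discounted_long_run_effect(psi, beta):
    """(Truncated) discounted long-run effect: sum_j beta**j * psi_j."""
    return sum(beta**j * p for j, p in enumerate(psi))


phi1, beta = 0.6, 0.95
psi = ar1_multipliers(phi1, 400)
print(long_run_effect(psi), 1 / (1 - phi1))                          # both about 2.5
print(discounted_long_run_effect(psi, beta), 1 / (1 - beta * phi1))  # both about 2.3256
```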

2. Lag Polynomials and Linear Filters

We now introduce the lag operator, $L$, which works on a time series $\{Y_t\}$ and is defined by $LY_t \overset{\text{def}}{=} Y_{t-1}$. Similarly, we define $L^2 = L \cdot L$ by $L^2 Y_t = L(LY_t) = L(Y_{t-1}) = Y_{t-2}$. In general, $L^j Y_t = Y_{t-j}$, $j = 0, 1, 2, \ldots$ (which implicitly defines $L^0 Y_t = Y_t$).

We extend this definition to the forward shift operator, $L^{-1}$, defined by $L^{-1} Y_t = Y_{t+1}$. Then $L^{-1} L Y_t = L^{-1}(LY_t) = L^{-1} Y_{t-1} = Y_t$, and similarly $L L^{-1} Y_t = Y_t$. Hence, $L L^{-1} = L^{-1} L = L^0$.

As above, we get $L^{-j} Y_t = Y_{t+j}$, $j = 0, 1, 2, \ldots$

Using $L$ as the basic operator, we can build up quite general operators. For example, we had the first difference operator, $\Delta$, defined by $\Delta Y_t = Y_t - Y_{t-1} = Y_t - LY_t$. By definition, we write the last expression as $(1 - L)Y_t$, where we regard $1 - L$ as a simple lag polynomial. We identify this with $\Delta$, i.e., $\Delta = 1 - L$.

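The second difference, as a lag polynomial, becomes

$$\Delta^2 Y_t \overset{\text{def}}{=} \Delta(\Delta Y_t) = \Delta(Y_t - Y_{t-1}) = Y_t - Y_{t-1} - (Y_{t-1} - Y_{t-2}) = Y_t - 2Y_{t-1} + Y_{t-2} = (1 - 2L + L^2) Y_t$$

We get the same answer if we multiply out $\Delta^2$ as if $L$ were just a number:

$$\Delta^2 = (1 - L)^2 = 1 - 2L + L^2$$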

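We now define a general lag polynomial of order $p$ as the operator

$$\alpha(L) = \alpha_0 + \alpha_1 L + \alpha_2 L^2 + \cdots + \alpha_p L^p$$

By definition, it works on any series $\{w_t\}$ (stochastic or not) as

$$\alpha(L) w_t = (\alpha_0 + \alpha_1 L + \alpha_2 L^2 + \cdots + \alpha_p L^p) w_t \overset{\text{def}}{=} \alpha_0 w_t + \alpha_1 L w_t + \alpha_2 L^2 w_t + \cdots + \alpha_p L^p w_t = \alpha_0 w_t + \alpha_1 w_{t-1} + \alpha_2 w_{t-2} + \cdots + \alpha_p w_{t-p}$$

The operator $\alpha(L)$ corresponds to an ordinary polynomial (the companion polynomial), $\alpha(z) = \alpha_0 + \alpha_1 z + \alpha_2 z^2 + \cdots + \alpha_p z^p$, where $z$ is a numeric variable.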

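(9) Some much-used properties:

$\alpha(L)(u_t + w_t) = \alpha(L)u_t + \alpha(L)w_t$ (this follows directly from the definition; just write it out).

If $w_t = c$ is constant for all $t$, clearly $Lw_t = Lc = c$ and $L^j w_t = L^j c = c$, and

$$\alpha(L)c = \alpha_0 c + \alpha_1 Lc + \alpha_2 L^2 c + \cdots + \alpha_p L^p c = (\alpha_0 + \alpha_1 + \alpha_2 + \cdots + \alpha_p)c = \alpha(1)c$$

where $\alpha(1) = \alpha_0 + \alpha_1 + \cdots + \alpha_p$ is obtained by replacing $z$ by 1 in the companion polynomial.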

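Let $\alpha(L) = \alpha_0 + \alpha_1 L + \cdots + \alpha_p L^p$ and $\beta(L) = \beta_0 + \beta_1 L + \cdots + \beta_q L^q$ be two lag polynomials. Their product $\alpha(L)\beta(L)$ is commutative (the order of multiplication does not matter), and it is a lag polynomial of order $p+q$:

$$\alpha(L)\beta(L) = \beta(L)\alpha(L) = \delta_0 + \delta_1 L + \cdots + \delta_{p+q} L^{p+q}$$

The coefficients $\delta_j$ can be found by multiplying the corresponding companion polynomials in the usual manner:

$$\alpha(z)\beta(z) = \beta(z)\alpha(z) = \delta_0 + \delta_1 z + \cdots + \delta_{p+q} z^{p+q}$$

(This is straightforward, although somewhat messy, to prove.)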
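In computational terms, multiplying companion polynomials is a convolution of coefficient sequences. The sketch below stores a lag polynomial as a list of coefficients, lowest power of $L$ first. It multiplies two such polynomials and applies one to a finite data series. The function names are illustrative. The example reproduces $\Delta^2 = (1-L)^2 = 1 - 2L + L^2$ and shows that applying $\Delta$ twice gives the same result as applying $1 - 2L + L^2$ once.

```python
def poly_mul(a, b):
    """Coefficients of a(z) * b(z); a[j] is the coefficient of z**j."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out


def apply_lag_poly(a, w):
    """Return a(L) w_t = a[0] w_t + a[1] w_{t-1} + ... + a[p] w_{t-p} for every t
    where all needed lags are inside the sample, i.e. t = p, ..., len(w) - 1."""
    p = len(a) - 1
    return [sum(a[j] * w[t - j] for j in range(p + 1)) for t in range(p, len(w))]


delta = [1, -1]                       # Delta = 1 - L
print(poly_mul(delta, delta))         # [1.0, -2.0, 1.0], i.e. 1 - 2L + L^2

w = [t**2 for t in range(6)]          # 0, 1, 4, 9, 16, 25
print(apply_lag_poly([1, -2, 1], w))  # [2, 2, 2, 2]
print(apply_lag_poly(delta, apply_lag_poly(delta, w)))  # [2, 2, 2, 2]
```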

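Unfortunately, we need to go one step further and define lag “polynomials” of infinite order. From (6), a causal stationary solution for $\{Y_t\}$, expressed by $L$, has the form

$$Y_t = \mu + \psi_0 \varepsilon_t + \psi_1 \varepsilon_{t-1} + \psi_2 \varepsilon_{t-2} + \cdots = \mu + \sum_{j=0}^{\infty} \psi_j \varepsilon_{t-j} = \mu + \psi_0 \varepsilon_t + \psi_1 L \varepsilon_t + \psi_2 L^2 \varepsilon_t + \cdots \overset{\text{def}}{=} \mu + \left(\sum_{j=0}^{\infty} \psi_j L^j\right) \varepsilon_t = \mu + \psi(L)\varepsilon_t$$

Here $\psi(L) = \sum_{j=0}^{\infty} \psi_j L^j$, with $\sum_{j=0}^{\infty} |\psi_j| < \infty$, is thus an “infinite-order lag polynomial”. Its more common name is a linear (time-homogeneous) filter, a basic tool in time series analysis.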

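A special case is the MA(q) process

$$Y_t = \mu + \sum_{j=0}^{\infty} \psi_j \varepsilon_{t-j} = \mu + \varepsilon_t + \theta_1 \varepsilon_{t-1} + \theta_2 \varepsilon_{t-2} + \cdots + \theta_q \varepsilon_{t-q} = \mu + \theta(L)\varepsilon_t$$

where $\psi_j = 0$ for $j > q$, $\psi_j = \theta_j$ for $j \le q$, $\theta_0 = 1$, and

$$\theta(L) = 1 + \theta_1 L + \theta_2 L^2 + \cdots + \theta_q L^q$$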

For such linear filters, strong mathematical results can be proven:

Theorem 1. Let $\psi(L)$ be a linear filter satisfying $\sum_j |\psi_j| < \infty$, and let $\{u_t\}$ be any covariance stationary time series. Then the filtered series

$$Y_t = \psi(L)u_t = \psi_0 u_t + \psi_1 u_{t-1} + \psi_2 u_{t-2} + \cdots = \sum_j \psi_j u_{t-j}$$

is well defined as a sequence of random variables, and the series $\{Y_t\}$ is covariance stationary.

(The proof requires some Hilbert space theory and is skipped here. Formulas for the autocovariance can be found in other textbooks, e.g., in Brockwell and Davis, referred to below.)

The three properties in (9) also remain valid. First we define the companion infinite-order “polynomial”, called a power series, where $z$ is an ordinary numeric variable:

$$\psi(z) = \psi_0 + \psi_1 z + \psi_2 z^2 + \cdots = \sum_{j=0}^{\infty} \psi_j z^j$$

Corresponding to (9), we then have the following result. It is formulated for the causal linear filters that we need here, but it is also valid for more general, non-causal filters.

Theorem 2. If $\psi(L)$ is a (causal) linear filter satisfying $\sum_{j=0}^{\infty} |\psi_j| < \infty$, the following is true:

i. $\psi(L)(u_t + w_t) = \psi(L)u_t + \psi(L)w_t$ (i.e., $\psi(L)$ is a linear operator).

ii. If $w_t = c$ is constant for all $t$,

$$\psi(L)c = \psi_0 c + \psi_1 Lc + \psi_2 L^2 c + \cdots = (\psi_0 + \psi_1 + \psi_2 + \cdots)c = \psi(1)c$$

where $\psi(1) = \sum_{j=0}^{\infty} \psi_j$ is obtained by replacing $z$ by 1 in the companion power series.

iii. Let $\alpha(L) = \alpha_0 + \alpha_1 L + \alpha_2 L^2 + \cdots$ and $\beta(L) = \beta_0 + \beta_1 L + \beta_2 L^2 + \cdots$ be two linear filters, each with a finite sum of absolute values of coefficients. Then the product $\alpha(L)\beta(L)$ is commutative and defines a linear filter

$$\alpha(L)\beta(L) = \beta(L)\alpha(L) = \delta_0 + \delta_1 L + \delta_2 L^2 + \cdots, \quad \text{where } \sum_j |\delta_j| < \infty$$

The coefficients $\delta_j$ can be found by multiplying the corresponding companion power series in the usual manner:

$$\alpha(z)\beta(z) = \beta(z)\alpha(z) = \delta_0 + \delta_1 z + \delta_2 z^2 + \cdots$$

(The proof of i. and ii. is straightforward, as in (9). The proof of iii. requires some theory of complex power series that we skip here. Formal proofs can be found, e.g., in P.J. Brockwell and R.A. Davis, “Time Series: Theory and Methods”, Springer-Verlag 1987 or later.)

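Application.

We are here primarily concerned with (possible) causal solutions of

(10) AR(p) with specification

$$Y_t = \varphi_0 + \varphi_1 Y_{t-1} + \cdots + \varphi_p Y_{t-p} + \varepsilon_t, \quad \text{where } \varepsilon_t \sim WN(0, \sigma^2)$$

and

(11) ARMA(p,q) with specification

$$Y_t = \varphi_0 + \varphi_1 Y_{t-1} + \cdots + \varphi_p Y_{t-p} + \varepsilon_t + \theta_1 \varepsilon_{t-1} + \cdots + \theta_q \varepsilon_{t-q}, \quad \text{where } \varepsilon_t \sim WN(0, \sigma^2)$$

As a first step, we write (10) and (11) as $Y_t - \varphi_1 Y_{t-1} - \cdots - \varphi_p Y_{t-p} = w_t$, or, with the corresponding lag polynomial,

$$\varphi(L) Y_t = w_t, \quad \text{where } \varphi(L) = 1 - \varphi_1 L - \varphi_2 L^2 - \cdots - \varphi_p L^p \tag{12}$$

Here $w_t = \varphi_0 + \varepsilon_t$ in the AR(p) case, and $w_t = \varphi_0 + \varepsilon_t + \theta_1 \varepsilon_{t-1} + \cdots + \theta_q \varepsilon_{t-q} = \varphi_0 + \theta(L)\varepsilon_t$ in the ARMA(p,q) case.

The idea is to try to construct a (causal) linear filter, $\psi(L) = \psi_0 + \psi_1 L + \psi_2 L^2 + \cdots$, such that

$$\psi(L)\varphi(L) = 1 \tag{13}$$

If we succeed, we can simply multiply both sides of (12) by $\psi(L)$ to obtain our solution:

$$\psi(L)\varphi(L) Y_t = 1 \cdot Y_t = Y_t = \psi(L) w_t$$

Using Theorem 2, this gives in the AR(p) case

$$Y_t = \psi(L)(\varphi_0 + \varepsilon_t) = \psi(L)\varphi_0 + \psi(L)\varepsilon_t = \psi(1)\varphi_0 + \psi(L)\varepsilon_t$$

Hence, solving (13) directly gives us the linear filter solution.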

The ARMA(p,q) case is a little more complicated. Multiplying both sides of (12) by $\psi(L)$, we get

$$Y_t = \psi(L)\left(\varphi_0 + \theta(L)\varepsilon_t\right) = \psi(1)\varphi_0 + \psi(L)\theta(L)\varepsilon_t$$

In this case the job is not yet completely done. We still need to write the product $\psi(L)\theta(L) = (1 + \psi_1 L + \psi_2 L^2 + \psi_3 L^3 + \cdots)(1 + \theta_1 L + \cdots + \theta_q L^q)$ in the form of a linear filter, $\xi(L) = 1 + \xi_1 L + \xi_2 L^2 + \xi_3 L^3 + \cdots$. This is best done by solving a difference equation, as described in the summary at the end, before the appendix.

Note, however, that in both cases we find $E(Y_t) = \psi(1)\varphi_0 = \varphi_0 \sum_{j=0}^{\infty} \psi_j$.

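Now to (13), which is relevant for the AR(p) case. To simplify the notation, we look at the case $p = 2$ (the general case is quite similar):

$$\begin{aligned}
1 &\overset{(13)}{=} (1 - \varphi_1 L - \varphi_2 L^2)(\psi_0 + \psi_1 L + \psi_2 L^2 + \cdots) \\
&= \psi_0 + \psi_1 L + \psi_2 L^2 + \psi_3 L^3 + \psi_4 L^4 + \cdots \\
&\quad - \varphi_1 \psi_0 L - \varphi_1 \psi_1 L^2 - \varphi_1 \psi_2 L^3 - \varphi_1 \psi_3 L^4 - \cdots \\
&\quad - \varphi_2 \psi_0 L^2 - \varphi_2 \psi_1 L^3 - \varphi_2 \psi_2 L^4 - \cdots
\end{aligned}$$

Collecting terms, we get

$$1 = \psi_0 + (\psi_1 - \varphi_1 \psi_0) L + (\psi_2 - \varphi_1 \psi_1 - \varphi_2 \psi_0) L^2 + \cdots + (\psi_j - \varphi_1 \psi_{j-1} - \varphi_2 \psi_{j-2}) L^j + \cdots$$

For this equality to hold, we must have $\psi_0 = 1$, and the coefficient of every term containing some $L^k$, $k = 1, 2, \ldots$, must equal 0. Hence

$$\psi_0 = 1, \quad \psi_1 = \varphi_1, \quad \text{and for all } j \ge 2: \quad \psi_j - \varphi_1 \psi_{j-1} - \varphi_2 \psi_{j-2} = 0.$$

In other words, the sequence $\{\psi_t\}$ (for $t \ge 2$) must satisfy the same difference equation as $\{Y_t\}$ in (12), except with 0 instead of $w_t$ on the right side. Difference equations with 0 on the right side are called homogeneous.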
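The coefficient matching above translates directly into a recursion. The sketch below computes $\psi_0, \psi_1, \ldots$ for a general AR(p) from $\psi_0 = 1$ and $\psi_j = \varphi_1\psi_{j-1} + \cdots + \varphi_p\psi_{j-p}$, treating $\psi_i = 0$ for $i < 0$. The helper is hypothetical, not from the lecture. For $p = 2$ it gives exactly $\psi_1 = \varphi_1$ and the homogeneous equation for $j \ge 2$.

```python
def ar_psi_weights(phi, n):
    """First n coefficients of psi(L) = 1 / phi(L) for
    phi(L) = 1 - phi[0] L - phi[1] L^2 - ... (phi[k-1] holds phi_k).

    psi_0 = 1 and psi_j = phi_1 psi_{j-1} + ... + phi_p psi_{j-p}, where terms
    with a negative index are left out (psi_i = 0 for i < 0)."""
    psi = [1.0]
    for j in range(1, n):
        psi.append(sum(phi[k - 1] * psi[j - k] for k in range(1, min(j, len(phi)) + 1)))
    return psi


# AR(2) with phi_1 = 0.5, phi_2 = 0.2: psi_1 = 0.5, psi_2 = 0.5 * 0.5 + 0.2 = 0.45
print(ar_psi_weights([0.5, 0.2], 5))
```

Multiplying the result back by $1 - 0.5z - 0.2z^2$ should give $1, 0, 0, \ldots$ up to rounding, which is exactly condition (13).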

So the task has been reduced to finding the general solution of the homogeneous difference equation

$$\psi_t - \varphi_1 \psi_{t-1} - \varphi_2 \psi_{t-2} = 0 \quad (\text{or } \varphi(L)\psi_t = 0) \quad \text{for } t \ge 2 \tag{14}$$

Note that the autocovariance function $\{\gamma(h)\}$ also satisfies the same difference equation for $h \ge 2$ (see next lecture or Hamilton).

We find the general solution (see, e.g., Sydsæter II) from the companion polynomial and its roots, i.e., the solutions $z_1, z_2$ of

$$1 - \varphi_1 z - \varphi_2 z^2 = 0 \tag{15}$$

Hamilton works with the roots $r_1, r_2$ of the “reversed” version of the polynomial:

$$x^2 - \varphi_1 x - \varphi_2 = (x - r_1)(x - r_2) = 0 \tag{16}$$

Clearly, we must have $r_i = 1/z_i$, $i = 1, 2$. [Putting $z = 1/x$ in (15) gives $1 - \varphi_1 z - \varphi_2 z^2 = \frac{1}{x^2}(x^2 - \varphi_1 x - \varphi_2)$. This is 0 for $x = r_1$ or $r_2$, i.e., for $z = 1/r_1$ or $1/r_2$, implying $z_i = 1/r_i$, $i = 1, 2$.]

Factorizing (15), we get (writing $z = 1/x$)

$$1 - \varphi_1 z - \varphi_2 z^2 = \frac{1}{x^2}(x^2 - \varphi_1 x - \varphi_2) = \frac{1}{x^2}(x - r_1)(x - r_2) = z^2 \left(\frac{1}{z} - r_1\right)\left(\frac{1}{z} - r_2\right) = (1 - r_1 z)(1 - r_2 z)$$

Hence, the corresponding lag polynomial can be factorized as

$$1 - \varphi_1 L - \varphi_2 L^2 = (1 - r_1 L)(1 - r_2 L) = \left(1 - \frac{1}{z_1} L\right)\left(1 - \frac{1}{z_2} L\right)$$

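The case of different roots. If $z_1 \neq z_2$ (i.e., $r_1 \neq r_2$), the general solution of (14) is

$$\psi_t = c_1 \left(\frac{1}{z_1}\right)^t + c_2 \left(\frac{1}{z_2}\right)^t = c_1 r_1^t + c_2 r_2^t, \quad \text{where } c_1, c_2 \text{ are arbitrary constants} \tag{17}$$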

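To see this, we substitute into (14) (for $t \ge 2$):

$$\begin{aligned}
\psi_t - \varphi_1 \psi_{t-1} - \varphi_2 \psi_{t-2} &= c_1 r_1^t + c_2 r_2^t - \varphi_1 (c_1 r_1^{t-1} + c_2 r_2^{t-1}) - \varphi_2 (c_1 r_1^{t-2} + c_2 r_2^{t-2}) \\
&= c_1 (r_1^t - \varphi_1 r_1^{t-1} - \varphi_2 r_1^{t-2}) + c_2 (r_2^t - \varphi_1 r_2^{t-1} - \varphi_2 r_2^{t-2}) \\
&= c_1 r_1^{t-2} (r_1^2 - \varphi_1 r_1 - \varphi_2) + c_2 r_2^{t-2} (r_2^2 - \varphi_1 r_2 - \varphi_2) = 0
\end{aligned}$$

The last expression is 0 because $r_1$ and $r_2$ are roots of (16).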

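Next, we determine the free constants $c_1, c_2$ so that our two initial conditions, $\psi_0 = 1$ and $\psi_1 = \varphi_1$, are fulfilled, i.e.,

$$\begin{aligned}
1 &= \psi_0 = c_1 r_1^0 + c_2 r_2^0 = c_1 + c_2 \\
\varphi_1 &= \psi_1 = c_1 r_1^1 + c_2 r_2^1 = c_1 r_1 + c_2 r_2
\end{aligned}
\quad \Longrightarrow \quad
c_1 = \frac{\varphi_1 - r_2}{r_1 - r_2}, \quad c_2 = \frac{r_1 - \varphi_1}{r_1 - r_2} \tag{18}$$

With these values of $c_1, c_2$, the solution is

$$\psi_t = c_1 \left(\frac{1}{z_1}\right)^t + c_2 \left(\frac{1}{z_2}\right)^t = c_1 r_1^t + c_2 r_2^t, \quad t = 0, 1, 2, 3, \ldots \tag{19}$$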
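To see that the closed form (19) reproduces the recursion from (13), the sketch below computes $\psi_t$ both ways for a few AR(2) parameter pairs with distinct roots. It uses Python's `cmath`, so complex roots (see the appendix) are handled too. The function names and parameter values are illustrative.

```python
import cmath


def psi_closed_form(phi1, phi2, n):
    """psi_t = c1 r1^t + c2 r2^t, t = 0..n-1, as in (18)-(19); assumes the roots
    r1, r2 of x^2 - phi1 x - phi2 are distinct (phi1^2 + 4 phi2 != 0)."""
    disc = cmath.sqrt(phi1**2 + 4 * phi2)
    r1, r2 = (phi1 + disc) / 2, (phi1 - disc) / 2
    c1 = (phi1 - r2) / (r1 - r2)
    c2 = (r1 - phi1) / (r1 - r2)
    return [c1 * r1**t + c2 * r2**t for t in range(n)]


def psi_recursive(phi1, phi2, n):
    """psi_0 = 1, psi_1 = phi1, psi_t = phi1 psi_{t-1} + phi2 psi_{t-2} (t >= 2)."""
    psi = [1.0, phi1]
    while len(psi) < n:
        psi.append(phi1 * psi[-1] + phi2 * psi[-2])
    return psi[:n]


for phi1, phi2 in [(0.5, 0.2), (-0.3, 0.1), (1.0, -0.5)]:   # last pair: complex roots
    closed = psi_closed_form(phi1, phi2, 20)
    rec = psi_recursive(phi1, phi2, 20)
    print(phi1, phi2, max(abs(a - b) for a, b in zip(closed, rec)))   # tiny
```

For complex roots the closed form returns complex numbers whose imaginary parts are zero up to rounding, as they must be, since the $\psi_t$ are real.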

From this we get the stability condition. It is the same as the condition that the solution is a causal linear filter (see the note on stability below).

The solution filter $\psi(L) = \psi_0 + \psi_1 L + \psi_2 L^2 + \cdots$ is a proper filter if

$$\sum_{j=0}^{\infty} |\psi_j| = \sum_{j=0}^{\infty} |c_1 r_1^j + c_2 r_2^j| < \infty$$

The necessary and sufficient condition for this is that both roots are less than 1 in absolute value ($|r_i| < 1$); otherwise the sum is $\infty$. The roots are sometimes complex (see appendix). The condition is therefore the same as saying that the roots of the reversed companion polynomial must lie inside the unit circle. Equivalently, the roots of the companion polynomial must lie outside the unit circle, $|z_i| > 1$.

Note on stability. It is reasonable to call this root condition a stability condition, for two reasons. First, it ensures that a causal stationary solution, $Y_t = \psi(L)w_t$, exists. Second, suppose $\{Y_t\}$ starts from $p$ arbitrary (fixed) start values, $Y_0, Y_{-1}, Y_{-2}, \ldots, Y_{-(p-1)}$. An argument similar to the AR(1) case then shows that the resulting series is not necessarily stationary from the start, but it approaches the stationary solution as time $t$ increases. I.e., the stationary solution plays the role of an equilibrium state for $\{Y_t\}$. It is also possible to impose a special start condition: postulate that the start variables $Y_0, Y_{-1}, Y_{-2}, \ldots, Y_{-(p-1)}$ have the particular joint distribution determined by the infinite solution filter $\psi(L)$. Then $\{Y_t\}$ will be stationary from the start.
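The equilibrium interpretation can be illustrated by simulation. The sketch below iterates the AR(2) from the numerical example later in this lecture, $Y_t = 1 - 0.3Y_{t-1} + 0.1Y_{t-2} + \varepsilon_t$. It runs the model twice with the same simulated shocks but very different start values. The Gaussian shocks, the seed and the start values are arbitrary assumptions. The difference between the two paths solves the homogeneous equation, so it dies out when the roots are inside the unit circle.

```python
import random


def simulate_ar2(phi0, phi1, phi2, start, eps):
    """Iterate Y_t = phi0 + phi1 Y_{t-1} + phi2 Y_{t-2} + eps_t.

    start = (Y_{-1}, Y_0) are the fixed start values; returns [Y_1, Y_2, ...],
    one value per shock in eps."""
    y_lag2, y_lag1 = start
    path = []
    for e in eps:
        y = phi0 + phi1 * y_lag1 + phi2 * y_lag2 + e
        path.append(y)
        y_lag2, y_lag1 = y_lag1, y
    return path


rng = random.Random(1)                        # fixed seed, illustrative only
eps = [rng.gauss(0.0, 1.0) for _ in range(200)]
a = simulate_ar2(1.0, -0.3, 0.1, (50.0, -40.0), eps)
b = simulate_ar2(1.0, -0.3, 0.1, (0.0, 0.0), eps)
for t in (0, 5, 10, 20, 50):
    print(t + 1, abs(a[t] - b[t]))            # shrinks roughly like 0.5**t
```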

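The case of equal roots ($r_1 = r_2 = r$) is similar, but the general solution of (14),

$$\psi_t - \varphi_1 \psi_{t-1} - \varphi_2 \psi_{t-2} = 0 \quad (\text{or } \varphi(L)\psi_t = 0) \quad \text{for } t \ge 2,$$

looks different:

$$\psi_t = (c_1 + c_2 t) r^t, \quad t = 0, 1, 2, \ldots, \quad c_1, c_2 \text{ arbitrary constants} \tag{20}$$

The constants are determined by the initial conditions $\psi_0 = 1$, $\psi_1 = \varphi_1$:

$$\begin{aligned}
1 &= \psi_0 = (c_1 + c_2 \cdot 0) r^0 = c_1 \\
\varphi_1 &= \psi_1 = (c_1 + c_2 \cdot 1) r^1
\end{aligned}
\quad \Longrightarrow \quad c_1 = 1, \quad c_2 = \frac{\varphi_1}{r} - 1$$

(Since $\varphi_1 = r_1 + r_2 = 2r$ here, this gives $c_2 = 1$.)

Here too, the condition for a causal linear filter solution and for stability is that the common root $r$ lies inside the unit circle ($|r| < 1$). Then, and only then, $\sum_{j=0}^{\infty} |\psi_j| < \infty$.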
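The equal-root case can be checked the same way. Choosing $\varphi_1 = 2r$ and $\varphi_2 = -r^2$ makes $x^2 - \varphi_1 x - \varphi_2 = (x - r)^2$. Then (20) with $c_1 = c_2 = 1$ should match the recursion. The sketch below compares the two and also checks that $\sum_j \psi_j = 1/\varphi(1) = 1/(1-r)^2$. The names and the value $r = 0.6$ are illustrative.

```python
def psi_equal_roots(r, n):
    """psi_t = (c1 + c2 t) r^t with c1 = 1, c2 = phi1/r - 1 = 1 (since phi1 = 2r)."""
    return [(1 + t) * r**t for t in range(n)]


def psi_recursive(phi1, phi2, n):
    """psi_0 = 1, psi_1 = phi1, psi_t = phi1 psi_{t-1} + phi2 psi_{t-2} (t >= 2)."""
    psi = [1.0, phi1]
    while len(psi) < n:
        psi.append(phi1 * psi[-1] + phi2 * psi[-2])
    return psi[:n]


r = 0.6
closed = psi_equal_roots(r, 200)
rec = psi_recursive(2 * r, -r**2, 200)
print(max(abs(a - b) for a, b in zip(closed, rec)))   # tiny (rounding only)
print(sum(closed), 1 / (1 - r) ** 2)                   # both about 6.25
```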

A numerical example.

Consider the following AR(2) specification

$$Y_t = 1 - 0.3 Y_{t-1} + 0.1 Y_{t-2} + \varepsilon_t, \quad \text{where } \varepsilon_t \sim WN(0, \sigma^2) \tag{*}$$

Does (*) have a causal stationary solution?

Is $\{Y_t\}$ stable?

Find the causal solution, the long-run effect, and $E(Y_t)$.

First write (*) in the form (12):

$$Y_t + 0.3 Y_{t-1} - 0.1 Y_{t-2} = 1 + \varepsilon_t = w_t$$

Lag polynomial: $\varphi(L) = 1 + 0.3L - 0.1L^2$ ($\varphi_0 = 1$, $\varphi_1 = -0.3$, $\varphi_2 = 0.1$)

Companion polynomial: $\varphi(z) = 1 + 0.3z - 0.1z^2$

Reversed companion polynomial: $h(x) = x^2 + 0.3x - 0.1$

Roots, i.e., solutions of $h(x) = 0$:

$$r_i = \frac{-0.3 \pm \sqrt{0.09 + 0.4}}{2} = \frac{-0.3 \pm 0.7}{2} = \begin{cases} 0.2 \\ -0.5 \end{cases}$$

Both roots are less than 1 in absolute value, so the answer to the first two questions is “Yes”. A causal stationary solution exists, and the system is stable (implying convergence to the stationary solution for $\{Y_t\}$ from any start value).

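The solution filter is

$$\psi(L) = \sum_{j=0}^{\infty} \psi_j L^j = \sum_{j=0}^{\infty} (c_1 r_1^j + c_2 r_2^j) L^j$$

where

$$c_1 = \frac{\varphi_1 - r_2}{r_1 - r_2} = \frac{-0.3 + 0.5}{0.7} = \frac{2}{7}, \quad c_2 = \frac{r_1 - \varphi_1}{r_1 - r_2} = \frac{0.2 + 0.3}{0.7} = \frac{5}{7}$$

so that

$$\psi(L) = \sum_{j=0}^{\infty} \left(\frac{2}{7}(0.2)^j + \frac{5}{7}(-0.5)^j\right) L^j$$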

We need to find the long-run value, $\psi(1) = \sum_j \psi_j$. We get it from (13), $\psi(L)\varphi(L) = 1$. Translated to ordinary polynomials and power series, this must be equivalent to $\psi(z)\varphi(z) = 1$. Since we are dealing with ordinary functions here, this implies that $\psi(z) = 1/\varphi(z)$. This holds for all $z$ with $|z| \le 1$, as long as the roots of $\varphi(z)$ are outside the unit circle.

In particular:

$$\psi(1) = \frac{1}{\varphi(1)} = \frac{1}{1 + 0.3 - 0.1} = \frac{1}{1.2} = \frac{5}{6}$$

As just after (13), we get

$$E(Y_t) = E\left(\psi(1)\varphi_0 + \psi(L)\varepsilon_t\right) = \psi(1)\varphi_0 = \frac{5}{6} \cdot 1 = \frac{5}{6}$$

(End of example)
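The computations in the example can be reproduced in a few lines. The sketch below computes the roots, the constants $c_1, c_2$, a truncated version of the filter, the long-run effect $\psi(1) = 1/\varphi(1)$ and $E(Y_t)$. It then checks that the truncated sum of the $\psi_j$ agrees with $5/6$. The variable names are illustrative.

```python
import cmath

phi0, phi1, phi2 = 1.0, -0.3, 0.1   # Y_t = 1 - 0.3 Y_{t-1} + 0.1 Y_{t-2} + eps_t

# Roots of the reversed companion polynomial h(x) = x^2 - phi1 x - phi2 = x^2 + 0.3x - 0.1
disc = cmath.sqrt(phi1**2 + 4 * phi2)
r1, r2 = (phi1 + disc) / 2, (phi1 - disc) / 2        # approx. 0.2 and -0.5
stable = abs(r1) < 1 and abs(r2) < 1                  # both inside the unit circle

# Constants from (18) and the first 200 filter coefficients from (19)
c1 = (phi1 - r2) / (r1 - r2)                          # approx. 2/7
c2 = (r1 - phi1) / (r1 - r2)                          # approx. 5/7
psi = [(c1 * r1**j + c2 * r2**j).real for j in range(200)]

long_run = 1 / (1 - phi1 - phi2)                      # psi(1) = 1/phi(1) = 1/1.2
mean = long_run * phi0                                # E(Y_t) = psi(1) * phi0

print(stable, r1.real, r2.real, c1.real, c2.real)
print(sum(psi), long_run, mean)                       # all about 0.8333 = 5/6
```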

Notice the last argument of the example. By Theorem 2, all coefficients in the product $\psi(L)\varphi(L)$ are the same as in the product $\psi(z)\varphi(z)$ of ordinary functions. Since the equation $\psi(z)\varphi(z) = 1$ has the solution $\psi(z) = 1/\varphi(z)$, it is natural to define a new operator, $\frac{1}{\varphi(L)}$. By definition, it simply means the filter $\psi(L)$. For example, applying this new operator to a constant $c$, we get

$$\frac{1}{\varphi(L)} c \overset{\text{def}}{=} \psi(L)c \overset{\text{Th. 2}}{=} \psi(1)c = \psi(z)c\big|_{z=1} = \frac{c}{\varphi(z)}\bigg|_{z=1} = \frac{c}{\varphi(1)}$$

With this (extremely widely used) trick of introducing $\frac{1}{\varphi(L)}$, we obtain an elegant expression (not to say algebra) for solutions, e.g., of an ARMA(p,q) as above:

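$$\varphi(L)Y_t = w_t = \varphi_0 + \theta(L)\varepsilon_t \quad \Longrightarrow \quad Y_t = \frac{1}{\varphi(L)}\left(\varphi_0 + \theta(L)\varepsilon_t\right) \overset{\text{Th. 2}}{=} \frac{\varphi_0}{\varphi(1)} + \frac{\theta(L)}{\varphi(L)}\varepsilon_t \tag{21}$$

So the solution in this case is to express

$$\frac{\theta(L)}{\varphi(L)} = \frac{1 + \theta_1 L + \cdots + \theta_q L^q}{\varphi(L)}$$

as a linear filter, $\xi(L) = 1 + \xi_1 L + \xi_2 L^2 + \xi_3 L^3 + \cdots$, i.e., solving

$$\varphi(L)\xi(L) = \theta(L) \tag{22}$$

This leads to the same homogeneous difference equation as for $\psi(L)$, but with different initial conditions (see the summary below).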

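In the AR(p) case:

$$Y_t = \frac{1}{\varphi(L)}(\varphi_0 + \varepsilon_t) = \frac{\varphi_0}{\varphi(1)} + \frac{1}{\varphi(L)}\varepsilon_t = \psi(1)\varphi_0 + \psi(L)\varepsilon_t, \quad \text{where } \psi(L) = \frac{1}{\varphi(L)} \tag{22}$$

For example, take the AR(1) case that we solved last time:

$$Y_t = \varphi_0 + \varphi_1 Y_{t-1} + \varepsilon_t \quad \text{or} \quad Y_t - \varphi_1 Y_{t-1} = \varphi_0 + \varepsilon_t, \qquad \varphi(L) = 1 - \varphi_1 L, \quad \varphi(z) = 1 - \varphi_1 z$$

This has only one root, $z = 1/\varphi_1$. To generate a causal filter, the root must lie outside the unit circle, i.e., $|\varphi_1| < 1$. Then

$$\psi(z) = \frac{1}{1 - \varphi_1 z} = \sum_{j=0}^{\infty} (\varphi_1 z)^j = \sum_{j=0}^{\infty} \varphi_1^j z^j \quad \Longrightarrow \quad \psi(L) = \frac{1}{\varphi(L)} = \sum_{j=0}^{\infty} \varphi_1^j L^j$$

so we get the solution

$$Y_t = \frac{\varphi_0}{\varphi(1)} + \frac{1}{\varphi(L)}\varepsilon_t = \frac{\varphi_0}{1 - \varphi_1} + \varepsilon_t + \varphi_1 \varepsilon_{t-1} + \varphi_1^2 \varepsilon_{t-2} + \cdots$$

as we found before.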

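For completeness' sake, a summary of the general causal filter solution is given below.

Summary of the causal filter solution for general p.

We want a causal solution of $Y_t - \varphi_1 Y_{t-1} - \cdots - \varphi_p Y_{t-p} = w_t$, or, with the corresponding lag polynomial,

$$\varphi(L) Y_t = w_t, \quad \text{where } \varphi(L) = 1 - \varphi_1 L - \varphi_2 L^2 - \cdots - \varphi_p L^p \tag{21}$$

Here $\varphi(L)$ is the lag polynomial and $\varphi(z) = 1 - \varphi_1 z - \varphi_2 z^2 - \cdots - \varphi_p z^p$ is the companion polynomial.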

The reversed companion polynomial is

$$h(x) = x^p - \varphi_1 x^{p-1} - \varphi_2 x^{p-2} - \cdots - \varphi_p \tag{22}$$

By the fundamental theorem of algebra (see appendix), it always has $p$ roots, $r_1, r_2, \ldots, r_p$. Some of them may be equal, and some may be complex. The number of times a root occurs is called the multiplicity of the root.

[For example, the polynomial $(x - 2)^3 (x + 0.5)^2 (x - 0.8)$ has degree 6 but only 3 different roots: 2, $-0.5$, and 0.8, with multiplicities 3, 2, and 1, respectively.]

So, in general, (22) has $s$ different roots, $r_1, r_2, \ldots, r_s$, with multiplicities $m_1, m_2, \ldots, m_s$, respectively, where $m_1 + m_2 + \cdots + m_s = p$.

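AR(p) case: The filter solution, $\psi(L) = \sum_{j=0}^{\infty} \psi_j L^j$, must satisfy (13), i.e.,

$$\psi(L)\varphi(L) = 1 \tag{23}$$

As above for $p = 2$, this leads to the homogeneous difference equation for $\{\psi_t\}$ for $t \ge p$,

$$\psi_t - \varphi_1 \psi_{t-1} - \cdots - \varphi_p \psi_{t-p} = 0 \quad \text{for } t \ge p, \quad \text{or } \varphi(L)\psi_t = 0 \tag{24}$$

The $p$ initial conditions for AR(p) are

$$\psi_0 = 1, \quad \psi_1 = \varphi_1, \quad \psi_2 = \varphi_1 \psi_1 + \varphi_2, \quad \psi_3 = \varphi_1 \psi_2 + \varphi_2 \psi_1 + \varphi_3, \quad \ldots, \quad \psi_{p-1} = \varphi_1 \psi_{p-2} + \varphi_2 \psi_{p-3} + \cdots + \varphi_{p-1} \tag{25}$$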

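The ARMA(p,q) case: The solution is

$$Y_t = \frac{1}{\varphi(L)}\left(\varphi_0 + \theta(L)\varepsilon_t\right) = \frac{\varphi_0}{\varphi(1)} + \frac{\theta(L)}{\varphi(L)}\varepsilon_t = \psi(1)\varphi_0 + \xi(L)\varepsilon_t$$

where $\xi(L) = \theta(L)/\varphi(L)$ satisfies

$$\varphi(L)\xi(L) = \theta(L) \tag{26}$$

This leads to the same homogeneous difference equation as for $\{\psi_t\}$,

$$\xi_t - \varphi_1 \xi_{t-1} - \cdots - \varphi_p \xi_{t-p} = 0 \quad \text{for } t \ge \max(p, q+1) \tag{27}$$

with the following initial conditions for $t < \max(p, q+1)$ (using $\varphi_j = 0$ for $j > p$ and $\theta_j = 0$ for $j > q$):

$$\begin{aligned}
\xi_0 &= 1 \\
\xi_1 &= \varphi_1 \xi_0 + \theta_1 \\
\xi_2 &= \varphi_1 \xi_1 + \varphi_2 \xi_0 + \theta_2 \\
\xi_3 &= \varphi_1 \xi_2 + \varphi_2 \xi_1 + \varphi_3 \xi_0 + \theta_3 \\
&\;\;\vdots
\end{aligned} \tag{28}$$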
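The recursion behind (26)-(28) is easy to code. The sketch below computes $\xi_0, \xi_1, \ldots$ from $\xi_j = \varphi_1\xi_{j-1} + \cdots + \varphi_p\xi_{j-p} + \theta_j$, with $\xi_0 = 1$, $\xi_i = 0$ for $i < 0$ and $\theta_j = 0$ for $j > q$. The helper and its argument conventions are hypothetical. This single formula covers both the initial conditions (28) and the homogeneous equation (27).

```python
def arma_xi_weights(phi, theta, n):
    """First n coefficients of xi(L) = theta(L) / phi(L), where
    phi(L) = 1 - phi[0] L - ... - phi[p-1] L^p and
    theta(L) = 1 + theta[0] L + ... + theta[q-1] L^q."""
    xi = []
    for j in range(n):
        # theta_j, with theta_0 = 1 and theta_j = 0 for j > q
        th = 1.0 if j == 0 else (theta[j - 1] if j <= len(theta) else 0.0)
        # phi_1 xi_{j-1} + ... + phi_p xi_{j-p}, skipping negative indices
        ar = sum(phi[k - 1] * xi[j - k] for k in range(1, min(j, len(phi)) + 1))
        xi.append(ar + th)
    return xi


# ARMA(1,1) with phi_1 = 0.5, theta_1 = 0.4: xi_0 = 1 and xi_j = 0.9 * 0.5**(j-1) for j >= 1
xi = arma_xi_weights([0.5], [0.4], 200)
print(xi[:4])
print(sum(xi), (1 + 0.4) / (1 - 0.5))   # xi(1) = theta(1)/phi(1) = 2.8
```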

The general solution of (24) and (27) is as follows.

(i) If all roots, $r_1, r_2, \ldots, r_p$, of the reversed companion polynomial are different, then

$$\psi_t \ (\text{or } \xi_t) = c_1 r_1^t + c_2 r_2^t + \cdots + c_p r_p^t$$

where the constants $c_1, c_2, \ldots, c_p$ are determined so that the initial conditions (25) (or (28)) are fulfilled.

(ii) If only $s$ roots, $r_1, r_2, \ldots, r_s$, are different, with multiplicities $m_1, m_2, \ldots, m_s$, respectively, the general solution is

$$\psi_t \ (\text{or } \xi_t) = p_1(t) r_1^t + p_2(t) r_2^t + \cdots + p_s(t) r_s^t$$

Here $p_i(t)$ is a polynomial in $t$ of degree $m_i - 1$,

$$p_i(t) = c_{i0} + c_{i1} t + c_{i2} t^2 + \cdots + c_{i(m_i - 1)} t^{m_i - 1}$$

and the coefficients are determined so that the initial conditions (25) (or (28)) are fulfilled.

(iii) The solution filter is causal stationary if and only if all roots of the reversed companion polynomial lie inside the unit circle.

Appendix. Crash course I in complex numbers – The unit circle.

We introduce the symbol $i = \sqrt{-1}$. It is interpreted as a “number” that satisfies $i^2 = -1$, and we call it “imaginary” or “complex”. Then the equation

$$4 + (x - 1)^2 = x^2 - 2x + 5 = 0 \tag{1}$$

has two (complex) roots (notice that the expression on the left is always larger than zero)¹. The roots $r_1, r_2$ are found in the usual way:

$$x = \frac{2 \pm \sqrt{4 - 4 \cdot 5}}{2} = \frac{2 \pm \sqrt{-16}}{2} = \frac{2 \pm \sqrt{16}\sqrt{-1}}{2} = 1 \pm 2i$$

The polynomial in (1) can then be factorized (as in the real case):

$$x^2 - 2x + 5 = (x - r_1)(x - r_2) = (x - 1 - 2i)(x - 1 + 2i)$$

This can be checked by multiplying out the right side (assuming that all common algebraic operations are valid):

$$(x - 1 - 2i)(x - 1 + 2i) = (x - 1)^2 + (x - 1)2i - 2i(x - 1) - 4i^2 = (x - 1)^2 - 4(-1) = (x - 1)^2 + 4$$
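Python's `cmath.sqrt` accepts a negative argument and returns a complex result, so the usual formula can be applied directly. The minimal sketch below reproduces the roots $1 \pm 2i$ and checks the factorization at a sample point. The function name is illustrative.

```python
import cmath


def quadratic_roots(a, b, c):
    """Roots of a x^2 + b x + c = 0 by the usual formula; cmath.sqrt returns a
    complex number, so a negative discriminant gives a complex-conjugate pair."""
    d = cmath.sqrt(b * b - 4 * a * c)
    return (-b - d) / (2 * a), (-b + d) / (2 * a)


r1, r2 = quadratic_roots(1, -2, 5)
print(r1, r2)                                  # (1-2j) (1+2j)

x = 3.0                                        # any test point
print(x**2 - 2 * x + 5, (x - r1) * (x - r2))   # 8.0 (8+0j)
```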

Properties

1) Every complex number can be written uniquely as $z = a + ib$, where $a$ and $b$ are real. Geometrically, $z$ can be interpreted as the point $(a, b)$ in the usual two-dimensional plane: $z = a + ib \leftrightarrow (a, b)$. Hence $i$ itself is represented by the point $(0, 1)$, which corresponds to the unit vector on the y-axis.

¹ This was the way complex numbers were originally introduced. People noticed that this made every polynomial of degree n have exactly n roots, which they considered a great advantage. It took some time, however, before anyone managed to prove that this trick could not lead to contradictions in mathematics.

2) Real numbers are now interpreted as special complex numbers, namely the points on the x-axis, where $b = 0$: $x = a + i \cdot 0 \leftrightarrow (a, 0)$.

3) It can be shown that all the usual algebra for real numbers also works for complex numbers (e.g., $\frac{1}{i} = \frac{i}{i \cdot i} = \frac{i}{-1} = -i \leftrightarrow (0, -1)$).

4) The absolute value of $z = a + bi$, also called the modulus (written $|z|$), is defined as the distance from the point $(a, b)$ to the origin: $|z| = \sqrt{a^2 + b^2}$ (from Pythagoras' theorem). The modulus of a real number $(a, 0)$ then becomes $\sqrt{a^2 + 0^2} = \sqrt{a^2} = |a|$, i.e., the usual absolute value of $a$.

5) The modulus satisfies $|z_1 z_2| = |z_1| |z_2|$.

Proof: Let $z_j = a_j + ib_j$. Then $z_1 z_2 = (a_1 + ib_1)(a_2 + ib_2) = (a_1 a_2 - b_1 b_2) + i(a_1 b_2 + a_2 b_1)$, from which

$$|z_1 z_2| = \sqrt{a_1^2 a_2^2 + b_1^2 b_2^2 - 2a_1 a_2 b_1 b_2 + a_1^2 b_2^2 + a_2^2 b_1^2 + 2a_1 b_2 a_2 b_1} = \sqrt{(a_1^2 + b_1^2)(a_2^2 + b_2^2)} = |z_1| |z_2|$$

In particular, $|z^2| = |zz| = |z||z| = |z|^2$, and, more generally, $|z^k| = |z|^k$.

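6) If $|z| < 1$, then $z^k \to 0$ as $k \to \infty$.

Proof: The distance from $z^k$ to the origin is $|z^k - 0| = |z|^k \to 0$, since $|z|$ is a positive real number $< 1$.

Example: Let $z = (1 + i)/2$. Then $|z| = \sqrt{1/4 + 1/4} = 1/\sqrt{2} < 1$. We find

$$\begin{aligned}
z^2 &= \frac{1}{4}(1 + i)^2 = \frac{1}{4}(1 + 2i + i^2) = \frac{1}{4} \cdot 2i = \frac{i}{2} \\
z^3 &= z^2 z = \frac{i}{2} \cdot \frac{1 + i}{2} = \frac{i + i^2}{4} = -\frac{1}{4} + \frac{1}{4} i \\
z^4 &= z^3 z = \left(-\frac{1}{4} + \frac{1}{4} i\right)\left(\frac{1}{2} + \frac{1}{2} i\right) = -\frac{1}{8} - \frac{1}{8} i + \frac{1}{8} i + \frac{1}{8} i^2 = -\frac{1}{4}
\end{aligned}$$

… etc.

(Locate these points in the plane. You will see that $z^k$ moves toward 0 along a spiral.)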
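The spiral can be traced numerically with Python's built-in complex type. The sketch below lists the first few powers of $z = (1+i)/2$ as points $(a, b)$ in the plane. It also prints their moduli, which by property 5 equal $(1/\sqrt{2})^k$.

```python
z = (1 + 1j) / 2
powers = [z**k for k in range(1, 9)]          # z, z^2, ..., z^8

for k, w in enumerate(powers, start=1):
    # point (a, b) in the plane, |z^k|, and |z|^k = (1/sqrt(2))^k for comparison
    print(k, (round(w.real, 4), round(w.imag, 4)), round(abs(w), 4), round(2 ** (-k / 2), 4))
```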

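7) Complex conjugates. If $z = a + ib$ is a complex number, we define the complex conjugate of $z$ (written $\bar{z}$) as $\bar{z} = a - ib$. We have

i. $\overline{z_1 + z_2} = \bar{z}_1 + \bar{z}_2$

[Proof. $z_j = a_j + ib_j \Rightarrow z_1 + z_2 = (a_1 + a_2) + i(b_1 + b_2) \Rightarrow \overline{z_1 + z_2} = (a_1 + a_2) - i(b_1 + b_2) = (a_1 - ib_1) + (a_2 - ib_2) = \bar{z}_1 + \bar{z}_2$.]

ii. $\overline{z_1 z_2} = \bar{z}_1 \bar{z}_2$, implying $\overline{z^k} = \bar{z}^k$

[Proof. $z_1 z_2 = (a_1 a_2 - b_1 b_2) + i(a_1 b_2 + a_2 b_1) \Rightarrow \overline{z_1 z_2} = (a_1 a_2 - b_1 b_2) - i(a_1 b_2 + a_2 b_1)$, and $\bar{z}_1 \bar{z}_2 = (a_1 - ib_1)(a_2 - ib_2) = (a_1 a_2 - b_1 b_2) - i(a_1 b_2 + a_2 b_1)$.]

iii. $z \bar{z} = |z|^2$ [$(a + ib)(a - ib) = a^2 + b^2$]

iv. $z$ real $\Rightarrow \bar{z} = z$ [$z = a + i0 \Rightarrow \bar{z} = a - i0 = a = z$]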

The unit circle is defined as the set of all complex numbers $z$ at distance 1 from the origin, i.e., those with $|z| = |a + bi| = \sqrt{a^2 + b^2} = 1$. In the plane, these points form a circle of radius 1 around the origin. $z$ lies inside the circle if $|z| < 1$, since its distance to the origin is then smaller than 1. $z$ lies outside the circle if $|z| > 1$.

The fundamental theorem of algebra says that any polynomial of degree $p$, $a_0 + a_1 z + a_2 z^2 + \cdots + a_p z^p$ (with $a_p \neq 0$), has exactly $p$ roots, $r_1, r_2, \ldots, r_p$. Some of them may be equal, but each is counted as many times as it occurs.

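An important consequence of 7) concerns polynomials $p(z) = a_0 + a_1 z + a_2 z^2 + \cdots + a_p z^p$ with real coefficients ($a_j$) only. If $r$ is a complex root of such a polynomial (i.e., $p(r) = 0$), then the conjugate $\bar{r}$ is also a root. This follows since

$$0 = \bar{0} = \overline{p(r)} = \overline{a_0 + a_1 r + a_2 r^2 + \cdots + a_p r^p} \overset{7)}{=} \bar{a}_0 + \bar{a}_1 \bar{r} + \bar{a}_2 \bar{r}^2 + \cdots + \bar{a}_p \bar{r}^p = a_0 + a_1 \bar{r} + a_2 \bar{r}^2 + \cdots + a_p \bar{r}^p = p(\bar{r})$$

Hence, whenever complex roots occur in real polynomials, they always come in pairs, $r$ and $\bar{r}$.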

Examples.

It follows from the fundamental theorem that the equation $x^k = 1$ always has $k$ solutions, for any $k$.

For example, $x^3 - 1$ is a third-degree polynomial and must have three roots. We will not take up polar coordinates here, although they are a very important tool. Using them, as described in Ragnar’s lecture notes on complex numbers on the web page for the 2011 course, the three exact roots are

$$r_1 = 1, \quad r_2 = \cos(2\pi/3) + i\sin(2\pi/3) = -\frac{1}{2} + \frac{\sqrt{3}}{2} i, \quad r_3 = \cos(4\pi/3) + i\sin(4\pi/3) = -\frac{1}{2} - \frac{\sqrt{3}}{2} i$$

(a slight mistake in Ragnar’s example)
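The three exact roots can be checked numerically. The sketch below generates the $k$ solutions of $x^k = 1$ as $\cos(2\pi m/k) + i\sin(2\pi m/k)$, $m = 0, \ldots, k-1$, taking the polar form mentioned above as given. For $k = 3$ it verifies that each root cubed gives 1 and that the two complex roots are conjugates of each other. The function name is illustrative.

```python
import cmath
import math


def roots_of_unity(k):
    """The k solutions of x**k = 1: cos(2 pi m / k) + i sin(2 pi m / k), m = 0..k-1."""
    return [cmath.exp(2j * math.pi * m / k) for m in range(k)]


r = roots_of_unity(3)
for root in r:
    print(root, root**3)   # root**3 equals 1 up to rounding error

print(abs(r[2] - r[1].conjugate()))   # about 0: r3 is the conjugate of r2
```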

Stata. In practice, it may be better to use a computer to find roots. For example, Mata in Stata is easy to use (the function polyroots() calculates the roots of polynomials):

The command mata brings you into Mata, which is shown by the colon prompt “ : ” instead of the usual dot “ . ”. To leave Mata, type the command end and press Enter. Mata identifies a polynomial by a vector of coefficients, with the lowest-degree coefficient first. For example, the polynomial $x^2 - 2x + 5$ is described by the vector (5, -2, 1), and the polynomial $x^3 - 1$ by (-1, 0, 0, 1).

The session below runs four checks. The first call reproduces the roots $1 \pm 2i$ found at the beginning of this appendix. The second gives the roots of $x^3 - 1$. `r[1]` picks out the first of these roots, and `r[1]^3` checks that it really is a root.

```stata
. mata
------------------------------------------------- mata (type end to exit) -----
: polyroots((5,-2,1))
            1        2
    +-------------------+
  1 |  1 - 2i   1 + 2i  |
    +-------------------+

: polyroots((-1,0,0,1))
                     1                   2                   3
    +-------------------------------------------------------------+
  1 |  -.5 - .866025404i   -.5 + .866025404i                   1  |
    +-------------------------------------------------------------+

: r=polyroots((-1,0,0,1))

: r
                     1                   2                   3
    +-------------------------------------------------------------+
  1 |  -.5 - .866025404i   -.5 + .866025404i                   1  |
    +-------------------------------------------------------------+

: r[1]
  -.5 - .866025404i

: r[1]^3
  1

: end
---------------------------------------------------------------------------
.
```
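For readers without Stata, a rough Python counterpart is sketched below. It is not Mata's algorithm. It uses the Durand–Kerner (Weierstrass) simultaneous iteration, chosen only because it fits in a few lines of standard Python. The function name `poly_roots` is hypothetical. It follows the same ordering convention as polyroots() above (lowest-degree coefficient first). It converges well for simple roots like the ones here; repeated roots are found much more slowly and less accurately.

```python
def poly_roots(coeffs, iters=500, tol=1e-14):
    """Approximate all roots of a_0 + a_1 x + ... + a_p x^p.

    coeffs = [a_0, a_1, ..., a_p] (lowest degree first). Uses the
    Durand-Kerner iteration on the monic version of the polynomial."""
    a = [complex(c) for c in coeffs]
    while len(a) > 1 and a[-1] == 0:
        a.pop()                                # drop zero leading coefficients
    p = len(a) - 1
    if p < 1:
        return []
    a = [c / a[-1] for c in a]                 # make the polynomial monic

    def f(x):
        s = 0j
        for c in reversed(a):                  # Horner's scheme
            s = s * x + c
        return s

    roots = [(0.4 + 0.9j) ** k for k in range(p)]   # customary distinct start values
    for _ in range(iters):
        new = []
        for i, ri in enumerate(roots):
            denom = 1 + 0j
            for j, rj in enumerate(roots):
                if j != i:
                    denom *= ri - rj
            new.append(ri - f(ri) / denom)
        change = max(abs(n - o) for n, o in zip(new, roots))
        roots = new
        if change < tol:
            break
    return roots


print(poly_roots([5, -2, 1]))        # approx. 1 - 2i and 1 + 2i (order may differ)
print(poly_roots([-1, 0, 0, 1]))     # the three cube roots of unity
print(poly_roots([-0.1, 0.3, 1]))    # roots 0.2 and -0.5 of h(x) in the AR(2) example
```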

(Further details on complex numbers can be found in Ragnar’s lecture notes on the web page for the 2011 course.)