
Journal of Mathematical Research with Applications

Jan., 2014, Vol. 34, No. 1, pp. 114–126

DOI:10.3770/j.issn:2095-2651.2014.01.012

Http://jmre.dlut.edu.cn

A Modified Gradient-Based Neuro-Fuzzy Learning Algorithm for Pi-Sigma Network Based on First-Order Takagi-Sugeno System

Yan LIU$^{1,2}$, Jie YANG$^{1}$, Dakun YANG$^{1}$, Wei WU$^{1,*}$

1. School of Mathematical Sciences, Dalian University of Technology, Liaoning 116024, P. R. China;

2. School of Information Science and Engineering, Dalian Polytechnic University,

Liaoning 116034, P. R. China

Abstract This paper presents a Pi-Sigma network to identify the first-order Takagi-Sugeno (T-S) fuzzy inference system and proposes a simplified gradient-based neuro-fuzzy learning algorithm. A comprehensive study of the weak and strong convergence of the learning method is made, which indicates that the sequence of error function values goes to a fixed value and the gradient of the error function goes to zero, respectively.

Keywords

first-order Takagi-Sugeno inference system; Pi-Sigma network; convergence.

MR(2010) Subject Classification 47S40; 93C42; 82C32

1. Introduction

The combination of neural networks and fuzzy set theory has shown special promise and has attracted growing attention in recent years. The Takagi-Sugeno (T-S) fuzzy model, proposed by Takagi and Sugeno [1], is characterized by a set of IF-THEN rules. The Pi-Sigma Network (PSN) [2] is a class of high-order feedforward networks and is known to provide inherently more powerful mapping abilities than traditional feedforward neural networks.

A hybrid Pi-Sigma network was introduced by Jin et al. [3], which is capable of dealing with nonlinear systems more efficiently. Combining the benefits of a high-order network and the Takagi-Sugeno inference system gives the Pi-Sigma network a simple structure, fewer training epochs and fast computational speed [4]. Despite numerous works dealing with the identification and stability analysis of T-S systems, fewer studies have been done concerning the convergence of the learning process.

In this paper, a comprehensive study on convergence results for the Pi-Sigma network based on the first-order T-S system is presented. In particular, the monotonicity of the error function in the learning iteration is proven. Both the weak and strong convergence results are obtained, indicating that the gradient of the error function goes to zero and the weight sequence goes to a fixed point, respectively.

Received July 19, 2012; Accepted November 25, 2012

Supported by the Fundamental Research Funds for the Central Universities, the National Natural Science Foundation of China (Grant No. 11171367) and the Youth Foundation of Dalian Polytechnic University (Grant No. QNJJ201308).

* Corresponding author

E-mail address: wuweiw@dlut.edu.cn (Wei WU)

A modified learning algorithm for Pi-Sigma network based on first-order Takagi-Sugeno system

The remainder of this paper is organized as follows. A brief introduction to the first-order Takagi-Sugeno system and the Pi-Sigma neural network is given in the next section. Section 3 describes our modified neuro-fuzzy learning algorithm based on the gradient method. The main convergence results are provided in Section 4. Section 5 presents a proof of the convergence theorem. Some brief conclusions are drawn in Section 6.

2. First-order Takagi-Sugeno inference system and high-order network

2.1. First-order Takagi-Sugeno inference system

A general fuzzy system, which is a T-S model, is comprised of a set of IF-THEN fuzzy rules having the following form:

$$R_i:\ \text{If } x_1 \text{ is } A_{1i} \text{ and } x_2 \text{ is } A_{2i} \text{ and } \ldots \text{ and } x_m \text{ is } A_{mi} \text{ then } y_i = f_i(\cdot), \tag{1}$$

where $R_i$ ($i = 1, 2, \ldots, n$) denotes the i-th implication, $n$ is the number of fuzzy implications of the fuzzy model, $x_1, \ldots, x_m$ are the premise variables, $f_i(\cdot)$ is the consequence of the i-th implication, which is a nonlinear or linear function of the premises, and $A_{li}$ is the fuzzy subset whose membership function is a continuous piecewise-polynomial function.

In the first-order Takagi-Sugeno system (T-S1), the output function $f_i(\cdot)$ is a first-order polynomial of the input variables $x_1, \ldots, x_m$, and the corresponding output $y_i$ is determined by [5, 6]

$$y_i = p_{0i} + p_{1i} x_1 + \cdots + p_{mi} x_m. \tag{2}$$

Given an input $\mathbf{x} = (x_1, x_2, \ldots, x_m)$, the final output of the fuzzy model is expressed by

$$y = \sum_{i=1}^{n} h_i y_i, \tag{3}$$

where $h_i$ is the overall truth value of the premises of the i-th implication, calculated as

$$h_i = A_{1i}(x_1) A_{2i}(x_2) \cdots A_{mi}(x_m) = \prod_{l=1}^{m} A_{li}(x_l). \tag{4}$$

We mention that there is another form of (3), that is [7],

$$y = \Big( \sum_{i=1}^{n} h_i y_i \Big) \Big/ \Big( \sum_{i=1}^{n} h_i \Big). \tag{5}$$

For simplicity of learning, a common strategy is to obtain the fuzzy consequence without computing the center of gravity [8]. Therefore, we adopt the form of (3) throughout our discussions.
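The difference between the two output forms (3) and (5) can be illustrated with a minimal Python sketch. The triangular membership function, the helper names `triangular` and `ts1_output`, and the `normalize` flag are illustrative assumptions and do not come from the paper; any continuous piecewise-polynomial membership function could be substituted.

```python
def triangular(center, half_width):
    """Hypothetical piecewise-linear membership function (illustrative choice only)."""
    def mu(x):
        return max(0.0, 1.0 - abs(x - center) / half_width)
    return mu

def ts1_output(x, memberships, coeffs, normalize=False):
    """First-order T-S output for one input x = [x_1, ..., x_m].

    memberships[i][l] is the membership function A_li of rule i and input l.
    coeffs[i] = [p_0i, p_1i, ..., p_mi] are the consequent coefficients of rule i.
    """
    num = 0.0
    den = 0.0
    for A_i, p_i in zip(memberships, coeffs):
        # Rule strength h_i: product of the memberships, eq. (4).
        h_i = 1.0
        for A_li, x_l in zip(A_i, x):
            h_i *= A_li(x_l)
        # Rule consequent y_i: first-order polynomial, eq. (2).
        y_i = p_i[0] + sum(p * x_l for p, x_l in zip(p_i[1:], x))
        num += h_i * y_i
        den += h_i
    # Eq. (3) by default; eq. (5) additionally divides by the sum of rule strengths.
    return num / den if normalize else num
```

With `normalize=True` the sketch fails with a division by zero when no rule fires, which is one practical reason the unnormalized form (3) is simpler to work with.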

2.2. Pi-Sigma neural network

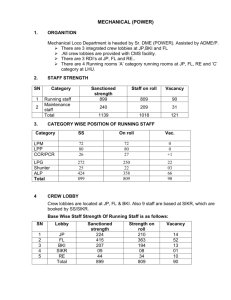

The conventional feed-forward neural network has only summing nodes, which makes it difficult to identify some complex problems. A hybrid Pi-Sigma neural network is shown in Figure 1. In this


Yan LIU, Jie YANG, Dakun YANG, et al

Figure 1 Topological structure of the first-order Takagi-Sugeno inference system

high-order network, Σ denotes the summing neurons and Π denotes the product neurons. The output of the Pi-Sigma neural network is

$$y = \sum_{i=1}^{n} h_i y_i = \sum_{i=1}^{n} \Big( \prod_{l=1}^{m} \mu_{A_{li}}(x_l) \Big) (p_{0i} + p_{1i} x_1 + \cdots + p_{mi} x_m) = \mathbf{h}\mathbf{P}\mathbf{x}, \tag{6}$$

where “·” denotes the usual inner product, $\mathbf{p}_i = (p_{0i}, p_{1i}, \ldots, p_{mi})^T$, $i = 1, 2, \ldots, n$, $\mathbf{h} = (h_1, h_2, \ldots, h_n)$, $\mathbf{P} = (\mathbf{p}_1^T, \mathbf{p}_2^T, \ldots, \mathbf{p}_n^T)^T$, $\mathbf{x} = (1, x_1, x_2, \ldots, x_m)^T$. From (6), it can be seen that this Pi-Sigma network is one form of T-S type fuzzy system. For a fuzzy system implemented by this type of network, the degree of membership function and parameters can be updated indirectly. For more efficient identification of nonlinear systems, the Gaussian membership function is commonly used for the fuzzy judgment “$x_l$ is $A_{li}$”, which is defined by

$$A_{li}(x_l) = \exp\big( -(x_l - a_{li})^2 / \sigma_{li}^2 \big) = \exp\big( -(x_l - a_{li})^2 b_{li}^2 \big), \tag{7}$$

where $a_{li}$ is the center of $A_{li}(x_l)$, $\sigma_{li}$ is the width of $A_{li}(x_l)$, and $b_{li}$ is the reciprocal of $\sigma_{li}$, $i = 1, 2, \ldots, n$, $l = 1, 2, \ldots, m$. What we need is to preset some initial weights, which will be updated to their optimal values when some learning algorithm is implemented.
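The forward pass described by (6) and (7) can be sketched in a few lines of Python. This is only an illustration under the stated notation: the function names `membership`, `rule_strengths` and `pi_sigma_output` and the nested-list layout `a[i][l]`, `b[i][l]`, `p[i][k]` are assumptions made here, not part of the paper.

```python
import math

def membership(x_l, a_li, b_li):
    """Gaussian membership (7), written with b_li = 1 / sigma_li."""
    return math.exp(-((x_l - a_li) ** 2) * b_li ** 2)

def rule_strengths(x, a, b):
    """Rule strengths h_i = prod_l A_li(x_l) for centers a[i][l] and inverse widths b[i][l]."""
    return [
        math.prod(membership(x_l, a_li, b_li) for x_l, a_li, b_li in zip(x, a_i, b_i))
        for a_i, b_i in zip(a, b)
    ]

def pi_sigma_output(x, p, a, b):
    """Network output y = h P x of (6), where x is augmented to (1, x_1, ..., x_m)."""
    x_aug = [1.0] + list(x)
    h = rule_strengths(x, a, b)
    return sum(
        h_i * sum(p_k * x_k for p_k, x_k in zip(p_i, x_aug))
        for h_i, p_i in zip(h, p)
    )
```

Working with $b_{li}$ rather than $\sigma_{li}$ keeps the exponent polynomial in the trainable parameters, so no division appears when the exponent is differentiated.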

3. Modified gradient-based neuro-fuzzy learning algorithm

Let us introduce an operator “⊙” for the description of the learning method.

Definition 3.1 ([8]) Let $\mathbf{u} = (u_1, u_2, \ldots, u_n)^T \in \mathbb{R}^n$, $\mathbf{v} = (v_1, v_2, \ldots, v_n)^T \in \mathbb{R}^n$. Define the operator “⊙” by

$$\mathbf{u} \odot \mathbf{v} = (u_1 v_1, u_2 v_2, \ldots, u_n v_n)^T \in \mathbb{R}^n.$$

It is easy to verify the following properties of the operator “⊙”:

1) $\|\mathbf{u} \odot \mathbf{v}\| \le \|\mathbf{u}\| \|\mathbf{v}\|$,

2) $(\mathbf{u} \odot \mathbf{v}) \cdot (\mathbf{x} \odot \mathbf{y}) = (\mathbf{u} \odot \mathbf{v} \odot \mathbf{x}) \cdot \mathbf{y}$,

3) $(\mathbf{u} + \mathbf{v}) \odot \mathbf{x} = \mathbf{u} \odot \mathbf{x} + \mathbf{v} \odot \mathbf{x}$,

where $\mathbf{u}, \mathbf{v}, \mathbf{x}, \mathbf{y} \in \mathbb{R}^n$, and “·” and “‖ ‖” represent the usual inner product and Euclidean norm, respectively.
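The three properties of the componentwise product can be checked numerically on sample vectors. The sketch below is illustrative only; the helper names `had`, `dot` and `norm` are chosen here and are not notation from the paper.

```python
import math

def had(u, v):
    """Componentwise product of Definition 3.1."""
    return [ui * vi for ui, vi in zip(u, v)]

def dot(u, v):
    """Usual inner product."""
    return sum(ui * vi for ui, vi in zip(u, v))

def norm(u):
    """Euclidean norm."""
    return math.sqrt(dot(u, u))

u, v = [1.0, -2.0, 3.0], [4.0, 0.0, -1.0]
x, y = [2.0, 5.0, -3.0], [-1.0, 1.0, 2.0]

# Property 1: the norm of the componentwise product is bounded by the product of norms.
prop1 = norm(had(u, v)) <= norm(u) * norm(v)
# Property 2: a factor can be moved across the inner product.
prop2 = dot(had(u, v), had(x, y)) == dot(had(had(u, v), x), y)
# Property 3: distributivity over vector addition.
u_plus_v = [ui + vi for ui, vi in zip(u, v)]
prop3 = had(u_plus_v, x) == [s + t for s, t in zip(had(u, x), had(v, x))]
```

The exact equality tests are safe here only because the sample entries are small integers stored as floats; for general floating-point data a tolerance would be needed.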

Suppose that the training sample set is $\{\mathbf{x}^j, O^j\}_{j=1}^{J} \subset \mathbb{R}^m \times \mathbb{R}$ for the Pi-Sigma network, where $\mathbf{x}^j$ and $O^j$ are the input and the corresponding ideal output of the j-th sample, respectively. The fuzzy rules are provided by (1). We denote the weight vector connecting the input layer and the Σ layer by $\mathbf{p}_i = (p_{0i}, p_{1i}, \ldots, p_{mi})^T$ ($i = 1, 2, \ldots, n$) (see Figure 1). Similarly, we denote the centers and the reciprocals of the widths of the corresponding Gaussian membership functions by

$$\mathbf{a}_i = (a_{1i}, a_{2i}, \ldots, a_{mi})^T, \quad \mathbf{b}_i = (b_{1i}, b_{2i}, \ldots, b_{mi})^T = \Big( \frac{1}{\sigma_{1i}}, \frac{1}{\sigma_{2i}}, \ldots, \frac{1}{\sigma_{mi}} \Big)^T, \quad 1 \le i \le n, \tag{8}$$

respectively, and take them as the weight vectors connecting the input layer and the membership layer. For simplicity, all parameters are incorporated into a weight vector

$$\mathbf{W} = \big( \mathbf{p}_1^T, \mathbf{p}_2^T, \ldots, \mathbf{p}_n^T, \mathbf{a}_1^T, \ldots, \mathbf{a}_n^T, \mathbf{b}_1^T, \ldots, \mathbf{b}_n^T \big)^T. \tag{9}$$

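The error function is defined as

$$E(\mathbf{W}) = \frac{1}{2} \sum_{j=1}^{J} (y^j - O^j)^2 = \sum_{j=1}^{J} g_j\Big( \sum_{i=1}^{n} h_i^j (\mathbf{p}_i \cdot \mathbf{x}^j) \Big) = \sum_{j=1}^{J} g_j(\mathbf{h}^j \mathbf{P} \mathbf{x}^j), \tag{10}$$

where $O^j$ is the desired output for the j-th training pattern $\mathbf{x}^j$, $y^j$ is the corresponding fuzzy reasoning result, $J$ is the number of training patterns, and

$$\mathbf{h}^j = (h_1^j, h_2^j, \ldots, h_n^j)^T = \mathbf{h}(\mathbf{x}^j), \quad g_j(t) = \frac{1}{2} (t - O^j)^2, \quad t \in \mathbb{R},\ 1 \le j \le J. \tag{11}$$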

The purpose of the network learning is to find $\mathbf{W}^*$ such that

$$E(\mathbf{W}^*) = \min E(\mathbf{W}). \tag{12}$$

The gradient descent method is often used to solve this optimization problem.

Remark 3.1 ([8]) Because the simplified output form (3) is adopted instead of (5), the differentiation with respect to the denominator is avoided, and the cost of calculating the gradient of the error function is reduced.

Let us describe our modified gradient-based neuro-fuzzy learning algorithm. Noting (8) is valid, then we have

$$h_q^j = \prod_{l=1}^{m} A_{lq}(x_l^j) = \prod_{l=1}^{m} \exp\big( -(x_l^j - a_{lq})^2 b_{lq}^2 \big) = \exp\Big( \sum_{l=1}^{m} \big( -(x_l^j - a_{lq})^2 b_{lq}^2 \big) \Big). \tag{13}$$

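The gradient of the error function $E(\mathbf{W})$ with respect to $\mathbf{p}_i$ is given by

$$\frac{\partial E(\mathbf{W})}{\partial \mathbf{p}_i} = \sum_{j=1}^{J} g_j'(\mathbf{h}^j \mathbf{P} \mathbf{x}^j)\, h_i^j\, \mathbf{x}^j. \tag{14}$$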


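To compute the partial gradient $\frac{\partial E(\mathbf{W})}{\partial \mathbf{a}_i}$, we note, $\forall\, 1 \le i \le n$, $1 \le q \le n$,

$$\frac{\partial h_q^j}{\partial \mathbf{a}_i} = \frac{\partial \exp\Big( \sum_{l=1}^{m} \big( -(x_l^j - a_{lq})^2 b_{lq}^2 \big) \Big)}{\partial \mathbf{a}_i} = \begin{cases} \dfrac{\partial \exp\Big( \sum_{l=1}^{m} \big( -(x_l^j - a_{li})^2 b_{li}^2 \big) \Big)}{\partial \mathbf{a}_i}, & q = i, \\[2ex] 0, & q \ne i, \end{cases} \tag{15}$$

and

$$\frac{\partial \exp\Big( \sum_{l=1}^{m} \big( -(x_l^j - a_{li})^2 b_{li}^2 \big) \Big)}{\partial \mathbf{a}_i} = \big( 2 h_i^j (x_1^j - a_{1i}) b_{1i}^2, \cdots, 2 h_i^j (x_m^j - a_{mi}) b_{mi}^2 \big)^T = 2 h_i^j \big( (\mathbf{x}^j - \mathbf{a}_i) \odot \mathbf{b}_i \odot \mathbf{b}_i \big). \tag{16}$$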
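Componentwise, the right-hand side of (16) is the chain rule applied to the exponential form (13). Written out for a single entry, and for the analogous derivative with respect to $b_{li}$ (a short derivation under the notation above, added here for readability):

$$\frac{\partial h_i^j}{\partial a_{li}} = h_i^j \, \frac{\partial}{\partial a_{li}} \big( -(x_l^j - a_{li})^2 b_{li}^2 \big) = 2 h_i^j (x_l^j - a_{li}) b_{li}^2, \qquad \frac{\partial h_i^j}{\partial b_{li}} = h_i^j \, \frac{\partial}{\partial b_{li}} \big( -(x_l^j - a_{li})^2 b_{li}^2 \big) = -2 h_i^j (x_l^j - a_{li})^2 b_{li}.$$

Only the $l$-th term of the sum in the exponent depends on $a_{li}$ or $b_{li}$, which is why no cross terms appear.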

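It follows from (10), (15), (16) that, for $1 \le i \le n$, the partial gradient of the error function $E(\mathbf{W})$ with respect to $\mathbf{a}_i$ is

$$\frac{\partial E(\mathbf{W})}{\partial \mathbf{a}_i} = \sum_{j=1}^{J} g_j'(\mathbf{h}^j \mathbf{P} \mathbf{x}^j) \Big( \sum_{q=1}^{n} (\mathbf{p}_q \cdot \mathbf{x}^j) \frac{\partial h_q^j}{\partial \mathbf{a}_i} \Big) = 2 \sum_{j=1}^{J} g_j'(\mathbf{h}^j \mathbf{P} \mathbf{x}^j) (\mathbf{p}_i \cdot \mathbf{x}^j)\, h_i^j \big( (\mathbf{x}^j - \mathbf{a}_i) \odot \mathbf{b}_i \odot \mathbf{b}_i \big). \tag{17}$$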

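Similarly, for $1 \le i \le n$, the partial gradient of the error function $E(\mathbf{W})$ with respect to $\mathbf{b}_i$ is

$$\frac{\partial E(\mathbf{W})}{\partial \mathbf{b}_i} = \sum_{j=1}^{J} g_j'(\mathbf{h}^j \mathbf{P} \mathbf{x}^j) \Big( \sum_{q=1}^{n} (\mathbf{p}_q \cdot \mathbf{x}^j) \frac{\partial h_q^j}{\partial \mathbf{b}_i} \Big) = -2 \sum_{j=1}^{J} g_j'(\mathbf{h}^j \mathbf{P} \mathbf{x}^j) (\mathbf{p}_i \cdot \mathbf{x}^j)\, h_i^j \big( (\mathbf{x}^j - \mathbf{a}_i) \odot (\mathbf{x}^j - \mathbf{a}_i) \odot \mathbf{b}_i \big). \tag{18}$$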

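Combining (14), (17) and (18), the gradient of the error function $E(\mathbf{W})$ with respect to $\mathbf{W}$ is constructed as follows:

$$\frac{\partial E(\mathbf{W})}{\partial \mathbf{W}} = \Big( \Big( \frac{\partial E(\mathbf{W})}{\partial \mathbf{p}_1} \Big)^T, \ldots, \Big( \frac{\partial E(\mathbf{W})}{\partial \mathbf{p}_n} \Big)^T, \Big( \frac{\partial E(\mathbf{W})}{\partial \mathbf{a}_1} \Big)^T, \ldots, \Big( \frac{\partial E(\mathbf{W})}{\partial \mathbf{a}_n} \Big)^T, \Big( \frac{\partial E(\mathbf{W})}{\partial \mathbf{b}_1} \Big)^T, \ldots, \Big( \frac{\partial E(\mathbf{W})}{\partial \mathbf{b}_n} \Big)^T \Big)^T. \tag{19}$$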

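Preset an arbitrary initial value $\mathbf{W}^0$; the weights are then updated in the following fashion by the modified neuro-fuzzy learning algorithm:

$$\mathbf{W}^{k+1} = \mathbf{W}^k + \Delta \mathbf{W}^k, \quad k = 0, 1, 2, \ldots, \tag{20}$$

where

$$\Delta \mathbf{W}^k = \big( (\Delta \mathbf{p}_1^k)^T, \ldots, (\Delta \mathbf{p}_n^k)^T, (\Delta \mathbf{a}_1^k)^T, \ldots, (\Delta \mathbf{a}_n^k)^T, (\Delta \mathbf{b}_1^k)^T, \ldots, (\Delta \mathbf{b}_n^k)^T \big)^T,$$

and

$$\Delta \mathbf{p}_i^k = -\eta \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{p}_i}, \quad \Delta \mathbf{a}_i^k = -\eta \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{a}_i}, \quad \Delta \mathbf{b}_i^k = -\eta \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{b}_i}, \quad 1 \le i \le n, \tag{21}$$

where $\eta > 0$ is a constant learning rate. Equivalently, (20) can be written as

$$\mathbf{W}^{k+1} = \mathbf{W}^k - \eta \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{W}}, \quad k = 0, 1, 2, \ldots. \tag{22}$$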
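The batch update (20)–(22) with the gradients (14), (17) and (18) can be sketched in plain Python as follows. This is a minimal illustration, not the authors' implementation: the function names, the nested-list parameter layout `p[i][k]`, `a[i][l]`, `b[i][l]`, and the in-place update are all assumptions made for this example.

```python
import math

def forward(x, p, a, b):
    """One sample: output y, rule strengths h_i, consequents s_i = p_i . (1, x)."""
    x_aug = [1.0] + list(x)
    h = [math.exp(-sum(((xl - al) * bl) ** 2 for xl, al, bl in zip(x, a_i, b_i)))
         for a_i, b_i in zip(a, b)]
    s = [sum(pk * xk for pk, xk in zip(p_i, x_aug)) for p_i in p]
    return sum(hi * si for hi, si in zip(h, s)), h, s

def error(X, O, p, a, b):
    """Error function (10)."""
    return 0.5 * sum((forward(x, p, a, b)[0] - o) ** 2 for x, o in zip(X, O))

def gradients(X, O, p, a, b):
    """Batch gradients (14), (17), (18) with respect to p_i, a_i, b_i."""
    n, m = len(p), len(X[0])
    gp = [[0.0] * (m + 1) for _ in range(n)]
    ga = [[0.0] * m for _ in range(n)]
    gb = [[0.0] * m for _ in range(n)]
    for x, o in zip(X, O):
        y, h, s = forward(x, p, a, b)
        r = y - o  # g_j'(t) = t - O^j
        x_aug = [1.0] + list(x)
        for i in range(n):
            for k in range(m + 1):
                gp[i][k] += r * h[i] * x_aug[k]
            for l in range(m):
                d = x[l] - a[i][l]
                ga[i][l] += 2.0 * r * s[i] * h[i] * d * b[i][l] ** 2
                gb[i][l] -= 2.0 * r * s[i] * h[i] * d * d * b[i][l]
    return gp, ga, gb

def train(X, O, p, a, b, eta, steps):
    """Iterate (22), updating p, a, b in place; returns the error after every step."""
    errors = [error(X, O, p, a, b)]
    for _ in range(steps):
        grads = gradients(X, O, p, a, b)  # all gradients are taken at W^k
        for weights, grad in zip((p, a, b), grads):
            for row, grow in zip(weights, grad):
                for k in range(len(row)):
                    row[k] -= eta * grow[k]
        errors.append(error(X, O, p, a, b))
    return errors
```

For a sufficiently small `eta`, the list returned by `train` should be non-increasing, which is the behaviour that Statement (i) of the convergence theorem describes. Comparing `gradients` against central finite differences of `error` is a simple way to check the signs in (17) and (18).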


4. Convergence theorem

To analyze the convergence of the algorithm, we need the following assumption:

(A) There exists a constant $C_0 > 0$ such that $\|\mathbf{p}_i^k\| \le C_0$, $\|\mathbf{a}_i^k\| \le C_0$, $\|\mathbf{b}_i^k\| \le C_0$ for all $i = 1, 2, \ldots, n$, $k = 1, 2, \ldots$

Let us specify some constants to be used in our convergence analysis as follows:

$$
\begin{aligned}
M &= \max_{1 \le j \le J} \{ \|\mathbf{x}^j\|, \|O^j\| \}, \\
C_1 &= \max\{ C_0 + M,\ (C_0 + M) C_0 \}, \\
C_2 &= 2 J C_0 C_1 (n C_0 C_1 + C_1) \max\{ C_0^2, C_1^2 \} + 2 C_0^2 C_1^2 (n C_0 C_1 + C_1) + 2 J C_0 C_1^2 (C_0 + C_1)(n C_0 C_1 + C_1), \\
C_3 &= J (n C_0 C_1 + C_1) \max\Big\{ \frac{1}{2},\ 4 C_0^2 C_1^2,\ 4 C_1^4 \Big\}, \\
C_4 &= 4 n J C_1^2 \max\{ C_0^2 C_1^2,\ C_1^2,\ 1 \}, \\
C_5 &= C_2 + C_3 + C_4,
\end{aligned}
$$

where $\mathbf{x}^j$ is the j-th given training pattern, $O^j$ is the corresponding desired output, and $n$ and $J$ are the numbers of the fuzzy rules and the training patterns, respectively.

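Theorem Suppose that Assumption (A) is valid, the error function $E(\mathbf{W})$ is defined by (10), and the learning rate $\eta$ satisfies $0 < \eta < \frac{1}{C_5}$. Then, starting from an arbitrary initial value $\mathbf{W}^0$, the learning sequence $\{\mathbf{W}^k\}$ generated by (22) and (19) satisfies:

(i) $E(\mathbf{W}^{k+1}) \le E(\mathbf{W}^k)$, $k = 0, 1, 2, \ldots$; there exists $E^* \ge 0$ such that $\lim_{k \to \infty} E(\mathbf{W}^k) = E^*$;

(ii) $\lim_{k \to \infty} E_{\mathbf{W}}(\mathbf{W}^k) = 0$, where $E_{\mathbf{W}}$ denotes the gradient of $E$ with respect to $\mathbf{W}$.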
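To get a feeling for how restrictive the condition on the learning rate is, the constants of this section can be evaluated numerically. The sketch below is only an illustration: the function name `learning_rate_bound` is hypothetical, and the first maximum in $C_2$ is read as $\max\{C_0^2, C_1^2\}$, which is an assumption based on the constant $C_{21}$ that appears in the proof.

```python
def learning_rate_bound(C0, M, n, J):
    """Return (C5, 1/C5) for the constants listed in Section 4.

    C0: bound from Assumption (A); M: bound on the training data;
    n: number of fuzzy rules; J: number of training patterns.
    """
    C1 = max(C0 + M, (C0 + M) * C0)
    K = n * C0 * C1 + C1  # recurring factor (n C0 C1 + C1)
    # Assumption: the first maximum in C2 is max{C0^2, C1^2}, as in C21 of the proof.
    C2 = (2 * J * C0 * C1 * K * max(C0 ** 2, C1 ** 2)
          + 2 * C0 ** 2 * C1 ** 2 * K
          + 2 * J * C0 * C1 ** 2 * (C0 + C1) * K)
    C3 = J * K * max(0.5, 4 * C0 ** 2 * C1 ** 2, 4 * C1 ** 4)
    C4 = 4 * n * J * C1 ** 2 * max(C0 ** 2 * C1 ** 2, C1 ** 2, 1.0)
    C5 = C2 + C3 + C4
    return C5, 1.0 / C5
```

Because $C_5$ grows with $n$, $J$ and high powers of $C_0$ and $C_1$, the resulting bound is a sufficient condition that is typically far more conservative than the step sizes that work in practice.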

5. Proof of the convergence theorem

The proof is divided into two parts dealing with Statements (i) and (ii), respectively.

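Proof of Statement (i) For any $1 \le j \le J$, $1 \le i \le n$ and $k = 0, 1, 2, \ldots$, we define the following notations for convenience:

$$\Phi_0^{k,j} = \mathbf{h}^{k,j} \mathbf{P}^k \mathbf{x}^j, \quad \Psi^{k,j} = \mathbf{h}^{k+1,j} - \mathbf{h}^{k,j}, \quad \xi_i^{k,j} = \mathbf{x}^j - \mathbf{a}_i^k, \quad \Phi_i^{k,j} = \xi_i^{k,j} \odot \mathbf{b}_i^k. \tag{23}$$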

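Noticing that the error function (10) is valid, and applying the Taylor mean value theorem with Lagrange remainder, we have

$$
\begin{aligned}
E(\mathbf{W}^{k+1}) - E(\mathbf{W}^k)
&= \sum_{j=1}^{J} \big( g_j(\Phi_0^{k+1,j}) - g_j(\Phi_0^{k,j}) \big) \\
&= \sum_{j=1}^{J} \Big[ g_j'(\Phi_0^{k,j}) \big( \mathbf{h}^{k+1,j} \mathbf{P}^{k+1} \mathbf{x}^j - \mathbf{h}^{k,j} \mathbf{P}^k \mathbf{x}^j \big) + \frac{1}{2} g_j''(s_{k,j}) (\Phi_0^{k+1,j} - \Phi_0^{k,j})^2 \Big] \\
&= \sum_{j=1}^{J} \Big[ g_j'(\Phi_0^{k,j}) \big( \mathbf{h}^{k,j} \Delta \mathbf{P}^k \mathbf{x}^j + \Psi^{k,j} \mathbf{P}^k \mathbf{x}^j + \Psi^{k,j} \Delta \mathbf{P}^k \mathbf{x}^j \big) \Big] + \frac{1}{2} \sum_{j=1}^{J} g_j''(s_{k,j}) (\Phi_0^{k+1,j} - \Phi_0^{k,j})^2,
\end{aligned}
$$

where $s_{k,j} \in \mathbb{R}$ is a constant between $\Phi_0^{k,j}$ and $\Phi_0^{k+1,j}$.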

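Employing (14), we have

$$
\begin{aligned}
\sum_{j=1}^{J} g_j'(\Phi_0^{k,j}) (\mathbf{h}^{k,j} \Delta \mathbf{P}^k \mathbf{x}^j)
&= \sum_{j=1}^{J} g_j'(\Phi_0^{k,j}) \sum_{i=1}^{n} \big( h_i^{k,j} (\Delta \mathbf{p}_i^k)^T \mathbf{x}^j \big) \\
&= \sum_{i=1}^{n} (\Delta \mathbf{p}_i^k)^T \sum_{j=1}^{J} g_j'(\Phi_0^{k,j})\, h_i^{k,j}\, \mathbf{x}^j \\
&= \sum_{i=1}^{n} \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{p}_i} \cdot \Big( -\eta \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{p}_i} \Big) \\
&= -\eta \sum_{i=1}^{n} \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{p}_i} \Big\|^2.
\end{aligned}
$$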

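Using the Taylor expansion, and noticing $h_i^{t,j} = \exp(-\Phi_i^{t,j} \cdot \Phi_i^{t,j})$, partly similar to the proof of Lemma 2 in [16], we have

$$
\begin{aligned}
\Psi^{k,j} \mathbf{P}^k \mathbf{x}^j
&= \sum_{i=1}^{n} (h_i^{k+1,j} - h_i^{k,j}) (\mathbf{p}_i^k \cdot \mathbf{x}^j) \\
&= \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j) \Big( \exp(-\Phi_i^{k+1,j} \cdot \Phi_i^{k+1,j}) - \exp(-\Phi_i^{k,j} \cdot \Phi_i^{k,j}) \Big) \\
&= \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} \big( -(\Phi_i^{k+1,j} \cdot \Phi_i^{k+1,j} - \Phi_i^{k,j} \cdot \Phi_i^{k,j}) \big) + \frac{1}{2} \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j) \exp(\tilde{t}_i^{k,j}) (\Phi_i^{k+1,j} \cdot \Phi_i^{k+1,j} - \Phi_i^{k,j} \cdot \Phi_i^{k,j})^2,
\end{aligned}
$$

where $\tilde{t}_i^{k,j}$ lies between $-\Phi_i^{k+1,j} \cdot \Phi_i^{k+1,j}$ and $-\Phi_i^{k,j} \cdot \Phi_i^{k,j}$.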

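Employing property 2) of the operator “⊙” in Definition 3.1, we deduce

$$
\begin{aligned}
&\sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} \big( -(\Phi_i^{k+1,j} \cdot \Phi_i^{k+1,j} - \Phi_i^{k,j} \cdot \Phi_i^{k,j}) \big) \\
&= \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} \Big[ -\Big( 2 \Phi_i^{k,j} \cdot (\Phi_i^{k+1,j} - \Phi_i^{k,j}) + (\Phi_i^{k+1,j} - \Phi_i^{k,j}) \cdot (\Phi_i^{k+1,j} - \Phi_i^{k,j}) \Big) \Big] \\
&= -2 \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} \big( \Phi_i^{k,j} \cdot (\Phi_i^{k+1,j} - \Phi_i^{k,j}) \big) - \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} \|\Phi_i^{k+1,j} - \Phi_i^{k,j}\|^2 \\
&= -2 \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} \Big( \big( \xi_i^{k,j} \odot \mathbf{b}_i^k \big) \cdot \big( (-\Delta \mathbf{a}_i^k) \odot \mathbf{b}_i^{k+1} + \xi_i^{k,j} \odot \Delta \mathbf{b}_i^k \big) \Big) - \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} \|\Phi_i^{k+1,j} - \Phi_i^{k,j}\|^2 \\
&= 2 \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} \big( \xi_i^{k,j} \odot \mathbf{b}_i^k \big) \cdot \big( \Delta \mathbf{a}_i^k \odot \mathbf{b}_i^{k+1} \big) - 2 \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} (\xi_i^{k,j} \odot \mathbf{b}_i^k) \cdot (\xi_i^{k,j} \odot \Delta \mathbf{b}_i^k) - \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} \|\Phi_i^{k+1,j} - \Phi_i^{k,j}\|^2 \\
&= 2 \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} (\xi_i^{k,j} \odot \mathbf{b}_i^k \odot \mathbf{b}_i^k) \cdot \Delta \mathbf{a}_i^k + 2 \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} (\xi_i^{k,j} \odot \mathbf{b}_i^k \odot \Delta \mathbf{b}_i^k) \cdot \Delta \mathbf{a}_i^k \\
&\quad - 2 \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} (\xi_i^{k,j} \odot \xi_i^{k,j} \odot \mathbf{b}_i^k) \cdot \Delta \mathbf{b}_i^k - \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} \|\Phi_i^{k+1,j} - \Phi_i^{k,j}\|^2.
\end{aligned} \tag{24}
$$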

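So we have

$$
\begin{aligned}
&\sum_{j=1}^{J} g_j'(\Phi_0^{k,j}) \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} \big( -(\Phi_i^{k+1,j} \cdot \Phi_i^{k+1,j} - \Phi_i^{k,j} \cdot \Phi_i^{k,j}) \big) \\
&= 2 \sum_{j=1}^{J} g_j'(\Phi_0^{k,j}) \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} (\xi_i^{k,j} \odot \mathbf{b}_i^k \odot \mathbf{b}_i^k) \cdot \Delta \mathbf{a}_i^k + 2 \sum_{j=1}^{J} g_j'(\Phi_0^{k,j}) \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} (\xi_i^{k,j} \odot \mathbf{b}_i^k \odot \Delta \mathbf{b}_i^k) \cdot \Delta \mathbf{a}_i^k \\
&\quad - 2 \sum_{j=1}^{J} g_j'(\Phi_0^{k,j}) \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} (\xi_i^{k,j} \odot \xi_i^{k,j} \odot \mathbf{b}_i^k) \cdot \Delta \mathbf{b}_i^k - \sum_{j=1}^{J} g_j'(\Phi_0^{k,j}) \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} \|\Phi_i^{k+1,j} - \Phi_i^{k,j}\|^2 \\
&= \sum_{i=1}^{n} \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{a}_i} \cdot \Delta \mathbf{a}_i^k + 2 \sum_{j=1}^{J} g_j'(\Phi_0^{k,j}) \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} (\xi_i^{k,j} \odot \mathbf{b}_i^k \odot \Delta \mathbf{b}_i^k) \cdot \Delta \mathbf{a}_i^k \\
&\quad + \sum_{i=1}^{n} \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{b}_i} \cdot \Delta \mathbf{b}_i^k - \sum_{j=1}^{J} g_j'(\Phi_0^{k,j}) \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} \|\Phi_i^{k+1,j} - \Phi_i^{k,j}\|^2.
\end{aligned} \tag{25}
$$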

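We can easily get $\|\Phi_0^{k,j}\| = \big\| \sum_{i=1}^{n} h_i^{k,j} (\mathbf{p}_i^k \cdot \mathbf{x}^j) \big\| \le \sum_{i=1}^{n} \|\mathbf{p}_i^k \cdot \mathbf{x}^j\| \le \sum_{i=1}^{n} \|\mathbf{p}_i^k\| \|\mathbf{x}^j\| \le n M C_0$ and $\|\xi_i^{k,j}\| = \|\mathbf{x}^j - \mathbf{a}_i^k\| \le M + C_0$. By the definition of $g_j(t)$ in (11), it is easy to find that $g_j'(t) = t - O^j$, and then we can get $|g_j'(\Phi_0^{k,j})| \le (n M C_0 + M) \le n C_0 C_1 + C_1$.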

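Together with Assumption (A) and property 1) of the operator “⊙”, we get

$$
\begin{aligned}
2 \sum_{j=1}^{J} g_j'(\Phi_0^{k,j}) \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} (\xi_i^{k,j} \odot \mathbf{b}_i^k \odot \Delta \mathbf{b}_i^k) \cdot \Delta \mathbf{a}_i^k
&\le 2 J C_0^2 C_1^2 (n C_0 C_1 + C_1) \sum_{i=1}^{n} \|\Delta \mathbf{b}_i^k\| \|\Delta \mathbf{a}_i^k\| \\
&\le J C_0^2 C_1^2 (n C_0 C_1 + C_1) \sum_{i=1}^{n} \big( \|\Delta \mathbf{a}_i^k\|^2 + \|\Delta \mathbf{b}_i^k\|^2 \big),
\end{aligned} \tag{26}
$$

and

$$
\begin{aligned}
-\sum_{j=1}^{J} g_j'(\Phi_0^{k,j}) \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} \|\Phi_i^{k+1,j} - \Phi_i^{k,j}\|^2
&\le C_0 C_1 (n C_0 C_1 + C_1) \sum_{j=1}^{J} \sum_{i=1}^{n} \|\Phi_i^{k+1,j} - \Phi_i^{k,j}\|^2 \\
&= C_0 C_1 (n C_0 C_1 + C_1) \sum_{j=1}^{J} \sum_{i=1}^{n} \big\| (-\Delta \mathbf{a}_i^k) \odot \mathbf{b}_i^{k+1} + \xi_i^{k,j} \odot \Delta \mathbf{b}_i^k \big\|^2 \\
&\le 2 J C_0 C_1 (n C_0 C_1 + C_1) \sum_{i=1}^{n} \big( C_0^2 \|\Delta \mathbf{a}_i^k\|^2 + C_1^2 \|\Delta \mathbf{b}_i^k\|^2 \big) \\
&\le C_{21} \sum_{i=1}^{n} \big( \|\Delta \mathbf{a}_i^k\|^2 + \|\Delta \mathbf{b}_i^k\|^2 \big),
\end{aligned} \tag{27}
$$

where $C_{21} = 2 J C_0 C_1 (n C_0 C_1 + C_1) \max\{ C_0^2, C_1^2 \}$. The combination of (29)–(31) leads to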

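$$
\begin{aligned}
&\sum_{j=1}^{J} g_j'(\Phi_0^{k,j}) \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j)\, h_i^{k,j} \big( -(\Phi_i^{k+1,j} \cdot \Phi_i^{k+1,j} - \Phi_i^{k,j} \cdot \Phi_i^{k,j}) \big) \\
&\le \sum_{i=1}^{n} \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{a}_i} \cdot \Delta \mathbf{a}_i^k + \sum_{i=1}^{n} \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{b}_i} \cdot \Delta \mathbf{b}_i^k + C_{22} \sum_{i=1}^{n} \big( \|\Delta \mathbf{a}_i^k\|^2 + \|\Delta \mathbf{b}_i^k\|^2 \big),
\end{aligned}
$$

where $C_{22} = C_{21} + J C_0^2 C_1^2 (n C_0 C_1 + C_1)$. Furthermore,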

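$$
\begin{aligned}
&\frac{1}{2} \sum_{j=1}^{J} g_j'(\Phi_0^{k,j}) \sum_{i=1}^{n} (\mathbf{p}_i^k \cdot \mathbf{x}^j) \exp(\tilde{t}_i^{k,j}) (\Phi_i^{k+1,j} \cdot \Phi_i^{k+1,j} - \Phi_i^{k,j} \cdot \Phi_i^{k,j})^2 \\
&\le \frac{C_0 C_1 (n C_0 C_1 + C_1)}{2} \sum_{j=1}^{J} \sum_{i=1}^{n} (\Phi_i^{k+1,j} \cdot \Phi_i^{k+1,j} - \Phi_i^{k,j} \cdot \Phi_i^{k,j})^2 \\
&= \frac{C_0 C_1 (n C_0 C_1 + C_1)}{2} \sum_{j=1}^{J} \sum_{i=1}^{n} \big[ (\Phi_i^{k+1,j} + \Phi_i^{k,j}) \cdot (\Phi_i^{k+1,j} - \Phi_i^{k,j}) \big]^2 \\
&\le 2 C_0 C_1^2 (n C_0 C_1 + C_1) \sum_{j=1}^{J} \sum_{i=1}^{n} \|\Phi_i^{k+1,j} - \Phi_i^{k,j}\|^2 \\
&\le C_{23} \sum_{i=1}^{n} \big( \|\Delta \mathbf{a}_i^k\|^2 + \|\Delta \mathbf{b}_i^k\|^2 \big),
\end{aligned} \tag{28}
$$

where $C_{23} = 2 J C_0 C_1^2 (C_0 + C_1)(n C_0 C_1 + C_1)$. Combining (28) and (32) leads to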

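$$
\begin{aligned}
\sum_{j=1}^{J} g_j'(\Phi_0^{k,j}) (\Psi^{k,j} \mathbf{P}^k \mathbf{x}^j)
&\le \sum_{i=1}^{n} \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{a}_i} \cdot \Delta \mathbf{a}_i^k + \sum_{i=1}^{n} \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{b}_i} \cdot \Delta \mathbf{b}_i^k + C_2 \sum_{i=1}^{n} \big( \|\Delta \mathbf{a}_i^k\|^2 + \|\Delta \mathbf{b}_i^k\|^2 \big) \\
&= -\eta \sum_{i=1}^{n} \Big( \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{a}_i} \Big\|^2 + \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{b}_i} \Big\|^2 \Big) + C_2 \eta^2 \sum_{i=1}^{n} \Big( \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{a}_i} \Big\|^2 + \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{b}_i} \Big\|^2 \Big),
\end{aligned}
$$

where $C_2 = C_{22} + C_{23}$.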

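Notice $\|\Phi_i^{t,j}\| = \|\xi_i^{t,j} \odot \mathbf{b}_i^t\| \le C_1$, $\|\mathbf{b}_i^t\| \le C_0$, $\|\xi_i^{t,j}\| \le C_0 + M \le C_1$. By the Taylor mean value theorem with Lagrange remainder and the properties of the Euclidean norm, we get

$$
\begin{aligned}
\|\Psi^{t,j}\|^2
&= \Big\| \big( h_1^{t+1,j} - h_1^{t,j},\ h_2^{t+1,j} - h_2^{t,j},\ \ldots,\ h_n^{t+1,j} - h_n^{t,j} \big)^T \Big\|^2 \\
&= \sum_{i=1}^{n} (h_i^{t+1,j} - h_i^{t,j})^2 = \sum_{i=1}^{n} \Big( \exp\big( -\Phi_i^{t+1,j} \cdot \Phi_i^{t+1,j} \big) - \exp\big( -\Phi_i^{t,j} \cdot \Phi_i^{t,j} \big) \Big)^2 \\
&= \sum_{i=1}^{n} \Big( -\exp(\tilde{s}_i^{t,j}) \big( (\Phi_i^{t+1,j} + \Phi_i^{t,j}) \cdot (\Phi_i^{t+1,j} - \Phi_i^{t,j}) \big) \Big)^2 \\
&\le \sum_{i=1}^{n} \Big( |2 C_1| \big\| \xi_i^{t+1,j} \odot \mathbf{b}_i^{t+1} - \xi_i^{t,j} \odot \mathbf{b}_i^{t} \big\| \Big)^2 \\
&= \sum_{i=1}^{n} \Big( |2 C_1| \big\| (\xi_i^{t+1,j} - \xi_i^{t,j}) \odot \mathbf{b}_i^{t+1} + \xi_i^{t,j} \odot (\mathbf{b}_i^{t+1} - \mathbf{b}_i^{t}) \big\| \Big)^2 \\
&\le \sum_{i=1}^{n} \Big( |2 C_1 C_0| \|\xi_i^{t+1,j} - \xi_i^{t,j}\| + 2 C_1^2 \|\mathbf{b}_i^{t+1} - \mathbf{b}_i^{t}\| \Big)^2 \\
&\le \sum_{i=1}^{n} \Big( C_{31} \big( \|\Delta \mathbf{a}_i^t\| + \|\Delta \mathbf{b}_i^t\| \big) \Big)^2 \le 2 C_{31}^2 \sum_{i=1}^{n} \big( \|\Delta \mathbf{a}_i^t\|^2 + \|\Delta \mathbf{b}_i^t\|^2 \big),
\end{aligned}
$$

where $C_{31} = 2 C_1 \max\{ C_0, C_1 \}$ and $\tilde{s}_i^{t,j}$ lies between $-\Phi_i^{t+1,j} \cdot \Phi_i^{t+1,j}$ and $-\Phi_i^{t,j} \cdot \Phi_i^{t,j}$. A combination of the Cauchy-Schwarz inequality and (33) gives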

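$$
\begin{aligned}
\sum_{j=1}^{J} g_j'(\Phi_0^{k,j}) \big( \Psi^{k,j} \Delta \mathbf{P}^k \mathbf{x}^j \big)
&= \sum_{j=1}^{J} g_j'(\Phi_0^{k,j}) \sum_{i=1}^{n} \Psi_i^{k,j} (\Delta \mathbf{p}_i^k)^T \mathbf{x}^j \\
&= \sum_{j=1}^{J} \sum_{i=1}^{n} g_j'(\Phi_0^{k,j})\, \Psi_i^{k,j} (\Delta \mathbf{p}_i^k)^T \mathbf{x}^j \\
&\le C_1 (n C_0 C_1 + C_1) \sum_{j=1}^{J} \sum_{i=1}^{n} \|\Psi_i^{k,j}\| \|(\Delta \mathbf{p}_i^k)^T\| \\
&\le \frac{C_1 (n C_0 C_1 + C_1)}{2} \sum_{j=1}^{J} \sum_{i=1}^{n} \big( \|\Psi_i^{k,j}\|^2 + \|(\Delta \mathbf{p}_i^k)^T\|^2 \big) \\
&\le J (n C_0 C_1 + C_1) C_{31}^2 \sum_{i=1}^{n} \big( \|\Delta \mathbf{a}_i^k\|^2 + \|\Delta \mathbf{b}_i^k\|^2 \big) + \frac{J (n C_0 C_1 + C_1)}{2} \sum_{i=1}^{n} \|(\Delta \mathbf{p}_i^k)^T\|^2 \\
&\le C_3 \Big( \sum_{i=1}^{n} \|(\Delta \mathbf{p}_i^k)^T\|^2 + \sum_{i=1}^{n} \|\Delta \mathbf{a}_i^k\|^2 + \sum_{i=1}^{n} \|\Delta \mathbf{b}_i^k\|^2 \Big) \\
&= C_3 \eta^2 \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{W}} \Big\|^2,
\end{aligned}
$$

where $C_3 = J (n C_0 C_1 + C_1) \max\{ \frac{1}{2}, 4 C_0^2 C_1^2, 4 C_1^4 \}$.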

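$g_j''(t) = 1$ is easily deduced from the definition of $g_j(t)$ in (11), and we get

$$
\begin{aligned}
\frac{1}{2} \sum_{j=1}^{J} g_j''(s_{k,j}) (\Phi_0^{k+1,j} - \Phi_0^{k,j})^2
&= \frac{1}{2} \sum_{j=1}^{J} \|\Phi_0^{k+1,j} - \Phi_0^{k,j}\|^2 \\
&= \frac{1}{2} \sum_{j=1}^{J} \|\mathbf{h}^{k+1,j} \mathbf{P}^{k+1} \mathbf{x}^j - \mathbf{h}^{k,j} \mathbf{P}^k \mathbf{x}^j\|^2 \\
&= \frac{1}{2} \sum_{j=1}^{J} \Big\| \sum_{i=1}^{n} h_i^{k+1,j} (\mathbf{p}_i^{k+1} \cdot \mathbf{x}^j) - \sum_{i=1}^{n} h_i^{k,j} (\mathbf{p}_i^k \cdot \mathbf{x}^j) \Big\|^2 \\
&= \frac{1}{2} \sum_{j=1}^{J} \Big\| \sum_{i=1}^{n} (h_i^{k+1,j} - h_i^{k,j}) (\mathbf{p}_i^{k+1} \cdot \mathbf{x}^j) + \sum_{i=1}^{n} h_i^{k,j} \big( (\mathbf{p}_i^{k+1} - \mathbf{p}_i^k) \cdot \mathbf{x}^j \big) \Big\|^2 \\
&\le \frac{n}{2} \sum_{j=1}^{J} \sum_{i=1}^{n} \big\| (h_i^{k+1,j} - h_i^{k,j}) (\mathbf{p}_i^{k+1} \cdot \mathbf{x}^j) + h_i^{k,j} (\Delta \mathbf{p}_i^k \cdot \mathbf{x}^j) \big\|^2 \\
&\le n \sum_{j=1}^{J} \sum_{i=1}^{n} \Big[ \big\| (h_i^{k+1,j} - h_i^{k,j}) (\mathbf{p}_i^{k+1} \cdot \mathbf{x}^j) \big\|^2 + \big\| h_i^{k,j} (\Delta \mathbf{p}_i^k \cdot \mathbf{x}^j) \big\|^2 \Big] \\
&\le C_4 \eta^2 \sum_{i=1}^{n} \Big( \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{p}_i} \Big\|^2 + \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{a}_i} \Big\|^2 + \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{b}_i} \Big\|^2 \Big) \\
&= C_4 \eta^2 \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{W}} \Big\|^2,
\end{aligned}
$$

where $C_4 = 4 n J C_1^2 \max\{ C_0^2 C_1^2, C_1^2, 1 \}$.

Using the Taylor expansion theorem, for $k = 0, 1, 2, \ldots$ we get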

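$$
\begin{aligned}
E(\mathbf{W}^{k+1}) - E(\mathbf{W}^k)
&\le -\eta \sum_{i=1}^{n} \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{p}_i} \Big\|^2 - \eta \sum_{i=1}^{n} \Big( \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{a}_i} \Big\|^2 + \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{b}_i} \Big\|^2 \Big) \\
&\quad + C_3 \eta^2 \Big( \sum_{i=1}^{n} \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{p}_i} \Big\|^2 + \sum_{i=1}^{n} \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{a}_i} \Big\|^2 + \sum_{i=1}^{n} \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{b}_i} \Big\|^2 \Big) + (C_2 + C_4) \eta^2 \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{W}} \Big\|^2 \\
&\le -(\eta - C_5 \eta^2) \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{W}} \Big\|^2,
\end{aligned}
$$

where $C_5 = C_2 + C_3 + C_4$, and $s_{k,j} \in \mathbb{R}$ lies between $\Phi_0^{k,j}$ and $\Phi_0^{k+1,j}$. Write $\beta = \eta - C_5 \eta^2$, then

$$E(\mathbf{W}^{k+1}) \le E(\mathbf{W}^k) - \beta \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{W}} \Big\|^2. \tag{30}$$

Suppose the learning rate $\eta$ satisfies

$$0 < \eta < \frac{1}{C_5}. \tag{31}$$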


Then there holds

$$E(\mathbf{W}^{k+1}) \le E(\mathbf{W}^k), \quad k = 0, 1, 2, \ldots. \tag{32}$$

From (32), we know that the nonnegative sequence $\{E(\mathbf{W}^k)\}$ is monotonically non-increasing. Since it is also bounded below, there exists $E^* \ge 0$ such that $\lim_{k \to \infty} E(\mathbf{W}^k) = E^*$. Statement (i) is proved.
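The role of the learning-rate condition can be made explicit with a short calculation, added here as a reading aid. Factoring the coefficient $\beta = \eta - C_5 \eta^2$ gives

$$\beta = \eta (1 - C_5 \eta) > 0 \iff 0 < \eta < \frac{1}{C_5}, \qquad \max_{\eta} \beta = \frac{1}{4 C_5} \ \text{ attained at } \ \eta = \frac{1}{2 C_5}.$$

So the condition on $\eta$ is exactly what makes the guaranteed decrease per step nonnegative, and within this analysis the largest guaranteed decrease is obtained at half of the admissible upper bound.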

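Proof of Statement (ii) Using (35), we have

$$E(\mathbf{W}^{k+1}) \le E(\mathbf{W}^k) - \beta \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{W}} \Big\|^2 \le \cdots \le E(\mathbf{W}^0) - \beta \sum_{t=0}^{k} \Big\| \frac{\partial E(\mathbf{W}^t)}{\partial \mathbf{W}} \Big\|^2.$$

Since $E(\mathbf{W}^{k+1}) \ge 0$, we get

$$\beta \sum_{t=0}^{k} \Big\| \frac{\partial E(\mathbf{W}^t)}{\partial \mathbf{W}} \Big\|^2 \le E(\mathbf{W}^0).$$

Set $k \to \infty$, then

$$\sum_{t=0}^{\infty} \Big\| \frac{\partial E(\mathbf{W}^t)}{\partial \mathbf{W}} \Big\|^2 \le \frac{1}{\beta} E(\mathbf{W}^0) < \infty.$$

This immediately gives

$$\lim_{k \to \infty} \Big\| \frac{\partial E(\mathbf{W}^k)}{\partial \mathbf{W}} \Big\| = 0. \tag{33}$$

Statement (ii) is proved, and this completes the proof of the Theorem.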

6. Conclusion

The first-order Takagi-Sugeno (T-S) system has recently become a powerful practical engineering tool for modeling and control of complex systems. Ref. [7] showed that the Pi-Sigma network is capable of dealing with nonlinear systems more efficiently, and it is a good model for first-order T-S system identification.

We note that the convergence property for Pi-Sigma neural network learning is an interesting research topic which offers an effective guarantee in real application. To further enhance

the potential of Pi-Sigma network, a modified gradient-based algorithm based on first-order T-S

inference system has been proposed to reduce the computational cost of learning. Our contribution is to provide a rigorous convergence analysis for this learning method, and some convergence

results are given which indicate that the gradient of the error function goes to zero and the fuzzy

parameter sequence goes to a local minimum of the error function, respectively.

References

[1] T. TAKAGI, M. SUGENO. Fuzzy identification of systems and its applications to modeling and control.

IEEE Trans. Syst., Man, Cyber., 1985, SMC-15(1): 116–132.

[2] Y. SHIN, J. GHOSH. The Pi-Sigma networks: an efficient higher-order neural network for pattern classification and function approximation. in: Proceedings of International Joint Conference on Neural Networks,

1991, 1: 13–18.

[3] Yaochu JIN, Jingping JIANG, Jing ZHU. Neural network based fuzzy identification and its application to

modeling and control of complex systems. IEEE Transactions on Systems, Man, and Cybernetics, 1995,

25(6): 990–997.


[4] Weixin YU, M. Q. LI, Jiao LUO, et al. Prediction of the mechanical properties of the post-forged Ti–6Al–4V

alloy using fuzzy neural network. Materials and Design, 2012, 31: 3282–3288.

[5] D. M. LIU, G. NAADIMUTHU, E. S. LEE. Trajectory tracking in aircraft landing operations management

using the adaptive neural fuzzy inference system. Comput. Math. Appl., 2008, 56(5): 1322–1327.

[6] I. TURKMEN, K. GUNEY. Genetic tracker with adaptive neuro-fuzzy inference system for multiple target

tracking. Expert Systems with Applications, 2008, 35(4): 1657–1667.

[7] K. CHATURVED, M. PANDIT, L. SRIVASTAVA. Modified neo-fuzzy neuron-based approach for economic

and environmental optimal power dispatch. Applied Soft Computing, 2008, 8(4): 1428–1438.

[8] Wei WU, Long LI, Jie YANG, et al. A modified gradient-based neuro-fuzzy learning algorithm and its

convergence. Information Sciences, 2010, 180(9): 1630–1642.

[9] E. CZOGALA, W. PEDRYCZ. On identification in fuzzy systems and its applications in control problems.

Fuzzy Sets and Systems, 1981, 6(1): 73–83.

[10] W. PEDRYCZ. An identification algorithm in fuzzy relational systems. Fuzzy Sets and Systems, 1984, 13(2):

153–167.

[11] Yongyan CAO, P. M. FRANK. Analysis and Synthesis of Nonlinear Time-Delay Systems via Fuzzy Control

Approach. IEEE Transactions on Fuzzy Systems, 2000, 8(2): 200–211.

[12] Yuju CHEN, Tsungchuan HUANG, Reychue HWANG. An effective learning of neural network by using

RFBP learning algorithm. Inform. Sci., 2004, 167(1-4): 77–86.

[13] Chao ZHANG, Wei WU, Yan XIONG. Convergence analysis of batch gradient algorithm for three classes of

sigma-pi neural networks. Neural Processing Letters, 2007, 26(3): 177–189.

[14] Chao ZHANG, Wei WU, Xianhua CHEN, et al. Convergence of BP algorithm for product unit neural

networks with exponential weights. Neurocomputing, 2008, 72(1–3): 513–520.

[15] J. S. WANG, C. S. G. LEE. Self-adaptive neuro-fuzzy inference systems for classification applications. IEEE

Transactions on Fuzzy Systems, 2002, 10(6): 790–802.

[16] H. ICHIHASHI, I. B. TÜRKŞEN. A neuro-fuzzy approach to data analysis of pairwise comparisons. Internat.

J. Approx. Reason., 1993, 9(3): 227–248.