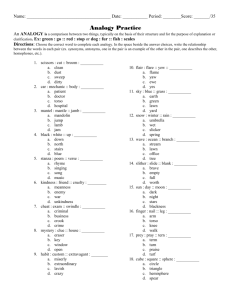

Analogy just looks like high level perception
