D-Index: Distance Searching Index for Metric Data Sets

Multimedia Tools and Applications, 21, 9–33, 2003 c 2003 Kluwer Academic Publishers. Manufactured in The Netherlands.

D-Index: Distance Searching Index for Metric

Data Sets

VLASTISLAV DOHNAL

Masaryk University Brno, Czech Republic xdohnal@fi.muni.cz

c.gennaro@isti.cnr.it

p.savino@isti.cnr.it

CLAUDIO GENNARO

PASQUALE SAVINO

ISI-CNR, Via Moruzzi, 1, 56124, Pisa, Italy

PAVEL ZEZULA

Masaryk University Brno, Czech Republic zezula@fi.muni.cz

Abstract.

In order to speedup retrieval in large collections of data, index structures partition the data into subsets so that query requests can be evaluated without examining the entire collection. As the complexity of modern data types grows, metric spaces have become a popular paradigm for similarity retrieval. We propose a new index structure, called D-Index, that combines a novel clustering technique and the pivot-based distance searching strategy to speed up execution of similarity range and nearest neighbor queries for large files with objects stored in disk memories. We have qualitatively analyzed D-Index and verified its properties on actual implementation.

We have also compared D-Index with other index structures and demonstrated its superiority on several real-life data sets. Contrary to tree organizations, the D-Index structure is suitable for dynamic environments with a high rate of delete/insert operations.

Keywords: metric spaces, similarity search, index structures, performance evaluation

1.

Introduction

The concept of similarity searching based on relative distances between a query and database objects has become essential for a number of application areas, e.g. data mining, signal processing, geographic databases, information retrieval, or computational biology. They usually exploit data types such as sets, strings, vectors, or complex structures that can be exemplified by XML documents. Intuitively, the problem is to find similar objects with respect to a query object according to a domain specific distance measure. The problem can be formalized by the mathematical notion of the metric space , so the data elements are assumed to be objects from a metric space where only pairwise distances between the objects can be determined.

The growing need to deal with large, possibly distributed, archives requires an indexing support to speedup retrieval. An interesting survey of indexes built on the metric distance paradigm can be found in [4]. The common assumption is that the costs to build and to maintain an index structure are much less important compared to the costs that are needed to execute a query. Though this is certainly true for prevalently static collections of data,

10 DOHNAL ET AL.

such as those occurring in the traditional information retrieval, some other applications may need to deal with dynamic objects that are permanently subject of change as a function of time. In such environments, updates are inevitable and the costs of update are becoming a matter of concern.

One important characteristic of distance based index structures is that, depending on specific data and a distance function, the performance can be either I/O or CPU bound.

For example, let us consider two different applications: in the first one, the similarity of text strings of one hundred characters is computed by using the edit distance , which has a quadratic computational complexity; in the second application, one hundred dimensional vectors are compared by the inner product , with a linear computational complexity. Since the text objects are four times shorter than the vectors and the distance computation on the vectors is one hundred times faster than the edit distance, the minimization of I/O accesses for the vectors is much more important that for the text strings. On the contrary, the CPU costs are more significant for computing the distance of text strings than for the distance of vectors. In general, the strategy of indexes based on distances should be able to minimize both of the cost components.

An index structure supporting similarity retrieval should be able to execute similarity range queries with any search radius. However, a significant group of applications needs retrieval of objects which are in a very near vicinity of the query object—e.g., copy (replica) detection, cluster analysis, DNA mutations, corrupted signals after their transmission over noisy channels, or typing (spelling) errors. Thus in some cases, special attention may be warranted.

In this article, we propose a similarity search structure, called D-Index, that is able to reduce both the I/O and CPU costs. The organization stores data objects in buckets with direct access to avoid hierarchical bucket dependencies. Such organization also results in a very efficient insertion and deletion of objects. Though similarity constraints of queries can be defined arbitrarily, the structure is extremely efficient for queries searching for very close objects.

A short preliminary version of this article is available in [8]. The major extensions concern generic search algorithms, an extensive experimental evaluation, and comparison with other index structures. The rest of the article is organized as follows. In Section 2, we define the general principles of the D-Index. The system structure, algorithms, and design issues are specified in Section 3, while experimental evaluation results can be found in Section 4. The article concludes in Section 5.

2.

Search strategies

A metric space M = ( D , d ) is defined by a domain of objects (elements, points) D and a total (distance) function d —a non negative and symmetric function which satisfies the triangle inequality d ( x

, y )

≤ d ( x

, z )

+ d ( z

, y )

, ∀ x

, y

, z

∈ D

. Without any loss of generality, we assume that the maximum distance never exceeds the maximum distance d

+

. In general, we study the following problem: Given a set X

⊆ D in the metric space

M

, pre-process or structure the elements of X so that similarity queries can be answered efficiently .

D-INDEX: DISTANCE SEARCHING INDEX FOR METRIC DATA SETS 11

For a query object q ∈ D , two fundamental similarity queries can be defined. A range query retrieves all elements within distance r to q , that is the set { x ∈ X , d ( q , x ) ≤ r } . A nearest neighbor query retrieves the k closest elements to q , that is a set A

⊆

X such that

|

A

| = k and

∀ x

∈

A

, y

∈

X

−

A

, d ( q

, x )

≤ d ( q

, y ).

According to the survey [4], existing approaches to search general metric spaces can be classified in two basic categories:

Clustering algorithms . The idea is to divide, often recursively, the search space into partitions

(groups, sets, clusters) characterized by some representative information.

Pivot-based algorithms . The second trend is based on choosing t distinguished elements from

D

, storing for each database element x distances to these t pivots, and using such information for speeding up retrieval.

Though the number of existing search algorithms is impressive, see [4], the search costs are still high, and there is no algorithm that is able to outperform the others in all situations.

Furthermore, most of the algorithms are implemented as main memory structures, thus do not scale up well to deal with large data files.

The access structure we propose in this paper, called D-Index, synergistically combines more principles into a single system in order to minimize the amount of accessed data, as well as the number of distance computations. In particular, we define a new technique to recursively cluster data in separable partitions of data blocks and we combine it with pivot-based strategies to decrease the I/O costs. In the following, we first formally specify the underlying principles of our clustering and pivoting techniques and then we present examples to illustrate the ideas behind the definitions.

2.1.

Clustering through separable partitioning

To achieve our objectives, we base our partitioning principles on a mapping function, which we call the

ρ

split function , where

ρ is a real number constrained as 0

≤ ρ < d

+

. In order to gradually explain the concept of ρ -split functions, we first define a first order ρ -split function and its properties.

Definition 1 .

Given a metric space (

D , d ), a first order

ρ

-split function s s

1 ,ρ s

1 ,ρ

( y )

=

1

⇒ d (

− ⇒ d ( x , y ) > ρ x

2

, y )

− ρ

1

>

2

ρ

(separable property) and

(symmetry property).

ρ

2

≥ ρ

1

∧ s

1 ,ρ

2

1 ,ρ

:

D → {

0

,

1

, −}

, such that for arbitrary different objects x

, y

∈ D , s is the mapping

1 ,ρ

( x )

=

0

∧

( x )

= − ∧ s

1 ,ρ

1 ( y )

=

In other words, the

ρ

-split function assigns to each object of the space

D one of the symbols

0, 1, or

−

. Moreover, the function must hold the separable and symmetry properties. The meaning and the importance of these properties will be clarified later.

We can generalize the ρ -split function by concatenating n first order ρ -split functions with the purpose of obtaining a split function of order n .

Definition 2 a

ρ

.

Given n first order

-split function of order n s n

,ρ

ρ

=

-split functions

( s

1

,ρ

1

, s

1

,ρ

2 s

, . . . ,

1 ,ρ

1 s

1

,ρ n

, . . . , s

1 ,ρ n

) :

D in the metric space (

→ {

0

,

1

, −} n

D , d ), is the mapping,

12 DOHNAL ET AL.

such that for arbitrary different objects x , y ∈ D , ∀ i s s n

,ρ

( x ) = s

− ∧ ∃ j s

1 ,ρ

1 j n

,ρ

( y ) ⇒ d ( x , y ) > 2 ρ (separable property) and ρ

( y ) = − ⇒ d ( x , y ) > ρ

2

− ρ

1 i

1

,ρ

( x ) = − ∧ ∀ j s

(symmetry property).

2

≥ ρ

1

1

,ρ j

( y

∧ ∀ i s i

)

1

,ρ

= − ∧

2 ( x ) =

An obvious consequence of the that by combining n ρ

ρ -split function definitions, useful for our purposes, is

-split functions of order 1 s

1

,ρ

1

, . . . , s

1

,ρ n

, which have the separable and symmetric properties, we obtain a

ρ

-split function of order n s n

,ρ

, which also demonstrates the separable and symmetric properties. We often refer to the number of symbols generated by s n

,ρ

, that is the parameter n , as the order of the

ρ

-split function. In order to obtain an addressing scheme, we need another function that transforms the

ρ

-split strings into integers, which we define as follows.

Definition 3 .

Given a string b

=

( b

·

:

{

0

,

1

, −} n →

[0

..

2 n

1

] is specified as:

, . . . , b n

) of n elements 0, 1, or

−

, the function b =

[ b

1

, b

2

, . . . , b n

]

2

= n j

=

1

2 j

−

1 b j

, if

∀ j b j

= −

2 n , otherwise

When all the elements are different from ‘

−

’, the function b simply translates the string b into an integer by interpreting it as a binary number (which is always

<

2 n ), otherwise the function returns 2 n .

By means of the

ρ

-split function and the b operator we can assign an integer number i (0

≤ i

≤

2

2 n n ) to each object x

∈ D

, i.e., the function can group objects from X

⊂ D in

+

1 disjoint sub-sets.

Assuming a split function s

ρ n ,ρ

-split function s n

,ρ

, we use the capital letter S n

,ρ to designate partitions

(sub-sets) of objects from X , which the function can produce. More precisely, given a

ρ

and a set of objects X , we define S n ,ρ

[ i ]

( X )

= { x

∈

X

| s n ,ρ

( x )

= i

}

. We call the first 2 n function s n ,ρ

( x sets the

) separable sets evaluates to 2 n

. The last set, that is the set of objects for which the

, is called the exclusion set .

For illustration, given two split functions of order 1, s

1

1

,ρ and s

2

1

,ρ

, a ρ -split function of order 2 produces strings as a concatenation of strings returned by functions s

1 ,ρ

1 and s

1 ,ρ

2

.

The combination of the

ρ

-split functions can also be seen from the perspective of the object data set. Considering again the illustrative example above, the resulting function of order 2 generates an exclusion set as the union of the exclusion sets of the two first order split functions, i.e. ( S

1 ,ρ

1[2]

( D ) ∪ S

1 ,ρ

2[2]

( D )). Moreover, the four separable sets, which are given by the combination of the separable sets of the original split functions, are determined as follows: { S

1

,ρ

1[0]

( D ) ∩ S

1

,ρ

2[0]

( D ) , S

1

,ρ

1[0]

( D ) ∩ S

1

,ρ

2[1]

( D ) , S

1

,ρ

1[1]

( D ) ∩ S

1

,ρ

2[0]

( D ) , S

1

,ρ

1[1]

( D ) ∩ S

1

,ρ

2[1]

( D ) } .

The separable property of the ρ -split function allows to partition a set of objects X in sub-sets S n

,ρ

[ i ]

( X ), so that the distance from an object in one sub-set to another object in a different sub-set is more than 2

ρ

, which is true for all i

<

2 n . We say that such disjoint separation of sub-sets, or partitioning, is separable up to 2

ρ

. This property will be used during the retrieval, since a range query with radius

≤ ρ requires to access only one of the separable sets and, possibly the exclusion set. For convenience, we denote a set of separable

D-INDEX: DISTANCE SEARCHING INDEX FOR METRIC DATA SETS 13 partitions

{

S n

,ρ

[0]

(

D

)

, . . . ,

S n

,ρ

[2 n −

1]

(

D

)

} as

{

S n

,ρ

[

·

]

(

D

)

}

. The last partition S n

,ρ

[2 n ]

(

D

) is the exclusion set.

When the

ρ parameter changes, the separable partitions shrink or widen correspondingly.

The symmetric property guarantees a uniform reaction in all the partitions. This property is essential in the similarity search phase as detailed in Sections 3.2 and 3.3.

2.2.

Pivot-based filtering

In general, the pivot-based algorithms can be viewed as a mapping F from the original metric space ( D , d ) to a t -dimensional vector space with the L ping assumes a set T

= { p

( d ( o

, p d ( q

, p

2

1

)

, d ( o

, p

2

)

, . . . , d ( o

, p

)

, . . . , d ( q

, p t

1

, p

2 t

, . . . , p t

} of objects from

)) and discard for the search radius r

D an object

∞ o distance. The map-

, called pivots, and for each database object o , the mapping determines its characteristic (feature) vector as F ( o )

=

)). We designate the new metric space as if

M

T

(

R t ,

L

∞

). At search time, we compute for a query object q the query feature vector F ( q )

=

( d ( q

, p

1

)

,

L

∞

( F ( o ) , F ( q )) > r (1)

In other words, the object o can be discarded if for some pivot p i

,

| d ( q

, p i

)

− d ( o

, p i

)

| > r (2)

Due to the triangle inequality, the mapping F is contractive , that is all discarded objects do not belong to the result set. However, some not-discarded objects may not be relevant and must be verified through the original distance function d (

·

). For more details, see for example [3].

2.3.

Illustration of the idea

Before we specify the structure and the insertion/search algorithms of D-Index, we illustrate the

ρ

-split clustering and pivoting techniques by simple examples.

Though several different types of first order ρ -split functions are proposed, analyzed, and evaluated in [7], the ball partitioning split ( bps ) originally proposed in [12] under the name excluded middle partitioning , provided the smallest exclusion set. For this reason, we also apply this approach, which can be characterized as follows.

The ball partitioning

ρ

-split function bps

ρ

( x

, x v

) uses one object x distance d m to partition the data file into three subsets BPS

1

,ρ

[0]

, v

BPS

∈ D and the medium

1

,ρ

[1] and BPS

1

,ρ

[2]

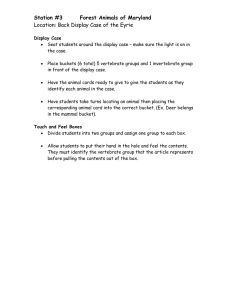

—the result of the following function gives the index of the set to which the object x belongs (see figure 1).

bps

1

,ρ

( x ) =

0 if d ( x

, x v

1 if d ( x

, x v

− otherwise

)

≤ d m

)

> d m

− ρ

+ ρ

(3)

14 DOHNAL ET AL.

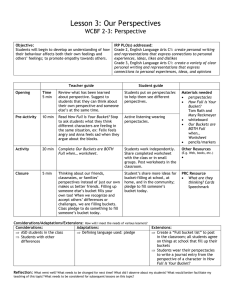

Figure 1 .

The excluded middle partitioning.

Note that the median distance d m is relative to x v and it is defined so that the number of objects with distances smaller than d m is the same as the number of objects with distances larger than d m

. For more details see [11].

In order to see that the bps

ρ

-split function behaves in the desired way, it is sufficient to prove that the separable and symmetric properties hold.

Separable property and d d x

0

(

( x x

0

0 d ( x

, x

1

1

, x v

Moreover, since

. We have to prove that

)

> d

)

+ d ( x

0

, x m v and d ( x because the proof for x

0

∈ x

BPS

1

, y )

> ρ

2

1

1 ,ρ

[0]

2

− ρ

1 then d m

− ρ

2 the above inequalities we obtain d ( y

, x v inequality we have that d ( x

0

)

−

, y )

> d ( y

, x v

∀ d x with

ρ

(

0 expression, we get d ( x

0

, x

1

Symmetric property . We have to prove that

∀ x

0

, y )

> ρ

2

− ρ

1 x

0

∈

∈

BPS

, x

BPS

≥ d ( x

)

− d ( x v

0

)

0

, x

, x

1

,ρ

[0] v

> ρ v

, x

1

, x

1

1 ,ρ

1

[2]

∈

BPS

+ ρ

. Since the triangle inequality among x

0

)

≥ d ( x

1 then d

1

,ρ

[1]

If we consider Definition 1 of the split function, we can write d ( x

, x v

(

0

: d ( x

0

,

, x v x v y

, x v

)

, x

1

)

>

2

ρ

.

)

≤ d m

− ρ

). If we combine these inequalities and simplify the

)

>

2

ρ

.

1

,ρ

[0]

2

, x

1

∈

BPS

1

,ρ

2

[1]

, holds, we get y

∈

>

BPS d m

1

,ρ

1

[2]

− ρ

). By summing both the sides of

2

− ρ

1

. Finally, from the triangle

) and then d ( x

0

, y )

≥ ρ

2

− ρ

1

.

1

:

2

≥ ρ

1

. We prove it only for the object is analogous. Since y

∈

BPS .

As explained in Section 2.1, once we have defined a set of first order

ρ

-split functions, it is possible to combine them in order to obtain a function which generates more partitions.

This idea is depicted in figure 2, where two bps -split functions, on the two-dimensional space, are used. The domain

D

, represented by the grey square, is divided into four regions

{

S

2 ,ρ

[

·

]

(

D

)

} = {

S

2 ,ρ

[0]

(

D

)

,

S tions. The partition S

2

,ρ

[4]

2 ,ρ

[1]

(

D

)

,

S

2 ,ρ

[2]

(

D

)

,

S

2 ,ρ

[3]

(

D

)

}

, corresponding to the separable parti-

(

D

), the exclusion set, is represented by the brighter region and it is formed by the union of the exclusion sets resulting from the two splits.

D-INDEX: DISTANCE SEARCHING INDEX FOR METRIC DATA SETS 15

Figure 2 .

Clustering through partitioning in the two-dimensional space.

Figure 3 .

Example of pivots behavior.

In figure 3, the basic principle of the pivoting technique is illustrated, where q is the query object, r the query radius, and p i a pivot. Provided the distance between any object and p i is known, the gray area represents the region of objects x , that do not belong to the query result.

This can easily be decided without actually computing the distance between q and x , by using the triangle inequalities d ( x

, p i

)

+ d ( x

, q )

≥ d ( p i

, q ) and d ( p i

, q )

+ d ( x

, q )

≥ d ( x

, p i

), and respecting the query radius r . Naturally, by using more pivots, we can improve the probability of excluding an object without actually computing its distance with respect to q .

3.

System structure and algorithms

In this section we specify the structure of the Distance Searching Index, D-Index, and discuss the problems related to the definition of

ρ

-split functions and the choice of reference objects.

16 DOHNAL ET AL.

Then we specify the insertion algorithm and outline the principle of searching. Finally, we define the generic similarity range and nearest neighbor algorithms.

3.1.

Storage architecture

The basic idea of the D-Index is to create a multilevel storage and retrieval structure that uses several

ρ

-split functions, one for each level, to create an array of buckets for storing objects. On the first level, we use a

ρ

-split function for separating objects of the whole data set. For any other level, objects mapped to the exclusion bucket of the previous level are the candidates for storage in separable buckets of this level. Finally, the exclusion bucket of the last level forms the exclusion bucket of the whole D-Index structure. It is worth noting that the

ρ

-split functions of individual levels use the same

ρ

. Moreover, split functions can have different order, typically decreasing with the level, allowing the D-Index structure to have levels with a different number of buckets. More precisely, the D-Index structure can be defined as follows.

Definition 4 .

Given a set of objects X , the h -level distance searching index DI m

2

, . . . , m h dent

ρ

-split functions s i m i

,ρ

( i

=

1

,

2

, . . . , h ) which generate:

ρ

( X

, m

1

) with the buckets at each level separable up to 2

ρ

, is determined by h indepen-

,

Separable buckets

{

B i

,

0

Exclusion bucket

,

B i

,

1

E

, . . . ,

B i

,

2 mi −

1 i

=

} =

S m

1

1[2

,ρ m

1 ]

( X ) if i

=

1

S m i i [2

,ρ mi

]

S

S m

1

1[

·

]

,ρ m i i [

·

]

,ρ

(

(

E

X i

−

1

)

)

( E i

−

1

) if i if if

> i i

1

=

>

1

1

From the structure point of view, you can see the buckets organized as the following two dimensional array consisting of 1

+ h i

=

1

2 m i elements.

.

..

B

1

,

0

,

B

1

,

1

, . . . ,

B

1

,

2 m

1

−

1

B

2

,

0

,

B

2

,

1

, . . . ,

B

2

,

2 m

2

−

1

B h

,

0

, B h

,

1

, . . . , B h

,

2 mh −

1

, E h

All separable buckets are included, but only the E h buckets E i

< h exclusion bucket is present—exclusion are recursively re-partitioned on level i + 1. Then, for each row (i.e. the D-

Index level) i , 2 m i buckets are separable up to 2 ρ thus we are sure that do not exist two buckets at the same level i both containing relevant objects for any similarity range query with radius r x

≤ ρ

. Naturally, there is a tradeoff between the number of separable buckets and the amount of objects in the exclusion bucket—the more separable buckets there are, the greater the number of objects in the exclusion bucket is. However, the set of objects in the exclusion bucket can recursively be re-partitioned, with possibly different number of bits ( m i

) and certainly different

ρ

-split functions.

D-INDEX: DISTANCE SEARCHING INDEX FOR METRIC DATA SETS 17

3.2.

Insertion and search strategies

In order to complete our description of the D-Index, we present an insertion algorithm and a sketch of a simplified search algorithm. Advanced search techniques are described in the next section.

Insertion of object x

∈

X in DI

ρ

( X

, m

1

, m

2

, . . . , m h

) proceeds according to the following algorithm.

Algorithm 1.

Insertion for i

=

1 to h if s i m i x

→

B exit ;

,ρ

( x )

<

2 i , s i mi m i

,ρ

( x )

; end if end for x

→

E h

;

Starting from the first level, Algorithm 1 tries to accommodate x into a separable bucket. If a suitable bucket exists, the object is stored in this bucket. If it fails for all levels, the object x is placed in the exclusion bucket E h

. In any case, the insertion algorithm determines exactly one bucket to store the object.

Given a query region

Q = R

( q

, r q

) with q

∈ D and r q execute the query as follows.

≤ ρ

, a simple algorithm can

Algorithm 2.

Search for i

=

1 to h return all objects x such that x ∈ Q ∩ B i , s i mi end for return all objects x such that x

∈ Q ∩

E h

;

,

0

( q )

;

The function s i m i

,

0

( q ) always gives a value smaller than 2 m i , because

ρ =

0. Consequently, one separable bucket on each level i is determined. Objects from the query response set cannot be in any other separable bucket on the level i , because r q is not greater than ρ and the buckets are separable up to 2 ρ . However, some of them can be in the exclusion zone, and Algorithm 2 assumes that exclusion buckets are always accessed. That is why all levels are considered, and also the exclusion bucket E h is accessed. The execution of Algorithm 2 requires h

+

1 bucket accesses, which forms the upper bound of a more sophisticated algorithm described in the next section.

3.3.

Generic search algorithms

The search Algorithm 2 requires to access one bucket at each level of the D-Index, plus the exclusion bucket. In the following two situations, however, the number of accesses can

18 DOHNAL ET AL.

even be reduced:

– if the query region is contained in the exclusion partition of the level i , then the query cannot have objects in the separable buckets of this level and only the next level, if it exists, must be considered;

– if the query region is contained in a separable partition of the level i , the following levels, as well as the exclusion bucket for i

= h , need not be accessed, thus the search terminates on this level.

Another drawback of the simple algorithm is that it works only for search radii up to

ρ

.

However, with additional computational effort, queries with r q

> ρ can also be executed.

Indeed, queries with r q

> ρ can be executed by evaluating the split function s s r q

− ρ r q

− ρ

. In case returns a string without any ‘

−

’, the result is contained in a single bucket (namely

B s rq

− ρ ) plus, possibly, the exclusion bucket.

Let us now consider that the string returned contains at least one ‘

−

’. We indicate this string as ( b

1

, . . . , b n

) with b i

= { 0 , 1 , −} . In case there is only one b all buckets B , whose index is obtained by substituting in s r q most general case we must substitute in ( b

1

, . . . , b n

− ρ i

= ‘ − ’, we must access the ‘ − ’ with 0 and 1. In the

) all the ‘ − ’ with zeros and ones and generate all possible combinations. A simple example of this concept is illustrated in figure 4.

In order to define an algorithm for this process, we need some additional terms and notation.

Definition 5 .

We define an extended exclusive OR bit operator,

⊗

, which is based on the following truth table: bit 1 bit 2

⊗

0

0

0

0

1

1

0

−

0

1

0

1

1

1

0

1

−

0

−

0

0

−

1

0

−

−

0

Figure 4 .

Example of use of the function G.

D-INDEX: DISTANCE SEARCHING INDEX FOR METRIC DATA SETS 19

Notice that the operator ⊗ can be used bit-wise on two strings of the same length and that it always returns a standard binary number (i.e., it does not contain any ‘ − ’). Consequently, s

1

⊗ s

2

<

2 n is always true for strings s

1 and s

2 of length n (see Definition 3).

Definition 6 .

Given a string s of length n , G ( s ) denotes a sub-set of n such that all elements e i

∈ n for which s

⊗ e i

= {

0

,

1

, . . . ,

2 n

=

0 are eliminated (interpreting e i

−

1

}

, as a binary string of length n ).

Observe that, G (

− − · · · −

)

= n

, and that the cardinality of the set is the second power of the number of ‘

−

’ elements, that is generating all partition identifications in which the symbol ‘

−

’ is alternatively substituted by zeros and ones. In fact, we can use G (

·

) to generate, from a string returned by a split function, the set of partitions that need be accessed to execute the query. As an example let us consider that n

=

2 as in figure 4. If s s

2 , r

2 , r q q

− ρ

− ρ

=

(

−

1) then we must access buckets B

1 and B

3

. In such a case G (

−

1)

= {

1

,

3

}

. If

=

(

−−

) then we must access all buckets, as G (

−−

)

= {

0

,

1

,

2

,

3

} indicates.

We only apply the function G when the search radius r q is greater than the parameter

ρ

.

We first evaluate the split function using r q

− ρ

(which is greater than 0). Next, the function

G is applied to the resulting string. In this way, G generates the partitions that intersect the query region.

In the following, we specify how such ideas are applied in the D-Index to implement generic range and nearest neighbor algorithms.

3.3.1. Range queries.

Given a query region Q = R ( q , r q

) with q ∈ advanced algorithm can execute the similarity range query as follows.

D and r q

≤ d

+

. An

Algorithm 3.

Range search

01 .

for i

=

1 to h

02

03 .

.

if s i m i

,ρ + r q exit ;

( q )

<

2 m i then (exclusive containment in a separable bucket) return all objects x such that x

∈ Q ∩

B i , s i mi

,ρ + rq

( q )

;

04 .

end if

05 .

if r q

≤ ρ then (search radius up to

ρ

)

06

07 .

.

if s i m i

,ρ − r q ( q )

<

2 m i then (not exclusive containment in an exclusion bucket) return all objects x such that x

∈ Q ∩

B i , s i mi

,ρ − rq

( q )

;

08 .

end if

09 .

else (search radius greater than

ρ

)

10

11 .

.

let

{ l

1

, l 2

, . . . , lk x

∈ Q ∩

B i , l k

} =

;

G ( s i m i

, r q

− ρ

( q )) return all objects x such that x

∈ Q ∩

B i , l

1

12 .

end if or x

∈ Q ∩

B i , l

2 or

. . .

or

13 .

end for

14 .

return all objects x such that x

∈ Q ∩

E h

;

20 DOHNAL ET AL.

In general, Algorithm 3 considers all D-Index levels and eventually also accesses the global exclusion bucket. However, due to the symmetry property, the test on the line 02 can discover the exclusive containment of the query region in a separable bucket and terminate the search earlier. Otherwise, the algorithm proceeds according to the size of the query radius. If it is not greater than

ρ

, line 05, there are two possibilities. If the test on line 06 is satisfied, one separable bucket is accessed. Otherwise no separable bucket is accessed on this level, because the query region is, from this level point of view, exclusively in the exclusion zone. Provided the search radius is greater than

ρ

, more separable buckets are accessed on a specific level as defined by lines 10 and 11. Unless terminated earlier, the algorithm accesses the exclusion bucket at line 14.

3.3.2. Nearest neighbor queries.

The task of the nearest neighbor search is to retrieve k closest elements from X to q

∈ D

, respecting the metric

M

. Specifically, it retrieves a set

A

⊆

X such that

|

A

| = k and

∀ x

∈

A

, y

∈

X

−

A

, d ( q

, x )

≤ d ( q

, y ). In case of ties, we are satisfied with any set of k elements complying with the condition. For convenience, we designate the distance to the k -th nearest neighbor as d k

( d k

≤ d

+

).

The general strategy of Algorithm 4 works as follows. At the beginning (line 01), we assume that the response set A is empty and d k

= d

+

. The algorithm then proceeds with an optimistic strategy (lines 02 through 13) assuming that the k -th nearest neighbor is at distance maximally ρ . If it fails, see the test on line 14, additional steps of search are performed to find the correct result. Specifically, the first phase of the algorithm determines the buckets (maximally one separable at each level) by using the range query strategy with radius r

= min

{ d k

, ρ }

. In this way, the most promising buckets are accessed and their identifications are remembered to avoid multiple bucket accesses. If d k

≤ ρ the search terminates successfully and A contains the result. Otherwise, additional bucket accesses are performed (lines 15 through 22) using the strategy for radii greater than

ρ of Algorithm 3, ignoring the buckets already accessed in the first (optimistic) phase.

Algorithm 4.

Nearest neighbor search

01.

A = ∅ , d k

= d

+

; (initialization)

02.

for i = 1 to h (first - optimistic - phase)

03.

04.

r if

= s i min m i

{

,ρ + r d k

, ρ }

;

( q )

<

2 m i then (exclusive containment in a separable bucket)

05.

06.

access bucket B i , s i mi

,ρ + r

( q )

; update A and d k

; if d k

≤ ρ then exit ; (the response set is determined)

07.

else

08.

if s i m i

09.

10.

end if

,ρ − r

( q )

< access bucket

2

B m i (not exclusive containment in an exclusion bucket) i

, s i mi , 0

( q )

; update A and d k

;

11.

end if

12.

end for

13. access bucket E h

; update A and d k

;

14.

if d k

> ρ

(second phase - if needed)

D-INDEX: DISTANCE SEARCHING INDEX FOR METRIC DATA SETS 21

15.

for i = 1 to h

16.

if s i m i

,ρ + d k ( q ) < 2

17.

access bucket B m i i

, s i mi then

,ρ + dk

(exclusive containment in a separable bucket)

( q ) if not already accessed; update A and d k

; exit ;

18.

19.

20.

21.

else let

{ b

1

, b

2

, . . . , access buckets b

B i k

,

} =

G s i m i b

1

, d

, . . . ,

B i

, b

2 k

− ρ

,

B

( q ) i

, b k end if if not accessed; update A and d k

;

22.

end for

23.

end if

The output of the algorithm is the result set A and the distance to the last ( k -th) nearest neighbor d k

. Whenever a bucket is accessed, the response set A and the distance to the k -th nearest neighbor must be updated. In particular, the new response set is determined as the result of the nearest neighbor query over the union of objects from the accessed bucket and the previous response set A . The value of d k

, which is the current search radius in the given bucket, is adjusted according to the new response set. Notice that the pivots are used during the bucket accesses, as it is explained in the next subsection.

3.4.

Design issues

In order to apply D-Index, the ρ -split functions must be designed, which obviously requires specification of reference (pivot) objects. An important feature of D-Index is that the same reference objects used in the

ρ

-split functions for the partitioning into buckets are also used as pivots for the internal management of buckets.

3.4.1. Choosing references.

The problem of choosing reference objects (pivots) is important for any search technique in the general metric space, because all such algorithms need, directly or indirectly, some “anchors” for partitioning and search pruning. It is well known that the way in which pivots are selected can affect the performance of proper algorithms.

This has been recognized and demonstrated by several researchers, e.g. [11] or [1], but specific suggestions for choosing good reference objects are rather vague. In this article, we use the same sets of reference objects both for the separable partitioning and the pivot-based filtering.

Recently, the problem was systematically studied in [2], and several strategies for selecting pivots have been proposed and tested. The generic conclusion is that the mean

µ

M

T of distance distribution in the feature space

M

T should be as high as possible, which is formalized by the hypothesis that the set of pivots T set T

2

= { p

1

, p

2

, . . . , p t

} if

1

= { p

1

, p

2

, . . . , p t

} is better than the

µ

M

T

1

> µ

M

T

2

(4)

However, the problem is how to find the mean for given pivot set T . An estimate of such quantity is proposed in [2] and computed as:

22 DOHNAL ET AL.

– at random, choose l pairs of elements

{

( o

1

, o

1

)

,

( o

2

, o

2

)

, . . . ,

( o l

, o l

)

} from

D

;

– for all pairs of elements, compute their distances in the feature space determined by the pivot set T ;

– compute

µ

M

T as the mean of these distances.

We apply the incremental selection strategy which was found in [2] as the most suitable for real world metric spaces, which works as follows. At the beginning, a set T = { p

1

} of one element from a sample of m database elements is chosen, such that the pivot p

1 has the maximum µ

M

T value. Then, a second pivot p

2 elements of the database, creating a new set K

= { p

1 is chosen from another sample of m

, p

2

} for fixed p

1

, maximizing

µ

M

T

.

The third pivot p

3 another set T

= { p is chosen from another sample of m elements of the database, creating

1

, p

2

, p

3

} for fixed p

1

, p

2

, maximizing

µ

M

T

. The process is repeated until t pivots are determined.

In order to verify the capability of the incremental (INC) strategy, we have also tried to select pivots at random (RAN) and with a modified incremental strategy, called the middle search strategy (MID). The MID strategy combines the original INC approach with the following idea. The set of pivots T

T

2

= { p

1

, p

2

, . . . , p t

} if

| µ

T

1

− d m distance in a given data set and

µ

T

| < | µ

T

2

1

− d m

= { p

1

, p

2

|

, where d

, . . . , p m t

} is better than the set represents the average global is the average distance in the set T . In principle, this strategy supports choices of pivots with high

µ

M

T but also tries to keep distances between pairs of pivots close to d m

. The hypothesis is that too close or too distant pairs of pivots are not suitable for good partitioning of metric spaces.

3.4.2. Elastic bucket implementation.

Since a uniform bucket occupation is difficult to guarantee, a bucket consists of a header plus a dynamic list of fixed size blocks of capacity that is sufficient to accommodate any object from the processed file. The header is characterized by a set of pivots and it contains distances to these pivots from all objects stored in the bucket. Furthermore, objects are sorted according to their distances to the first pivot p

1 to form a non-decreasing sequence. In order to cope with dynamic data, a block overflow is solved by splitting.

Given a query object q and search radius r a bucket evaluation proceeds in the following steps:

– ignore all blocks containing objects with distances to p r

, d ( q

, p

1

)

+ r ];

– for each not ignored block, if

∀ o

– access remaining blocks and ∀ o i i

,

L

, L

∞

∞

( F ( o i

1 outside the interval [

) , F ( q )) ≤ r compute d ( q , o i

). If d d

(

( F ( o i

)

,

F ( q ))

> r then ignore the block;

( q q

,

, p o i

1

)

−

) ≤ r , then o i qualifies.

In this way, the number of accessed blocks and necessary distance computations are minimized.

3.5.

Properties of D-index

On a qualitative level, the most important properties of D-Index can be summarized as follows:

D-INDEX: DISTANCE SEARCHING INDEX FOR METRIC DATA SETS 23

– An object is typically inserted at the cost of one block access, because objects in buckets are sorted. Since blocks are of fixed length, a block can overflow and a split of an overloaded block costs another block access. The number of distance computations between the reference (pivot) and inserted objects varies from m inserted on level j , j i

=

1 m i

1 to distance computations are needed.

h i

=

1 m i

. For an object

– For all response sets such that the distance to the most dissimilar object does not exceed

ρ , the number of bucket accesses is maximally h + 1. The smaller the search radius, the more efficient the search can be.

– For r

=

0, i.e. the exact match, the successful search is typically solved with one block access, unsuccessful search usually does not require any access—very skewed distance distributions may result in slightly more accesses.

– For search radii greater than

ρ

, the number of bucket accesses is higher than h

+

1, and for very large search radii, the query evaluation can eventually access all buckets.

– The number of accessed blocks in a bucket depends on the search radius and the distance distribution with respect to the first pivot.

– The number of distance computations between the query object and pivots is determined by the highest level of an accessed bucket. Provided the level is j , the number of distance computations is j i

=

1 m i

, with the upper bound for any kind of query h i

=

1 m i

.

– The number of distance computations between the query object and data objects stored in accessed buckets depends on the search radii and the number of pre-computed distances to pivots.

From the quantitative point of view, we investigate the performance of D-Index in the next section.

4.

Performance evaluation and comparison

We have implemented the D-Index and conducted numerous experiments to verify its properties on the following three metric data sets:

VEC 45-dimensional vectors of image color features compared by the quadratic distance measure respecting correlations between individual colors.

URL sets of URL addresses attended by users during work sessions with the Masaryk

University information system. The distance measure used was based on set similarity, defined as the fraction of cardinalities of intersection and union (Jacard coefficient).

STR sentences of a Czech national corpus compared by the edit distance measure that counts the minimum number of insertions, deletions or substitutions to transform one string into the other.

We have chosen these data sets not only to demonstrate the wide range of applicability of the

D-Index, but also to show its performance over data with significantly different and realistic data (distance) distributions. For illustration, see figure 5 for distance densities of all our data sets. Notice the practically normal distribution of VEC, very discrete distribution of

URL, and the skewed distribution of STR.

24

Frequency

0.008

VEC Frequency

0.16

URL Frequency

0.008

DOHNAL ET AL.

STR

0.004

0.08

0.004

0

0 2000 4000 6000 8000

Distance

0

0 0.2

0.4

0.6

0.8

Distance

1

Figure 5 .

Distance densities for VEC, URL, and STR.

0

0 200 600 1000

Distance

1600

In all our experiments, the query objects are not chosen from the indexed data sets, but they follow the same distance distribution. The search costs are measured in terms of distance computations and block reads. We deliberately ignore the L

∞ distance measures used by the D-Index for the pivot-based filtering, because according to our tests, the costs to compute such distances are several orders of magnitude smaller than the costs needed to compute any of the distance functions of our experiments. All presented cost values are averages obtained by executing queries for 50 different query objects and constant search selectivity, that is queries using the same search radius or the same number of nearest neighbors.

4.1.

D-Index performance evaluation

In order to test the basic properties of the D-Index, we have considered about 11000 objects for each of our data sets VEC, URL, and STR. Notice that the number of object used does not affect the significance of the results, since the cost measures (i.e., the number of block reads and the number of the distance computations) are not influenced by the cache of the system. Moreover, as shown in the following, performance scalability is linear with data set size. First, we have tested the efficiency of the incremental (INC), the random (RAN), and the middle (MID) strategies to select good reference objects. For each set of the selected reference objects and specific data set, we have built D-Index organizations of pre-selected structures. In Table 1, we summarize characteristics of resulting D-Index organizations, separately for individual data sets.

We measured the search efficiency for range queries by changing the search radius to retrieve maximally 20% of data, that is about 2000 objects. The results, presented in graphs

Table 1 .

D-Index organization parameters.

Set

VEC

URL

STR h

9

9

9

No. of buckets

21

21

21

No. of blocks

2600

228

289

Block size

1 KB

13 KB

6 KB

ρ

1200

0.225

14

D-INDEX: DISTANCE SEARCHING INDEX FOR METRIC DATA SETS 25

Distance Computations VEC

7000

6000

INC

MID

RAN

5000

4000

3000

2000

1000

0

0 2 4 6 8 10 12 14 16 18 20

Search radius (x100)

Distance Computations

12000

10000

INC

MID

RAN

8000

6000

URL

4000

2000

0

0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8

Search radius

Distance Computations STR

8000

7000

6000

5000

INC

MID

RAN

4000

3000

2000

1000

0

0 10 20 30 40 50 60 70

Search radius

Figure 6 .

Search efficiency in the number of distance computations for VEC, URL, and STR.

Page Reads VEC

1800

1600

1400

1200

1000

INC

MID

RAN

800

600

400

200

0

0 2 4 6 8 10 12 14 16 18 20

Search radius (x100)

Page Reads

250

200

INC

MID

RAN

150

100

50

URL

0

0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8

Search radius

Page Reads STR

160

140

120

INC

MID

RAN

100

80

60

40

20

0

0 10 20 30 40 50 60 70

Search radius

Figure 7 .

Search efficiency in the number of block reads for VEC, URL, and STR.

for all three data sets, are available in figure 6 for the distance computations and in figure 7 for the block reads.

The general conclusion is that the incremental method proved to be the most suitable for choosing reference objects for the D-Index. Except for very few search cases, the method was systematically better and can certainly be recommended—for the sentences, the middle technique was nearly as good as the incremental, and for the vectors, the differences in performance of all three tested methods were the least evident. An interesting observation is that for vectors with distribution close to uniform, even the randomly selected reference points worked quite well. However, when the distance distribution was significantly different from the uniform, the performance with the randomly selected reference points was poor.

Notice also some differences in performance between the execution costs quantified in block reads and distance computations. But the general trends are similar and the incremental method is the best. However, different cost quantities are not equally important for all the data sets. For example, the average time for quadratic form distances for the vectors were computed in much less than 90

µ sec, while the average time spent computing a distance between two sentences was about 4.5 msec. This implies that the reduction of block reads for vectors was quite important in the total search time, while for the sentences, it was not important at all.

26

Figure 8 .

Nearest neighbor search on sentences using different D-Index structures.

DOHNAL ET AL.

Figure 9 .

Range search on sentences using different D-Index structures.

The objective of the second group of tests was to investigate the relationship between the D-Index structure and the search efficiency. We have tested this hypothesis on the data set STR, using

ρ =

1

,

14

,

25

,

60. The results of experiments for the nearest neighbor search are reported in figure 8 while performance for range queries is summarized in figure 9. It is quite obvious that very small

ρ can only be suitable when range search with small radius is used. However, this was not typical for our data where the distance to a nearest neighbor was rather high—see in figure 8 the relatively high costs to get the nearest neighbor. This implies that higher values of

ρ

, such as 14, are preferable. However, there are limits to increase such value, since the structure with

ρ =

25 was only exceptionally better than the one with ρ = 14, and the performance with ρ = 60 was rather poor. The experiments demonstrate that a proper choice of the structure can significantly influence the performance. The selection of optimized parameters is still an open research issue, that we plan to investigate in the near future.

In the last group of tests, we analyzed the influence of the block size on system performance. We have experimentally studied the problem on the VEC data set both for the nearest neighbor and the range search. The results are summarized in figure 10.

In order to obtain an objective comparison, we express the cost as relative block reads, i.e. the percentage of blocks that was necessary to execute a query.

D-INDEX: DISTANCE SEARCHING INDEX FOR METRIC DATA SETS 27

Page Reads (%)

80

70

60

50

40

30

20

10

0

1 block=400 block=1024 block=65536

5 10 20

Nearest neighbors

50 100

Figure 10 .

Search efficiency versus the block size.

Page Reads (%)

70

60

50

40

30

20

10

0

0 block=400 block=1024 block=65536

5 10 15

Search radius (x100)

20

We can conclude from the experiments that the search with smaller blocks requires to read a smaller fraction of the entire data structure. Notice that the number of distance computations for different block sizes is practically constant and depends only on the query. However, a small block size implies a large number of blocks; this means that in case the access cost to a block is significant (e.g., if blocks are allocated randomly) than large block sizes may become preferable. However, when the blocks are stored in a continuous storage space, small blocks can be preferable, because an optimized strategy for reading a set of disk pages, such as that proposed in [10], can be applied. For a static file, we can easily achieve such storage allocation of the D-Index through a simple reorganization.

4.2.

D-Index performance comparison

We have also compared the performance of D-Index with other index structures under the same workload. In particular, we considered the M-tree 1 [5] and the sequential organization,

SEQ, because, according to [4], these are the only types of index structures for metric data that use disk memories to store objects. In order to maximize objectivity of comparison, all three types of index structures are implemented using the GIST package [9], and the actual performance is measured in terms of the number of distance comparisons and I/O operations—the basic access unit of disk memory for each data set was the same for all index structures.

The main objective of these experiments was to compare the similarity search efficiency of the D-Index with the other organizations. We have also considered the space efficiency, costs to build an index, and the scalability to process growing data collections.

4.2.1. Search efficiency for different data sets.

We have built the D-Index, M-tree, and

SEQ for all the data sets VEC, URL, and STR. The M-tree was built by using the bulk load package and the Min-Max splitting strategy. This approach was found in [5] as the most efficient to construct the tree. We have measured average performance over 50 different query objects considering numerous similarity range and nearest neighbor queries. The

28 DOHNAL ET AL.

Distance Computations

12000

10000

8000 dindex mtree seq

6000

4000

2000

0

0 5 10 15

Search radius (x100)

VEC

20

Distance Computations

12000

10000

8000 dindex mtree seq

6000

URL

4000

2000

0

0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8

Search radius

Distance Computations

12000

10000

8000 dindex mtree seq

6000

STR

4000

2000

0

0 10 20 30 40 50 60 70

Search radius

Figure 11 .

Comparison of the range search efficiency in the number of distance computations for VEC, URL, and STR.

Page Reads

1000

900

800

700

600

500

400

300

200

100

0

0 dindex mtree seq

5 10 15

Search radius (x100)

VEC

20

Page Reads

120

100

80

60 dindex mtree seq

URL

40

20

0

0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8

Search radius

Page Reads STR

140

120

100

80

60 dindex mtree seq

40

20

0

0 10 20 30 40 50 60 70

Search radius

Figure 12 .

Comparison of the range search efficiency in the number of block reads for VEC, URL, and STR.

Distance Computations

12000

10000

8000 dindex mtree seq

6000

4000

2000

0

1 5 10 20

VEC

50 100

Nearest neighbors

Distance Computations

12000

10000

8000 dindex mtree seq

6000

4000

2000

0

1 5 10 20

URL

50 100

Nearest neighbors

Distance Computations

12000

10000

8000 dindex mtree seq

6000

4000

2000

0

1 5 10 20

STR

50 100

Nearest neighbors

Figure 13 .

Comparison of the nearest neighbor search efficiency in the number of distance computations for

VEC, URL, and STR.

results for the range search are shown in figures 11 and 12, while the performance for the nearest neighbor search is presented in figures 13 and 14.

For all tested queries, i.e. retrieving subsets up to 20% of the database, the M-tree and the D-Index always needed less distance computations than the sequential scan. However, this was not true for the block accesses, where even the sequential scan was typically

D-INDEX: DISTANCE SEARCHING INDEX FOR METRIC DATA SETS 29

Page Reads

1200

1000

800 dindex mtree seq

600

400

200

0

1 5 10 20

Nearest neighbors

50

VEC

100

Page Reads

120

100

80 dindex mtree seq

60

40

20

0

1 5 10 20

Nearest neighbors

50

URL

100

Page Reads

140

120

100

80

60

40

20

0

1 dindex mtree seq

5 10 20

Nearest neighbors

50

STR

100

Figure 14 .

Comparison of the nearest neighbor search efficiency in the number of block reads for VEC, URL, and STR.

more efficient than the M-tree. In general, the number of block reads of the M-tree was significantly higher than for the D-Index. Only for the URL sets when retrieving large subsets, the number of block reads of the D-Index exceeded the SEQ. In this situation, the

M-tree accessed nearly three times more blocks than the D-Index or SEQ. On the other hand, the M-tree and the D-Index required for this search case much less distance computations than the SEQ.

Figure 12 demonstrates another interesting observation: to run the exact match query, i.e.

range search with r q

=

0, the D-Index only needs to access one block. As a comparison, see

(in the same figure) the number of block reads for the M-tree: they are one half of the SEQ for vectors, equal to the SEQ for the URL sets, and even three times more than the SEQ for the sentences. Notice that the exact match search is important when a specific object is to be eliminated—the location of the deleted object forms the main cost. In this respect, the

D-Index is able to manage deletions more efficiently than the M-tree. We did not verify this fact by explicit experiments, because the available version of the M-tree does not support deletions.

Concerning the block reads, the D-Index looks to be significantly better than the Mtree, while comparison on the level of distance computations is not so clear. The D-Index certainly performed much better for the sentences and also for the range search over vectors.

However, the nearest neighbor search on vectors needed practically the same number of distance computations. For the URL sets, the M-tree was on average only slightly better than the D-Index.

4.2.2. Search, space, and insertion scalability.

To measure the scalability of the D-Index,

M-tree, and SEQ, we have used the 45-dimensional color feature histograms. Particularly, we have considered collections of vectors ranging from 100,000 to 600,000 elements. For these experiments, the structure of D-Index was defined by 37 reference objects and 74 buckets, where only the number of blocks in buckets was increasing to deal with growing files. The results for several typical nearest neighbor queries are reported in figure 15. The execution costs for range queries are reported in figure 16. Except for the SEQ organization, the individual curves are labelled with a number, representing either the number of nearest neighbors or the search radius, and a letter, where D stands for the D-Index and M for the M-tree.

30 DOHNAL ET AL.

Distance Computations

600000

500000

400000

1 D

100 D

1 M

100 M

SEQ

300000

200000

100000

0

100 200 300 400 500 600

Data Set Size (x1000)

Figure 15 .

Nearest neighbor search scalability.

Page Reads

30000

25000

20000

1 D

100 D

1 M

100 M

SEQ

15000

10000

5000

0

100 200 300 400 500 600

Data Set Size (x1000)

Distance Computations

600000

500000

400000

1000 D

2000 D

1000 M

2000 M

SEQ

300000

200000

100000

0

100 200 300 400 500 600

Data Set Size (x1000)

Figure 16 .

Range search scalability.

Page Reads

30000

25000

20000

1000 D

2000 D

1000 M

2000 M

SEQ

15000

10000

5000

0

100 200 300 400 500 600

Data Set Size (x1000)

We can observe that on the level of distance computations the D-Index is usually slightly better than the M-tree, but the differences are not significant—the D-Index and M-tree can save considerable number of distance computations compared to the SEQ. To solve a query, the M-tree needs significantly more block reads than the D-Index and for some queries, see 2000M in figure 16, this number is even higher than for the SEQ.We have also investigated the performance of range queries with zero radius, i.e. the exact match.

Independently of the data set size, the D-Index required one block access and 18 distance comparisons. This was in a sharp contrast with the M-tree, which needed about 6,000 block reads and 20,000 distance computations to find the exact match in the set of 600,000 vectors.

The search scale up of the D-Index was strictly linear. In this respect, the M-tree was even slightly better, because the execution costs for processing a two times larger file were not two times higher. This sublinear behavior should be attributed to the fact that the M-tree is incrementally reorganizing its structure by splitting blocks and, in this way, improving the clustering of data. On the other hand, the D-Index was using a constant bucket structure, where only the number of blocks was changing. Such observation is posing another

D-INDEX: DISTANCE SEARCHING INDEX FOR METRIC DATA SETS 31 research challenge, specifically, to build a D-Index structure with a dynamic number of buckets.

The disk space to store data was also increasing in all our organizations linearly. The

D-Index needed about 20% more space than the SEQ. In contrast, the M-tree occupied two times more space than the SEQ. In order to insert one object, the D-Index evaluated on average the distance function 18 times. That means that distances to (practically) one half of the 37 reference objects were computed. For this operation, the D-

Index performed 1 block read and, due to the dynamic bucket implementation using splits, a bit more than one block writes. The insertion into the M-tree was more expensive and required on average 60 distance computations, 12 block reads, and 2 block writes.

5.

Conclusions

Recently, metric spaces have become an important paradigm for similarity search and many index structures supporting execution of similarity queries have been proposed. However, most of the existing structures are only limited to operate on main memory, so they do not scale up to high volumes of data. We have concentrated on the case where indexed data are stored on disks and we have proposed a new index structure for similarity range and nearest neighbor queries. Contrary to other index structures, such as the M-tree, the D-Index stores and deletes any object with one block access cost, so it is particularly suitable for dynamic data environments. Compared to the M-tree, it typically needs less distance computations and much less disk reads to execute a query. The D-Index is also economical in space requirements. It needs slightly more space than the sequential organization, but at least two times less disk space compared to the M-tree. As experiments confirm, it scales up well to large data volumes.

Our future research will concentrate on developing methodologies that would support optimal design of the D-Index structures for specific applications. The dynamic bucket management is another research challenge. We will also study parallel implementations, which the multilevel hashing structure of the D-Index inherently offers. Finally, we will carefully study two very recent proposals to index structures based on selection of reference objects [6, 13] and compare the performance of D-index with these approaches.

Acknowledgments

We would like to thank the anonymous referees for their comments on the earlier version of the paper.

Note

1. The software is available at http://www-db.deis.unibo.it/research/Mtree/

32 DOHNAL ET AL.

References

1. T. Bozkaya and Ozsoyoglu, “Indexing large metric spaces for similarity search queries,” ACM TODS, Vol.

24, No. 3, pp. 361–404, 1999.

2. B. Bustos, G. Navarro, and E. Chavez, “Pivot selection techniques for proximity searching in metric spaces,” in Proceedings of the XXI Conference of the Chielan Computer Science Society (SCCC01), IEEE CS Press,

2001, pp. 33–40.

3. E. Chavez, J. Marroquin, and G. Navarro, “Fixed queries array: A fast and economical data structure for proximity searching,” Multimedia Tools and Applications, Vol. 14, No. 2, pp. 113–135,

2001.

4. E. Chavez, G. Navarro, R. Baeza-Yates, and J. Marroquin, “Proximity searching in metric spaces,” ACM

Computing Surveys. Vol. 33, No. 3, pp. 273–321, 2001.

5. P. Ciaccia, M. Patella, and P. Zezula, “M-tree: An efficient access method for similarity search in metric spaces,” in Proceedings of the 23rd VLDB Conference, Athens, Greece, 1997, pp. 426–435.

6. R.F.S. Filho, A. Traina, C. Traina Jr., and C. Faloutsos, “Similarity search without tears: The OMNI-family of all-purpose access methods,” in Proceedings of the 17th ICDE Conference, Heidelberg, Germany, 2001, pp. 623–630.

7. V. Dohnal, C. Gennaro, P. Savino, and P. Zezula, “Separable splits in metric data sets,” in Proceedings of

9-th Italian Symposium on Advanced Database Systems, Venice, Italy, June 2001, pp. 45–62, LCM Selecta

Group—Milano.

8. C. Gennaro, P. Savino, and P. Zezula, “Similarity search in metric databases through Hashing,” in Proceedings of ACM Multimedia 2001 Workshops, Oct. 2001, Ottawa, Canada, pp. 1–5.

9. J.M. Hellerstein, J.F. Naughton, and A. Pfeffer, “Generalized search trees for database systems,” in Proceedings of the 21st VLDB Conference, 1995, pp. 562–573.

10. B. Seeger, P. Larson, and R. McFayden, “Reading a set of disk pages,” in Proceedings of the 19th VLDB

Conference, 1993, pp. 592–603.

11. P.N. Yianilos, “Data structures and algorithms for nearest neighbor search in general metric spaces,” ACM-

SIAM Symposium on Discrete Algorithms (SODA), 1993, pp. 311–321.

12. P.N. Yianilos, “Excluded middle vantage point forests for nearest neighbor search,” Tech. rep., NEC Research

Institute, 1999, Presented at Sixth DIMACS Implementation Challenge: Nearest Neighbor Searches workshop,

Jan. 15, 1999.

13. C. Yu, B.C. Ooi, K.L. Tan, and H.V. Jagadish, “Indexing the Distance: An efficient method to KNN processing,” in Proceedings of the 27th VLDB Conference, Roma, Italy, 2001, pp. 421–430.

Vlastislav Dohnal received the B.Sc. degree and M.Sc. degree in Computer Science from the Masaryk University,

Czech Republic, in 1999 and 2000, respectively. Currently, he is a Ph.D. student at the Faculty of Informatics,

Masaryk University. His interests include multimedia data indexing, information retrieval, metric spaces, and related areas.

D-INDEX: DISTANCE SEARCHING INDEX FOR METRIC DATA SETS 33

Claudio Gennaro received the Laurea degree in Electronic Engineering from University of Pisa in 1994 and the

Ph.D. degree in Computer and Automation Engineering from Politecnico di Milano in 1999. He is now researcher at IEI an institute of National Research Council (CNR) situated in Pisa. His Ph.D. studies were in the field of Performance Evaluation of Computer Systems and Parallel Applications. His current main research interests are Performance Evaluation, Similarity retrieval, Storage Structures for Multimedia Information Retrieval and

Multimedia document modelling.

Pasquale Savino graduated in Physics at the University of Pisa, Italy, in 1980. From 1983 to 1995 he has worked at the Olivetti Research Labs in Pisa; since 1996 he has been a member of the research staff at CNR-IEI in Pisa, working in the area of multimedia information systems. He has participated and coordinated several EU-funded research projects in the multimedia area, among which MULTOS (Multimedia Office Systems), OSMOSE (Open Standard for Multimedia Optical Storage Environments), HYTEA (HYperText Authoring), M-CUBE (Multiple Media

Multiple Communication Workstation), MIMICS (Multiparty Interactive Multimedia Conferencing Services),

HERMES (Foundations of High Performance Multimedia Information Management Systems). Currently, he is coordinating the project ECHO (European Chronicles on Line). He has published scientific papers in many international journals and conferences in the areas of multimedia document retrieval and information retrieval. His current research interests are multimedia information retrieval, multimedia content addressability and indexing.

Pavel Zezula is Full Professor at the Faculty of Informatics, Masaryk University of Brno. For many years, he has been cooperating with the IEI-CNR Pisa on numerous research projects in the areas of Multimedia Systems and Digital Libraries. His research interests concern storage and index structures for non-traditional data types, similarity search, and performance evaluation. Recently, he has focused on index structures and query evaluation of large collections of XML documents.