Spectral Imaging for Remote Sensing

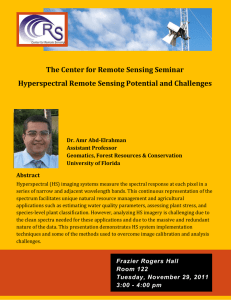
