Operational Acceptance Testing

advertisement

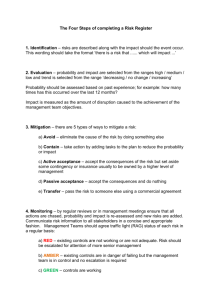

White Paper
Operational Acceptance Testing
Business continuity assurance
December 2012
Dirk Dach, Dr. Kai-Uwe Gawlik, Marc Mevert
SQS Software Quality Systems

Contents

1. Management Summary
2. Introduction
3. Market – Current Status and Outlook
4. Scope of Operational Acceptance Testing
5. Types of Operational Acceptance Testing
5.1. Operational Documentation Review
5.2. Code Analysis
5.3. Dress Rehearsal
5.4. Installation Testing
5.5. Central Component Testing
5.6. End-to-End (E2E) Test Environment Operation
5.7. SLA / OLA Monitoring Test
5.8. Load and Performance (L&P) Test Operation
5.9. OAT Security Testing
5.10. Backup and Restore Testing
5.11. Failover Testing
5.12. Recovery Testing
6. Acceptance Process
7. Conclusion and Outlook
8. Bibliographical References

SQS Software Quality Systems © 2012

1. Management Summary

Operational acceptance is the last line of defence between a software development project and the productive use of the software. If anything goes wrong after the finished project has been handed over to the application owner and the IT operation team, the customer's business is immediately affected by the negative consequences. Operational acceptance testing is the instrument of choice for minimising the risk of going live.

The ISTQB defines Operational Acceptance Testing (OAT) as follows:

"Operational testing in the acceptance test phase, typically performed in a (simulated) operational environment by operations and / or systems administration staff focusing on operational aspects, e.g. recoverability, resource-behaviour, installability and technical compliance."

Definition 1: Operational acceptance testing (International Software Testing Qualifications Board, 2010)

The operational aspects named in this definition are becoming increasingly important as cloud computing spreads, because it poses additional risks for business continuity. This whitepaper gives an overview of OAT covering all relevant quality aspects. It shows that OAT is not restricted to a final acceptance phase but can be implemented systematically, following best practices, to minimise the risks for day one and beyond.

2. Introduction

Once the project teams have completed development, the software is released and handed over to the operation team and the application owner. As soon as it is launched into the production environments, it becomes part of the business processes. Consequently, known and unknown defects directly affect business continuity and may cause damage of greater or lesser extent. At the same time, responsibility is typically transferred from the development units to two stakeholders:

- Application Owner: The single point of contact for business units concerning the operation of dedicated applications. Application owners belong to the internal line organisation that manages maintenance, and they form the interface to the operation team.
- Operation Team: An internal or external team that deploys and operates software following well-defined processes, e.g. processes tailored according to ITIL (APM Group Limited, 2012). Operation teams are equipped with system and application utilities for managing operation, and they contact the application owners if major changes are necessary to guarantee business continuity.

OAT subsumes all test activities performed by application owners and operation teams to reach the acceptance decision, i.e. to decide whether they are capable of operating the software under the agreed Service Level Agreements and Operational Level Agreements (SLAs / OLAs). These agreements provide measurable criteria which, if fulfilled, implicitly ensure business continuity. Operational capability covers three groups of tasks:

- Transition: The successful deployment of software into the production environment using existing system management functionality. The production environment could be a single server or thousands of workstations in agencies. Software deployment includes software updates, database updates, and reorganisation, but also fallback mechanisms in case of failures. The operation team has to be trained and equipped with the respective tools.
- Standard Operation: Successful business execution and monitoring on both the system and the application level. This includes user support (helpdesk for user authorisation and incident management) and operation in case of non-critical faults, e.g. switching to a mirror system while a failure on the main system is being analysed.
- Crisis Operation: System instability causes failures and downtimes. Operation teams must be able to reset the system and its components to defined states and resume stable standard operation. Downtimes have to be as short as possible, and the reset must be achievable without loss of or damage to data and transactions.

How operational activities are divided between the application owner and the operation team depends on the individual organisation, and compliance requirements demand that these activities be traceable. OAT has to take this division of work into account.

Functional testing has been performed systematically for many years, and its methods can be applied directly to OAT:

- Risk-based approach: Test effort is concentrated on the components where damage is most probable and would be greatest, in order to achieve the highest quality for a given budget.
- Early error detection: Test activities are executed as early as possible in the software development life cycle, because the earlier a defect is found, the lower the cost of correcting it.

Following this cost-saving principle, OAT activities are allocated to all phases of software development and coupled with quality gates (see Figure 1).
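The risk-based approach can be made concrete with the common notion of risk exposure as probability of failure times expected damage. The following Python sketch is illustrative only: the component names, probabilities, damage figures, and the rule of splitting the budget in proportion to risk are assumptions, not part of the method described in this paper.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    failure_probability: float  # estimated probability of failure in operation (0..1)
    damage: float               # estimated business damage if it fails (assumed cost units)

    @property
    def risk(self) -> float:
        # Risk exposure = probability x damage.
        return self.failure_probability * self.damage


def allocate_effort(components: list[Component], budget_hours: float) -> dict[str, float]:
    """Split a fixed test budget across components in proportion to their risk."""
    if not components:
        return {}
    total_risk = sum(c.risk for c in components)
    if total_risk == 0:
        # No risk information available: fall back to an even split.
        share = budget_hours / len(components)
        return {c.name: share for c in components}
    return {c.name: budget_hours * c.risk / total_risk for c in components}


if __name__ == "__main__":
    components = [
        Component("installer", 0.3, 100.0),
        Component("batch jobs", 0.1, 500.0),
        Component("monitoring", 0.2, 50.0),
    ]
    # Print components in descending order of risk, then the effort split.
    for c in sorted(components, key=lambda c: c.risk, reverse=True):
        print(f"{c.name}: risk {c.risk:.1f}")
    print(allocate_effort(components, budget_hours=90.0))
```

A proportional split is only one possible policy; a threshold ("test only components above a given risk") would follow the same pattern.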

This paper shows how to use ISO 25000 (ISO/IEC JTC 1/SC 7 Software and Systems Engineering, 2010) to scope OAT systematically, how to align activities along the software life cycle, and how application owners and operation teams can apply test methods successfully by involving other stakeholders such as architects, developers, or infrastructure teams.

[Figure 1: Main activities and stakeholders of OAT. The figure relates the life-cycle phases (analysis, design, implementation, functional testing, operation) and their quality gates to operational acceptance testing, its stakeholders (application owners, operation teams), and the transition, standard, and crisis operation modes.]

3. Market – Current Status and Outlook

Apart from the general requirements outlined above, companies will also be influenced by the implementation models used by cloud service providers. Gartner recommends that enterprises apply the concepts of cloud computing to future data centre and infrastructure investments in order to increase agility and efficiency. This inevitably affects operational models, and OAT will have to support business continuity in this growing market. Forrester estimates that cloud revenue will grow from $41 billion in 2011 to $241 billion in 2020 (Valentino-DeVries, 2011).

Today, OAT is already performed by a variety of stakeholders. Target groups include specialists such as application owners or operation units in large organisations, as well as individuals in the private sector. Internal departments and / or vendors need to be addressed to establish sustainable processes.

SQS currently works with many of these stakeholders through test environment and test data management projects. As the leader in this field, SQS sees strong demand on the client side to move OAT from an unsystematic to a systematic approach by applying methods adapted from functional testing.
This demand arises independently in different business sectors: customers increasingly want to use outsourcing, offshoring, and cloud processing, but do not yet fully understand what these require in terms of capability and methodology.

From a tester's perspective, many companies neglect to define requirements for operating software systems. Quite often, not only defects but also gaps in mandatory functionality are identified only shortly before release. Such missing features are not merely absent in production; they already delay the execution of E2E tests. For this reason, the functional and non-functional requirements of operation teams have to be systematically managed, implemented, and tested, just like business functions.

In times of cloud computing and operation outsourcing, it is very important to support business continuity and to establish trust by verifying operation under the agreed SLAs / OLAs. Monitoring in particular is crucial for achieving transparency and obtaining early indicators that help avoid incidents. OAT should follow a systematic approach to mitigate the risks associated with cloud providers and outsourcing partners, because companies that deliver their services through these sourcing models may not notice incidents themselves, while their clients feel the impact directly.

4. Scope of Operational Acceptance Testing

ISO 25010 addresses the quality attributes of software products. One of these attributes, operability, is aimed directly at the stakeholders of operation. However, treating operability merely as a subattribute of usability is not enough. Software development does not produce functionality for business purposes only: dedicated functionality and process definitions for operating purposes also form part of the final software product.
These artefacts are evaluated in terms of the ISO 25010 quality attributes, and the respective OAT activities are performed close to the time the artefacts are created along the software life cycle. Each stakeholder can shape OAT according to their own focus and formulate questions that reflect their specific requirements, e.g.:

- Application Owner: Can changes be made to the system with a low risk of side effects (maintainability), ensuring time to market with a minimum of test effort?
- Operation Team (general): Can the system be operated using the given manuals (operability)? Is the operation team equipped with adequate logging functions (functional completeness) and trained to obtain and interpret information from the different application trace levels (analysability)?
- Third Party Vendor (external operation): Can I operate my cloud service / software as agreed in the contracts, given the needs of the customers in my cloud environment?

The relevance of the different types of OAT is derived from the individual artefacts and their quality attributes. The major test types are allocated to these attributes, and decisions about test execution follow from the risk evaluation: the scope of OAT is ultimately defined by selecting columns from the table shown in Figure 2.

[Figure 2: Example of the evaluation of quality attributes. The table marks which ISO 25010 quality characteristics and subattributes (functional suitability, performance efficiency, compatibility, usability, reliability, security, maintainability, portability) are addressed by each OAT test type. Its columns are the test types, grouped by transition, standard, and crisis mode: operational documentation review, code analysis, dress rehearsal (transition, standard, and crisis mode), installation testing, central component testing, E2E test environment operation, SLA/OLA monitoring test, L&P test operation, security testing, backup and restore testing, failover testing, and recovery testing.]

Test activities can be allocated according to their main focus on the transition, standard, or crisis mode. Some test types, however, address more than one mode: L&P testing, for instance, addresses standard operation as well as the crisis mode, the latter including tests in which the system load enters the stress region with the objective of crashing the system. Consequently, the modes addressed by the different test types overlap to some extent.
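Selecting columns from a table like Figure 2 amounts to picking every test type that addresses at least one quality attribute rated as high-risk. The sketch below uses a hypothetical excerpt of such a matrix (the actual cross marks in Figure 2 are project-specific and are not reproduced here) and also reports high-risk attributes that no test type in the matrix covers, which would point to a gap in the OAT scope.

```python
# Hypothetical excerpt of a Figure 2 style matrix: test type (column) -> ISO 25010
# subattributes it addresses. The real cross marks are project-specific.
COVERAGE: dict[str, set[str]] = {
    "Operational Documentation Review": {"Operability", "Learnability", "Analysability"},
    "Code Analysis": {"Modularity", "Analysability", "Modifiability", "Testability"},
    "Installation Testing": {"Installability", "Replaceability", "Coexistence"},
    "Backup and Restore Testing": {"Recoverability", "Integrity"},
    "Failover Testing": {"Availability", "Fault tolerance"},
    "L&P Test Operation": {"Time behaviour", "Resource utilisation", "Capacity"},
}


def select_scope(
    high_risk: set[str], coverage: dict[str, set[str]] = COVERAGE
) -> tuple[list[str], set[str]]:
    """Return the test types needed for the high-risk attributes (sorted by name)
    and the high-risk attributes that no test type in the matrix covers."""
    selected = sorted(t for t, attrs in coverage.items() if attrs & high_risk)
    covered = set().union(*(coverage[t] for t in selected))
    return selected, high_risk - covered


if __name__ == "__main__":
    scope, gaps = select_scope({"Recoverability", "Installability", "Accessibility"})
    print(scope)  # ['Backup and Restore Testing', 'Installation Testing']
    print(gaps)   # {'Accessibility'}
```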

Test preparation and execution take place throughout the software life cycle so that defects are detected as early as possible. At the end of the design phase, for example, a use-case-based architecture analysis applying ATAM (Software Engineering Institute, 2012) or FMEA (Wikipedia contributors, 2012) can verify that all recovery requirements are addressed. In addition, these use cases can be reused as test cases during the test phase.

5. Types of Operational Acceptance Testing

Building on the scope outlined in Section 4, the following subsections present the individual test types in detail. Each is given a short profile covering its definition, objective, parties involved, point in time (in the SDLC), inputs and outputs, test approach, test environment, and the risk incurred if the test type is not executed.

5.1. Operational Documentation Review

Definition: All documents necessary to operate the system (e.g. architecture overviews, operational documentation) are identified, and it is checked that they are available in their final version and accessible to the relevant people. The documents cover the transition, standard, and crisis modes.

Objective: The aim is to achieve correct, complete, and consistent operation handbooks and manuals. The operation team is to confirm that the system can be operated using the released documentation.

Parties Involved: The application owners are generally responsible for providing the necessary documentation, while the analysis may also be performed by an independent tester or testing team. The operation team must be included in the discussions.

Time: Operational documentation review should start as early as possible in the development process. It must be completed before going live in any case, but postponing the review of the required documents until the period between deployment and go-live is too risky.

Input: A checklist of the relevant documents and, if possible, the documents under review should be available. The documents on the checklist vary depending on the system, for example:

- Blueprints (including hardware and software)
- Implementation plan (go-live plan, i.e. the activities required to go live mapped onto a timeline)
- Operational documentation (guidance on how to operate the system in the transition, standard/daily, and crisis modes)
- Architectural overviews (technical models of the system to support impact analysis and customisation to specific target environments)
- Data structure explanations (data dictionaries)
- Disaster recovery plans
- Business continuity plans

Test Approach: The task of the OAT tester is to ensure that these documents are available in their final version and of sufficient quality to enable smooth operation. The documents must be handed over to and accepted by the teams handling production support, helpdesk, disaster recovery, and business continuity, and the handover must be documented. Operation teams must be included as early as possible to obtain their valuable input on the documentation required for operating the system. This way, the completeness of the documents becomes transparent early in the development process, leaving sufficient time for countermeasures if documents are missing or of insufficient quality.

Output: A protocol records the handover to the operation team, including any missing documents and open issues in the documentation that still need to be resolved before going live.

OAT Test Environment: No special test environment is required for the review. It is, however, essential that document management traces the correct versions and records the review process.
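The checklist-driven review and the resulting handover protocol can be sketched as a comparison between required and available documents. The `Document` structure and its `version_status` field are hypothetical; in practice this information would come from the document management system mentioned above.

```python
from dataclasses import dataclass, field

# Checklist taken from the Input paragraph; the applicable entries vary per system.
REQUIRED_DOCUMENTS = [
    "Blueprints",
    "Implementation plan",
    "Operational documentation",
    "Architectural overviews",
    "Data structure explanations",
    "Disaster recovery plans",
    "Business continuity plans",
]


@dataclass
class Document:
    title: str
    version_status: str  # hypothetical field, e.g. "draft" or "final"
    open_issues: list[str] = field(default_factory=list)


def review_protocol(available: dict[str, Document],
                    checklist: list[str] = REQUIRED_DOCUMENTS) -> dict:
    """Build a simple handover protocol: missing, non-final, and open-issue documents."""
    present = [t for t in checklist if t in available]
    missing = [t for t in checklist if t not in available]
    not_final = [t for t in present if available[t].version_status != "final"]
    issues = {t: available[t].open_issues for t in present if available[t].open_issues}
    return {
        "missing": missing,
        "not_final": not_final,
        "open_issues": issues,
        # Handover is only recommended when nothing is missing or outstanding.
        "ready_for_handover": not (missing or not_final or issues),
    }


if __name__ == "__main__":
    docs = {
        "Blueprints": Document("Blueprints", "final"),
        "Disaster recovery plans": Document(
            "Disaster recovery plans", "draft", ["recovery time objective missing"]
        ),
    }
    print(review_protocol(docs))
```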

Risk of Not Executing: If the operational documentation review is omitted or performed too late, testing starts without the final documentation, which reduces the effectiveness of the tests. As a result, more issues may be raised, delaying the timeline. For operation teams, not having the correct documentation can impair their ability to maintain, support, and recover the systems.

5.2. Code Analysis

Definition: Code analysis is an automated (tool-based) and / or manual analysis of source code with respect to dedicated metrics and quality indicators. Its results form part of the acceptance process during transition, because low code quality affects the standard mode (maintenance) and the crisis mode (stability).

Objective: This test type creates transparency about the maintainability and stability of software systems. Corrections and refactoring make incidents and problems that require hot fixes easier to manage, with a low risk of side effects and as little regression testing effort as possible.

Parties Involved: The application owners are generally responsible for fixing issues and use the results of the code analysis as acceptance criteria. The analysis can be performed in the context of projects or be systematically integrated into the maintenance process.

Time: Code analysis can take place at dedicated project milestones or be integrated consistently into the implementation phase, e.g. as part of the daily build. Time for corrections has to be planned.

Input: Code analysis is performed on the source code of the implemented software system; external libraries and generated code are not considered here. The coding and architecture guidelines are required to perform the analysis.
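A quality model of the kind required for code analysis maps measured code metrics to ISO 25010 quality attributes and compares them with thresholds taken from the coding and architecture guidelines. The following sketch assumes hypothetical metric names and threshold values; a real model would use whatever metrics the chosen analysis tool reports. It also flags metrics that worsened since a former analysis, as a simple form of trend analysis.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    metric: str       # hypothetical metric name reported by the analysis tool
    attribute: str    # ISO 25010 (sub)attribute the metric is mapped to
    threshold: float  # maximum acceptable value, assumed to come from the guidelines


# Hypothetical quality model; real thresholds come from the coding and
# architecture guidelines of the organisation.
QUALITY_MODEL = [
    Rule("avg_cyclomatic_complexity", "Analysability", 10.0),
    Rule("duplicated_lines_pct", "Modifiability", 5.0),
    Rule("cyclic_dependencies", "Modularity", 0.0),
]


def assess(metrics: dict[str, float],
           previous: Optional[dict[str, float]] = None) -> dict:
    """Check metrics against thresholds and against a former analysis (trend).
    Every metric named in QUALITY_MODEL must be present in `metrics`."""
    violations: dict[str, list[str]] = {}
    for rule in QUALITY_MODEL:
        if metrics[rule.metric] > rule.threshold:
            violations.setdefault(rule.attribute, []).append(rule.metric)
    # Trend analysis: metrics whose value increased (got worse) since the last run.
    worsened = sorted(
        m for m, v in metrics.items() if previous and m in previous and v > previous[m]
    )
    return {"accept": not violations, "violations": violations, "worsened": worsened}


if __name__ == "__main__":
    result = assess(
        {"avg_cyclomatic_complexity": 12.0, "duplicated_lines_pct": 3.0, "cyclic_dependencies": 0.0},
        previous={"avg_cyclomatic_complexity": 11.0, "duplicated_lines_pct": 4.0, "cyclic_dependencies": 0.0},
    )
    print(result)
```

When code analysis runs as part of the daily build, the `accept` flag could act as a quality gate, e.g. by failing the build step whenever it is false.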

Test Approach:

- Creating a quality model derived from best practice and from coding and architectural guidelines (a quality model maps code metrics to quality attributes and allows both quality-based decisions and corrections at code level)
- Selecting the relevant code and setting up the tools
- Executing the code analysis
- Assessing the results against thresholds for the quality attributes
- Analysing trends with respect to former analyses
- Benchmarking against standards
- Deciding on acceptance or rejection

Output: The result is a quality model that can be reused for all systems implemented in the same programming language. Code quality is made transparent by checking it against the defined thresholds, and defects are pinpointed at code level.

OAT Test Environment: Depending on the programming language and tool, code analysis is performed directly inside an IDE or on a separate platform using extracted code.

Risk of Not Executing: When incidents or problems have to be fixed, there is a high risk of side effects. Moreover, fixes are delayed by the great effort involved in regression testing.

5.3. Dress Rehearsal

Definition: While functional testing integrates business functions, OAT integrates all functions and stakeholders of a production system. A dress rehearsal is necessary for major changes in production environments, especially when they concern many stakeholders. This final phase is performed by the people involved in the change scenario. Typical scenarios include:

- Transition mode: e.g. major software changes or data migrations with points of no return. In these cases, fallback scenarios also form part of OAT.
- Standard mode: e.g. the first operation after a change to operation control, or the release of completely new software.
- Crisis mode: e.g. failover and recovery after major changes to standard procedures or emergency plans. Dress rehearsals in the crisis mode form part of regular training, reducing the risks caused by ignorance or routine, raising the awareness of all stakeholders, and reminding them of critical processes.

Objective: Major changes or fallbacks of software systems involve many different stakeholders, in particular operation teams, and require a large number of manual changes and monitoring activities. A dress rehearsal is intended to minimise or avoid the risks of failures in the process chain and of extended system downtimes.

Parties Involved: All participants in the transition, standard, or crisis mode of operation, including the application owners and operation teams.

Time: Before a dress rehearsal can be planned, operational models must be defined for each of the above modes, fallback scenarios and emergency plans must be in place, and the software must have been fully tested so that its maturity for production (business) and operation is ensured. Consequently, the dress rehearsal is the last step before going live.

Input: Operational models and handbooks, scenarios, fully tested software, and completed training of the stakeholders in their roles provide the basis for the dress rehearsal.

Test Approach:

- Deriving the principal scenarios from the operational models
- Refining the scenarios into sequence plans for each role
- Preparing and executing the test with the operation teams
- Deciding on acceptance or rejection

Output: Defects are corrected through software or process changes. Non-critical defects that cannot be corrected are collected and communicated to the helpdesk, business units, and users.

OAT Test Environment: A dress rehearsal requires a production-like E2E test environment.
Alternatively, a future production system that is not yet in use can be employed for the dress rehearsal. Activities in the crisis mode, however, may tear down complete environments, which then have to be rebuilt extensively. Dedicated environments should therefore be set up for crisis mode tests to avoid delaying parallel functional testing.

Risk of Not Executing: Initially, processing will be performed by insufficiently skilled staff. It is therefore highly probable that incidents go unnoticed and data is irreversibly corrupted. There is a high risk of running into disaster after passing the point of no return.

5.4. Installation Testing

Definition: This test activity ensures that the application installs and de-installs correctly on all intended platforms and configurations. This includes installation over an old version; installation testing constitutes the crucial part of transition acceptance.

Objective: Installation testing ensures correctness, completeness, and successful integration into system management functionality for the following:

- Installation
- De-installation
- Fallback
- Upgrade
- Patch

Parties Involved: Since the installer is part of the software, it is provided by the projects. The application owner, development team, and operation team should collaborate closely when creating and testing the installer, while the analysis may also be performed by an independent tester or testing team. In the early stages, the focus is on the installer itself. Later on, tested installers are used to address the integration into system management functions (e.g. automated deployment to 60,000 workstations).

Time: Although installation tests are performed when the application is ready to be deployed, the required resources (registry entries, libraries) should be discussed with the operation teams as early as possible in order to recognise and avoid potential conflicts (e.g. with other applications or security policies). Installation testing in the different test environments can be a systematic part of the software's path from development to production.

Input: Target systems with different configurations (e.g. created from a base image) are required, as well as the specification of changes to the operating system (e.g. registry entries) and a list of additional packages that need to be installed. Further input is provided by a checklist of necessary test steps (see Test Approach) and by installation instructions listing prerequisites and run-time dependencies, which serve as a basis for creating test scenarios.

Test Approach: The following aspects must be checked to ensure correct installation and de-installation:

- The specified registry entries and the number of installed files need to be verified after the installation is finished. The de-installation routine should remove registry entries and installed files completely, so that the software can be removed quickly (returning the system to its previous state) if problems arise after the installation. The same applies to additional software that may be installed with the application (e.g. language packs or frameworks).
- The application must use disk space as specified in the documentation in order to avoid problems with insufficient space on the hard disk.
- If applicable, installation over old(er) version(s) must be tested as well: the installer must correctly detect and remove or update old resources.
- Installation interruptions have to be tested at each installer step, including interruptions caused by other system events (e.g. network failure, insufficient resources). The application and the operating system must be in a defined and consistent state after every possible interruption.
- Shared resources need special handling. They may have to be installed or updated during installation, and conflicts with other applications must be avoided while this is done. On de-installation, the system should be cleaned of shared resources that are no longer used, which improves performance and security for the whole system.
- Since the installation routine is an integral part of the application and a potentially complicated software process, it is also subject to the quality requirements of ISO 25010.

The main steps of installation testing are:

- Identification of required platforms
- Identification of scenarios to be executed
- Environment set-up
- Deployment and installation
- Static check of the target environment
- Dynamic check of the environment by executing the installed software (smoke test)
- Test evaluation and defect reporting

Output: A protocol records all potential installation problems that were checked (see Test Approach) and the issues found, together with suggestions for resolving them.

OAT Test Environment: Ideally, the deployment should be tested in an environment that replicates the live environment. In addition, a visual review of the existing specifications can be carried out. Before the installer is tested, the number and relative importance of the configurations must be checked with the operation teams, and every configuration should receive an appropriate level of testing. Since installation depends on the location, type, and language of the user, an environment landscape should cover all relevant target environments. Each type of environment must be resettable as rapidly as possible in case of defects during installation, and both server and client types must be covered.
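The static check of the target environment can be illustrated for installed files: a snapshot taken before installation is compared with snapshots taken after installation and after de-installation. This Python sketch covers files only; registry entries would be checked following the same before/after pattern on Windows but are omitted here. The directory layout and the simulated installer and de-installer in the demo are hypothetical.

```python
import os
import tempfile
from pathlib import Path


def snapshot(root: Path) -> set[str]:
    """Relative paths of all files below root."""
    return {str(p.relative_to(root)) for p in root.rglob("*") if p.is_file()}


def check_install(before: set[str], after_install: set[str],
                  after_uninstall: set[str], expected: set[str]) -> dict[str, set[str]]:
    """Static check: expected files present after install, nothing left (or
    wrongly removed) after de-installation."""
    added = after_install - before
    return {
        "missing_after_install": expected - added,
        "unexpected_after_install": added - expected,
        "left_after_uninstall": after_uninstall - before,
        "removed_foreign_files": before - after_uninstall,
    }


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "shared.dll").write_text("belongs to another application")
        before = snapshot(root)
        # Simulated installer (hypothetical): creates two files.
        (root / "app").mkdir()
        (root / "app" / "app.exe").write_text("binary")
        (root / "app" / "app.cfg").write_text("config")
        after_install = snapshot(root)
        # Simulated faulty de-installer: forgets the configuration file.
        (root / "app" / "app.exe").unlink()
        after_uninstall = snapshot(root)
        expected = {os.path.join("app", "app.exe"), os.path.join("app", "app.cfg")}
        print(check_install(before, after_install, after_uninstall, expected))
```

In the demo, the forgotten configuration file shows up under `left_after_uninstall`, while `removed_foreign_files` would catch a de-installer that deletes shared resources still used by other applications.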
Risk of Not Executing: Omitted or delayed installation testing may result in conflicts with other applications and in compromised, insecure, or even broken systems. Installation problems lead to low user acceptance even if the software itself has been properly tested and works as designed. Moreover, in large software landscapes (e.g. 60,000 clients), the number of manual interventions, and with it effort, costs, and conflicts, will increase.

5.5. Central Component Testing

Definition: Central component testing comprises the test activities that ensure the successful exchange or upgrade of central components such as run-time environments, database systems, or standard software versions. All operation modes (transition, standard, and crisis) are addressed.

Objective: The aim of central component testing is to prove correct functionality, sufficient performance, and the fulfilment of other relevant quality requirements.

Parties Involved: Central components are normally the responsibility of operation teams, infrastructure teams, or vendors, who follow the release cycles of the respective manufacturers. When changes have been made, application testing in the projects or in the line organisation (application owner) has to perform a regression test.

Time: There are two possible approaches for introducing central components. In the first approach, central components are set up as productive within the development system, i.e. they move through the test stages in parallel with the application software towards a release date defined in a common release plan. Testing then starts implicitly with developer tests. In the second approach, the change of a central component is tested in a production-like maintenance landscape. In this case, a dedicated regression test is performed in parallel with production, and the central components are then released for both operation and development.
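The Test Approach for central component testing derives the affected applications from an impact analysis and then selects regression tests on the basis of a risk assessment. As an illustration only, the Python sketch below assumes a simple scoring model (risk = likelihood × impact, each on a hypothetical 1 to 5 scale) and a fixed effort budget, and picks regression suites greedily in order of descending risk. The class `RegressionSuite`, the scales, and the greedy strategy are assumptions made for this example, not part of the method described in this paper. A greedy pick is easy to justify in an acceptance decision, but it does not guarantee the best possible use of the budget.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegressionSuite:
    application: str
    likelihood: int      # 1 (low) to 5 (high): chance that the component change breaks the application
    impact: int          # 1 (low) to 5 (high): business damage if it does
    effort_hours: float  # estimated execution effort

    @property
    def risk(self) -> int:
        return self.likelihood * self.impact


def select_regression_tests(suites, affected_apps, budget_hours):
    """Greedily pick suites of affected applications, highest risk first, within a budget."""
    # Keep only applications named by the impact analysis.
    candidates = [s for s in suites if s.application in affected_apps]
    # Highest risk first; on equal risk, prefer the cheaper suite.
    candidates.sort(key=lambda s: (-s.risk, s.effort_hours))
    selected, used = [], 0.0
    for suite in candidates:
        if used + suite.effort_hours <= budget_hours:
            selected.append(suite)
            used += suite.effort_hours
    return selected


if __name__ == "__main__":
    suites = [
        RegressionSuite("billing", likelihood=4, impact=5, effort_hours=16),
        RegressionSuite("crm", likelihood=2, impact=3, effort_hours=8),
        RegressionSuite("reporting", likelihood=3, impact=2, effort_hours=4),
        RegressionSuite("archive", likelihood=5, impact=5, effort_hours=40),
    ]
    # Hypothetical impact analysis result: the archive is not affected by the change.
    affected = {"billing", "crm", "reporting"}
    chosen = select_regression_tests(suites, affected, budget_hours=24)
    print([s.application for s in chosen])  # ['billing', 'reporting']
```

Suites that are affected but not selected (here `crm`) represent residual risk, which would have to be accepted explicitly when deciding on acceptance or rejection.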
Input: This requires an impact analysis for the introduction of the central components and regression tests for the affected applications.

Test Approach:
—— Deriving relevant applications from the impact analysis
—— Selecting regression tests on the basis of a risk assessment
—— Performing regression tests (including job processing)
  —— in parallel with development, or
  —— in a dedicated maintenance environment
—— Deciding on acceptance or rejection

Output: This method yields regression test results and identifies possible defects.

OAT Test Environment: Depending on the approach, central component testing is performed in existing project test environments or in a dedicated maintenance test environment.

Risk of Not Executing: System downtimes, missing fallbacks, and unresolvable data defects are all probable consequences. This risk is of crucial importance when external vendors or cloud computing are used.

5.6. End-to-End (E2E) Test Environment Operation

Definition: E2E test environment operation introduces operation teams to operating the test environments and to the processing and monitoring concepts of the new application. This test type addresses the standard operation mode, since E2E testing focuses on this area; other modes only become relevant if test failures lead to problems.

Objective: The aim of this test type is to enable operation teams to process new or changed flows and to monitor their progress using the monitoring and logging functionality. In particular, new or changed operation control is checked for operability. If errors occur during the tests, the recovery functionality is either practised or, where it is missing, implemented additionally. The helpdesk also becomes familiar with the system, which reduces support times after the software is released.

Parties Involved: The E2E test management is responsible for the tests.
Operation teams are involved in defining the test scenarios by setting control points and by activating or deactivating trace levels. In addition, processing is performed in a production-like manner by the staff who will later be responsible for the same activities in the production environment.

Time: E2E test environment operation runs synchronously with the E2E tests. Test preparation can therefore begin as soon as the business concepts have been described and the operation functionality has been designed.

Input: The operational activities are determined on the basis of the business concepts, the operation design, and the various handbooks.

Test Approach:
—— Deriving a functional test calendar from the E2E test cases
—— Creating the operation calendar by supplementing the test calendar with operational activities
—— Preparing and executing the test completely in a production-like manner, following the operation calendar
—— Evaluating the test
—— Correcting the operational procedures and job control
—— Deciding on acceptance or rejection

Output: As a result of this test, the operation handbooks are corrected and the operation team is fully trained. Corrected operational procedures and job control are implemented. Moreover, activities for closely monitoring the environment during the first few weeks after the software release can be derived.

OAT Test Environment: Production-like E2E test environment operation is only possible in highly integrated test environments that employ production-like processing and system management functions. Production-like sizing of the environment, however, is not required.

Risk of Not Executing: Initially, processing will be performed by insufficiently skilled staff. It is therefore highly probable that incidents go unnoticed and that data is irreversibly corrupted.

5.7. SLA / OLA Monitoring Test

Definition: This test type examines the implemented monitoring functionality used to measure the service and operation levels. The test activities can be performed as part of other OAT activities and mainly address the standard and crisis modes.

Objective: The monitoring functionality must be complete, correct, and operable so that the service and operation levels can be derived correctly.

Parties Involved: This test is aimed at operation teams and at the stakeholders of other test types.

Time: If the monitoring has been developed as an individual application, monitoring tests can in principle be performed directly after its implementation. If monitoring is integrated into standard system management functions, tests are possible in parallel with the E2E tests.

Test Approach:
—— Selecting relevant SLAs / OLAs
—— Deriving monitoring scenarios to estimate service levels
—— Integrating these scenarios into the test scenarios of other test types
—— Executing tests and calculating service levels from the monitoring data

Output: The monitoring test determines the service and operation levels of the test environments.

OAT Test Environment: This test type requires a dedicated test environment.

Risk of Not Executing: The service level may be assessed incorrectly, which would lead to faulty billing based on SLAs / OLAs.

5.8. Load and Performance (L&P) Test Operation

Definition: Load and performance test operation comprises the operation of test environments by the operation team and the integration of operation into the modelling of load scenarios and monitoring concepts. These scenarios address both the load of standard operation and stress loads that push the system into crisis mode.

Objective: The aim of this test type is to enable the operation team to apply the implemented performance monitoring functionality. Moreover, operation units shall identify bottlenecks caused by hardware restrictions and resolve them by changing, e.g.,
the capacity, bandwidth, or MIPS of the production environment before a release goes live. In addition, the run-time of batch operation is determined so that it can be integrated into job planning.

Parties Involved: The non-functional test management is responsible for the load and performance tests, while operation teams are involved in defining the test requirements in order to assess the system from a performance point of view.

Time: Load and performance testing comes into play very early in the software development life cycle, starting with infrastructure evaluation and simple load scenarios (ping and packets) and continuing through prototype testing to the simulation of realistic loads in production-like environments. Operational activities grow along this path, but they already start early on, during the design phase.

Input: The operational activities are based on the existing infrastructure landscape and on the requirements for performance monitoring derived from system management functions, which are supported by a dedicated tool set-up. The scenarios are derived from the operation handbooks.

Test Approach:
—— Collecting test requirements from an operational point of view
—— Integrating requirements into the load model
—— Integrating requirements into the monitoring model
—— Preparing the test environment and test data
—— Executing and analysing the test
—— Defining mitigation scenarios for performance risks
—— Deciding on acceptance or rejection

Output: As a result of the test, the operation handbooks are corrected, the operation team is trained, and activities to prepare the operational environment for the new software release are defined. Moreover, activities for intensive monitoring of the environment during the first few weeks after the release can be derived.
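For the step "Executing and analysing the test", measured response times are commonly condensed into percentiles and compared with the performance requirements before acceptance or rejection is decided. The Python sketch below assumes that response times have already been exported from the monitoring tool as a list of milliseconds and that the requirements are expressed as percentile limits; the sample values and limits are hypothetical. It uses the nearest-rank definition of a percentile; monitoring tools may use other definitions (e.g. interpolation), so their figures can differ slightly.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: smallest sample with at least p% of samples <= it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def evaluate(response_times_ms, limits_ms):
    """Compare measured percentiles with required limits.

    Returns ({percentile: (measured_ms, passed)}, accepted), where accepted is
    True only if every percentile limit is met.
    """
    results = {}
    for p, limit in sorted(limits_ms.items()):
        measured = percentile(response_times_ms, p)
        results[p] = (measured, measured <= limit)
    accepted = all(passed for _, passed in results.values())
    return results, accepted


if __name__ == "__main__":
    # Hypothetical measurements from one load test run, in milliseconds.
    samples = [120, 95, 180, 210, 130, 99, 450, 160, 140, 175]
    # Hypothetical requirement: median <= 150 ms, 95th percentile <= 300 ms.
    results, accepted = evaluate(samples, {50: 150, 95: 300})
    print(results)  # {50: (140, True), 95: (450, False)}
    print("accept" if accepted else "reject")  # reject
```

With only ten samples, the single 450 ms outlier alone determines the 95th percentile, so a real evaluation would need considerably more measurements per load scenario.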
OAT Test Environment: The test environments depend on the respective test stages and are accompanied by dedicated load and performance tests. There is a strong demand for performance tests in production-like environments that apply production processing and monitoring.

Risk of Not Executing: Performance issues may not be recognised until after go-live. If problems then occur, no adequate measures that can be applied quickly will have been prepared. In a worst-case scenario, an environment that is not prepared to host the application in line with its performance requirements would have a negative impact on the business.

5.9. OAT Security Testing

Definition: Security testing includes all activities required to verify the operability of the given security functionality and handbooks.

Objective: This type of test ensures that all security issues indicating a lack of go-live maturity (e.g. remaining test accounts, debug information) are identified.

Parties Involved: The application owners are generally responsible for ensuring, on the basis of project results, that the product has reached maturity before going live. The operation teams are responsible for applying the given security guidelines. Consequently, application owners and operation teams have to be involved as active testers, although the analysis may also be performed by an independent tester or testing team. Compliance departments also have to be involved to ensure that their requirements are fulfilled.

Time: OAT security testing has to be performed after deployment and before going live.

Input: OAT security testing requires the system, the test documentation, and the configuration files, as well as the live system.

Test Approach:
—— For all relevant input channels:
  —— Check that invalid or random input data (fuzzing) is handled robustly, i.e. that the CIA requirements (Confidentiality, Integrity, Availability) are still met.
  —— Check that the overall system works properly when system components (e.g. firewall, sniffer, proxies) are switched off.
—— For all components:
  —— Check that all test-motivated bypasses (e.g. test users, test data) have been removed from all configuration items.
  —— Check that all production support members have appropriate access to all configuration items.
  —— Check that all sensitive data is handled according to the security guidelines.
  —— Check that no debugging information is displayed and that error messages are customised (to prevent information disclosure).
  —— Check that default credentials have been replaced by individual, secure credentials.
  —— Check the configuration, switch off unused components, and close unused ports (firewall).
  —— Check the validity of the certificates used.

Output: A protocol records all potential security problems that were checked (see Test Approach) and the security issues found, together with suggestions for resolving them.

OAT Test Environment: OAT security testing has to be performed in the E2E test environment and in the live system.

Risk of Not Executing: There is a high risk that security vulnerabilities introduced during the last steps of the application development life cycle (test data, debug functionality, misconfiguration) will compromise the security of the system.

5.10. Backup and Restore Testing

Definition: In contrast to disaster recovery, which is triggered by an event (an interruption or a serious failure), backup and restore functionality addresses the need for reliability in standard operation. A typical data loss event is an accidental deletion by a user within the normal system functionality.

Objective: Backup and restore testing focuses on the quality of the implemented backup and restore strategy. This strategy may affect not only the requirements for software development but also the SLAs that have to be fulfilled in operation.
In an extended test execution, the scope of a backup test covers all resources, ranging from hardware and software to documentation, people, and processes.

Parties Involved: A backup and restore request involves different groups of administrators within the operation teams, responsible for the database, infrastructure, file system, and application software. These groups are stakeholders interested in the quality of the backup and restore process, but they are also key players during test execution.

Time: Backup and restore testing can be launched once the test environment has been set up and is working in standard mode. The tests have to take the backup schedule (e.g. weekly or monthly backups) into account.

Input: Backup and restore testing requires a working test environment and operation process.

Test Approach: Backup and restore testing can be executed as a use-case scenario based on well-defined test data and test environments. In general, a test comprises the following steps:
—— Quantifying or setting up the initial, well-defined test artefacts
—— Backing up the existing test artefacts
—— Deleting the original artefacts
—— Restoring the artefacts from the backup
—— Comparing the original artefacts with the restored ones, and analysing the backup logs
—— If applicable, performing a roll-forward and checking again

Output: Backup and restore testing identifies missing components, defects in existing components, and corrections to be made in handbooks and manuals, as well as defects with respect to the requirements.

OAT Test Environment: If backup and restore functionality is available, testing can in principle be executed in parallel with early functional testing.
However, since the tests involve planned downtimes or phases of exclusive use of environments, additional test environments are set up temporarily to avoid delays in the functional testing area. Moreover, this activity requires the following:
—— Representative test data
—— An established backup infrastructure
—— An established restore infrastructure

Risk of Not Executing: If backups (regular and ad hoc) do not work, data may be lost in a restore situation and the ability to perform a disaster recovery may be impaired. Restore times may also increase because, instead of relying on prepared functionality, problems have to be solved unpredictably in task force mode.

5.11. Failover Testing

Definition: A real serious failure reveals the degree of fault tolerance of a system. The failover test systematically triggers such a failure or an abnormal termination of the system in order to simulate the crisis mode.

Objective: A system must handle a failure event, as far as possible, with an automatic failover reaction. If human intervention is needed, the corresponding manual process has to be tested, too. The objectives of failover testing fall into two categories:
—— The quality of fault recognition (technical measures, e.g. a heartbeat, have to be implemented to detect the failure event)
—— The efficiency and effectiveness of the automatic failover reaction in terms of reaction time and data loss

The use of cloud technologies can contribute positively to the reliability characteristics of the quality model. For cloud service customers, however, it can be difficult to arrange all the requirements necessary for the OAT test environment and test execution.

Parties Involved: A system designer is needed to plan and implement the possible failure events for the test execution, based on ATAM use cases or FMEA.
The failure and result analysis may require additional staff from database administration and infrastructure management, e.g. a technician checking the uninterruptible power supply (UPS). Processes and documentation have to be in place to handle the event.

Time: Failover testing can be launched once the test environment has been set up and is working in standard mode. To prevent interruption of normal test activities, the test schedule has to include a period of exclusive use.

Input: The test scenarios are derived from architecture overviews as well as from experts’ experience and methods. Handbooks and manuals for failure handling also need to be available.

Test Approach: The test case specification has to describe the measures taken to trigger the failure event. Events need not be executed exactly as they would happen in the real world; it can be sufficient to simulate them with technical equipment. For instance:
—— Failure: lost network connection. Activity: remove the network cable.
—— Failure: file system or hard disk failure. Activity: remove a hot-swappable hard disk from a RAID system.

Output: The failover execution protocols show the time needed to bring the system back up and running, so that it can be compared with the SLAs.

OAT Test Environment: Even though it is only a test, the OAT test environment may not be usable in standard mode after the failure event. A failover test may therefore affect further test activities and schedules. Consequently, this kind of test is executed in dedicated temporary environments or in functional test environments, where it carries the risk of time delays.

Risk of Not Executing: Failover may not work or may take longer than expected, leading to service outages.

5.12. Recovery Testing

Definition: A recovery strategy for IT systems comprises a technical mechanism similar to backup and restore techniques, but it also requires additional policies and procedures that define disaster recovery standards. Recovery in this sense is the capability of the software product to re-establish a specified level of performance and to recover the data directly affected in case of failure.

Objective: For OAT, this discipline focuses on the documentation, the described processes, and the resources involved. While the failed application is being brought back, downtime and reduced performance, as well as a minimum of data and business loss, are to be expected. The degree of recoverability is defined by how far the interruption of business functions can be minimised. Recovery testing aims to ensure predictable and manageable recovery.

Parties Involved: A recovery request involves different groups of administrators within the operation teams, responsible for the database, infrastructure, file system, and application software. These groups are stakeholders interested in the quality of the recovery process, but they are also key players during test execution.

Time: Since recovery testing is largely a static review of the recovery plans and procedures, it can be applied early in the development process, and its findings can be integrated into further system design and process development. Dynamic test activities are executed in parallel with functional testing.

Input: The recovery test can be performed with respect to processes, policies, and procedures. All documentation describing the processes must be in place, and the recovery functionality has to be available.

Test Approach: Performing a test in a real OAT system with full interruption may be costly, so simulation testing can be an alternative.
ATAM and FMEA are static methods for determining the feasibility of the recovery process. The following questions have to be considered during the tests:
—— What are the measures to restart the system? How long is the estimated downtime?
—— What are the measures to rebuild, re-install, or restore?
—— What minimum performance and what data loss volumes are acceptable after recovery?

Output: The downtime, i.e. the period from the moment the service is no longer available to the moment it is back up and running, is estimated. Recovery testing also identifies missing components, defects in existing components, and corrections to be made in handbooks and manuals, as well as defects with respect to the requirements.

OAT Test Environment: If recovery functionality is available, testing can in principle be executed in parallel with early functional testing. However, since the tests involve planned downtimes or phases of exclusive use of environments, additional test environments are set up temporarily to avoid delays in the functional testing area.

Risk of Not Executing: The recovery process may not work or may take longer than expected, leading to service outages, and recovery could fall into task force mode with unpredictable downtimes.

6. Acceptance Process

The objective of OAT is to obtain the commitment for handing over the software to the application owner and the operation team. Acceptance is based on successful tests and on known but manageable defects throughout the entire life cycle of the software development project (see Figure 3).

[Figure 3: Start and main test phases of different OAT types, from Analysis and Design through the test stages to Operation, with high- and low-intensity activities per test type and quality gates OAT-QG1 to OAT-QG5]

A set of quality gates has to be defined that collects all information about the various operational tests and enables acceptance decisions. Testing is organised among all stakeholders. The application owner and the operation team either:
—— perform their own tests, which are integrated into the overall test plan,
—— support the testing teams in achieving the test results, or
—— check third-party results against the acceptance criteria.
Operational acceptance therefore starts early in the project and is achieved once all quality gates have been passed.

7. Conclusion and Outlook

Operational Acceptance Testing comprises a number of test types derived from quality attributes in order to support business continuity in the transition, standard, and crisis modes of operation. The relevance and intensity of each test type can be decided on the basis of the risks involved. For each test type, there is a test scheme that is selected according to the technology and system management functions used. The focus of OAT shifts between several points over the course of the software development life cycle. With this systematic approach, errors can be detected as early as possible, which makes OAT well suited to current and future demands and, in particular, to the operational requirements of cloud computing.

8. Bibliographical References

APM Group Limited, HM Government and TSO. ITIL – Information Technology Infrastructure Library. High Wycombe, Buckinghamshire. [Online] 2012. http://www.itil-officialsite.com.
International Software Testing Qualifications Board. ISTQB Glossary. [Online] March 2010. http://www.istqb.org/downloads/glossary.html.
ISO/IEC JTC 1/SC 7 Software and Systems Engineering. ISO/IEC 25000 Software Engineering – Software Product Quality Requirements and Evaluation (SQuaRE) – Guide to SQuaRE. [Online] 17/12/2010. http://www.iso.org/iso/iso_catalogue/catalogue_tc/catalogue_detail.htm?csnumber=35683.
Software Engineering Institute. Architecture Tradeoff Analysis Method. Carnegie Mellon University, Pittsburgh, PA. [Cited: 2012.] http://www.sei.cmu.edu/architecture/tools/evaluate/atam.cfm.
Valentino-DeVries, J. More Predictions on the Huge Growth of ‘Cloud Computing’. The Wall Street Journal Blogs. [Online] 21/04/2011. http://blogs.wsj.com/digits/2011/04/21/more-predictions-on-the-huge-growth-of-cloud-computing/.
Wikipedia contributors. FMEA – Failure Mode and Effects Analysis. [Online] 2012. http://de.wikipedia.org/wiki/FMEA.

Authors:
Dirk Dach, Senior Consultant, dirk.dach@sqs.com
Dr. Kai-Uwe Gawlik, Head of Technical Quality, kai-uwe.gawlik@sqs.com
Marc Mevert, Senior Consultant, marc.mevert@sqs.com
sqs.com