EECS 570 Lecture 1 Parallel Computer Architecture

advertisement

EECS 570 Lecture 1 Parallel Computer Architecture Winter 2016 Prof. Thomas Wenisch h6p://www.eecs.umich.edu/courses/eecs570/ Slides

developed in part by Profs. Austin, Adve, Falsafi, Martin, Narayanasamy,

Nowatzyk, Peh, and Wenisch of CMU, EPFL, MIT, UPenn, U-M, UIUC.

EECS 570

Lecture 1

Slide 1

Announcements

No discussion on Friday. Online quizzes (Canvas) on 1st readings due Monday, 1:30pm. Sign up for piazza. EECS 570

Lecture 1

Slide 2

Readings

For Monday 1/12 (quizzes due by 1:30pm) ❒

❒

David Wood and Mark Hill. “Cost-­‐EffecTve Parallel CompuTng,” IEEE Computer, 1995. Mark Hill et al. “21st Century Computer Architecture.” CCC White Paper, 2012. For Wednesday 1/14: ❒

EECS

570

Seiler et al. Larrabee: A Many-­‐Core x86 Architecture for Visual CompuTng. Siggraph 2008. Lecture 1

Slide 3

EECS 570 Class Info

Instructor: Professor Thomas Wenisch ❒

URL: hap://www.eecs.umich.edu/~twenisch Research interests: ❒

❒

❒

MulTcore / mulTprocessor arch. & programmability Data center architecture, server energy-­‐efficiency Accelerators for medical imaging, data analyTcs GSI: ❒

Amlan Nayak (amlan@umich.edu) Class info: ❒

❒

❒

EECS 570

URL: hap://www.eecs.umich.edu/courses/eecs570/ Canvas for reading quizzes & reporTng grades Piazza for discussions & project coordinaTon Lecture 1

Slide 4

Meeting Times

Lecture ❒

MW 1:40pm – 3:00pm (1017 Dow) Discussion ❒

❒

❒

❒

F 1:40pm – 2:30pm (1200 EECS) Talk about programming assignments and projects Make-­‐up lectures Keep the slot free, but we oken won’t meet Office Hours ❒

❒

Prof. Wenisch: M 3-­‐4 (4620 CSE) Amlan: TBD (LocaTon: TBD) Q&A EECS 570

Fri 1:30-­‐2:30 (LocaTon: TBD) when no discussion Use Piazza for all technical quesTons Use e-­‐mail sparingly Lecture 1

Slide 5

Who Should Take 570?

Graduate Students (& seniors interested in research)

1.

2.

3.

Computer architects to be Computer system designers Those interested in computer systems Required Background ❒

❒

EECS 570

Computer Architecture (e.g., EECS 470) C / C++ programming Lecture 1

Slide 6

Grading

2 Prog. Assignments:

Reading Quizzes:

Midterm exam:

Final exam:

Final Project:

5% & 10%

10%

25%

25%

25%

Attendance & participation count

(your goal is for me to know who you are)

EECS 570

Lecture 1

Slide 7

Grading (Cont.)

Group studies are encouraged Group discussions are encouraged All programming assignments must be results of individual work All reading quizzes must be done individually, quesTons/answers should not be posted publicly There is no tolerance for academic dishonesty. Please refer to the University Policy on chea;ng and plagiarism. Discussion and group studies are encouraged, but all submi@ed material must be the student's individual work (or in case of the project, individual group work). EECS 570

Lecture 1

Slide 8

Some Advice on Reading…

If you carefully read every paper start to finish… …you will never finish Learn to skim past details EECS 570

Lecture 1

Slide 9

Reading Quizzes

• You must take an online quiz for every paper Quizzes must be completed by class start via Canvas • There will be 2 mulTple choice quesTons ❒

❒

The quesTons are chosen randomly from a list You only have 5 minutes ❍

❒

Not enough Tme to find the answer if you haven’t read the paper You only get one aaempt • Some of the quesTons may be reused on the midterm/final • 4 lowest quiz grades (of about 40) will be dropped over the course of the semester (e.g., skip some if you are travelling) ❒

EECS 570

Retakes/retries/reschedules will not be given for any reason Lecture 1

Slide 10

Final Project

• Original research on a topic related to the course ❒ Goal: a high-­‐quality 6-­‐page workshop paper by end of term ❒ 25% of overall grade ❒ Done in groups of 3 ❒ Poster session -­‐ April 21, 10:30am-­‐12:30pm (exam slot for 7:30am classes) • See course website for Tmeline • Available infrastructure ❒ FeS2 and M5 mulTprocessor simulators ❒ GPGPUsim ❒ Pin ❒ Xeon Phi accelerators • Suggested topic list will be distributed in a few weeks You may propose other topics if you convince me they are worthwhile EECS 570

Lecture 1

Slide 11

Course Outline

Unit I – Parallel Programming Models and ApplicaTons ❒

❒

Message passing, shared memory (pthreads and GPU) ScienTfic and commercial parallel applicaTons Unit II – SynchronizaTon ❒

SynchronizaTon, Locks and TransacTonal Memory Unit III – Coherency and Consistency ❒

❒

❒

Snooping bus-­‐based systems Directory-­‐based distributed shared memory Memory Models Unit IV – InterconnecTon Networks ❒

On-­‐chip and off-­‐chip networks Unit V – Modern & UnconvenTonal MulTprocessors ❒

EECS 570

Simultaneous & speculaTve threading Lecture 1

Slide 12

Parallel Computer Architecture

The Multicore Revolution

Why is it happening? EECS 570

Lecture 1

Slide 13

If you want to make your computer faster, there are only two opTons: 1. increase clock frequency 2. execute two or more things in parallel InstrucTon-­‐Level Parallelism (ILP) Programmer specified explicit parallelism EECS 570

Lecture 1

Slide 14

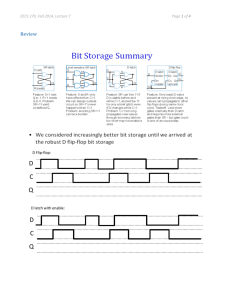

The ILP Wall

Olukotun et al ASPLOS 96 • 6-­‐issue has higher IPC than 2-­‐issue, but not by 3x ❒

EECS 570

Memory (I & D) and dependence (pipeline) stalls limit IPC Lecture 1

Slide 15

Single-thread performance

Performance

10000

15%/yr. 1000

52%/yr. 100

10

1

1985

1990

1995

2000

2005

2010

Source: Hennessy & Patterson, Computer Architecture: A Quantitative Approach, 4th ed.

Conclusion: Can’t scale MHz or issue width to keep selling chips Hence, mul<core! EECS 570

Lecture 1

Slide 16

The

Power

E2UDC ERC VWall

ision 1000000 Transistors (100,000's) 100000 10000 Power (W) Performance (GOPS) Efficiency (GOPS/W) 1000 100 10 Limits on heat extracTon 1 0.1 0.01 Limits on energy-­‐efficiency of operaTons 0.001 1985 EECS 570

1990 1995 2000 2005 2010 2015 2020 Lecture 1

Slide 17

The

Power

E2UDC ERC VWall

ision 1000000 Transistors (100,000's) 100000 Power (W) Performance (GOPS) 10000 Efficiency (GOPS/W) 1000 100 10 Limits on heat extracTon 1 Stagnates performance growth 0.1 0.01 Limits on energy-­‐efficiency of operaTons 0.001 1985 1990 1995 2000 2005 2010 2015 2020 Era of High Performance CompuTng Era of Energy-­‐Efficient CompuTng c. 2000 EECS 570

Lecture 1

Slide 18

Classic CMOS Dennard Scaling:

the Science behind Moore’s Law

Scaling:

Voltage: Oxide: V/α

tOX/α

Source: Future of Computing Performance:

Game Over or Next Level?, National Academy Press, 2011

Results:

1/α2 Power/ckt: Power Density: ~Constant EECS 570

P = C V2 f

Lecture 1

Slide 19

Post-classic CMOS Dennard Scaling

TODO:

Chips w/ higher power (no), smaller (L),

dark silicon (J), or other (?)

Post Dennard CMOS Scaling Rule

Scaling:

Voltage: Oxide: V/α V tOX/α

Results:

1/α2 1 Power/ckt: Power Density: ~Constant α2 EECS 570

P = C V2 f

Lecture 1

Slide 20

Leakage Killed Dennard Scaling

Leakage: • ExponenTal in inverse of Vth • ExponenTal in temperature • Linear in device count To switch well • must keep Vdd/Vth > 3 ➜ Vdd can’t go down EECS 570

Lecture 1

Slide 21

Multicore:

Solution to Power-constrained design?

Power = CV2F F ∝ V Scale clock frequency to 80% Now add a second core Performance Power Same power budget, but 1.6x performance! But: ❒

❒

EECS 570

Must parallelize applicaTon Remember Amdahl’s Law! Lecture 1

Slide 22

What Is a Parallel Computer?

“A collecTon of processing elements that communicate and cooperate to solve large problems fast.” EECS 570

Almasi & Go@lieb, 1989 Lecture 1

Slide 23

Spectrum of Parallelism

Bit-­‐level Pipelining EECS 370 ILP EECS 470 MulTthreading MulTprocessing Distributed EECS 570 EECS 591 Why mulTprocessing? • Desire for performance • Techniques from 370/470 difficult to scale further EECS 570

Lecture 1

Slide 24

Why Parallelism Now?

• These arguments are no longer theoreTcal • All major processor vendors are producing mulTcore chips ❒

❒

Every machine will soon be a parallel machine All programmers will be parallel programmers??? • New sokware model ❒

❒

Want a new feature? Hide the “cost” by speeding up the code first All programmers will be performance programmers??? • Some may eventually be hidden in libraries, compilers, and high level languages ❒

But a lot of work is needed to get there • Big open quesTons: ❒

❒

EECS 570

What will be the killer apps for mulTcore machines? How should the chips, languages, OS be designed to make it easier for us to develop parallel programs? Lecture 1

Slide 25

Multicore in Products

• “We are dedicaTng all of our future product development to mulTcore designs. … This is a sea change in compuTng” Paul Otellini, President, Intel (2005) •

All microprocessor companies switch to MP (2X cores / 2 yrs) Intel’s NehalemEX

Azul’s Vega

nVidia’s Tesla

Processors/System

4

16

4

Cores/Processor

8

48

448

Threads/Processor

2

1

Threads/System

64

768

EECS 570

1792

Lecture 1

Slide 26

Revolution Continues..

Azul’s Vega 3 7300 54-­‐core chip Blue Gene/Q Sequoia 16-­‐core chip 864 cores 1.6 million cores 768 GB Memory May 2008 1.6 PB 2012 Sun’s Modular DataCenter ‘08 8-­‐core chip, 8-­‐thread/core 816 cores / 160 sq.feet Lakeside Datacenter (Chicago) 1.1 milion sq.feet ~45 million threads EECS 570

Lecture 1

Slide 27

Multiprocessors Are Here To Stay

• Moore’s law is making the mulTprocessor a commodity part ❒

❒

❒

1B transistors on a chip, what to do with all of them? Not enough ILP to jusTfy a huge uniprocessor Really big caches? thit increases, diminishing %miss returns • Chip mulSprocessors (CMPs) ❒

Every compuTng device (even your cell phone) is now a mulTprocessor EECS 570

Lecture 1

Slide 28

Parallel Programming Intro

EECS 570

Lecture 1

Slide 29

Motivation for MP Systems

• Classical reason for mulTprocessing: More performance by using mulTple processors in parallel ❒ Divide computaTon among processors and allow them to work concurrently ❒

AssumpTon 1: There is parallelism in the applicaTon ❒

AssumpTon 2: We can exploit this parallelism EECS 570

Lecture 1

Slide 30

Finding Parallelism

1.

FuncTonal parallelism ❒

❒

❒

2.

Data parallelism ❒

3.

Vector, matrix, db table, pixels, … Request parallelism ❒

EECS 570

Car: {engine, brakes, entertain, nav, …} Game: {physics, logic, UI, render, …} Signal processing: {transform, filter, scaling, …} Web, shared database, telephony, … Lecture 1

Slide 31

Computational Complexity of (Sequential)

Algorithms

• Model: Each step takes a unit Tme • Determine the Tme (/space) required by the algorithm as a funcTon of input size EECS 570

Lecture 1

Slide 32

Sequential Sorting Example

• Given an array of size n • MergeSort takes O(n log n) Tme • BubbleSort takes O(n2) Tme • But, a BubbleSort implementaTon can someTmes be faster than a MergeSort implementaTon • Why? EECS 570

Lecture 1

Slide 33

Sequential Sorting Example

• Given an array of size n • MergeSort takes O(n log n) Tme • BubbleSort takes O(n2) Tme • But, a BubbleSort implementaTon can someTmes be faster than a MergeSort implementaTon • The model is sTll useful ❒

❒

EECS 570

Indicates the scalability of the algorithm for large inputs Lets us prove things like a sorTng algorithm requires at least O(n log n) comparisons Lecture 1

Slide 34

We need a similar model for parallel

algorithms

EECS 570

Lecture 1

Slide 35

Sequential Merge Sort

16MB input (32-­‐bit integers) Time Recurse(lek) Recurse(right) SequenTal ExecuTon Merge to scratch array Copy back to input array EECS 570

Lecture 1

Slide 36

Parallel Merge Sort

(as Parallel Directed Acyclic Graph)

16MB input (32-­‐bit integers) Time Recurse(lek) Recurse(right) Parallel ExecuTon Merge to scratch array Copy back to input array EECS 570

Lecture 1

Slide 37

Parallel DAG for Merge Sort

(2-core)

SequenTal Sort Merge SequenTal Sort Time EECS 570

Lecture 1

Slide 38

Parallel DAG for Merge Sort

(4-core)

EECS 570

Lecture 1

Slide 39

Parallel DAG for Merge Sort

(8-core)

EECS 570

Lecture 1

Slide 40

The DAG Execution Model of a

Parallel Computation

• Given an input, dynamically create a DAG • Nodes represent sequenTal computaTon ❒

Weighted by the amount of work • Edges represent dependencies: ❒

EECS 570

Node A à Node B means that B cannot be scheduled unless A is finished Lecture 1

Slide 41

Sorting 16 elements in four cores

EECS 570

Lecture 1

Slide 42

Sorting 16 elements in four cores

(4 element arrays sorted in constant time)

1 8 1 1 1 16 1 1 8 1 EECS 570

Lecture 1

Slide 43

Performance Measures

• Given a graph G, a scheduler S, and P processors • Tp(S) : Time on P processors using scheduler S • Tp

: Time on P processors using best scheduler • T1 : Time on a single processor (sequenTal cost) • T∞

: Time assuming infinite resources EECS 570

Lecture 1

Slide 44

Work and Depth

• T1 = Work ❒

The total number of operaTons executed by a computaTon • T∞ = Depth ❒

The longest chain of sequenTal dependencies (criTcal path) in the parallel DAG EECS 570

Lecture 1

Slide 45

T∞ (Depth): Critical Path Length

(Sequential Bottleneck)

EECS 570

Lecture 1

Slide 46

T1 (work): Time to Run Sequentially

EECS 570

Lecture 1

Slide 47

Sorting 16 elements in four cores

(4 element arrays sorted in constant time)

1 8 1 1 1 16 1 1 8 1 EECS 570

Work = Depth = Lecture 1

Slide 48

Some Useful Theorems

EECS 570

Lecture 1

Slide 49

Work Law

• “You cannot avoid work by parallelizing” T1 / P ≤ TP

EECS 570

Lecture 1

Slide 50

Work Law

• “You cannot avoid work by parallelizing” T1 / P ≤ TP

Speedup = T1 / TP

EECS 570

Lecture 1

Slide 51

Work Law

• “You cannot avoid work by parallelizing” T1 / P ≤ TP

Speedup = T1 / TP

• Can speedup be more than 2 when we go from 1-­‐core to 2-­‐

core in pracTce? EECS 570

Lecture 1

Slide 52

Depth Law

• More resources should make things faster • You are limited by the sequenTal boaleneck EECS 570

TP ≥ T∞

Lecture 1

Slide 53

Amount of Parallelism

Parallelism = T1 / T∞

EECS 570

Lecture 1

Slide 54

Maximum Speedup Possible

Speedup T1 / TP ≤ T1 / T∞ Parallelism “speedup is bounded above by available parallelism” EECS 570

Lecture 1

Slide 55

Greedy Scheduler

• If more than P nodes can be scheduled, pick any subset of size P • If less than P nodes can be scheduled, schedule them all EECS 570

Lecture 1

Slide 56

Performance of the Greedy Scheduler

TP(Greedy) ≤ T1 / P + T∞

Work law

T1 / P ≤ TP

Depth law T∞ ≤ TP

EECS 570

Lecture 1

Slide 57

Greedy is optimal within factor of 2

TP ≤ TP(Greedy) ≤ 2 TP

Work law

T1 / P ≤ TP

Depth law T∞ ≤ TP

EECS 570

Lecture 1

Slide 58

Work/Depth of Merge Sort

(Sequential Merge)

• Work T1 :

O(n log n)

• Depth T∞ : O(n)

❒

Takes O(n) Tme to merge n elements • Parallelism: ❒

EECS 570

T1 / T∞ = O(log n) à really bad! Lecture 1

Slide 59

Main Message

• Analyze the Work and Depth of your algorithm • Parallelism is Work/Depth • Try to decrease Depth ❒

❒

the criTcal path a sequen;al boaleneck • If you increase Depth ❒

EECS 570

beaer increase Work by a lot more! Lecture 1

Slide 60

Amdahl’s law

• SorTng takes 70% of the execuTon Tme of a sequenTal program • You replace the sorTng algorithm with one that scales perfectly on mulT-­‐core hardware • How many cores do you need to get a 4x speed-­‐up on the program? EECS 570

Lecture 1

Slide 61

Amdahl’s law, 𝑓=70%

Speedup(f, c) = 1 / ( 1 – f) + f / c

f

1-f

c

EECS 570

= the parallel porTon of execuTon = the sequenTal porTon of execuTon

= number of cores used Lecture 1

Slide 62

Amdahl’s law, 𝑓=70%

4.5 4.0 3.5 Desired 4x speedup Speedup 3.0 2.5 2.0 Speedup achieved (perfect scaling on 70%) 1.5 1.0 0.5 0.0 1 EECS 570

2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 #cores Lecture 1

Slide 63

Amdahl’s law, 𝑓=70%

4.5 4.0 3.5 Desired 4x speedup Speedup 3.0 2.5 Limit as c→∞ = 1/(1-­‐f) = 3.33 2.0 1.5 Speedup achieved (perfect scaling on 70%) 1.0 0.5 0.0 1 EECS 570

2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 #cores Lecture 1

Slide 64

Amdahl’s law, 𝑓=10%

1.12 1.10 1.08 Speedup 1.06 Speedup achieved with perfect scaling 1.04 Amdahl’s law limit, just 1.11x 1.02 1.00 0.98 0.96 0.94 1 EECS 570

2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 #cores Lecture 1

Slide 65

Amdahl’s law, 𝑓=98%

60 50 Speedup 40 30 20 10 0 1 7 13 19 25 31 37 43 49 55 61 67 73 79 85 91 97 103 109 115 121 127 #cores EECS 570

Lecture 1

Slide 66

Lesson

• Speedup is limited by sequenTal code • Even a small percentage of sequenTal code can greatly limit potenTal speedup EECS 570

Lecture 1

Slide 67

Gustafson’s Law

Any sufficiently large problem can be parallelized effecTvely Speedup(f, c) = f c + (1 – f)

f

1-f

c

= the parallel porTon of execuTon = the sequenTal porTon of execuTon

= number of cores used Key assump;on: 𝑓 increases as problem size increases EECS 570

Lecture 1

Slide 68