Research Interests - SEAS - University of Pennsylvania


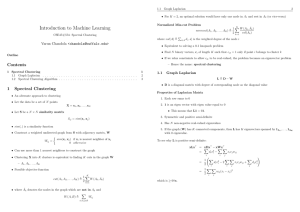

Research Interests

João Sedoc

I am a PhD candidate in the computer science department at the University of Pennsylvania.

Description of work

Presently, my main research interest is the development and application of machine learning and statistical techniques for natural language processing. Vector space models of words are widely used for a variety of natural language processing (NLP) tasks. Word embeddings fall into two main categories: cluster-based and dense representations. Brown clustering and other hierarchical clustering methods group words that occur in similar contexts. Dense representations such as Latent Semantic Analysis / Latent Semantic Indexing (LSA/LSI), Low Rank Multi-View Learning (LR-MVL) and Word2Vec have shown state-of-the-art results on syntactic and sentiment tasks. Because current embeddings operate at the word level rather than the phrase level, they do not capture both fine-grained and coarse context. Encoding meaning at the word, phrase and sentence level is therefore an area of intense research. Finally, distributional similarity in context does not imply semantic similarity: we find that antonyms are often distributionally similar.

Research goals

The purpose of our research is to develop and study novel methods for low-dimensional representations of words, phrases and sentences using a structured factorial hidden Markov model (HMM) and signed normalized cut spectral clustering. Understanding meaning at the word, phrase and sentence level is an active area of research; our approach is distinctive in that we extend singular value decomposition (SVD) based methods to use both fine and coarse context as well as paraphrases. By employing these two novel methods, we are able to address the open problems described above.

First, we propose a novel multi-scale factorial hidden Markov model (MFHMM) and an associated spectral estimation scheme.
The MFHMM provides a statistically sound framework for incorporating both fine and coarse context. Using both scales of context is important for tasks such as sentiment analysis and yields better embeddings for words, phrases and sentences. Our second novel method is polarity clustering, which captures a distinction missing from popular word embeddings such as GloVe, word2vec and eigenwords. Our approach applies semi-supervised signed clustering (SSC), adding negative edges derived from antonym relations to the graph Laplacian. This technique generalizes to phrases and discriminates polarity at the phrase level, a capability that is currently largely missing in the field.

Description of Work

In the 2014 academic year, I worked on three main projects. The first project studied the correlation between word embeddings and neuroanatomical representations at the sentence level. This was a collaboration with Murray Grossman's lab, in particular Jeff Philips and Brian Avants, as well as HRL. My main contribution was to provide vector embeddings representing sentences, which I constructed using the Stanford parser. This work is leading to a paper on which I will be the second author.

As we looked toward reducing the dimensionality of the representation, I began working on word embedding clusters. This exposed a major issue: antonyms such as "happy" and "sad" can fall into the same cluster because they occur in the same contexts, for instance "I am happy today" and "I am sad today". This led to signed spectral clustering, on which I worked with Jean Gallier, who solved the problem of K-cluster signed clustering. This work is moving toward a paper.

Drawing on my prior experience in finance, I worked with Jordan Rodu on stock covariance estimation. We extended spectral estimation methods for hidden Markov models to the MFHMM, and we successfully applied and fit this model. We submitted a paper to NIPS.
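The antonym problem and the signed-clustering fix described above can be illustrated with a small stdlib-only Python sketch. It is not the SSC implementation or Jean Gallier's K-way signed normalized cut; it only shows the two ingredients under toy assumptions. First, using the two example sentences from the text, the window-1 context counts of "happy" and "sad" are identical, so their cosine similarity is about 1. Second, it builds the standard signed Laplacian (degree taken as the sum of absolute edge weights, off-diagonal entries equal to minus the edge weight) for a hypothetical four-word graph in which similar words share positive edges and antonyms share negative edges, then evaluates the quadratic form for two hand-picked cluster assignments. The word list, the edge weights, and the helper names `context_vector`, `cosine`, `signed_laplacian` and `quadratic_form` are all illustrative assumptions. A full spectral method would relax the indicator vectors and take eigenvectors of this matrix instead of comparing fixed assignments.

```python
from collections import Counter
from math import sqrt


def context_vector(word, sentences, window=1):
    """Count the tokens within `window` positions of each occurrence of `word`."""
    counts = Counter()
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, tok in enumerate(tokens):
            if tok != word:
                continue
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            # Skip the target position itself; keep only its neighbours.
            counts.update(tokens[j] for j in range(lo, hi) if j != i)
    return counts


def cosine(u, v):
    """Cosine similarity of two sparse count vectors stored as Counters."""
    dot = sum(count * v[key] for key, count in u.items())
    norm = sqrt(sum(c * c for c in u.values())) * sqrt(sum(c * c for c in v.values()))
    return dot / norm if norm else 0.0


def signed_laplacian(n, edges):
    """Signed Laplacian L = D_bar - A, where D_bar[i][i] = sum_j |A[i][j]|.

    `edges` maps a pair (i, j) to a weight: positive for similar words,
    negative for antonym pairs (hypothetical weights in this sketch).
    """
    lap = [[0.0] * n for _ in range(n)]
    for (i, j), w in edges.items():
        lap[i][j] -= w
        lap[j][i] -= w
        lap[i][i] += abs(w)
        lap[j][j] += abs(w)
    return lap


def quadratic_form(lap, x):
    """x^T L x, which equals the sum over edges of |w| * (x_i - sign(w) * x_j) ** 2."""
    n = len(x)
    return sum(x[i] * lap[i][j] * x[j] for i in range(n) for j in range(n))


# The two example sentences from the text: "happy" and "sad" share every context word.
SENTENCES = ["I am happy today", "I am sad today"]
happy_sad_similarity = cosine(context_vector("happy", SENTENCES),
                              context_vector("sad", SENTENCES))

# Hypothetical toy graph (assumed, not from the source): 0=happy, 1=glad, 2=sad, 3=unhappy.
WORDS = ["happy", "glad", "sad", "unhappy"]
EDGES = {
    (0, 1): 1.0,   # distributionally similar
    (2, 3): 1.0,   # distributionally similar
    (0, 2): -1.0,  # antonym relation -> negative edge
    (1, 3): -1.0,  # antonym relation -> negative edge
}
LAP = signed_laplacian(len(WORDS), EDGES)
split_cost = quadratic_form(LAP, [1, 1, -1, -1])  # {happy, glad} vs {sad, unhappy}
merged_cost = quadratic_form(LAP, [1, 1, 1, 1])   # all four words in one cluster

if __name__ == "__main__":
    print(f"cosine(happy, sad) = {happy_sad_similarity:.3f}")          # 1.000
    print(f"split cost = {split_cost}, merged cost = {merged_cost}")  # 0.0, 8.0
```

Context counts alone cannot separate the antonyms, whereas the negative edges make the assignment that puts "happy" and "sad" together strictly more expensive (8.0) than the polarity-respecting split (0.0).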
I also presented my preliminary results at the PGMO-COPI conference, and I applied with Dean Foster for a Bloomberg research grant to continue my research on the MFHMM and SSC.

Future Research

In the 2015 academic year, I plan to complete my last WPE I requirement. Next semester, I also plan to work with my advisor to select my thesis topic. I intend to complete three papers for publication: one on the multi-scale factorial HMM, a second on signed spectral clustering, and the collaborative paper with Jeff Philips. For the spectral clustering work, a large part of the mathematical theory has already been developed and we have preliminary results; however, many details, such as statistical perturbation and optimization theory, still need to be analyzed formally. Another possible outcome of this research direction is the extension of semantic similarity and entailment on the paraphrase database. We also believe that we may be able to extend antonym relations to other languages by combining our clustering method with standard machine translation.