The completeness and accuracy of electoral registers in Great Britain

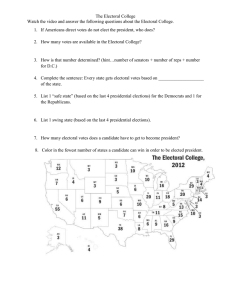
