A Method for Balancing Loads in Workflow Management Systems

ISSN 0918-2802

Technical Report

OXTHAS: A Method for Balancing Loads in Workflow Management Systems with Web Services

Hideyuki Katoh, Neila Ben Lakhal, Takashi Kobayashi¹ and Haruo Yokota¹

TR06-0005 Mar

DEPARTMENT OF COMPUTER SCIENCE

TOKYO INSTITUTE OF TECHNOLOGY

Ôokayama 2-12-1 Meguro Tokyo 152-8552, Japan

http://www.cs.titech.ac.jp/

© The author(s) of this report reserves all the rights.

¹ The author is with the Global Scientific Information and Computing Center, Tokyo Institute of Technology.

Abstract

In Workflow management systems that handle a large number of processes, balancing the workload through efficient scheduling strategies is increasingly recognized as essential.

In this paper, we propose a load-balancing method for scheduling activity instances. To this end, we use the history of past activity instance requests. This history records the workload size of each activity instance and its execution time as observed by the various Workflow engines. In our Workflow management system, the Workflow engines are treated as Web services.

Each Workflow engine estimates the throughput of each executor from this history, and then allocates activity instance requests to executors according to the estimated processing capacity and the load size of the activity in question. Finally, we compare the performance of the proposed method with the conventional round-robin and random scheduling algorithms to validate our proposal and show its effectiveness.

1 Introduction

The goal of the Workflow paradigm is to automate business processes. In a Workflow, a process is viewed as a set of associated activities. Processes and activities are actually enacted by invoking process instances and activity instances. Workflow Management Systems (WFMS) emerged to control the automatic enactment of these processes and activities[1]. Among other expected benefits, the introduction of WFMS has significantly reduced the cost of manually performed activities.

To date, a wide range of WFMS have been proposed, both by the Workflow community and as commercial products. More recently, as WFMS have become widely applied, their workload has increased significantly. As a consequence, balancing the workload is increasingly recognized as indispensable.

Meanwhile, the Web services architecture, which is mainly known for connecting widely distributed and heterogeneous systems by building mainly on the HTTP and SOAP protocols, is attracting considerable research interest. The strength of Web services technology lies chiefly in its ability to overcome incompatibilities between computing environments.

As a consequence, the application of Web services technology is far more promising than that of other distributed object technologies such as CORBA and DCOM. When Web services first emerged, several standards, namely SOAP, WSDL, and UDDI, were also proposed. They were later followed by WS-Security, XML Signature, and many other similar protocols, which are triggering massive research efforts. Moreover, there is a noticeable tendency towards adopting the BPEL4WS specification, WS-Transaction[3], WS-Coordination[4], WS-CAF[5], and the like in implementing Web services-based systems.

BPEL4WS[2]is an XML-based Workflow definition language. As for WS-Business

Activity, WS-AtomicTransaction[3] and WS-Coordination[4], they were proposed to

enhance the reliability by allowing a transactional execution. Pursuing the same goals,

1

Flight

Reserve

Hotelë

Business Process

print

ticket

Verif.

Activity

Transition

Car

rental

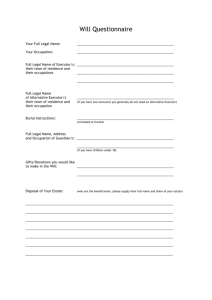

Figure 1: Workflow process model

WS-CAF[5] specification deals with how, between different Web services, important

information about a transaction are shared[5]. As a direct consequence of these different specifications emergence, the possibility of building Web services-based Workflows has become an active research issue. However, these specifications are not addressing yet failures handling and workload balancing and the likes. In fact, the more

commonly-used forms of WFMS are (1)centralized control,(2)distributed control, (3)

control delegated to agents. The three of them differ considerably in the way they

should deal with failures recovery and workload balancing. So far, we proposed an

approach for failures recovery in a Web services-based Workflow executed in a centralized manner[6]. We have also proposed an approach where the execution control

is distributed dynamically among engines[7]. However, we have not dealt yet with

workload balancing issue.

To cope with this issue, in this paper we propose OXTHAS (Observed Execution Time History and Activity Size based scheduling), a novel scheduling strategy for load balancing in Web services-based Workflows with centralized control. In computing the estimated processing capacity, OXTHAS takes into account possible differences such as the environment in which each activity is executed. The assignment of activity instances to executors is decided on the basis of the estimated throughput. The throughput is calculated from the history of previous executions of the activity instances, in terms of processing load size and execution time, as observed by each Workflow engine.

In what follows, we first explain our Workflow Management System model, as it is the main object of the paper. We then describe how we estimate the throughput and how it is used to assign activity instances to executors. Next, to validate our proposal, we describe the simulator we implemented and compare the performance of our proposal with the round-robin and random assignment strategies. Finally, we discuss related work, conclude, and describe our future directions.

2 Workflow Management System Model

2.1 Process model

A business process is often represented as a graph (Figure 1) composed of nodes and arcs. Nodes represent the work activities to be executed, and arcs define execution dependencies among activities. When such a business process is automated, the component that performs its control and management is called a Workflow engine. The Workflow engine is regarded as part of the architecture of a WFMS rather than part of the process model itself.

2.2 Workflow Management System Architecture

In this paper, the WFMS architecture (Figure 2) follows a centralized control model. The WFMS mainly consists of Workflow engines and executors. Its internal operation is outlined as follows:

1. The scheduler of each Workflow engine maintains a queue into which clients insert the activity instances of each process instance. The scheduler pulls activity instances out of the queue for execution in their order of insertion. At the same time, it keeps the information on each process instance in a process instance table. Similarly, it pulls out, one by one, the activity instances that the executors have inserted back into the queue after execution. For each of them, it extracts the information about the process instance to which the activity instance belongs from the process instance table, and decides which activity instance is to be executed next.

2. Taking into account the information about the activity instance to be executed and what it knows about the capabilities of the different executors, the scheduler decides to which executor the activity instance is delegated and inserts it into that executor's processing queue.

3. Every executor processes the activity instances inserted in its queue by pulling them out from the head of the queue. When the execution of an activity instance is finished, the execution results are inserted into the Workflow engine queue.

4. These steps are repeated until the processing of all process instances has terminated.

Load balancing of the whole system becomes possible by defining the strategy by which activity instances are assigned to executors in the procedure above. Here, we assume that two or more Workflow engines exist and that each Workflow engine can share the executors arbitrarily. Moreover, we assume that each Workflow engine knows to which executor each of its requests for processing a particular process instance (and its activity instances) is passed. However, it cannot know about the process instances or activity instances of the other Workflow engines.
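The queue-based procedure of this section can be sketched as a small step-driven loop. This is only a minimal illustration under simplifying assumptions that are not in the original: the names `ActivityInstance`, `Executor`, `WorkflowEngine`, `run`, and the `choose` hook are hypothetical, every executor finishes one activity instance per step regardless of its size, and only a single Workflow engine is shown.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class ActivityInstance:
    process_id: int  # process instance this activity instance belongs to
    index: int       # position of the activity within its process
    size: float      # processing load size


@dataclass
class Executor:
    queue: deque = field(default_factory=deque)

    def step(self):
        """Process the activity instance at the head of the queue, if any."""
        return self.queue.popleft() if self.queue else None


class WorkflowEngine:
    def __init__(self, executors, choose):
        self.executors = executors
        self.choose = choose   # scheduling strategy: (ai, executors) -> index
        self.queue = deque()   # activity instances waiting to be dispatched
        self.table = {}        # process instance table: id -> activity sizes

    def submit(self, process_id, sizes):
        """A client inserts a process instance; its first activity is queued."""
        self.table[process_id] = list(sizes)
        self._enqueue_next(process_id, 0)

    def _enqueue_next(self, process_id, index):
        sizes = self.table[process_id]
        if index < len(sizes):
            self.queue.append(ActivityInstance(process_id, index, sizes[index]))
        else:
            del self.table[process_id]  # the process instance has finished

    def dispatch(self):
        """Pass every queued activity instance to the executor chosen for it."""
        while self.queue:
            ai = self.queue.popleft()
            self.executors[self.choose(ai, self.executors)].queue.append(ai)

    def on_result(self, ai):
        """A result came back: look up the process and queue its next activity."""
        self._enqueue_next(ai.process_id, ai.index + 1)


def run(engine):
    """Repeat dispatch and execution until all process instances finish."""
    steps = 0
    while engine.table:
        engine.dispatch()
        for executor in engine.executors:
            done = executor.step()
            if done is not None:
                engine.on_result(done)
        steps += 1
    return steps
```

In this toy model, the load-balancing strategy is entirely contained in `choose`, which is where round-robin, random, or a history-based policy would be plugged in.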

Figure 2: Workflow Management System Architecture (clients 1 to m submit process instances (PI) to the Workflow engines WE1, ..., WEi, ..., WEk; each engine has a scheduler and a process instance table, and passes activity instances (AI) to the shared executors Executor1, ..., Executorj, ..., Executorn)

3 Load-balancing in Workflow Management System

In this section, we describe the scheduling strategy we consider for load balancing in the Workflow Management System architecture described above. To perform load balancing, we take into account the processing time of each activity instance as observed by the Workflow engine. Below, we first examine scheduling in a simple WFMS in which only one Workflow engine is used. Then, we investigate the points that should be taken into consideration when a WFMS is built using Web services. Furthermore, we discuss how our proposal differs from related work on load balancing in a WFMS.

3.1 Load-balancing with One Workflow Engine

In a Workflow, an activity instance cannot be performed until the dependencies between it and other activity instances are satisfied. Furthermore, if there is only one Workflow engine, the requests queued at an executor consist only of that Workflow engine's requests. The Workflow engine can therefore track the length of the executor's queue and estimate the processing time in the executor (excluding the waiting time in the queue). As a result, with a single Workflow engine, load balancing can be realized rather easily by applying conventional work scheduling strategies.

3.2 Web Services-based System

In what follows, we consider a Web services-based WFMS and build on the following assumptions:

1. Since two or more WFMS may coexist, two or more Workflow engines may share the same executor(s).

2. An executor can process more than one kind of activity instance.

3. The processing load size is likely to vary considerably from one activity instance to another.

4. The capabilities and the environment of each executor may not be fixed. In addition, although two or more activity instances might be processed simultaneously within one executor, we restrict here the number of activity instances processed simultaneously to one.

Considering the first assumption, if each executor is treated as one Web service, many Workflow engines may use the executor's functionality, not only as a Web service but also in other forms. In this case, the processing requests in one executor's queue are most likely to have been sent by different Workflow engines. As each Workflow engine only knows about the activity instances it is responsible for managing, it is extremely difficult for it to know the length of the executor's queue. Therefore, the only execution time that the Workflow engine can determine for an activity instance is the time elapsed between the moment it sends a processing request to the executor and the moment the completion response is returned to it: this is the sum of the waiting time in the queue, the processing time in the executor, and the network delay.

Regarding the second assumption, when two or more kinds of activity instances can be processed by the same executor, it becomes very complicated to determine which activity instance has to wait and for how long. As a consequence, the estimated throughput of each executor should take into consideration every activity instance that the executor can process. As for the third assumption, it is necessary to keep in mind that the effect of the input processing load size on the processing time varies from one activity to another. In this paper, in computing the estimated throughput, we assume that the processing time is proportional to the size. Finally, the fourth assumption, that the capabilities and/or environment of each executor are not fixed, can be explained as follows. Simply put, the throughput obtained for a given activity instance may differ. Besides, the quality of the network (e.g., degraded performance after failures) and the degree of congestion of the executors can influence the environment in which activity instances are executed. As a consequence, processing delays, retries, and the like can occur.

These premises justify the need to define a scheduling strategy for load balancing in a WFMS. In this setting, the only information that every Workflow engine can use is the history of the already completed activity instances: the processing load size and the observed execution time.

In this paper, we propose to build on this history to define our scheduling strategy. This history also reflects the network situation and the executor processing environment. In addition, this information differs for every Workflow engine.
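One way an engine could collect such a history is to time each request from dispatch to response. The sketch below is an illustration only: `HistoryRecord`, `History`, `timed_request`, and the `send` callable (a stand-in for the blocking call to the executor's Web service) are hypothetical names, not part of the original system.

```python
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class HistoryRecord:
    executor: str         # executor that handled the request
    activity: str         # kind of activity
    size: float           # processing load size of the activity instance
    observed_time: float  # elapsed time as seen by the engine


class History:
    """Per-engine history of completed activity instances (hypothetical)."""

    def __init__(self, clock=time.monotonic):
        self.clock = clock
        self.records = []

    def timed_request(self, send, executor, activity, size):
        """Send a request and record the elapsed time until the response.

        The elapsed time includes queue waiting, processing, and network
        delay, because the engine cannot observe them separately.
        """
        start = self.clock()
        result = send(executor, activity, size)  # blocks until the response
        elapsed = self.clock() - start
        self.records.append(HistoryRecord(executor, activity, size, elapsed))
        return result
```

Because each engine keeps its own `History`, two engines sharing an executor may end up with different records for it, which matches the observation that the information differs for every Workflow engine.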

4 OXTHAS Scheduling Strategy

We propose OXTHAS as our scheduling strategy. In OXTHAS, scheduling is based on the expected throughput, which is computed so that it also reflects the environment variables as observed by each Workflow engine.

4.1 Estimated Processing Capacity

We first give several relevant definitions:

• Process instances: $P = \{p_1, \ldots, p_i, \ldots, p_l\}$

• Activities: $A = \{a_1, \ldots, a_j, \ldots, a_m\}$

• Executors: $E = \{e_1, \ldots, e_k, \ldots, e_n\}$

• Processing load size of $a_j$ from $p_i$: $s_{p_i,a_j}$

• Possibility of mapping $a_j$ from $p_i$ to $e_k$: $m_{p_i,a_j,e_k}$, which equals 1 if the mapping to the executor is possible and 0 otherwise.

• Observed execution time of $a_j$ from $p_i$: $t_{p_i,a_j}$

The observed execution time $t_{p_i,a_j}$ is the time elapsed from the moment a Workflow engine sends a request to an executor until the processing is finished and a response is sent back to the Workflow engine. Besides the processing time, it also includes the waiting time in the executor queue, the network delay, retry time, and so on. The expected throughput is defined from the above variables as follows:

$$
c_{e_k,a_j} = \frac{\displaystyle\sum_{p_i=1}^{l} \left( \frac{s_{p_i,a_j}}{t_{p_i,a_j}} \cdot m_{p_i,a_j,e_k} \right)}{\displaystyle\sum_{p_i=1}^{l} m_{p_i,a_j,e_k}}
$$

The expected throughput $c_{e_k,a_j}$ is the average workload processed per unit time, computed from the past processing times. The larger the estimate $c_{e_k,a_j}$, the larger the throughput of the executor. However, other factors may also make $c_{e_k,a_j}$ larger. For example, when an executor is seldom used, there is almost no waiting time, and its $c_{e_k,a_j}$ becomes larger than that of other executors. Moreover, if the activity instances that a certain executor has been executing are mostly of kinds for which it is well suited, this may also raise the throughput of its other activity instances and make $c_{e_k,a_j}$ larger for this executor.
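As a minimal sketch, the expected throughput can be computed from a list of history records as the mean of size divided by observed time. The function name `expected_throughput` and the tuple layout are assumptions made for illustration. The indicator $m_{p_i,a_j,e_k}$ is interpreted here as selecting the past instances of $a_j$ that were executed on $e_k$, which is one reading of the definition rather than something the text states explicitly.

```python
def expected_throughput(history, executor, activity):
    """Estimate c[e_k, a_j] as the mean of size / observed_time.

    `history` is an iterable of (executor, activity, size, observed_time)
    tuples. Keeping only the records of `executor` and `activity` plays
    the role of the indicator m in the formula (an interpretation).
    Returns None when the engine has no usable record yet.
    """
    rates = [
        size / t
        for ex, act, size, t in history
        if ex == executor and act == activity and t > 0
    ]
    if not rates:
        return None
    return sum(rates) / len(rates)


history = [
    ("e1", "a1", 10.0, 2.0),  # rate 5.0
    ("e1", "a1", 30.0, 3.0),  # rate 10.0
    ("e2", "a1", 8.0, 4.0),   # rate 2.0
]
print(expected_throughput(history, "e1", "a1"))  # (5.0 + 10.0) / 2 = 7.5
```

A seldom-used executor accumulates few records with short waiting times, so its estimate can look large for the reason given above, not because it is intrinsically faster.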

4.2 OXTHAS

4.2.1 OXTHAS-1: Basic Scheduling Strategy

As described previously, the larger the estimated processing capacity $c_{e_k,a_j}$, the larger the throughput of the executor. Thus, when dispatching an activity instance, the value of $c_{e_k,a_j}$ is calculated for each executor and the activity instance is passed to the executor with the largest value. This is the basis of our load-balancing strategy OXTHAS-1.
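The selection rule of OXTHAS-1 amounts to taking the executor with the largest estimate. The sketch below assumes the estimates have already been computed and are passed in as a dictionary; the function name `oxthas1` and the handling of executors without history (skipped) are illustrative choices, not taken from the original.

```python
def oxthas1(capacity):
    """Pick the executor with the largest estimated processing capacity.

    `capacity` maps executor name -> estimate of c for the activity being
    dispatched, or None when the engine has no history for that executor.
    """
    known = {e: c for e, c in capacity.items() if c is not None}
    if not known:
        raise ValueError("no executor has a capacity estimate yet")
    return max(known, key=known.get)
```

For example, `oxthas1({"e1": 7.5, "e2": 2.0, "e3": None})` selects `"e1"`.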

4.2.2 OXTHAS-N: Extended Scheduling Strategy

Simply assigning activity instances to the executor with the largest value of $c_{e_k,a_j}$ cannot be regarded as the best scheduling strategy, especially when dealing with a large number of process instances over a long scheduling time. Since $c_{e_k,a_j}$ is computed from the previous execution history, it shows a general tendency, but its value does not change flexibly over time. When a Workflow engine sends many requests for activity instances of the same kind at a given moment, all the requests are therefore dispatched to the executor with the highest $c_{e_k,a_j}$. As a result, the queue lengths become biased, and after a while the $c_{e_k,a_j}$ value of this executor falls dramatically. In the present work, to process activity instances more efficiently, we take the processing load size of each activity instance into account.

When the size of an activity instance is large, it should be assigned to the executor with the largest value of $c_{e_k,a_j}$. Conversely, when the size is small, the activity instance does not necessarily have to go to that executor; assigning it to another executor with a suitable value of $c_{e_k,a_j}$ decreases the bias and increases the efficiency. Accordingly, for the N candidate executors, we establish thresholds that divide the processing load size of activity instances. This is the main concept of our proposal OXTHAS-N, a load-balancing technique that assigns activity instances according to their processing load size. With $w_i$ the $i$-th size borderline, $n$ the number of divisions, and $S_{max}$ the maximum activity instance size in the past history, the threshold that divides the processing load size of an activity instance is:

$$
w_i = \frac{\displaystyle\sum_{j=1}^{i} j}{\displaystyle\sum_{k=1}^{n} k} \cdot S_{max}
$$
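The following sketch illustrates how size thresholds could drive the assignment. It rests on two assumptions that go beyond the text: the threshold is read as $w_i = \frac{1 + \cdots + i}{1 + \cdots + n} \cdot S_{max}$, and the band containing the largest sizes is mapped to the executor with the largest estimate, the next band to the second best, and so on. The names `size_thresholds` and `oxthas_n` are hypothetical.

```python
from bisect import bisect_left


def size_thresholds(n, s_max):
    """Borderlines w_1..w_n with w_i = (1 + ... + i) / (1 + ... + n) * s_max."""
    total = n * (n + 1) // 2
    return [s_max * (i * (i + 1) // 2) / total for i in range(1, n + 1)]


def oxthas_n(capacity, size, n, s_max):
    """Choose an executor for an activity instance of the given size.

    `capacity` maps executor name -> estimated c for this activity.
    Assumption: the n best executors are ranked by c, and larger size
    bands are sent to better-ranked executors.
    """
    ranked = sorted(capacity, key=capacity.get, reverse=True)[:n]  # best first
    if not ranked:
        raise ValueError("no candidate executor")
    bands = len(ranked)
    w = size_thresholds(bands, s_max)
    band = min(bisect_left(w, size), bands - 1)  # 0 = band of smallest sizes
    return ranked[bands - 1 - band]
```

With `n = 1` the function always returns the executor with the largest estimate, so under these assumptions OXTHAS-1 appears as a special case. With `n = 2` and `s_max = 90.0`, sizes up to 30.0 go to the second-best executor and larger sizes to the best one.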

5 Experimental Validation

5.1 Simulator architecture

To show the validity of our load-balancing strategy, we implemented a simulation system that simulates the WFMS architecture shown in Figure 3 on one computer. In our simulation, we measured the time needed to complete all the generated process instances as a number of steps: a step is defined as one round trip of the dashed line in Figure 3.

Figure 3: Simulator architecture (the PI Generator feeds the Workflow engines WE1, ..., WEi, ..., WEk, each with a PI table and a selectable strategy such as Round Robin or OXTHAS; the engines dispatch to the executors Ex1, ..., Exi, ..., Exn; the figure distinguishes data flow from processing flow)

First, the PI Generator generates process instances and sends them to the WEs. Whenever a WE finds instances in its queue, it pulls one out and passes it to an executor according to the chosen strategy. Whenever an executor finds an activity instance in its queue, it pulls it out and processes it. When the processing of the activity instance is completed, it is returned to the WE. This cycle is repeated along the dashed line, increasing the step count by one each time. We measure the step at which all the process instances are completed. In the present paper, we do not consider network delay or retries.

5.2 Simulation Description

5.3 Experimental Results and Discussion

We first vary the number of process instances and compare the results obtained with different algorithms: the round-robin algorithm, the random algorithm, OXTHAS-1, OXTHAS-2, and OXTHAS-3. Since the expected throughput is computed from historical data, we used the random algorithm for the first 1000 steps; each measurement was made 20 times and averaged. Next, we need to specify how much of the past invocation history is used to calculate the estimated processing capacity. The number of process instances for this experiment is set to 1000. Finally, the number of processing steps was measured for different settings of the executors' performance.

Figure 4: Used past history period variation (x-axis: load index computation period, 1000 to 15000; y-axis: total number of steps when processing finished, up to 70K; number of process instances = 1000; series: OXTHAS1, OXTHAS2, OXTHAS3)

We set the number of Workflow engines to two, and the same number of process instances is processed within each Workflow engine. Where each process instance is being processed is known only to the Workflow engine responsible for enacting it. As for the business processes, we used simple processes without branching. Moreover, in this paper we do not take network delay into consideration, and we assume that processing does not fail and terminates successfully. The processing performance of the executors, the sizes of the process instances and activity instances, and so forth are determined at random. The number of activity instances in every process instance was set to five. Finally, all the executors can carry out all kinds of activity instances.

First, we discuss how much of the past history needs to be considered in determining the expected throughput. Figure 4 shows a clear difference: the processing time is quite large when only a short period of the past history is used, and it levels off once the period reaches about 5000 to 6000 steps. When the whole past history is used, the number of processing steps becomes the smallest. We believe this is because computing the estimated processing capacity from a long history period eliminates the temporary influence of load bias and yields stable expected throughput values. Moreover, Figure 4 shows that it is better to use a past history period of at least 5000 to 6000 steps.
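Restricting the estimate to a recent history period can be sketched as follows. The function name `windowed_throughput`, the tuple layout, and the choice of measuring the period in simulation steps counted back from the current step are assumptions made for illustration; the text does not specify how the period is applied.

```python
def windowed_throughput(history, executor, activity, now, period=None):
    """Expected throughput using only records from the last `period` steps.

    `history` holds (step, executor, activity, size, observed_time) tuples,
    where `step` is the simulation step at which the request completed.
    `period=None` means the whole past history is used.
    """
    rates = [
        size / t
        for step, ex, act, size, t in history
        if ex == executor and act == activity and t > 0
        and (period is None or step > now - period)
    ]
    return sum(rates) / len(rates) if rates else None
```

A short `period` makes the estimate react quickly but also exposes it to temporary load bias, which is consistent with the explanation given for the larger step counts at short periods.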

In Figure 5, we vary the number of process instances and measure the processing time for the above-mentioned techniques: the random algorithm, round-robin, OXTHAS-1, OXTHAS-2, and OXTHAS-3. Even though our proposed expected throughput equation is rather simple and the strategy is based only on passing activity instance requests to executors with large values, Figure 5 shows that our proposal outperforms the round-robin algorithm. Furthermore, whichever OXTHAS variant is used, the results are always better than those of the round-robin and random algorithms. This indicates that by taking the size as a basis for assigning requests, the tendency to allocate activity instances to the same executor is decreased and the overall processing performance is notably improved. We can also see that using the proposed expected throughput together with the scheduling strategy is effective.

Figure 5: Variation of the processing time of process instances with different algorithms (x-axis: number of process instances per WE, 200 to 2000; y-axis: total number of steps when processing finished, 0 to 120K; series: RoundRobin, Random, OXTHAS1, OXTHAS2, OXTHAS3)

In Figure 6, we vary the executors' performance according to their capabilities and measure the processing steps. "One-set good" is the case in which only one executor has better processing performance than the others. Similarly, "good average bad" is the case in which executors with very good, average, and bad processing performance exist at the same time. "speciality" is the case in which an executor has particularly high processing performance for a certain activity instance. "almost all the same" means that the processing performance is almost the same for all executors. From Figure 6, we can say that OXTHAS-1 does not differ much from the round-robin or random algorithms. OXTHAS-2 and OXTHAS-3, on the other hand, are effective compared with the other techniques, except when there is almost no gap in processing performance. We therefore believe that our proposal is effective when the capabilities of the executors differ.

Figure 6: How throughput varies according to the executor capabilities (x-axis categories: One-set good, good average bad, speciality, almost all the same; y-axis: total number of steps when processing finished, 0 to 70K; number of process instances = 1000; series: RoundRobin, Random, OXTHAS1, OXTHAS2, OXTHAS3)

5.4 Related work

In [8], the authors considered the case in which a process instance is supplied to a Workflow engine for processing after a fixed period of time has elapsed. They proposed a load-balancing technique that reduces the waiting time before a Workflow engine starts processing a process instance by deciding to which Workflow engine the process instance is allocated. In their approach, the authors studied a distributed WFMS architecture with a distributed worklist management mechanism and a load-balancing subsystem. Although their load index achieves load balancing per process instance, balancing the load per activity instance becomes necessary for business processes that have a large number of activities or that contain activities with long processing times. Their work does not deal with load balancing of activity instances.

[9] proposed an algorithm for scheduling under resource constraints. It considers work assignment in a closed WFMS with only one Workflow engine, in which the shortest work to be processed is assigned first. However, when there are two or more Workflow engines, each aware only of its own assigned work, and the network changes dynamically, this algorithm cannot be used as it is.

6 Conclusions

In this paper, we proposed a load-balancing strategy for centralized Web services-based Workflow Management Systems. Specifically, we explained our model of a Workflow management system and considered the concept of a load index in the context of Web services-based Workflows. Our contribution lies in proposing a load index based on the history of the execution time and the workload of each activity instance. The proposed load index takes into consideration the differences in the environments in which the activity instances are enacted. Furthermore, based on the proposed concept of expected throughput, we proposed OXTHAS, our load-balancing strategy, which takes the processing load size of each activity instance into consideration. We then validated our proposal by implementing a simulation system.

As future directions, we intend to take into consideration the network delay and the reliability of executors. We may also implement an actual WFMS and compare its results with the simulator results.

References

[1] Workflow Management Coalition: http://www.wfmc.org/

[2] BPEL: http://www-106.ibm.com/developerworks/webservices/library/ws-bpel/

[3] WS-AtomicTransaction: http://www-106.ibm.com/developerworks/library/ws-atomtran/

[4] WS-Coordination: http://www-106.ibm.com/developerworks/library/ws-coor/

[5] WS-CAF: http://www.oasis-open.org/committees/tc_home.php/?wgabbrevws-caf

[6] Hideyuki Katoh, Takashi Kobayashi, and Haruo Yokota: Failures recovery in automatic workflow executions. In Proc. of 15th IEICE Data Engineering Workshop (DEWS2004), I-12-2, Mar (2004)

[7] Neila Ben Lakhal, Takashi Kobayashi, and Haruo Yokota: THROWS: An architecture for highly available distributed execution of web services compositions. In Proc. of RIDE WSECEG’2004, 103–110, Mar (2004)

[8] Li jie Jin, Fabio Casati, Mehmet Sayal, and Ming-Chien Shan: Load balancing in distributed workflow management system. In Proc. SAC2001, Aug (2001)

[9] K. Nonobe and T. Ibaraki: Formulation and tabu search algorithm for the resource constrained project scheduling problem. In Essays and Surveys in Metaheuristics, Kluwer Academic Pub (2002)