Document 17954101

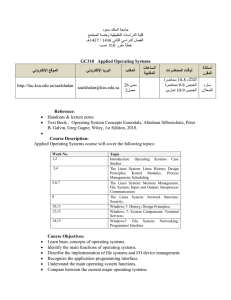