Group Modeling for fMRI

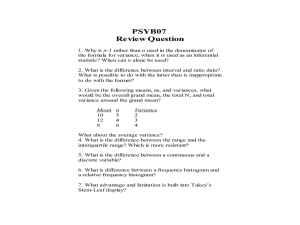

advertisement

Group Modeling

for fMRI

Thomas Nichols, Ph.D.

Assistant Professor

Department of Biostatistics

http://www.sph.umich.edu/~nichols

IPAM MBI

July 21, 2004



Overview

• Mixed effects motivation

• Evaluating mixed effects methods

• Case Studies

– Summary statistic approach (HF)

– SnPM

– SPM2

– FSL3

• Conclusions

Lexicon

• Hierarchical Models

• Mixed Effects Models

• Random Effects (RFX) Models

• Components of Variance

... all the same

... all alluding to multiple sources of variation (in contrast to fixed effects)

Random Effects Illustration

• Standard linear model $Y = X\beta + \epsilon$ assumes only one source of iid random variation

• Consider this RT data

• Here, two sources

– Within subject var.

– Between subject var.

– Causes dependence in $\epsilon$

[Figure: 3 Ss, 5 replicated RT’s. Panels: data and residuals for Subject1, Subject2, Subject3]
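The two sources of variation can be made concrete with a small simulation. This is only a sketch: the subject count, means and standard deviations are invented for illustration and are not the RT data on the slide. It fits a single grand mean, as the one-source model would, and shows that the residuals cluster by subject.

```python
import random
from statistics import mean, pvariance

def simulate_rts(n_subj=3, n_rep=5, grand_mean=500.0,
                 sd_between=50.0, sd_within=10.0, seed=0):
    """Simulate reaction times with two sources of variation (made-up values)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n_subj):
        subj_effect = rng.gauss(0.0, sd_between)          # between-subject source
        data.append([grand_mean + subj_effect + rng.gauss(0.0, sd_within)
                     for _ in range(n_rep)])              # within-subject source
    return data

def residual_summary(data):
    """Fit one grand mean, then split the residual spread by source."""
    grand = mean(x for subj in data for x in subj)
    resid = [[x - grand for x in subj] for subj in data]
    subj_means = [mean(r) for r in resid]
    between = pvariance(subj_means)              # spread of subject-mean residuals
    within = mean(pvariance(r) for r in resid)   # spread around each subject's own mean
    return subj_means, between, within

data = simulate_rts()
subj_means, between, within = residual_summary(data)
print("mean residual per subject:", [round(m, 1) for m in subj_means])
print("between-subject variance of residuals:", round(between, 1))
print("average within-subject variance:", round(within, 1))
```

If the residuals were iid, each subject's mean residual would sit near zero. Here they share a subject-specific offset, which is the dependence the slide refers to.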

Fixed vs. Random Effects in fMRI

• Fixed Effects

– Intra-subject variation suggests all these subjects different from zero

• Random Effects

– Intersubject variation suggests population not very different from zero

[Figure: distribution of each subject’s estimated effect (Subj. 1 to Subj. 6, spread $\sigma^2_{FFX}$) and distribution of population effect (spread $\sigma^2_{RFX}$), both shown relative to 0]

Fixed Effects

• Only variation (over subjects/sessions) is

measurement error

• True Response magnitude is fixed


Random/Mixed Effects

• Two sources of variation

– Measurement error

– Response magnitude

• Response magnitude is random

– Each subject/session has random magnitude

– But note, population mean magnitude is fixed


Fixed vs. Random

• Fixed isn’t “wrong,” just usually isn’t of interest

• Fixed Effects Inference

– “I can see this effect in this cohort”

• Random Effects Inference

– “If I were to sample a new cohort from the population I would get the same result”
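A rough numerical sketch of why the two inferences differ, for a single voxel. The per-subject estimates and the within-subject variance below are hypothetical numbers, and a balanced design with a common within-subject variance is assumed.

```python
from math import sqrt
from statistics import mean, variance

def ffx_rfx_standard_errors(estimates, within_var):
    """Standard error of the group mean under each model.

    estimates  : per-subject effect estimates (hypothetical values)
    within_var : variance of each subject's estimate from measurement error
                 alone, assumed known and equal across subjects
    """
    n = len(estimates)
    se_ffx = sqrt(within_var / n)           # measurement error only
    se_rfx = sqrt(variance(estimates) / n)  # spread over subjects: both sources
    return mean(estimates), se_ffx, se_rfx

estimates = [2.1, 0.3, 1.8, -0.4, 2.6, 0.2]   # hypothetical, 6 subjects
group_mean, se_ffx, se_rfx = ffx_rfx_standard_errors(estimates, within_var=0.04)
print("group mean:", round(group_mean, 3))
print("FFX statistic:", round(group_mean / se_ffx, 2))
print("RFX statistic:", round(group_mean / se_rfx, 2))
```

The same mean effect looks far more convincing under fixed effects, because the intersubject spread is left out of the standard error.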


Assessing RFX Models

Issues to Consider

• Assumptions & Limitations

– What must I assume?

– When can I use it?

• Efficiency & Power

– How sensitive is it?

• Validity & Robustness

– Can I trust the P-values?

– Are the standard errors correct?

– If assumptions off, things still OK?


Issues: Assumptions

• Distributional Assumptions

– Gaussian? Nonparametric?

• Homogeneous Variance

– Over subjects?

– Over conditions?

• Independence

– Across subjects?

– Across conditions/repeated measures?

– Note: Nonsphericity = (Heterogeneous Var) or (Dependence)

Issues: Soft Assumptions

Regularization

• Regularization

– Weakened homogeneity assumption

– Usually variance/autocorrelation regularized over space

• Examples

– fmristat - local pooling (smoothing) of $\sigma^2_{RFX}/\sigma^2_{FFX}$

– SnPM - local pooling (smoothing) of $\sigma^2_{RFX}$

– FSL3 - Bayesian (noninformative) prior on $\sigma^2_{RFX}$

Issues: Efficiency & Power

• Efficiency: 1/(Estimator Variance)

– Goes up with n

• Power: Chance of detecting effect

– Goes up with n

– Also goes up with degrees of freedom (DF)

• DF accounts for uncertainty in estimate of $\sigma^2_{RFX}$

• Usually DF and n yoked, e.g. DF = n-p

Issues: Validity

• Are P-values accurate?

– I reject my null when P < 0.05. Is my risk of false positives controlled at 5%?

– “Exact” control

• FPR = $\alpha$

– Valid control (possibly conservative)

• FPR $\le \alpha$

• Problems when

– Standard Errors inaccurate

– Degrees of freedom inaccurate
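One way to check validity is to simulate null data and count rejections. The sketch below uses a deliberately simple setting that is an assumption, not anything from the talk: a one-sided z-test on n Gaussian observations, where `assumed_sd` is the standard deviation the analyst plugs in and `true_sd` is the one that generated the data.

```python
import random
from math import sqrt
from statistics import NormalDist

def false_positive_rate(assumed_sd, true_sd=1.0, n=10, alpha=0.05,
                        n_sim=20000, seed=0):
    """Monte Carlo estimate of the FPR of a one-sided z-test under the null."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha)
    rejections = 0
    for _ in range(n_sim):
        sample = [rng.gauss(0.0, true_sd) for _ in range(n)]  # null: mean is 0
        z = (sum(sample) / n) / (assumed_sd / sqrt(n))
        rejections += z > z_crit
    return rejections / n_sim

print("correct standard error:", false_positive_rate(assumed_sd=1.0))
print("standard error too small:", false_positive_rate(assumed_sd=0.7))
print("standard error too large:", false_positive_rate(assumed_sd=1.5))
```

With the correct standard error the rejection rate should sit near the nominal 5%. Understating it gives liberal (invalid) inference, and overstating it gives valid but conservative inference.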


Four RFX Approaches in fMRI

• Holmes & Friston (HF)

– Summary Statistic approach (contrasts only)

– Holmes & Friston (HBM 1998). Generalisability, Random Effects & Population Inference. NI, 7(4 (2/3)):S754, 1999.

• Holmes et al. (SnPM)

– Permutation inference on summary statistics

– Nichols & Holmes (2001). Nonparametric Permutation Tests for Functional Neuroimaging: A Primer with Examples. HBM, 15:1-25.

– Holmes, Blair, Watson & Ford (1996). Nonparametric Analysis of Statistic Images from Functional Mapping Experiments. JCBFM, 16:7-22.

• Friston et al. (SPM2)

– Empirical Bayesian approach

– Friston et al. Classical and Bayesian inference in neuroimaging: theory. NI 16(2):465-483, 2002.

– Friston et al. Classical and Bayesian inference in neuroimaging: variance component estimation in fMRI. NI 16(2):484-512, 2002.

• Beckmann et al. & Woolrich et al. (FSL3)

– Summary Statistics (contrast estimates and variance)

– Beckmann, Jenkinson & Smith. General Multilevel linear modeling for group analysis in fMRI. NI 20(2):1052-1063 (2003)

– Woolrich, Behrens et al. Multilevel linear modeling for fMRI group analysis using Bayesian inference. NI 21:1732-1747 (2004)


Case Studies:

Holmes & Friston

• Unweighted summary statistic approach

• 1- or 2-sample t test on contrast images

– Intrasubject variance images not used (c.f. FSL)

• Procedure

– Fit GLM for each subject i

– Compute $c\hat\beta_i$, contrast estimate

– Analyze $\{c\hat\beta_i\}_i$
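The last step of the procedure can be sketched at a single voxel as an ordinary one-sample t test on the per-subject contrast estimates. The input values below are placeholders, not data from the talk, and the first-level GLM fits are assumed to have been done already.

```python
from math import sqrt
from statistics import mean, stdev

def one_sample_t(contrasts):
    """One-sample t statistic and degrees of freedom for per-subject contrasts."""
    n = len(contrasts)
    standard_error = stdev(contrasts) / sqrt(n)   # uses between-subject spread only
    return mean(contrasts) / standard_error, n - 1

# Placeholder contrast estimates, one per subject, at one voxel
contrasts = [1.0, 2.0, 3.0, 4.0, 5.0]
t_stat, dof = one_sample_t(contrasts)
print("t =", round(t_stat, 3), "on", dof, "degrees of freedom")
```

Note that no intrasubject variance enters the calculation. Only the spread of the contrast estimates over subjects is used, which is what makes the approach "unweighted".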

Case Studies: HF

Assumptions

• Distribution

– Normality

– Independent subjects

• Homogeneous Variance

– Intrasubject variance homogeneous

• $\sigma^2_{FFX}$ same for all subjects

– Balanced designs

Case Studies: HF

Limitations

• Limitations

– Only single image per subject

– If 2 or more conditions, must run separate model for each contrast

• Limitation a strength!

– No sphericity assumption made on conditions

– Though nonsphericity itself may be of interest...

[Figure from HBM Poster WE 253]

Case Studies: HF

Efficiency

• If assumptions true

– Optimal, fully efficient

• If $\sigma^2_{FFX}$ differs between subjects

– Reduced efficiency

– Here, optimal $\hat\beta$ requires down-weighting the 3 highly variable subjects

[Figure: subject effect distributions relative to 0, with $\hat\beta$ marked]

Case Studies: HF

Validity

• If assumptions true

– Exact P-values

• If $\sigma^2_{FFX}$ differs btw subj.

– Standard errors OK

• Est. of $\sigma^2_{RFX}$ unbiased

– DF not OK

• Here, 3 Ss dominate

• DF < 5 = 6-1

[Figure: subject effect distributions relative to 0, with $\sigma^2_{RFX}$ marked]
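The loss of degrees of freedom can be illustrated with a Satterthwaite-style approximation, $\nu \approx \mathrm{tr}(RV)^2 / \mathrm{tr}(RVRV)$, where $R$ is the residual-forming matrix of the one-sample model and $V$ is the diagonal matrix of per-subject variances. This approximation is brought in here for illustration and is not taken from the slide; the variances in the example are invented.

```python
def effective_df(subject_variances):
    """Satterthwaite-style effective DF for the one-sample variance estimate.

    subject_variances: total variance of each subject's contrast estimate
    (between-subject plus within-subject), treated as known.
    """
    v = list(subject_variances)
    n = len(v)
    # Residual-forming matrix for a mean-only model: R = I - (1/n) * ones
    r_diag, r_off = 1.0 - 1.0 / n, -1.0 / n
    trace_rv = r_diag * sum(v)
    trace_rvrv = sum((r_diag if i == j else r_off) ** 2 * v[i] * v[j]
                     for i in range(n) for j in range(n))
    return trace_rv ** 2 / trace_rvrv

print("homogeneous, 6 subjects:", effective_df([1, 1, 1, 1, 1, 1]))
print("3 highly variable subjects:", round(effective_df([1, 1, 1, 10, 10, 10]), 2))
```

With equal variances the result is the nominal n-1. When three subjects dominate, the effective DF falls below 5, in line with the slide's "DF < 5 = 6-1".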

Case Studies: HF

Robustness

• Heterogeneity of $\sigma^2_{FFX}$ across subjects...

– How bad is bad?

• Dramatic imbalance (rough rules of thumb only!)

– Some subjects missing 1/2 or more sessions

– Measured covariates of interest having dramatically different efficiency

• E.g. Split event related predictor by correct/incorrect

• One subj 5% trials correct, other subj 80% trials correct

• Dramatic heteroscedasticity

– A “bad” subject, e.g. head movement, spike artifacts


Case Studies: SnPM

• No Gaussian assumption

• Instead, uses data to find empirical distribution

[Figure: Parametric Null Distribution and Nonparametric Null Distribution, each with a 5% tail marked]

Permutation Test

Toy Example

• Data from V1 voxel in visual stim. experiment

A: Active, flashing checkerboard; B: Baseline, fixation

6 blocks, ABABAB. Just consider block averages...

Block:     A        B       A       B       A       B

Average:   103.00   90.48   99.93   87.83   99.76   96.06

• Null hypothesis Ho

– No experimental effect, A & B labels arbitrary

• Statistic

– Mean difference


Permutation Test

Toy Example

• Under Ho

– Consider all equivalent relabelings

– Compute all possible statistic values

AAABBB  4.82    ABABAB  9.45    BAAABB -1.48    BABBAA -6.86

AABABB -3.25    ABABBA  6.97    BAABAB  1.10    BBAAAB  3.15

AABBAB -0.67    ABBAAB  1.38    BAABBA -1.38    BBAABA  0.67

AABBBA -3.15    ABBABA -1.10    BABAAB -6.97    BBABAA  3.25

ABAABB  6.86    ABBBAA  1.48    BABABA -9.45    BBBAAA -4.82


Permutation Test

Toy Example

• Under Ho

– Consider all equivalent relabelings

– Compute all possible statistic values

– Find 95%ile of permutation distribution

[Figure: histogram of the permutation distribution, horizontal axis from -8 to 8]
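The three steps can be sketched directly in code. The block averages are the six values printed on the data slide. Because the statistics are recomputed from those printed values, they need not match the slide's table exactly. The function names are illustrative only.

```python
from itertools import combinations

blocks = [103.00, 90.48, 99.93, 87.83, 99.76, 96.06]  # block averages as printed
observed = "ABABAB"                                    # labeling actually used

def mean_difference(labels, values):
    """Mean of the A blocks minus mean of the B blocks."""
    a = [v for lab, v in zip(labels, values) if lab == "A"]
    b = [v for lab, v in zip(labels, values) if lab == "B"]
    return sum(a) / len(a) - sum(b) / len(b)

def permutation_distribution(values, n_a=3):
    """Statistic for every relabeling with n_a blocks labeled A."""
    dist = {}
    for a_idx in combinations(range(len(values)), n_a):
        labels = "".join("A" if i in a_idx else "B" for i in range(len(values)))
        dist[labels] = mean_difference(labels, values)
    return dist

dist = permutation_distribution(blocks)
t_obs = dist[observed]
# One-sided P-value: share of relabelings at least as extreme as the observed one
p_value = sum(t >= t_obs for t in dist.values()) / len(dist)
print(len(dist), "relabelings; observed statistic", round(t_obs, 2),
      "; P =", p_value)
```

With only 20 relabelings, the smallest attainable P-value is 1/20, which is what the observed labeling achieves here because it gives the largest mean difference.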

Case Studies: SnPM

Assumptions

• 1-Sample t on difference data

– Under null, differences distn symmetric about 0

• OK if $\sigma^2_{FFX}$ differs btw subjects!

– Subjects independent

• 2-Sample t

– Under null, distn of all data the same

• Implies $\sigma^2_{FFX}$ must be the same across subjects

– Subjects independent

Case Studies: SnPM

Efficiency

• Just as with HF...

– Lower efficiency if heterogeneous variance

– Efficiency increases with n

– Efficiency increases with DF

• How to increase DF w/out increasing n?

• Variance smoothing!


Case Studies: SnPM

Smoothed Variance t

• For low df, variance estimates poor

– Variance image “spiky”

• Consider smoothed variance t statistic

[Figure panels: mean difference, variance, t-statistic]

Permutation Test

Smoothed Variance t

• Smoothed variance estimate better

– Variance “regularized”

– df effectively increased, but no RFT result

[Figure panels: mean difference, smoothed variance, Smoothed Variance t-statistic]
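A minimal sketch of the idea on a one-dimensional row of voxels. A simple moving average stands in for whatever spatial smoothing kernel is actually applied, and the input values are made up. Only the variance image is smoothed; the mean difference is left alone.

```python
from math import sqrt

def moving_average(values, half_width=1):
    """Smooth a 1-D list with a boxcar window, truncated at the edges."""
    out = []
    for i in range(len(values)):
        window = values[max(0, i - half_width): i + half_width + 1]
        out.append(sum(window) / len(window))
    return out

def smoothed_variance_t(mean_diff, variance, n, half_width=1):
    """t-like statistic using a spatially pooled variance estimate."""
    smooth_var = moving_average(variance, half_width)
    return [m / sqrt(v / n) for m, v in zip(mean_diff, smooth_var)]

# Made-up example: flat mean difference, one "spiky" variance voxel
mean_diff = [1.0, 1.0, 1.0, 1.0, 1.0]
variance = [4.0, 4.0, 0.1, 4.0, 4.0]
n = 12
plain_t = [m / sqrt(v / n) for m, v in zip(mean_diff, variance)]
print("ordinary t:         ", [round(t, 2) for t in plain_t])
print("smoothed variance t:", [round(t, 2) for t in
                               smoothed_variance_t(mean_diff, variance, n)])
```

The resulting statistic no longer follows a t distribution with known DF, which is why the slide pairs it with permutation inference rather than RFT.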

Small Group fMRI Example

• 12 subject study

– Item recognition task

– 1-sample t test on diff. imgs

– FWE threshold

• t11 Statistic, RF & Bonf. Threshold

– uRF = 9.87, uBonf = 9.80

– 5 sig. vox.

• t11 Statistic, Nonparametric Threshold

– uPerm = 7.67 (from Permutation Distn of Maximum t11)

– 58 sig. vox.

• Smoothed Variance t Statistic, Nonparametric Threshold

– 378 sig. vox.

Working memory data: Marshuetz et al (2000)

Case Studies: SnPM

Validity

• If assumptions true

– P-values exact

• Note on “Scope of inference”

– SnPM can make inference on population

– Simply need to assume random sampling of population

• Just as with parametric methods!

Case Studies: SnPM

Robustness

• If data not exchangeable under null

– Can be invalid, P-values too liberal

– More typically, valid but conservative


Case Study: SPM2

• 1 effect per subject

– Uses Holmes & Friston approach

• >1 effect per subject

– Variance basis function approach used...


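SPM2 Notation: iid case

$y = X\beta + \epsilon$, with $y$: $N \times 1$, $X$: $N \times p$, $\beta$: $p \times 1$, $\epsilon$: $N \times 1$

Error covariance ($N \times N$): $\mathrm{Cor}(\epsilon) = \lambda I$

• 12 subjects, 4 conditions

– Use F-test to find differences btw conditions

• Standard Assumptions

– Identical distn

– Independence

– “Sphericity”... but here not realistic!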

Multiple Variance Components

$y = X\beta + \epsilon$, with $y$: $N \times 1$, $X$: $N \times p$, $\beta$: $p \times 1$, $\epsilon$: $N \times 1$

Error covariance ($N \times N$): $\mathrm{Cor}(\epsilon) = \sum_k \lambda_k Q_k$

• 12 subjects, 4 conditions

• Measurements btw subjects uncorrelated

• Measurements w/in subjects correlated

Errors can now have different variances and there can be correlations

Allows for ‘nonsphericity’
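To make the sum of variance components concrete, here is a sketch that builds two basis matrices for 12 subjects and 4 conditions and combines them. The choice of basis (an identity term plus a within-subject correlation term) and the weights are assumptions for illustration, not necessarily the basis set SPM2 uses. Observations are assumed to be ordered subject by subject.

```python
def variance_components(n_subj=12, n_cond=4):
    """Two illustrative basis matrices Q_k, observations ordered by subject."""
    n = n_subj * n_cond
    # Q_1: identity, a common variance for every observation
    q_iid = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    # Q_2: ones between different conditions of the same subject
    q_within = [[1.0 if i != j and i // n_cond == j // n_cond else 0.0
                 for j in range(n)] for i in range(n)]
    return [q_iid, q_within]

def combine(lambdas, components):
    """Weighted sum of the basis matrices, sum_k lambda_k * Q_k."""
    n = len(components[0])
    return [[sum(lam * q[i][j] for lam, q in zip(lambdas, components))
             for j in range(n)] for i in range(n)]

cov = combine([1.0, 0.5], variance_components())   # hypothetical weights
print("size:", len(cov), "x", len(cov[0]))
print("same subject, different condition:", cov[0][1])
print("different subjects:", cov[0][4])
```

The block-diagonal result encodes both bullet points on the slide: zero entries between subjects, nonzero entries within a subject.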

Non-Sphericity Modeling

Error Covariance

– Errors are independent but not identical

– Errors are not independent and not identical

Case Study: SPM2

• Assumptions & Limitations

– $\mathrm{Cor}(\epsilon) = \sum_k \lambda_k Q_k$ assumed to be globally homogeneous

– $\lambda_k$’s only estimated from voxels with large F

– Most realistically, $\mathrm{Cor}(\epsilon)$ spatially heterogeneous

– Intrasubject variance assumed homogeneous

Case Study: SPM2

• Efficiency & Power

– If assumptions true, fully efficient

• Validity & Robustness

– P-values could be wrong (over or under) if local $\mathrm{Cor}(\epsilon)$ very different from globally assumed

– Stronger assumptions than Holmes & Friston


FSL3: Full Mixed Effects Model

$Y = X_K \beta_K + \epsilon_K$   First-level, combines sessions

$\beta_K = X_G \beta_G + \epsilon_G$   Second-level, combines subjects

$\beta_G = X_H \beta_H + \epsilon_H$   Third-level, combines/compares groups

FSL3: Summary Statistics


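Summary Stats Equivalence

[Figure: the full mixed effects model and the summary statistics model both lead to inference on the same contrast, $c^T \beta_H$]

Crucially, summary stats here are not just estimated effects.

Summary Stats needed for equivalence:

– $\hat\beta$

– $\mathrm{cov}(\hat\beta)$

– dof

Beckmann et al., 2003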

Case Study: FSL3’s FLAME

• Uses summary-stats model equivalent to full Mixed Effects model

• Doesn’t assume intrasubject variance is homogeneous

– Designs can be unbalanced

– Subjects’ measurement error can vary
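Dropping the homogeneity assumption means each subject's estimate can be weighted by its own precision. The sketch below is a plain weighted least squares mean with the between-subject variance treated as known. It is not FLAME's Bayesian estimation, only an illustration of why passing up the variances matters. All numbers are invented.

```python
def weighted_group_mean(estimates, within_vars, between_var):
    """Precision-weighted group mean and its variance.

    estimates   : per-subject effect estimates
    within_vars : per-subject variance of those estimates (may differ)
    between_var : between-subject variance, assumed known here
    """
    weights = [1.0 / (between_var + v) for v in within_vars]
    total = sum(weights)
    beta = sum(w * b for w, b in zip(weights, estimates)) / total
    return beta, 1.0 / total

# Invented example: the third subject is far noisier than the others
estimates = [1.0, 1.0, 10.0]
within_vars = [1.0, 1.0, 100.0]
beta, var_beta = weighted_group_mean(estimates, within_vars, between_var=1.0)
print("unweighted mean:", sum(estimates) / len(estimates))
print("weighted mean:  ", round(beta, 3), "variance", round(var_beta, 3))
```

When all within-subject variances are equal the weights cancel and the result reduces to the unweighted mean used in the Holmes & Friston approach.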

Case Study: FSL3’s FLAME

• Bayesian Estimation

– Priors, priors, priors

– Use reference prior

• Final inference on posterior of $\beta$

– $\beta \mid y$ has Multivariate T distn (MVT) but with unknown dof

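Approximating MVTs

[Diagram: two routes from the model to the posterior $p(\beta \mid Y)$, which is MVT with unknown dof]

• FAST: Gaussian approximation based on a plug-in estimate

• SLOW: MCMC samples from $p(\beta \mid Y)$

• BIDET: fit an MVT to the samples to obtain the dof

BIDET = Bayesian Inference with Distribution Estimation using T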


Conclusions

• Random Effects crucial for pop. inference

• Don’t fall in love with your model!

• Evaluate...

– Assumptions

– Efficiency/Power

– Validity & Robustness
