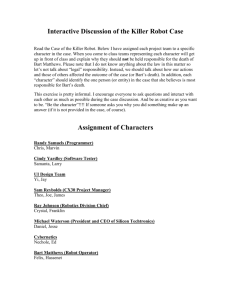

Rustan Leino: Good afternoon, everyone. Thank you all... have the pleasure to introduce Bart Jacobs, who has been...

advertisement

Rustan Leino: Good afternoon, everyone. Thank you all for coming. I'm Rustan Leino, and today I have the pleasure of introducing Bart Jacobs, who was an intern with us for a long stretch of time, almost a year in total, I think. He was extremely productive during those internships and we were sad to see him go, but it was good that he did, because he has gone on to do wonderful things in the years since. One of those achievements is that he worked on checkers for dynamic frames, for implicit dynamic frames. I think he had some of the implicit dynamic frames ideas while he was here, and he was trying to convince me that they were good ideas; in retrospect they were really great ideas. In the last several years he has been working quite a bit on his VeriFast verifier, which takes both C and Java as input. The programs are specified using separation logic. Now, separation logic is used in many contexts, but his tool really makes it a reality that you can use it to specify programs. And if you see Bart only every so often, you turn around and all of a sudden there are new, impressive features in the tool, and that happens constantly. So today he's going to tell us about one of those, which is, I think, modular termination tracking. He'll probably explain what it is.

Bart Jacobs: Thanks, Rustan. Actually, I'll be talking about four things: three new features, and one thing that is not new, a proof that all of it actually --

Rustan Leino: Done in the last month.

Bart Jacobs: I know. So let's see if we get through all of it. These are the things I will talk about. The first one is modular termination verification. Recently I added support to VeriFast for proving not just partial correctness but also total correctness of programs, so you can prove that programs terminate.
Or, if you don't want them to terminate, you can prove that they are live, that they are responsive. That's the first thing I will talk about. The second part is not really a new feature; it is more like the foundations of VeriFast. It is in the context of recent developments in the concurrency verification world. People have come up with very intricate and very sophisticated higher-order logics for specifying and verifying concurrency. But there is actually a very unsophisticated way of verifying fine-grained concurrency, and that's what is implemented in VeriFast. By the way, none of these sophisticated logics have tool support yet. They only exist in proof assistants, so you have to use them very laboriously and interactively. This one is supported in VeriFast, and I will talk about that. Thirdly, I will talk about provably live exception handling. Most programs that you write, at least in Java, are not live: in the presence of exceptions they might deadlock. In .NET it's slightly better, because since .NET 2.0, if a thread terminates with an unhandled exception, the entire process is killed. But that's still not quite the behavior you want. So in the third part I'll talk about how to deal with that and how to verify such programs. In the fourth part I will talk about verifying Hello World. We recently achieved verification of Hello World, after a long time. Usually people don't really concentrate on that kind of program: typically we only prove that programs don't crash; we don't prove that a program actually does something. So in the fourth part I will talk about features for specifying and verifying that a program does something useful. So let's first look at modular termination verification. For that I have a different slide deck. All right. This is work I did with people in the Netherlands. So first, what is the problem precisely?
It's not just termination verification, it's modular termination verification; that's what makes the approach somewhat new. People have known how to verify termination for a long time. It's harder to see how to do it in a modular way, especially in the presence of dynamic binding. If you have a programming language like Java or C# with interfaces, where the methods are virtual and therefore dynamically bound, how do you give contracts to these methods such that the proofs of the modules, each verified separately, together imply that the entire program terminates? That's the question. So, for example, how do we specify these methods? We want to reject, for example, this implementation, where the intersects method delegates the work to the object that's passed in as an argument. You want to check whether the receiver object intersects some other set, and to do that you ask the other set whether it intersects you. For partial correctness, that's perfectly fine. For total correctness, it's not, because you're not doing any work: if you instantiate such a set and call intersects on it, passing in the set itself, you get an infinite loop. So how do we catch that? Of course, one proof system is the one that accepts nothing. It cannot prove anything, so it's perfectly sound, but it's not useful. We want to accept many programs, including this one, where we have implementations of sets. There's the empty set, which doesn't contain anything and doesn't intersect with anything. There's the insert set, which equals some other set with one element inserted into it, so it contains an element x if x equals elem or the other set contains x. And this set intersects the other set if the other set contains (actually this should be elem instead of this) or if the underlying set intersects the other set. Okay, so that's fine.
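The set implementations discussed above can be modeled as follows. This is a Python sketch, not the talk's own code (which uses a Java-like language with Scala-style field declarations), and the class name `Delegating` for the implementation that should be rejected is hypothetical. Python has no termination checker, so the runaway recursion of the delegating version only shows up at run time, as a `RecursionError`.

```python
from abc import ABC, abstractmethod


class Set(ABC):
    """A set interface with a membership test and an intersection test."""

    @abstractmethod
    def contains(self, x) -> bool: ...

    @abstractmethod
    def intersects(self, other: "Set") -> bool: ...


class Empty(Set):
    """The empty set: contains nothing, intersects nothing."""

    def contains(self, x) -> bool:
        return False

    def intersects(self, other: Set) -> bool:
        return False


class Insert(Set):
    """set0 with one extra element, elem (field names as in the talk)."""

    def __init__(self, elem, set0: Set):
        self.elem = elem
        self.set0 = set0

    def contains(self, x) -> bool:
        return x == self.elem or self.set0.contains(x)

    def intersects(self, other: Set) -> bool:
        # Real work: test our own element, then recurse into the older set0.
        return other.contains(self.elem) or self.set0.intersects(other)


class Delegating(Set):
    """Hypothetical name for the implementation a termination checker must reject."""

    def __init__(self, set0: Set):
        self.set0 = set0

    def contains(self, x) -> bool:
        return self.set0.contains(x)

    def intersects(self, other: Set) -> bool:
        # No work at all: just ask the argument. Fine for partial correctness,
        # but s.intersects(s) calls itself forever.
        return other.intersects(self)


if __name__ == "__main__":
    s3 = Insert(2, Insert(1, Empty()))
    print(s3.intersects(s3))  # prints True
    bad = Delegating(Empty())
    try:
        bad.intersects(bad)
    except RecursionError:
        print("Delegating.intersects(self) never terminates")
```

Note that `Insert.intersects` always recurses into an object created earlier, which is the intuition the termination argument in the rest of the talk has to capture modularly.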
We also want to support a set that is built on top of other sets. For example, you might define the union of two sets this way, and then define yet another union on top of it. The reason I give this example is that you might think you can prove termination by looking at the size of the sets, which has to go down at each call. But here the size of the sets doesn't go down at each call, because you simply delegate to another class without doing any work yourself. But it's a class that's earlier in the program, and we also want to support that. So these are some examples that we want to support. Now, the proposed solution is built on top of separation logic. So first I'm going to quickly recall separation logic for object-oriented programs, before adding the new feature that I propose. How do we specify partial correctness for such an interface? What you typically do in separation logic is define a predicate that abstractly denotes the resources required by the set. More specifically, it denotes the memory locations that comprise the set's representation, that is, the fields of the objects that the set uses to store its own state. That's what this predicate represents. Then the contains method requires that you give it access to the set. Since contains doesn't modify the set, it requires only some fractional permission to it. We don't care how big the fraction is, so it's an underscore. This means: give me some fraction of the permission to access the set, so I can read the set but not modify it, and I will give back some fraction of the permission. The same for intersects: we require access to this set as well as to the other set, and we give it back. So now, what is the theory underlying that? It's as follows. Or actually, I think this title is just in the wrong place; we're already in the middle of explaining this. So what is an implementation of this interface? What does that look like?
Well, for example, for insert, we give a body to this predicate as follows. The representation of an insert object consists of the elem field, so we need permission to access the elem field (we don't care about its value) and, separately, permission to access the set0 field. By the way, this syntax for declaring a class is like Scala: you declare the fields of the class in parentheses after the class name. So we have access to the elem field, to the set0 field, as well as to the set object pointed to by set0. So you can have recursion here: set is defined in terms of set itself, for another object in this case. But since it's an inductive predicate, as long as the recursive occurrence is in a positive position, it always has a well-defined meaning. So this is how we define the representation of a set. Then the bodies of the methods can easily be verified against the specification, and the client [indiscernible] can also easily be verified against the specification. If we start with nothing, we know only true. Then we create an empty object, so we get that empty set. We create an insert object, so we get the fields of this insert object, and we can make this more abstract by summarizing it as set2.set. So we're folding up the set predicate for set2: this is the body of the set predicate for set2, and this is what we get if we fold it up. And then the same for set3. Now if we split this into two halves, we can call intersects with set3 itself as the argument. So here this program doesn't terminate, but it's safe, so its partial correctness specification holds; we can prove it with partial correctness even though this program doesn't terminate. Or actually, it does terminate, sorry. In this case it terminates because empty and insert terminate. So the syntax of assertions is as follows.
You have points-to assertions, predicate calls, separating conjunction, existential quantification, and the Boolean constants. Method definitions look as follows: a method header with a return type and parameter types, a precondition it requires, a postcondition it ensures, and a body. So what is the meaning of assertions? It's given in terms of an interpretation I for predicates and a heap H, which has the contents of the fields. The points-to assertion [indiscernible] is true if the tuple (O, F, V) is in the heap, that is, if O.F is in the domain of the heap and the heap maps O.F to V. A predicate application is true if the application is in I, the interpretation of the predicates. So I is a parameter here; we will see later how we can define a specific interpretation for predicates, but here it's just delegated to I. Separating conjunction is defined as follows: P1 * P2 holds in heap H if you can split the heap into H1 and H2, that is, H1 and H2 partition the heap, and P1 holds for H1 and P2 holds for H2.

>>: What is I?

Bart Jacobs: I is the interpretation for predicate applications. It's a set of tuples of this form. If I give you the set of all predicate applications that are true, then I can define the meaning of arbitrary assertions that contain predicate applications. We will see later how we actually define a particular I.

>>: It seems that you want both I and H to be functional, so that you cannot have (O, F, V) in [indiscernible] and also (O, F, V') for a different V.

Bart Jacobs: Yes, that will always be the case. That's right. H is actually going to be a function from (O, F) pairs to values, or actually a partial function. So this is just shorthand for saying that (O, F) is in the domain and (O, F) is mapped to V.

>>: H is partial?
Bart Jacobs: That's right.

>>: [Indiscernible].

Bart Jacobs: Well, it's a partial map from (object reference, field name) pairs to values. So one field of an object can be in the heap while another field of the same object is not. That will be useful later. Heaps actually represent permissions to access parts of the full heap: this might be the full heap, or it might be a partial view of the heap, just part of it. And that's exactly what we use with the separating conjunction: you interpret P1 in one part of H and P2 in another part of H. So if P1 talks about one field of an object, P2 cannot talk about the same field, but it can talk about another field of the same object. That's the core notion of separation logic.

>>: [Indiscernible]

Bart Jacobs: Not really. These predicates can also talk about the heap, because note that H is passed in: it's part of the tuple that either is or is not in I. So predicates that bundle up a number of points-to assertions thereby bundle up a part of the heap.

>>: [Inaudible]

Bart Jacobs: No, not necessarily: just because this tuple is in I doesn't mean that it's going to be in I if you take a smaller H.

>>: So I is just constant; it doesn't change as the program executes.

Bart Jacobs: That's right. It doesn't change.

>>: It's the interpretation of all the predicates, and H keeps changing.

Bart Jacobs: Yes.

>>: What about local variables?

Bart Jacobs: Local variables are not in here. A predicate definition cannot mention a local variable.

>>: But what I'm wondering is why, to the left of the turnstile, you have I and H; I was also expecting the valuation of local --

Bart Jacobs: Right. Typically you have that, but here I'm using a programming language that doesn't have mutable local variables; you only have read-only local variables.
So I assume the values have already been substituted into the assertions. That simplifies some things. But yes, in general, in the literature you typically have this. All right. So this is the meaning of assertions. Now, how do we define the meaning of predicates? As follows. Given an interpretation I for predicates, we can define a new interpretation F(I). Suppose we want to know whether a given tuple is in F(I). We look at the class of object O. If it's class C, we look at the definition of that predicate in class C, and we interpret its body under I and H. If that's true, then the tuple is in F(I). So we use I for the recursive calls in the body of the definition. Given this functor, so to speak, you can take the least fixed point, I_fix. The least fixed point of F is well defined because F is monotonic; that's the [indiscernible] theorem. So we have the property that F(I_fix) = I_fix, which means that it satisfies the equations given by the predicate definitions, so we can use it as a reasonable interpretation for predicates. On further slides I'll leave it out, so I_fix will be implicit from now on.

>>: Does this have something to do with the restriction you mentioned earlier, that predicates appear inside predicate definitions only in positive positions?

Bart Jacobs: That's right, yes. That's a crucial restriction to make this work.

>>: Okay.

Bart Jacobs: In this syntax there are no negative constructs: there's no implication or negation in the syntax of assertions, which [indiscernible] trivially that predicate calls occur only in positive positions.

>>: [Indiscernible].

Bart Jacobs: Right. No, you can use this for Boolean expressions.
So there are Boolean operators. If you have something that evaluates to either true or false once you substitute in the local variables, then that's fine; that's allowed. You can have false in here, right? But you can't have a negation or implication that has points-to assertions or predicate calls among its operands.

>>: So essentially you have [indiscernible] existential variables are like [indiscernible] at top level.

Bart Jacobs: Maybe, yeah.

>>: And what you said would be the case [indiscernible].

Bart Jacobs: Later I will show a way that you can still have negative facts about predicates in VeriFast, just not in this particular part of the talk. In the next part, the second chapter, so to speak, I will show how you can use ghost commands as proofs of negative facts. You will have nested triples [indiscernible] about ghost commands, and they can have a predicate in the precondition. In a sense, the precondition of a triple is a negative position. So in that way you can still have negative facts, so to speak, about predicates.

>>: Can I just ask for clarification?

Bart Jacobs: Sure.

>>: Some of these restrictions, I imagine, you're inheriting because you use separation logic, and some are probably because you want this well-founded definition of your [indiscernible] predicates. Am I correct?

Bart Jacobs: Well, the restriction to positive positions is because I wanted to take this fixed point. Because I want this --

>>: But you also said something about a [indiscernible] logic arrow thing that you cannot have here or there, right?

Bart Jacobs: The magic wand; I don't have it here.

>>: But that points to your problem that points-to cannot appear in some situations?

Bart Jacobs: Right. Points-to assertions also cannot appear in negative positions in this case. Actually, that's not even needed to be able to make this --

>>: Why do you have this restriction?
Bart Jacobs: Actually, I've never needed negative points-to assertions in any proofs, so I don't think it's particularly useful.

>>: Okay.

Bart Jacobs: All right. So now, how do we express the semantics of programs? You can use a coinductive semantics for that. If you run a command in a particular heap, it has an outcome, or possibly one of several outcomes. An outcome is either successful termination in N steps with result value V and final heap H, or failure in N steps, or divergence. We also define adding a natural number to an outcome: for a successful outcome, you just add it to the number of steps; the same for failure; and adding a number to divergence just gives divergence. Given those definitions, we can define the meaning of a call as follows. If you perform a call in heap H, you look up the method body in the class of [indiscernible], which is C; the body depends on the parameters X. If you execute this body with the arguments substituted for the parameters, you get some outcome, and the outcome of the call is 1 plus the outcome of the body. This should be interpreted as a coinductive rule, hence the double lines. That means that if you have infinite recursion, for example if the body of M is itself a call of M on the same object, then the only possible outcome is divergence. By the way, if I didn't have this 1 plus, if I just had 0 here, then all possible outcomes would be allowed by the semantics. That's why I have this 1 plus: so that infinite recursion can only yield divergence. All right. So given that we have a notation for the meaning of programs, how do we define the meaning of a triple? That's defined as follows.
For partial correctness: for any heap where the precondition holds, if we get an outcome O, then either O is divergence, or O is successful termination such that the postcondition holds in the post-heap with the result value substituted in. It's the obvious meaning, right? A triple is true if, whenever you start in a state that satisfies the precondition and running the command terminates, you end up in a state that satisfies the postcondition. That's just what it says there, nothing else. [Indiscernible] allow divergence here, because this is a partial correctness semantics, a partial correctness proof system. Then we want to prove soundness: if we can prove a triple using the proof rules, then it is true. I didn't show any proof rules here, but they are standard. So how do you prove this theorem? You prove it by induction on the number of execution steps, using the following lemma: for every number of steps N, given that we reach an outcome O, it will not be failure in N steps, and if we successfully terminate in N steps, then the postcondition is satisfied. You prove this lemma by induction on the number of steps, and then by [indiscernible] induction on the derivation of the proof itself. So how do we extend this logic to deal with total correctness as well? We add just one thing: a call permission. In a first approximation, it is qualified by a natural number, and a call permission of N simply means that we have permission to perform N calls. Given that, we interpret an assertion under I and H as before, but also under N', the number of call permissions available. Of course, if you claim that you have a call permission of N, then N' has to be at least N; having additional call permissions is fine. Separating conjunction is also interpreted in the obvious way.
We have to split not just the heap but also the call permissions into two parts, such that P1 holds for N1 and P2 holds for N2. So these call permissions are, of course, not duplicable. Then the proof rule for method calls is extended so that it consumes one call permission at each call: one call permission is taken out of the program's stock at each call, and it's gone. Since there are only finitely many call permissions at the start of the program, it follows that the program can perform only finitely many calls. It's very simple. So how do we prove soundness for this? The meaning of a triple is exactly as before, except that the disjunct that allows divergence is gone. And how do we prove that, given the proof rules, the triple is true? We use a lemma that performs induction not on the number of steps but on the number of calls, so N is now the number of calls, and that also works out. Nested inside, we also look at the size of the command. The only command whose size doesn't decrease through execution is a call, and calls are dealt with using call permissions. That's why this is a valid way of doing induction. All right. So now, how do we use these call permissions for modular specifications? What you don't want, of course, is for main to request at the top call permissions for exactly the number of calls that this particular program will make. That's not modular: you'd be revealing exactly how many calls you make throughout the program. So consider this very simple program. We have a main method that calls square root, and square root calls square root helper some number of times.
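The semantics of assertions described above, extended with a count of call permissions, can be sketched as a small evaluator. This Python sketch is an illustration under simplifying assumptions, not VeriFast's implementation: predicate applications and existential quantifiers are left out, heaps are dictionaries from (object, field) pairs to values, and the call permissions are a single natural number. As in the talk, a points-to assertion holds if the cell is in the (partial) heap with the right value, and a separating conjunction must split both the heap and the permission count between its conjuncts.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import Dict, Iterator, Tuple, Union

Loc = Tuple[str, str]       # (object reference, field name)
Heap = Dict[Loc, object]    # partial map: only the cells we hold permission for


@dataclass(frozen=True)
class PointsTo:             # o.f |-> v
    obj: str
    field: str
    value: object


@dataclass(frozen=True)
class CallPerm:             # a call permission qualified by a natural number n
    n: int


@dataclass(frozen=True)
class Star:                 # separating conjunction P1 * P2
    left: "Assertion"
    right: "Assertion"


@dataclass(frozen=True)
class BoolConst:            # true / false
    value: bool


Assertion = Union[PointsTo, CallPerm, Star, BoolConst]


def splits(heap: Heap) -> Iterator[Tuple[Heap, Heap]]:
    """Yield every partition of `heap` into two disjoint sub-heaps h1, h2."""
    keys = list(heap)
    for r in range(len(keys) + 1):
        for chosen in combinations(keys, r):
            h1 = {k: heap[k] for k in chosen}
            h2 = {k: v for k, v in heap.items() if k not in h1}
            yield h1, h2


def holds(p: Assertion, heap: Heap, perms: int) -> bool:
    """Does assertion p hold in partial heap `heap` with `perms` call permissions?"""
    if isinstance(p, BoolConst):
        return p.value
    if isinstance(p, PointsTo):
        loc = (p.obj, p.field)
        return loc in heap and heap[loc] == p.value
    if isinstance(p, CallPerm):
        return perms >= p.n  # holding extra permissions is fine
    if isinstance(p, Star):
        # Split the heap AND the permission count between the two conjuncts.
        return any(
            holds(p.left, h1, n1) and holds(p.right, h2, perms - n1)
            for h1, h2 in splits(heap)
            for n1 in range(perms + 1)
        )
    raise TypeError(f"unknown assertion: {p!r}")


h = {("s", "elem"): 1, ("s", "set0"): "e"}
# Two different fields of the same object: fine.
assert holds(Star(PointsTo("s", "elem", 1), PointsTo("s", "set0", "e")), h, 0)
# The same field twice: impossible, because the sub-heaps must be disjoint.
assert not holds(Star(PointsTo("s", "elem", 1), PointsTo("s", "elem", 1)), h, 0)
# Call permissions cannot be duplicated either.
assert holds(Star(CallPerm(1), CallPerm(1)), {}, 2)
assert not holds(Star(CallPerm(1), CallPerm(1)), {}, 1)
```

The brute-force enumeration of splits is exponential in the heap size; it is only meant to make the definition concrete, not to be an efficient checker.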
A naive way to perform modular verification of this program is to say: square root helper doesn't perform any calls itself, so it requests a call permission of zero. That's really the same as true, because we're not requesting anything. Square root performs two calls, so it requests a call permission of two. And main calls square root, which itself needs two call permissions, so main needs three call permissions. Right? Okay, so that's a valid spec and a valid proof. But it's not modular: it reveals information that we want to hide. So how do we hide it? One other problem is that it becomes very tricky to specify Ackermann. If you want to write a requires clause for a main method that calls the Ackermann function, a well-known function that performs a lot of recursion, then you need to specify the number of calls that Ackermann performs. That's quite tricky. You can do it, but it's not something you want to do. So you want to be able to abstract over the precise number of calls that are made. Of course, when I talk about Ackermann, you can see me coming: I'm going to talk about ordinal numbers, about lexicographic orderings. In general we will look at well-founded orders. I'm sure all of you know what a well-founded order is, but let's quickly recall. A relation R, not necessarily even an order, is well-founded if every non-empty subset of the domain of R has a minimal element. So for each non-empty set X, there is some element of X such that no element of X is less than it; that's minimality, of course. That's the same as saying that there are no infinite descending chains in R. Of course, the natural numbers are well-founded. Given two well-founded relations, we can define the lexicographic composition of these relations.
Here we assume that the second component is the most significant one. So either the second component of the pair decreases, or the second component stays the same and the first one decreases; in either case we consider the new pair to be less than the old one. So (10, 2) is less than (1, 3). The other thing you can do is the multiset order, denoted omega to the X: the order over multisets of elements of X. It is defined as follows: you get from one multiset to a smaller one by removing any element and replacing it with any number of smaller elements. That's how you move down in the order over multisets. This is what we will use in our proofs: we will qualify call permissions not just with natural numbers, but with ordinals. So we interpret assertions under I, H, and lambda, where lambda is a multiset of ordinals. Each element of lambda is a call permission qualified by some ordinal. So a call permission of alpha holds under some lambda if alpha is an element of lambda. Separating conjunction is interpreted as follows: we have to be able to split lambda so that one part satisfies P1 and the other part satisfies P2. We also allow the program to perform ghost steps that weaken lambda: if you have a certain stock of call permissions, you can replace any of them by any number of lesser call permissions. That is denoted by the square subset symbol. So we can go from a state satisfying assertion B to one satisfying assertion B' if B' is satisfied by a smaller lambda than B. For example, we then have the following laws, or facts. A call permission of 1 can be weakened to a call permission of 0 * a call permission of 0. Another example: a call permission of (0, 1) can be weakened to a call permission of (10, 0) * a call permission of (20, 0). And a call permission of the multiset {3} can be weakened to a call permission of {2, 2, 2} * a call permission of {1}.
That's because both of these multisets are smaller than {3}.

>>: Is this lambda prime less than or equal to [indiscernible]?

Bart Jacobs: Less than or equal. This prime is the same one. It's the ordinary implication of assertions: one assertion implies another assertion, right? There's no star there. So how do we now use this to specify this program abstractly? The trick here is to use method names as ordinals. We define an order on method names simply by the textual order of the program. So square root helper is the minimal element, square root is greater than square root helper, and main is greater than both square root and square root helper. Using this order, we use the method names as the set of ordinals. In all the programs you will see from now on, main simply requires a call permission qualified by its own name. So the specification of main doesn't reveal anything about what the program does internally or how many calls it makes; it always simply requires main. More generally, any method simply requires a call permission qualified by its own name: square root requires a call permission of square root, and square root helper requires a call permission of square root helper. So if main wants to call square root, it needs to weaken its own call permission to two call permissions of square root. Why two? One of them is consumed by the call itself. Remember the proof rule we saw before: a call is valid if the precondition holds and, separately, we have an extra call permission, which is consumed and lost forever. That's why main has to be weakened to two instances of square root: one of them is lost, and the other is passed into the body of square root to satisfy its precondition.
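The orders and the ghost weakening step used above can be made concrete. In this hedged Python sketch, `lex_less` orders pairs with the second component most significant, `multiset_less` implements the multiset order through a standard equivalent characterization of the "replace an element by smaller ones" step (Dershowitz-Manna order, in Huet-Oppen form), and `can_weaken` checks whether a stock of call permissions can be weakened to another. The function names and the `ORDER` list for the square-root program are illustrative assumptions, not VeriFast syntax.

```python
from collections import Counter
from typing import Callable, Hashable, Iterable, Tuple

Less = Callable[[Hashable, Hashable], bool]


def lex_less(a: Tuple[int, int], b: Tuple[int, int]) -> bool:
    """Lexicographic order on pairs; the SECOND component is most significant."""
    return a[1] < b[1] or (a[1] == b[1] and a[0] < b[0])


def multiset_less(m: Iterable[Hashable], n: Iterable[Hashable], less: Less) -> bool:
    """Multiset order: m < n iff m != n and every element that m has more copies of
    than n is dominated (under `less`) by some element that n has more copies of."""
    cm, cn = Counter(m), Counter(n)
    if cm == cn:
        return False
    return all(
        any(less(x, y) and cn[y] > cm[y] for y in cn)
        for x in cm
        if cm[x] > cn[x]
    )


def can_weaken(have: Iterable[Hashable], want: Iterable[Hashable], less: Less) -> bool:
    """Ghost weakening: is `want` reachable from the stock `have` by replacing call
    permissions with any number (possibly zero) of strictly smaller ones?"""
    have, want = list(have), list(want)
    return Counter(have) == Counter(want) or multiset_less(want, have, less)


def int_less(a: int, b: int) -> bool:
    return a < b


def bag_less(a: Tuple[int, ...], b: Tuple[int, ...]) -> bool:
    """Ordinals that are themselves multisets of naturals (omega to the N)."""
    return multiset_less(a, b, int_less)


# Hypothetical textual order of the square-root program: earlier = smaller.
ORDER = ["sqrt_helper", "sqrt", "main"]


def name_less(a: str, b: str) -> bool:
    return ORDER.index(a) < ORDER.index(b)


# The weakening examples from the talk.
assert lex_less((10, 2), (1, 3))
assert can_weaken([1], [0, 0], int_less)
assert can_weaken([(0, 1)], [(10, 0), (20, 0)], lex_less)
assert can_weaken([(3,)], [(2, 2, 2), (1,)], bag_less)
# main trades its own permission for two sqrt permissions (one is consumed by the call).
assert can_weaken(["main"], ["sqrt", "sqrt"], name_less)
```

The key property is visible in `can_weaken([1], [1, 1], int_less)`, which is false: weakening can trade a permission for arbitrarily many smaller ones, but never duplicate one.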
And square root itself will also weaken its own permission to two copies of square root helper. Square root helper simply doesn't use its call permission, but that's fine, of course. So now, how do we specify and verify Ackermann? The general rule is that recursive functions or recursive methods should be internal to a module. That means that the specification of the recursive method itself doesn't have to be abstract: it's okay for it to reveal the structure of the implementation, to be implementation-specific. What you then do is have a separate public method that has an abstract specification. So ackermann itself simply requires a call permission for Math.ackermann. Here we have given a little more structure to the call permissions: we use not just method names, but also local ranks. So now the ordinals used to qualify call permissions are pairs of local ranks and method names, and the local ranks are the usual kind of ranking functions that you use to verify termination of recursive methods. Yes?

>>: Does this mean that when I implement an interface, I need to respect the order in which the interface methods were declared in the interface? Because in my implementation they're going to have to be in the same order to get the same ordinals?

Bart Jacobs: Yes, but you can easily work around that by having the implementations of the interface methods call into other, private methods that are declared above all the interface method implementations.

>>: [Indiscernible].

Bart Jacobs: They are all less than the implementation methods, and therefore you can call any of them from any of the interface methods. So you simply duplicate all of the methods, and it's not a problem. It's not a fundamental restriction. So how do we then verify Ackermann?
For ackermann itself, the general rule is that public methods simply require a call permission qualified by (0, their own name). So this doesn't reveal any information about the implementation. The recursive method, in turn, requires a call permission for ackermann iter with the local rank that is appropriate for Ackermann, namely the two arguments, ordered lexicographically. So ackermann weakens its own permission to ((m, n), ackermann iter), which is less than (0, ackermann) because the second component is the most significant one and ackermann iter is less than ackermann. That's why it can choose anything for the local rank when it performs this weakening. Okay, then the really hard part is dynamic binding. Yes?

>>: [Indiscernible].

Bart Jacobs: Mutual recursion is handled by having each of the mutually recursive methods request permission for the bottom one, or the greatest one. There's an example like that in the paper. So how do we handle dynamic binding? Here I have another example that uses dynamic binding. We have an interface for a real function, real func, which you can apply to a double to get another double. Then we have a static method, integrate, that computes an approximation of the integral of f between some bounds a and b, so it's going to have to call f a number of times. How do we specify these methods? Here's a first attempt. Actually, no, this is just a partial correctness specification like the ones we saw before. We define a predicate in real func, and apply requires this predicate. It doesn't actually give anything back: since this is just a fraction of the permission, the caller can always keep another fraction for further calls, so apply doesn't have to give anything back. Integrate requires a fractional permission for f. In the partial correctness setting, this is sufficient: it allows integrate to call apply any number of times. Now, if you also want to prove termination, how do we do this?
Of course apply will want to be able to perform static calls of things declared earlier, like we saw before: square root needs to be able to call square root helper. So by the ordinary rule that we saw before, apply should request a call permission for 0 comma its own name. And this dot apply here actually means the apply method of the class of this. So this is not a name for the interface method itself; this is the name of the class method that implements that interface method. So then integrate should, by the ordinary rule that we saw before, request call permission for itself, so that it can call methods before it, but also call permission for F dot apply. However, this is not sufficient. Specifically, consider the following implementation of integrate. Integrate will call a recursive helper function that applies F to some value between A and B, and then recursively calls itself again, as you would expect. Now, the problem is that this spec allows F dot apply to be called only once. Because if you go back to the spec, F dot apply requires the full call permission that we're requesting here. So at the first call to F, that permission will be completely consumed. So this is not sufficient. Specifically, integrate iter will need N copies of the call permission for F dot apply, for N being the recursive argument here that is descending during the recursion. So how do we fix that? Of course we could just put omega here, the first infinite ordinal. But that restrains the implementation. For example, if you want to call this inside of ackermann, then omega is not enough; you would need something bigger than omega. So can we put the biggest ordinal? But there is no such thing as the biggest ordinal. So there's no good modular way to fix this without again using method names. And I will show how.
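A minimal Java sketch of the integration example, assuming a midpoint rule and an explicit step count `n` (neither is fixed by the talk; `Integration` and the parameter `n` are hypothetical). It makes the counting argument concrete: `integrateIter` performs one dynamically bound `f.apply` call per level of recursion, so `n` levels need `n` call permissions for `f.apply`, which a single permission cannot provide.

```java
/** The interface from the talk: apply maps a double to a double. */
interface RealFunc {
    double apply(double x);
}

final class Integration {
    private Integration() {}

    /** Approximates the integral of f over [a, b] using n midpoint samples. */
    static double integrate(RealFunc f, double a, double b, int n) {
        if (n <= 0) throw new IllegalArgumentException("n must be positive");
        double h = (b - a) / n;
        return h * integrateIter(f, a, h, n);
    }

    /**
     * Sums f at the midpoints of the first n sub-intervals. n is the descending
     * local rank; each level makes exactly one call to f.apply.
     */
    private static double integrateIter(RealFunc f, double a, double h, int n) {
        if (n == 0) return 0.0;
        return f.apply(a + (n - 0.5) * h) + integrateIter(f, a, h, n - 1);
    }
}
```

A counting lambda passed as `f` would observe exactly `n` calls to `apply`, one per recursion level.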
So the way to do that is to use as ordinals not pairs of local ranks and method names, but pairs of local ranks and bags of method names, so multisets of method names. You do it as follows. For F dot apply itself, we simply require the singleton multiset of this dot apply. But for integrate we will require the multiset containing two elements: integrate itself, and F dot apply. And this allows integrate to weaken this permission to an arbitrary local rank when it goes from integrate to integrate iter. So when you weaken integrate, F dot apply to integrate iter, F dot apply, you can choose an arbitrary local rank. And this allows you to perform this call, and here you can perform any number of calls that you need. Now we still haven't achieved a fully general specification, because given this permission, F dot apply can only perform static calls. It cannot perform any dynamically bound calls on objects that it refers to through its fields. So if it has a reference to another object, then it cannot call that object, because it doesn't know that the methods of that object are statically below itself. So here too we need to pass not just this dot apply but some arbitrary extra set of method names. So here is an example of an implementation of real func that calls into other objects. This real func is defined as the sum of two other real funcs: when you apply it, it will call F1 dot apply plus F2 dot apply. So that one isn't supported by the [indiscernible] that we just saw. So how do we fix that? By the way, this is the partial correctness specification of the sum class. Its predicate real func will have the permission to access field F1 and it will have the real func predicate for F1. It will also have permission to access F2, and the real func predicate for F2. And as I said, this is not going to be enough to verify this body. What we then do is we expose from the real func predicate the bag of methods reachable from the object.
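For reference, the sum class described above might look like this in plain Java; `RealFunc` is redeclared so the block stands alone, and the field and class names are assumptions. The point is that `Sum.apply` makes dynamically bound calls on the objects held in its fields, whose methods it cannot know to be statically below itself.

```java
/** The interface from the talk: apply maps a double to a double. */
interface RealFunc {
    double apply(double x);
}

/** A real func defined as the pointwise sum of two other real funcs. */
final class Sum implements RealFunc {
    private final RealFunc f1;
    private final RealFunc f2;

    Sum(RealFunc f1, RealFunc f2) {
        this.f1 = f1;
        this.f2 = f2;
    }

    @Override
    public double apply(double x) {
        // Both targets are chosen at run time; a permission for Sum.apply
        // alone says nothing about the methods of f1 and f2.
        return f1.apply(x) + f2.apply(x);
    }
}
```

Sums can nest arbitrarily (a `Sum` of `Sum`s), so the set of methods reachable from one `apply` call depends on how the object graph was built.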
So each object will expose to its clients, abstractly, the bag of methods reachable from it. This in itself sounds like it breaks information hiding, but it doesn't, because we're not saying anything about what this bag is; we just allow the client to name it, to mention it in specifications, but we're not going to say anything about what is in there and what is not in there. So the spec for apply now becomes as follows. Just as for partial correctness, we have a fractional permission on this dot real func, and now we bind this method bag D, and then we say we have call permission for all of those methods D. So this is a multiset. This will of course include apply itself. So for the sum we define it as follows. >>: [Indiscernible] it's a logical variable? Bart Jacobs: Yes. That's right. So how do we define the predicate for the sum example? As before, sum needs to have access to F1 and to the real func of F1. And now we name the method bag of F1 as D1. We name the method bag of F2 as D2, and our own D is going to be our own apply method, multiset union D1, multiset union D2. And this way apply can call all of the methods of F1 and F2, and it can call statically higher methods of its own. So now the proof is easy: both D1 and D2 are smaller than D, because at least you remove this element, so you can weaken this call permission to all of the call permissions required for the recursive calls. So this becomes the general specification for real func. And the specification for integrate now becomes as follows. We need the real func predicate for F. It will have some bag of methods D, and we need call permission for integrate itself, multiset union D. So these are actually all of the methods reachable by integrate: integrate represents the methods statically above integrate, and D represents the methods reachable through the F object. So we can prove the implementation of integrate as follows.
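The comparisons on method bags in this argument are instances of the multiset ordering. The following hypothetical Java model assumes a global total order on method names (the `ORDER` list is invented for illustration) and relies on the standard fact that, for a total order, the multiset ordering coincides with lexicographic comparison of the bags sorted in descending order. It can check that `D1` and `D1 union D2` lie below `D = {Sum.apply} union D1 union D2`, and that integrate may weaken `{integrate} union D` to `{integrateIter} union D`.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical model of method bags (multisets of method names). */
final class MethodBags {
    private MethodBags() {}

    // Assumed global order, earlier in the list = statically lower.
    static final List<String> ORDER =
            List.of("Linear.apply", "Sum.apply", "integrateIter", "integrate", "main");

    /** Maps each name to its position in ORDER, sorted from largest to smallest. */
    static List<Integer> sortedDesc(List<String> bag) {
        List<Integer> ranks = new ArrayList<>();
        for (String m : bag) {
            int r = ORDER.indexOf(m);
            if (r < 0) throw new IllegalArgumentException("unknown method " + m);
            ranks.add(r);
        }
        ranks.sort(Collections.reverseOrder());
        return ranks;
    }

    /**
     * True iff bag a is strictly below bag b in the multiset ordering: compare
     * the descending sequences lexicographically; a proper prefix is smaller.
     */
    static boolean less(List<String> a, List<String> b) {
        List<Integer> x = sortedDesc(a);
        List<Integer> y = sortedDesc(b);
        for (int i = 0; i < Math.min(x.size(), y.size()); i++) {
            int c = Integer.compare(x.get(i), y.get(i));
            if (c != 0) return c < 0;
        }
        return x.size() < y.size();
    }

    /** Multiset union: duplicates are kept. */
    @SafeVarargs
    static List<String> union(List<String>... bags) {
        List<String> out = new ArrayList<>();
        for (List<String> bag : bags) out.addAll(bag);
        return out;
    }
}
```

In this ordering, removing an element or replacing it with any finite number of smaller elements always yields a smaller bag, which is what the weakening steps in the proof rely on.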
Integrate iter will require a call permission with local rank N and method bag integrate iter union D. So what is some client code that uses this specification? We have a program main that will perform the integration of some linear function defined by some coefficient A and some constant B. So it will create a number of those linear functions, then create a sum object of those linear functions, and then call integrate. So how do we verify total correctness of this client program? First, linear itself will have to have an implementation of the real func predicate. That's easy: it has to have access to A and B, and its own method bag will simply be its own apply method. And then the proof outline for the main function looks as follows. Main simply requires call permission for itself. If we create a linear object, we get a real func with method bag linear dot apply. We create another one, so we also have a real func with linear dot apply for F2. Then we create a sum, and we have a real func for the sum with method bag sum dot apply and two copies of linear dot apply. And we can then weaken the call permission for main to a call permission for integrate, sum dot apply, and two times linear dot apply, which allows us to perform this integrate call. >>: So does this work because all of these implementations know statically how many method calls they need to make? Suppose that instead of a linear function it was some dynamic structure, and it needed to call as many as the [indiscernible] in its dynamic chain. Bart Jacobs: Right, but isn't that like the sum object? Sum is built from linear objects, and it doesn't itself know that it's built from linear objects? >>: [Indiscernible]. Bart Jacobs: Well, they can themselves again be sum [indiscernible], for example. >>: Yeah, that's true. Bart Jacobs: Right, this is actually, I think, the end. Well, actually there's one more problem remaining.
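The client described here can be sketched in Java as follows. As an assumption for illustration only, each `RealFunc` exposes its method bag at run time through a hypothetical `methodBag()` method; in the verifier the bag is a ghost value that is only mentioned in specifications, never computed. The midpoint-rule `integrate`, its step count, and the name `Client.run` (standing in for main) are likewise assumptions.

```java
import java.util.ArrayList;
import java.util.List;

/** RealFunc with a hypothetical runtime view of the ghost method bag D. */
interface RealFunc {
    double apply(double x);
    List<String> methodBag();
}

/** f(x) = a * x + b; its method bag is just its own apply method. */
final class Linear implements RealFunc {
    private final double a;
    private final double b;

    Linear(double a, double b) {
        this.a = a;
        this.b = b;
    }

    @Override public double apply(double x) { return a * x + b; }

    @Override public List<String> methodBag() { return List.of("Linear.apply"); }
}

/** Its method bag is {Sum.apply} plus the bags of f1 and f2. */
final class Sum implements RealFunc {
    private final RealFunc f1;
    private final RealFunc f2;

    Sum(RealFunc f1, RealFunc f2) {
        this.f1 = f1;
        this.f2 = f2;
    }

    @Override public double apply(double x) { return f1.apply(x) + f2.apply(x); }

    @Override public List<String> methodBag() {
        List<String> bag = new ArrayList<>();
        bag.add("Sum.apply");
        bag.addAll(f1.methodBag());
        bag.addAll(f2.methodBag());
        return bag;
    }
}

final class Client {
    private Client() {}

    /** Midpoint-rule approximation with n samples (n is an assumed parameter). */
    static double integrate(RealFunc f, double a, double b, int n) {
        double h = (b - a) / n;
        double total = 0.0;
        for (int i = 0; i < n; i++) total += f.apply(a + (i + 0.5) * h);
        return h * total;
    }

    /** Mirrors the talk's main: two linear functions, their sum, then integrate. */
    static double run() {
        RealFunc f1 = new Linear(2, 1);   // bag {Linear.apply}
        RealFunc f2 = new Linear(-1, 3);  // bag {Linear.apply}
        RealFunc s = new Sum(f1, f2);     // bag {Sum.apply, Linear.apply, Linear.apply}
        return integrate(s, 0.0, 2.0, 4);
    }
}
```

With `f1(x) = 2x + 1` and `f2(x) = -x + 3`, the sum is `x + 4`, whose integral over [0, 2] is 10; the midpoint rule is exact for linear functions up to floating-point rounding.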
Here I know what happens at construction time, so I also want to abstract over what happens at construction time. I can do it as follows. So what does a constructor function, or a constructor method, for linear look like? It simply requires a call permission for itself. Actually, I forgot the zero here. And it says: I give you an object that satisfies the real func predicate for some D, and D will be less than myself. And thanks to this information, even though we don't know the exact value of D, we have an upper bound for it, and that allows the main function to weaken its own call permission to a call permission for D. And similarly for sum: create sum will require the usual call permissions for F1 and F2, and it will give back an object whose method bag is less than create sum, union D1, union D2. So that's all I wanted to say about modular termination verification. And I see now that it's been an hour already, so I guess we should leave the other topics for some other time. Thank you very much. [Applause] Rustan Leino: You're free to ask questions about those other topics too, and Bart is here today and tomorrow, so if you want to chat with him about those [indiscernible]. Any other questions? >>: Your examples of functional language [indiscernible] allocating [indiscernible]. Is that all that's required? Bart Jacobs: No. The logic fully supports mutation. And I don't see at this point any reason why the specification style should not also support mutation. For example, with these predicates, it's perfectly fine for the arguments to change as you mutate the data structures. >>: But at some point with mutation, you get in trouble if you [indiscernible]. Where does that show up? Bart Jacobs: So actually this already shows you how circularity would be handled. If you create a circular list, then I would say that you know that you're doing that. So it would be within one module, and then you know how many elements it has.
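The abstract constructor specifications can be mimicked with factory methods that check, at run time, the postcondition described above: the returned object's method bag lies strictly below the factory's own bag. This is a hypothetical sketch; `createLinear`, `createSum`, `methodBag()`, `ensure`, and the `ORDER` list are assumptions, and the runtime check stands in for what the verifier proves statically. The client only learns the upper bound, never the exact bag.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

interface RealFunc {
    double apply(double x);
    List<String> methodBag(); // hypothetical runtime view of the ghost bag D
}

final class Factories {
    private Factories() {}

    // Assumed global order (earlier = smaller): factories sit above the apply methods they build.
    static final List<String> ORDER = List.of("Linear.apply", "Sum.apply", "createLinear", "createSum");

    /** Multiset ordering via descending-sorted lexicographic comparison (valid for a total order). */
    static boolean less(List<String> a, List<String> b) {
        List<Integer> x = new ArrayList<>();
        List<Integer> y = new ArrayList<>();
        for (String m : a) x.add(ORDER.indexOf(m));
        for (String m : b) y.add(ORDER.indexOf(m));
        x.sort(Collections.reverseOrder());
        y.sort(Collections.reverseOrder());
        for (int i = 0; i < Math.min(x.size(), y.size()); i++) {
            int c = Integer.compare(x.get(i), y.get(i));
            if (c != 0) return c < 0;
        }
        return x.size() < y.size();
    }

    static void ensure(boolean postcondition, String what) {
        if (!postcondition) throw new AssertionError("postcondition failed: " + what);
    }

    /** Postcondition: the result's bag D is below {createLinear}. */
    static RealFunc createLinear(double a, double b) {
        RealFunc f = new RealFunc() {
            public double apply(double x) { return a * x + b; }
            public List<String> methodBag() { return List.of("Linear.apply"); }
        };
        ensure(less(f.methodBag(), List.of("createLinear")), "createLinear");
        return f;
    }

    /** Postcondition: the result's bag is below {createSum} union D1 union D2. */
    static RealFunc createSum(RealFunc f1, RealFunc f2) {
        RealFunc s = new RealFunc() {
            public double apply(double x) { return f1.apply(x) + f2.apply(x); }
            public List<String> methodBag() {
                List<String> bag = new ArrayList<>(List.of("Sum.apply"));
                bag.addAll(f1.methodBag());
                bag.addAll(f2.methodBag());
                return bag;
            }
        };
        List<String> bound = new ArrayList<>(List.of("createSum"));
        bound.addAll(f1.methodBag());
        bound.addAll(f2.methodBag());
        ensure(less(s.methodBag(), bound), "createSum");
        return s;
    }
}
```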
So I think it wouldn't be a problem. >>: [Indiscernible]. Bart Jacobs: Yes. >>: I mean what if you [indiscernible]? Bart Jacobs: Right. Right. Of course it's ruled out. I mean, this is sound, so I can prove the program. The question is then: if it doesn't terminate, then you cannot prove it. Right. So what do you still want to prove about it? That's the question, I guess. >>: I guess my question is more how does that [indiscernible] what is the specific problem I run into [indiscernible]. Bart Jacobs: So it's hard to tell. It's going to fail. I guess I would have to try it to really be able to answer that. But note that of course these multisets, these bags, are finite things. So you will have trouble creating an infinite such bag. During creation you would have to have some well-founded process to come up with these bags, and that would be impossible, I guess. >>: [Indiscernible]. Bart Jacobs: So this is for proving termination, right? So does that program terminate? >>: Well, I guess not [indiscernible]. Bart Jacobs: Then you cannot verify it. You have to know that it's terminating, right, otherwise you cannot verify the [indiscernible]. >>: I suppose you're not shooting for maximum mobility, which allows you to prove anything more. Bart Jacobs: [Laughter]. >>: It actually verifies like that too. Bart Jacobs: [Laughter]. Rustan Leino: So Bart will be around. [Applause]