Document 17883906

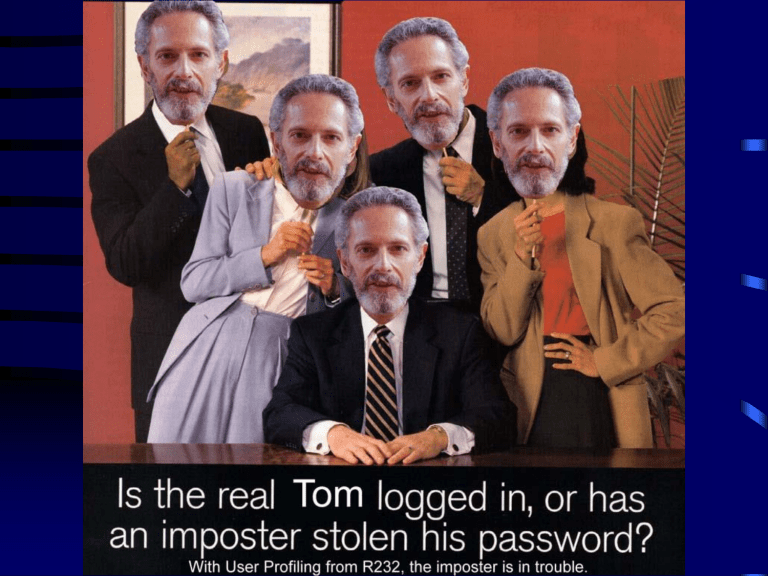

Authenticating Users by Profiling Behavior

Tom Goldring

Defensive Computing Research Office

National Security Agency

Statement of the Problem

• Can we authenticate a user’s login based strictly on behavior?

• Do different sessions belonging to a single user look “similar”?

• Do sessions belonging to different users look “different”?

• What do “similar” and “different” mean?

Purpose

• We expect that the “behavior” of any given user, if defined appropriately, would be very hard to impersonate

• Unfortunately, behavior is turning out to be very hard to define

What about Biometrics?

• Biometrics such as fingerprints, voice recognition, and iris scanning constitute one line of defense, although they require additional per-host hardware

• However, biometrics do not necessarily cover all scenarios:

– The unattended terminal

– Insider misuse

• R23 is currently sponsoring research in mouse movement monitoring, and we hope to include keystrokes at some point as well

Speaking of Insider Misuse

• Examples include spying, embezzlement, disgruntled employees, and employees with additional privileges

• This is by far the most difficult problem, and at present we don’t know if it’s even tractable

• Lack of data

– Obtaining real data is extremely difficult if not impossible

– At present we don’t even have simulated data; however, efforts are under way to remedy this shortcoming, e.g., ARDA-sponsored research

The Value of Studying Behavior

• Whether or not the authentication problem can be solved in other ways, it seems clear that for the Insider Misuse problem, some way to automatically process user behavior is absolutely necessary.

Authentication is a More Tractable Problem

• Getting real data (of the legitimate variety, at least) is easy

• We can simulate illegitimate logins by taking a session and attributing it to an incorrect source

– This is open to criticism, but at least it’s a start

– As stated previously, research on simulating misuse is starting to get under way

• Many examples of Insider Misuse seem to look “normal”

• In its simplest form, we authenticate an entire session

• Therefore, authentication is a reasonable place to start

Program Profiling is an Even More Tractable Problem

• Application programs are designed to perform a limited range of specific tasks

• By comparison, human behavior is the worst of both worlds

– It has an extremely wide range of “normal”

– It can be extremely unpredictable

• Doing abnormal things sometimes is perfectly normal

– People sometimes change roles

– In a point-and-click environment, the “path of least resistance” causes people to look more like each other

Possible Data Sources for User Profiling

• Ideally, we would like to recover session data that

– Contains everything (relevant) that the user does

– Encapsulates the user’s style

– Can be read and understood

Command line activity

• Up to now, Unix command line data has been the industry standard

• For most tasks, there are many ways to do them in Unix

• This looks like a plus, but actually it’s the opposite

• It’s human readable

• But it misses windows and scripts

• In window-based operating systems it is becoming (if it is not already) a thing of the past

• Almost never appears in data from our target OS (Windows NT)

• Therefore we no longer consider it a viable data source

System calls

• Appropriate for Program Profiling, but less so for User Profiling

• Very fine granularity

• But the ratio (human behavior) / (OS behavior) is very low

• Next to impossible to guess what the user is doing

Process table

• This is the mechanism that all multitasking operating systems use to keep track of things and share resources

– So it isn’t about to go away

• Windows and scripts spawn processes

• Built-in tree structure

• Nevertheless, we still need to filter out machine behavior so that we can reconstruct what the user did

Window titles

• In Windows NT, everything the user does occurs in some window

• Every window has a title bar, PID, and PPID

• Combining these with the process table gives superior data

– now very easy to filter out system noise by matching process IDs against that of the active window

– solves the “explorer” problem

– anyone can read the data and tell what the user is doing

– a wealth of new information, e.g. subject lines of emails, names of web pages, files and directories
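The filtering idea above can be sketched as follows. This is a minimal Python illustration under our own assumptions, not the capture tool's logic: records are already parsed into the hypothetical `WindowEvent` and `ProcessEvent` types, and "matching" is read strictly as equality between a process record's PID and the PID of the most recent window record.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional, Union


@dataclass
class WindowEvent:
    """Hypothetical type: a title-bar record for a window owned by process `pid`."""
    time: float   # seconds since login
    pid: int
    title: str


@dataclass
class ProcessEvent:
    """Hypothetical type: a process-table record for process `pid`."""
    time: float   # seconds since login
    pid: int
    cpu: float    # CPU time reported for the process


Event = Union[WindowEvent, ProcessEvent]


def filter_system_noise(events: Iterable[Event]) -> Iterator[Event]:
    """Keep every window record, plus only those process records whose PID
    equals the PID of the most recent (i.e. active) window."""
    active_pid: Optional[int] = None
    for ev in events:
        if isinstance(ev, WindowEvent):
            active_pid = ev.pid          # focus has moved to this window
            yield ev
        elif ev.pid == active_pid:       # activity of the window's own process
            yield ev
        # anything else is treated as system noise and dropped


# Records (2418)-(2423) from the excerpt later in this deck, hand-converted.
events = [
    WindowEvent(9862.598, 262, "Inbox - Microsoft Outlook"),
    ProcessEvent(9862.598, 262, 14.030),
    ProcessEvent(9862.598, 172, 14.050),
    WindowEvent(9880.924, 286, r"Exploring - D:\proto\misc"),
    ProcessEvent(9880.924, 286, 0.511),
    ProcessEvent(9880.924, 321, 0.520),
]
kept = list(filter_system_noise(events))
print([(type(e).__name__, e.pid) for e in kept])
# [('WindowEvent', 262), ('ProcessEvent', 262), ('WindowEvent', 286), ('ProcessEvent', 286)]
```

Note that this strict reading also drops outlook PID 172, the parent of the window-owning process; keeping the whole ancestry chain of the active window would be an equally plausible reading of the slide.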

Data Sources We Would Like to Investigate

• Keystrokes

• Mouse movements

– Speed, random movements, location (application window, title bar, taskbar, desktop)

– Degree to which the user maximizes windows vs. resizing them, or minimizes vs. switching

• These can be combined to study individual style

– Hotkeys vs. mouse clicks (many examples, some of which are application dependent)

• File system usage

But …

• Our data now consists of successive window titles with process information in between

• So we have a mixture of two different types of data, making feature selection somewhat less obvious.

• Ideally, feature values should

– be different for different users, but

– be similar for different sessions belonging to the same user.

What’s in the Data?

• Contents of the title bar whenever

– A new window is created

– Focus switches to a previously existing window

– Title bar changes in current window

• For process table:

– Birth

– Death

– Continuation (existing process uses up CPU time)

– Background

– Ancestry

• Timing

– Date and time of login

– Clock time since login

– CPU time

(2418) 9862.598 outlook pid = 262 Inbox - Microsoft Outlook

:explorer:302:outlook:172:outlook:262:

(2419) 9862.598 outlook c 14.030 :outlook:262:

(2420) 9862.598 outlook c 14.050 :outlook:172:

(2421) 9880.924 explorer pid = 286 Exploring - D:\proto\misc

:explorer:302:explorer:321:explorer:286:

(2422) 9880.924 explorer c 0.511 :explorer:286:

(2423) 9880.924 explorer c 0.520 :explorer:321:

(2426) 9887.233 explorer c 0.991 :explorer:302:

(2427) 9887.233 wordpad b 0.010

:explorer:321:wordpad:191:

(2428) 9887.233 wordpad b 0.000

:explorer:321:wordpad:191:wordpad:276:

(2429) 9887.533 wordpad pid = 276 Document - WordPad

:explorer:321:wordpad:191:wordpad:276:

(2437) 9887.543 wordpad c 0.060 :wordpad:276:

(2438) 9887.543 wordpad b 0.000 :wordpad:274:

(2439) 9887.543 wordpad b 0.000 :wordpad:69:

(2440) 9887.543 wordpad c 0.010 :wordpad:191:

(2441) 9887.844 wordpad pid = 276 things to do.dat - WordPad

:explorer:321:wordpad:191:wordpad:276:

(2443) 9887.844 wordpad c 0.090 :wordpad:276:

(2444) 9887.844 wordpad c 0.070 :wordpad:191:

(2445) 9892.350 outlook a 14.040 :outlook:172:outlook:262:

(2447) 9894.453 wordpad c 0.110 :wordpad:276:

(2448) 9894.453 wordpad c 0.100 :wordpad:191:

(2449) 9894.754 wordpad c 0.120 :wordpad:276:

(2450) 9894.754 wordpad c 0.120 :wordpad:191:

(2453) 9895.655 outlook a 14.050 :outlook:172:outlook:262:

(2455) 9895.956 wordpad c 0.140 :wordpad:276:

(2456) 9895.956 wordpad c 0.130 :wordpad:191:

(2457) 9896.256 wordpad c 0.160 :wordpad:276:

(2458) 9896.256 wordpad c 0.150 :wordpad:191:

(2459) 9896.556 wordpad c 0.180 :wordpad:276:

(2460) 9896.556 wordpad c 0.170 :wordpad:191:

(2461) 9901.363 outlook a 14.060 :outlook:172:outlook:262:

(2465) 9901.664 explorer pid = 286 Exploring - D:\proto\misc
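A parser for records like those above might look like this. It is a sketch that infers the layout from this excerpt alone: a window record appears to be `(seq) time name pid = PID title`, a process record `(seq) time name code cpu [ancestry]`, and an ancestry chain such as `:explorer:321:wordpad:191:` may sit on its own line after the record it belongs to, with the last PID in the chain apparently being the process's own. The one-letter codes (`a`, `b`, `c`) are kept raw because the excerpt does not define them; `Record` and `parse_log` are hypothetical names.

```python
import re
from dataclasses import dataclass, field
from typing import Iterable, List, Optional, Tuple

# Layouts inferred from the excerpt above (assumptions, not a documented format).
WINDOW_RE = re.compile(r"^\((\d+)\)\s+([\d.]+)\s+(\S+)\s+pid = (\d+)\s+(.*)$")
PROCESS_RE = re.compile(r"^\((\d+)\)\s+([\d.]+)\s+(\S+)\s+([a-z])\s+([\d.]+)\s*(:\S+:)?$")
ANCESTRY_RE = re.compile(r"^:\S+:$")


@dataclass
class Record:
    kind: str                   # "window" or "process"
    seq: int                    # record number in parentheses
    time: float                 # clock time since login
    name: str                   # program name, e.g. "outlook"
    pid: Optional[int] = None   # window's PID, or last PID in a process's ancestry chain
    title: str = ""             # window records only
    code: str = ""              # process records only: raw one-letter code, uninterpreted
    cpu: float = 0.0            # process records only: CPU time
    ancestry: List[Tuple[str, int]] = field(default_factory=list)


def parse_ancestry(chain: str) -> List[Tuple[str, int]]:
    """':explorer:321:wordpad:191:' -> [('explorer', 321), ('wordpad', 191)]"""
    parts = chain.strip(":").split(":")
    return [(parts[i], int(parts[i + 1])) for i in range(0, len(parts) - 1, 2)]


def parse_log(lines: Iterable[str]) -> List[Record]:
    records: List[Record] = []
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        m = WINDOW_RE.match(line)
        if m:
            seq, t, name, pid, title = m.groups()
            records.append(Record("window", int(seq), float(t), name, int(pid), title=title))
            continue
        m = PROCESS_RE.match(line)
        if m:
            seq, t, name, code, cpu, chain = m.groups()
            rec = Record("process", int(seq), float(t), name, code=code, cpu=float(cpu))
            if chain:
                rec.ancestry = parse_ancestry(chain)
                rec.pid = rec.ancestry[-1][1]
            records.append(rec)
            continue
        if ANCESTRY_RE.match(line) and records:
            # An ancestry chain on its own line belongs to the preceding record.
            rec = records[-1]
            rec.ancestry = parse_ancestry(line)
            if rec.pid is None:
                rec.pid = rec.ancestry[-1][1]
    return records


sample = [
    "(2418) 9862.598 outlook pid = 262 Inbox - Microsoft Outlook",
    ":explorer:302:outlook:172:outlook:262:",
    "(2419) 9862.598 outlook c 14.030 :outlook:262:",
    "(2427) 9887.233 wordpad b 0.010",
    ":explorer:321:wordpad:191:",
]
for r in parse_log(sample):
    print(r.kind, r.name, r.pid, r.code, r.ancestry)
```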

Some candidate features

• Timing

– time between windows

– time between new windows

• # windows open at once

– sampled at some time interval

– weighted by time open

• words in window title

– total # words

– (# W words) / (total # words)

The Complete Feature Set

• Log (time inactive) for any period > 1 minute

• Log (elapsed time between new windows)

• Log (elapsed time since login) for new windows

• Log (1 + # windows open) sampled every 10 minutes

• Log (# windows open weighted by time open)

• Log (1 + # windows open) whenever it changes

• Log (total # windows opened) whenever it changes

• # characters in W words

• (# characters in W words) / (total # characters)

The Complete Feature Set (cont.)

• # W words

• # non-W words

• (# W words) / (total # words)

• Log (1 + # process lines) between successive windows

• total CPU time used per window

• elapsed time per window

• (total CPU time used) / (elapsed time)

• Log ((total CPU time used) / (elapsed time since login))
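Several of the features above can be computed from a session's window records roughly as in the sketch below. It assumes times are in seconds since login, treats a window as "new" the first time its PID appears (our guess; the real data may mark new windows differently), approximates inactivity by the gap between consecutive window records, and takes the W-word test as a parameter, since the slides do not define W words; the `str.isalpha` default is a placeholder only. The result is a per-feature list of values, i.e. the session's feature stream grouped by feature. `window_features` is a hypothetical name.

```python
import math
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Window = Tuple[float, int, str]   # (seconds since login, owning PID, title)
INACTIVITY_THRESHOLD = 60.0       # "any period > 1 minute"


def window_features(windows: List[Window],
                    is_w_word: Callable[[str], bool] = str.isalpha,  # placeholder test
                    ) -> Dict[str, List[float]]:
    feats: Dict[str, List[float]] = defaultdict(list)
    seen_pids = set()
    last_new = None
    for i, (t, pid, title) in enumerate(windows):
        # Elapsed time per window, measured up to the next window record.
        if i + 1 < len(windows):
            dt = windows[i + 1][0] - t
            feats["elapsed_per_window"].append(dt)
            if dt > INACTIVITY_THRESHOLD:              # proxy for a period of inactivity
                feats["log_time_inactive"].append(math.log(dt))
        # Assumption: a window is "new" the first time its PID is seen.
        if pid not in seen_pids:
            seen_pids.add(pid)
            if t > 0:
                feats["log_time_since_login_new"].append(math.log(t))
            if last_new is not None and t > last_new:  # log is undefined for a zero gap
                feats["log_gap_new_windows"].append(math.log(t - last_new))
            last_new = t
        # Title-word features.
        words = title.split()
        n_w = sum(1 for w in words if is_w_word(w))
        feats["n_w_words"].append(float(n_w))
        feats["n_non_w_words"].append(float(len(words) - n_w))
        if words:
            feats["w_word_fraction"].append(n_w / len(words))
    return dict(feats)


# Window records from the excerpt above.
windows = [
    (9862.598, 262, "Inbox - Microsoft Outlook"),
    (9880.924, 286, r"Exploring - D:\proto\misc"),
    (9887.533, 276, "Document - WordPad"),
    (9887.844, 276, "things to do.dat - WordPad"),
    (9901.664, 286, r"Exploring - D:\proto\misc"),
]
feats = window_features(windows)
print(feats["n_w_words"])   # [3.0, 1.0, 2.0, 3.0, 1.0]
```

Features that only exist when an event occurs (here, `log_time_inactive`) are simply absent from a session in which it never occurs, which is exactly the empty-sample situation the derived feature vectors have to handle.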

But These Features Occur in a Nonstandard Way

• Define a session as whatever occurs from login to logout

• Most classifiers would want to see a complete feature vector for each session

• But what we actually have is a feature stream for each session; features can be generated:

– Whenever a new window is opened

– Whenever the window or its title changes

– Whenever a period of inactivity occurs

– At sampled time intervals

Derived Feature Vectors

• We need to reduce this stream to a single feature vector per session, because that’s what most classifiers want to see

• One way is to apply the two-sample Kolmogorov–Smirnov (K-S) test to each individual feature

• This results in a single vector per session with dimension equal to the number of features

Derived Feature Vectors (cont.)

• The test session gives one empirical distribution

• The model gives another (usually larger)

• The K-S test measures how well these distributions match

– If one is empty but not both, we have a total mismatch

– If both are empty, we have a perfect match

– If neither is empty, we compute the maximum absolute difference between the two empirical cdfs

• So as claimed, we obtain a single vector per session with dimension equal to the # of features

• However, reducing each feature to a single K-S distance also makes the analysis much harder to interpret
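The per-feature K-S comparison, including the empty-sample rules above, can be sketched as follows. Taking 1.0 as the value of a "total mismatch" is our assumption (it is the largest value the K-S statistic can take); `ks_distance` and `derived_vector` are hypothetical names, and a feature stream is assumed to be a mapping from feature name to observed values.

```python
from bisect import bisect_right
from typing import Dict, List, Sequence


def ks_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Two-sample K-S statistic max_x |F_a(x) - F_b(x)|, with the empty-sample rules."""
    if not a and not b:
        return 0.0                      # both empty: perfect match
    if not a or not b:
        return 1.0                      # exactly one empty: total mismatch
    xs, ys = sorted(a), sorted(b)
    # Both empirical cdfs are step functions that jump only at sample points,
    # so the largest gap is attained at one of the pooled sample values.
    return max(abs(bisect_right(xs, v) / len(xs) - bisect_right(ys, v) / len(ys))
               for v in set(xs) | set(ys))


def derived_vector(test: Dict[str, List[float]], model: Dict[str, List[float]],
                   features: Sequence[str]) -> List[float]:
    """One K-S distance per feature: the session's single derived feature vector."""
    return [ks_distance(test.get(f, []), model.get(f, [])) for f in features]


session = {"log_gap_new_windows": [1.0, 2.0, 3.0, 4.0]}
model = {"log_gap_new_windows": [3.0, 4.0, 5.0, 6.0], "n_w_words": [2.0, 3.0]}
print(derived_vector(session, model, ["log_gap_new_windows", "n_w_words", "log_time_inactive"]))
# [0.5, 1.0, 0.0]
```

For two non-empty samples, `ks_distance` should agree with the `statistic` reported by `scipy.stats.ks_2samp`; the empty-sample conventions are not something SciPy applies, which is why they are handled explicitly here.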

An Experiment

• This is “real” data: it was collected from actual users doing real work on an internal NT network

• Data = 10 users, 35 sessions for each user

An Experiment (cont.)

• For any session, let PU denote the “putative user” (i.e. the login name that comes with the session), and let TU denote the “true user” (the person who actually generated the session)

• For each user u, we want to see how well the sessions labeled as u match “self” vs. “other”

• In the sequel, let M = # of users, T = # training sessions per user

Building Models from Training Data

• We used SVM, which is a binary classifier, so we need to define “positive” examples (self) and “negative” examples (other)

• For self, create derived feature vectors by using K-S to match each session (TU = u) against the composite of u’s other sessions

– this gives T positive examples

One way to define “other”

• Suppose we intend to evaluate a test session by scoring it against a pool of user models and seeing who wins:

– match each of u₁’s sessions against the composite of u₂’s, for all other u₂

– this gives T(M – 1) negative examples

Another way to define “other”

• Score a test session only against PU’s model

• Say the null hypothesis is TU = PU, and the alternative hypothesis is TU = someone else

– this suggests deriving negative feature vectors by matching each of u₂’s sessions (for each u₂ other than u₁) against the composite of u₁’s

– it also gives T(M – 1) negative examples
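The self examples and the two ways of defining "other" can be sketched as below for a single user `u`. We read u₁ as the user whose model is being trained; sessions are per-feature value lists, a composite is the pooled values of several sessions, and the compact `ks_distance` repeats the conventions from the derived-feature-vector slides. `composite`, `match`, and `training_examples` are hypothetical names, and no classifier is trained here.

```python
from bisect import bisect_right
from typing import Dict, List, Sequence, Tuple

Session = Dict[str, List[float]]   # feature name -> values seen in one session
Vector = List[float]


def ks_distance(a: Sequence[float], b: Sequence[float]) -> float:
    # Same conventions as before: both empty -> 0.0, exactly one empty -> 1.0.
    if not a or not b:
        return 0.0 if not a and not b else 1.0
    xs, ys = sorted(a), sorted(b)
    return max(abs(bisect_right(xs, v) / len(xs) - bisect_right(ys, v) / len(ys))
               for v in set(xs) | set(ys))


def composite(sessions: Sequence[Session]) -> Session:
    """Pool several sessions into one sample per feature."""
    pooled: Session = {}
    for s in sessions:
        for f, vals in s.items():
            pooled.setdefault(f, []).extend(vals)
    return pooled


def match(test: Session, model: Session, features: Sequence[str]) -> Vector:
    return [ks_distance(test.get(f, []), model.get(f, [])) for f in features]


def training_examples(train: Dict[str, List[Session]], u: str, features: Sequence[str],
                      scheme: int) -> Tuple[List[Vector], List[Vector]]:
    mine = train[u]
    # Self: each of u's sessions vs. the composite of u's *other* sessions (T positives).
    pos = [match(s, composite(mine[:i] + mine[i + 1:]), features) for i, s in enumerate(mine)]
    others = [v for v in train if v != u]
    if scheme == 1:
        # Pool of models: u's sessions vs. every other user's composite.
        neg = [match(s, composite(train[v]), features) for v in others for s in mine]
    else:
        # Score only against PU's model: other users' sessions vs. u's composite.
        model = composite(mine)
        neg = [match(s, model, features) for v in others for s in train[v]]
    return pos, neg


train = {
    "a": [{"x": [1.0, 2.0]}, {"x": [1.0, 2.0]}],
    "b": [{"x": [5.0, 6.0]}, {"x": [5.0, 6.0]}],
    "c": [{"x": [9.0]}, {"x": [9.5]}],
}
pos, neg = training_examples(train, "a", ["x"], scheme=1)
print(len(pos), len(neg))   # 2 4
```

With T training sessions per user and M users, `pos` has T entries and `neg` has T(M – 1) under either scheme, matching the counts on the slides.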

Methodology

• For each user, use first 10 sessions for training, test on next 5

• Build SVM and Random Forest models from training data

• Classify each test session against each model to create a matrix of scores M (next slide)

– each row is a session, user number is on the left

– 10 columns, one for each model scored against

• Look at plots of the two pdfs for each column

– red = self (x in M)

– green = other (* in M)

– left plot = SVM, right plot = Random Forest

Matrix of scores

1 2 3 4 5 6 7 8 9 10

-----------------------------------------------------------------------

1 x * * * * * * * * *

1 x * * * * * * * * *

…

2 * x * * * * * * * *

2 * x * * * * * * * *

…

10 * * * * * * * * * x

10 * * * * * * * * * x
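The methodology behind this matrix can be sketched as follows: split each user's sessions into the first 10 for training and the next 5 for testing, build the matrix one row per test session (grouped by true user, one column per model), and separate each column into self (x) and other (*) scores, the two distributions the pdf plots compare. The `score` callable is a hypothetical stand-in for an SVM or Random Forest scoring a test session against one user's model; no classifier is trained here, and all names are illustrative.

```python
from typing import Callable, Dict, List, Sequence, Tuple, TypeVar

S = TypeVar("S")                 # a session, in whatever form the scorer accepts
N_TRAIN, N_TEST = 10, 5          # "first 10 sessions for training, test on next 5"
Row = Tuple[str, List[float]]    # (true user, score against each model)


def split_sessions(sessions: Dict[str, Sequence[S]]) -> Tuple[Dict[str, List[S]], Dict[str, List[S]]]:
    train = {u: list(s[:N_TRAIN]) for u, s in sessions.items()}
    test = {u: list(s[N_TRAIN:N_TRAIN + N_TEST]) for u, s in sessions.items()}
    return train, test


def score_matrix(test: Dict[str, List[S]], users: Sequence[str],
                 score: Callable[[S, str], float]) -> List[Row]:
    """One row per test session, grouped by true user; one column per model."""
    return [(u, [score(s, m) for m in users]) for u in users for s in test[u]]


def column_split(rows: List[Row], users: Sequence[str], j: int) -> Tuple[List[float], List[float]]:
    """Scores in column j: self ('x' cells) and other ('*' cells)."""
    model = users[j]
    self_scores = [r[j] for u, r in rows if u == model]
    other_scores = [r[j] for u, r in rows if u != model]
    return self_scores, other_scores


def toy_score(session: int, model: str) -> float:
    # Stand-in scorer: sessions below 100 "belong" to u1, the rest to u2.
    return 1.0 if (session < 100) == (model == "u1") else 0.0


users = ["u1", "u2"]
sessions = {"u1": list(range(15)), "u2": list(range(100, 115))}
train, test = split_sessions(sessions)
rows = score_matrix(test, users, toy_score)
print(column_split(rows, users, 0))   # ([1.0, 1.0, 1.0, 1.0, 1.0], [0.0, 0.0, 0.0, 0.0, 0.0])
```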

Future Work

• Reduce / modify the feature set

• Try other classifiers

• Have a non-technical person read the sessions and intuitively identify user characteristics

• Come up with ways to visualize what goes on inside a session