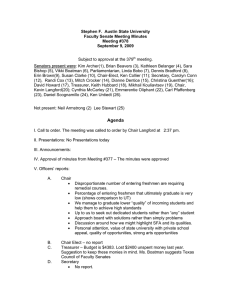


24352 >>: It's my pleasure to introduce John Langford, newly of Microsoft Research in our delightful New York City branch. Gosh, John has been around machine learning for years, doing things like Isomap and [inaudible], and also doing fun sort of learning theory things like the workshop on error bounds less than a half. I remember that. That was fun. Got his Ph.D. about ten years ago at CMU and was a Techer back before that. So here he is.

>> John Langford: Okay. So this is not my normal style of talk, because I usually do theory. But last summer we got upset that our learning algorithms weren't fast enough. We decided to crank on it really hard. I'm going to tell you how we got linear learning working at very large scales very quickly. So this is a true story. When I was at Caltech finishing up, I applied for a fellowship. I think it was the Hertz fellowship. They sent somebody out to interview me. The interviewer said, what do you want to do? I said, hmm, I'd like to solve AI. And the interviewer said, how do you want to do that? And I said, well, I'll use parallel learning algorithms. And he said, no. It was more bracing than no.

>>: Did he have gray hair at the time?

>> John Langford: Yeah, I think so. [laughter] Okay. So obviously I did not get the fellowship. [laughter] Even worse, he was right to some extent. It is the case that in the race between creating a better learning algorithm and just trying to make a parallel learning algorithm, often the better learning algorithm wins. And it was always winning at that point in time. So I'm going to show you a baseline learning algorithm. This is where we started. This is a document classification dataset, which is 424 megabytes compressed. And if we look at this in detail, we see something very typical. We have a class label and we have a bunch of features, which are TF-IDF transformed feature values. And then on the next line we see another example and so forth. Right? So this is very typical.
And now I have a six-year-old laptop with an encrypted hard drive. How long does it take to actually learn a good classifier on this dataset?

>>: One class.

>> John Langford: We have two classes.

>>: I'm sorry, two classes. [laughter]

>>: Two classes, classifier, two seconds.

>> John Langford: Two seconds would be nice. On my desktop at home, which is not six years old, it takes about two seconds. On this machine, about 40 seconds or so. What's happening here is this is progressive validation loss. We're running through the examples. We evaluate on the example. We add that into the running average. And then we do an update. And I think the important thing about this is that the deviations are like a test set's, for one pass over the data. Turns out for this dataset, if you have a TF-IDF transform and you have a very tricked-out online update rule, which we have, you can get an average squared loss of 4 percent, or .04, and it turns out on a test set it's about 5 and 8 percent error rate, which is about the best that anybody's done on this dataset as far as I know. So this is a baseline. This is where we're starting from. This is only 31 seconds. Good. We're just learning a linear predictor. Document classification is easy because words are very strong indicators of document class. There are roughly 60 million features that we went through, 780,000 examples, and it took about 31 seconds this time. Okay. So that's nice. We have bigger datasets. So in particular at Yahoo! we were playing around with an ad dataset, which had two tera features. So I should go back a little bit. Let's go back here. This is one feature. Everything that's not there doesn't count as a feature. We're only counting the non-zero entries in the data matrix. This is a sparse representation. So when I say two tera features, I mean two tera sparse features. So two tera non-zero features. Right? And we still want to learn a linear predictor.
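
The progressive validation loop described in the demo (predict, add the loss to a running average, then update) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the code used in the talk: the function name, the plain gradient step, and the toy data are all hypothetical, and the update rule in the talk is considerably more refined.

```python
def progressive_validation(examples, num_features, learning_rate=0.1):
    """One pass of online squared-loss learning with progressive validation.

    `examples` yields (label, {feature_index: value}) pairs (sparse format).
    """
    weights = [0.0] * num_features
    total_loss = 0.0
    count = 0
    for label, features in examples:
        # 1. Predict with the current weights, before learning from this example.
        prediction = sum(weights[i] * v for i, v in features.items())
        # 2. Add this example's squared loss to the running average.
        total_loss += (prediction - label) ** 2
        count += 1
        # 3. Only then take a plain gradient step (constant factor folded
        #    into the learning rate; this is an assumption of the sketch).
        for i, v in features.items():
            weights[i] -= learning_rate * (prediction - label) * v
    average_loss = total_loss / count if count else 0.0
    return weights, average_loss


# Hypothetical toy data: feature 0 predicts label 1, feature 1 predicts label 0.
data = [(1.0, {0: 1.0}), (0.0, {1: 1.0})] * 50
weights, avg_loss = progressive_validation(data, num_features=2)
print(f"progressive validation loss: {avg_loss:.4f}")
```

Because every example is scored before the learner has used it, the running average behaves like a held-out estimate as long as the data is seen only once.
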
A lot more parameters because you need to have that. And here's a few more details. There's 17 billion examples. There's a little over 100 non-zero features per example. 16 million parameters that we're learning, and we have a thousand node cluster to learn on. And the question is: How long does it take? Well, how do we do it, first of all. But more importantly, just how long does it take to do it?

>>: [inaudible] feature terabyte of features.

>> John Langford: No, I mean a tera feature. 10 to the 12 nonzero entries in your data matrix.

>>: You say [inaudible] parameters, what are the parameters?

>> John Langford: So those are the weights.

>>: WIs?

>> John Langford: What's that? Yeah, WIs.

>>: So there are that many features that are nonzero ever or summed over all the cases?

>> John Langford: There's this many non-zero entries in your data matrix. So the number of bytes, if you used a byte representation, would be maybe 20 terabytes, perhaps, ten bytes per feature.

>>: Is that 17 you have 17 [inaudible] that's how we found --

>>: No, it's the number of --

>>: So your total -- your total matrix is 17 billion times.

>> John Langford: 17 billion rows and 16 million columns. And the number of nonzero entries is two tera.

>>: Is that overfitting, a thousand times more examples than weights?

>> John Langford: So overfitting becomes less of a concern, but it is actually some concern. The issue is the sparsity. So some of the features just don't come up very often. So it's possible to overfit on those features even though you can't overfit on the majority of the mass.

>>: And you're doing something about that, you'll tell us about that?

>> John Langford: Yeah, but... but let's go back to the demonstration. So I'm using zero regularization when I do this. It turns out if you have a very good online update rule you don't need to worry about regularization that much. It's kind of built into the online process itself.

>>: [inaudible] one of those [inaudible] [laughter].
>>: Regularization [inaudible].

>> John Langford: So the question is, how long does it take?

>>: 40 seconds.

>> John Langford: That would be fantastic.

>>: Sadly, though.

>> John Langford: Those extra zeros really matter. [laughter]

>>: Told me the answer.

>> John Langford: Yeah.

>>: 29 --

>> John Langford: 29 would be nice. Can't quite do that. It took 70 minutes. So now the important thing here is if you just look at the overall throughput of the system. We have to do multiple passes on this data, by the way, because it's much rawer than the document classification dataset. So if you look at the overall throughput, it ends up being 500 mega features per second. Each individual machine in that cluster just had a gigabit per second ethernet. I don't know exactly how many bytes it takes to specify a feature, or bits, but it's certainly more than two. We're beating the IO bandwidth of any single machine in our network.

>>: Do you know if Hadoop keeps it in binary or keeps it -- [inaudible] at this point.

>> John Langford: Starting from ASCII.

>>: So you're actually passing over the ASCII multiple times?

>> John Langford: No. There's a lot of tricks we throw in. But we do start from ASCII, because it's kind of human readable and debuggable. But VW has a nice little cache format, and I kind of lied here because I was really using the cached form of the dataset, which is, of course, unreadable. Whoops. So it's 290 megabytes in binary. Okay. So this is the only example I know of a learning algorithm which is sort of provably faster than any single-machine learning algorithm that's ever going to be invented in the future, because we beat the IO bandwidth limit. That's finally an answer to this guy that turned me down. All right. So we had a book with Misha, where people contributed many chapters on parallel learning methods.
And for each of these parallel learning methods, you can take a look at the baseline they compared with, in this features per second type of measure, and then how fast their system was. So this was some people at Google. They had a radial basis function support vector machine, running on 500 nodes using the RCV1 dataset, the same one I showed you. They had some speed-up from a relatively small baseline. This is an ensemble tree. This is NEC guys. They had a much stronger baseline support vector machine. And they sped it up more than we expected. They only had 48 nodes. This is more than a factor of 48. This is a logarithmic scale here. And there they're winning from some nice caching effects. This is MapReduce decision trees from Google. They have some reasonable decision tree baseline and then they just speed it up in a pretty straightforward way. This is from Microsoft, Krista and Krista's decision trees. So they were working with a relatively small number of nodes. Only 32. They had a very strong baseline, and then they sped it up a little bit more. And then this is what I just demonstrated to you. And this is what I'm talking about now. So the baseline got worse, because the dataset is rawer and we have to pass over the data multiple times. So we can no longer do a single pass. We get a much bigger speedup because we're dealing with a thousand machines effectively. Okay. So how do we do this? Well, we do things in binary, and we take advantage of the hashing trick. Who knows the hashing trick here? So the idea with the hashing trick, quickly, is that if you're dealing with datasets with this many feature types, you can often just hash the individual features to map them into some particular fixed-dimensionality weight vector space, right? So when I say 16 million, I don't mean there are 16 million unique features. What I mean is that I'm using the hashing trick to map them using a 24 bit hash.
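
As a rough illustration of the hashing trick just described, the sketch below maps feature names into a fixed 2^24-slot index space. It is a minimal sketch under stated assumptions: `feature_index` and `hash_features` are hypothetical names, and CRC32 is only a convenient stand-in hash from the Python standard library, not the hash function the speaker's system uses.

```python
import zlib

NUM_BITS = 24                  # a 24 bit hash, as in the talk
NUM_WEIGHTS = 1 << NUM_BITS    # 2**24 = 16,777,216 weight slots


def feature_index(name: str) -> int:
    """Map a feature name to a slot in the fixed-size weight vector."""
    # CRC32 is a stand-in hash for this sketch; the mask keeps the low 24 bits.
    return zlib.crc32(name.encode("utf-8")) & (NUM_WEIGHTS - 1)


def hash_features(raw: dict) -> dict:
    """Convert {feature name: value} into {hashed index: value}."""
    hashed = {}
    for name, value in raw.items():
        idx = feature_index(name)
        # Features that collide simply add into the same slot.
        hashed[idx] = hashed.get(idx, 0.0) + value
    return hashed


example = {"word=learning": 0.7, "word=parallel": 0.3}
print(hash_features(example))
```

The point of the design is that the weight vector has a fixed size chosen up front, so no dictionary of feature names ever has to be built or shared between machines.
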
And so what's scary here is you get collisions between different features, but it turns out that if the features are a little bit redundant, that works out pretty well. The machine learning algorithm can learn to compress around collisions. We're using online learning. We're using implicit features. We're doing a bunch of tricks to improve the online learning. We're using LBFGS to finish up with batch learning. And we switch between these two. And there's a bunch of tricks in how we're doing parallel learning as well, all of which are aimed at either making the communication faster or making the learning a little bit more intelligent in the parallel setting. So the key thing we did, the most key thing, is this Hadoop All Reduce, which I'll go through first. So All Reduce is an operation from MPI. Who knows All Reduce? Okay. Just a couple. So the idea is you start with a bunch of nodes. Each node has a number. And then you call All Reduce, and every node has the sum of all numbers. Right? And then the question is what is the algorithm for doing that? And one such algorithm is you create a binary tree over the nodes and then you add things up going up the tree. So one plus two plus five is eight. And three plus four plus six is 13. And then eight plus 13 plus seven is 28. And then you broadcast down the tree. Basically that's All Reduce. And this is a very nice primitive in several ways. In a network, in general, you have two things that you care about. One of them is latency. The other one is bandwidth. Typically in machine learning, when you are calling All Reduce, you don't want to call it on just one number, you want to call it on a vector of numbers. You can pipeline the All Reduce operation, and then latency doesn't matter. The other thing -- then there's also bandwidth, and this particular implementation of All Reduce is within a constant factor of the minimum. So for the internal node you have two coming in and one going out, then one coming in and two going out. So it's six total.
Six times the number of bytes you're doing All Reduce on. So that's within a constant factor of the best possible. And so we're in decent condition as far as using our bandwidth. And then the last point is actually the most important one. The code for doing this is exactly the same as the code that I just used here. It's exact. I wrote no new functions except for the All Reduce function itself. And I can just look at my sequential code and go, okay, I'll do All Reduce there and there, and then it runs in parallel. This is one of the few times in my life that having the right language primitive felt like it was phenomenal. This comes after I spent several years doing parallel programming the hard way. It's very easy to experiment with parallelizing any algorithm you want using All Reduce. So here's an example. If we're doing online learning, on each individual node what we do is we pass through a bunch of examples doing our little online updates, and we finish our pass and we call All Reduce on our weights and we average our weights, and then maybe we do another pass. So we just add this -- if we're doing things in a sequential environment there would be no All Reduce. If we want to do things in parallel we just add in the right All Reduce call. So we did this for the other algorithms that we were using. This is simple scratch reduce algorithm. It also gets a little more complex. We want to have more intelligent online update rules which keep track of some notion of how much update has been done to an individual feature. Then you want to do a nonuniform average of the different weights, because that makes sense: the weights which have seen this feature more often and updated more for that feature should have a stronger weight in the overall average. So it turns out you can do nonuniform averaging easily enough. You need to use two All Reduce calls. And you can do conjugate gradient and LBFGS. So that's All Reduce, that's what MPI gives you.
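
The tree-based All Reduce and the nonuniform weight averaging described above can be simulated in a single process. The sketch below is only an illustration under stated assumptions: the `Node` class, the function names, and the use of per-feature update counts as averaging weights are hypothetical choices, and real nodes would exchange these values over sockets rather than by recursion.

```python
class Node:
    """A simulated cluster node holding one local number."""

    def __init__(self, value, children=()):
        self.value = value
        self.children = list(children)
        self.result = None  # filled in by all_reduce


def reduce_up(node):
    """Upward pass: sum the values in the subtree rooted at `node`."""
    return node.value + sum(reduce_up(child) for child in node.children)


def broadcast_down(node, total):
    """Downward pass: hand the global total to every node."""
    node.result = total
    for child in node.children:
        broadcast_down(child, total)


def all_reduce(root):
    total = reduce_up(root)
    broadcast_down(root, total)
    return total


def elementwise_sum(vectors):
    """Stand-in for All Reduce on vectors: the sum every node would receive."""
    return [sum(column) for column in zip(*vectors)]


def nonuniform_average(node_weights, node_counts):
    """Per-feature average that trusts nodes which updated a feature more."""
    # First All Reduce: sum over nodes of count * weight, per feature.
    numer = elementwise_sum(
        [[c * w for c, w in zip(cs, ws)]
         for cs, ws in zip(node_counts, node_weights)]
    )
    # Second All Reduce: sum over nodes of the counts, per feature.
    denom = elementwise_sum(node_counts)
    return [n / d if d else 0.0 for n, d in zip(numer, denom)]


# The seven-node tree from the talk: 1 + 2 + 5 = 8, 3 + 4 + 6 = 13, 8 + 13 + 7 = 28.
left = Node(5, [Node(1), Node(2)])
right = Node(6, [Node(3), Node(4)])
root = Node(7, [left, right])
total = all_reduce(root)
print(total)  # prints 28
```

In this simulation the only change a sequential learner would need is a call to the reduce function at the end of each pass, which mirrors the point made in the talk about how little code has to change.
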
We're not actually using MPI, and the reason why is because MPI was not made with data in mind. So typically if you tried to use MPI, you'd have this first step which was load all the data, which would be pretty painful. And anyways, the data at Yahoo! existed on Hadoop clusters. You didn't want to shift the data off the clusters because the data was very heavy. What Hadoop would give you was the ability to move a program to the data, right? Rather than moving the data. It's very nice as far as research usage. And then another thing that Hadoop would give you is automated restart on failure. So with MPI, if one of your nodes goes down, then the job fails. That's actually true for us, with All Reduce, but it's not as true. And in particular it's not true for the first pass over the data. Because we delay initialization of the communication infrastructure until after every node has passed over its piece of the data. That means the most common failure mode, a disk failure, will get triggered in the first pass. That job will get killed and it will be restarted on the same piece of data on a different node. And then with respect to the overall computation across the cluster, all that happens is things are slowed down a little bit in the first pass. So this deals with the robustness issues to a large extent in practice. My experience was that as long as your job was 10,000 node hours or so, then you didn't see failures, unless they were your own bug.

>>: [inaudible].

>> John Langford: What's that?

>>: 10,000 node hours before this or after this?

>> John Langford: It was after this, but I can't entirely ascribe it to this. There's another fact, some sort of convention in Unix land: ports above 2 to the 15 can be randomly used. We were using those, and there were collisions going on. That change also happened, and then all our problems went away.
>>: So the data has already been prepartitioned across the nodes and you have multiple codes for each --

>> John Langford: That's right. So Hadoop has a file system called HDFS, which stores everything partitioned across the nodes in triplicate.

>>: So 2.1 tera features is not a lot if you map it to a thousand machines. It's like two gigabytes per machine.

>> John Langford: It would be more like 20 gigabytes. A feature is not a byte. It's more than a byte, typically.

>>: Not terribly on each individual machine. That's good.

>>: So I'm just questioning, is MPI really formal? Because it seems like if you were doing initialization on Hadoop, you can do it [inaudible].

>>: MPI basically isn't an option because we didn't have the cluster, didn't have a separate cluster to run MPI on. And MPI kind of, I mean, doesn't have a resource scheduling component like Hadoop does. So a big company that wants to make sure that its cluster is getting utilized isn't going to want to run MPI, because they want to share machines as much as possible. There were various efforts at Yahoo! to run MPI on Hadoop. It was never pretty. So one last trick, which is very important. When you have a thousand nodes, it's a barrier operation. That means you run as fast as the slowest node, right? So if you have a thousand nodes, one is going to be kind of slow. And in particular, it's common for the slowest node to be a factor of 10 slower than the fastest node. Maybe even a factor of 30. So Hadoop has a mechanism called speculative execution, which says that if one of your jobs is running slow, you restart the same job on the same data on a different node and these two processes race. So we take advantage of that. And that allows -- it's a factor of 10 improvement in the overall speed of the system.

>>: So is it mapping instead of you have exact copy, on a different node and still dispute?
>> John Langford: You have exact copies on different nodes, and the second one is starting later than the first one, because the first one has been noticed to be slow. So we're going to lose a factor of two or three, which is actually what we observe, compared to single machine performance. But we don't lose a factor of 30. And that's a big win.

>>: I guess my question is, so the factor of 30 slowdown isn't that the particular data was bad in some way, it's something else.

>> John Langford: No, typically what happens is the data is on individual nodes. The scheduler tries to figure out which nodes to move the computation to. Occasionally it moves the data and the computation, but mostly it just moves the computation. And often it tries to oversubscribe individual nodes for performance reasons, for overall throughput of the system. And it fails. And it fails badly on some particular node. Or maybe some node happens to have more than one piece of the data. Right? In which case it gets overloaded. So these kinds of things come up very commonly in a large cluster.

>>: Did you have the cluster [inaudible] on the job right now?

>> John Langford: No. They gave us access to the production clusters. Not the actual production clusters, but the development clusters, the ones that were used. And they gave us access and let us use a thousand nodes, maybe even 2,000. But exclusive access was too much to ask for. All right. So this is reliable execution at the 10,000 node hours. We only went to about a thousand node hours. And so maybe the larger scale problems here that -- one thing we checked, by the way, is there's a lot of cheap tricks when you do large scale learning. You could, for example, subsample the data and run on the subsampled data. Turns out for this dataset you actually did worse subsampled, and noticeably worse.

>>: Use it as initialization.

>>: This was already a subsampled dataset. This is throwing out 99 percent of the non-clicks.
We couldn't throw out any more without losing performance.

>>: Could you -- would it be any faster to use the sub-subsampled data?

>> John Langford: It's possible. The thing which is a little bit tricky is the communication time is nontrivial here. So if you subsample the dataset and then you ran on a thousand nodes of the subsampled dataset, the communication time would still be eating into your total time budget quite a bit.

>>: If it's [inaudible] instead of a thousand nodes?

>> John Langford: Yes. So that's like a second or something, on a gigabit per second ethernet network. It was in fact maybe about ten seconds in practice, because there's collisions and whatnot. Also we have that factor of six. Okay. So what am I doing? No, wrong way. Okay. So that's the communication infrastructure. Decent communication infrastructure is necessary but not sufficient for a good machine learning algorithm. This is radically better than MapReduce. At least for Hadoop, the iteration time for MapReduce is about a minute, and here it is about ten times ping. You can -- just in terms of performance it's much, much better for synchronizing different nodes. It's also much, much easier to program with All Reduce, which I think is an even more important thing in practice.

>>: So you said you weren't using MPI infrastructure, you coded your own --

>> John Langford: Yes, we coded it as a library. So it is a library in VW, the open source project that we ran. So it's very easy for you to just use that library. It's one file that you just grab and stick into your -- it compiles as a library, in fact. So it should be very easy to use if you wanted to.

>>: What's the --

>>: MPI-ish issues like they're not cooperating with everybody broadcasting.

>> John Langford: I mean, the failure modes of All Reduce are the same, except the interface is set up to encourage the late initialization, because when you call it, you call it with all the things required to initialize. It works with speculative execution.
So if two nodes say, hey, I'm node three in computation four, then it just takes the first one and uses that. And it ignores the other one. In order to make this all work, you need a way for the nodes to organize themselves into the tree. So the way that works, there's a little daemon that runs on the gateway, and for every map job we point it to the gateway, so the job talks to the daemon and says, hey, I'm job three and I'm three of four, with some particular nonce, which you need to have distinct for each [inaudible] invocation. And once it collects a complete set of one of four, two of four, three of four, four of four, then it tries to be intelligent about creating the tree. So it sorts the IP addresses and then it builds the tree on the sorted list of IP addresses. So that means in particular if you have the same job at the same IP address, then there tends to be communication just inside of the computer, right? It's not guaranteed. It's not perfect. But it tries to minimize the inter-rack bandwidth usage.

>>: Does it do this for every [inaudible]?

>> John Langford: No, it does it once, at the initialization. And it just keeps the sockets open for overall usage. So once the communication infrastructure is created, if a node dies, then the overall process dies. But, again, that wasn't a problem out to about 10,000 node hours. Okay. So now let's talk about the algorithms. We're trying to do gradient descent. So gradient descent is the core of all of these algorithms. But gradient descent is not quite adequate. And the way I think about its inadequacy is units. So it's kind of a physics point of view. So if you have squared loss, you can take the derivative of squared loss, and then it looks like this, and the important thing to think about is that this is like a constant here and we have the feature. And if you look at the prediction, this is the feature times the weight.
So that means that if the features double, the weights need to halve in order to keep the same prediction, right? And this is a feature. So we're adding a learning rate times a feature to something which is inverse in the feature's units. And that unit clash causes issues.

>>: Normalized elements [inaudible] they try to make the [inaudible] they try to divide by the unit, the norm of X.

>> John Langford: Yes, in fact that's one of the tricks.

>>: That's an old algorithm.

>> John Langford: Simple thing. So WI has natural units of 1 over I and XI has units of I. So things don't work very well. You can go too far in a particular direction. Okay. So we're doing adaptive, safe online gradient descent. So online you're aware of. Each individual example. Adaptive is where the update in direction I is rescaled according to 1 over the square root of the sum of the gradients squared for that feature. You have essentially a per-feature learning rate, where the learning rate gets scaled down as you do more and more updates to that individual feature.

>>: Is this connected to these algorithms for second order [inaudible] for learning?

>> John Langford: Yeah. So in terms of performance, my impression is this is at least as good. In terms of the actual update rule, it does differ in some details. So we're looking at the gradients here. So it could be -- there's two reasons why the sum of the gradients is small. One reason is because you've never seen that feature before, which is helpful. And I think the second order gradient descent approaches get at that pretty well. But a second reason why the sum of the gradients might be small is because every time that feature came up, you predicted correctly. In that case, the update rules tend to differ in what they do.

>>: You have the CW.

>> John Langford: Confidence weighted.

>>: Confidence weighted, along the same lines.

>> John Langford: The truth is I haven't tested it empirically to compare the two. I would like to do that but I haven't had time.
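
The adaptive rule described above, where the step for feature I is divided by the square root of that feature's accumulated squared gradient, can be sketched as follows. This is a minimal sketch under stated assumptions: the function name, the sparse dictionary representation, and the default learning rate are hypothetical, and it omits the feature rescaling and safe-update tricks mentioned elsewhere in the talk.

```python
import math


def adaptive_update(weights, grad_sq_sums, features, label, learning_rate=0.5):
    """One online squared-loss step with a per-feature learning rate.

    `weights` and `grad_sq_sums` are dicts keyed by feature index and are
    modified in place. Returns the prediction made before the update.
    """
    prediction = sum(weights.get(i, 0.0) * v for i, v in features.items())
    for i, v in features.items():
        g = (prediction - label) * v  # gradient of 0.5 * (p - y)^2 for feature i
        if g == 0.0:
            continue
        grad_sq_sums[i] = grad_sq_sums.get(i, 0.0) + g * g
        # The step shrinks as this feature accumulates squared gradient.
        step = learning_rate * g / math.sqrt(grad_sq_sums[i])
        weights[i] = weights.get(i, 0.0) - step
    return prediction


weights, sums = {}, {}
for _ in range(20):
    adaptive_update(weights, sums, {0: 1.0}, 1.0)        # a frequently seen feature
adaptive_update(weights, sums, {0: 1.0, 7: 1.0}, 0.0)    # feature 7 appears once
print(weights, sums)
```

A feature that has rarely produced a gradient keeps a large effective learning rate, while a feature that has been updated many times moves more cautiously.
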
Anyway, that's what we're using. That's helpful. Another thing is the trick you mentioned, rescaling the features so that the units work out overall. That's helpful. The last one is safe updates. So this is when Nikos visited me, we were worrying about doing online learning with large importance weights. The obvious way to deal with an importance weight: if you have an importance weight of two, it's like saying this example is twice as important as normal. The obvious thing would be to put a 2 here. But that works pretty badly. Essentially because you're trying to have a very aggressive learning rate with online learning, and that makes it too aggressive. So instead what you can do, you can say, all right, an example with importance weight 2 should be like having two examples of importance weight one in a row. And you can say, hmm, maybe this should also be like having four examples with importance weight one half in a row, or maybe it's like eight with importance weight one quarter in a row, and so forth. And you can take the limit of infinitely many, infinitely small updates and solve in closed form to figure out what that update rule would be. The important thing about this update rule for our purposes is, okay, so this in fact does help you with importance weights. We don't have any importance weights here. Turns out that it also helps with just importance weight always one. Because it's not equivalent to just the normal update rule. It takes into account the global structure of the loss function.

>>: That's why you have to use squared loss.

>> John Langford: Doesn't have to be squared loss. We solved it for many losses, logistic as well. The starting example was actually logistic loss. So it's safe because if you have these infinitely many, infinitely small updates, the update can never pass the label for any of the sane loss functions. So for hinge loss, squared loss, logistic loss, the update never passes the label.
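
For squared loss, the limit of infinitely many, infinitely small updates can be worked out directly: the gap between label and prediction decays exponentially, so the prediction approaches the label without crossing it. The sketch below implements that closed form for the loss 0.5 * (p - y)^2. It is an illustration derived from the description above under stated assumptions; the function name and defaults are hypothetical, and it is not claimed to be the exact rule in the speaker's software.

```python
import math


def safe_update(weights, features, label, importance=1.0, learning_rate=0.5):
    """Importance-aware squared-loss update; returns the pre-update prediction.

    `weights` is a dict keyed by feature index and is modified in place.
    """
    prediction = sum(weights.get(i, 0.0) * v for i, v in features.items())
    xx = sum(v * v for v in features.values())
    if xx == 0.0:
        return prediction
    # Solving the continuous-time gradient flow gives a gap (y - p) that
    # shrinks by exp(-importance * learning_rate * xx): the prediction moves
    # toward the label but can never overshoot it.
    shrink = 1.0 - math.exp(-importance * learning_rate * xx)
    scale = (label - prediction) * shrink / xx
    for i, v in features.items():
        weights[i] = weights.get(i, 0.0) + scale * v
    return prediction


x = {0: 1.0, 1: 2.0}
twice = {}
safe_update(twice, x, 1.0, importance=1.0)
safe_update(twice, x, 1.0, importance=1.0)
once = {}
safe_update(once, x, 1.0, importance=2.0)
print(twice, once)  # the two weight vectors agree up to rounding
```

The usage lines check the property motivating the rule: two updates with importance weight one land in the same place as one update with importance weight two.
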
So you never have a large importance weight throw you out into crazy land. That allows you to have a much more aggressive learning rate than you otherwise could have. Even with importance weight one.

>>: How do you enforce that? You're doing that locally for each guy as they're stepping in on a given node. How do you enforce that at the -- can you enforce that at the higher level as well, if they're combined?

>> John Langford: We're using this inequality to make sure that combining makes sense. So, yeah. So we do online learning on each individual node. We use All Reduce to average the weights. Then we switch to LBFGS. So LBFGS is an algorithm that I did not learn as a grad student. If you think my education was missing something.

>>: It was just being formulated while you were a grad student.

>> John Langford: No, I think LBFGS was actually 1980.

>>: LBFGS or.

>> John Langford: BFGS was '70, LBFGS was '80. It's easy to remember that way. [laughter] So LBFGS is a batch algorithm. Batch algorithms suffer a lot in terms of speed. But it turns out that it's very good at getting that last bit of optimization done well. It uses -- so what you would want to do, if you could afford it, would be something like a Newton update. But it involves inverting a Hessian. You can't even represent a Hessian on this many parameters. Instead what you do, you approximate the inverse Hessian directly. This is the core approximation. So you have the change in the weights from one pass to the next, outer product with the change in the weights, over the change in the weights inner product with the change in the gradient. And if you think about the units for a moment, it actually has the right units. It turns out to be a pretty decent approximation to the inverse Hessian. You build it up over multiple rounds. You get a better and better approximation of the inverse Hessian. And you can converge very quickly to a good solution.
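
One common way to apply an inverse-Hessian approximation built from weight changes and gradient changes, without ever storing a matrix, is the textbook L-BFGS two-loop recursion. The sketch below is that standard recursion in plain Python, offered as an illustration of the idea rather than as the speaker's implementation; the function names are hypothetical, and line search and history management are left out.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def lbfgs_direction(grad, s_history, y_history):
    """Approximate (inverse Hessian) * grad with the two-loop recursion.

    s_history holds past weight changes and y_history the matching gradient
    changes, both ordered oldest first. With no history this returns grad.
    """
    q = list(grad)
    alphas = []  # stored newest first
    for s, y in zip(reversed(s_history), reversed(y_history)):
        rho = 1.0 / dot(y, s)
        alpha = rho * dot(s, q)
        alphas.append((alpha, rho))
        q = [qi - alpha * yi for qi, yi in zip(q, y)]
    if s_history:
        # Scale by an initial inverse-Hessian guess from the newest pair.
        s, y = s_history[-1], y_history[-1]
        gamma = dot(s, y) / dot(y, y)
        q = [gamma * qi for qi in q]
    for (alpha, rho), s, y in zip(reversed(alphas), s_history, y_history):
        beta = rho * dot(y, q)
        q = [qi + (alpha - beta) * si for qi, si in zip(q, s)]
    return q


# Toy check on a quadratic with Hessian diag(2, 10): a weight change s
# produces the gradient change y = (2 * s[0], 10 * s[1]).
s = [1.0, 2.0]
y = [2.0, 20.0]
print(lbfgs_direction(y, [s], [y]))  # approximately recovers s
```

The toy check exercises the defining property of the approximation: applied to the most recent gradient change, it returns the most recent weight change. The weights would then be moved along the negative of the returned direction.
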
Now we use the average of this output to initialize this, which is a cheap trick. Turns out to be extremely helpful. Okay. So now we use a map-only Hadoop job for process control. We use All Reduce code to synchronize the state. We save input examples into a cache file for later passes. And we use the hashing trick to reduce the input complexity. These are all very important things individually. But they're not related to the actual parallelism. And then it's open source in [inaudible]. This is the online learning software that we've been putting together. I guess online and batch learning now. Okay. We did a few studies of this system. So we had a smaller dataset, and we varied between ten and 100 nodes. And then for each number of nodes we ran ten times. And then you can look at -- so if things were perfectly linear in their speedup they would look like this line. We're not perfectly linear in our speedup. Things do go a little bit slower as you go towards more and more nodes. But you do get significant speedups. So this is between ten and 100. This takes a factor of 10 longer to compute, or almost a factor of ten longer to compute, than this does. You can also look at the variation between the min and the max running time. You can see there's some variation. You have to expect that on a cluster you don't really control. But it's not too bad. Okay. So now we can look at how this algorithm works. There's two really obvious things to compare with. One of them is what if we take our online learning algorithm and just do repeated averaging. There was a paper published about this kind of approach. And you can see that things do indeed get better over time as you do repeated averaging of online learning. And it's still getting better out here at 50 passes. And another approach is you can just say, I'm going to run LBFGS. And it does nothing for ten passes and then starts to get better and better and better and keeps getting better.
And then you can do online learning for one pass and you can switch to LBFGS. And then you're done at about 20 passes. This is a splice site recognition dataset. This is one of the largest publicly available datasets of the type where linear prediction is reasonable that I know of. It's probably the largest that I know of. You can also do online for five passes and then switch over to LBFGS, and you see that maybe it tops out a little bit later. So in our experiments it seemed like one online pass was pretty good at putting you near enough to the optimum that the LBFGS would really nail things pretty quickly. We compared with raised datasets with other algorithms. So there's a couple of other algorithms. One of them -- I think Marty gave a talk about it here before. The other one Lynn and Ofer worked on. And Ron. And who was the fourth author?

>>: [inaudible].

>> John Langford: What's that?

>>: [inaudible].

>> John Langford: That's right. Okay. So Marty's algorithm operates by an overcomplete partition. A single example appears on one or more of the nodes, and then you learn independently on each of these nodes and you average things together at the end. And this is essentially measuring -- this is the effective number of passes. This is measuring the degree of overcompleteness of the partition, right? So at five it appears, for example, in five nodes. And then there's the mini batch approach. And here we're just measuring the number of passes through the data. Because we keep passing through the data multiple times doing the mini batches. And, well, LBFGS really helps you kick that last bit of performance. And that's a pretty big one.

>>: In the time it's dominated by the gradient computation.

>> John Langford: Oh, yeah, by far. The actual LBFGS itself is like a second or less.

>>: You run it on one node?

>> John Langford: It runs on all nodes. It's All Reduce. So we look at sort of the communication and computation breakdown for the system, and it's about ten seconds to do the synchronization.
It's about a second or less to do the LBFGS itself. The slow node problem is still there. So it's about half of the communication -- of the time is wasted in a barrier. And about half is for computing the gradient. >>: What is the [inaudible] LBFGS [inaudible]? >> John Langford: You'd have to look in the paper. I think the default in VW is 15. But we often used five or ten. This is, by the way -- this graph kind of understates the difference in performance between these algorithms. Because if you look at computational time, this would be substantially worse. This one would be much worse because the communication complexity is higher. This one would be significantly worse. Okay. So I'm about done. We decided to make a new machine learning mailing list, machine-learning, because we had one at Yahoo! and I found that useful for interacting with product groups of various sorts. So I think many people will be automatically subscribed and you can unsubscribe yourself. But if you are talking to product group people doing machine learning, it would be cool to point this out to them. VW, you can just search for. There's a mailing list that's external. If there's demand enough, we can create an internal one. I'm trying to work with Misha to create a Windows version. Hopefully this week. And there's this tutorial at NIPS, which is off a wiki. This is my first talk here. I wanted to mention a few other things. So there's CAPTCHAs and isomap that you mentioned. I've worked on learning reductions, how to decompose complex prediction problems into simple prediction problems. The solution to the simple problems gives you a solution to the complex problems. We're implementing these in VW right now. Some of these are also logarithmic time reductions. So if you are trying to do multi-class classification, most common approaches take order K time where you have K classes. It turns out you can actually do log K effectively in many situations. We've looked at active learning.
So this is the selective sampling version of active learning. And I guess the thing that we figured out here is how to deal with noise. So we can deal with the same kind of noise that you typically analyze in learning settings with active learning. And we have algorithms now that are very efficient and effective. And then there's a lot of work on contextual bandit settings, where I guess the motivating example at Yahoo! was ads. But anytime a user comes to a website and the website decides something to show the user, and then the user reacts to that, you can try to use machine learning to predict what the website should be presenting to the user. And there's a bunch of issues related to bias and exploration-exploitation around that, that we've solved to a large extent. All right. Thank you. [applause]. >>: Any other questions? Okay. Thanks, John.